Paper Menu >>

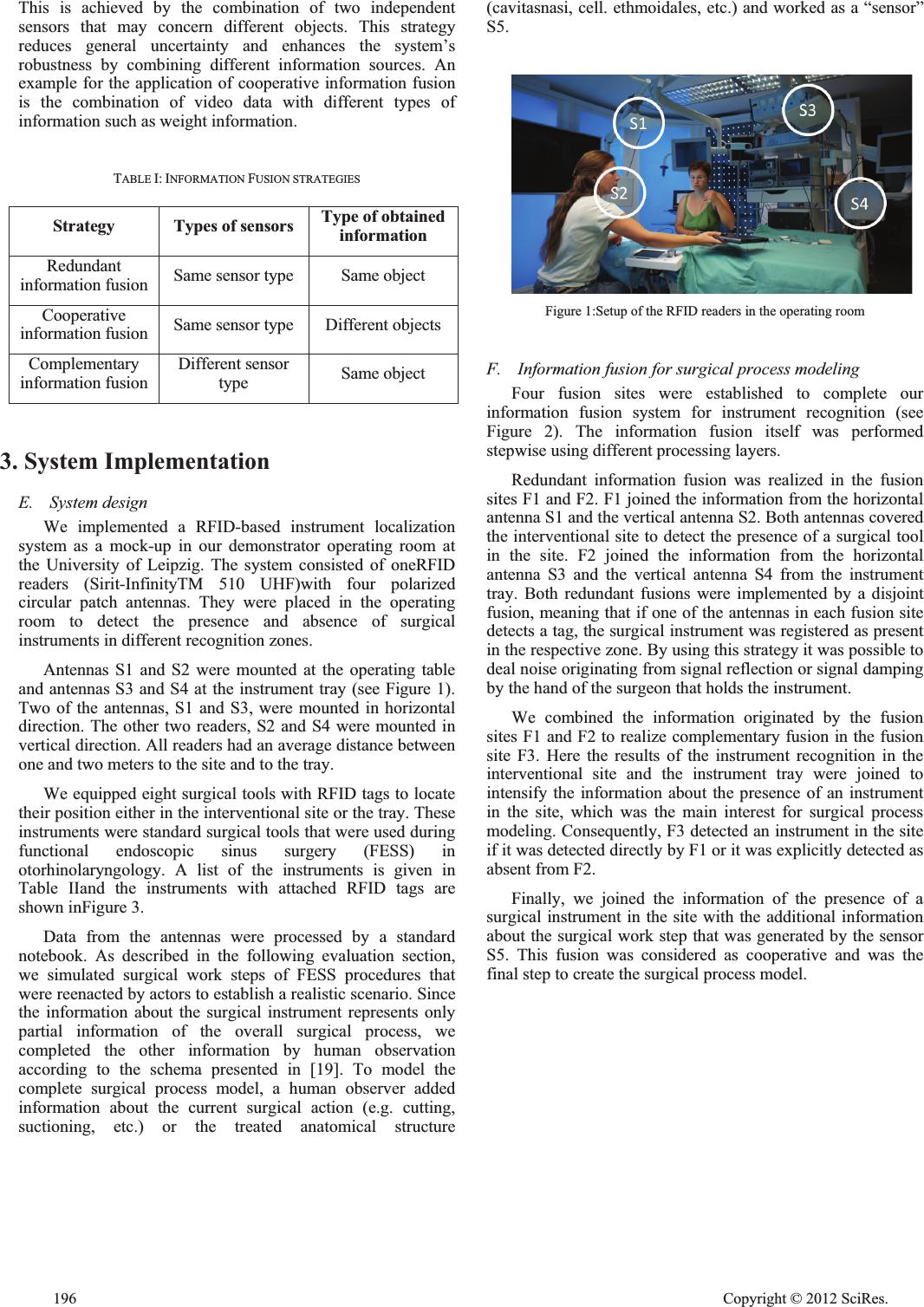

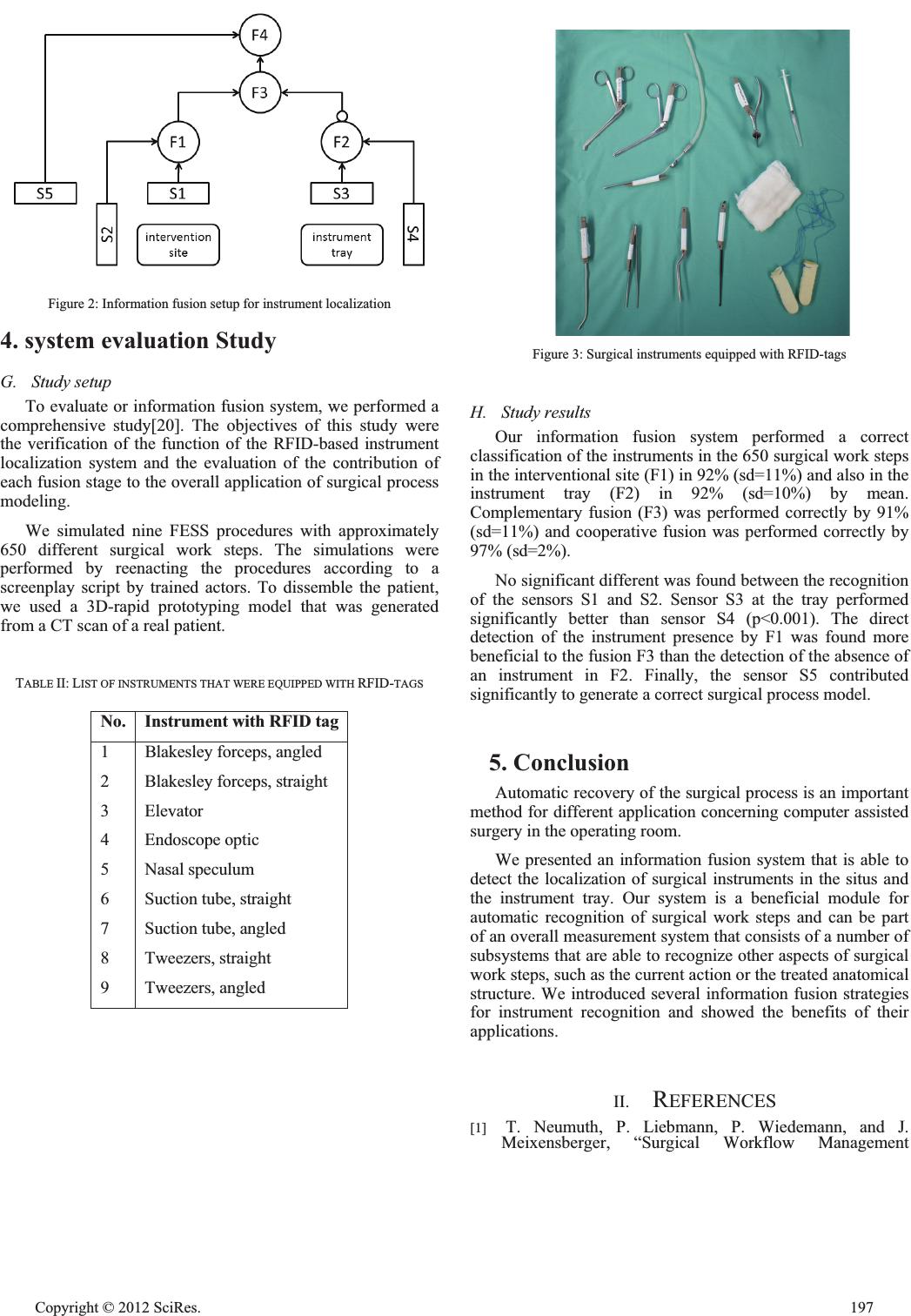

Journal Menu >>

Information Fusion for Process Acquisition in the Operating Room

Thomas Neumuth, Christian Meißner
Innovation Center Computer Assisted Surgery (ICCAS), University of Leipzig, Leipzig, Germany
firstname.lastname@iccas.de

Abstract: The recognition of surgical processes in the operating room is an emerging research field in medical engineering. We present the design and implementation of an instrument localization system that uses information fusion strategies to enhance its recognition power. The system was implemented using RFID technology. It monitored the presence of surgical tools in the interventional site and the instrument tray and combined the measured information by applying redundant, complementary, and cooperative information fusion strategies to achieve a more comprehensive model of the current situation. An evaluation study showed a correct classification rate of 97% for the system.

Keywords: surgical procedure, workflow, process modeling, operating room, computer-assisted surgery, information fusion

1. Introduction

Acquisition and modeling of surgical processes in the operating room is an emerging research field in medical engineering. The ready availability of surgical process models enables several technologies and applications, such as the assessment of surgical strategies, the evaluation of surgical assist systems, or process optimization. Technical applications such as workflow management support in the operating room also rely on process information [1]. For these applications, the workflow management system needs to know the underlying process it has to support. Therefore, the recognition of the process is an indispensable step.

One current research objective is the automatic recognition of surgical activities or of partial information about them. Several approaches focused on the recognition of partial process information from surgical processes.
Main data sources were surgical gestures or tools recognized in video data [2–6], kinematic data from telemanipulators [7], or data from virtual environments [8]. Other works emphasized the recognition of surgical actions by using force/torque signatures [9] or acceleration sensors [10]. Additionally, eye movements of the surgeon [11], the location of the OR staff [12], or the interpretation of the patient’s vital parameters [13] were used to recover surgical activities.

We present the design, implementation, and evaluation of an online instrument recognition system that uses RFID data to identify information about surgical activities. Our design uses different information fusion strategies to optimize the recognition abilities of the system.

2. Information fusion strategies

The process of interrelating data and information from different sources is called information fusion [14–17]. For this purpose, data are matched, correlated, and combined to create a more abstract, but also more appropriate and more precise view of the measured object or scene. Durrant-Whyte [18] introduced a classification scheme that distinguishes several information fusion strategies according to the sensor types that are used for acquisition. He differentiated redundant, complementary, and cooperative information fusion. An overview of the information fusion strategies is presented in Table I.

B. Redundant information fusion

If redundant information fusion is applied, information is acquired by sensors of a similar type. The objective is to compensate for measurement errors by combining multiple sensors. With this strategy, each sensor detects the same measurement parameters of the same object independently of the other sensors. This makes it possible to enhance the robustness and reduce the margin of error of the overall system. An example of redundant information fusion is the supervision of an area with several cameras.
Using several cameras decreases the chance that single spots in the supervised area remain hidden from every camera.

C. Complementary information fusion

Complementary information fusion is performed by combining different sensors with non-redundant information about the measured objects. The sensors work independently of each other and are applied to acquire a more complete representation of the current situation. An example of complementary information fusion is the supervision of different areas with video cameras to obtain a more complete supervision.

D. Cooperative information fusion

Cooperative information fusion is applied to derive information or data from several sensors of different types. This is achieved by the combination of two independent sensors that may concern different objects. This strategy reduces general uncertainty and enhances the system’s robustness by combining different information sources. An example of cooperative information fusion is the combination of video data with different types of information such as weight information.

Open Journal of Applied Sciences, Supplement: 2012 World Congress on Engineering and Technology. Copyright © 2012 SciRes.

TABLE I: INFORMATION FUSION STRATEGIES

| Strategy | Types of sensors | Type of obtained information |
|---|---|---|
| Redundant information fusion | Same sensor type | Same object |
| Cooperative information fusion | Same sensor type | Different objects |
| Complementary information fusion | Different sensor type | Same object |

3. System Implementation

E. System design

We implemented an RFID-based instrument localization system as a mock-up in our demonstrator operating room at the University of Leipzig. The system consisted of one RFID reader (Sirit Infinity™ 510 UHF) with four polarized circular patch antennas. They were placed in the operating room to detect the presence and absence of surgical instruments in different recognition zones.
Antennas S1 and S2 were mounted at the operating table and antennas S3 and S4 at the instrument tray (see Figure 1). Two of the antennas, S1 and S3, were mounted in horizontal direction. The other two antennas, S2 and S4, were mounted in vertical direction. All antennas were on average between one and two meters away from the site and the tray.

We equipped eight surgical tools with RFID tags to locate them either in the interventional site or in the tray. These instruments were standard surgical tools used during functional endoscopic sinus surgery (FESS) in otorhinolaryngology. A list of the instruments is given in Table II, and the instruments with attached RFID tags are shown in Figure 3. Data from the antennas were processed by a standard notebook.

As described in the following evaluation section, we simulated surgical work steps of FESS procedures that were reenacted by actors to establish a realistic scenario. Since the information about the surgical instrument represents only part of the overall surgical process, we supplemented the remaining information by human observation according to the schema presented in [19]. To obtain the complete surgical process model, a human observer added information about the current surgical action (e.g. cutting, suctioning) and the treated anatomical structure (e.g. cavitas nasi, cell. ethmoidales) and thus acted as a “sensor” S5.

Figure 1: Setup of the RFID readers in the operating room

F. Information fusion for surgical process modeling

Four fusion sites were established to complete our information fusion system for instrument recognition (see Figure 2). The information fusion itself was performed stepwise using different processing layers.

Redundant information fusion was realized in the fusion sites F1 and F2. F1 joined the information from the horizontal antenna S1 and the vertical antenna S2. Both antennas covered the interventional site to detect the presence of a surgical tool in the site.
F2 joined the information from the horizontal antenna S3 and the vertical antenna S4 at the instrument tray. Both redundant fusions were implemented as a disjunctive (logical OR) fusion: if at least one of the antennas in a fusion site detected a tag, the surgical instrument was registered as present in the respective zone. This strategy made it possible to deal with noise originating from signal reflection or from signal damping by the hand of the surgeon holding the instrument.

We combined the information produced by the fusion sites F1 and F2 to realize complementary fusion in the fusion site F3. Here the results of the instrument recognition in the interventional site and in the instrument tray were joined to strengthen the information about the presence of an instrument in the site, which was the main interest for surgical process modeling. Consequently, F3 reported an instrument as present in the site if it was detected directly by F1 or if it was explicitly detected as absent from the tray by F2.

Finally, we joined the information about the presence of a surgical instrument in the site with the additional information about the surgical work step that was generated by the sensor S5. This fusion was considered cooperative and was the final step in creating the surgical process model.

Figure 2: Information fusion setup for instrument localization

4. System evaluation study

G. Study setup

To evaluate our information fusion system, we performed a comprehensive study [20]. The objectives of this study were to verify the function of the RFID-based instrument localization system and to evaluate the contribution of each fusion stage to the overall application of surgical process modeling. We simulated nine FESS procedures with approximately 650 different surgical work steps. The simulations were performed by trained actors who reenacted the procedures according to a screenplay script.
To simulate the patient, we used a 3D rapid-prototyping model that was generated from a CT scan of a real patient.

TABLE II: LIST OF INSTRUMENTS THAT WERE EQUIPPED WITH RFID TAGS

| No. | Instrument with RFID tag |
|---|---|
| 1 | Blakesley forceps, angled |
| 2 | Blakesley forceps, straight |
| 3 | Elevator |
| 4 | Endoscope optic |
| 5 | Nasal speculum |
| 6 | Suction tube, straight |
| 7 | Suction tube, angled |
| 8 | Tweezers, straight |
| 9 | Tweezers, angled |

Figure 3: Surgical instruments equipped with RFID tags

H. Study results

Across the 650 surgical work steps, our information fusion system classified the instruments correctly in 92% of cases (sd=11%) in the interventional site (F1) and in 92% of cases (sd=10%) in the instrument tray (F2) on average. Complementary fusion (F3) was performed correctly in 91% of cases (sd=11%) and cooperative fusion in 97% of cases (sd=2%). No significant difference was found between the recognition of the sensors S1 and S2. Sensor S3 at the tray performed significantly better than sensor S4 (p<0.001). The direct detection of the instrument presence by F1 was found to be more beneficial to the fusion F3 than the detection of the absence of an instrument by F2. Finally, the sensor S5 contributed significantly to generating a correct surgical process model.

5. Conclusion

Automatic recovery of the surgical process is an important method for different applications in computer-assisted surgery in the operating room. We presented an information fusion system that is able to determine the location of surgical instruments in the situs and the instrument tray. Our system is a beneficial module for the automatic recognition of surgical work steps and can be part of an overall measurement system consisting of several subsystems that recognize other aspects of surgical work steps, such as the current action or the treated anatomical structure. We introduced several information fusion strategies for instrument recognition and showed the benefits of their application.
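The fusion rules described in Section 3 (F1 to F3 and the final cooperative step) reduce to Boolean logic per instrument and time step. The following Python sketch illustrates them under stated assumptions. The names `AntennaReadings`, `Observation`, `fuse_site`, `fuse_tray`, `fuse_location`, and `fuse_work_step` are hypothetical and are not taken from the original system. The form of the cooperative step (attaching the observer's action and anatomical structure to an instrument found in the site) is one assumed reading of the description, not the implemented algorithm.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AntennaReadings:
    """Tag detections for one instrument at one time step (True = tag seen)."""
    s1: bool  # horizontal antenna at the interventional site
    s2: bool  # vertical antenna at the interventional site
    s3: bool  # horizontal antenna at the instrument tray
    s4: bool  # vertical antenna at the instrument tray


@dataclass(frozen=True)
class Observation:
    """Information added by the human observer (sensor S5); fields are assumed."""
    action: str
    structure: str


def fuse_site(r: AntennaReadings) -> bool:
    """F1, redundant fusion: present in the site if S1 or S2 sees the tag."""
    return r.s1 or r.s2


def fuse_tray(r: AntennaReadings) -> bool:
    """F2, redundant fusion: present in the tray if S3 or S4 sees the tag."""
    return r.s3 or r.s4


def fuse_location(r: AntennaReadings) -> bool:
    """F3, complementary fusion: in the site if F1 detects the instrument
    or F2 reports it as absent from the tray."""
    return fuse_site(r) or not fuse_tray(r)


def fuse_work_step(instrument: str, r: AntennaReadings,
                   obs: Observation) -> Optional[dict]:
    """Cooperative fusion (assumed form): combine the F3 result with the
    observer's information into one work-step record, or None if the
    instrument is not considered to be in the site."""
    if not fuse_location(r):
        return None
    return {"instrument": instrument,
            "action": obs.action,
            "structure": obs.structure}


if __name__ == "__main__":
    # Tag damped at the site (for example by the surgeon's hand) and not
    # seen at the tray: F1 misses it, but F3 still infers "in the site".
    readings = AntennaReadings(s1=False, s2=False, s3=False, s4=False)
    obs = Observation(action="suctioning", structure="cavitas nasi")
    print(fuse_work_step("Suction tube, straight", readings, obs))
```

With the F3 rule as described, an instrument that no antenna detects is counted as present in the site. This is what allows F3 to recover from missed reads at the site, but it also means that a missed read at the tray produces a false positive.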
REFERENCES

[1] T. Neumuth, P. Liebmann, P. Wiedemann, and J. Meixensberger, “Surgical Workflow Management Schemata for Cataract Procedures. Process Model-based Design and Validation of Workflow Schemata,” Methods of Information in Medicine, vol. 51, no. 4, May 2012.

[2] N. Padoy, T. Blum, S.-A. Ahmadi, H. Feussner, M.-O. Berger, and N. Navab, “Statistical Modeling and Recognition of Surgical Workflow,” Medical Image Analysis, vol. 16, no. 3, pp. 632–641, Apr. 2012.

[3] G. Sudra, A. Becker, M. Braun, S. Speidel, B. P. Mueller-Stich, and R. Dillmann, “Estimating similarity of surgical situations with case-retrieval-nets,” Stud Health Technol Inform, vol. 142, pp. 358–363, 2009.

[4] F. Lalys, L. Riffaud, X. Morandi, and P. Jannin, “Automatic phases recognition in pituitary surgeries by microscope images classification,” presented at the IPCAI 2010, 2010.

[5] L. Bouarfa, P. P. Jonker, and J. Dankelman, “Discovery of high-level tasks in the operating room,” J Biomed Inform, Jan. 2010.

[6] H. Lin and G. Hager, “User-independent models of manipulation using video contextual cues,” presented at the MICCAI, London, 2009.

[7] B. Varadarajan, C. Reiley, H. Lin, S. Khudanpur, and G. Hager, “Data-derived models for segmentation with application to surgical assessment and training,” Med Image Comput Comput Assist Interv, vol. 12, no. Pt 1, pp. 426–434, 2009.

[8] A. Darzi and S. Mackay, “Skills assessment of surgeons,” Surgery, vol. 131, no. 2, pp. 121–124, Feb. 2002.

[9] J. Rosen, B. Hannaford, C. G. Richards, and M. N. Sinanan, “Markov modeling of minimally invasive surgery based on tool/tissue interaction and force/torque signatures for evaluating surgical skills,” IEEE Trans Biomed Eng, vol. 48, no. 5, pp. 579–591, May 2001.

[10] A. Ahmadi, N. Padoy, K. Rybachuk, H. Feußner, S. Heining, and N. Navab, “Motif Discovery in OR Sensor Data with Application to Surgical Workflow Analysis and Activity Detection,” presented at the MICCAI Workshop on Modeling and Monitoring of Computer Assisted Interventions (M2CAI), London, 2010.

[11] A. James, D. Vieira, B. Lo, A. Darzi, and G.-Z. Yang, “Eye-Gaze Driven Surgical Workflow Segmentation,” in Proc. of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2007), vol. LNCS 4792, N. Ayache, S. Ourselin, and A. Maeder, Eds. 2007, pp. 110–117.

[12] A. Nara, K. Izumi, H. Iseki, T. Suzuki, K. Nambu, and Y. Sakurai, “Surgical workflow analysis based on staff’s trajectory patterns,” presented at the MICCAI Workshop on Modeling and Monitoring of Computer Assisted Interventions (M2CAI), London, 2010.

[13] Y. Xiao, P. Hu, H. Hu, D. Ho, F. Dexter, C. F. Mackenzie, F. J. Seagull, and R. P. Dutton, “An algorithm for processing vital sign monitoring data to remotely identify operating room occupancy in real-time,” Anesth. Analg, vol. 101, no. 3, pp. 823–829, Sep. 2005.

[14] D. Hall and J. Llinas, “An Introduction to Multisensor data fusion,” 1997, vol. 85(1), pp. 6–23.

[15] N. Xiong and P. Svensson, “Multi-sensor management for information fusion: issues and approaches,” Information Fusion, vol. 3, pp. 163–186, Jun. 2002.

[16] J. Llinas, C. Bowman, G. Rogova, A. Steinberg, E. Waltz, and F. White, “Revisiting the JDL Data Fusion Model II,” in P. Svensson and J. Schubert (Eds.), Proceedings of the Seventh International Conference on Information Fusion (FUSION 2004), vol. 2, pp. 1218–1230, 2004.

[17] M. M. Kokar, J. A. Tomasik, and J. Weyman, “Formalizing classes of information fusion systems,” Information Fusion, vol. 5, no. 3, pp. 189–202, Sep. 2004.

[18] H. F. Durrant-Whyte, “Sensor Models and Multisensor Integration,” The International Journal of Robotics Research, vol. 7, no. 6, pp. 97–113, Dec. 1988.

[19] T. Neumuth, P. Jannin, G. Strauss, J. Meixensberger, and O. Burgert, “Validation of knowledge acquisition for surgical process models,” J Am Med Inform Assoc, vol. 16, no. 1, pp. 72–80, 2009.

[20] T. Neumuth and C. Meissner, “Online recognition of surgical instruments by information fusion,” Int J Comput Assist Radiol Surg, vol. 7, no. 2, pp. 297–304, Mar. 2012.