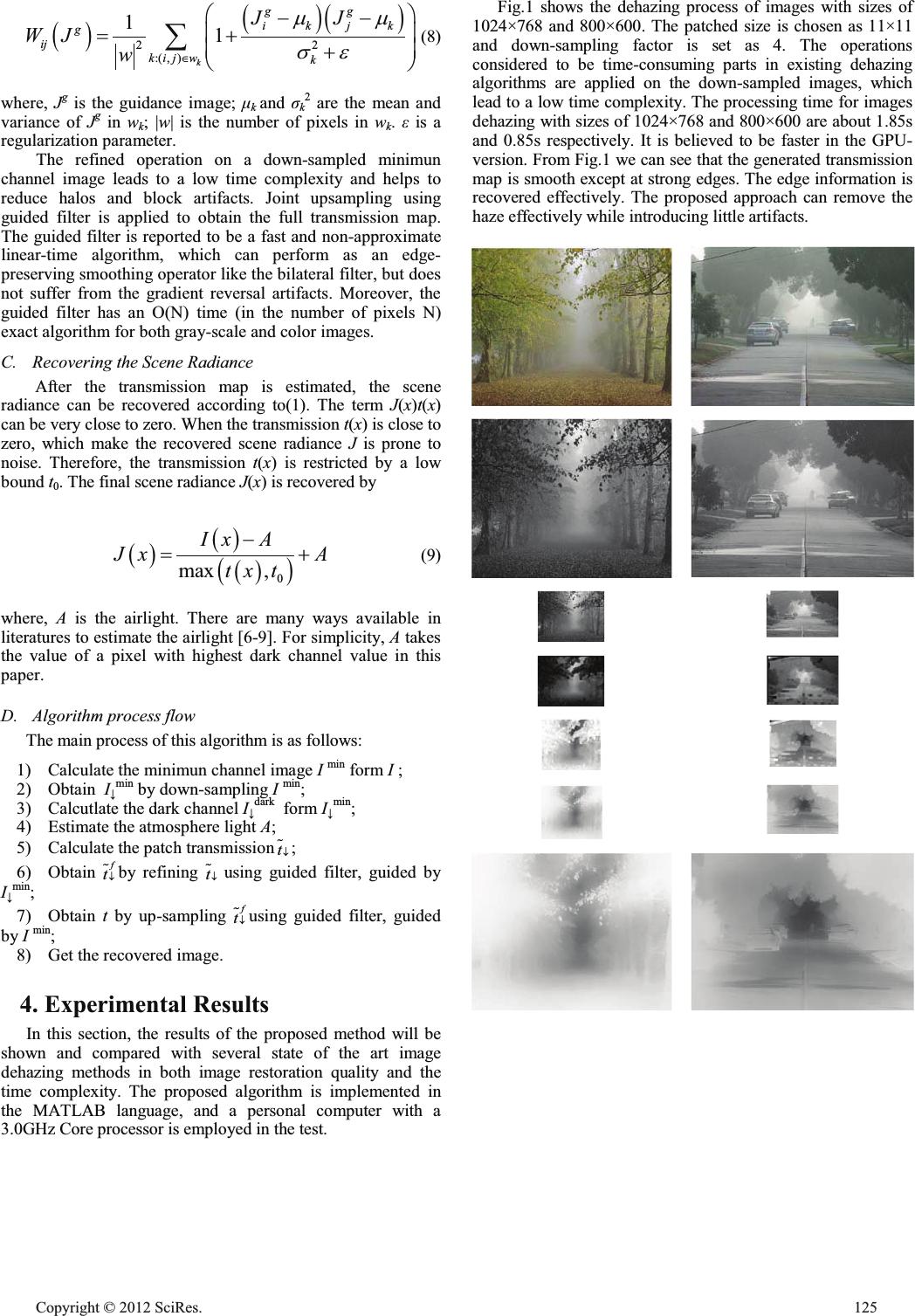

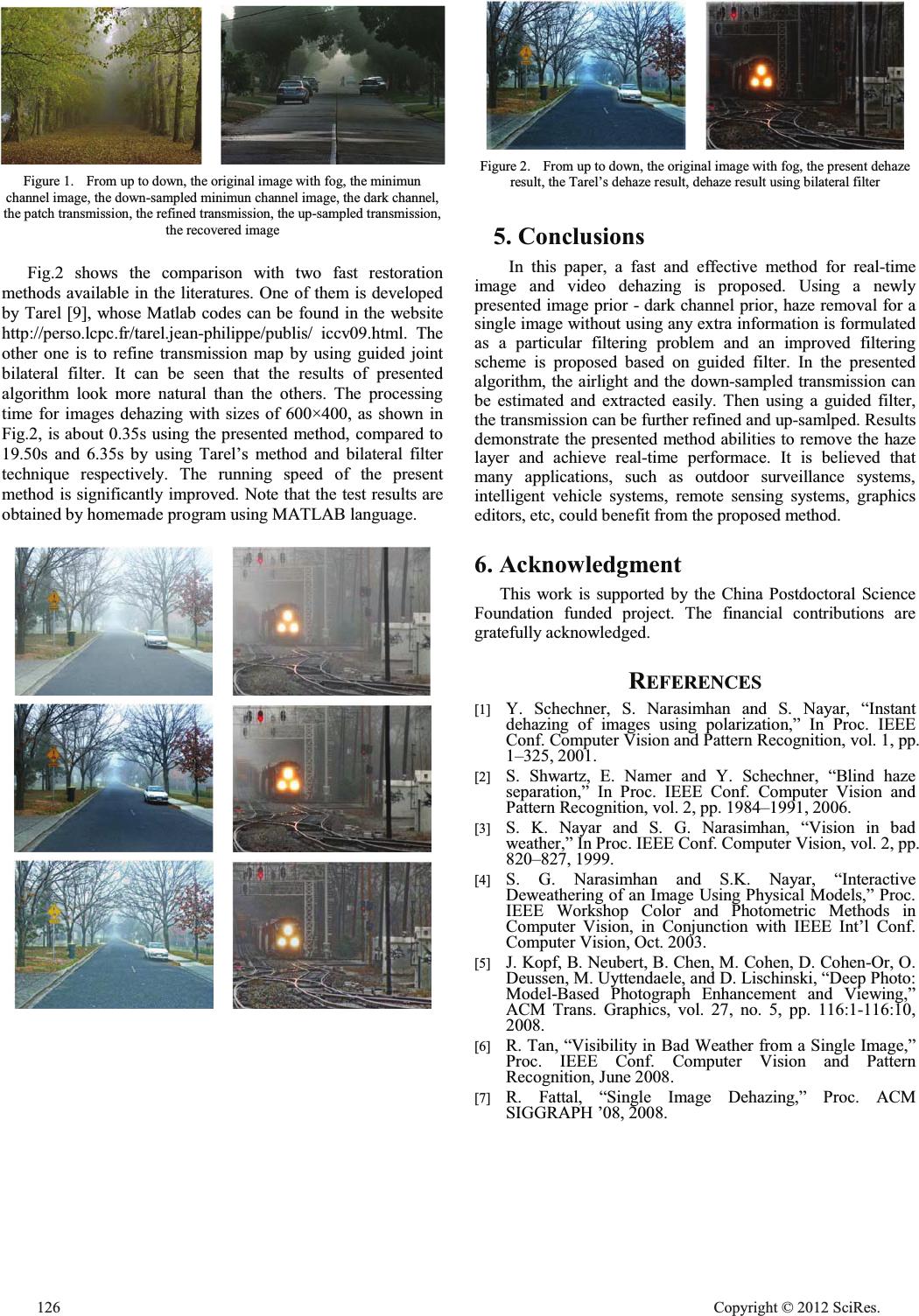

Dehazing for Image and Video Using Guided Filter

Zheqi Lin
School of Information Science & Technology, Sun Yat-sen University, Guangzhou, 510006, China
Shenzhen Key Laboratory of Digital Living Network and Content Service, Research Institute of Sun Yat-sen University in Shenzhen, Shenzhen, 518057, China
linzheqi@gmail.com

Xuansheng Wang
School of Information Science & Technology, Sun Yat-sen University, Guangzhou, 510006, China
Shenzhen Key Laboratory of Digital Living Network and Content Service, Research Institute of Sun Yat-sen University in Shenzhen, Shenzhen, 518057, China
wxs111111@163.com

Abstract: Poor visibility in bad weather, such as haze and fog, is a major problem for many applications of computer vision. Haze removal is therefore needed for vision algorithms to perform well. In this paper, we propose a new fast dehazing method for real-time image and video processing. The transmission map estimated by an improved guided filtering scheme is smooth and respects the depth information of the underlying image. Results demonstrate that the proposed method achieves a good dehazing effect as well as real-time performance. Because of its speed and its ability to improve visibility, the proposed algorithm can serve as a pre-processing step in many systems, ranging from surveillance and intelligent vehicles to remote sensing.

Keywords: image dehazing; dark channel prior; guided filter; down-sampling

1. Introduction

Computer vision systems can be used for many outdoor applications, such as video surveillance, remote sensing, and intelligent vehicles. Virtually all computer vision tasks and computational photography algorithms assume that the input images are taken in clear weather, and robust feature detection is achieved on high-quality images. Unfortunately, this assumption does not always hold.
The quality of an image captured in bad weather is usually degraded by haze or fog in the atmosphere, since the light incident on the camera is attenuated and the image contrast is reduced. This is a major problem in many computer vision applications: the performance of vision algorithms such as feature detection and photometric analysis inevitably suffers from biased, low-contrast scene radiance. Dehazing is the process of removing haze effects from captured images and reconstructing the original colors of natural scenes; it is a useful pre-processing step for vision algorithms that need high-quality input. However, haze removal is challenging because the degradation is spatially variant: it depends on the unknown scene depth.

In the literature, a few approaches use multiple images or additional information. For example, polarization-based methods [1, 2] remove the haze effect using two or more images taken with different degrees of polarization, while depth-based methods [3-5] estimate scene depth from multiple images of the same scene captured in different weather conditions. Although these methods may produce impressive results, they are impractical: their requirements cannot always be satisfied, which makes them hard to use in real-time applications with changing scenes.

To overcome the drawbacks of multiple-image dehazing algorithms, many researchers focus on haze removal from a single degraded image. Tan [6] developed a contrast maximization technique for haze removal, based on the observation that images with enhanced visibility have more contrast than images plagued by bad weather. Under the assumption that the transmission and the surface shading are locally uncorrelated, Fattal [7] presented a method for estimating the transmission in hazy scenes. He et al.
[8] proposed a novel prior, the dark channel prior, for single image haze removal, which is based on the statistics of outdoor haze-free images. Combining a haze imaging model with a soft matting interpolation method, they can recover a high-quality haze-free image. The success of these methods lies in using a stronger prior or assumption. However, a common drawback of the methods above is their computational cost.

To improve the efficiency of image dehazing, Tarel [9] proposed a fast dehazing algorithm using a median filter. This algorithm is very efficient, but because the median filter is neither conformal nor edge-preserving, the resulting atmospheric veil, although smooth, does not respect the depth information of the scene. Xiao and Gan [10] obtain an initial atmospheric scattering light through median filtering, then refine it by guided joint bilateral filtering to generate a new atmospheric veil that removes the abundant texture information and recovers the depth edge information.

In this paper, an improved guided filtering scheme is proposed for approximating the transmission map in real-time image and video dehazing. The proposed method makes good use of an effective image prior, the dark channel prior, and of guided filter upsampling, so that an accurate transmission map can be estimated efficiently.

The article is structured as follows. Section 2 presents the haze model used. Section 3 describes the proposed dehazing algorithm. Section 4 presents experimental results. Finally, Section 5 concludes the paper.

Corresponding author: Zheqi Lin
Open Journal of Applied Sciences, Supplement: 2012 World Congress on Engineering and Technology
Copyright © 2012 SciRes.

2. Haze modeling

The observed brightness of a captured image in the presence of haze can be modeled, based on atmospheric optics [6, 7, 11], as

$$I(x) = J(x)\,t(x) + A\,(1 - t(x)) \tag{1}$$

where $I(x)$ is the observed hazy image, $J(x)$ is the scene radiance (the clear haze-free image), $A$ is the airlight that represents the ambient light in the atmosphere, and $t(x) \in [0, 1]$ is the transmission of the light reflected by the object, which directly indicates the depth of the scene objects. The first term $J(x)t(x)$ on the right-hand side is called the direct attenuation; it describes the scene radiance and its decay in the medium. The second term $A(1 - t(x))$ is the atmospheric veil (atmospheric scattering light), which causes blur, color shift, and distortion in the scene. The goal of haze removal is to recover $J(x)$, $A$, and $t(x)$ from $I(x)$.

3. Single Image Dehazing

In this section, the proposed method is described in detail. First, the rough down-sampled transmission and the airlight are estimated; then the transmission is smoothed and up-sampled using a guided filter; finally, the haze-free image is restored.

A. Extract the Transmission

The core of haze removal for an image is estimating the airlight and the transmission map. Assuming the airlight is already known, the transmission map must be extracted first in order to recover the haze-free image. He et al. [8] found that, in the non-sky patches of haze-free outdoor images, the minimum intensity should have a very low value; this is called the dark channel prior. Formally, for an image $J$, the dark channel value of a pixel $x$ is defined as

$$J^{dark}(x) = \min_{c \in \{r,g,b\}} \left( \min_{y \in \Omega(x)} J^c(y) \right) \tag{2}$$

where $J^c$ is a color channel of $J$ and $\Omega(x)$ is a patch around $x$.
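As a hedged illustration of (2), the following NumPy sketch computes a dark channel by taking the per-pixel minimum over the color channels and then the minimum over a square patch. The function names `min_filter` and `dark_channel`, the edge-replicating border handling, and the assumption of an odd patch size and an H×W×3 floating-point image are choices made here for illustration; the paper does not specify them.

```python
import numpy as np


def min_filter(channel, patch):
    """Minimum over a (patch x patch) window centered on each pixel.

    Assumes `patch` is odd. Borders are handled by replicating edge pixels,
    which is an illustrative choice and not taken from the paper.
    """
    radius = patch // 2
    padded = np.pad(channel, radius, mode="edge")
    height, width = channel.shape
    out = np.full((height, width), np.inf)
    # Take the running minimum over every shifted copy of the image.
    for dy in range(patch):
        for dx in range(patch):
            out = np.minimum(out, padded[dy:dy + height, dx:dx + width])
    return out


def dark_channel(image, patch=11):
    """Dark channel of an H x W x 3 image, following equation (2)."""
    min_over_channels = image.min(axis=2)          # min over c in {r, g, b}
    return min_filter(min_over_channels, patch)    # min over the patch Omega(x)
```

The default `patch=11` mirrors the 11×11 patch size reported in the experiments. The double loop costs O(N·patch²) and is written for readability rather than speed.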
By assuming that the transmission in a local patch is constant and applying the min operation over both the patch and the three color channels, the haze imaging model in (1) can be transformed into

$$\min_{c \in \{r,g,b\}} \left( \min_{y \in \Omega(x)} \frac{I^c(y)}{A^c} \right) = \tilde{t}(x) \min_{c \in \{r,g,b\}} \left( \min_{y \in \Omega(x)} \frac{J^c(y)}{A^c} \right) + (1 - \tilde{t}(x)) \tag{3}$$

where $\tilde{t}(x)$ is the patch transmission and $A^c$ is color channel $c$ of the airlight $A$. Since $A$ is always positive and the dark channel value of a haze-free image $J$ tends to zero according to the dark channel prior, we have

$$\min_{c \in \{r,g,b\}} \left( \min_{y \in \Omega(x)} \frac{J^c(y)}{A^c} \right) \to 0 \tag{4}$$

Then the transmission can be extracted simply by

$$\tilde{t}(x) = 1 - \min_{c \in \{r,g,b\}} \left( \min_{y \in \Omega(x)} \frac{I^c(y)}{A^c} \right) \tag{5}$$

Although the dark channel prior is not a good prior for sky regions, fortunately both sky and non-sky regions are handled well by (5), since the sky is infinitely distant and its transmission is indeed close to zero.

In practice, the atmosphere is never completely free of particles, even in clear weather. Therefore, a constant parameter $\omega$ ($0 < \omega \le 1$) is introduced into (5) to keep a small amount of haze for distant objects:

$$\tilde{t}(x) = 1 - \omega \min_{c \in \{r,g,b\}} \left( \min_{y \in \Omega(x)} \frac{I^c(y)}{A^c} \right) \tag{6}$$

The transmission maps estimated using (6) are reasonable. The main problems are halos and block artifacts, which arise because the transmission is not always constant within a patch. Several techniques have been proposed to refine the transmission map, such as soft matting and the guided joint bilateral filter. These techniques are applied to the transmission maps of the original foggy images, and usually several operations are needed to achieve a good result, which can be computationally intensive. For image haze removal, time complexity is a critical problem that needs to be addressed: a high time complexity may make the algorithm impractical.

B. Refine the Transmission

To improve efficiency, in the present implementation the transmission map is obtained from a down-sampled minimum channel image.
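As a rough illustration of this step, the sketch below evaluates (6) on a down-sampled minimum channel image. It assumes the airlight is already known, uses plain decimation (keeping every `factor`-th pixel) as the down-sampling method, and picks `omega=0.95`; none of these choices is stated in the paper, and the names `min_filter` and `rough_transmission` are hypothetical.

```python
import numpy as np


def min_filter(channel, patch):
    """Minimum over a (patch x patch) window, `patch` odd, edges replicated."""
    radius = patch // 2
    padded = np.pad(channel, radius, mode="edge")
    height, width = channel.shape
    out = np.full((height, width), np.inf)
    for dy in range(patch):
        for dx in range(patch):
            out = np.minimum(out, padded[dy:dy + height, dx:dx + width])
    return out


def rough_transmission(hazy, airlight, omega=0.95, patch=11, factor=4):
    """Patch transmission of equation (6), computed at reduced resolution.

    hazy:     H x W x 3 float image I
    airlight: length-3 sequence A (assumed to be estimated beforehand)
    omega:    haze-retention constant in (0, 1]; 0.95 is an assumed value
    factor:   down-sampling factor; plain decimation is an assumption
    """
    normalized = hazy / np.asarray(airlight, dtype=float)   # I^c / A^c
    min_channel = normalized.min(axis=2)                    # min over c
    small = min_channel[::factor, ::factor]                 # down-sample
    return 1.0 - omega * min_filter(small, patch)           # equation (6)
```

The defaults `patch=11` and `factor=4` follow the values reported in the experiments. Because the patch minimum runs on an image with `factor**2` times fewer pixels, the costly part of the estimate shrinks accordingly.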
Then, it is refined and up-sampled using a guided filter, which can be expressed explicitly as [12]:

$$t^f_i = \sum_j W_{ij}(J^g)\, t_j \tag{7}$$

$$W_{ij}(J^g) = \frac{1}{|w|^2} \sum_{k:(i,j) \in w_k} \left( 1 + \frac{(J^g_i - \mu_k)(J^g_j - \mu_k)}{\sigma_k^2 + \varepsilon} \right) \tag{8}$$

where $t_j$ is the input transmission at pixel $j$ and $t^f_i$ is the filtered output at pixel $i$; $J^g$ is the guidance image; $\mu_k$ and $\sigma_k^2$ are the mean and variance of $J^g$ in the window $w_k$; $|w|$ is the number of pixels in $w_k$; and $\varepsilon$ is a regularization parameter.

Performing the refinement on a down-sampled minimum channel image leads to a low time complexity and helps to reduce halos and block artifacts. Joint upsampling using the guided filter is then applied to obtain the full-resolution transmission map. The guided filter is reported to be a fast, non-approximate linear-time algorithm: it acts as an edge-preserving smoothing operator like the bilateral filter, but does not suffer from gradient reversal artifacts. Moreover, it has an exact O(N) time algorithm (in the number of pixels N) for both gray-scale and color images.

C. Recovering the Scene Radiance

After the transmission map is estimated, the scene radiance can be recovered according to (1). However, the direct attenuation term $J(x)t(x)$ can be very close to zero when the transmission $t(x)$ is close to zero, which makes the directly recovered scene radiance $J$ prone to noise. Therefore, the transmission $t(x)$ is restricted by a lower bound $t_0$. The final scene radiance $J(x)$ is recovered by

$$J(x) = \frac{I(x) - A}{\max(t(x), t_0)} + A \tag{9}$$

where $A$ is the airlight. There are many ways in the literature to estimate the airlight [6-9]. For simplicity, in this paper $A$ takes the value of the pixel with the highest dark channel value.
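The refinement of Section B and the recovery of Section C can be sketched together as follows. This is a minimal single-scale illustration, assuming a gray-scale guide and the standard local-linear form of the guided filter from [12]; it does not reproduce the joint up-sampling step. The function names, the window `radius`, `eps`, and the lower bound `t0=0.1` are assumptions made for this example, not values given in the paper.

```python
import numpy as np


def box_mean(img, radius):
    """Mean over a (2*radius+1)^2 window with edge-replicated borders.

    Written as a shifted-sum loop for clarity; it is not the O(N)
    running-sum box filter that makes the guided filter linear-time.
    """
    size = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    height, width = img.shape
    total = np.zeros((height, width))
    for dy in range(size):
        for dx in range(size):
            total += padded[dy:dy + height, dx:dx + width]
    return total / (size * size)


def guided_filter(guide, src, radius=2, eps=1e-3):
    """Gray-scale guided filter: smooth `src` while following edges of `guide`."""
    mean_g = box_mean(guide, radius)
    mean_s = box_mean(src, radius)
    var_g = box_mean(guide * guide, radius) - mean_g * mean_g
    cov_gs = box_mean(guide * src, radius) - mean_g * mean_s
    a = cov_gs / (var_g + eps)      # slope of the local linear model
    b = mean_s - a * mean_g         # offset of the local linear model
    return box_mean(a, radius) * guide + box_mean(b, radius)


def estimate_airlight(hazy, dark):
    """Airlight A: color of the pixel with the highest dark channel value."""
    row, col = np.unravel_index(np.argmax(dark), dark.shape)
    return hazy[row, col].astype(float)


def recover_radiance(hazy, transmission, airlight, t0=0.1):
    """Scene radiance J from equation (9), with t clamped from below by t0."""
    t = np.maximum(transmission, t0)[..., np.newaxis]
    return (hazy - airlight) / t + airlight
```

In the setting of this paper, `guide` would be the minimum channel image and `src` the patch transmission from (6). The clamp in `recover_radiance` is what keeps the division stable where the transmission approaches zero.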
D. Algorithm process flow

The main steps of the algorithm are as follows:

1) Calculate the minimum channel image $I_{min}$ from $I$;
2) Obtain $I^{\downarrow}_{min}$ by down-sampling $I_{min}$;
3) Calculate the dark channel $I^{\downarrow}_{dark}$ from $I^{\downarrow}_{min}$;
4) Estimate the atmospheric light $A$;
5) Calculate the patch transmission $t_p$;
6) Obtain $t_p^f$ by refining $t_p$ using the guided filter, guided by $I^{\downarrow}_{min}$;
7) Obtain $t$ by up-sampling $t_p^f$ using the guided filter, guided by $I_{min}$;
8) Get the recovered image.

4. Experimental Results

In this section, the results of the proposed method are shown and compared with several state-of-the-art image dehazing methods in terms of both restoration quality and running time. The proposed algorithm is implemented in MATLAB, and a personal computer with a 3.0 GHz Core processor is used in the tests.

Fig. 1 shows the dehazing process for images of size 1024×768 and 800×600. The patch size is chosen as 11×11 and the down-sampling factor is set to 4. The operations considered time-consuming in existing dehazing algorithms are applied to the down-sampled images, which leads to a low time complexity. The processing times for dehazing images of size 1024×768 and 800×600 are about 1.85 s and 0.85 s respectively. A GPU implementation is expected to be faster. From Fig. 1 we can see that the generated transmission map is smooth except at strong edges, and that the edge information is recovered effectively. The proposed approach removes the haze effectively while introducing few artifacts.

Figure 1. From top to bottom: the original image with fog, the minimum channel image, the down-sampled minimum channel image, the dark channel, the patch transmission, the refined transmission, the up-sampled transmission, and the recovered image.

Fig. 2 shows the comparison with two fast restoration methods available in the literature.
One of them was developed by Tarel [9], whose MATLAB code can be found at http://perso.lcpc.fr/tarel.jean-philippe/publis/iccv09.html. The other refines the transmission map using a guided joint bilateral filter. It can be seen that the results of the presented algorithm look more natural than the others. The processing time for dehazing an image of size 600×400, as shown in Fig. 2, is about 0.35 s using the presented method, compared to 19.50 s and 6.35 s using Tarel's method and the bilateral filter technique respectively. The running speed of the presented method is significantly improved. Note that all test results were obtained with our own programs written in MATLAB.

Figure 2. From top to bottom: the original image with fog, the dehazing result of the presented method, the dehazing result of Tarel's method, and the dehazing result using the bilateral filter.

5. Conclusions

In this paper, a fast and effective method for real-time image and video dehazing is proposed. Using a recently presented image prior, the dark channel prior, haze removal for a single image without any extra information is formulated as a particular filtering problem, and an improved filtering scheme based on the guided filter is proposed. In the presented algorithm, the airlight and the down-sampled transmission can be estimated and extracted easily. Then, using a guided filter, the transmission is further refined and up-sampled. Results demonstrate the ability of the presented method to remove the haze layer and achieve real-time performance. It is believed that many applications, such as outdoor surveillance systems, intelligent vehicle systems, remote sensing systems, and graphics editors, could benefit from the proposed method.

6. Acknowledgment

This work is supported by the China Postdoctoral Science Foundation funded project. The financial contributions are gratefully acknowledged.

REFERENCES

[1] Y. Schechner, S. Narasimhan and S. Nayar, “Instant dehazing of images using polarization,” In Proc. IEEE Conf. Computer Vision and Pattern Recognition, vol. 1, pp. 1–325, 2001.
[2] S. Shwartz, E. Namer and Y. Schechner, “Blind haze separation,” In Proc. IEEE Conf. Computer Vision and Pattern Recognition, vol. 2, pp. 1984–1991, 2006.
[3] S. K. Nayar and S. G. Narasimhan, “Vision in bad weather,” In Proc. IEEE Conf. Computer Vision, vol. 2, pp. 820–827, 1999.
[4] S. G. Narasimhan and S. K. Nayar, “Interactive Deweathering of an Image Using Physical Models,” Proc. IEEE Workshop Color and Photometric Methods in Computer Vision, in Conjunction with IEEE Int’l Conf. Computer Vision, Oct. 2003.
[5] J. Kopf, B. Neubert, B. Chen, M. Cohen, D. Cohen-Or, O. Deussen, M. Uyttendaele, and D. Lischinski, “Deep Photo: Model-Based Photograph Enhancement and Viewing,” ACM Trans. Graphics, vol. 27, no. 5, pp. 116:1-116:10, 2008.
[6] R. Tan, “Visibility in Bad Weather from a Single Image,” Proc. IEEE Conf. Computer Vision and Pattern Recognition, June 2008.
[7] R. Fattal, “Single Image Dehazing,” Proc. ACM SIGGRAPH ’08, 2008.
[8] K. He, J. Sun, and X. Tang, “Single image haze removal using dark channel prior,” In Proc. IEEE Conf. Computer Vision and Pattern Recognition, 2009.
[9] J. Tarel and N. Hautiere, “Fast visibility restoration from a single color or gray level image,” In Proc. IEEE Conf. Computer Vision, pp. 2201–2208, 2009.
[10] Chunxia Xiao, Jiajia Gan, “Fast image dehazing using guided joint bilateral filter,” Vis Comput, vol. 28, pp. 713-721, 2012.
[11] S. G. Narasimhan and S. K. Nayar, “Vision and the Atmosphere,” International Journal of Computer Vision, vol. 48, pp. 233-254, 2002.
[12] K. He, J. Sun and X. Tang, “Guided image filtering,” In Computer Vision – ECCV 2010, vol. 6311, pp. 1–14, 2010.