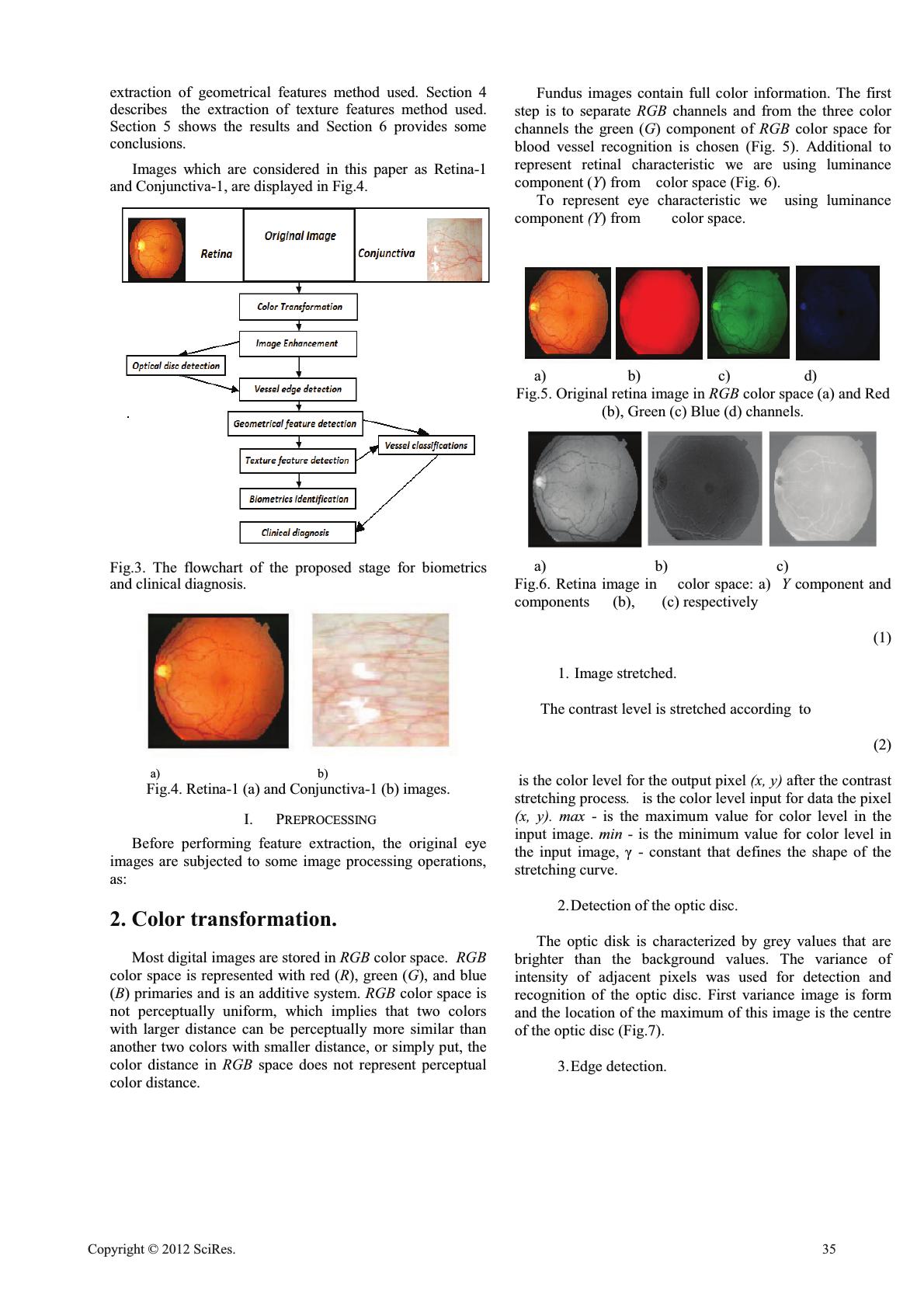

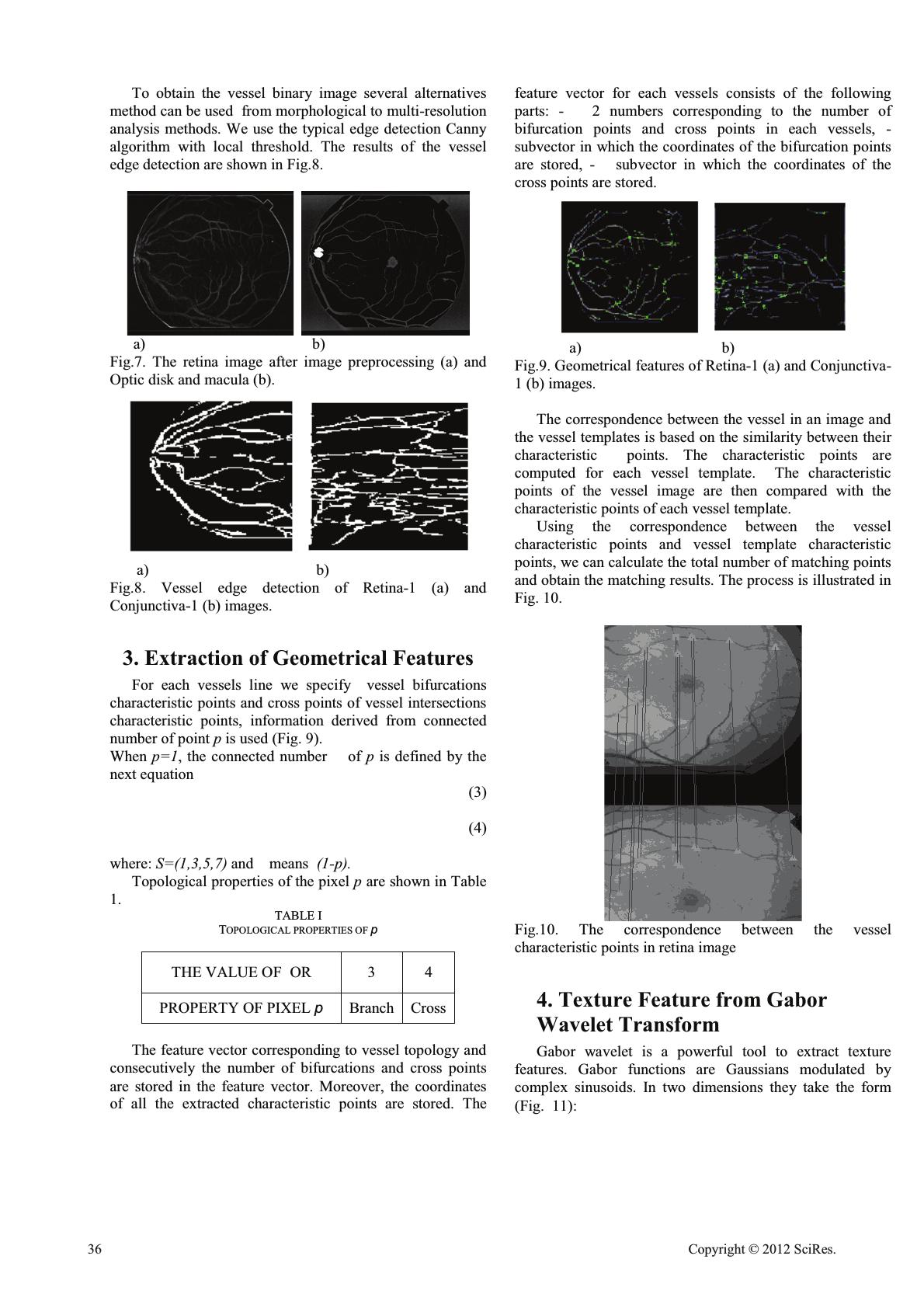

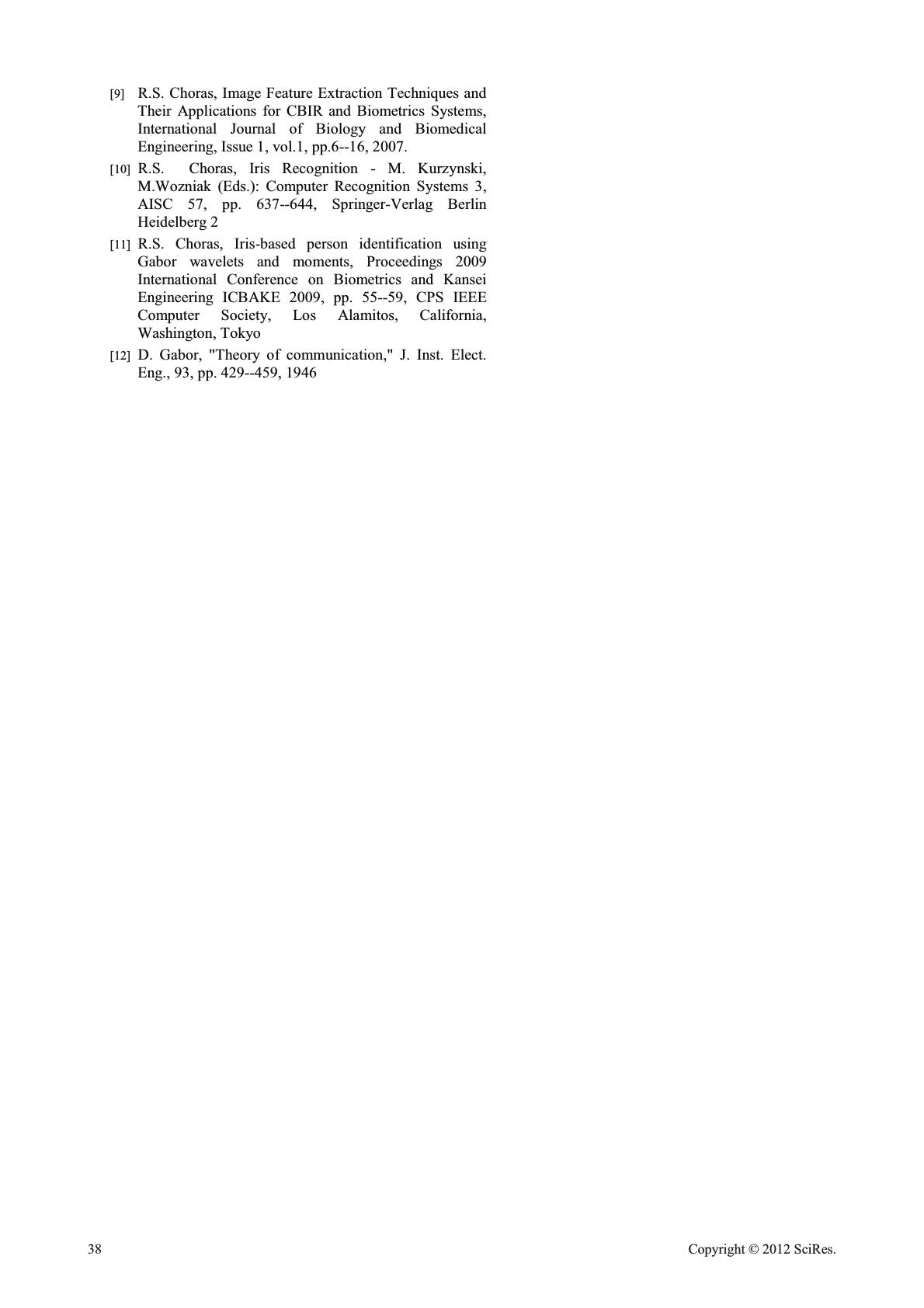

Automatic Feature Extraction from Ocular Images

Institute of Telecommunications
University of Technology & Life Sciences
85-796 Bydgoszcz, Poland
Email: choras@utp.edu.pl

Abstract: Ocular image processing is an important task in (i) biometric systems based on retina and/or sclera images, and (ii) clinical ophthalmology, for the diagnosis of diseases such as various vascular disorders. We present a general framework for processing ocular images, with particular attention to feature extraction. The method uses a set of geometrical and texture features and is based on the complex vessel structure of the retina and sclera. Feature extraction comprises image preprocessing and the location and segmentation of the region of interest (ROI). The ROI is then processed, the features are extracted, and the resulting feature vector is used for human recognition and ophthalmology diagnosis.

Keywords: Retina image, Conjunctiva image, Feature extraction, Gabor transform, Texture features

1. Introduction

The general eye anatomy is presented in Fig.1. In this paper an approach is presented in which the vessel system of the retina and conjunctiva is automatically detected and classified for human identification/verification and ophthalmology diagnosis.

Fig.1. The eye anatomy.

The retina is a thin layer of cells at the back of the eyeball of vertebrates. It is the part of the eye that converts light into nerve signals: it is lined with photoreceptors that translate light into signals to the brain. The main features of a fundus retinal image are the optic disc, the fovea, and the blood vessels. Every eye has its own unique pattern of blood vessels, and this unique structure of the retinal blood vessels has been used for biometric identification and ophthalmology diagnosis.

The conjunctiva is a thin, clear, highly vascular and moist tissue that covers the outer surface of the eye (sclera).
Conjunctival vessels can be observed on the visible part of the sclera.

A biometric system is a pattern recognition system that recognizes a person on the basis of a feature vector derived from a specific physiological or behavioral characteristic that the person possesses. The problem of resolving the identity of a person falls into two fundamentally distinct types with different inherent complexities: (i) verification and (ii) identification. Verification (also called authentication) refers to the problem of confirming or denying a person's claimed identity (Am I who I claim to be?). Identification (Who am I?) refers to the problem of establishing a subject's identity.

We propose a new modality for eye-based personal identification that uses the blood vessels in ocular images (retina, conjunctiva). The acquisition process requires cooperation from the user and is sometimes perceived as intrusive (Fig.2).

Fig.2. Typical Eye Vessel Acquisition and Biometrics System.

In diagnostic ophthalmology the main problem is the detection of the feature points of the vessel structure (bifurcations and crossovers) and their misclassification.

The paper is arranged as follows: Section 2 describes the preprocessing methods (color transformation, edge detection, etc.). Section 3 describes the extraction of geometrical features. Section 4 describes the extraction of texture features. Section 5 shows the results and Section 6 provides some conclusions. The images considered in this paper, Retina-1 and Conjunctiva-1, are displayed in Fig.4.

Open Journal of Applied Sciences, Supplement: 2012 World Congress on Engineering and Technology. Copyright © 2012 SciRes.

Fig.3. The flowchart of the proposed stage for biometrics and clinical diagnosis.

Fig.4. Retina-1 (a) and Conjunctiva-1 (b) images.

2. Preprocessing

Before feature extraction, the original eye images are subjected to the following image processing operations:

1. Color transformation. Most digital images are stored in RGB color space. RGB color space is represented with red (R), green (G), and blue (B) primaries and is an additive system. It is not perceptually uniform: two colors separated by a larger distance can be perceptually more similar than two colors separated by a smaller distance. Simply put, color distance in RGB space does not represent perceptual color distance. Fundus images contain full color information. The first step is to separate the RGB channels; of the three color channels, the green (G) component is chosen for blood vessel recognition (Fig. 5). In addition, the luminance component (Y) is used to represent retinal and eye characteristics (Fig. 6).

Fig.5. Original retina image in RGB color space (a) and Red (b), Green (c), Blue (d) channels.

Fig.6. Retina image after color transformation: Y component (a) and the two remaining components (b), (c).

(1)

2. Image stretching. The contrast level is stretched according to

(2)

where the output value is the color level of pixel (x, y) after the contrast stretching process, the input value is the color level of pixel (x, y) in the input data, max and min are the maximum and minimum color levels in the input image, and a constant defines the shape of the stretching curve.

3. Detection of the optic disc. The optic disc is characterized by grey values that are brighter than the background values. The variance of intensity of adjacent pixels is used for detection and recognition of the optic disc: first a variance image is formed, and the location of the maximum of this image is taken as the centre of the optic disc (Fig.7).

4. Edge detection.
To obtain the binary vessel image, several alternative methods can be used, from morphological to multi-resolution analysis methods. We use the standard Canny edge detection algorithm with a local threshold. The results of the vessel edge detection are shown in Fig.8.

Fig.7. The retina image after image preprocessing (a) and the optic disc and macula (b).

Fig.8. Vessel edge detection of Retina-1 (a) and Conjunctiva-1 (b) images.

3. Extraction of Geometrical Features

For each vessel line we determine two kinds of characteristic points: vessel bifurcation points and cross points of vessel intersections. They are derived from the connected number of the point p (Fig. 9). When p=1, the connected number of p is defined by the following equations

(3)

(4)

where S=(1,3,5,7) and the complemented term means (1-p). Topological properties of the pixel p are shown in Table 1.

TABLE I
TOPOLOGICAL PROPERTIES OF p

| Value of the connected number from (3) or (4) | Property of pixel p |
|---|---|
| 3 | Branch |
| 4 | Cross |

The vessel topology is encoded in the feature vector: the numbers of bifurcation and cross points are stored, together with the coordinates of all the extracted characteristic points. The feature vector for each vessel consists of the following parts:
- 2 numbers corresponding to the number of bifurcation points and cross points in the vessel,
- a subvector in which the coordinates of the bifurcation points are stored,
- a subvector in which the coordinates of the cross points are stored.

Fig.9. Geometrical features of Retina-1 (a) and Conjunctiva-1 (b) images.

The correspondence between the vessel in an image and the vessel templates is based on the similarity between their characteristic points. The characteristic points are computed for each vessel template. The characteristic points of the vessel image are then compared with the characteristic points of each vessel template.
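The classification of characteristic points and their comparison with a template can be sketched as follows. The equations for the connected number are not reproduced in this text, so the sketch assumes the Yokoi connectivity number, which is consistent with the stated S=(1,3,5,7), the complement (1-p), and the Branch/Cross values 3 and 4. The neighbour ordering, the function names, the one-pixel-wide input skeleton, and the distance tolerance used for matching are illustrative assumptions, not details taken from the paper.

```python
# Neighbour offsets (row, col) for x1..x8: start at east, go counter-clockwise.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def connectivity_number(x, eight_connected=False):
    """Yokoi connectivity number (assumed form) from neighbour values x1..x8."""
    if eight_connected:
        x = [1 - v for v in x]  # 8-connected variant works on complements (1 - x)
    total = 0
    for k in (0, 2, 4, 6):  # S = (1, 3, 5, 7) in 1-based numbering
        a, b, c = x[k], x[(k + 1) % 8], x[(k + 2) % 8]
        total += a - a * b * c
    return total


def feature_points(skeleton, eight_connected=False):
    """Return (branch points, cross points) of a binary, one-pixel-wide skeleton.

    Border pixels are skipped because they lack a full 8-neighbourhood.
    """
    rows, cols = len(skeleton), len(skeleton[0])
    branches, crosses = [], []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            if skeleton[r][c] != 1:
                continue
            neighbours = [skeleton[r + dr][c + dc] for dr, dc in OFFSETS]
            n = connectivity_number(neighbours, eight_connected)
            if n == 3:
                branches.append((r, c))
            elif n == 4:
                crosses.append((r, c))
    return branches, crosses


def feature_vector(skeleton):
    """[number of branches, number of crosses, branch coords, cross coords]."""
    branches, crosses = feature_points(skeleton)
    return [len(branches), len(crosses), branches, crosses]


def count_matches(points, template, tol=2.0):
    """Greedy one-to-one matching: a point matches the nearest unused template
    point if it lies within `tol` pixels (hypothetical matching rule)."""
    unused = list(template)
    matches = 0
    for r, c in points:
        best = None
        for i, (tr, tc) in enumerate(unused):
            d2 = (r - tr) ** 2 + (c - tc) ** 2
            if d2 <= tol * tol and (best is None or d2 < best[0]):
                best = (d2, i)
        if best is not None:
            unused.pop(best[1])
            matches += 1
    return matches
```

In this sketch the 4-connected form is the default; a skeleton whose branches meet only diagonally would need `eight_connected=True`. A practical system would also have to thin the Canny edge map to a one-pixel-wide centreline before applying the test, which is not shown here.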
Using the correspondence between the characteristic points of the vessel image and those of the vessel template, we calculate the total number of matching points and obtain the matching result. The process is illustrated in Fig. 10.

Fig.10. The correspondence between the vessel characteristic points in retina image

4. Texture Feature from Gabor Wavelet Transform

The Gabor wavelet is a powerful tool for extracting texture features. Gabor functions are Gaussians modulated by complex sinusoids. In two dimensions they take the form (Fig. 11):

(5)

where (x, y) is the pixel position in the spatial domain and the remaining parameters are the scaling parameter of the filter, the radial center frequency, and the orientation of the Gabor filter. The second term of the Gabor filter compensates for the DC value, because the cosine component has nonzero mean while the sine component has zero mean.

Fig.11. Real (a) and imaginary (b) parts of Gabor wavelets and Gabor kernels with different orientations (c).

The Gabor-filtered output of the image is obtained by convolving the image with the Gabor function for each orientation and spatial frequency (scale). For a given image, this convolution is

(6)

The normalized retina or conjunctiva images are divided into blocks of a fixed size, and each block is filtered with Eq. (6). A fixed set of filter parameters is used, which gives 12 filters. However, the Gabor feature vector built from all 12 filters is highly redundant and correlated, and its dimension is large: it grows with the size of each ROI block and with t, the number of blocks. To reduce the dimension of the feature vector without degrading the recognition performance, we select a subset of the Gabor filters (Fig. 12), leaving only 6 filters. This yields a shorter pattern vector for each block.
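A minimal sketch of block-wise Gabor filtering is given below. Because the kernel equation and the parameter values are not reproduced in this text, the functional form (an isotropic Gaussian envelope times a complex sinusoid minus a constant DC term), the symbols `sigma`, `omega`, `theta`, the kernel size, and the split of the 12 filters into 3 frequencies and 4 orientations are all assumptions made for illustration. The block response is reduced to a single magnitude at the block centre, which is a simplification rather than the paper's feature definition.

```python
import cmath
import math


def gabor_kernel(size, sigma, omega, theta):
    """Complex Gabor kernel of odd side length `size` (assumed functional form).

    Gaussian envelope times a complex sinusoid of radial frequency `omega`
    along direction `theta`, minus a constant that removes the DC response.
    """
    half = size // 2
    dc = math.exp(-((omega * sigma) ** 2) / 2.0)  # mean of the cosine component
    norm = 1.0 / (2.0 * math.pi * sigma ** 2)
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            x_rot = x * math.cos(theta) + y * math.sin(theta)
            envelope = norm * math.exp(-(x * x + y * y) / (2.0 * sigma ** 2))
            row.append(envelope * (cmath.exp(1j * omega * x_rot) - dc))
        kernel.append(row)
    return kernel


def filter_bank(size=17, sigma=2.0,
                omegas=(math.pi / 8, math.pi / 4, math.pi / 2),
                n_orientations=4):
    """Hypothetical bank: 3 frequencies x 4 orientations = 12 kernels."""
    thetas = [i * math.pi / n_orientations for i in range(n_orientations)]
    return [gabor_kernel(size, sigma, w, t) for w in omegas for t in thetas]


def block_response(block, kernel):
    """Magnitude of the filter response at the centre of a block that has the
    same size as the kernel (one inner product, not a full convolution)."""
    total = 0j
    for block_row, kernel_row in zip(block, kernel):
        for value, weight in zip(block_row, kernel_row):
            total += value * weight
    return abs(total)


def block_features(block, bank):
    """One response magnitude per filter in the bank."""
    return [block_response(block, kernel) for kernel in bank]
```

With this form, a block of constant intensity gives a response close to zero for every filter, which is the effect of the DC-compensation term, while a grating gives a strong response only for the kernel whose frequency and orientation match it. Selecting a subset of the bank, as described above, then amounts to keeping only some entries of the list returned by `filter_bank`.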
In our application we used only 3 global texture features for each of the t blocks:

(7)

(8)

(9)

where k, l are the block image dimensions. The feature vector is constructed using these values as feature components. We defined the vectors of features as follows:

(10)

The first part of the feature vector contains the number of bifurcation and crossing points with the corresponding coordinates for each of the t blocks. The second part contains the texture features.

Fig.12. Selected Gabor filter bank.

5. Conclusion

A new method for the recognition of retina vessel and conjunctiva vessel images was presented. The method is based on geometrical and Gabor features, and this paper analyses its details. Retina vessel and conjunctiva vessel images can be used for personal identification. Experimental results have demonstrated that this approach is promising for improving retina recognition for person identification. Furthermore, applied to conjunctiva vessel images, the proposed method is suitable for improving eye diagnosis.

REFERENCES

[1] K.G. Goh, M.L. Lee, W. Hsu, H. Wang, ADRIS: An Automatic Diabetic Retinal Image Screening System, Medical Data Mining and Knowledge Discovery, Springer-Verlag, 2000.
[2] W. Hsu, P.M.D.S. Pallawala, M.L. Lee, A.E. Kah-Guan, The Role of Domain Knowledge in the Detection of Retinal Hard Exudates, IEEE Computer Vision and Pattern Recognition, Hawaii, Dec 2001.
[3] H. Li, O. Chutatape, Automated feature extraction in color retinal images by a model based approach, IEEE Trans. Biomed. Eng., 2004, vol. 51, pp. 246--254.
[4] N.M. Salem, A.K. Nandi, Novel and adaptive contribution of the red channel in pre-processing of colour fundus images, Journal of the Franklin Institute, 2007, pp. 243--256.
[5] C. Kirbas, K. Quek, Vessel extraction techniques and algorithm: a survey, Proceedings of the 3rd IEEE Symposium on Bioinformatics and Bioengineering (BIBE'03), 2003.
[6] S. Chang, D. Shim, Sub-pixel Retinal Vessel Tracking and Measurement Using Modified Canny Edge Detection Method, Journal of Imaging Science and Technology, March-April 2008 issue.
[7] T. Chanwimaluang, G. Fan, An efficient algorithm for extraction of anatomical structures in retinal images, Proc. IEEE International Conference on Image Processing, pp. 1093--1096, Barcelona, Spain.
[8] H. Farzin, H. Abrishami-Moghaddam, M.S. Moin, A novel retinal identification system, EURASIP Journal on Advances in Signal Processing, vol. 2008, Article ID 280635, 2008.
[9] R.S. Choras, Image Feature Extraction Techniques and Their Applications for CBIR and Biometrics Systems, International Journal of Biology and Biomedical Engineering, Issue 1, vol. 1, pp. 6--16, 2007.
[10] R.S. Choras, Iris Recognition, in: M. Kurzynski, M. Wozniak (Eds.), Computer Recognition Systems 3, AISC 57, pp. 637--644, Springer-Verlag Berlin Heidelberg 2
[11] R.S. Choras, Iris-based person identification using Gabor wavelets and moments, Proceedings 2009 International Conference on Biometrics and Kansei Engineering ICBAKE 2009, pp. 55--59, CPS IEEE Computer Society, Los Alamitos, California, Washington, Tokyo
[12] D. Gabor, "Theory of communication," J. Inst. Elect. Eng., 93, pp. 429--459, 1946