Paper Menu >>

Journal Menu >>

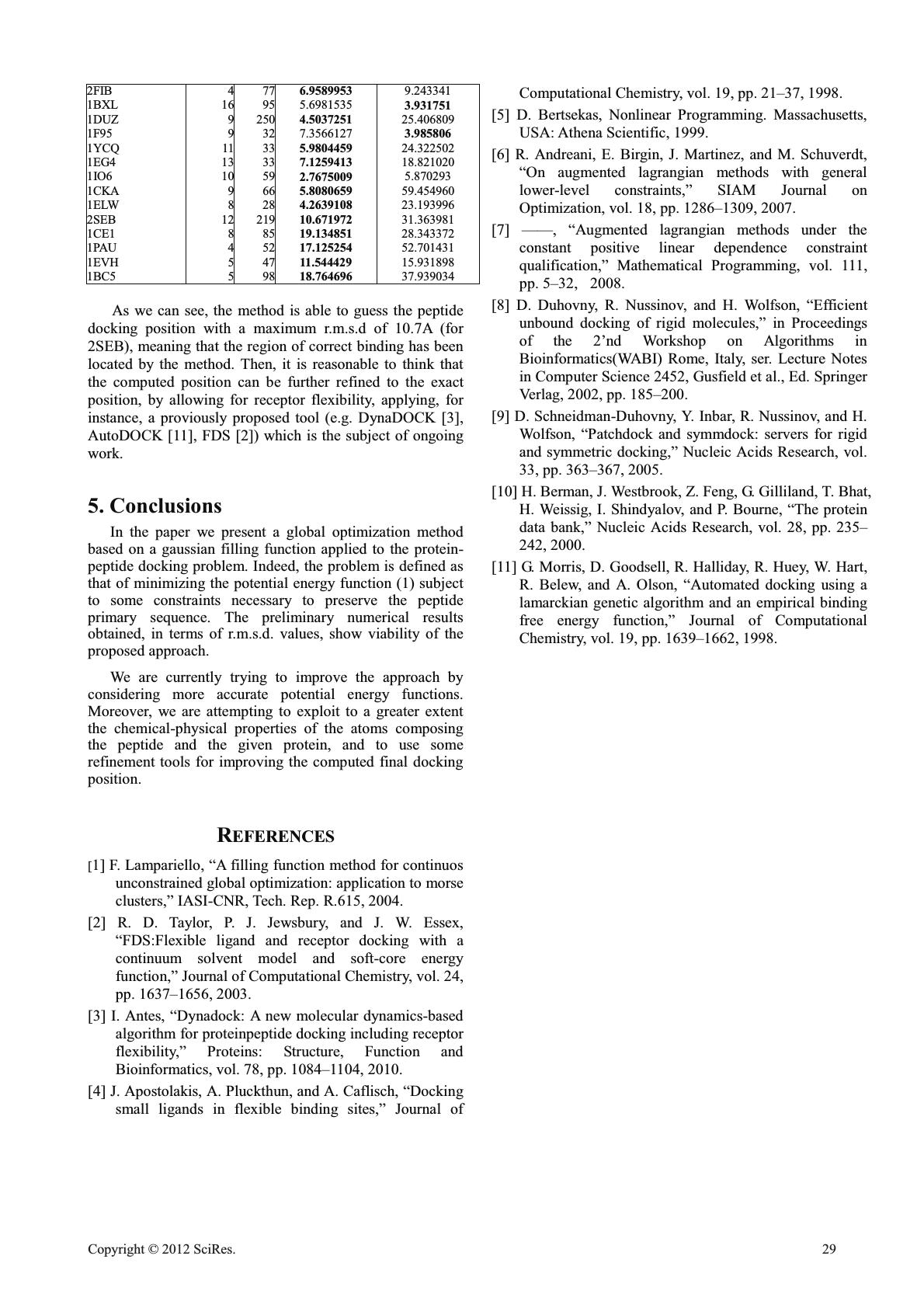

Global optimization of protein-peptide docking by a filling function method Francesco Lampariello, Giampaolo Liuzzi Istituto di Analisi dei Sistemi ed Informatica (IASI) CNR Rome, Italy giampaolo.liuzzi@iasi.cnr.it Abstract—Molecular docking programs play a crucial role in drug design and development. In recent years, much attention has been devoted to the protein-peptide docking problem in which docking of a flexible peptide with a known protein is sought. In this work we present a new docking algorithm which is based on the use of a filling function method for continuos constrained global optimization. Indeed, the protein-peptide docking position is sought by minimizing the conformational potential energy subject to constraints necessary to maintain the primary sequence of the given peptide. The resulting global optimization problem is difficult mainly for two reasons. First, the problem is large scale in constrained global optimization; second, the energy function is multivariate non-convex so that it has many local minima. The method is based on the device of modifying the original objective function once a local minimum has been attained by adding to it a filling term. This allows the overall algorithm to escape from local minima thus, ultimately, giving the algorithm ability to explore large regions in the peptide conformational space. We present numerical results on a set of benchmark docking pairs and comparison with the well-known software package for molecular docking PacthDock. Keywords-Protein-peptide docking; potential reduction; continuous global optimization 1. Introduction In this paper we address the problem of docking small peptide molecules, for example, drug candidates, onto a given protein model. Molecular docking programs play a crucial role in drug design and development. In recent years, much attention has been devoted to this problem where docking of a flexible peptide with a known protein is sought. We consider a docking algorithm based on the use of a filling function method for continuos unconstrained global optimization [1]. The correct protein-peptide docking position is obtained by minimizing the function representing the total potential energy according to a specific mathematical model. In order to preserve the primary sequence of the given peptide it is necessary to take into account some constraints on the problem variables, and then we construct the Lagrangian of the original problem. The resulting optimization problem has two main features; it is a large-scale one in constrained global optimization, and the total potential energy function has many local minima. Once a local minimum has been found, the method modifies the original objective function by adding to it a filling term. This allows the algorithm to escape from the local minimum so that it may explore large regions in the search space. The approaches proposed in the literature are based on the minimization of successive approximations of some potential energy function, obtained by introducing some suitable parameters (see, e.g. [2], [3]) in order to simplify the single minimization step. Moreover, these methods determine the docking between the peptide and a prefixed small part of the protein, namely the prefixed receptor or binding site. Most of these methods allow for receptor flexibility [4], [3], [2]. The minimization process is based on a multi-start strategy that uses the steepest descent or conjugate gradient methods as local minimization tools. 2. The Energy Model As regards the mathematical model employed, we assume that the protein tertiary structure and the peptide primary residue sequence are known and we denote by N and M the number of peptide and protein residues, respectively. In order to find the docking pocket and position of the peptide onto the protein, we consider that peptide is flexible in such a way that all its atoms have three degrees of freedom. Moreover, the given peptide primary residue sequence is not modified while calculating the docking position by means of suitable simple nonlinear constraints. Let ELJ ij =4 ij [ ( ıij rij ) 12 í ( ıij rij ) 6 ] ,EC ij =qiqj 0rij be the Lennard-Jones and Coulomb potentials, respectively. They represent the interaction between protein atom i and peptide atom j and ri j denotes their distance. Hence, we consider the following total potential energy function [3], E ( r ) = ( E LJ ij +E C ij ) (1) Open Journal of Applied Sciences Supplement:2012 world Congress on Engineering and Technology 26 Cop y ri g ht © 2012 SciRes.  where the summation is calculated over all pairs ( N×M ) of atoms. 3. Global Optimization Approach Here we consider the problem of finding the docking position of a given peptide by taking into account all the given protein atoms, so that the binding site is not prefixed, but directly determined by the algorithm. In order to avoid that the peptide primary sequence is modified, we consider the following constraints between the carbon alpha atoms of the peptide residues: r i,i+1 c =3.8,䌔i= 1,…,N í1, (2) and r i,k c 2,䌔i= 1,…,N í2,k=i+2,…,N . (3) Therefore, our algorithm computes the final docking position by searching for a global minimum of E(r) subject to (2) and (3). For the sake of clarity, let us consider the general constrained nonlinear global optimization problem globminf ( x ) s.t.x䌜F, (4) where x䌜ξ n , F= { x䌜ξn:g ( x ) 0 } , f :ξ n ĺξ, g :ξ n ĺξ m . Let us assume, as usual, that f and g are continuously differentiable functions and that f (x) is radially unbounded on the feasible set. Now, let L ( [Ȝ ) =f ( x ) ȜTg ( x ) , with Ȝ䌜ξm, be the Lagrangian function for Problem (4). A pair ( x¿Ȝ¿ ) is a Karush-Kuhn-Tucker (KKT) pair if [5] g ( x¿ ) 0,g ( x¿ ) TȜ¿ =0, Ȝ¿0, 䌛L ( x¿Ȝ¿ ) =0. (5) Under some mild regularity assumptions on the constraint functions, if x ¿ is a local minimum point of Problem (4), we have [5]: Proposition 1: Let x ¿ be a local minimum of Problem (4). Then, a vector Ȝ ¿ 䌜ξ m of KKT multipliers exists such that ( x¿Ȝ¿ ) is a KKT pair. Furthermore, the hessian of the Lagrangian function 䌛x 2L(x*,Ȝ*) is positive definite. Finally, we assume that a local optimization routine is available, namely, a routine that, given an arbitrary starting point x, provides a local minimum of Problem (4). More in particular, as local optimization engine we use the augmented Lagrangian algorithm ALGENCAN [6], [7]. A.The filling function technique Starting from a point randomly chosen in ξn, let x 0 be the local minimum reached by the local optimization routine and Ȝ0䌜ξm be the vector of corresponding KKT multipliers. In order to search over the various minima for finding that corresponding to the lowest f value, we have to escape the basin of attraction of the current minimum x0. To do this, we consider the quadratic model of Problem (4) in x0, that is, q ( x;x 0 ) =1 2 ( xíx 0 ) T H 0 ( xíx 0 ) , (6) where H 0 is the Hessian of the Lagrangian function for Problem (4) computed at x 0 Then, we construct the following gaussian-based function ij ( x;x 0 ) =ȕ 1íexp ( íĮT ( x;x 0 ) ) íȕ (7) whe r e Į and ȕ are positive scalars, and we consider the new function obtained by addingto the original function f ( x ) ࡂ f ( x;x 0 ) =f ( x ) ij ( x;x 0 ) . (8) Since q ( x 0 ;x 0 ) =0 we have ij ( x 0 ;x 0 ) =+ so that ࡂ f ( x 0 ;x 0 ) =+. Moreover, since ij ( x;x0 ) >0, for allx, ࡂ f ( x;x0 ) >f ( x ) ,䌔x. Then, ij ( x;x 0 ) ĺ0, and ࡂf ( x;x 0 ) ĺf ( x ) as 䌹xíx0䌹ĺ+, that is far from x 0 in any direction. Therefore, function (8) is substantially the original function modified only locally, i.e., obtained by “filling” f within a neighborhood of x0, whose aplitude can be varied by taking different values of the parameter Į. Thus, by applying the local search routine tostarting from a point near x0, we reach a minimum point ࡂx which can not be x 0 and which is not a minimum of f. Now, by reapplying the local search to the original Cop y ri g ht © 2012 SciRes.27  function f (x) starting from ࡂx, either the same point x 0 is reached, or a new minimum point is found. In the first case, the value of Į is not sufficiently low to escape the basin of attraction of x0, and a lower Į value is needed. B.The global optimization procedure The global optimization algorithm can be summarized in the following scheme. Global Optimization Algorithm (GOAL) Data. s0,ǻV , integers p10, and R1. Step 0. Set r= 1 and Ȝ 1. Step 1. Generate at random a point Ѻ x䌜ξ n , compute f ( Ѻx ) and apply the local search routine for minimizing f(x). Let x 0 be the minimum reached; set x ( r ) =x 0 . Step 2. If r= 1, set f min =f ( x 0 ) ,x min =x 0, else, if 䌹x0íx ( k ) 䌹<, for an index k=1,…,rí1, set Ȝ Ȝ1, and if Ȝr go to Step 1; otherwise STOP. Step 3. Set i= 1 and fl=f ( Ѻx ) . Step 4. Compute the parameters(see (9) below), whe r e s= s 0 + ( ií1 ) ǻV . Step 5. Starting fro m x 0 ǻ[minimize ࡂ f ( x;x 0 ) . Let ࡂx i be the minimum reached; minimize f(x) starting from ࡂx i , and let xi be the minimum obtained. Step 6. If 䌹x i íx ( k ) 䌹>,for allk=1,…,r, and fl<f ( xi ) ,set fl=f ( xi ) ,and xl=xi.Seti=i+ 1, and if ip go to Step 4. Step 7. If fl<f min ,set fmin=f l,and xmin =xl. If r<R, se tr=r+ 1;otherwise STOP. If f l <f ( Ѻx ) ,then set x ( r ) =x l ,x 0 =x l ,and go to Step 3; otherwise, set Ȝ 1and go to Step 1. The value of which is the lowest distance between two minimum points over which they are considered distinct, should be chosen taking into account the stopping criterion of the local search routine. Note that the procedure, differently from the classical multistart algorithm, terminates after the prefixed maximum number R of restarts have been performed, regardless the number of initial points chosen at random. Thus, it is even possible that a new restarting minimum point is always found, i.e., from Step 6 the algorithm never returns to Step 1, so that only one initial random point is employed. As regards the parameters Įand ȕ in function (7), in order to establish the amplitude of the neighborhood of a minimum xo where the function f is filled, we take Į 9 s 2, ȕ ¥ 3s, (9) where s is the distance from x0. Thus, the prefixed distance s corresponds to 3ı deviation of the Gaussian function in (6). The procedure employs p increasing values of the prefixed distance s, and correspondingly the function f is filled within neighborhoods of x 0 larger and larger. 4. Preliminary Results In this Section we present some preliminary numerical results and comparison with the well-known software package for molecular docking PatchDock [8], [9] in terms of computed protein-peptide dockings for 23 protein- peptide pairs. We implemented our method in double precision Fortran90 and run the code on an Intel core 2 duo processor with 4GB Ram under Linux operatig system by usin g ǻV V 0 =0.1, =10 í3 ,p= 25and R=50. We applied our method to proteins in complex with specific ligands which are taken from the PDB [10]. The obtained results are summarized in Table I where we report, for each protein-peptide pair: (a) the name of the PDB entry; (b) the number of residues, NPE and MPR, composing, respectively, the peptide and the protein; (c) the avarage RMSD (root mean square distance) in Angstrom (A) between the computed and the known peptide docking position obtained by running our code (GOAL) and PatchDock from ten randomly chosen starting peptide positions. In the table we use boldface to highlight a success. As it can be seen, GOAL outperforms PatchDock on 19 out of 23 pairs. T T TA A AB B BL L LE E EI I I DISTANCES BETWEEN COMPUTED AND KNOWN POSITIONS GOAL PatchDock PDB nameNPEMP R RMSD RMSD 1A30(A) 1A30(B) 1AWQ 1I31 1G3F 1VWG 1AB9 1BE9 1GUX 3 3 6 6 9 8 10 5 9 99 99 58 97 69 47 51 35 142 9.7270784 10.265117 8.6928129 7.7272010 6.9038916 8.6765423 7.9983487 6.5084529 9.7264299 15.969474 15.969474 24.954113 22.708910 6.3274 45 7.6029 35 11.081827 32.219646 32.202145 28 Cop y ri g ht © 2012 SciRes.  2FIB 1BXL 1DUZ 1F95 1YCQ 1EG4 1IO6 1CKA 1ELW 2SEB 1CE1 1PAU 1EVH 1BC5 4 16 9 9 11 13 10 9 8 12 8 4 5 5 77 95 250 32 33 33 59 66 28 219 85 52 47 98 6.9589953 5.6981535 4.5037251 7.3566127 5.9804459 7.1259413 2.7675009 5.8080659 4.2639108 10.671 972 19.134 851 17.125 254 11.54 442 9 18.764 696 9.243341 3.931751 25.406809 3.985806 24.322502 18.821020 5.870293 59.454960 23.193996 31.363981 28.343372 52.701431 15.931898 37.939034 As we can see, the method is able to guess the peptide docking position with a maximum r.m.s.d of 10.7A (for 2SEB), meaning that the region of correct binding has been located by the method. Then, it is reasonable to think that the computed position can be further refined to the exact position, by allowing for receptor flexibility, applying, for instance, a proviously proposed tool (e.g. DynaDOCK [3], AutoDOCK [11], FDS [2]) which is the subject of ongoing work. 5. Conclusions In the paper we present a global optimization method based on a gaussian filling function applied to the protein- peptide docking problem. Indeed, the problem is defined as that of minimizing the potential energy function (1) subject to some constraints necessary to preserve the peptide primary sequence. The preliminary numerical results obtained, in terms of r.m.s.d. values, show viability of the proposed approach. We are currently trying to improve the approach by considering more accurate potential energy functions. Moreover, we are attempting to exploit to a greater extent the chemical-physical properties of the atoms composing the peptide and the given protein, and to use some refinement tools for improving the computed final docking position. REFERENCES [1] F. Lampariello, “A filling function method for continuos unconstrained global optimization: application to morse clusters,” IASI-CNR, Tech. Rep. R.615, 2004. [2] R. D. Taylor, P. J. Jewsbury, and J. W. Essex, “FDS:Flexible ligand and receptor docking with a continuum solvent model and soft-core energy function,” Journal of Computational Chemistry, vol. 24, pp. 1637–1656, 2003. [3] I. Antes, “Dynadock: A new molecular dynamics-based algorithm for proteinpeptide docking including receptor flexibility,” Proteins: Structure, Function and Bioinformatics, vol. 78, pp. 1084–1104, 2010. [4] J. Apostolakis, A. Pluckthun, and A. Caflisch, “Docking small ligands in flexible binding sites,” Journal of Computational Chemistry, vol. 19, pp. 21–37, 1998. [5] D. Bertsekas, Nonlinear Programming. Massachusetts, USA: Athena Scientific, 1999. [6] R. Andreani, E. Birgin, J. Martinez, and M. Schuverdt, “On augmented lagrangian methods with general lower-level constraints,” SIAM Journal on Optimization, vol. 18, pp. 1286–1309, 2007. [7] ——, “Augmented lagrangian methods under the constant positive linear dependence constraint qualification,” Mathematical Programming, vol. 111, pp. 5–32, 2008. [8] D. Duhovny, R. Nussinov, and H. Wolfson, “Efficient unbound docking of rigid molecules,” in Proceedings of the 2’nd Workshop on Algorithms in Bioinformatics(WABI) Rome, Italy, ser. Lecture Notes in Computer Science 2452, Gusfield et al., Ed. Springer Verlag, 2002, pp. 185–200. [9] D. Schneidman-Duhovny, Y. Inbar, R. Nussinov, and H. Wolfson, “Patchdock and symmdock: servers for rigid and symmetric docking,” Nucleic Acids Research, vol. 33, pp. 363–367, 2005. [10] H. Berman, J. Westbrook, Z. Feng, G. Gilliland, T. Bhat, H. Weissig, I. Shindyalov, and P. Bourne, “The protein data bank,” Nucleic Acids Research, vol. 28, pp. 235– 242, 2000. [11] G. Morris, D. Goodsell, R. Halliday, R. Huey, W. Hart, R. Belew, and A. Olson, “Automated docking using a lamarckian genetic algorithm and an empirical binding free energy function,” Journal of Computational Chemistry, vol. 19, pp. 1639–1662, 1998. Cop y ri g ht © 2012 SciRes.29 |