Paper Menu >>

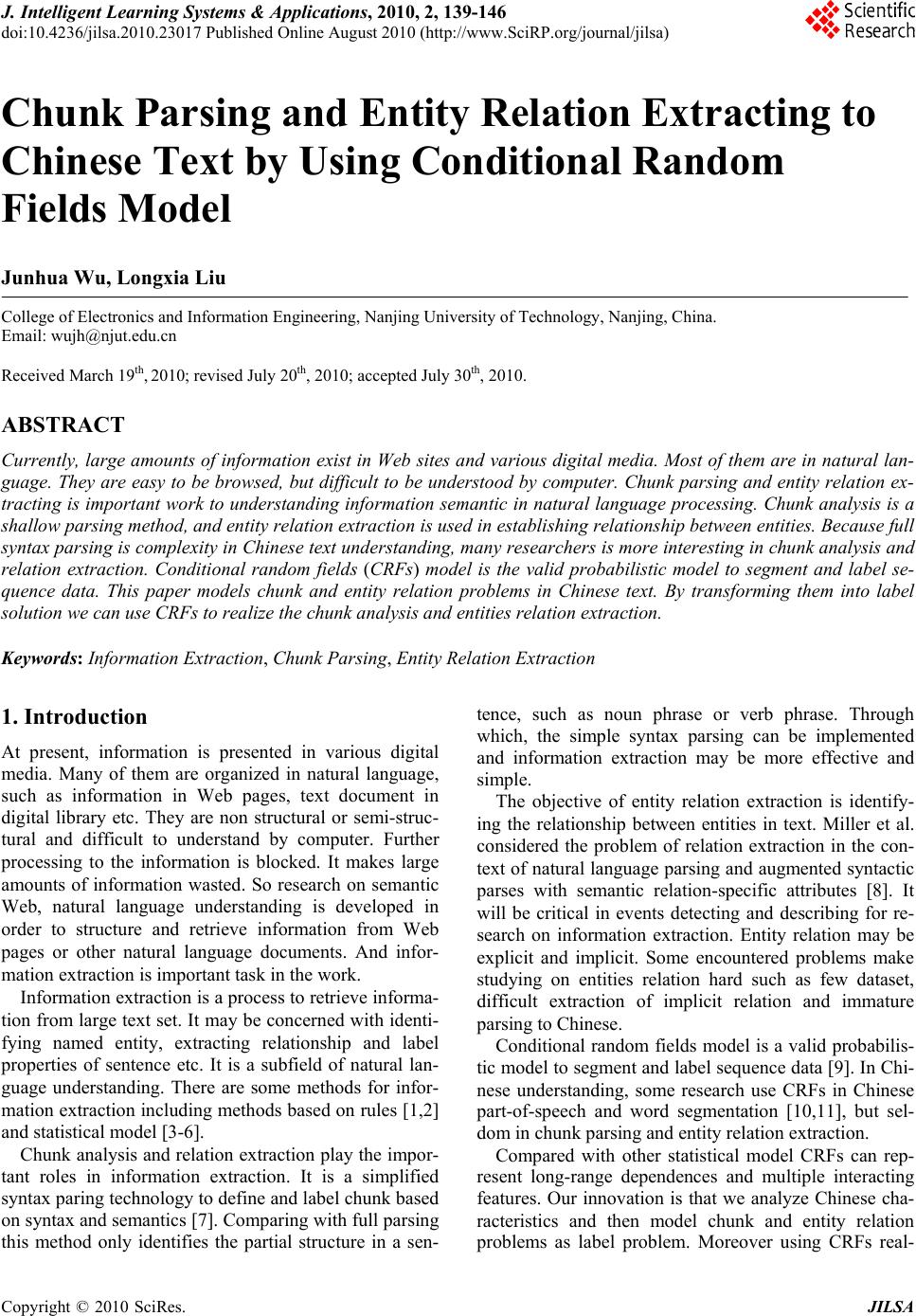

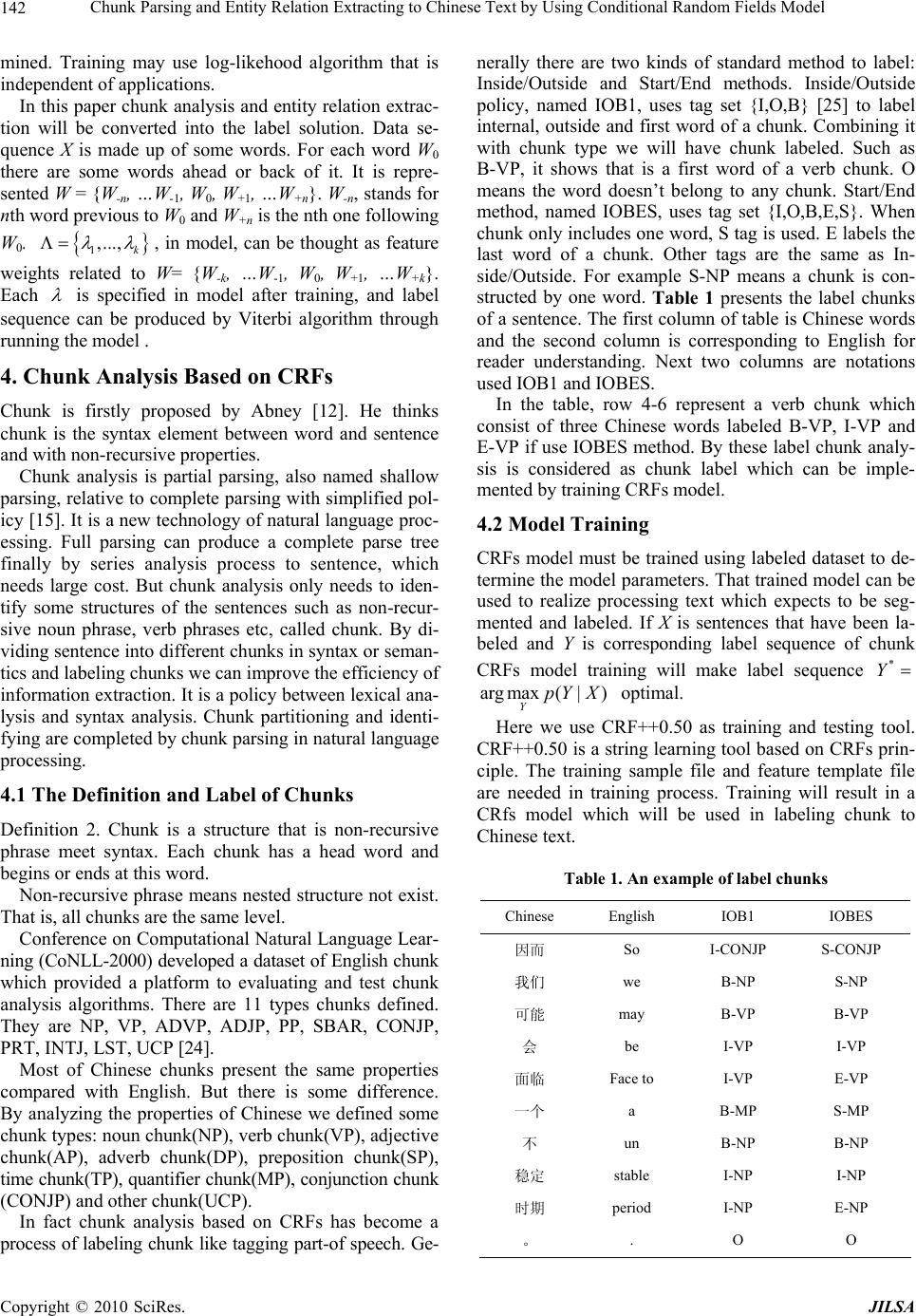

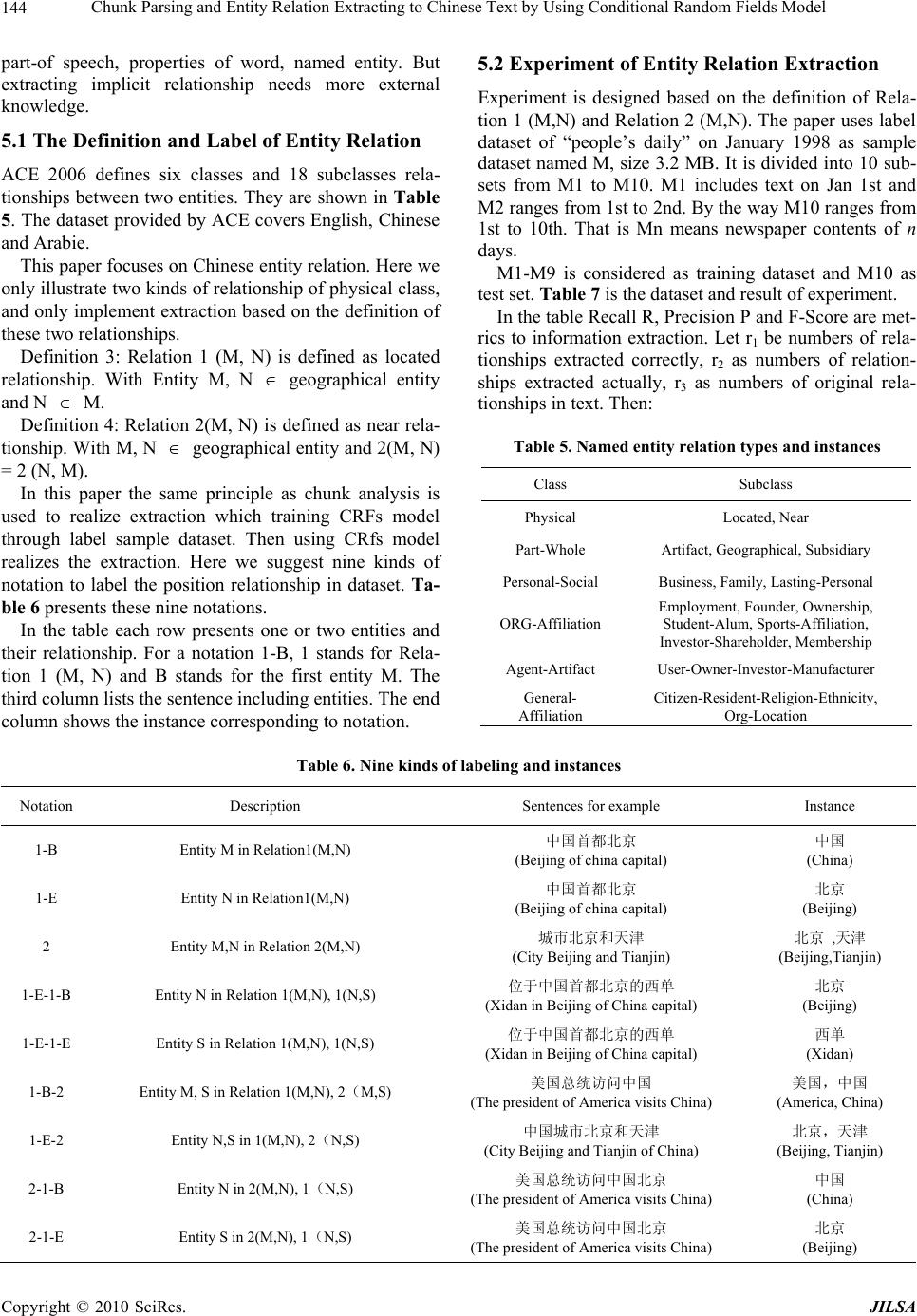

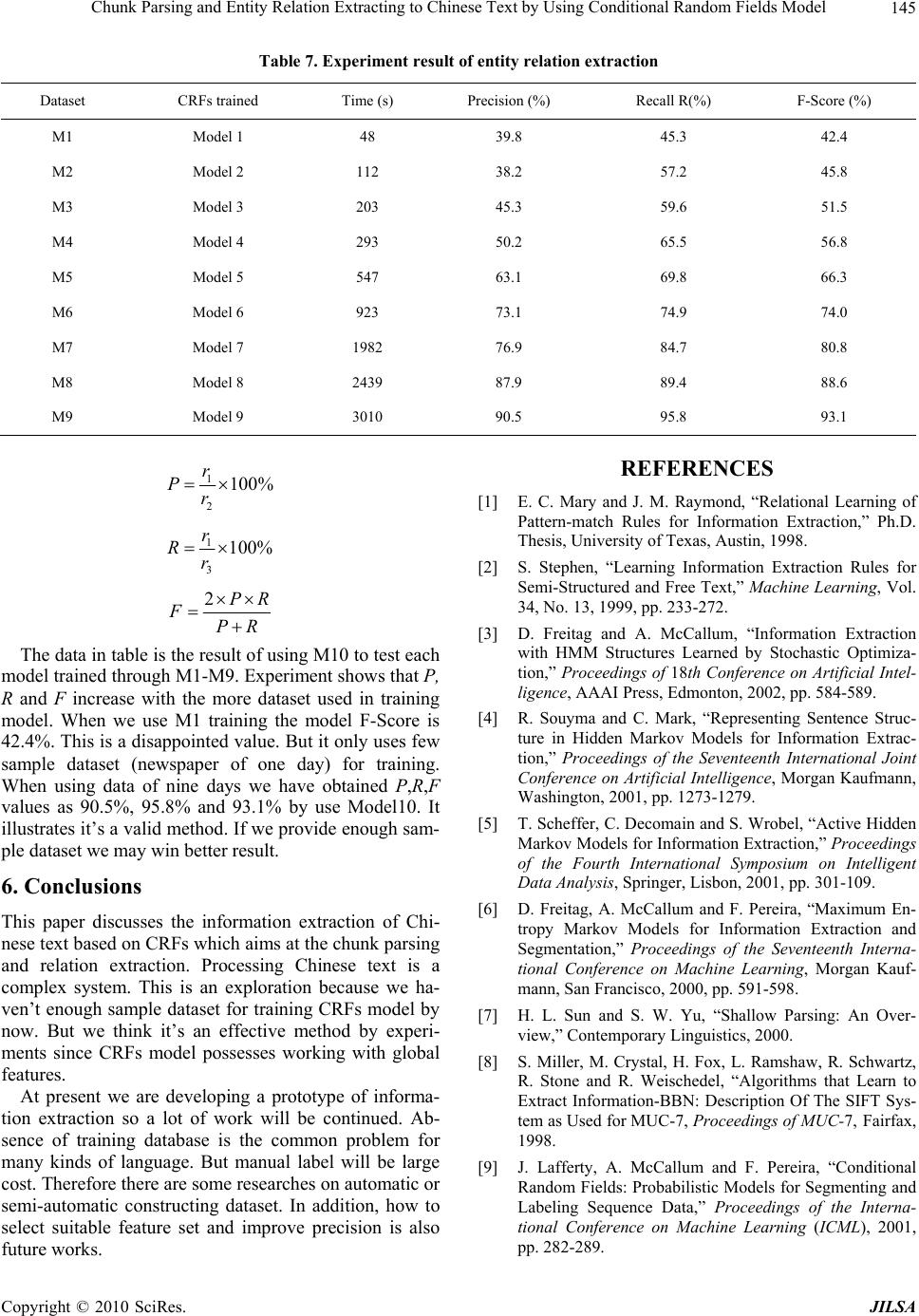

Journal Menu >>

J. Intelligent Learning Systems & Applications, 2010, 2, 139-146 doi:10.4236/jilsa.2010.23017 Published Online August 2010 (http://www.SciRP.org/journal/jilsa) Copyright © 2010 SciRes. JILSA 139 Chunk Parsing and Entity Relation Extracting to Chinese Text by Using Conditional Random Fields Model Junhua Wu, Longxia Liu College of Electronics and Information Engineering, Nanjing University of Technology, Nanjing, China. Email: wujh@njut.edu.cn Received March 19th, 2010; revised July 20th, 2010; accepted July 30th, 2010. ABSTRACT Currently, large amounts of information exist in Web sites and various digital media. Most of them are in natural lan- guage. They are easy to be browsed, but difficult to be understood by computer. Chunk parsing and entity relation ex- tracting is important work to understanding information semantic in natural language processing. Chunk analysis is a shallow parsing method, and entity relation extraction is used in establishing relationship between entities. Because full syntax parsing is complexity in Chinese text understanding, many researchers is more interesting in chunk analysis and relation extraction. Conditional random fields (CRFs) model is the valid probabilistic model to segment and label se- quence data. This paper models chunk and entity relation problems in Chinese text. By transforming them into label solution we can use CRFs to realize the chunk analysis and entities relation extraction. Keywords: Information Extraction, Chunk Parsing, Entity Relation Extraction 1. Introduction At present, information is presented in various digital media. Many of them are organized in natural language, such as information in Web pages, text document in digital library etc. They are non structural or semi-struc- tural and difficult to understand by computer. Further processing to the information is blocked. It makes large amounts of information wasted. So research on semantic Web, natural language understanding is developed in order to structure and retrieve information from Web pages or other natural language documents. And infor- mation extraction is important task in the work. Information extraction is a process to retrieve informa- tion from large text set. It may be concerned with identi- fying named entity, extracting relationship and label properties of sentence etc. It is a subfield of natural lan- guage understanding. There are some methods for infor- mation extraction including methods based on rules [1,2] and statistical model [3-6]. Chunk analysis and relation extraction play the impor- tant roles in information extraction. It is a simplified syntax paring technology to define and label chunk based on syntax and semantics [7]. Comparing with full parsing this method only identifies the partial structure in a sen- tence, such as noun phrase or verb phrase. Through which, the simple syntax parsing can be implemented and information extraction may be more effective and simple. The objective of entity relation extraction is identify- ing the relationship between entities in text. Miller et al. considered the problem of relation extraction in the con- text of natural language parsing and augmented syntactic parses with semantic relation-specific attributes [8]. It will be critical in events detecting and describing for re- search on information extraction. Entity relation may be explicit and implicit. Some encountered problems make studying on entities relation hard such as few dataset, difficult extraction of implicit relation and immature parsing to Chinese. Conditional random fields model is a valid probabilis- tic model to segment and label sequence data [9]. In Chi- nese understanding, some research use CRFs in Chinese part-of-speech and word segmentation [10,11], but sel- dom in chunk parsing and entity relation extraction. Compared with other statistical model CRFs can rep- resent long-range dependences and multiple interacting features. Our innovation is that we analyze Chinese cha- racteristics and then model chunk and entity relation problems as label problem. Moreover using CRFs real-  Chunk Parsing and Entity Relation Extracting to Chinese Text by Using Conditional Random Fields Model 140 izes the chunk analysis and relation extraction. 2. Related Work A number of approaches currently have been used for natural language tasks as part of speech tagging and en- tity extraction. They are usually based on rules or statis- tic models. Text chunk divides a text in syntactically correlated parts of words. Steven introduced chunks [12] firstly. Many machine learning approaches, such as Memory-based Learning (MBL) [13], Transformation-based Learning (TBL) [14], and Hidden Markov Models (HMMs), have been applied to text chunking [15] for parsing. Named entity is important linguistic unit. So there are many works such as named entity recognition, disam- biguation, and relationship extraction on it [16-20]. The problem of relation extraction is starting to be addressed within the natural language processing and machine learning communities. Since it is proposed, many meth- ods have been suggested. Methods based on knowledge base were used in decision of relation extraction firstly. But it is difficult to construct knowledge base. Therefore some methods based on machine learning were emerged, such as feature-based [16], kernel-based [17] method. Approach kernel-based is a valid one for relation ex- tracting, but its training and testing time is long for large amounts of data. 2.1 Model for Information Extraction A lot of research to information extraction is based on machine learning methods using statistic model because by the model sentence can be segmented and labeled. Statistical language model is a probability model which estimate probability of expected text sequence by com- puting probability. These models are concerned with Hidden Markov Model (HMM), Maximum Entropy Model (ME), Maximum Entropy Markov Model (MEMM) and conditional random fields model CRFs. Our method is also based on statistic model. Hidden Markov models (HMMs) are a powerful pro- babilistic tool for modeling sequential data, and have been applied with success to many text-related tasks, such as part-of-speech tagging, text segmentation and information extraction [6]. HMM can be considered as a finite state machine that presents states and transition chains of an application. The model is built either by manual or training. Usually extracting text information is concerned with training and labeling. Maximum likeli- hood and Baum-Welch algorithm are used to learning sample data labeled or unlabeled. And then Viterbi algo- rithm is used to label state sequence with maximum probability in text needed processing. HMM is easy to build. It needn’t large dictionary or rule sets with well flexibilities. There are many improved HMM model and their application in information extrac- tion. Freitag and McCallum’s paper [3] uses stochastic optimization to search the fittest HMM. Souyma Ray and Mark Crave [4] choose HMM to represent sentence structure. Scheffer T, Decomain C and Wrobel S [5] pro- poses a method which uses active learning to minimize the label data for HMM training. But HMM is a genera- tive model and independent hypothesis is needed, so it will ignore the context of information and lead to an un- expected result. Maximum Entropy (ME) method [21] converts the se- quence label into data classifying. Its principle can be stated as follows [22]: 1) Reformulate the different information sources as constraints to be satisfied by the target (combined) esti- mate. 2) Among all probability distributions that satisfy these constraints, choose the one that has the highest en- tropy. The advantage of ME is [21]: It makes the least as- sumptions about the distribution being modeled other than those imposed by constraints and given by the prior information. The framework is completely general in that almost any consistent piece of probabilistic information can be formulated a constraint. Moreover, if the con- straints are consistent, that is there exists a probability function which satisfies them, then amongst all probabil- ity functions which satisfy the constraints, there is a unique maximum entropy. This ME method will lost sequence properties. So a model combining ME and MM (Markov Model) is emer- ged, that is MEMM [6]. In MEMM, the HMM transition and observation func- tions are replaced by a single function P(s|s’,o) that pro- vides the probability of the current state s given the pre- vious state s’ and the current observation o. In this model, as in most applications of HMMs, the observations are given—reflecting the fact that we don’t actually care about their probability, only the probability of the state sequence (and hence label sequence) they induce. Conditional probability of transition between states is introduced in MEMM, which makes the arbitrary choice of properties possible. But MEMM is partial model which needs normalization for each node. Therefore only a localized optimization value is obtained. Also the pro- blem named length bias and label bias [9] may be caused. It means the method will ignore those not in training dataset. 2.2 Label Bias Classical discriminative Markov models, maximum en- tropy taggers (Ratnaparkhi, 1996), and MEMMs, as well as non-probabilistic sequence tagging and segmentation models with independently trained next-state classifiers are all potential victims of the label bias problem [9]. Copyright © 2010 SciRes. JILSA  Chunk Parsing and Entity Relation Extracting to Chinese Text by Using Conditional Random Fields Model 141 Consider a MEMM model shown in Figure 1 which is a finite-state acceptor for shallow parsing of two sen- tences: The robot wheels Fred round. The robot wheels are round. Here [B-NP] etc. are labels for sentence. NP, VP, ADJP and PP mean Noun Phrase, Verb Phrase, Adjective Phrase and Prep Phrase. B or I stand for word location, begin or inter of a phrase. It is obvious that sum of transition probability is 1 from a state i to other adjacent states. Because there is only one transition in state 3 and 7, while current state and observed value Fred are specified, conditional prob- ability of next state is: (4|3,)(8|7,)pFredpFred1 But this equation will face to some problems if there isn’t existing a transition from state 7 to state 8 while observe value is Fred in training dataset. Generally a low probability is specified if an unknown event exists in training dataset. But for state with single output, the fol- low equation have to be given: 7 (|7,) 1 allstates fromstate to psFred It means that the observed value Fred is ignored. This will result in that label sequence is not related to ob- served sequence. That is label bias. Proper solutions require models that account for whole state sequences at once by letting some transitions “vote” more strongly than others depending on the correspond- ing observations [9]. Lafferty suggests a global model CRFs that can solve the problems discussed before. Instead of local normal- izing CRFs can realize global processing, so a global opti- mization value will be produced. CRFs is a new graph model of probability which can represent the long-range dependences and multiple interacting features. Domain knowledge is represented conveniently by the model. McCallum use this model to process named entity recog- nition [23]. His experiments shows F value is 84.04% Figure 1. Finite-state acceptor for shallow parsing of two sentences while processing English, F value is 68.11% while proc- essing German. Hong mingcai uses CRFs to label Chi- nese part-of speech [11]. But information extraction of Chinese is still a difficult task presented in many sub- fields such as chunk analysis and entity relation extrac- tion. So this paper explores the methods about chunk analysis and entity relation extraction to Chinese text based on CRFs. 3. Conditional Random Fields (CRFs) Model Conditional random fields model is a probabilistic model to segment and label sequence data based on statistic. It is a non-directional graph model that can compute condi- tional probability of output sequence when conditioned on input sequence of model. Definition 1 [9]. Let G = (V,E) be a graph such that Y = (Yv) v2∈V , so that Y is indexed by the vertices of G. Then (X,Y) is a conditional random field in case, when conditioned on X, the random variables Y v obey the Markov property with respect to the graph: P(Yv |X, Yw ,w ≠ v) =P(Yv |X, Yw, w ~ v), where w ~ v means that w and v are neighbors in G. CRFs is a random field globally conditioned on the observation X. if X = {x1, x2, … xn} is specified as data sequence needed label then Y = {y1, y2, … yn}is the result data which have been segmented or labeled by the model. The model computes the joint distribution over the label sequence Y given X instead of only defining next state in terms of current state. The conditional probability of label sequence Y de- pends on the global interactional features with different weight. Assume 1,..., k is a vector of features, con- ditional probability, for a given X, PΛ(Y| X) is defined as follow: 1 1 1 (|)exp, , , T kk tt tk X pYXfy yXt Z (1) 1 1 exp, , , T Xkkt Ytk t Z fy yXt (2) Zx is a normalized value that makes the total probability of all state sequence is 1 for given X. 1 (,,, kt t) f yyXt is a feature function to mark the feature at position t and t-1 for observed X. Its value is between 0 and 1. 1,..., k is corresponding to the context of data se- quence and is a weight set of 1 (,,, kt t) f yyXt . If we want to use the CRFs model to obtain expected result the critical task is training model. A model trained can produce optimization P(Y|X), that is *arg max Y Y (| )pY X. It also means 1,..., k will be deter- Copyright © 2010 SciRes. JILSA  Chunk Parsing and Entity Relation Extracting to Chinese Text by Using Conditional Random Fields Model 142 mined. Training may use log-likehood algorithm that is independent of applications. In this paper chunk analysis and entity relation extrac- tion will be converted into the label solution. Data se- quence X is made up of some words. For each word W0 there are some words ahead or back of it. It is repre- sented W = {W-n, …W-1, W0, W+1, …W+n}. W-n, stands for nth word previous to W0 and W+n is the nth one following W0. 1,...,k , in model, can be thought as feature weights related to W= {W-k, …W-1, W0, W+1, …W+k}. Each is specified in model after training, and label sequence can be produced by Viterbi algorithm through running the model . 4. Chunk Analysis Based on CRFs Chunk is firstly proposed by Abney [12]. He thinks chunk is the syntax element between word and sentence and with non-recursive properties. Chunk analysis is partial parsing, also named shallow parsing, relative to complete parsing with simplified pol- icy [15]. It is a new technology of natural language proc- essing. Full parsing can produce a complete parse tree finally by series analysis process to sentence, which needs large cost. But chunk analysis only needs to iden- tify some structures of the sentences such as non-recur- sive noun phrase, verb phrases etc, called chunk. By di- viding sentence into different chunks in syntax or seman- tics and labeling chunks we can improve the efficiency of information extraction. It is a policy between lexical ana- lysis and syntax analysis. Chunk partitioning and identi- fying are completed by chunk parsing in natural language processing. 4.1 The Definition and Label of Chunks Definition 2. Chunk is a structure that is non-recursive phrase meet syntax. Each chunk has a head word and begins or ends at this word. Non-recursive phrase means nested structure not exist. That is, all chunks are the same level. Conference on Computational Natural Language Lear- ning (CoNLL-2000) developed a dataset of English chunk which provided a platform to evaluating and test chunk analysis algorithms. There are 11 types chunks defined. They are NP, VP, ADVP, ADJP, PP, SBAR, CONJP, PRT, INTJ, LST, UCP [24]. Most of Chinese chunks present the same properties compared with English. But there is some difference. By analyzing the properties of Chinese we defined some chunk types: noun chunk(NP), verb chunk(VP), adjective chunk(AP), adverb chunk(DP), preposition chunk(SP), time chunk(TP), quantifier chunk(MP), conjunction chunk (CONJP) and other chunk(UCP). In fact chunk analysis based on CRFs has become a process of labeling chunk like tagging part-of speech. Ge- nerally there are two kinds of standard method to label: Inside/Outside and Start/End methods. Inside/Outside policy, named IOB1, uses tag set {I,O,B} [25] to label internal, outside and first word of a chunk. Combining it with chunk type we will have chunk labeled. Such as B-VP, it shows that is a first word of a verb chunk. O means the word doesn’t belong to any chunk. Start/End method, named IOBES, uses tag set {I,O,B,E,S}. When chunk only includes one word, S tag is used. E labels the last word of a chunk. Other tags are the same as In- side/Outside. For example S-NP means a chunk is con- structed by one word. Table 1 presents the label chunks of a sentence. The first column of table is Chinese words and the second column is corresponding to English for reader understanding. Next two columns are notations used IOB1 and IOBES. In the table, row 4-6 represent a verb chunk which consist of three Chinese words labeled B-VP, I-VP and E-VP if use IOBES method. By these label chunk analy- sis is considered as chunk label which can be imple- mented by training CRFs model. 4.2 Model Training CRFs model must be trained using labeled dataset to de- termine the model parameters. That trained model can be used to realize processing text which expects to be seg- mented and labeled. If X is sentences that have been la- beled and Y is corresponding label sequence of chunk CRFs model training will make label sequence * Y optimal. arg max ( |) YpY X Here we use CRF++0.50 as training and testing tool. CRF++0.50 is a string learning tool based on CRFs prin- ciple. The training sample file and feature template file are needed in training process. Training will result in a CRfs model which will be used in labeling chunk to Chinese text. Table 1. An example of label chunks Chinese English IOB1 IOBES 因而 So I-CONJP S-CONJP 我们 we B-NP S-NP 可能 may B-VP B-VP 会 be I-VP I-VP 面临 Face to I-VP E-VP 一个 a B-MP S-MP 不 un B-NP B-NP 稳定 stable I-NP I-NP 时期 period I-NP E-NP 。 . O O Copyright © 2010 SciRes. JILSA  Chunk Parsing and Entity Relation Extracting to Chinese Text by Using Conditional Random Fields Model 143 The training sample file is made up of some blocks and each block represents a sentence. The block form of training sample file is presented in Table 2. There is a blank row between blocks. Each block in- cludes some tokens and each token is a label word in one row. First column is the Chinese word and next column is the English word to help understanding. Third column lists the properties of the word (may be more than one column). Last column is the tag notations. In section 3 we know some feature weights used in representing context of a word W0. So it is important to select feature set. Generally context of a word and their properties are very useful for decision of feature. That means we can use some words which are previous or succeed to word W0 as features. Features may be N-gram. Features {…W-2, W-1, W0, W1, W2…} is named Uni-gram basic features and {…W-2, W-1, W-1, W0, W0, W1, W1, W2…} is named Bi-gram basic features. Here Wn stands for a word. In addition, advanced features {… WP-2, WP-1, WP0, WP1, WP2 …} which combine the word and its property together are also used to improve result of analysis. P means property of a word. Table 3 shows various features of word “赤字” (deficit) in Table 2. The last column of Table 3 is English word corresponding to Chinese word. So an observing window of token W0 need to be given for training. The window includes W0 and some words before and after it, that is W ={ W-n,W-(n-1), …,W0, …, Wn-1, Wn}. Table 4 is an example about observing window. It is used as feature source for training vector 1,..., k . Larger window provides more context feature, but it will increase the cost of processing. Too small window may Table 2. Form of experiment dataset sample Chinese token English tokenProperty Notation 他 He PRP B-NP 认为 reckons VBZ B-VP 当前的 current JJ I-NP 赤字 deficit NN I-NP 将 will MD B-VP 缩小 narrow VB I-VP 到 to TO B-PP 仅 only RB B-NP 1800 18000 CD I-NP 万 thousands CD I-NP 9月 september NNP B-NP . . O Table 3. Feature instance Feature Feature itemFeature value Value in English W-2 认为 reckons W-1 当前的 current W0 赤字 deficit W1 将 will Uni-gram basic features W2 缩小 narrow W-1W0 当前的/赤字 current/ deficit Bi-gram basic features W0W1 赤字/将 deficit/will WP-1, 当前的/JJ current/JJ Uni-gram advanced features WP1 将/MD Will/MD Table 4. Observing window of features Feature position Description Chinese example W = W0 Token 当前的 W = W-1 Last word of token 认为 W = W+1 Next word of token 赤字 W = W0W+1 Token and next word 当前的 赤字 W = W-1W0W+1 Last word, token and next word 认为 当前的 赤字 lose important features. So we define windows size as 5, that is W = {W-2, W-1, W0, W1, W2}. The template file of features defines the feature item for training. After training 1,..., k is produced, that is CRFs model has been available. 5. Entity Relation Extraction Entity is the basic element in natural text, such as place, role, organization, thing etc. Entities play important roles in natural language text. Generally there are some rela- tionships between them. Such as locating, belong to, ad- jacent and so on. These relationships may be explicit or implicit. Implicit relationship needs reasoning by know- ledge. Entity relation extraction is the process of identi- fying the relationship between entities in text and label- ing them. It is not only an important work in information extraction but also useful in automatic answer or seman- tic network. Testing from MUC shows that many systems are able to process named entity to large of English document [26]. But entity relation extraction to Chinese may be difficult. As we known machine learning is the valid method for extracting, but it needs dataset labeled. Cur- rently, “People’s Daily” labeled by Beijing university is perhaps a better choice. This dataset has been labeled in Copyright © 2010 SciRes. JILSA  Chunk Parsing and Entity Relation Extracting to Chinese Text by Using Conditional Random Fields Model Copyright © 2010 SciRes. JILSA 144 5.2 Experiment of Entity Relation Extraction part-of speech, properties of word, named entity. But extracting implicit relationship needs more external knowledge. Experiment is designed based on the definition of Rela- tion 1 (M,N) and Relation 2 (M,N). The paper uses label dataset of “people’s daily” on January 1998 as sample dataset named M, size 3.2 MB. It is divided into 10 sub- sets from M1 to M10. M1 includes text on Jan 1st and M2 ranges from 1st to 2nd. By the way M10 ranges from 1st to 10th. That is Mn means newspaper contents of n days. 5.1 The Definition and Label of Entity Relation ACE 2006 defines six classes and 18 subclasses rela- tionships between two entities. They are shown in Table 5. The dataset provided by ACE covers English, Chinese and Arabie. This paper focuses on Chinese entity relation. Here we only illustrate two kinds of relationship of physical class, and only implement extraction based on the definition of these two relationships. M1-M9 is considered as training dataset and M10 as test set. Table 7 is the dataset and result of experiment. In the table Recall R, Precision P and F-Score are met- rics to information extraction. Let r1 be numbers of rela- tionships extracted correctly, r2 as numbers of relation- ships extracted actually, r3 as numbers of original rela- tionships in text. Then: Definition 3: Relation 1 (M, N) is defined as located relationship. With Entity M, N geographical entity and N M. Definition 4: Relation 2(M, N) is defined as near rela- tionship. With M, N geographical entity and 2(M, N) = 2 (N, M). Table 5. Named entity relation types and instances Class Subclass Physical Located, Near Part-Whole Artifact, Geographical, Subsidiary Personal-Social Business, Family, Lasting-Personal ORG-Affiliation Employment, Founder, Ownership, Student-Alum, Sports-Affiliation, Investor-Shareholder, Membership Agent-Artifact User-Owner-Investor-Manufacturer General- Affiliation Citizen-Resident-Religion-Ethnicity, Org-Location In this paper the same principle as chunk analysis is used to realize extraction which training CRFs model through label sample dataset. Then using CRfs model realizes the extraction. Here we suggest nine kinds of notation to label the position relationship in dataset. Ta- ble 6 presents these nine notations. In the table each row presents one or two entities and their relationship. For a notation 1-B, 1 stands for Rela- tion 1 (M, N) and B stands for the first entity M. The third column lists the sentence including entities. The end column shows the instance corresponding to notation. Table 6. Nine kinds of labeling and instances Notation Description Sentences for example Instance 1-B Entity M in Relation1(M,N) 中国首都北京 (Beijing of china capital) 中国 (China) 1-E Entity N in Relation1(M,N) 中国首都北京 (Beijing of china capital) 北京 (Beijing) 2 Entity M,N in Relation 2(M,N) 城市北京和天津 (City Beijing and Tianjin) 北京 ,天津 (Beijing,Tianjin) 1-E-1-B Entity N in Relation 1(M,N), 1(N,S) 位于中国首都北京的西单 (Xidan in Beijing of China capital) 北京 (Beijing) 1-E-1-E Entity S in Relation 1(M,N), 1(N,S) 位于中国首都北京的西单 (Xidan in Beijing of China capital) 西单 (Xidan) 1-B-2 Entity M, S in Relation 1(M,N), 2(M,S) 美国总统访问中国 (The president of America visits China) 美国,中国 (America, China) 1-E-2 Entity N,S in 1(M,N), 2(N,S) 中国城市北京和天津 (City Beijing and Tianjin of China) 北京,天津 (Beijing, Tianjin) 2-1-B Entity N in 2(M,N), 1(N,S) 美国总统访问中国北京 (The president of America visits China) 中国 (China) 2-1-E Entity S in 2(M,N), 1(N,S) 美国总统访问中国北京 (The president of America visits China) 北京 (Beijing)  Chunk Parsing and Entity Relation Extracting to Chinese Text by Using Conditional Random Fields Model 145 Table 7. Experiment result of entity relation extraction Dataset CRFs trained Time (s) Precision (%) Recall R(%) F-Score (%) M1 Model 1 48 39.8 45.3 42.4 M2 Model 2 112 38.2 57.2 45.8 M3 Model 3 203 45.3 59.6 51.5 M4 Model 4 293 50.2 65.5 56.8 M5 Model 5 547 63.1 69.8 66.3 M6 Model 6 923 73.1 74.9 74.0 M7 Model 7 1982 76.9 84.7 80.8 M8 Model 8 2439 87.9 89.4 88.6 M9 Model 9 3010 90.5 95.8 93.1 1 2 100% r Pr 1 3 100% r Rr 2PR FPR The data in table is the result of using M10 to test each model trained through M1-M9. Experiment shows that P, R and F increase with the more dataset used in training model. When we use M1 training the model F-Score is 42.4%. This is a disappointed value. But it only uses few sample dataset (newspaper of one day) for training. When using data of nine days we have obtained P,R,F values as 90.5%, 95.8% and 93.1% by use Model10. It illustrates it’s a valid method. If we provide enough sam- ple dataset we may win better result. 6. Conclusions This paper discusses the information extraction of Chi- nese text based on CRFs which aims at the chunk parsing and relation extraction. Processing Chinese text is a complex system. This is an exploration because we ha- ven’t enough sample dataset for training CRFs model by now. But we think it’s an effective method by experi- ments since CRFs model possesses working with global features. At present we are developing a prototype of informa- tion extraction so a lot of work will be continued. Ab- sence of training database is the common problem for many kinds of language. But manual label will be large cost. Therefore there are some researches on automatic or semi-automatic constructing dataset. In addition, how to select suitable feature set and improve precision is also future works. REFERENCES [1] E. C. Mary and J. M. Raymond, “Relational Learning of Pattern-match Rules for Information Extraction,” Ph.D. Thesis, University of Texas, Austin, 1998. [2] S. Stephen, “Learning Information Extraction Rules for Semi-Structured and Free Text,” Machine Learning, Vol. 34, No. 13, 1999, pp. 233-272. [3] D. Freitag and A. McCallum, “Information Extraction with HMM Structures Learned by Stochastic Optimiza- tion,” Proceedings of 18th Conference on Artificial Intel- ligence, AAAI Press, Edmonton, 2002, pp. 584-589. [4] R. Souyma and C. Mark, “Representing Sentence Struc- ture in Hidden Markov Models for Information Extrac- tion,” Proceedings of the Seventeenth International Joint Conference on Artificial Intelligence, Morgan Kaufmann, Washington, 2001, pp. 1273-1279. [5] T. Scheffer, C. Decomain and S. Wrobel, “Active Hidden Markov Models for Information Extraction,” Proceedings of the Fourth International Symposium on Intelligent Data Analysis, Springer, Lisbon, 2001, pp. 301-109. [6] D. Freitag, A. McCallum and F. Pereira, “Maximum En- tropy Markov Models for Information Extraction and Segmentation,” Proceedings of the Seventeenth Interna- tional Conference on Machine Learning, Morgan Kauf- mann, San Francisco, 2000, pp. 591-598. [7] H. L. Sun and S. W. Yu, “Shallow Parsing: An Over- view,” Contemporary Linguistics, 2000. [8] S. Miller, M. Crystal, H. Fox, L. Ramshaw, R. Schwartz, R. Stone and R. Weischedel, “Algorithms that Learn to Extract Information-BBN: Description Of The SIFT Sys- tem as Used for MUC-7, Proceedings of MUC-7, Fairfax, 1998. [9] J. Lafferty, A. McCallum and F. Pereira, “Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data,” Proceedings of the Interna- tional Conference on Machine Learning (ICML), 2001, pp. 282-289. Copyright © 2010 SciRes. JILSA  Chunk Parsing and Entity Relation Extracting to Chinese Text by Using Conditional Random Fields Model 146 [10] Y. Y. Luo and D. G. Huang, “Chinese Word Segmenta- tion Based on the Marginal Probabilities Generated by CRFs,” Journal of Chinese Information Processing, Vol. 23, No. 5, 2009, pp. 3-8. [11] M.-C. Hong, K. Zhang, J. Tang and J.-Z. Li “A Chinese Part-of-Speech Tagging Approach Using Conditional Random Fields,” Computer Science, Vol. 33, No. 10, 2006, pp. 148-152. [12] S. P. Abney and C. Tenny, “Parsing by Chunks. Principle based Parsing: Computation and Psycholinguistics,” Klu- wer Academic Publishers, Dordrecht, 1991, pp. 257-278. [13] F. Erik, “Tjong Kim Sang and Sabine Buch holz. Intro- duction to the Conll-2000 Shared Task: Chunking,” Pro- ceedings of CoNLL-2000 and LLL2000, Lisbin, 2000, pp. 127-132. [14] L. Ramshaw and M. Marcus, “Text Chunking Using Transformation-Based Learning,” In: D. Yarovsky and K. Church, Eds., Proceedings of the Third Workshop on Very Large Corpora, Association for Computational Lin- guistics, Somerset, 1995, pp. 82-94. [15] J. Hammerton, M. Osborne, S. Armstrong and W. Daelemans, “Introduction to Special Issue on Machine Learning Approaches to Shallow Parsing,” Journal of Machine Learning Research, Vol. 2, No. 3, 2002, pp. 551-558. [16] K. Nanda, “Combining Lexical, Syntactic and Semantic Features with Maximum Entropy Models for Extracting Relations,” Proceedings of the ACL 2004 on Interactive poster and demonstration sessions, Barcelona, 2004, pp. 22-25. [17] D. Zelenko, C. Aone and A. Richardella, “Kernel Meth- ods for Relation Extraction,” Journal of Machine Learn- ing Research, Vol. 3, 2003, pp. 1083-1106. [18] C. Whitelaw, A. Kehlenbeck, N. Petrovic, et al., “Web- Scale Named Entity Recognition,” Proceeding of ACM 17th Conference on Information and Knowledge Man- agement, Napa Valley, 2008, pp. 123-132. [19] Z. Q. Chen, D. V. Kalashnikov and S. Mehrotra, “Adap- tive Graphical Approach to Entity Resolution,” Proceed- ings of ACM IEEE Joint Conference on Digital Libraries, Vancouver, 2007, pp. 204-213. [20] X. P. Han and J. Zhao, “Person Name Disambiguation Based on Web-Based Person Mining and Categorization,” 2nd Web People Search Evaluation Workshop in con- junction with WWW2009, Madrid, 2009. [21] S. D. Pietra, R. L. Mercer and S. Roukos, “Adaptive Lan- guage Modeling Using Minimum Discriminate Estima- tion,” Proceedings of the Speech and Natural Language DARPA Workshop, San Francisco, 1992, pp. 103-106. [22] R. Rosenfeld, “Adaptive Statistical Language Modeling: A Maximum Entropy Approach,” Ph.D. Thesis, School of Computer Science, Carnegie Mellon University, Pitts- burgh, 1994. [23] A. McCallum and W. Li, “Early Results for Named En- tity Recognition with Conditional Random Fields Feature Induction and Web-Enhanced Lexicons,” Proceedings of CoNLL-2003 Association for Computational Linguistics, Daelemans, 2003, pp. 188-191. [24] K. Tjong, E. F. Sang and S. Buchholz, “Introduction to the CoNLL-2000 Shared Task: Chunking,” Proceedings of CoNLL-2000 and LLL-2000 Association for Computa- tional Linguistics, Lisbon, 2000, pp. 127-132. [25] K. Tjong, E. F. Sang and J. Veenstra, “Representing Text Chunks,” Proceedings of EACL’99, Association for Computational Linguistics, Bergen, 1995, pp. 173-179. [26] J. Zhao, “A Survey on Named Entity Recognition, Dis- ambiguation and Cross 2 Lingual Conference Resolu- tion,” Journal of Chinese Information Processing, Vol. 23, No. 2 March 2009, pp. 3-17. Copyright © 2010 SciRes. JILSA |