Applied Mathematics

Vol.06 No.08(2015), Article ID:58451,17 pages

10.4236/am.2015.68131

Formulation of a Preconditioned Algorithm for the Conjugate Gradient Squared Method in Accordance with Its Logical Structure

Shoji Itoh1, Masaaki Sugihara2

1Division of Science, School of Science and Engineering, Tokyo Denki University, Saitama, Japan

2Department of Physics and Mathematics, College of Science and Engineering, Aoyama Gakuin University, Kanagawa, Japan

Email: itosho@acm.org

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution-NonCommercial International License (CC BY-NC).

http://creativecommons.org/licenses/by-nc/4.0/

Received 22 June 2015; accepted 27 July 2015; published 30 July 2015

ABSTRACT

In this paper, we propose an improved preconditioned algorithm for the conjugate gradient squared method (improved PCGS) for the solution of linear equations. Further, the logical structures underlying the formation of this preconditioned algorithm are demonstrated via a number of theorems. This improved PCGS algorithm retains some mathematical properties that are associated with the CGS derivation from the bi-conjugate gradient method under a non-preconditioned system. A series of numerical comparisons with the conventional PCGS illustrate the enhanced effectiveness of our improved scheme with a variety of preconditioners. This logical structure underlying the formation of the improved PCGS brings a spillover effect from various bi-Lanczos-type algorithms with minimal residual operations, because these algorithms were constructed by adopting the idea behind the derivation of CGS. These bi-Lanczos-type algorithms are very important because they are often adopted to solve the systems of linear equations that arise from large-scale numerical simulations.

Keywords:

Linear Systems, Krylov Subspace Method, Bi-Lanczos Algorithm, Preconditioned System, PCGS

1. Introduction

In scientific and technical computation, natural phenomena or engineering problems are described through numerical models. These models are often reduced to a system of linear equations:

(1)

(1)

where A is a large, sparse coefficient matrix of size ,

,

is the solution vector, and

is the solution vector, and

is the right-hand side (RHS) vector.

is the right-hand side (RHS) vector.

The conjugate gradient squared (CGS) method is a way to solve (1) [1] . The CGS method is a type of bi- Lanczos algorithm that belongs to the class of Krylov subspace methods.

Bi-Lanczos-type algorithms are derived from the bi-conjugate gradient (BiCG) method [2] [3] , which assumes the existence of a dual system

(2)

(2)

Characteristically, the coefficient matrix of (2) is the transpose of A. In this paper, we term (2) a “shadow system”.

Bi-Lanczos-type algorithms have the advantage of requiring less memory than Arnoldi-type algorithms, another class of Krylov subspace methods.

The CGS method is derived from BiCG. Furthermore, various bi-Lanczos-type algorithms, such as BiCGStab [4] , GPBiCG [5] and so on, have been constructed by adopting the idea behind the derivation of CGS. These bi-Lanczos-type algorithms are very important because they are often adopted to solve systems in the form of (1) that arise from large-scale numerical simulations.

Many iterative methods, including bi-Lanczos algorithms, are often applied together with some preconditioning operation. Such algorithms are called preconditioned algorithms; for example, preconditioned CGS (PCGS). The application of preconditioning operations to iterative methods effectively enhances their performance. Indeed, the effects attributable to different preconditioning operations are greater than those produced by different iterative methods [6] . However, if a preconditioned algorithm is poorly designed, there may be no beneficial effect from the preconditioning operation.

Consequently, PCGS holds an important position within the Krylov subspace methods. In this paper, we identify a mathematical issue with the conventional PCGS algorithm, and propose an improved PCGS1. This improved PCGS algorithm is derived rationally in accordance with its logical structure.

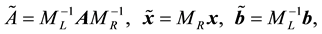

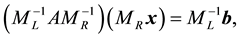

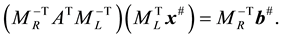

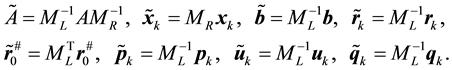

In this paper, preconditioned algorithm and preconditioned system refer to solving algorithms described with some preconditioning operator M (or preconditioner, preconditioning matrix) and the system converted by the operator based on M, respectively. These terms never indicate the algorithm for the preconditioning operation itself, such as “incomplete LU decomposition”, “approximate inverse”, and so on. For example, for a preconditioned system, the original linear system (1) becomes

(3)

(3)

(4)

(4)

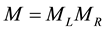

under the preconditioner

. Here, the matrix and the vector in the preconditioned system are denoted by the tilde

. Here, the matrix and the vector in the preconditioned system are denoted by the tilde . However, the conversions in (3) and (4) are not implemented; rather, we construct the preconditioned algorithm that is equivalent to solving (3).

. However, the conversions in (3) and (4) are not implemented; rather, we construct the preconditioned algorithm that is equivalent to solving (3).

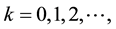

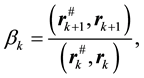

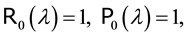

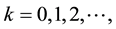

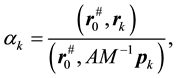

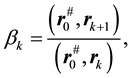

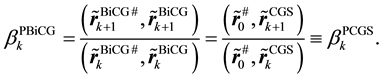

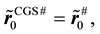

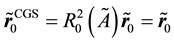

This paper is organized as follows. Section 2 provides an overview of the derivation of the CGS method and the properties of two scalar coefficients ( and

and ). This forms an important part of our argument for the preconditioned BiCG and PCGS algorithms in the next section. Section 3 introduces the PCGS algorithm. An issue with the conventional PCGS algorithm is identified, and an improved algorithm is proposed. In Section 4, we present some numerical results to demonstrate the effect of the improved PCGS algorithm with a variety of preconditioners. As a consequence, the effectiveness of the improved algorithm is clearly established. Finally, our conclusions are presented in Section 5.

). This forms an important part of our argument for the preconditioned BiCG and PCGS algorithms in the next section. Section 3 introduces the PCGS algorithm. An issue with the conventional PCGS algorithm is identified, and an improved algorithm is proposed. In Section 4, we present some numerical results to demonstrate the effect of the improved PCGS algorithm with a variety of preconditioners. As a consequence, the effectiveness of the improved algorithm is clearly established. Finally, our conclusions are presented in Section 5.

2. Derivation of the CGS Method and Preconditioned Algorithm of the BiCG Method

In this section, we derive the CGS method from the BiCG method, and introduce the preconditioned BiCG algorithm.

2.1. Structure of the BiCG Method

BiCG [2] [3] is an iterative method for linear systems in which the coefficient matrix A is nonsymmetric. The algorithm proceeds as follows:

Algorithm 1. BiCG method:

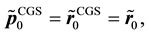

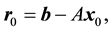

is an initial guess,

is an initial guess,

, set

, set ,

,

, e.g.,

, e.g.,

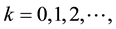

For

End Do

BiCG implements the following Theorems.

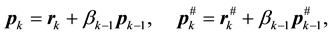

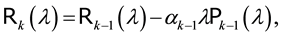

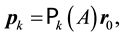

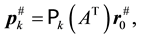

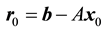

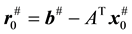

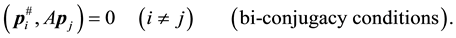

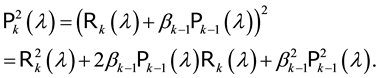

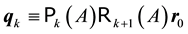

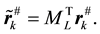

Theorem 1 (Hestenes et al. [8] , Sonneveld [1] , and others.)2 For a non-preconditioned system, there are recurrence relations that define the degree k of the residual polynomial

where

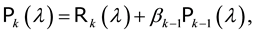

Using the polynomials of Theorem 1, the residual vector for the linear system (1) and the shadow residual vector for the shadow system (2) can be written as

These probing direction vectors are represented by

where

Theorem 2 (Fletcher [2] ) The BiCG method satisfies the following conditions:

2.2. Derivation of the CGS Method

The CGS method is derived by transforming the scalar coefficients in the BiCG method to avoid the

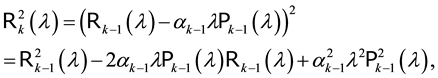

and the following theorem can be applied.

Theorem 3 (Sonneveld [1] ) The CGS coefficients

Proof. We apply

to (16) and (17). Then,

□

The CGS method is derived from BiCG by Theorem 4.

3In this paper, if we specifically distinguish this algorithm, we write

4In this paper, we use the superscript “CGS” alongside

Theorem 4 (Sonneveld [1] ) The CGS method is derived from the linear system’s recurrence relations in the BiCG method under the property of equivalence between the coefficients

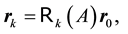

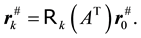

the solution vector

Proof. The coefficients

Further, we can apply

Thus, we have derived the CGS method4.

Algorithm 2. CGS method:

For

End Do

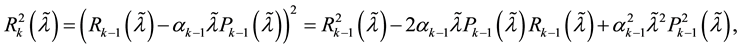

The following Proposition 5 and Corollary 1 are given as a supplementary explanation for Algorithm 2. These are almost trivial, but are comparatively important in the next section’s discussion.

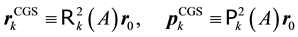

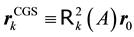

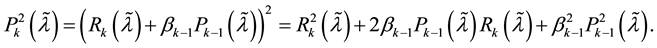

Proposition 5 There exist the following relations:

where

Proof. Equation (23) follows because (18) for

Equation (24) is derived as follows. Applying (18) to (16), we obtain

This equation shows that the inner product of the CGS on the right is obtained from the inner product of the BiCG on the left. Therefore,

Hereafter,

Corollary 1. There exists the following relation:

where

2.3. Derivation of Preconditioned BiCG Algorithm

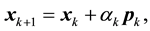

In this subsection, the preconditioned BiCG algorithm is derived from the non-preconditioned BiCG method (Algorithm 1). First, some basic aspects of the BiCG method under a preconditioned system are expressed, and a standard preconditioned BiCG algorithm is given.

When the BiCG method (Algorithm 1) is applied to linear equations under a preconditioned system:

we obtain a “BiCG method under a preconditioned system” (Algorithm 3). We denote this as “PBiCG”.

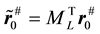

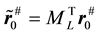

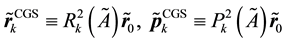

In this paper, matrices and vectors under the preconditioned system are denoted with “

Algorithm 3. BiCG method under the preconditioned system:

For

End Do

5If we wish to emphasize different methods, a superscript is applied to the relevant vectors to denote the method, such as

6We represent the polynomials R and P in italic font to denote a preconditioned system.

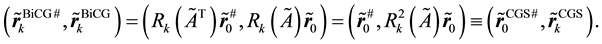

We now state Theorem 6 and Theorem 7, which are clearly derived from Theorem 1 and Theorem 2, respectively.

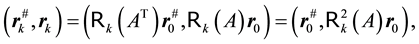

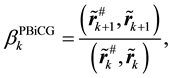

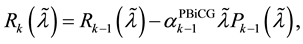

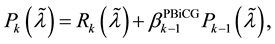

Theorem 6. Under the preconditioned system, there are recurrence relations that define the degree k of the residual polynomial

where

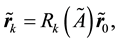

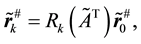

Using the polynomials of Theorem 6, the residual vectors of the preconditioned linear system (25) and the shadow residual vectors of the following preconditioned shadow system:

can be represented as

respectively. The probing direction vectors are given by

respectively. Under the preconditioned system,

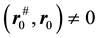

Remark 1. The shadow systems given by (31) do not exist, but it is very important to construct systems in which the transpose of the matrix

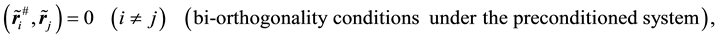

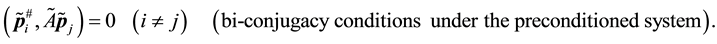

Theorem 7. The BiCG method under the preconditioned system satisfies the following conditions:

Next, we derive the standard PBiCG algorithm. Here, the preconditioned linear system (25) and its shadow system (31) are formed as follows:

Definition 1. On the subject of the PBiCG algorithm, the solution vector is denoted as

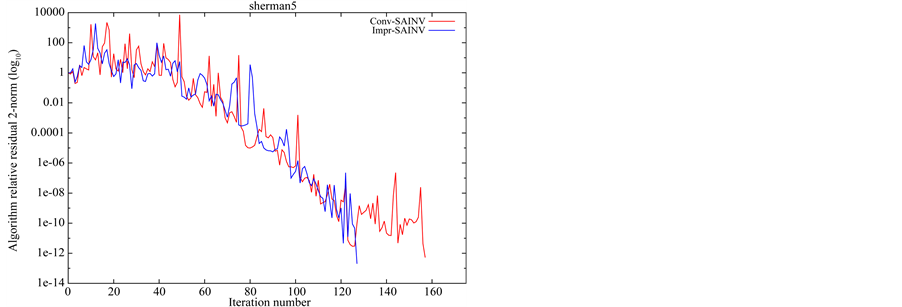

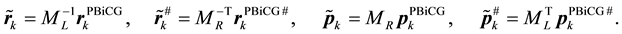

Using this notation, each vector of the BiCG under the preconditioned system given by Algorithm 3 is converted as below:

Substituting the elements of (40) into (36) and (37), we have

Consequently, (26) and (27) become

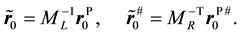

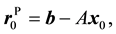

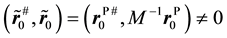

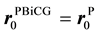

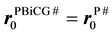

Before the iterative step, we give the following Definition 2.

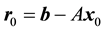

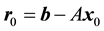

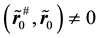

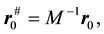

Definition 2. For some preconditioned algorithms, the initial residual vector of the linear system is written as

We adopt the following preconditioning conversion after (40).

Consequently, we can derive the following standard PBiCG algorithm [9] [10] .

Algorithm 4. Standard preconditioned BiCG algorithm:

For

End Do

Algorithm 4 satisfies

Remark 2. Because we apply a preconditioning conversion such as

In this section, we have shown that

3. Improved PCGS Algorithm

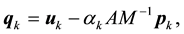

In this section, we first explain the derivation of PCGS, and present the conventional PCGS algorithm. We identify an issue with this conventional PCGS algorithm, and propose an improved PCGS that overcomes this issue.

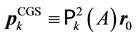

3.1. Derivation of PCGS Algorithm

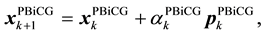

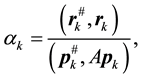

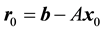

Figure 1 illustrates the logical structure of the solving methods and preconditioned algorithms discussed in this paper.

Figure 1. Relation between BiCG, CGS, and their preconditioned algorithms.

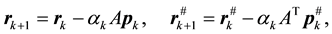

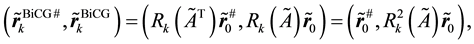

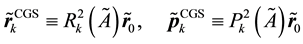

Typically, PCGS algorithms are derived via a “CGS method under a preconditioned system” (Algorithm 5).

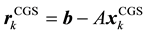

Algorithm 5 is derived by applying the CGS method (Algorithm 2) to the preconditioned linear system (25). In this section, the vectors and

Algorithm 5. CGS method under the preconditioned system:

For

End Do

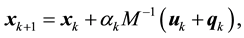

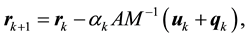

The conventional PCGS algorithm (Algorithm 6) is derived via the CGS method, as shown in Figure 1, but this algorithm does not reflect the logic of subsection 2.2 in its preconditioning conversion. In contrast, our proposed improved PCGS algorithm (Algorithm 7) directly applies the derivation from BiCG to CGS to the PBiCG algorithm, thus maintaining the logic from subsection 2.2.

3.2. Conventional PCGS and Its Issue

The conventional PCGS algorithm is adopted in many documents and numerical libraries [4] [9] [11] . It is derived by applying the following preconditioning conversion to Algorithm 5:

This gives the following Algorithm 6 (“Conventional PCGS” in Figure 1).

Algorithm 6. Conventional PCGS algorithm:

For

End Do

This PCGS algorithm was described in [4] , which proposed the BiCGStab method, and has been employed as a standard approach as affairs stand now.

This version of PCGS seems to be a compliant algorithm on the surface, because the operation

This is different to the conversion given by (40), and we cannot obtain equivalent coefficients to

3.3. Derivation of the CGS Method from PBiCG

In this subsection, we present an improved PCGS algorithm (“Improved PCGS” in Figure 1). We formulate this algorithm by applying the CGS derivation process to the BiCG method directly under the preconditioned system (PBiCG, Algorithm 3).

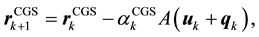

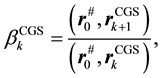

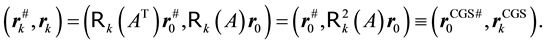

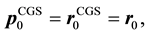

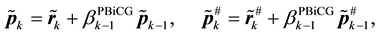

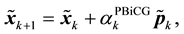

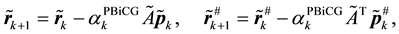

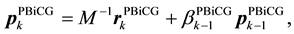

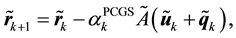

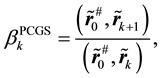

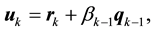

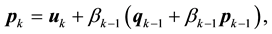

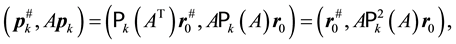

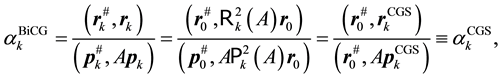

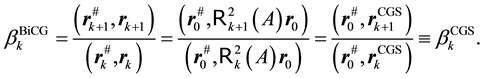

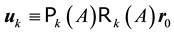

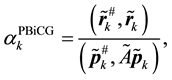

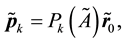

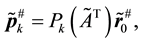

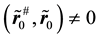

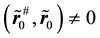

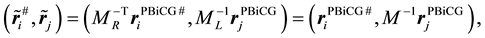

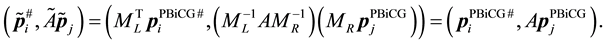

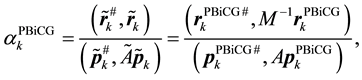

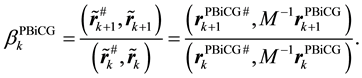

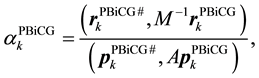

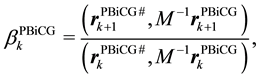

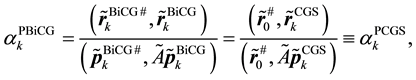

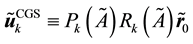

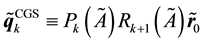

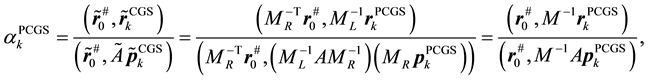

The polynomials (32)-(35) of the residual vectors and the probing direction vectors in PBiCG are substituted for the numerators and denominators of

and apply the following Theorem 8.

Theorem 8. The PCGS coefficients

Proof. We apply

to (54) and (55). Then,

□

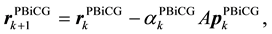

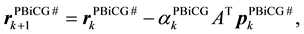

The PCGS method is derived from PBiCG using Theorem 9.

Theorem 9. The PCGS method is derived from the linear system’s recurrence relations in the PBiCG method under the property of equivalence between the coefficients

Proof. The coefficients

Further, we can apply

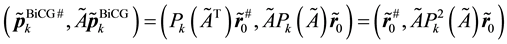

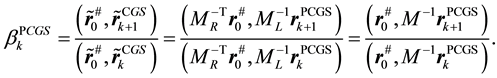

The following Proposition 10 and Corollary 2 are given as a supplementary explanation under the preconditioned system.

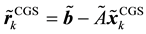

Proposition 10. There exist the following relations:

where

Proof. Equation (61) follows because (56) for

Equation (62) is derived as follows. Applying (56) to (54), we obtain

This equation shows that the inner product of the PCGS on the right is obtained from the inner product of the PBiCG on the left. Therefore,

Hereafter,

Corollary 2. There exists the following relation:

where

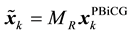

The CGS preconditioning conversion given by

7We apply the superscript “PCGS” to

As a consequence, the following improved PCGS algorithm is derived7.

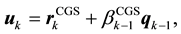

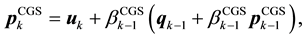

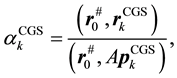

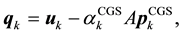

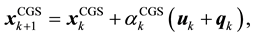

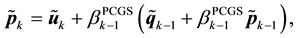

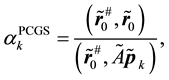

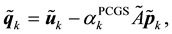

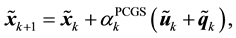

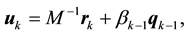

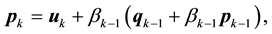

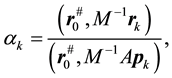

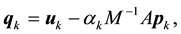

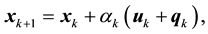

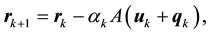

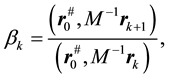

Algorithm 7. Improved preconditioned CGS algorithm:

For

End Do

Algorithm 7 can also be derived by applying the following preconditioning conversion to Algorithm 5. Here, we treat the preconditioning conversions of

The number of preconditioning operations in the iterative part of Algorithm 7 is the same as that in Algorithm 6.

4. Numerical Experiments

In this section, we compare the conventional and improved PCGS algorithms numerically.

The test problems were generated by building real unsymmetric matrices corresponding to linear systems taken from the Tim Davis collection [12] and the Matrix Market [13] . The RHS vector

The numerical experiments were executed on a DELL Precision T7400 (Intel Xeon E5420, 2.5 GHz CPU, 16 GB RAM) running the Cent OS (kernel 2.6.18) and the Intel icc 10.1 compiler.

The results using the non-preconditioned CGS are listed in Table 1.

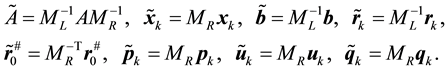

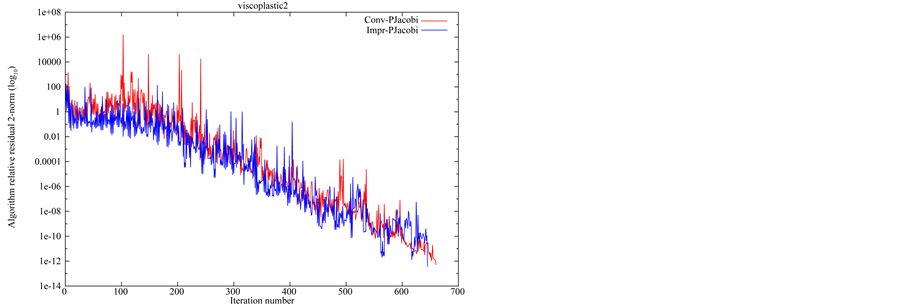

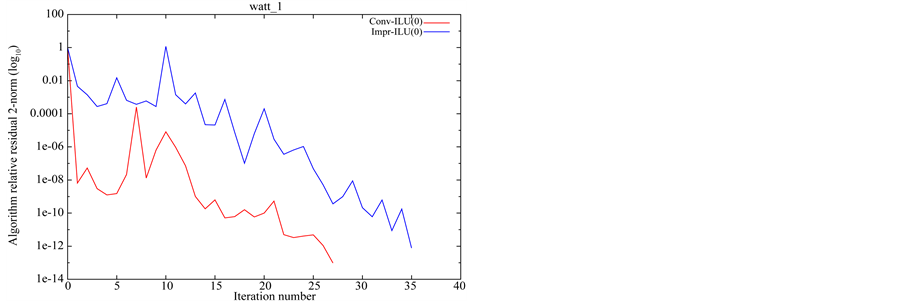

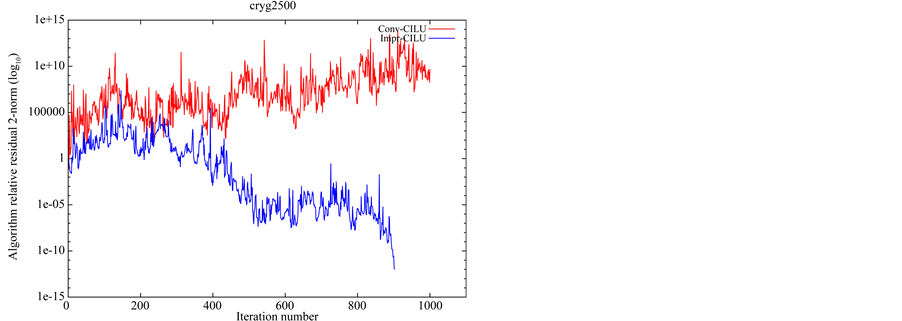

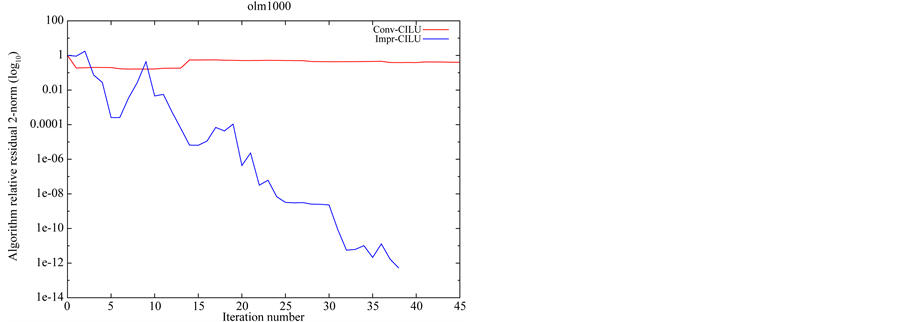

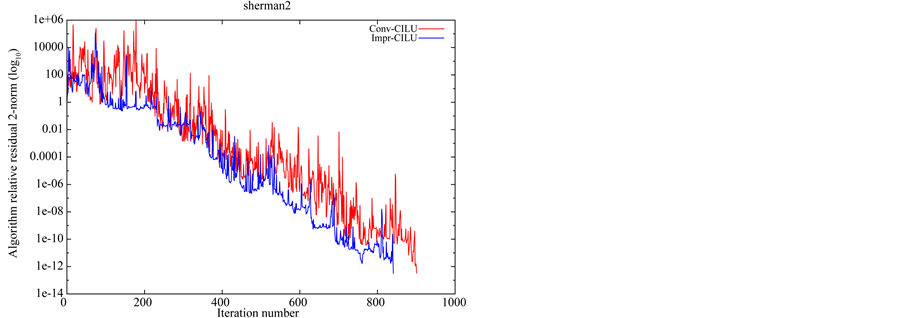

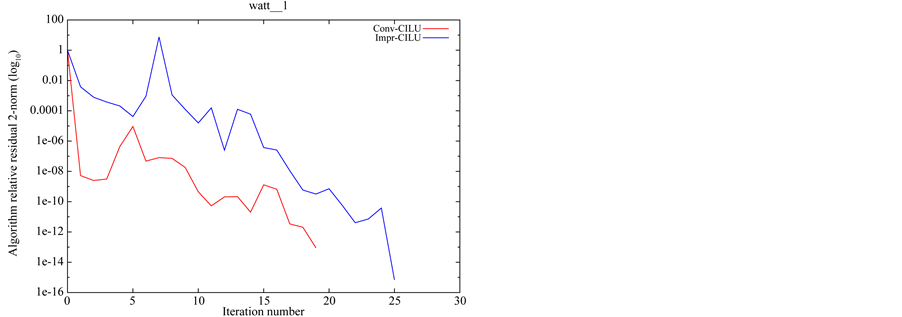

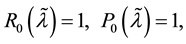

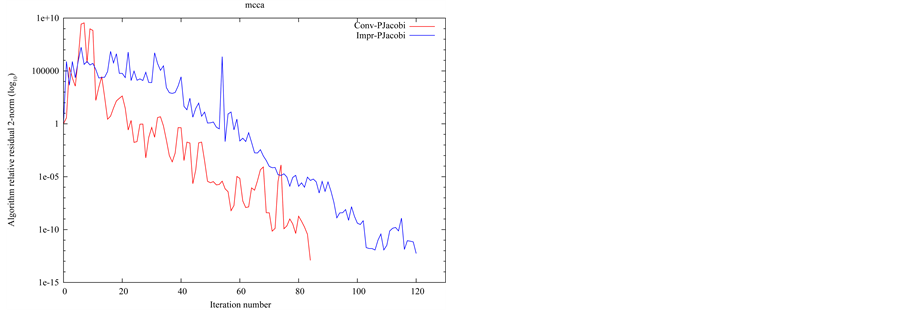

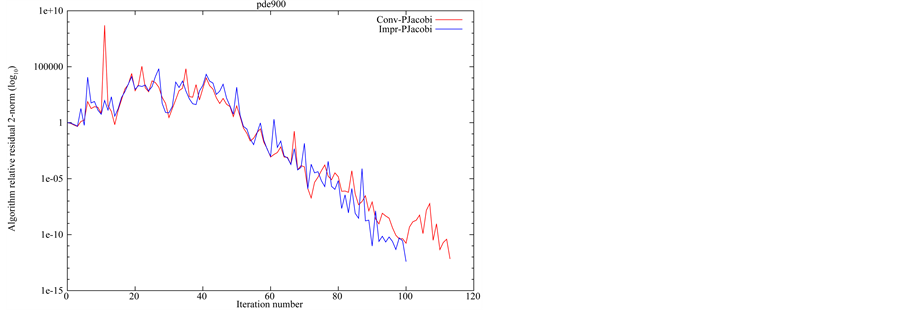

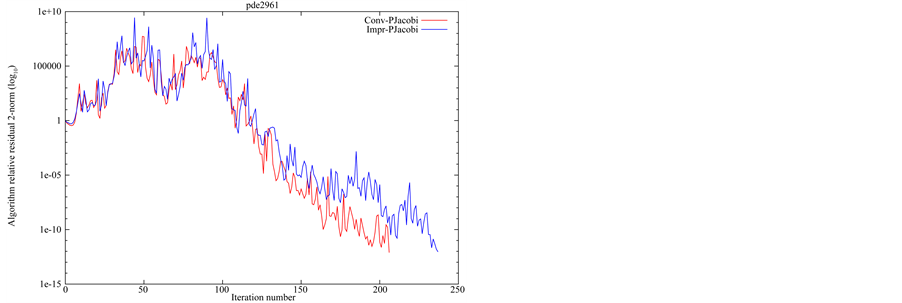

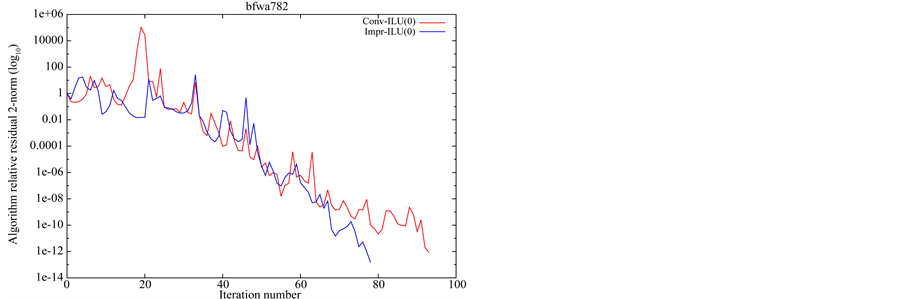

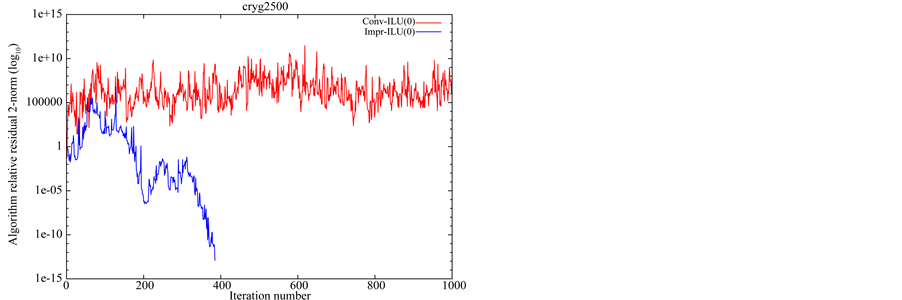

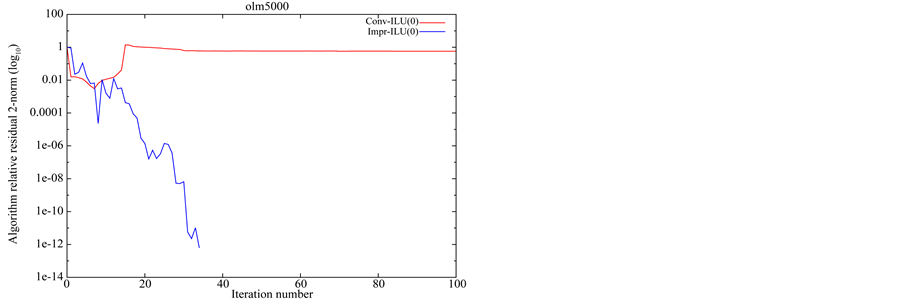

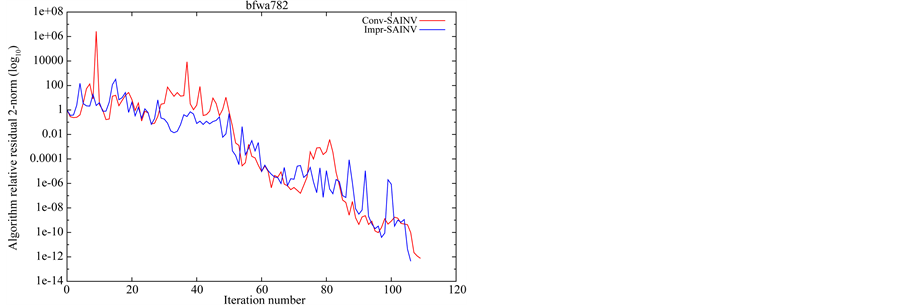

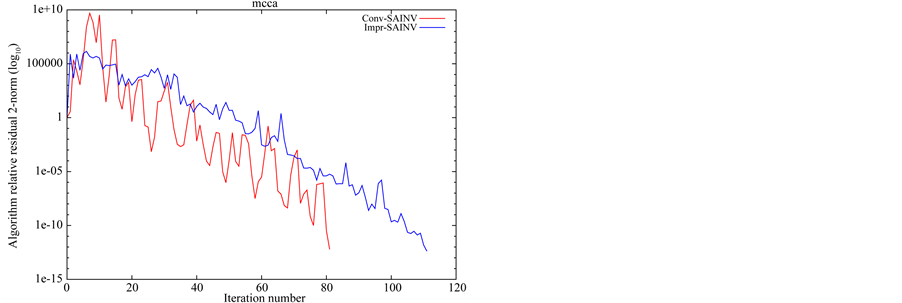

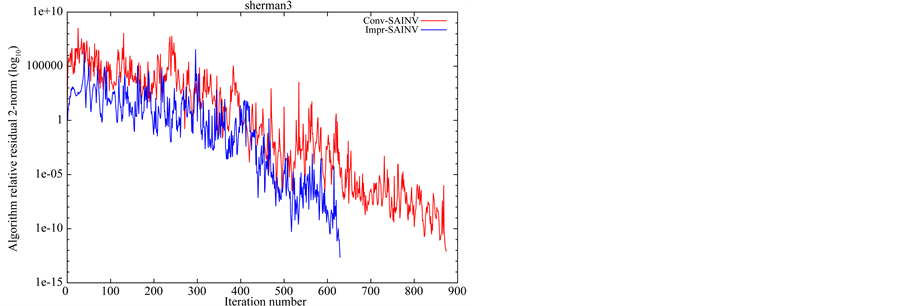

The results given by the conventional PCGS and the improved PCGS are listed in Tables 2-5. Each table adopts a different preconditioner in Lis [14] : “(Point-)Jacobi”, “ILU(0)”8, “SAINV”, and “Crout ILU”. In these tables, significant advantages of one algorithm over the other are emphasized by bold font9. Additionally, matrix names given in italic font in Table 1 encounter some difficulties. The evolution of the convergence for each preconditioner is shown in Figures 2-5. In this paper, we do not compare the computational speed of these preconditioners.

In many cases, the results given by the improved PCGS are better than those from the conventional algorithm. We should pay particular attention to the results from matrices “mcca”, “mcfe” and “watt_1”. In these cases, it appears that the conventional PCGS converges faster with any preconditioner, but the TRE values are worse than those from the improved algorithm. The iteration number for the conventional PCGS is not emphasized by bold font in these instances. The consequences of this anomaly are worth investigating further, possibly by analyzing them under PBiCG. This will be the subject of future work.

Table 1. Numerical results for a veriety of test problems (CGS).

In this table, “N” is the problem size and “NNZ” is the number of nonzero elements. The items in each column are, from left to right, the number of iterations required to converge (denoted “Iter.”), the true relative residual log10 2-norm (denoted by “TRR”, calculated as

5. Conclusions

In this paper, we have developed an improved PCGS algorithm by applying the procedure for deriving CGS to the BiCG method under a preconditioned system, and we also have presented some mathematical theorems underlying the deriving process’s logic. The improved PCGS does not increase the number of preconditioning operations in the iterative part of the algorithm. Our numerical results established that solutions obtained with the proposed algorithm are superior to those from the conventional algorithm for a variety of preconditioners.

However, the improved algorithm may still break down during the iterative procedure. This is an artefact of certain characteristics of the non-preconditioned BiCG and CGS methods, mainly the operations based on the bi-orthogonality and bi-conjugacy conditions. Nevertheless, this improved logic can be applied to other bi- Lanczos-based algorithms with minimal residual operations.

In future work, we will analyze the mechanism of the conventional and improved PCGS algorithms, and consider other variations of this algorithm. Furthermore, we will consider other settings of the initial shadow residual vector

Table 2. Numerical results for a veriety of test problems (Jacobi-CGS).

Figure 2.Converging histories of relative residual 2-norm (Jacobi-CGS). Red line: Conventional PCGS, Blue line: Improved PCGS.

Table 3.Numerical results for a veriety of test problems (ILU(0)-CGS).

Figure 3.Converging histories of relative residual 2-norm (ILU(0)-CGS). Red line: Conventional PCGS, Blue line: Improved PCGS.

Table 4.Numerical results for a veriety of test problems (SAINV-CGS).

Figure 4. Converging histories of relative residual 2-norm (SAINV-CGS). Red line: Conventional PCGS, Blue line: Improved PCGS.

Table 5. Numerical results for a veriety of test problems (CroutILU-CGS).

Figure 5. Converging histories of relative residual 2-norm (CroutILU-CGS). Red line: Conventional PCGS, Blue line: Improved PCGS.

Acknowledgements

This work is partially supported by a Grant-in-Aid for Scientific Research (C) No. 25390145 from MEXT, Japan.

Cite this paper

ShojiItoh,MasaakiSugihara, (2015) Formulation of a Preconditioned Algorithm for the Conjugate Gradient Squared Method in Accordance with Its Logical Structure. Applied Mathematics,06,1389-1406. doi: 10.4236/am.2015.68131

References

- 1. Sonneveld, P. (1989) CGS, A Fast Lanczos-Type Solver for Nonsymmetric Linear Systems. SIAM Journal on Scienti fic and Statistical Computing, 10, 36-52.

http://dx.doi.org/10.1137/0910004 - 2. Fletcher, R. (1976) Conjugate Gradient Methods for Indefinite Systems. In: Watson, G., Ed., Numerical Analysis Dundee 1975, Lecture Notes in Mathematics, Vol. 506, Springer-Verlag, Berlin, New York, 73-89.

- 3. Lanczos, C. (1952) Solution of Systems of Linear Equations by Minimized Iterations. Journal of Research of the National Bureau of Standards, 49, 33-53.

http://dx.doi.org/10.6028/jres.049.006 - 4. Van der Vorst, H.A. (1992) Bi-CGSTAB: A Fast and Smoothly Converging Variant of Bi-CG for the Solution of Nonsymmetric Linear Systems. SIAM Journal on Scientific and Statistical Computing, 13, 631-644.

http://dx.doi.org/10.1137/0913035 - 5. Zhang, S.-L. (1997) GPBi-CG: Generalized Product-Type Methods Based on Bi-CG for Solving Nonsymmetric Linear Systems. SIAM Journal on Scientific Computing, 18, 537-551.

http://dx.doi.org/10.1137/s1064827592236313 - 6. Itoh, S. and Sugihara, M. (2010) Systematic Performance Evaluation of Linear Solvers Using Quality Control Techniques. In: Naono, K., Teranishi, K., Cavazos, J. and Suda, R., Eds., Software Automatic Tuning: From Concepts to State-of-the-Art Results, Springer, 135-152.

- 7. Itoh, S. and Sugihara, M. (2013) Preconditioned Algorithm of the CGS Method Focusing on Its Deriving Process. Transactions of the Japan SIAM, 23, 253-286. (in Japanese)

- 8. Hestenes, M.R. and Stiefel, E. (1952) Methods of Conjugate Gradients for Solving Linear Systems. Journal of Research of the National Bureau of Standards, 49, 409-435.

http://dx.doi.org/10.6028/jres.049.044 - 9. Barrett, R., Berry, M., Chan, T.F., Demmel, J., Donato, J., Dongarra, J., et al. (1994) Templates for the Solution of Linear Systems: Building Blocks for Iterative Methods. SIAM.

http://dx.doi.org/10.1137/1.9781611971538 - 10. Van der Vorst, H.A. (2003) Iterative Krylov Methods for Large Linear Systems. Cambridge University Press, Cambridge.

http://dx.doi.org/10.1017/CBO9780511615115 - 11. Meurant, G. (2005) Computer Solution of Large Linear Systems. Elsevier, Amsterdam.

- 12. Davis, T.A. The University of Florida Sparse Matrix Collection.

http://www.cise.ufl.edu/research/sparse/matrices/ - 13. Matrix Market Project.

http://math.nist.gov/MatrixMarket/ - 14. SSI Project, Lis.

http://www.ssisc.org/lis/

NOTES

1This improved PCGS was proposed in Ref. [7] from a viewpoint of deriving process’s logic, but this manuscript demonstrates some mathematical theorems underlying the deriving process’s logic. Further, numerical results using ILU(0) preconditioner only are shown in Ref. [7] , but the results using a variety of typical preconditioners are shown in this manuscript.

2Of course, [8] discuss the conjugate gradient method for a symmetric system.

8Values of “zero” for ILU(0) indicates the fill-in level, that is, “no fill-in”.

9Because the principal argument presented in this paper concerns the structure of the algorithm for PCGS based on an improved preconditioning transformation procedure, the time required to obtain numerical solutions and the convergence history graphs (Figures 2-5) are provided as a reference. As mentioned before, there is almost no difference between Algorithm 6 and Algorithm 7 as regards the number of operations in the iteration, which would exert a strong influence on the computation time.