Creative Education 2012. Vol.3, Special Issue, 784-795
Published Online October 2012 in SciRes (http://www.SciRP.org/journal/ce)
http://dx.doi.org/10.4236/ce.2012.326117
Copyright © 2012 SciRes.

Exploring Collaborative Training with Educational Computer Assisted Simulations in Health Care Education: An Empirical Ecology of Resources Study

Lars O. Häll
Department of Education, Umeå University, Umeå, Sweden
Email: lars-olof.hall@pedag.umu.se

Received August 29th, 2012; revised September 27th, 2012; accepted October 9th, 2012

This study explores collaborative training with educational computer assisted simulations (ECAS) in health care education. The use of simulation technology is increasing in health care education (Issenberg et al., 2005; Bradley, 2006), and research is addressing the techniques of its application. Calls have been made for informing the field with existing and future educational research (e.g. Issenberg et al., 2011). This study investigates and examines collaboration as a technique for structuring simulation training. Part of a larger research and development project (Häll et al., 2011; Häll & Söderström, 2012), this paper primarily utilizes qualitative observation analysis of dentistry students learning radiology to investigate the challenges that screen-based simulation technology poses for collaborative learning. Grounded in Luckin’s ecology of resources framework (Luckin, 2010) and informed by computer-supported collaborative learning (CSCL) research, the study identifies some disadvantages of free collaboration that need to be dealt with for collaboration to be a beneficial technique for ECAS in health care education. The discussion focuses on the use of scripts (Weinberger et al., 2009) to filter the interactions between the learner and the more able partner, supporting the collaborative-learning activity and enhancing learning with ECAS in health care education.
Keywords: Collaborative Learning; Collaborative Scripts; Computer Simulation; Design; Dentistry Education; Ecology of Resources

Exploring Collaborative Training with Educational Computer Assisted Simulations in Health Care Education

Health care education faces challenges both particular to it and common to higher education. Although apprenticeship learning through clinical practice has been common in physician training, this teaching method poses a seemingly innate contradiction with patient safety. Resistance to this teaching method stems from concern for patients exposed to it during the course of their treatment. Simulations offer a potential means of overcoming this contradiction, of “bridging practices” of education and profession (Rystedt, 2002). As students’ opportunities for clinical training decrease because of altered patient expectations, the Bologna Accord, changes in practitioner mobility, and new forms of governance and training, it has been suggested that health care education is, and should be, undergoing a paradigm shift to an educational model focused more on documented expertise than clinical practice (Aggarwal & Darzi, 2006; Debas et al., 2005; Luengo et al., 2009; Tanner, 2004).

Simulation technology, in and of itself, is not sufficient to support learning; the appropriate techniques for its application are also needed (Gaba, 2004; Issenberg et al., 2011). Recently, calls have been made to widen the scope of research into the educational applications of simulation technology by informing it with existing and future educational theories, concepts, and research (Issenberg et al., 2011).
Research should be directed not only at simulation technology in general (Issenberg et al., 2005) but at the different types of simulation technology in specific contexts, because simulations and training come in various forms and shapes (Gaba, 2004). Trying to generalize about, for example, computer simulations and full-scale trauma-team simulations together is not helpful for researchers or practitioners, especially in a field with explicitly interventionist ambitions. Similar arguments have been put forth by researchers working with other forms of technology-enhanced learning (Dillenbourg et al., 1996). This study, therefore, is limited to educational computer assisted simulations (ECAS) and attempts to explore peer collaboration as a technique to apply ECAS in health care education.

Since the 1970s, educational research has directed much interest (Dillenbourg et al., 1996; Johnson & Johnson, 1999) toward how individual development is related to interactions in social and cultural contexts (Vygotsky, 1978; Wertsch, 1998; Luckin, 2010). Extending Vygotsky’s concept of the zone of proximal development, Luckin (2010) would argue that researching techniques for ECAS application should be about “re-designing learning contexts” of which ECAS are a part. This research needs to explore and analyze the learner’s ecology of resources and interactions with these resources in order to identify potential adjustments that could support negotiations between the learner and the more able partner and, thereby, help the more able partner identify the learner’s needs and draw attention to appropriate resources, or create a zone of proximal adjustment (p. 28). This support can help the learner to act on a more advanced level and, by appropriating these scaffolded interactions, develop further than possible through individual efforts.
Central categories of resources (or available assistance) with which learners interact are tools (e.g., a simulator), people (e.g., peers), skills and knowledge (e.g., dental imaging and related subjects), and environment (e.g., social and physical locale). These resources are interrelated, and learners’ interaction with them is filtered, for example, by the organization and administration of ECAS training sessions (for a general model, see Luckin, 2010: p. 94).

One of the ‘social resources for individual development’ that has been investigated within educational research is cooperative and collaborative learning in groups (Dillenbourg et al., 1996; Johnson & Johnson, 1999). In Luckin’s framework, group peers can be categorized as a people resource that serves the role of the more able partners. Collaborative learning research has yielded strong empirical support for the positive effects of peer group learning in beneficial conditions (Johnson & Johnson, 1999). Amid the proliferation of personal computers and computer-supported and -based instruction since the 1990s, the field of computer-supported collaborative learning (CSCL) developed to meet the challenge of exploiting computers’ potential to support collaborative learning. CSCL focused on the mediating role of cultural artifacts (Wertsch, 1999), or tools in Luckin’s terms, and a number of means to support different aspects of collaborative learning have been conceptualized and developed (Dillenbourg et al., 2009). As CSCL matured, developmental processes have become a focus of investigation (Dillenbourg et al., 2009), primarily because of the influence of socio-cognitive and sociocultural theory (Dillenbourg et al., 1996) and ideas about scaffolding (Wood et al., 1976). From a Luckinian perspective, group peers can be regarded as potential more able partners, helping the learner to perform and learn on a higher level.
In order to support the more able partner’s ability to help the individual learner develop, CSCL researchers and designers have drawn upon empirical research to try to foster an intersubjective construction of meaning (Stahl, 2011) or a “mutual engagement of participants in a coordinated effort to solve the problem together” (Roschelle & Teasley, 1995, p. 70). Such fostering is achieved by redesigning learner contexts and is often related to the organizational and administrative filters in Luckin’s model. Early small-group learning research’s main contribution was to urge seeking cooperative, rather than competitive, interactions (Johnson & Johnson, 1989). The value of cooperation stems from its encouragement of certain interactional patterns, such as giving elaborated explanations or receiving these explanations and taking advantage of the opportunity to grasp and apply them (Webb, 1989). In addition, negotiation supports learning because of the social process of mutual adjustment of positions appropriated by individuals (Dillenbourg et al., 1996). In fact, individuals need to make their reasoning and strategies explicit in order to establish a joint strategy and to identify conflicts to be resolved by a co-constructed solution (Dillenbourg et al., 1996; Rogoff, 1990, 1991). Simulations are mediational resources that can help form a shared referent (Roschelle & Teasley, 1995) among students but, in and of themselves, are not enough. Patterns such as elaborated explanations are more likely to occur under some conditions, such as in certain group compositions (Webb, 1989) or when groups are explicitly encouraged to produce them, for example, by scaffolding scripts (Weinberger et al., 2005, 2009). Groups might need support to avoid various silent and passive tendencies (Mulryan, 1992) and to achieve a sense of individual accountability for the performance of the group (Slavin, 1983, 2010).
Previous research has noted that the way the system provides feedback can hinder, as well as support, beneficial interactions (Fraisse, 1987, referred to in Dillenbourg et al., 1996).

Luckin’s framework is not specifically concerned with the traditional CSCL questions; her focus is wider and includes non-peer, non-collaborative activities. The overlap between them, however, is harmonious, and CSCL research is a useful resource for ecology researchers focusing on peer interactions. For Luckin (2010), “it is this relationship between learner and More Able Partners that needs to be the focus of scaffolding attention” because it reveals the learner’s “current understanding and learning needs” and enables the more able partner to “identify and possibly introduce a variety of types of assistance from available resources” (p. 28). CSCL contributes by pinpointing important factors in cases when group peers enact the role of the more able partner.

Peer collaboration seems to have received little attention in the health care simulation field. With the exceptions of the studies by De Leng et al. (2009), Rystedt and Lindwall (2004), and Hmelo-Silver (2003), it cannot be traced in any significant way in the widely cited review by Issenberg et al. (2005) or in the current research agenda (Issenberg et al., 2011). Most research on factors influencing learning with ECAS in higher education, instead, considers 1) individual participants’ characteristics, such as prior clinical experience (Brown et al., 2010; Hassan et al., 2006; Liu et al., 2008) and metacognitive abilities (Prins et al., 2006); 2) simulation characteristics, such as repeatability (Brunner et al., 2004; Smith et al.,
2001), progression (Aggarwal et al., 2006), and participation requirements (Kössi & Loustarinen, 2009); 3) simulation integration, such as the chronological relation to lectures (See et al., 2010), work shifts (Naughton et al., 2011), and video-based instructions (Holzinger et al., 2009); and 4) support during training, such as the type and quality of feedback (Van Sickle et al., 2007), provision of contextualized questions (Hmelo & Day, 1999), and the teacher-student ratio (Dubrowski & MacRae, 2006).

Successfully delivering support to health care students training collaboratively within their ECAS ecology of resources (Luckin, 2010; Häll & Söderström, 2012) requires more knowledge about what kind of support is needed in this type of ecology. The learner in this paper is a dental student learning about dental radiology and the principle of motion parallax (the primary skills) supported by a peer collaboration group (the primary more able partner) and the radiology simulator (the primary tool; Nilsson, 2007). During one-hour sessions, they work in free (unscripted) collaboration on specific tasks, which they choose themselves from a pool offered by the simulator (central resource filters). Previous analysis of this ecology of resources has been performed within the Learning Radiology in Simulated Environments project (Nilsson et al., 2006; Nilsson, 2007; Söderström et al., 2008; Häll et al., 2009; Söderström & Häll, 2010; Häll & Söderström, 2012; Häll et al., 2011; Söderström et al., 2012). Results have indicated, among other things, challenges in the relationship between learner and the more able partners (a Type 2 issue in ecology of resources terms; Luckin, 2010: p. 131) manifested, for example, as significant differences in how much collaborative verbal space respective learners possess during collaboration, with some students standing out as more silent. From the collaborative learning perspective sketched out here, these differences may hinder the negotiation between learner and more able partner sought by both Luckin and CSCL researchers. Significant differences, however, were also found in the proficiency development of these silent students (Häll et al., 2011), and this study seeks the causes in the groups’ collaborative problem-solving processes. Investigating interactive patterns of more and less successful groups is common in CSCL and allows identification of opportunities to adjust interactions with scaffolding filters.

This article contributes to the growing research on learning through applications of health care simulations by exploring collaborative training with ECAS from an ecology of resources perspective and with a particular focus on the influence of the interaction between silent learners and their potential more able partners in relation to learning outcomes. The overall question this study asks is: What differentiates, and unites, the interactive behavior of silent learners and potential more able partners in successful and unsuccessful free collaborative training in an ECAS ecology of resources? What are the implications for structuring an ECAS training with peer collaboration? In other words, what opportunities for adjustment emerge, and how can they be seized? Three empirical questions follow from this research aim: 1) How does a more successful triad collaboratively solve a given task in the screen-based radiology simulator? 2) How does a less successful triad collaboratively solve the same task? 3) What unites and separates the interactive behaviors of these groups with regard to challenges facing the negotiation between silent learner and more able partner?

As its empirical foundation, this study uses transcribed observations of the groups’ simulation training sessions, which permits following the problem-solving process, expressed in the students’ verbal activity, as it progresses.
Such detail takes up extensive space, so the analysis takes snapshots of each group’s process while engaged in the same task and exploring the same anatomical area. This method permits identification of similar and distinguishing traits for the groups, which have similar pre-training test scores. Based on this analysis, this paper will discuss the need for adjustments in the relationship between learners and more able partners and collaborative scripts (Dillenbourg & Hong, 2008; Weinberger, 2010) as a potential resource for such adjustments in collaborative learning with screen-based ECAS in health care education. This study can be regarded as a “fine-grained analysis of the details of a particular element and interaction” (Luckin, 2010: p. 126), related particularly to the second of the three steps in the ecology of resources redesign framework (p. 118); this paper does not report a full redesign cycle. The Luckinian ecology of resources perspective has yet to be represented within research on health care simulations, but with its focus on redesigning simulation contexts according to research, it fits very well within the trajectory of the field.

Method

The empirical contribution of this paper is a comparison of two groups (triads) of dentistry students working together during an hour of simulation training on the topic of radiology. Participants were recruited from a population of undergraduate students in the dentistry program at a Swedish university taking a course in oral and maxillofacial radiology. Although this paper reports on a subset of 3 + 3 students, a total of 36 students participated in the original study. Volunteers participated in a pre-test, training, and a post-test. The overall design of the study is presented in Table 1.

Radiology Simulator and Training Sessions

The radiology simulator used in this study is essentially a standard PC equipped with simulation software, as illustrated in Figure 1.
It has two monitors, one displaying a three-dimensional (3-D) anatomical model, X-ray tube, and film, and the other displaying two-dimensional (2-D) X-ray images. The control peripherals include a standard keyboard and mouse, a special pen-like mouse device, and a roller-ball mouse. Using the simulator, students can perform real-time radiographic examinations of a patient’s jaw, which is one of the examinations studied and practiced in the students’ courses. The simulator allows the user to position the 3-D model of the patient, the X-ray tube, and the film. X-ray images can then be exposed at will by students and immediately displayed by the simulator as geometrically correct radiographs rendered from the positions of the models. Other possible exercises that can be done on the simulator include replication of standard and incorrect views. Change in the 2-D X-ray image can be seen in real time as the model is manipulated (a technique called fluoroscopy) and experimented on in an improvised manner.

During the one-hour long training sessions, the groups worked collaboratively with the simulator, supervised by a teacher primarily acting as technical support. This set-up can be described as free collaboration with students themselves deciding how to manage things.

Figure 1. Illustration of a dentistry student working with a jaw model on the radiological VR simulator.

Table 1. Study design.
            Input                      Process              Output
Variable    Pre-training proficiency   Simulation training  Post-training proficiency
Evaluation  Proficiency test           Observation          Proficiency test

Proficiency Tests

The students’ proficiency in interpreting radiographs was evaluated before and after the exercises. Two dental scientists teaching at the dentistry program developed the proficiency test that measured subject proficiency using the criteria in this course at the dentistry program.
The test consisted of three subtests: a principle test, a projection test, and a radiography test; each part was graded from 0 to 8 for a total of 24 possible points. The principle subtest assessed participants’ understanding of the principles of motion parallax. The projection subtest evaluated participants’ ability to apply the principles of motion parallax; it was based on basic sketches and required a basic understanding of anatomy. The radiography subtest assessed participants’ ability to locate object details in authentic radiographic images utilizing motion parallax. Participants were asked to report the relative depth of specified object details in pairs of radiographs. The proficiency analyses in this study are based on the total scores from all three subtests. Previously, these proficiency tests have been used to compare students training with ECAS and conventional alternatives (Häll et al., 2011).

Observation of Simulation Training Using Video Recordings

To enable analysis and comparison of the collaborative training process as expressed in peer interaction, the simulation sessions were recorded using a DV camera, a common method among researchers in health care education (Heath et al., 2010; Koschmann et al., 2011; Rystedt & Lindwall, 2004). The camera was placed so that the upper, facial half of students was visible. Because the screens were hidden from view, automated computer logs showing which activities students were doing, and when, were used to support the analysis. The log records include type of task; anatomical area; timestamps for start, feedback, and finish; and scores on the initial and final solutions.

Analysis of the video-recorded training process occurred in two phases. Initially, a quantitative analysis divided the recordings into time segments of 1 minute, and in each segment, it was noted who was the dominant speaker and the dominant operator of the simulator. Such data is presented in Figure 2.
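The segment coding described above lends itself to a simple tally. The following is a minimal sketch, not the procedure used in the study: it assumes each 1-minute segment has been coded with the name of the dominant speaker (or None when no one dominated), and the function name, the member labels, and the ten example codes are all hypothetical.

```python
from collections import Counter


def dominance_share(segment_codes):
    """Percentage of all coded segments in which each member was dominant.

    segment_codes: one entry per 1-minute segment; the entry is the name of
    the dominant member, or None if no member dominated that segment.
    """
    if not segment_codes:
        return {}
    counts = Counter(code for code in segment_codes if code is not None)
    total_segments = len(segment_codes)  # segments without a dominant member still count
    return {name: 100.0 * n / total_segments for name, n in counts.items()}


# Hypothetical codes for ten segments (invented data, not taken from the study).
example_codes = ["A", "A", "B", "A", None, "C", "A", "B", "A", "B"]
shares = dominance_share(example_codes)
for name in sorted(shares):
    print(f"{name}: {shares[name]:.0f}% of segments")
```

Dividing by the number of all segments, rather than only those with a dominant speaker, follows the wording of the Figure 2 caption (a percentage of the sixty segments); that reading is an assumption made for this illustration. The same tally could be run on the codes for the dominant operator.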
One researcher performed all coding of the training sessions and, in order to produce a measure of the coding stability (Krippendorff, 2004), re-coded one session and compared the results for each category with the original analysis. The percent agreement between original coding and re-coding was and 98% for operation and verbal space, respectively.

Figure 2. Percentage of the sixty 1-minute time segments in which each group member dominated the verbal space. (a) Mary, Ava, Eli; (b) Marc, Alex, Hera.

Groups were selected for further analysis based on data about equality in the distribution of control over verbal space and the simulator and for proficiency development. These groups are described in the next section. The recordings of these selected groups were transcribed. Transcription was inspired by Heath et al. (2010) but kept basic and, in this paper, limited to verbal actions and interactions with the simulator that started or ended phases in the problem-solving process. Although interaction analysts champion the idea that nonverbal interaction is as important as verbal interaction (Jordan & Henderson, 1995), for the sake of this particular analysis, nonverbal interaction has been excluded.

The chosen recordings were viewed repeatedly and loosely transcribed in full, which allowed for the groups’ typical interaction patterns to be identified and for their differences to emerge. Continued viewing and reading of the transcripts and referencing log data about task, time on task, and solution successfulness led to the choice of one snapshot from each group. This snapshot is taken from an instance in which the two groups engaged in the same type of analysis (the same simulator task) of the same anatomical area with equally unsuccessful results.
While a snapshot is always just a snapshot, these scenarios were chosen because they illustrate interactive patterns, challenges, and differences that recurred throughout the training sessions. The snapshots were transcribed in more detail.

The Groups: A Closer Look

The two groups selected for analysis in this paper represent the most successful and the least successful groups in the original study, as defined by group proficiency development from pre-test to post-test. The composition of these groups is similar in a few relevant aspects. They have very similar pre-test scores, differing by only 3 points on a 72-point scale, and had a very similar distribution of scores between group members (see Table 2). Additionally, in each group, the members share the distribution of collaborative space quite unequally, with one participant less verbal (i.e., relatively silent). Figure 2 illustrates how often each group member dominates the verbal activity during the one-hour training session, based on a quantitative count of coded 1-minute time-segments. It is thus a group-relative definition and not based on absolute counts of contributions. All names are fictitious.

The silent participants followed in this text are Mary and Marc. They spent roughly the same amount of time operating the simulator and had comparable scores on the pre-training proficiency test, as illustrated in Table 2. They differ significantly, however, on post-test scores; Mary develops from 8 to 15, while Marc stays at 7.

Table 2. Proficiency test scores and development of group members.
Group         Member  Pre-test score  Post-test score  Development
Mary’s group  Mary    8               15               7
              Ava     14              16               2
              Eli     20              20               0
Marc’s group  Marc    7               7                0
              Alex    18              15               −3
              Hera    14              15               1

In peer collaboration studies, one hypothesis about the causes of differences in relative space holds that individual differences in content competence are important (Mulryan, 1992; Cohen, 1994).
Indeed, the pre-test scores for these study groups show that the least active participants (Mary and Marc) have significantly lower scores than their peers and that the most active participants (Eli and Alex) are at the top of their groups. Another hypothesis is that social relationships are important (Häll et al., 2011). Data from a post-training survey (not included in this paper) indicate that the more active participants have stronger social relationships and socialize privately with each other but not with the silent student. Other factors, such as self-efficacy and social status, have been suggested to have an impact (Webb, 1992; Fior, 2008). Such factors may have contributed to creating the differences in verbal activity, but they do not explain the differences in development between groups.

The Analyze Beam Direction Task

The task in which students engage in the following data is related to three aspects of radiological examinations; it is standardized and follows a certain structure. In essence, students are presented with a task that requires them to interpret radiographic images and to operate the simulator (scene and objects). When students have positioned the simulation objects in what they deem to be the correct way and requested feedback from the simulator (by pressing a button labeled “next”), they are given numerical information about the distance between their own model-position and the correct model-position. Based on this feedback, groups are given the opportunity to re-position the model before submitting their final solution. In addition, they receive numerical feedback about the actual position of their final solution relative to the correct position. A correct/good-enough solution elicits a beeping sound from the simulator, along with the numerical and visual feedback. An insufficient solution elicits only visual and numerical feedback.
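The two-stage feedback cycle described above can be sketched as follows. This is an illustration under stated assumptions, not the simulator’s implementation: positions are reduced to hypothetical 3-D coordinates, the distance is taken to be Euclidean, and the tolerance that counts as “good enough” is an invented value, since the source does not specify how any of these are defined.

```python
import math

# Hypothetical threshold for a "good enough" solution; the source gives no value.
GOOD_ENOUGH_TOLERANCE = 6.0


def distance_feedback(student_position, correct_position):
    """Numerical feedback: distance between the student's and the correct position."""
    return math.dist(student_position, correct_position)


def final_feedback(student_position, correct_position, tolerance=GOOD_ENOUGH_TOLERANCE):
    """Feedback on the final solution: the distance, plus whether a beep would sound."""
    d = distance_feedback(student_position, correct_position)
    return {"distance": d, "beep": d <= tolerance}


# Invented coordinates for one task cycle.
correct = (0.0, 0.0, 0.0)
first_attempt = (3.0, 4.0, 12.0)   # the group asks for feedback ("next")
revised_attempt = (3.0, 4.0, 0.0)  # the group re-positions the model and submits

initial = distance_feedback(first_attempt, correct)
final = final_feedback(revised_attempt, correct)
print(f"initial distance: {initial:.1f}")
print(f"final distance: {final['distance']:.1f}, beep: {final['beep']}")
```

The sketch keeps the two feedback moments separate because the text describes them as distinct events: a distance after the first proposal, then a distance and possibly a beep after the final one. The visual feedback mentioned in the text is not modeled.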
Simulator log files show that students follow these steps in almost every situation (although sometimes they switch tasks before finishing or restart the simulator). As well, the transcripts make it clear that these steps are the major topics of discussion during the training session.

Results

This section details the collaborative problem-solving process for two groups working on the same task as manifested in transcriptions of their verbal activity. The transcripts illustrate each group’s first attempt at the analyze beam direction task of a particular anatomical area. Both groups previously had conducted this type of analysis on other areas of the body. Both groups first propose solutions that are equally incorrect according to the simulator’s criteria and other tasks that they solve. Their solution-producing processes differ, however, as do their responses to feedback and, as previously mentioned, their proficiency development. While both groups’ problem-solving processes vary somewhat over the course of the training session, these transcripts capture some of the differences between the groups that are recurring issues in the ecology studied in the Learning Radiology in Simulated Environments project. As such, these transcripts both illustrate what separates the problem-solving process of more successful and less successful collaborative behavior in this ecology and indicate opportunities to support both types of groups. The problem-solving process of Mary’s group is presented first because it is easier to follow.

Transcript 1: Mary’s Group Solving an Analyze Beam Direction Task

Step 1. Picking a task. The first excerpt shows Mary’s group picking and starting a new task. The process starts with Ava asking whether it is possible to change dental area without changing the type of task (1). She states the question relatively loudly, inviting the nearby teacher to answer.
The teacher explains that it is not possible to do so (2) and later supports Ava’s task-starting procedure (5). Eli suggests a particular area of investigation (3), to which Ava agrees and asks for confirmation (6), which she receives from Eli (7).

Excerpt 1. Mary’s group picking a task.
1  Ava      Can’t you change area without changing task? (6) [To change…]
2  Teacher  [No you have to go back] and push “8” to change area, yeah.
3  Eli      Canine. (3) Lower canine.
4  Mary     <Inaudible whisper>
5  Teacher  Then you choose which task to do, yeah.
6  Ava      Should we try this one?
7  Eli      Yes.

Step 2. Taking the first radiograph. Next, Eli, the operator, begins the process of maneuvering the 3-D models into a favorable position for taking an initial photograph of the tooth and states that he has found the position (8). Ava suggests that he should pull the camera back a bit, effectively zooming out and capturing a wider area (9). Mary agrees with Ava’s suggestion (10), joining Ava in a laugh at Eli’s operations (11). Eli suggests a new position, starting to state that it is very precise (12), and is rewarded with laughter in which he joins (13). Ava asks Eli if this is how he usually takes his photos (14), to which he agrees that it does seem like it (16). Ava finishes the first part of the exercise by clicking to produce the photograph, which also generates a random second photograph used to contrast the first (17).

Excerpt 2. Mary’s group taking the initial radiograph.
8   Eli             Here it is.
9   Ava             Pull back a bit.
10  Mary            Yeah.
11  Ava, Mary       ((Laughing)).
12  Eli             There. That looks [exactly…]
13  Ava, Mary, Eli  [((Laughing))]
14  Ava             Is this how you do your shots?
15  Mary            ((Inaudible whisper)).
16  Eli             Yes. (3). Looks like it.
17  Ava             <Clicks to end step>

Step 3. Comparing radiographs and finding a solution. The
The next excerpt begins with Ava delivering a one-word inter- pretation of the differences between the self-made radiograph and the one generated by the simulator (18). She gets support from Mary (19), and Eli requests a clarification of the nature of something shown (20). Mary starts to suggest a maneuver but is halted (21). Eli revises the initial interpretation and starts to explain why (23) but is halted by Mary delivering counter- evidence (23). Ava contributes more evidence (24), initially corrected but then supported by Mary (25). Eli cautiously sup- ports this latest evidence but quickly starts to question it, sug- gesting how to re-position the camera (26). Ava tries to deliver more counter-evidence but (27), but Eli interrupts her, intent on trying out his thought. He explains his reasoning (28) and then concurs with the initial conclusion put forth by his peers (29). Ava suggests that one of the radiographs is not what it should be (31) and gets confirmation from Ava (23). Eli argues for a maneuver (33, 35) and gets some support from Ava (34, 36). Mary signals when she thinks they have reached the correct position (37), as does Eli (38). Ava asks for more time for a final check (39) before she clicks to finish the task and receive feedback (40). Excerpt 3. Mary’s group comparing radiographs and finding a solution. 18 Ava Distal. 19 Mary Yeah, it’s distal. 20 Eli What’s that [white stuff we see?] 21 Mary [Maybe a bit...] 22 Eli No, it is more mesial. Isn’t the one on the right more …? 23 Mary No, no, but this is the premolar here. 24 Ava And you get six as well. [And there is no overlap between them, so it is more ortoradial to five and four, four and five.] 25 Mary [Three. Yeah it has to be.] (4) That’s right… 26 Eli Yeah. (3) Exactly. (4) But wait. (2) You take it more. (2) You take THAT one more frontal. 27 Ava No. (1) So you [have]… 28 Eli [Yes], what happens then? (1) No, you have to do it more from the front exactly. 
When the image is on the exact spot. I’m thinking a bit like you’re moving the image as well, but you’re not really. 29 Eli There. (1) It is taken more distal. 30 Ava Yes. (1) Then it’s ortoradial to four then. 31 Mary Feels this not a canine shot? It [must] be a premolar shot. 32 Ava [No.] 33 Eli Especially, it went it went right in the beam direction, so you see in the beam direction [above there]. 34 Ava [Mm.] Mm. 35 Eli Then we’ll just take it towards us. (5) 36 Ava Yeah. Mm. 37 Mary There. 38 Eli There. 39 Ava Wait a bit. I’ll just see…(7) 40 Ava ((Click to end step)) Step 4. Dealing with feedback and correcting the solution. The next excerpt deals with feedback on an incorrect solution. The excerpt begins with the students expressing their surprise nearly in unison (40 - 42). Eli immediately starts to interpret how the simulator’s solution differs from theirs, but he trails off (44). Mary states her interpretation (44). Eli concludes that their own solution was “crap” (45) and then states an interpretation of the differences between the two radiographs (46), receiving support from Ava (47). Mary begins to support Eli (48), but he interrupts, adding that this is something for which they should have accounted (49), and again receives support from Ava (50). Mary restates her previous interpretation with a slight modifi- cation (51), but Eli changes his mind, interrupts Mary, and states an interpretation opposed to Mary’s (52). Mary restates her interpretation and points to a specific element of the radio- graphs as support (53), receiving support from Ava (54) and finally also from Eli (55). Mary begins to present additional evidence (56), but Ava interrupts, insisting that Eli should cor- rect the solution so that they can receive more feedback on how the camera should have been positioned (57). Mary whispers almost inaudibly to herself (58) as the model is moved into position and new feedback presented (59). 
Ava acknowledges the solution (60), and Eli concludes that their previous interpretation was correct (61). Ava states that this interpretation is in accordance with normal practice (62) and receives support from Eli (63). The task is finished, and the students move on to the next challenge.

Excerpt 4. Mary’s group dealing with feedback and correcting the solution.
40 Ava: [Oh.]
41 Eli: [What!?]
42 Mary: [Oh.]
43 Eli: We took it too…
44 Mary: More from abo-, more above they took it.
45 Eli: Well, that’s crap.
46 Eli: Of course, they’ve become shorter. The roots have become much shorter.
47 Ava: Mm.
48 Mary: [Yeah that…]
49 Eli: [One should] have spotted that
50 Ava: Mm.
51 Mary: So they took it quite a bit [from above].
52 Eli: [Or no they] haven’t become shorter at all.
53 Mary: They [have become rather] short in comparison to this image.
54 Ava: [Yeah...]
55 Eli: Yeah. I suppose they have.
56 Mary: [This one sure has backed up].
57 Ava: [But try, try to put it] so you see where it should have been. (2) Put the white on the blue there.
58 Mary: There <inaudible>
59 <Simulator signals that a correct solution has been reached>
60 Ava: Mm.
61 Eli: Way up, they went.
62 Ava: Yeah, that’s how they take them, of course.
63 Eli: Yep.

Summary and analysis of the problem-solving process of Mary’s group. The task cycle in Mary’s group moves through four steps, or topics, of interaction: 1) picking a task, 2) taking the first radiograph, 3) comparing radiographs and finding a solution, and 4) dealing with feedback and correcting the solution. These steps are connected to significant events in the interaction with the simulator, and the last two steps are more directly related to problem-solving. From the beginning, it is clear that the more able partners (Ava and Eli) are taking charge of the activity. By externalizing questions (“Can’t you change …”) and directives (“Canine. Lower canine”), they enable a partially shared activity.
However, their reasons for and goals in picking a particular task or area of investigation are not negotiated or even shared, and Mary (the silent listener) is not involved. The first radiograph is produced with a little mutual regulation (“Pull it back a little”) of the operation of the simulator through playful orders. While this radiograph can be taken from different positions, it would be helpful later on for the students to understand and remember how it was taken. Again, the more able partners primarily contribute to the verbal exchanges. The comparison of radiographs begins with a one-word statement of a more able partner’s interpretation of the primary difference between them (“Distal”). Although Mary agrees with this interpretation, the operating more able partner is not convinced, and the following interactions are motivated by this tension. Eli begins by eliciting a clarification of what is shown on screen (“What’s this white stuff we see?”) and receives one from Mary soon thereafter (“This is the premolar”). In this way, the two open a joint construction of a shared understanding of what significant information is shown in the radiographs. Ava’s contribution (“There’s no overlap between them, so it must be ...”) demonstrates how the principles of motion parallax serve as a resource mediating an interpretation. By focusing on specific elements in the radiographs and relating these to principles for interpretation, Ava makes the reasons for her interpretations clear, which is acknowledged by confirmation from her peers. This interaction is the only example of such an explicit reference. Mary’s contributions receive little direct response from the more able partners; they are not accepted as data upon which to act.
In fact, it is quite rare for the operating more able partner to give any recognition that the others’ input is being taken into consideration, although he externalizes parts of his own reasoning throughout the production of the initial radiograph.

Faced with negative feedback from the simulator, the group shares the surprise of their failure. They quickly start to detail the differences between their solution and the correct one, beginning with Mary (“More from above, they took it”). Eli then grounds this observation in a specific indicator of this difference (“The roots are much shorter”). After changing their minds and repeating the evidence, the group settles for this interpretation, which turns out to be correct. Although the group seems to co-create a shared understanding of their failure, they do not engage in explanation of its causes or how to avoid it in future tasks.

This analysis of a successful group during an unsuccessful task reveals opportunities for teachers and designers to adjust the silent listener’s ecology of resources by better supporting the more able partners to help the learner. Such support would, for example, encourage more direct engagement in the co-construction of interpretations and solutions and make more explicit the relation between observation, (radiological) principles, and interpretation.

Transcript 2—Marc’s Group Solving an Analyze Beam Direction Task

Step 1. Picking a task. The next transcript illustrates Marc’s group engaging in the same task in the same anatomical area. The excerpt starts with Marc trying to decide which area to investigate and what type of task to do. He almost starts one type of task but realizes that it is not what he wants, and he changes his mind two more times (1). Hera starts to laugh (2), eliciting laughter from Marc, who states that he can’t decide what to do (3). Still laughing, Hera points out that he will have to learn them all in the end (4).
Marc agrees and decides on the analyze beam direction task (5). Alex provides technical support by pointing to the button to start such a task (6). Marc finds the button and begins the task (7).

Excerpt 5. Marc’s group picking a task.
1 Marc: A canine, I think we’ll take. And fluoroscopy. (3) No, that, no I want, I’m sorry, I don’t want fluoroscopy. I’ll take that. No, not that one either.
2 Hera: ((Laughing)).
3 Marc: ((Laughing)). I’ve got [decision a…That one!]
4 Hera: [((Laughing)). You have to be able to learn all of them, Marc, you know.]
5 Marc: Oh yeah. ((Laughing)). Analyze beam direction.
6 Alex: You’ve got it up there.
7 Marc: <Click to end step>

Step 2. Taking the first radiograph. Marc restates the task, indicating that it is what he is trying to achieve (8). He receives support from Hera (9), repeats her words, and picks this action (10).

Excerpt 6. Marc’s group taking the first radiograph.
8 Marc: Then, let’s just see, observe direction. (2). Mm.
9 Hera: Mm, looking good.
10 Marc: Looking good. (3) Then we’ll take it. <Click to end step>

Step 3. Comparing radiographs and finding a solution. Marc starts to suggest an interpretation but trails off (11). Alex fills in by stating his interpretation in two parts (12). Hera suggests a revision of the second part of Alex’s interpretation (13), which Alex (14) and Marc (15) quickly adopt. Alex and Hera start whispering softly with each other (16), while Marc whispers inaudibly to himself (17) until he signals that he is satisfied with the position (18).

Excerpt 7. Marc’s group comparing radiographs and finding a solution.
11 Marc: That one’s more. … (5) Hold on …
12 Alex: It’s more superior (1) and (1) eh [mesial].
13 Hera: [Distal], it’s distal.
14 Alex: Yeah, distal.
15 Marc: Yes, distal, yeah. (1) Exactly.
16 Alex, Hera: [((Whispering to each other)).]
17 Marc: [((Talking inaudibly to himself)).]
18 Marc: [There. <Click to end step>.]

Step 4. Dealing with feedback and correcting the solution.
Alex and Hera keep whispering to each other (19), and Marc keeps talking softly to himself (20) until he finds the right spot and is rewarded by a beep from the simulator (21). This success elicits an expression of surprise from Marc (22) and appreciation from Hera, along with a question of whether he managed to solve the task without needing to correct it (23). Marc falsely claims that this is the case (24), and Hera teases Alex for not having achieved this (25). Alex calls out the lie, adding that he did, in fact, achieve this (26). Everyone laughs (27), and the students move on to the next task.

Excerpt 8. Marc’s group dealing with feedback and correcting the solution.
19 Alex, Hera: [((Whispering to each other))]
20 Marc: [Noo, yeah. And there. <Click to end step>]
21 <Simulator signals that a correct solution has been reached.>
22 Marc: Oh.
23 Hera: Neat! Did you get a beep right away?
24 Marc: Mhm.
25 Hera: He got you there, sourpuss!
26 Alex: Nuh, he did not! [Marc’s worthless at lying]. (2) I DID get that!
27 All: [((Laughing.))]

Summary and analysis of collaboration in Marc’s group compared to Mary’s group. The task cycle in Marc’s group follows the same structure as Mary’s group but in a condensed form due to the lack of interaction between peers. For this reason, comparisons to Mary’s group are made here and not in a separate section. As the operator, the usually silent learner is given charge of picking a new task, and the more able partners try not to be involved in this decision. This set-up differs from Mary’s group, in which the silent learner was not included in this process. Although Marc comments on his actions and reveals his decision-making process, the criteria for choosing a task are, as in Mary’s group, not shared. Hera jokingly suggests that Marc is trying to make it easy on himself (“You have to be able to learn all of them, Marc, you know”). The production of an initial radiograph is done with even less interaction than in Mary’s group.
Marc indicates that he has found the spot (“Mm”) and gets acknowledgement from Hera (“Mm, looking good”). When comparing the radiographs, Marc tries to take charge by delivering an interpretation but trails off. The more able partners quickly state their conclusion (“It is more superior and, eh, mesial”), and Marc agrees. The more able partners then drop their focus on the task-solving activity, perhaps considering it to be completed, and engage in private whispering while Marc tries to finish the task on his own. There are no attempts at discussing the relationship between radiographs and anatomical knowledge, the principles for motion parallax, and their conclusion, as Ava did in Mary’s group. There is also no mutual regulation of the positioning of the model, i.e., the operationalization of the conclusion. The step ends with Marc denoting that he has found a spot (“There”). As the more able partners continue to whisper privately, Marc corrects the solution on his own, accompanied by opaque commentary (“Noo, yeah. And there”). Unlike in Mary’s group, there are no attempts at reaching a shared conclusion about the differences between their failed solution and the correct one and, thus, no collective attempts to figure out the causes of the failure. Instead, it seems as if Marc tries to pretend that the initial solution was correct, a lie for which he is first praised and then called out by Alex, who ends the task by stating that he has achieved it, indicating an underlying logic of individual competition.

The rationale for the collaboration in Marc’s group seems to be that the operator should execute the regulative conclusions of the more able partners. In his attempt at deceiving the more able partners, Marc indicates concern for social confirmation rather than for developing his understanding of radiological examinations.
By disengaging from the task-solving activity after delivering conclusions without explanations, the more able partners demonstrate a lack of interest in the activity as a chance to learn from each other. Correcting Marc without explaining why, they show an interest in getting the task done rather than learning. In this group, the silent learner enacts the role of an operator being observed and, insufficiently, regulated. In Mary’s group, the collaborative rationale is characterized more by mutual engagement in solving the problem together and creating shared understanding, at least among the more able partners, evidenced by the examples of the elicitation of information and more elaborate explanations. However, as indicated by the often absent recognition of Mary’s contributions, that group, too, still has room for greater engagement in the relation between the silent learner and more able partners.

Discussion

Exploring free peer collaborative training with ECAS in healthcare education, this paper has investigated the interactive behavior of silent learners and potential more able partners in successful and unsuccessful sessions and indicated some opportunities for the adjustment of these ecologies of resources. This investigation has been achieved by analyzing the collaborative problem-solving process in more successful and less successful triads including a silent learner and by comparing the two groups. The paper contributes to the “observational studies that evaluate the highest and lowest achievers” for which Issenberg et al. (2011: p. 157) call in order to explore and develop techniques for applying ECAS. This study’s application has revealed a few challenges, or opportunities, for adjustment that health educators and designers will want to utilize to make collaborative learning a beneficial technique for enhancing learning with ECAS.
These challenges are discussed next, followed by the potential means for meeting these challenges by adjusting the ecologies through filtering scripts.

Opportunities for Adjustments of the Relation between Silent Learners and More Able Partners

The general challenge for the groups studied in this paper is to actually engage in collaborative activities and learning, or for the silent learner and more able partners to engage in interactions aimed at constructing a shared understanding of the challenges and potential solutions in the problem-solving process. There is a risk, most clearly manifested in Marc’s group, of individualized task-solving. Enacting the role of operator, Marc is basically left to complete the task on his own, with little conceptual interaction with and regulation from the more able partners, apart from a few opaque conclusions. What the more able partners do contribute, unelaborated conclusions with no explicit reasoning, is at best unhelpful and may even be counterproductive (Webb, 1989). A similar, but at the same time opposite, tendency can be discerned in Mary’s group, in which the silent learner who enacts the role of observer has to struggle for her contributions to be accepted as regulatory by the more able partners. Shared by the more and less successful group, this tendency acts as an exclusionary force that can create and contribute to structuring silent and passive tendencies in different forms (Mulryan, 1992) and contradicts the role of more able partners as described by Luckin (2010).
However, while Marc’s less successful group demonstrates a lack of interest in mutually exploring options and in ensuring they have a shared understanding of the relevant information, what principles to draw upon, and their implications (in short, the group is focused on getting it done), Mary’s more successful group does manifest these behaviors to a degree, most apparently in the actions preceding and following the initial solution (steps 3 and 4). While everyone in Mary’s group approves the solution and is involved in trying to understand what was wrong with it, neither of those behaviors exists in Marc’s group. While the goal of Marc’s group seems to be doing the tasks, Mary’s group at least moves toward learning by doing. (In fact, the operator in Marc’s group, Alex, states in the transcript from a later task that: “You learn the program, but I don’t think you get very good at ‘What is this in reality?’”) That two groups with similar pre-training proficiency both complete simulation tasks but only one develops significantly from pre- to post-test indicates that although the simulation enables content learning, simply completing the tasks is not enough for development to occur. Another factor is required, and that may just be a shift in incentive from “getting it done” to engaging with the tasks with the motivation of collaboratively developing conceptual understanding, to negotiations between learners and more able partners about “what is this in reality?”

While the more successful group does engage with the feedback given to them, they do not back-track the chain of interpretations and decisions that led to this faulty solution. They stop at concluding what information they missed but do not discuss why they missed it or how to avoid making the same mistake in the future. This behavior may be due to the simulator feedback focusing on the fault, and not the cause.
Relevant feedback has previously been posited as the most important factor in the effective use of ECAS (Issenberg et al., 2005; McGaghie et al., 2010), and I have argued that in this ecology, what type of feedback students receive impacts the future actions they take (Häll et al., 2011).

Students can be overconfident in the mutuality of their understanding, which might have contributed to the minimal regulation of the learner in Marc’s group. Although the simulation is a mediational resource that can act as a referent among students (Roschelle & Teasley, 1995), shared understanding does not happen automatically. The plain fact that the operator’s verbalized interpretation at times prompts objections from his peers shows that shared understanding is not always achieved. The information displayed on the screen can be interpreted in many ways, and differences in conceptualizations will remain hidden as long as students do not state them explicitly. Problem solving unaccompanied by explicit reasoning makes it much harder for the non-operating and silent participants to keep up and disrupts mutual responsibility for the end result.

In these two groups, the students’ reasons for picking a particular task remain unclear. Perhaps the operator chooses the task that she finds most interesting. However, viewing these collaborative simulations as learning opportunities for all members and as a chance for the learner and the more able partners to construct a shared understanding of the learner’s needs and potentially beneficial resources (appropriate tasks), the groups could benefit from informing their choices with a shared conception of need. This sense, for example, could be supported by a computer-generated overview of the completed tasks and the performance of these tasks.
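The overview of completed tasks suggested above could be prototyped in a few lines. The following is a minimal sketch under stated assumptions, not a description of the radiology simulator: the record type `TaskAttempt`, its fields, and the functions `overview` and `untried` are hypothetical names introduced here, and the example log is invented for illustration and is not data from the study.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskAttempt:
    """One completed simulator task (hypothetical record; all fields are assumptions)."""
    area: str                 # anatomical area, e.g. "lower canine"
    task_type: str            # e.g. "analyze beam direction"
    operator: str             # student who operated the simulator
    first_try_correct: bool   # True if the first solution was accepted


def overview(attempts):
    """Summarize attempts per (area, task type): count, first-try successes, operators."""
    summary = defaultdict(
        lambda: {"attempts": 0, "first_try_correct": 0, "operators": set()}
    )
    for attempt in attempts:
        row = summary[(attempt.area, attempt.task_type)]
        row["attempts"] += 1
        row["first_try_correct"] += int(attempt.first_try_correct)
        row["operators"].add(attempt.operator)
    return dict(summary)


def untried(all_tasks, attempts):
    """Return the (area, task type) pairs the group has not attempted yet."""
    done = {(a.area, a.task_type) for a in attempts}
    return [task for task in all_tasks if task not in done]


# Invented log for illustration only; it is not data from the study.
log = [
    TaskAttempt("lower canine", "analyze beam direction", "Eli", False),
    TaskAttempt("lower canine", "analyze beam direction", "Ava", True),
]
catalog = [
    ("lower canine", "analyze beam direction"),
    ("lower canine", "fluoroscopy"),
]

for (area, task_type), row in overview(log).items():
    print(area, "|", task_type, "|", row["attempts"], "attempts |",
          row["first_try_correct"], "correct on first try |",
          "operators:", sorted(row["operators"]))
print("Not yet tried:", untried(catalog, log))
```

Shown to the whole group before a new task is picked, a summary of this kind might give the learner and the more able partners a common reference for negotiating which task is most needed, rather than leaving the choice to the operator alone.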
Future Research and Design — A Case for Collaborative Scripts as Filters Between Resource Elements in an Ecology of Resources

Meeting these challenges would be in line with the overall aims of Luckin’s redesign and with the CSCL research sketched in the introduction. It is apparent that free collaboration, as applied in the Learning Radiology in Simulated Environments studies, faces serious challenges and that a supportive structure is needed. From the perspectives of Luckin and CSCL, ECAS software and its application are of interest (Stahl, 2011).

In response to challenges identified within CSCL in structuring peer groups, European CSCL research (Fischer et al., 2007) has focused on the related techniques of scripts (Weinberger et al., 2009) and roles (O’Malley, 1992; Blaye et al., 1991) as potentially beneficial ways of structuring the application. Such scripts could act as adjusting filters between the learner and the people resource that more able partners constitute in Luckin’s framework.

Collaborative scripts have some similarities with theatre scripts (Weinberger et al., 2009) and with simulation scenarios (Kollar et al., 2006) that are central to research on full-scale medical simulations (Dieckmann & Rall, 2008). The major similarities include the distribution of roles, sequencing of actions and activities, and more or less detailed descriptions of how to enact the roles and scenario (Weinberger et al., 2009). These collaborative scripts, which can also be referred to as external micro scripts (Kollar et al., 2006), are materialized in cultural artifacts and mediate collaborative activity. They “represent the procedural knowledge learners have not yet developed” (Weinberger et al., 2009: p. 162) and are complementary to the experience of the participants (or internal scripts).
Like training wheels, scripts are particularly important for students in novel situations but, to avoid impeding learning, need to be adapted or faded out as the learners master the tools and become more competent actors or self-regulated learners (Dillenbourg, 2002). The script acts as a scaffold, or filter, enabling learners to do something they would not or could not do without support, to develop further, and to appropriate this external support. CSCL scripts are aimed at helping students build and maintain a shared understanding, thereby supporting the collaborative process and conceptual development. Scripts are directed at inducing explicit verbal interaction, such as explanations, negotiations, argumentations, and mutual regulation (Weinberger et al., 2009).

Earlier research has indicated that such scripts might, for example, encourage perceived low achievers to participate more (Fior, 2008), increase discourse levels (Rummel & Spada, 2005), induce a sense of engagement (Soller, 2001), support an open atmosphere by creating distance from arguments due to role changes (Strijbos et al., 2004), induce expectations that promote learning (Renkl, 1997, cited in Weinberger et al., 2009), and support acquisition of argumentative knowledge (Stegmann et al., 2007). Of course, what the scripts achieve is limited by the designers’ intentions, and the actual script specifications are influenced by the designers’ ideas about students’ needs and what activities promote learning and by the practical ramifications of the educational context. These limitations can be informed by an analysis of student activities, general and particular learning theory, and curricula. Earlier scripts explored jigsaw grouping (Aronson et al., 1978), conflict grouping (Weinberger et al., 2005), and reciprocal teaching (Palincsar & Brown, 1984).
Although these pre-existing scripts can act as a source of inspiration, none seems directly applicable in the ECAS ecology explored in this paper.

While the development and refinement of a script for supporting collaborative learning with the radiology simulator will be the subject of future work, an attempt could be made along the following lines. In response to the challenges presented above and inspired by Palincsar and Brown’s “reciprocal teaching” (1984; Morris et al., 2010), a simple script could be built around a refinement of the roles identified for the silent learner, operator, and regulator. The roles shift upon task completion so the learner can experience both. The operator roughly acts as a demonstrating teacher and is given primary responsibility for explaining the tasks for himself and for his peers, demonstrating the steps that need to be taken, and showing how to do them in order to complete a task. The operator also explains the relations among the primary resources of observation (information in the radiographs), the guiding principles (motion parallax, for example), and the interpretation and implications of the operation of the simulator. These actions would boost the power of the elaborated explanation (Webb, 1989; Fonseca & Chi, 2010), create an understanding of challenges and potential solutions shared with more able partners, and make possible fine, mutual regulation and negotiation. Additionally, the operator gets the input of the more able partners to inform major decisions, such as the initial interpretation of radiographs and its implication for the final solution.

The observer and regulator’s task is to make clear whatever appears ambiguous, on a conceptual or operative level, regarding what is done and how and why it is done. Questions are prompted by the current action and are negotiated before further action is taken.
The questioner’s role may be supported by generic questions such as “Why do you conclude that X is Y?” While persuasion is primarily the operator’s role, the regulators must ensure that the arguments are based on sound evidence. The regulator is also tasked with summarizing the completed task, which can encourage the regulator to engage deeply with the operator’s actions and thought processes and to reflect thoroughly on the feedback given. More useful feedback, though, might need to be built into the simulator.

Support for the enactment of this script could be given in written instructions and teacher-led introductions and integrated into the software, for example, through just-in-time information and more elaborated feedback. Following such a script structure is likely to slow the task-solving cycle but offers the advantage of making every task contribute more to the learner’s development. These scripts would thus act as filters that mediate learners’ interactions with their ecology of resources, supporting their negotiation with peers and enabling them to act as more able partners. These scripts would increase the value of simulations as a means of overcoming the contradiction between learning and patient safety posed by the apprenticeship model of health care education.

This script, of course, is merely an outline of an idea that might change when put in the context of a full-scale ecology of resources redesign effort. It is possible that qualitative analysis of additional samples would refine the conclusions drawn from the empirical data presented in this article. In addition, quantitative content analyses structured by categories inferred from these qualitative findings could be used to investigate the generality of identified interaction patterns to a larger population (Stegmann & Fischer, 2011). Once the scripts are developed, we would need to evaluate how they actually influence peer interaction under a number of variable conditions.
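To make the outlined script concrete enough to evaluate, its role rotation could be expressed in code. The following is a minimal sketch, not an implementation from the project: the function `rotate_roles`, the `PROMPTS` table, and the rule that roles shift by one seat per completed task are assumptions made for illustration. Only the role names, the student names, and the generic question are taken from the text above.

```python
from collections import deque

# Hypothetical prompt texts per role; only the quoted generic question comes from the paper.
PROMPTS = {
    "operator": "Explain what you observe, which principle applies, and what you will do.",
    "regulator": "Ask, e.g., 'Why do you conclude that X is Y?' Summarize the task at the end.",
}


def rotate_roles(students, completed_tasks):
    """Return {student: role} for the next task.

    Assumption: exactly one operator per task, everyone else regulates,
    and the operator seat moves to the next student after each completed task.
    """
    seats = deque(students)
    seats.rotate(-completed_tasks)  # shift left once per completed task
    roles = ["operator"] + ["regulator"] * (len(seats) - 1)
    return dict(zip(seats, roles))


# Usage with the triad from Transcript 1.
group = ["Mary", "Ava", "Eli"]
for task_number in range(3):
    assignment = rotate_roles(group, task_number)
    operator = next(name for name, role in assignment.items() if role == "operator")
    print(f"Task {task_number + 1}: operator = {operator}")
    print("  operator prompt: ", PROMPTS["operator"])
    print("  regulator prompt:", PROMPTS["regulator"])
```

A fixed rotation is only one possible design. It guarantees that a silent learner takes the operator role every third task in a triad, but it does not adapt to individual needs.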
In educational practice, one size will not fit all. What is needed instead is multiple scripts and/or a mechanism for adapting these scripts to needs that vary between learners as well as within learners over time. Weinberger and colleagues view the “continuous adaptation of scripts to learners’ needs and knowledge” as a major challenge for script research (Weinberger et al., 2009: p. 155). This should be a relevant area of future research.

A different course of action, which may require a more intrusive re-design of the simulator, would be in line with the separate control over shared space paradigm suggested by Kerawalla et al. (2005, 2008). This approach would enable all participating students to display their individual solutions on the same screen, encouraging comparisons and discussions. However, even with such a multi-user interface, students seem to need some sort of script, because the technology on its own cannot correct poor collaborative skills (Luckin, 2010: p. 70).

Acknowledgements

This paper draws upon data from the Learning Radiology in Simulated Environments project, a research and development collaboration between Umeå University and Stanford University funded by the Wallenberg Global Learning Network.

REFERENCES

Aronson, B., Blaney, N., Stephan, C., Sikes, J., & Snapp, M. (1978). The jigsaw classroom. Beverly Hills, CA: Sage.
Aggarwal, R., & Darzi, A. (2006). Technical-skills training in the 21st century. New England Journal of Medicine, 355, 2695-2696.
doi:10.1056/NEJMe068179
Aggarwal, R., Grantcharov, T., Moorthy, K., Hance, J., & Darzi, A. (2006). A competency-based virtual reality training curriculum for the acquisition of laparoscopic psychomotor skill. American Journal of Surgery, 191, 128-133.
doi:10.1016/j.amjsurg.2005.10.014
Blaye, A., Light, P. H., Joiner, R., & Sheldon, S. (1991). Joint planning and problem solving on a computer-based task. British Journal of Developmental Psychology, 9, 471-483.
doi:10.1111/j.2044-835X.1991.tb00890.x Bradley, P. (2006). The history of simulation in medical education and possible future directions. Medical Education, 40, 254-262. doi:10.1111/j.1365-2929.2006.02394.x Copyright © 2012 SciRes. 793  L. O. HÄLL Brunner, W. C., Korndorffer, J. R., Sierra, R., Massarweh, N. N., Dunne, J. B., Yau, C. L., & Scott, D. J. (2004). Laparoscopic virtual reality training: Are 30 repetitions enough? Journal of Surgical Re- search, 122, 150-156. doi:10.1016/j.jss.2004.08.006 Cohen, E. G. (1994). Restructuring the classroom: Conditions for pro- ductive small groups. Review of Educational Research, 64, 1-35. doi:10.3102/00346543064001001 Debas, H. T., Bass, B. L., Brennan, M. R., Flynn, T. C., Folse, R., Freischlag, J. A., Friedmann, P., Greenfield, L. J., Jones, R. S., Lewis, F. R., Malangoni, M. A., Pellegrini, C. A., Rose, E. A., Sachdeva, A. K., Sheldon, G. F., Turner, P. L., Warshaw, A. L., Welling, R. E., & Zinner, M. J. (2005). American surgical association blue ribbon committee report on surgical education: 2004. Annals of Surgery, 241, 1-8. doi:10.1097/01.sla.0000150066.83563.52 De Leng, B. A., Muijtjens, A. M., & Van der Vleuten, C. P. (2009). The effect of face-to-face collaborative learning on the elaboration of computer-based simulated cases. Simulation in Healthcare, 4, 217- 222. doi:10.1097/SIH.0b013e3181a39612 Dieckmann, P., & Rall, M. (2008). Designing a scenario as a simulated clinical experience: The TuPASS scenario script. In R. Kyle, & B. W. Murray (Eds.), Clinical simulation: Operations, engineering, and management (pp. 541-550). Burlington: Academic Press. doi:10.1016/B978-012372531-8.50096-0 Dillenbourg, P., Baker, M., Blaye, A., & O’Malley, C. (1996). The evolution of research on collaborative learning. In E. Spada, & P. Reiman (Eds.), Learning in humans and machine: Towards an inter- disciplinary learning science (pp. 189-211). Oxford: Elsevier. Dillenbourg, P. (2002). 
Over-scripting CSCL: The risks of blending collaborative learning with instructional design. In P. A. Kirschner (Ed.), Three worlds of CSCL. Can we support CSCL? (pp. 61-91). Heerlen: Open Universiteit Nederland.

Dillenbourg, P., & Hong, F. (2008). The mechanics of macro scripts. International Journal of Computer-Supported Collaborative Learning, 3, 5-23. doi:10.1007/s11412-007-9033-1

Dillenbourg, P., Järvelä, S., & Fischer, F. (2009). The evolution of research on computer-supported collaborative learning. In N. Balacheff, S. Ludvigsen, T. de Jong, A. Lazonder, & S. Barnes (Eds.), Technology enhanced learning (pp. 3-19). Berlin: Springer. doi:10.1007/978-1-4020-9827-7_1

Dubrowski, A., & MacRae, H. (2006). Randomised, controlled study investigating the optimal instructor: Student ratios for teaching suturing skills. Medical Education, 40, 59-63. doi:10.1111/j.1365-2929.2005.02347.x

Fior, M. N. (2008). Self and collective efficacy as correlates of group participation: A comparison of structured and unstructured computer-supported collaborative learning conditions. Unpublished Master’s Thesis, Victoria: University of Victoria.

Fischer, F., Kollar, I., Mandl, H., & Haake, J. M. (Eds.) (2007). Scripting computer-supported collaborative learning: Cognitive, computational, and educational perspectives. New York: Springer. doi:10.1007/978-0-387-36949-5

Fonseca, B. A., & Chi, M. T. H. (2010). Instruction based on self-explanation. In R. E. Mayer, & P. A. Alexander (Eds.), Handbook of research on learning and instruction (pp. 296-321). New York: Routledge.

Fraisse, J. (1987). Study of the disruptive role of the partner in the discovery of a cognitive strategy in children 11 years during social interaction. Bulletin de Psychologie, 382, 943-952.

Gaba, D. M. (2004). The future vision of simulation in health care. Quality and Safety in Health Care, 13, 2-10. doi:10.1136/qshc.2004.009878

Hassan, I., Maschuw, K., Rothmund, M., Koller, M., & Gerdes, B. (2006).
Novices in surgery are the target group of a virtual reality training laboratory. European Surgical Research, 38, 109-113. doi:10.1159/000093282

Heath, C., Hindmarsh, J., & Luff, P. (2010). Video in qualitative research. London: Sage.

Hmelo, C., & Day, R. (1999). Contextualized questioning to scaffold learning from simulations. Computers & Education, 32, 151-164. doi:10.1016/S0360-1315(98)00062-1

Hmelo-Silver, C. E. (2003). Analyzing collaborative knowledge construction: Multiple methods for integrated understanding. Computers & Education, 41, 397-420. doi:10.1016/j.compedu.2003.07.001

Holzinger, A., Kickmeier-Rust, M. D., Wassertheurer, S., & Hessinger, M. (2009). Learning performance with interactive simulations in medical education: Lessons learned from results of learning complex physiological models with the Haemodynamics Simulator. Computers & Education, 52, 292-301. doi:10.1016/j.compedu.2008.08.008

Häll, L. O., Söderström, T., Nilsson, T., & Ahlqvist, J. (2009). Integrating computer based simulation training into curriculum—Complicated and time consuming? In K. Fernstrom, & J. Tsolakidis (Eds.), Readings in technology and education: Proceedings of ICICTE 2009 (pp. 90-98). Fraser Valley: University of the Fraser Valley Press.

Häll, L. O., & Söderström, T. (2012). Designing for learning in computer-assisted health care simulations. In J. O. Lindberg, & A. D. Olofsson (Eds.), Informed design of educational technologies in higher education: Enhanced learning and teaching (pp. 167-192). Hershey, PA: IGI Global. doi:10.4018/978-1-61350-080-4.ch010

Häll, L. O., Söderström, T., Nilsson, T., & Ahlqvist, J. (2011). Collaborative learning with screen-based simulation in health care education: An empirical study of collaborative patterns and proficiency development. Journal of Computer Assisted Learning, 27, 448-461. doi:10.1111/j.1365-2729.2011.00407.x

Issenberg, S. B., McGaghie, W. C., Petrusa, E. R. I., Lee, G. D., & Scalese, R. J. (2005).
Features and uses of high-fidelity medical simulations that lead to effective learning: A BEME systematic review. Medical Teacher, 27, 10-28. doi:10.1080/01421590500046924

Issenberg, B., Ringsted, C., Ostergaard, D., & Dieckmann, P. (2011). Setting a research agenda for simulation-based healthcare education: A synthesis of the outcome from an Utstein style meeting. Simulation in Healthcare, 6, 155-167. doi:10.1097/SIH.0b013e3182207c24

Johnson, D. W., & Johnson, R. T. (1999). Learning together and alone. Cooperative, competitive and individualistic learning (5th ed.). Boston, MA: Allyn & Bacon.

Jordan, B., & Henderson, A. (1995). Interaction analysis: Foundations and practice. The Journal of the Learning Sciences, 4, 39-103. doi:10.1207/s15327809jls0401_2

Kollar, I., Fischer, F., & Hesse, F. W. (2006). Collaboration scripts—A conceptual analysis. Educational Psychology Review, 18, 159-185. doi:10.1007/s10648-006-9007-2

Koschmann, T., LeBaron, C., Goodwin, C., & Feltovich, P. (2011). “Can you see the cystic artery yet?” A simple matter of trust. Journal of Pragmatics, 43, 521-541. doi:10.1016/j.pragma.2009.09.009

Krippendorff, K. (2004). Content analysis: An introduction to its methodology. Thousand Oaks, CA: Sage.

Kössi, J., & Luostarinen, M. (2009). Virtual reality laparoscopic simulator as an aid in surgical resident education: Two years’ experience. Scandinavian Journal of Surgery, 98, 48-54.

Liu, H. C., Andre, T., & Greenbowe, T. (2008). The impact of learner’s prior knowledge on their use of chemistry computer simulations: A case study. Journal of Science Education and Technology, 17, 466-482. doi:10.1007/s10956-008-9115-5

Luckin, R. (2010). Re-designing learning contexts. Technology-rich, learner centered ecologies. London: Routledge.

Luengo, V., Aboulafia, A., Blavier, A., Shorten, G., Vadcard, L., & Zottmann, J. (2009). Novel technology for learning in medicine. In N. Balacheff, S. Ludvigsen, T. de Jong, A. Lazonder, & S.
Barnes (Eds.), Technology enhanced learning (pp. 105-120). Berlin: Springer. doi:10.1007/978-1-4020-9827-7_7

McGaghie, W. C., Issenberg, S. B., Petrusa, E. R., & Scalese, R. J. (2010). A critical review of simulation-based medical education research: 2003-2009. Medical Education, 44, 50-63. doi:10.1111/j.1365-2923.2009.03547.x

Mulryan, C. M. (1992). Student passivity in cooperative small groups in mathematics. Journal of Educational Research, 85, 261-273. doi:10.1080/00220671.1992.9941126

Naughton, P. A., Aggarwal, R., Wang, T. T., Van Herzeele, I., Keeling, A. N., Darzi, A. W., & Cheshire, N. J. W. (2011). Skills training after night shift work enables acquisition of endovascular technical skills on a virtual reality simulator. Journal of Vascular Surgery, 53, 858-866. doi:10.1016/j.jvs.2010.08.016

Nilsson, T., Söderström, T., Häll, L. O., & Ahlqvist, J. (2006). Collaborative learning efficiency in simulator-based and conventional radiology training. The 10th European Congress of DentoMaxilloFacial Radiology. Leuven [Not Published].

Nilsson, T. A. (2007). Simulation supported training in oral radiology. Methods and impact in interpretative skill. Doctoral Dissertation, Umeå: Umeå University.

O’Malley, C. (1992). Designing computer systems to support peer learning. European Journal of Psychology of Education, 7, 339-352. doi:10.1007/BF03172898

Palincsar, A. S., & Brown, A. L. (1984). Reciprocal teaching of comprehension-fostering and comprehension-monitoring activities. Cognition and Instruction, 1, 117-175. doi:10.1207/s1532690xci0102_1

Prins, F. J., Veenman, M. V. J., & Elshout, J. J. (2006). The impact of intellectual ability and metacognition on learning: New support for the threshold of problematicity theory. Learning and Instruction, 16, 374-387. doi:10.1016/j.learninstruc.2006.07.008

Renkl, A. (1997). Lernen durch Lehren—Zentrale Wirkmechanismen beim kooperativen Lernen.
Wiesbaden: Deutscher Universitäts-Verlag.

Rogoff, B. (1990). Apprenticeship in thinking: Cognitive development in social context. Oxford: Oxford University Press.

Rogoff, B. (1991). Social interaction as apprenticeship in thinking: Guided participation in spatial planning. In L. Resnick, J. Levine, & S. Teasley (Eds.), Perspectives on socially shared cognition (pp. 349-364). Washington, DC: APA Books. doi:10.1037/10096-015

Roschelle, J., & Teasley, S. (1995). The construction of shared knowledge in collaborative problem solving. In C. E. O’Malley (Ed.), Computer supported collaborative learning (pp. 69-97). Heidelberg: Springer-Verlag. doi:10.1007/978-3-642-85098-1_5

Rummel, N., & Spada, H. (2005). Learning to collaborate: An instructional approach to promoting collaborative problem solving in computer-mediated settings. The Journal of the Learning Sciences, 14, 201-241. doi:10.1207/s15327809jls1402_2

Rystedt, H. (2002). Bridging practices: Simulations in education for the health care professions. Doctoral Dissertation, Gothenburg: Gothenburg University.

Rystedt, H., & Lindwall, O. (2004). The interactive construction of learning foci in simulation-based learning environments: A case study of an anesthesia course. PsychNology Journal, 2, 165-188.

See, L. C., Huang, Y. H., Chang, Y. H., Chiu, Y. J., Chen, Y. F., & Napper, V. S. (2010). Computer-enriched instruction (CEI) is better for preview material instead of review material: An example of a biostatistics chapter, the central limit theorem. Computers & Education, 55, 285-291. doi:10.1016/j.compedu.2010.01.014

Slavin, R. E. (1983). Cooperative learning. New York: Longman.

Slavin, R. E. (2010). Instruction based on cooperative learning. In R. E. Mayer, & P. A. Alexander (Eds.), Handbook of research on learning and instruction (pp. 334-360). New York: Routledge.

Smith, C. D., Farrell, T. M., McNatt, S. S., & Metreveli, R. E. (2001). Assessing laparoscopic manipulative skills.
American Journal of Surgery, 181, 547-550. doi:10.1016/S0002-9610(01)00639-0

Soller, A. (2001). Supporting social interaction in an intelligent collaborative learning system. International Journal of Artificial Intelligence in Education, 12, 166-187.

Stahl, G. (2011). Past, present and future of CSCL. The Knowledge Building Summer Institute 2011 and CSCL2011 Post-Conference at the South China Normal University. Guangzhou [Not Published]. URL. http://GerryStahl.net/pub/cscl2011guangzhou.ppt.pdf

Stegmann, K., Weinberger, A., & Fischer, F. (2007). Facilitating argumentative knowledge construction with computer-supported collaboration scripts. International Journal of Computer-Supported Collaborative Learning, 2, 421-447. doi:10.1007/s11412-007-9028-y

Stegmann, K., & Fischer, F. (2011). Quantifying qualities in collaborative knowledge construction. The analysis of online discussions. In S. Puntambekar, G. Erkens, & C. Hmelo-Silver (Eds.), Analyzing interactions in CSCL: Methods, approaches and issues (pp. 247-268). Berlin: Springer. doi:10.1007/978-1-4419-7710-6_12

Söderström, T., Häll, L. O., Nilsson, T., & Ahlqvist, J. (2008). How does computer based simulator training impact on group interaction and proficiency development? In Proceedings of ICICTE 2008: Readings in Technology and Education (pp. 650-659). Fraser Valley: University of Fraser Valley. URL. http://www.icicte.org/ICICTE2008Proceedings/soderstrom065.pdf

Söderström, T., & Häll, L. O. (2010). Computer assisted simulations—Students’ experiences of learning radiology. The Conference of the Australian Association for Research in Education. Melbourne [Not Published].

Söderström, T., Häll, L. O., Nilsson, T., & Ahlqvist, J. (2012). Patterns of interaction and dialogue in computer assisted simulation training. Procedia—Social and Behavioral Sciences, 46, 2825-2831. doi:10.1016/j.sbspro.2012.05.571

Tanner, C. A. (2004). Nursing education research: Investing in our future.
Journal of Nursing Education, 43, 99-100.

Van Sickle, K. R., Gallagher, A. G., & Smith, C. D. (2007). The effect of escalating feedback on the acquisition of psychomotor skills for laparoscopy. Surgical Endoscopy and Other Interventional Techniques, 21, 220-224. doi:10.1007/s00464-005-0847-5

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Cambridge, MA: Harvard University Press.

Webb, N. M. (1989). Peer interaction and learning in small groups. International Journal of Educational Research, 13, 21-39. doi:10.1016/0883-0355(89)90014-1

Weinberger, A., Ertl, B., Fischer, F., & Mandl, H. (2005). Epistemic and social scripts in computer-supported collaborative learning. Instructional Science, 33, 1-30. doi:10.1007/s11251-004-2322-4

Weinberger, A., Kollar, I., Dimitriadis, Y., Mäkitalo-Siegl, K., & Fischer, F. (2009). Computer-supported collaboration scripts. Perspectives from educational psychology and computer science. In N. Balacheff, S. Ludvigsen, T. de Jong, A. Lazonder, & S. Barnes (Eds.), Technology enhanced learning (pp. 3-19). Berlin: Springer. doi:10.1007/978-1-4020-9827-7_10

Wertsch, J. (1998). Mind as action. New York: Oxford University Press.

Wood, D., Bruner, J. S., & Ross, G. (1976). The role of tutoring in problem solving. Journal of Child Psychology and Psychiatry, 17, 89-100. doi:10.1111/j.1469-7610.1976.tb00381.x

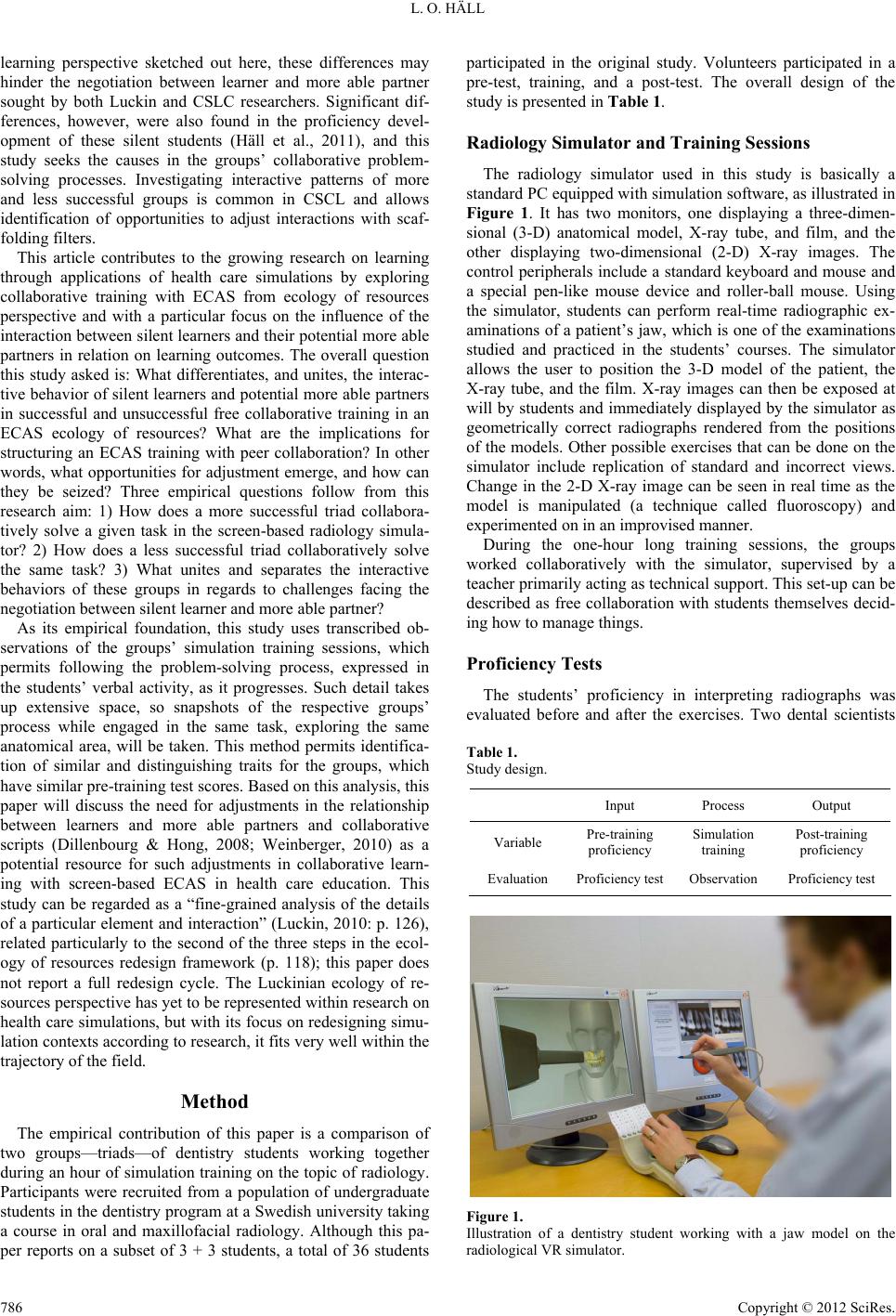

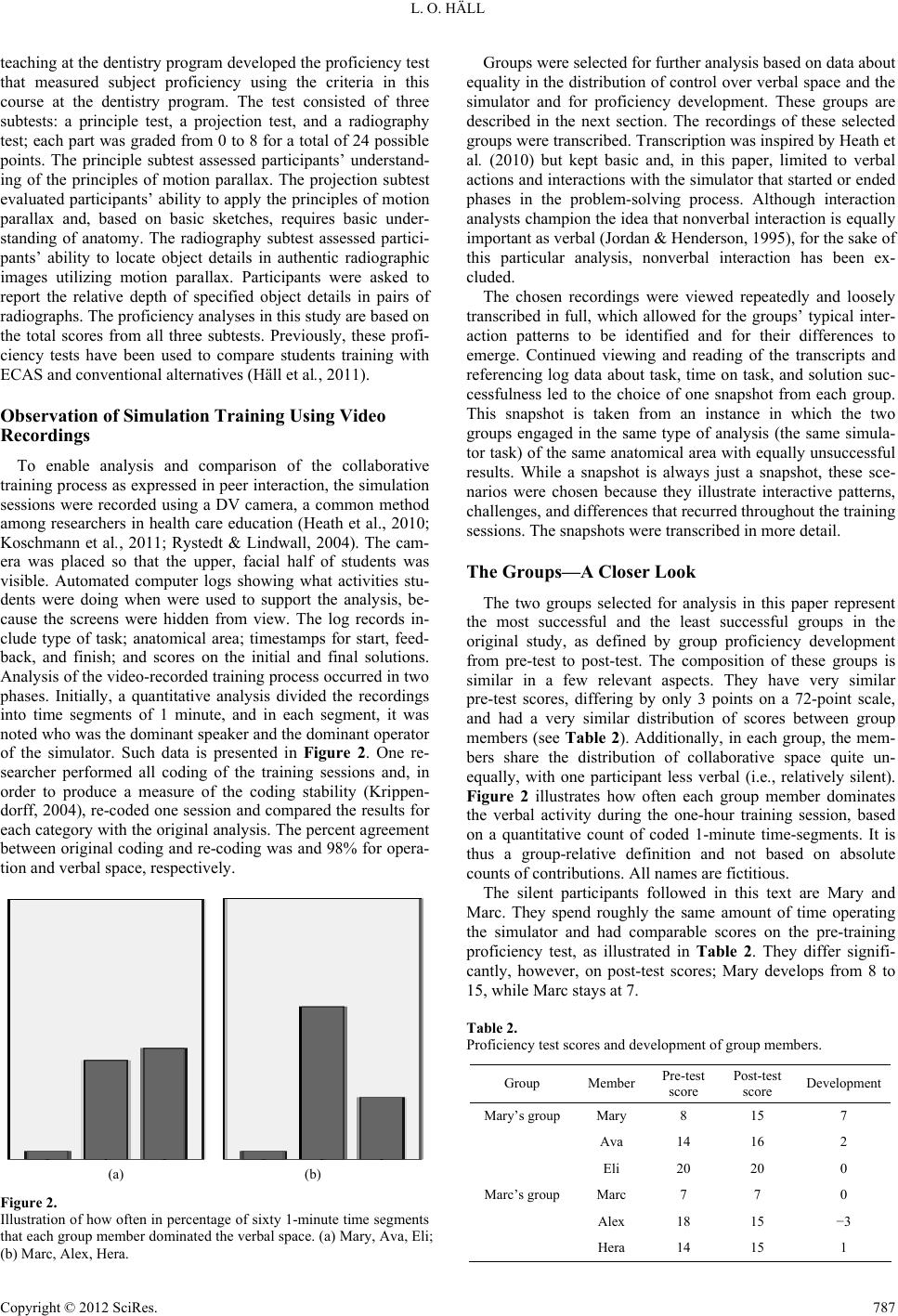

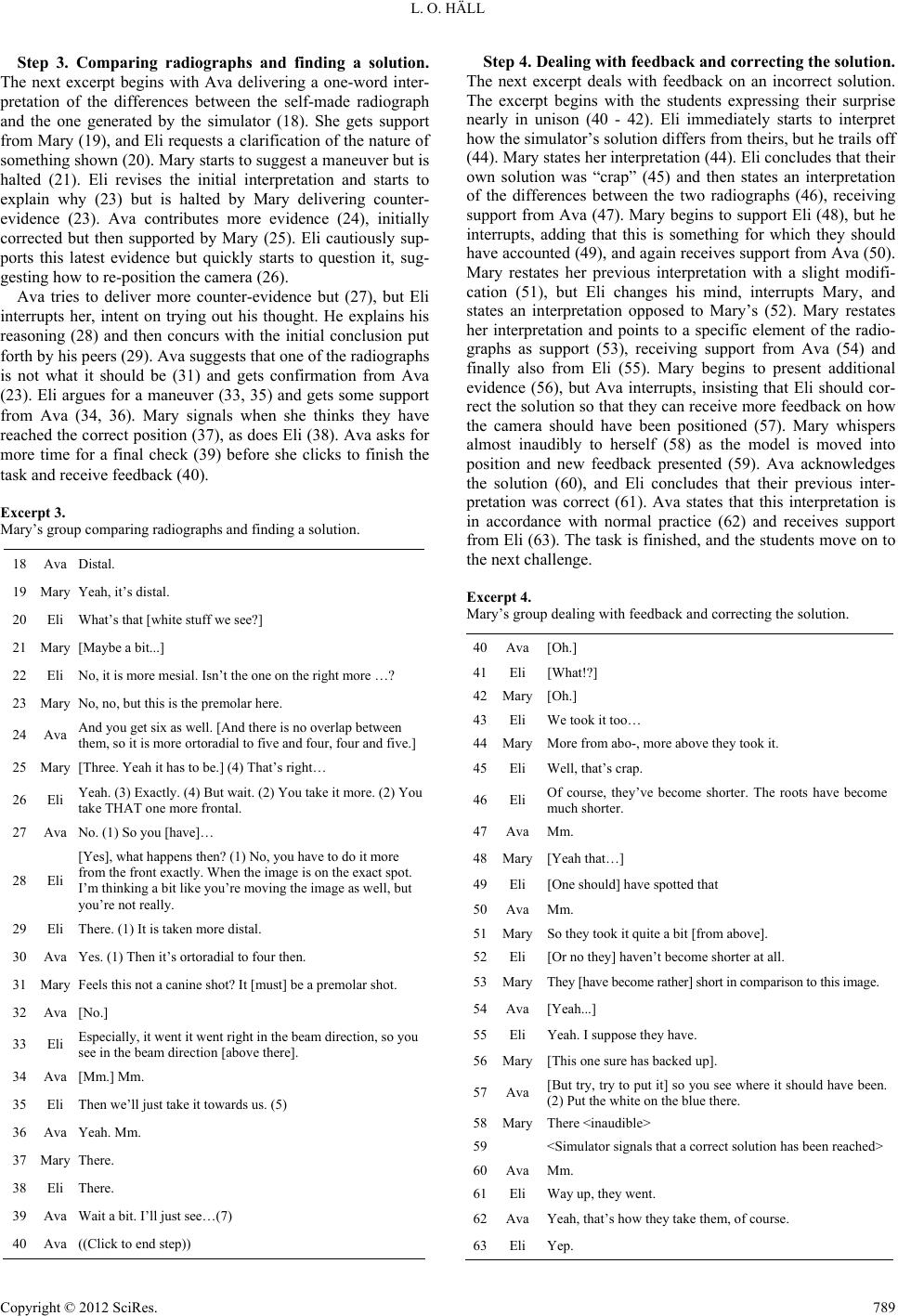

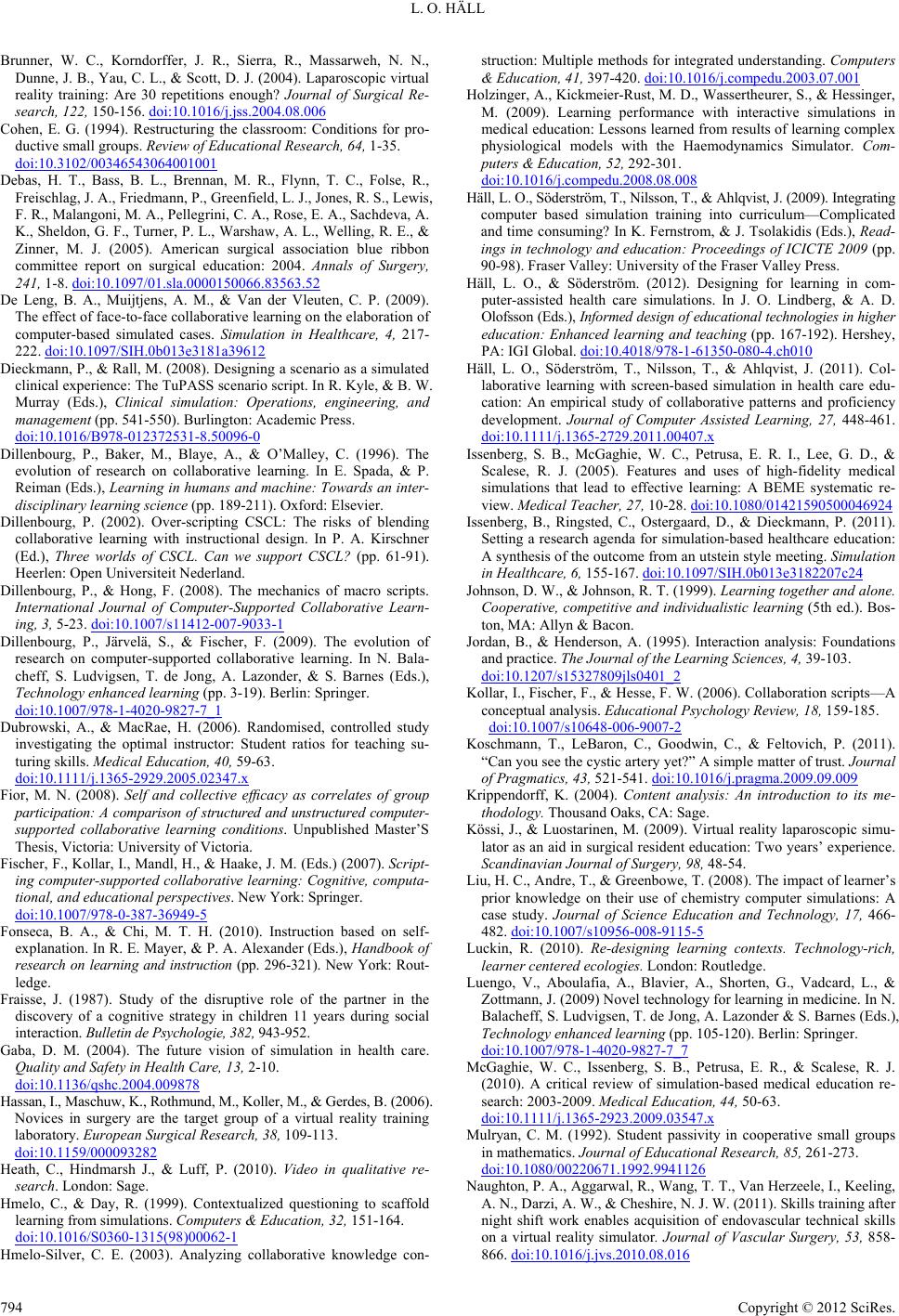
