Paper Menu >>

Journal Menu >>

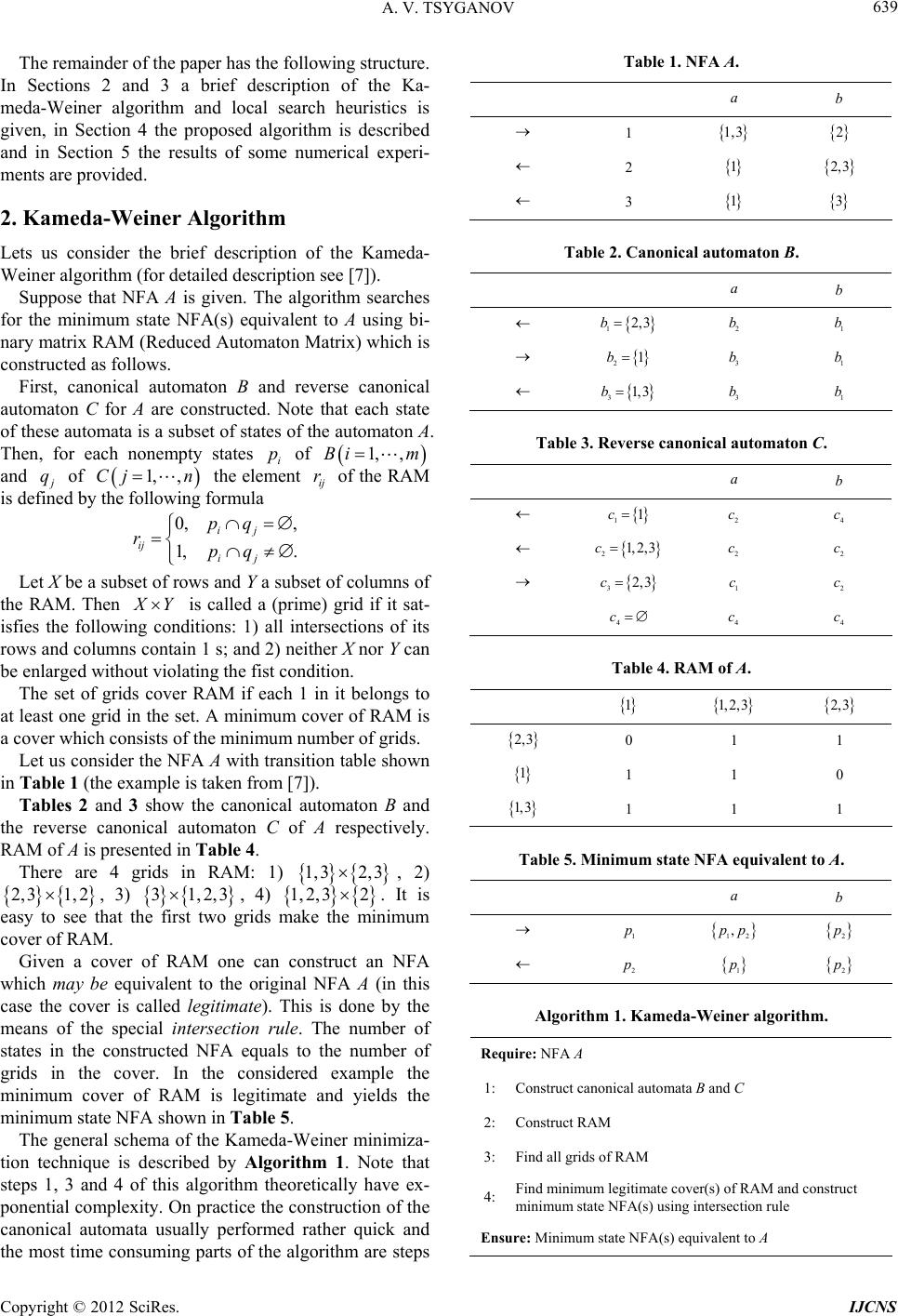

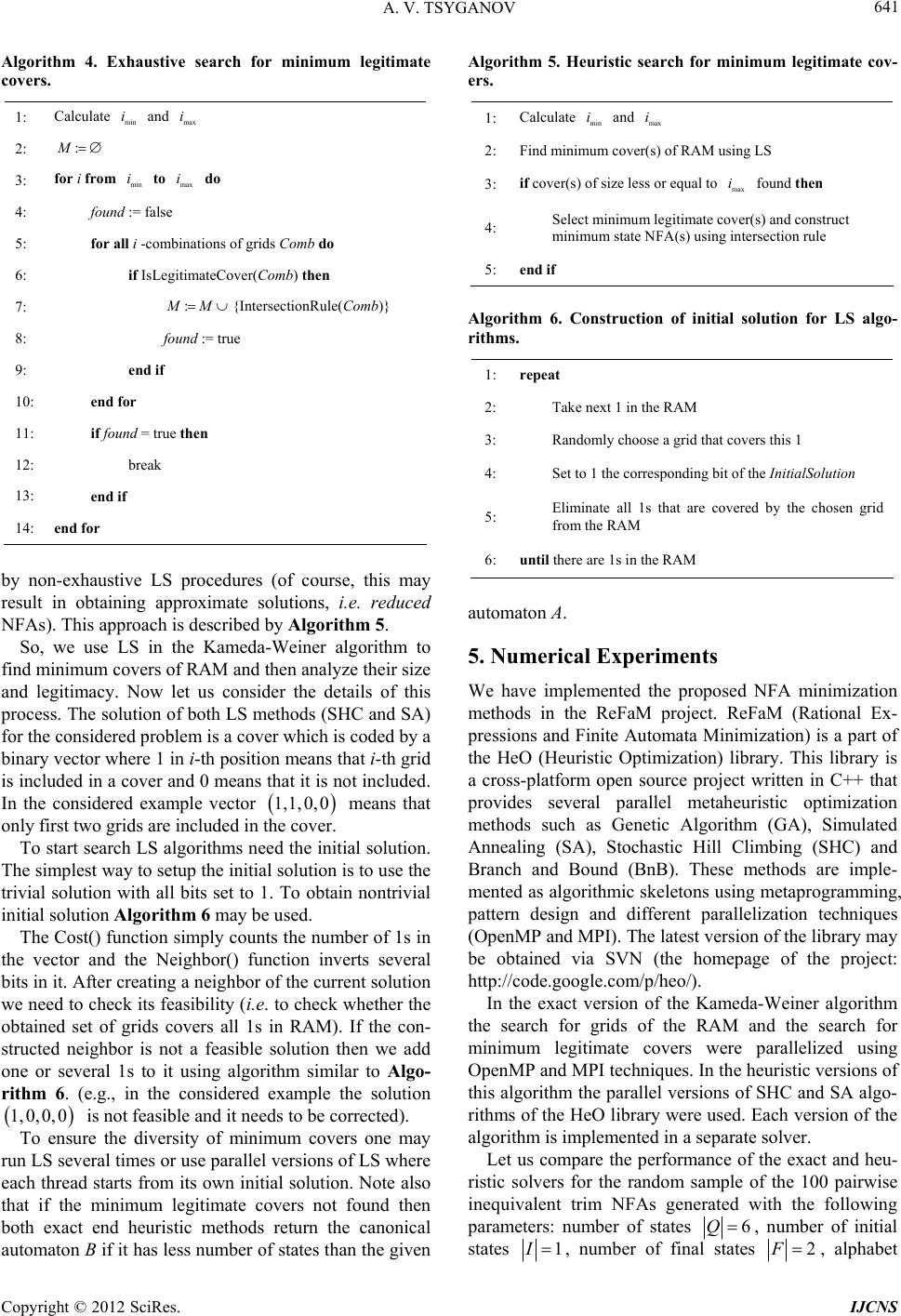

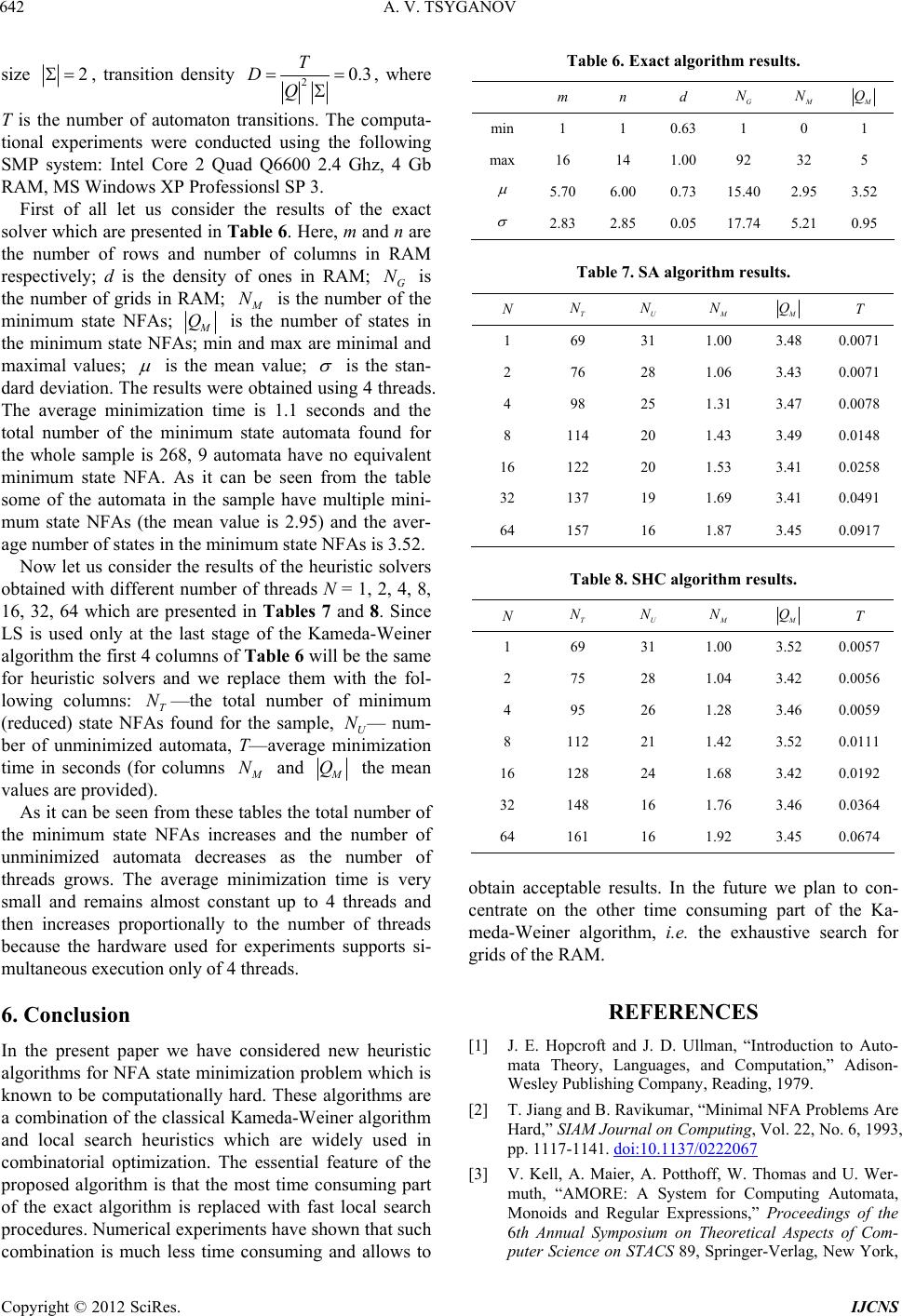

Int. J. Communications, Network and System Sciences, 2012, 5, 638-643 http://dx.doi.org/10.4236/ijcns.2012.529074 Published Online September 2012 (http://www.SciRP.org/journal/ijcns) Local Search Heuristics for NFA State Minimization Problem* Andrey V. Tsyganov Department of Higher Mathematics, Ulyanovsk State Pedagogical University, Ulyanovsk, Russia Email: andrew.tsyganov@gmail.com Received July 2, 2012; revised July 26, 2012; accepted August 6, 2012 ABSTRACT In the present paper we introduce new heuristic methods for the state minimization of nondeterministic finite automata. These methods are based on the classical Kameda-Weiner algorithm joined with local search heuristics, such as sto- chastic hill climbing and simulated annealing. The description of the proposed methods is given and the results of the numerical experiments are provided. Keywords: Nondeterministic Finite Automata; State Minimization; Heuristics; Local Search; Parallelism 1. Introduction Finite automata (FA) are widely used in various fields and especially in the theory of formal languages. We suppose that the reader is familiar with the basics of automata theory (see, for example, [1]) and provide only some necessary definitions. Let be an alphabet and n where i be a word. A set of words* is called a language 12 waa a :ia L . ,,,, The nondeterministic finite automaton (NFA) is a 5-tuple A QIF Q QQ , where is a finite set of states, is a finite alphabet, is a transi- tion relation, I Q and F Q are respectively the sets of initial and final states. Transitions of the automa- ton A are often described by the transition function . The NFA is called deterministic (DFA) iff :Q 2 Q 1I and ,:a ,1qa qQ (or ,1qa ). Finite automata may be used to recognize and define languages. Two automata are called equivalent if they recognize one and the same language. For each NFA the equivalent DFA may be constructed using the powerset construction process (each state of such DFA is a subset of states of the original NFA). For a word the reverse word is 12 n waa a 1nn waa a 1 , for a language L the reverse language is LwwL ,,,, and for an automaton A QIF the reverse automaton is ,, ,, A QFI where ,,qaq ij iff ,,qaq ji For a given language L a DFA which recognizes it and has the minimum possible number of states is called the canonical automaton and a DFA which recognizes . L and has the minimum possible number of states is called the reverse canonical automaton (these automata are unique for L up to isomorphism). The NFA state minimization problem is formulated as follows: for a given NFA A find an automaton which is equivalent to it and has the minimum possible number of states. Note that solution of this problem may not be unique. As it is shown in [2] the state minimization problem for NFA is PSPACE-complete. The worst case complexity for the same problem for DFA is logOQ Q . All known exact NFA state minimization methods use different types of exhaustive search and are computa- tionally hard. Very often they become impractical even for relatively small automata. This is one of the reasons why they are not implemented in software tools that deal with finite automata and related structures, such as AMoRE [3], FSM [4], Vaucanson [5], JFlap [6]. More- over, only few of such tools provide heuristic NFA state minimization algorithms. In the present paper we propose new heuristic methods for NFA state minimization problem which are based on the classical Kameda-Weiner algorithm [7] and well- known local search heuristics (metaheuristics). The nov- elty of these methods is that the most time consuming part of the exact algorithm is replaced with fast heuristic procedures. The obtained methods are not exact but they allow to reduce minimization time. *The work is supported by the Ministry of Education and Science o f the Russian Federation (grant n umber 1.919.2011). C opyright © 2012 SciRes. IJCNS  A. V. TSYGANOV 639 The remainder of the paper has the following structure. In Sections 2 and 3 a brief description of the Ka- meda-Weiner algorithm and local search heuristics is given, in Section 4 the proposed algorithm is described and in Section 5 the results of some numerical experi- ments are provided. 2. Kameda-Weiner Algorithm Lets us consider the brief description of the Kameda- Weiner algorithm (for detailed description see [7]). Suppose that NFA A is given. The algorithm searches for the minimum state NFA(s) equivalent to A using bi- nary matrix RAM (Reduced Automaton Matrix) which is constructed as follows. First, canonical automaton B and reverse canonical automaton C for A are constructed. Note that each state of these automata is a subset of states of the automaton A. Then, for each nonempty states i of and p 1, ,Bi m j q of the element of the RAM is defined by the following formula , ij r 1,Cj n , . ij ij pq pq 0, 1, ij r Let X be a subset of rows and Y a subset of columns of the RAM. Then X Y 1,3 2,3 2, 1,2,3 2 ab is called a (prime) grid if it sat- isfies the following conditions: 1) all intersections of its rows and columns contain 1 s; and 2) neither X nor Y can be enlarged without v iolating the fist condition. The set of grids cover RAM if each 1 in it belongs to at least one grid in the set. A minimum cover of RAM is a cover which consists of the minimum number of grids. Let us consider the NFA A with transitio n table shown in Table 1 (the example is taken from [7]). Tables 2 and 3 show the canonical automaton B and the reverse canonical automaton C of A respectively. RAM of A is presented in Table 4. There are 4 grids in RAM: 1) , 2) , 3) , 4) . It is easy to see that the first two grids make the minimum cover of RAM. 3 1,2 31,2,3 Given a cover of RAM one can construct an NFA which may be equivalent to the original NFA A (in this case the cover is called legitimate). This is done by the means of the special intersection rule. The number of states in the constructed NFA equals to the number of grids in the cover. In the considered example the minimum cover of RAM is legitimate and yields the minimum state NFA shown in Table 5. The general schema of the Kameda-Weiner minimiza- tion technique is described by Algorithm 1. Note that steps 1, 3 and 4 of this algorithm theoretically have ex- ponential complexity. On practice the construction of the canonical automata usually performed rather quick and the most time consuming parts of the algorithm are steps Table 1. NFA A. 1 1,3 2 2 1 2,3 3 1 3 Table 2. Canonical automaton B. ab 12,3b2 b1 b 21b3 b1 b 31,3b3 b1 b Table 3. Reverse canonical automaton C. ab 11c2 c4 c 21, 2,3c2 c2 c 32,3c1 c2 c 4 c 4 c4 c Table 4. RAM of A. 1 1,2,3 2,3 2,3 0 1 1 1 1 1 0 1,3 1 1 1 Table 5. Minimum state NFA equivalent to A. ab 1 p 12 , p p 2 p 2 p 1 p 2 p Algorithm 1. Kameda-Weiner algorithm. Require: NFA A 1:Cons truct canonical automata B and C 2:Const ruct RAM 3:Find all g rids of RAM 4: Find minimum legiti mate c ove r(s) of RAM and construct minimum state NFA(s) using intersec tion rule Ensure: Minimum state NFA(s) equivalent to A Copyright © 2012 SciRes. IJCNS  A. V. TSYGANOV 640 3 and 4 which perform the exhaustive search for grids and covers respectively. 3. Local Search Heuristics Local Search (LS) is a group of metaheuristic optimiza- tion techniques which are widely used especially in com- binatorial optimization. Its general schema is described by Algori thm 2. Each LS algorithm starts from some initial solution (line 1) and then iteratively updates it (lines 2 - 4) until stop condition is satisfied (in the most simple case it is the maximum number of steps). The Neighbor() function finds neighbors of the current solution Solution and the Update() function changes the current solution depending on the found neighbors. The quality of the solutions is compared using the special Cost() function. The simplest LS algorithm is called Hill Climbing (HC). In HC the Update() function always selects the best neighbor of the current solution (the steepest move). The Stochastic Hill Climbing (SHC) is a variant of HC which randomly chooses one of the neighbors and decides whether to move to it or to consider another neighbor. The disadvantage of the HC algorith ms is that th ey can easily stuck in the local optimum. The Simulated An- nealing (SA) is a more complicated LS algorithm which tries to avoid this problem. Algorithm 3 shows in detail the minimization process using SA. The distinctive featur e of the algorithm is the usage of the control parameter T (temperature) which slowly de- creases as the number of iterations k increases. The Neighbor() function generates a neighbor of the current solution and the Update() function accepts it with the probability 1, P if 0; e,if 0, T ost Solution (1) where . Cost NewSolutionC As it follows from (1) the algorithm always accepts the generated neighbor if it is not worse than the current so- lution. If the neighbor is worse than the current solution it is accepted with some probability which decreases as the temperature decreases. So, the worse moves are made more often in the beginning of the optimization process. In classical SA the best solution is not stored (lines 2, 3, 8 - 12 are missing) and the algorithm returns the current solution. More details on heuristic optimization algorithms can be found in [8,9]. 4. Combinig Kameda-Weiner Algorithm with Local Search Heuristics First of all let us consider in more detail the last step of Algorithm 2. Local search. Require: InitialSolution 1: Solution := InitialSolution 2: repeat 3: Solution := Update(Neigh bo r(Solution)) 4: until StopCondition() Ensure: Solution Algorithm 3. Simulated annealing. Require: InitialSolution 1: Solution := InitialSolution 2: BestSolution := Solution 3: BestCost := Cost(Solution) 4: T := InitialTemperatue() 5: k := 0 6: while not StopCondition() do 7: NewSolution := Neighbor(Solution) 8: NewCost := Cost(NewSolution) 9: if NewCost < BestCost then 10: BestSolution := NewSolution 11: BestCost := NewCost 12: end if 13: Solution := Update(Solution, NewSolution, T) 14: k := k + 1 15: T := Update Temperature(T, k) 16: end while Ensure: BestSolution the Kameda-Weiner algorithm, i.e. the exhaustive search for minimum legitimate covers (see Algorithm 4). Here M is a set of minimum state NFAs. IsLegitimateCover() function tests whether the set of grids is a legitimate cover and IntersectionRule() constructs NFA using the cover. The bounds and of the main loop may min be calculated as follows: imax i min 2 log B iN where B N is the number of states in canonical automaton B, min, 1, 1iNNN max GA B N where G N is the number of grids in RAM, A is the number of states in A and B is the number of states in B. NFor each step of the outer loop in the inner loop (lines 5 - 10) all possible i-combinations of grids have to be analyzed. The idea of the heuristic methods proposed in this paper is to replace this computationally hard process Copyright © 2012 SciRes. IJCNS  A. V. TSYGANOV 641 Algorithm 4. Exhaustive search for minimum legitimate covers. 1: Calculate and min imax i 2: :M min imax i : MM 3: for i from to do 4: found := false 5: for all i -combinations of grids Comb do 6: if IsLegitimateCover(Comb) then 7: {IntersectionRule(Comb)} 8: found := true 9: end if 10: end for 11: if found = true then 12: break 13: end if 14: end for by non-exhaustive LS procedures (of course, this may result in obtaining approximate solutions, i.e. reduced NFAs). This approach is described by Algorithm 5. So, we use LS in the Kameda-Weiner algorithm to find minimum covers of RAM and then analyze their size and legitimacy. Now let us consider the details of this process. The solution of both LS methods (SHC and SA) for the considered problem is a cover which is coded by a binary vector where 1 in i-th position means that i-th grid is included in a cover and 0 means that it is no t included. In the considered example vector means that only first two grids are included in the cover. 1,1,0,0 1,0,0,0 min imax To start search LS algorithms need the initial so lution. The simplest way to setup the initial solution is to use the trivial solution with all bits set to 1. To obtain nontrivial initial solution Algorithm 6 may be used. The Cost() function simply counts the number of 1s in the vector and the Neighbor() function inverts several bits in it. After creating a neighbor of the current solutio n we need to check its feasibility (i.e. to check whether the obtained set of grids covers all 1s in RAM). If the con- structed neighbor is not a feasible solution then we add one or several 1s to it using algorithm similar to Algo- rithm 6. (e.g., in the considered example the solution is not feasible and it needs to be corrected ). To ensure the diversity of minimum covers one may run LS several times or use parallel versions of LS where each thread starts from its own initial solution. Note also that if the minimum legitimate covers not found then both exact end heuristic methods return the canonical automaton B if it has less number of states than the given Algorithm 5. Heuristic search for minimum legitimate cov- ers. 1: Calculate and i 2:Find minimum cover(s) of RAM using LS 3: if cover(s) of size less or equal to found then max i 4: Selec t mi n i mum le g i t i ma t e cover(s) and construct minimum state NFA(s) using intersection rule 5: end if Algorithm 6. Construction of initial solution for LS algo- rithms. 1: repeat 2: Take next 1 in the RAM 3: Randomly choose a grid that covers this 1 4: Set to 1 the corresponding bit of the InitialSolution 5: Eliminate all 1s that are covered by the chosen grid from the RAM 6: until there are 1s in the RAM automaton A. 5. Numerical Experiments We have implemented the proposed NFA minimization methods in the ReFaM project. ReFaM (Rational Ex- pressions and Finite Automata Minimization) is a part of the HeO (Heuristic Optimization) library. This library is a cross-platform open source project written in C++ that provides several parallel metaheuristic optimization methods such as Genetic Algorithm (GA), Simulated Annealing (SA), Stochastic Hill Climbing (SHC) and Branch and Bound (BnB). These methods are imple- mented as algorithmic skeletons using metaprogramming, pattern design and different parallelization techniques (OpenMP and MPI). The latest version of the library may be obtained via SVN (the homepage of the project: http://code.google.com/p/heo/). In the exact version of the Kameda-Weiner algorithm the search for grids of the RAM and the search for minimum legitimate covers were parallelized using OpenMP and MPI techniques. In the heuristic versions of this algorithm the parallel versions of SHC and SA algo- rithms of the HeO library were used. Each version of the algorithm is implemented in a separate solver. Let us compare the performance of the exact and heu- ristic solvers for the random sample of the 100 pairwise inequivalent trim NFAs generated with the following parameters: number of states 6Q, number of initial states 1I , number of final states 2F, alphabet Copyright © 2012 SciRes. IJCNS  A. V. TSYGANOV 642 size 2 , transition density 20.3 T Q N D, where T is the number of automaton transitions. The computa- tional experiments were conducted using the following SMP system: Intel Core 2 Quad Q6600 2.4 Ghz, 4 Gb RAM, MS Wind o ws XP P r of es si onsl SP 3. First of all let us consider the results of the exact solver which are presen ted in Table 6. Here, m and n are the number of rows and number of columns in RAM respectively; d is the density of ones in RAM; G is the number of grids in RAM; M N is the number of the minimum state NFAs; M Q is the number of states in the mini mum state NFAs; min and max are minimal and maximal values; is the mean value; is the stan- dard deviation. The results were obtained using 4 threads. The average minimization time is 1.1 seconds and the total number of the minimum state automata found for the whole sample is 268, 9 automata have no equivalent minimum state NFA. As it can be seen from the table some of the automata in the sample have multiple mini- mum state NFAs (the mean value is 2.95) and the aver- age number of states in the minimum state NFAs is 3.52. Now let us consider the results of the heuristic solvers obtained with different number of threads N = 1, 2, 4, 8, 16, 32, 64 which are presented in Tables 7 and 8. Since LS is used only at the last stage of the Kameda-Weiner algorithm the first 4 columns of Table 6 will be the same for heuristic solvers and we replace them with the fol- lowing columns: T—the total number of minimum (reduced) state NFAs found for the sample, U— num- ber of unminimized automata, T—average minimization time in seconds (for columns NN M N and M Q G N the mean values are provided). As it can be seen from these tables the total number of the minimum state NFAs increases and the number of unminimized automata decreases as the number of threads grows. The average minimization time is very small and remains almost constant up to 4 threads and then increases proportionally to the number of threads because the hardware used for experiments supports si- multaneous execution only of 4 threads. 6. Conclusion In the present paper we have considered new heuristic algorithms for NFA state minimization problem which is known to be computationally hard. These algorithms are a combination of the classical Kameda-Weiner algorithm and local search heuristics which are widely used in combinatorial optimization. The essential feature of the proposed algorithm is that the most time consuming part of the exact algorithm is replaced with fast local search procedures. Numerical experiments have shown that such combination is much less time consuming and allows to Table 6. Exact algorithm results. m n d M N M Q min 1 1 0.63 1 0 1 max 16 14 1.00 92 32 5 5.70 6.00 0.73 15.40 2.95 3.52 2.83 2.85 0.05 17.74 5.21 0.95 Table 7. SA algorithm results. T NU N N M N M Q T 1 69 31 1.00 3.48 0.0071 2 76 28 1.06 3.43 0.0071 4 98 25 1.31 3.47 0.0078 8 114 20 1.43 3.49 0.0148 16 122 20 1.53 3.41 0.0258 32 137 19 1.69 3.41 0.0491 64 157 16 1.87 3.45 0.0917 Table 8. SHC algorithm results. T NU N N M N M Q T 1 69 31 1.00 3.52 0.0057 2 75 28 1.04 3.42 0.0056 4 95 26 1.28 3.46 0.0059 8 112 21 1.42 3.52 0.0111 16 128 24 1.68 3.42 0.0192 32 148 16 1.76 3.46 0.0364 64 161 16 1.92 3.45 0.0674 obtain acceptable results. In the future we plan to con- centrate on the other time consuming part of the Ka- meda-Weiner algorithm, i.e. the exhaustive search for grids of the RAM. REFERENCES [1] J. E. Hopcroft and J. D. Ullman, “Introduction to Auto- mata Theory, Languages, and Computation,” Adison- Wesley Publishing Company, Reading, 1979. [2] T. Jiang and B. Ravikumar, “Minimal NFA Problems Are Hard,” SIAM Journal on Computing, Vol. 22, No. 6, 1993, pp. 1117-1141. doi:10.1137/0222067 [3] V. Kell, A. Maier, A. Potthoff, W. Thomas and U. Wer- muth, “AMORE: A System for Computing Automata, Monoids and Regular Expressions,” Proceedings of the 6th Annual Symposium on Theoretical Aspects of Com- puter Science on STACS 89, Springer-Verlag, New York, Copyright © 2012 SciRes. IJCNS  A. V. TSYGANOV Copyright © 2012 SciRes. IJCNS 643 1989, pp. 537-538. [4] M. Mohri, F. Pereira and M. Riley, “AT&T General- Purpose Finite-State Machine Software Tools,” 1997. http://www.research.att.com/sw/tools/fsm [5] S. Lombardy, R. Poss, Y. Régis-Gianas and J. Sa- karovitch, “Introducing VAUCANSON,” In: O. H. Ibarra and Z. Dang, Eds., Implementation and Application of Automata, CIAA 2003, Santa Barbara, 16-18 July 2003, pp. 96-107. [6] S. H. Rodger, “JFLAP: An Interactive Formal Languages and Automata Package,” Jones and Bartlett Publishers, Inc., USA, 2006. [7] T. Kameda and P. Weiner, “On the State Minimization of Nondeterministic Finite Automata,” IEEE Transactions on Computers, Vol. C-19, No. 7, 1970, pp. 617-627. doi:10.1109/T-C.1970.222994 [8] J. Hromkovic, “Algorithmics for Hard Problems—Intro- duction to Combinatorial Optimization, Randomization, Approximation, and Heuristics,” Springer, Berlin, 2001. [9] F. Glover and G. A. Kohenberger, “Handbook of Meta- heuristics,” Kluwer Academic Publishers, Boston, 2003. |