Journal of Intelligent Learning Systems and Applications

Vol. 4 No. 1 (2012), Article ID: 17549, 9 pages. DOI: 10.4236/jilsa.2012.41002

A Comparative Study of Nonlinear Time-Varying Process Modeling Techniques: Application to Chemical Reactor

LARA Automatique, Tunis, Tunisia.

Email: errachdi_ayachi@yahoo.fr, ihsen.saad@enit.rnu.tn, mohamed.benrejeb@enit.rnu.tn

Received December 27th, 2010; revised May 12th, 2011; accepted May 22nd, 2011

Keywords: Nonlinear systems; time-varying systems; multi layer perceptron; radial basis function; gradient descent; genetic algorithms; optimization

ABSTRACT

This paper presents the design and a comparative study of two techniques for modeling nonlinear systems. Both approaches address a class of nonlinear systems with time-varying parameters. The first is a Radial Basis Function (RBF) neural network and the second is a Multi Layer Perceptron (MLP). The MLP model consists of an input layer, an output layer and usually one or more hidden layers; however, training an MLP network by back-propagation learning is computationally expensive, which motivates the use of an RBF network in this paper. The parameters of the RBF model are optimized by two methods, the Gradient Descent (GD) method and Genetic Algorithms (GA), whereas the MLP model is optimized by the Gradient Descent method only. The performance of both models is evaluated first on a numerical simulation and then on a chemical process known as the Continuous Stirred Tank Reactor (CSTR). Both validation cases gave successful results, and the RBF model optimized by Genetic Algorithms gave the best results.

1. Introduction

Neural networks are widely used in the characterization of nonlinear systems [1-7] and of time-varying time-delay nonlinear systems [8], and they are applied in various applications [9-12].

The system may have invariant or time-varying parameters. Several kinds of parameter variation have been studied: systems with slow time-varying parametric uncertainties [13,14], with arbitrarily rapid time-varying parameters in a known compact set [15], with rapid time-varying parameters that converge asymptotically to constants [16], and with unknown parameters with arbitrarily fast and nonvanishing variations [17].

The use of an MLP architecture depends on various parameters, for instance the number of hidden layers, the number of neurons in each hidden layer, the activation function and the learning rate. These parameters make it difficult to find a suitable MLP architecture.

Radial Basis Function (RBF) neural networks have attracted renewed interest in recent years in various application areas, such as modeling and control [1,2], pattern recognition [18], identifying malfunctions of dynamical systems in the case of the frequency multiplier [19] and in the case of the jump phenomenon [20], determining the optimal choice of machine tools [21], predicting the 2D structure of proteins [22], classification [23,24], solving systems of equations [25], analyzing the interaction of multi-input multi-output systems [26] and modeling robots [27].

Using an RBF network leads to a general model structure that is less complex than the one produced by an MLP network, and the computational complexity of its learning is lower than that of the MLP. The performance of an RBF network depends on the choice of activation function [1], the number of hidden neurons and the synaptic weights. Because of the time-varying nature of the parameters, the conventional RBF methods are not applicable as they stand, although the RBF is widely used for time-invariant systems. The MLP has been used in the estimation of time-varying time-delay nonlinear systems [17]. In this work, we investigate the possibility of extending these conventional methods to model a nonlinear system in the presence of time-varying parameters.

Two families of methods are used in this paper to optimize the structure and determine the parameters of the RBF model: iterative methods [28-32], here the gradient descent method, and evolutionary algorithms [32], here genetic algorithms. The gradient descent method is also used to optimize the MLP network [33].

This paper focuses on the optimization of the radial basis function architecture and compares it to the MLP architecture. The proposed algorithms are applied to time-varying nonlinear systems. The RBF optimized using genetic algorithms gave the best results.

This paper is organized as follows. Nonlinear system modeling by the MLP and by the RBF network is presented in the second and third sections, respectively. A comparative study of the MLP and RBF models, applied to two examples of nonlinear systems, is presented in the fourth section. Conclusions are given in the fifth section.

2. Nonlinear System Modeling by MLP

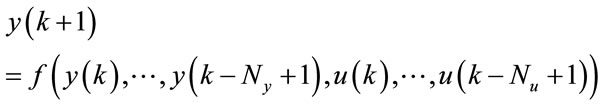

A nonlinear system can be modeled from its input-output data by several model structures. Among these, the NARMA (Nonlinear Auto-Regressive Moving Average) model [19] is used; its expression is given by the following equation:

$$y(k+1) = f\left[y(k), \ldots, y(k-n_y+1),\; u(k), \ldots, u(k-n_u+1)\right] \qquad (1)$$

where $f(\cdot)$ is a nonlinear mapping, $u$ and $y$ are the input and output vectors, and $n_u$ and $n_y$ are the maximum input and output lags, respectively. In this paper, the coefficients of the model (1) depend on time.
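As an illustration of the input-output description above, the following minimal sketch assembles the lagged regression vector of a NARMA-type model, assuming the common form in which the next output depends on the most recent outputs and inputs. The function name `narma_regressors` and the argument names `n_u` and `n_y` are illustrative choices, not taken from the paper.

```python
def narma_regressors(u, y, n_u, n_y):
    """Return (x(k), y(k+1)) training pairs for a NARMA-type model."""
    first_k = max(n_u, n_y) - 1  # earliest k with a complete history
    pairs = []
    for k in range(first_k, len(y) - 1):
        past_outputs = [y[k - i] for i in range(n_y)]  # y(k), ..., y(k-n_y+1)
        past_inputs = [u[k - i] for i in range(n_u)]   # u(k), ..., u(k-n_u+1)
        pairs.append((past_outputs + past_inputs, y[k + 1]))
    return pairs


# Toy sequences, purely for illustration
u = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [10.0, 11.0, 12.0, 13.0, 14.0]
pairs = narma_regressors(u, y, n_u=2, n_y=2)
```

Each pair holds the vector presented to the neural model and the target it should reproduce, so the same routine can feed either network.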

In this paper, the MLP is used to describe the nonlinear system (1). The objective of the modeling is to obtain an MLP whose output follows the output of the system.

2.1. Structure of MLP

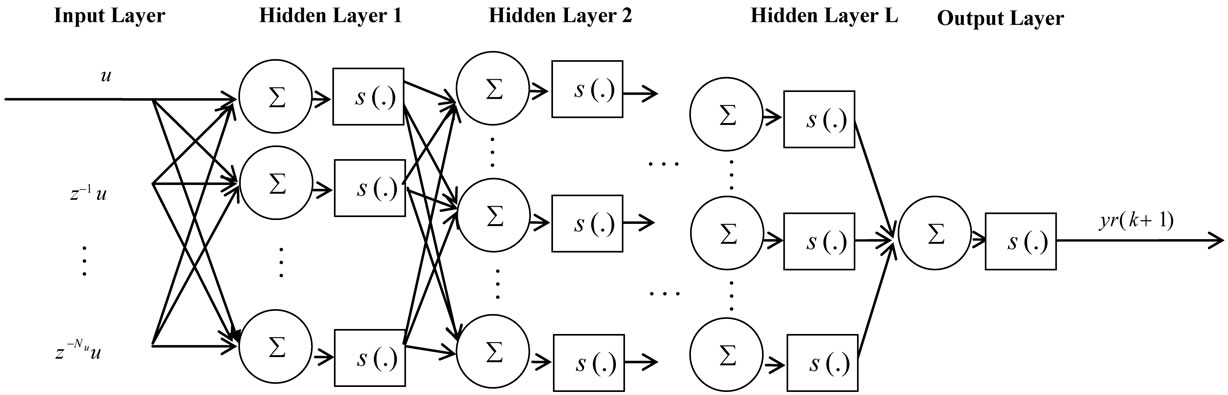

The multilayer perceptron network consists of an input layer, an output layer and usually one or more hidden layers. Figure 1 shows the architecture of MLP network employed for modeling a nonlinear system. It has an input layer of  neurons,

neurons,  hidden layers, each hidden layer contains

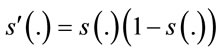

hidden layers, each hidden layer contains  neurons and one neuron in the only output layer. The sigmoid activation function,

neurons and one neuron in the only output layer. The sigmoid activation function,  , is used.

, is used.

For the nonlinear system (1), if no knowledge about the structure of the nonlinearity is available, the modeling is treated as a “black box” problem.

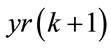

The output of the MLP model is given by the following equation:

(2)

Equation (2) expresses the output vector of the MLP as a function of the input vector of the MLP, the synaptic weights of the MLP and a sigmoid activation function.
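The forward computation of such a network can be sketched as follows. This is only an illustration under stated assumptions: two hidden layers with sigmoid activations, a linear output neuron and no bias terms. The names `layer`, `mlp_forward`, `w1`, `w2` and `w3` are hypothetical and do not come from the paper.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(weights, inputs, activation):
    """One fully connected layer: activation applied to each weighted sum."""
    return [activation(sum(w * x for w, x in zip(row, inputs))) for row in weights]


def mlp_forward(x, w1, w2, w3):
    """Input vector -> two sigmoid hidden layers -> single linear output."""
    h1 = layer(w1, x, sigmoid)
    h2 = layer(w2, h1, sigmoid)
    return layer(w3, h2, lambda z: z)[0]  # assumed linear output neuron


random.seed(0)
x = [0.2, -0.1, 0.4]                      # illustrative input vector
w1 = [[random.uniform(-1, 1) for _ in x] for _ in range(5)]
w2 = [[random.uniform(-1, 1) for _ in range(5)] for _ in range(4)]
w3 = [[random.uniform(-1, 1) for _ in range(4)]]
y_model = mlp_forward(x, w1, w2, w3)
```

The layer sizes (3, 5, 4, 1) are arbitrary; in practice they are among the parameters that have to be tuned by trial.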

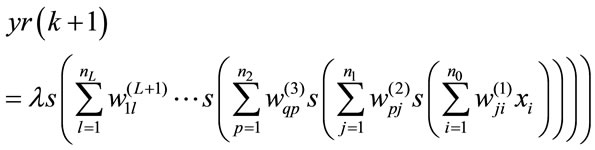

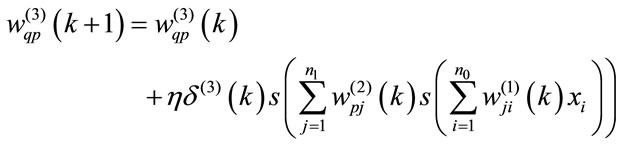

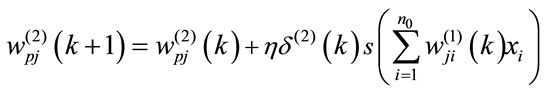

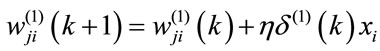

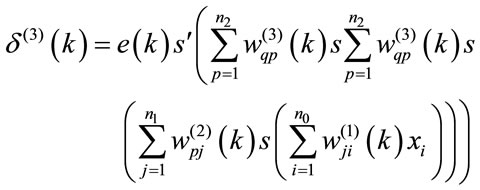

2.2. Optimization of MLP

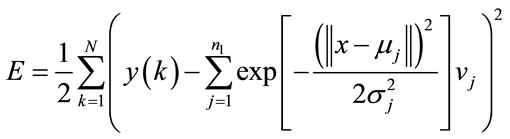

Among the optimization methods available for the MLP, the gradient descent method is used in this paper. Optimizing the MLP consists in minimizing the mean square error E:

(3)

where E is the cost function.

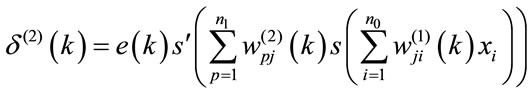

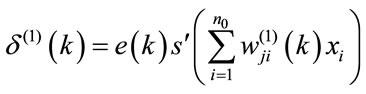

In this section, two hidden layers are considered, together with a single input layer and one output layer. The result of the optimization is given by equations (4) to (10):

(4)

(5)

(6)

with:

(7)

(8)

(9)

(10)

Figure 1. Multilayer perceptron feedforward neural network (MLP).
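A gradient descent update for an MLP with two hidden layers can be sketched as below. It is a generic back-propagation step for the squared error of one sample, written under the assumptions of sigmoid hidden layers, a linear output neuron and no biases; it is not claimed to reproduce the exact update equations of this section. All names (`forward`, `gd_step`, `eta`) are illustrative.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w1, w2, w3):
    h1 = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    h2 = [sigmoid(sum(w * h for w, h in zip(row, h1))) for row in w2]
    out = sum(w * h for w, h in zip(w3, h2))  # assumed linear output neuron
    return h1, h2, out


def gd_step(x, target, w1, w2, w3, eta):
    """One gradient descent update for E = 0.5 * (out - target)**2."""
    h1, h2, out = forward(x, w1, w2, w3)
    err = out - target
    # Back-propagated error terms, computed before any weight is changed
    d2 = [err * w3[j] * h2[j] * (1.0 - h2[j]) for j in range(len(h2))]
    d1 = [sum(d2[j] * w2[j][i] for j in range(len(h2))) * h1[i] * (1.0 - h1[i])
          for i in range(len(h1))]
    for j in range(len(w3)):
        w3[j] -= eta * err * h2[j]
    for j in range(len(w2)):
        for i in range(len(h1)):
            w2[j][i] -= eta * d2[j] * h1[i]
    for i in range(len(w1)):
        for m in range(len(x)):
            w1[i][m] -= eta * d1[i] * x[m]
    return 0.5 * err * err


random.seed(0)
w1 = [[random.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(4)]
w2 = [[random.uniform(-0.5, 0.5) for _ in range(4)] for _ in range(3)]
w3 = [random.uniform(-0.5, 0.5) for _ in range(3)]
# Toy data set: a simple linear target on a 5 x 5 grid
data = [([a / 4, b / 4], 0.5 * a / 4 - 0.2 * b / 4) for a in range(5) for b in range(5)]


def total_error():
    return sum(0.5 * (forward(x, w1, w2, w3)[2] - t) ** 2 for x, t in data)


e_before = total_error()
for _ in range(300):
    for x, t in data:
        gd_step(x, t, w1, w2, w3, eta=0.1)
e_after = total_error()
```

Even for this small network, three weight matrices and a learning rate have to be chosen, which illustrates why tuning an MLP takes several trials.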

3. Nonlinear System Modeling by RBF

As we did with the MLP model, the RBF is used to describe the nonlinear system (1).

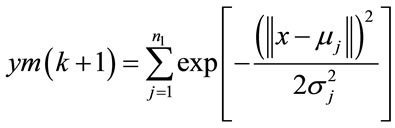

3.1. Structure of RBF

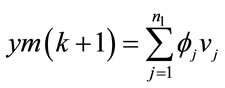

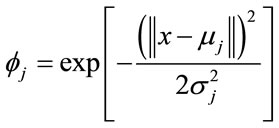

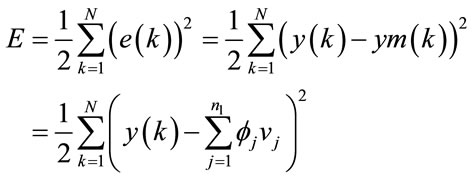

The RBF network consists of only three layers: an input layer, an output layer and a single hidden layer of radial basis functions. The RBF model computes a linear combination of radial basis functions, as given by the following equation:

(11)

Equation (11) gives the output vector of the RBF in terms of the synaptic weights of the RBF and a Gaussian activation function:

(12)
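The linear combination of Gaussian radial basis functions can be illustrated with the short sketch below. The exact normalization of the Gaussian is an assumption here (the squared distance is divided by twice the squared width), and the names `gaussian` and `rbf_forward` are illustrative.

```python
import math


def gaussian(x, center, width):
    """Gaussian basis function; the 2 * width**2 scaling is an assumed convention."""
    dist2 = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist2 / (2.0 * width ** 2))


def rbf_forward(x, weights, centers, widths):
    """Model output: weighted sum of the hidden basis functions."""
    return sum(w * gaussian(x, c, s) for w, c, s in zip(weights, centers, widths))


# Two hidden units in a two-dimensional input space (illustrative values)
centers = [[0.0, 0.0], [1.0, 1.0]]
widths = [1.0, 0.5]
weights = [2.0, -1.0]
y_model = rbf_forward([0.0, 0.0], weights, centers, widths)
```

Only three groups of parameters appear (weights, centers and widths), which is what makes the structure lighter than a multilayer perceptron.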

3.2. Optimization Methods of RBF

Compared to the MLP, the RBF contains a very small number of parameters. The purpose of optimizing the RBF is to determine the synaptic weights, the centers and the widths by minimizing the cost function.

(13)

Two strategies are proposed in the literature for finding the minimum of the cost function. The first is based on supervised methods or algorithms that use a direct, time-consuming calculation. The second adopts a hybrid scheme, which is less costly in computation time. These problems can be solved by various methods, such as iterative methods (the Gradient Descent method) and evolutionary algorithms (Genetic Algorithms).

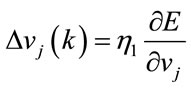

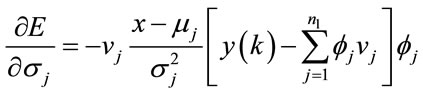

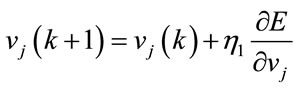

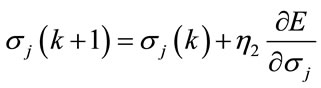

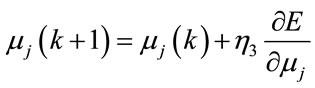

3.2.1. Optimization of RBF Using Gradient Descent

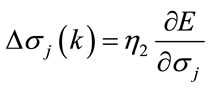

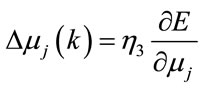

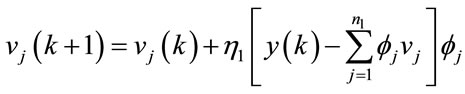

The principle of the GD method is applied to optimize the parameters of the RBF model. It uses the delta rule:

(14)

(15)

(16)

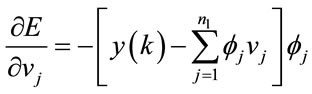

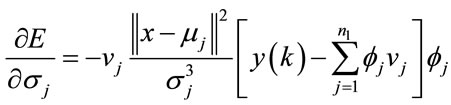

The partial derivatives are then given by the following equations:

(17)

(18)

(19)

Finally, we obtain:

(20)

(21)

(22)

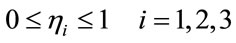

The learning rate satisfies the following condition:

(23)

The cost function is nonlinear in the parameters, so finding its minimum requires an iterative algorithm, which in turn requires an arbitrary initialization of the RBF network parameters and a suitable choice of the learning rate. To maximize the chances of finding the global minimum, several initializations of the parameters, and therefore several training runs, are needed, which increases the computing time.
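The gradient descent training of the three RBF parameter groups can be sketched as follows. The derivatives are those of a squared error with a Gaussian basis function of the assumed form exp(-d²/(2σ²)); the three separate learning rates `eta_w`, `eta_c` and `eta_s`, the data set and all function names are illustrative assumptions rather than the paper's settings.

```python
import math


def gaussian(x, center, width):
    dist2 = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist2 / (2.0 * width ** 2))


def rbf_output(x, weights, centers, widths):
    return sum(w * gaussian(x, c, s) for w, c, s in zip(weights, centers, widths))


def rbf_gd_step(x, target, weights, centers, widths, eta_w, eta_c, eta_s):
    """Update weights, centers and widths in place for one sample."""
    phis = [gaussian(x, c, s) for c, s in zip(centers, widths)]
    err = target - sum(w * p for w, p in zip(weights, phis))
    for j, phi in enumerate(phis):
        w, s = weights[j], widths[j]          # values before the update
        dist2 = sum((xi - ci) ** 2 for xi, ci in zip(x, centers[j]))
        weights[j] += eta_w * err * phi
        centers[j] = [ci + eta_c * err * w * phi * (xi - ci) / s ** 2
                      for xi, ci in zip(x, centers[j])]
        widths[j] += eta_s * err * w * phi * dist2 / s ** 3
    return 0.5 * err * err


# Toy one-dimensional regression problem
data = [([i / 20], math.sin(math.pi * i / 20)) for i in range(21)]
centers = [[0.0], [0.25], [0.5], [0.75], [1.0]]   # arbitrary initialization
widths = [0.3] * 5
weights = [0.0] * 5


def total_error():
    return sum(0.5 * (t - rbf_output(x, weights, centers, widths)) ** 2 for x, t in data)


e_before = total_error()
for _ in range(200):
    for x, t in data:
        rbf_gd_step(x, t, weights, centers, widths, eta_w=0.1, eta_c=0.01, eta_s=0.01)
e_after = total_error()
```

The result depends on the initial centers and widths and on the three learning rates, which is the sensitivity discussed in the text.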

Another method is to optimize separately the parameters of the hidden layer (the centers and the widths) by genetic algorithms and the synaptic weights between the hidden layer and the output layer by the gradient descent method.

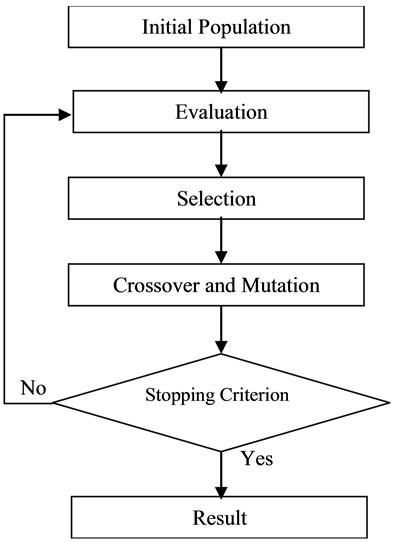

3.2.2. Optimization of RBF Using Genetic Algorithms

The genetic algorithm is an optimization algorithm based on techniques derived from genetics and natural evolution: crossover, mutation, selection. The GA is often used for optimization of RBF [34-38].

In this paper, the GA is used to optimize separately the parameters of the hidden layer (the centers and the widths) of the RBF model.

To find suitable parameters, five elements of the GA are used:

• A population is generated randomly. The population size is chosen to achieve a compromise between computation time and solution quality.

• Each individual is evaluated by an evaluation function called the fitness function. This function is the only link between the physical problem and the GA. In this paper, the fitness function is given by the following equation:

(24)

The two quantities appearing in equation (24) are also used in the following equation:

(25)

• Once the generation has been evaluated, a selection is made according to the fitness function. In this paper, tournament selection is used.

• The crossover operator is designed to enrich the diversity of the population by manipulating the genes of the individuals existing in the population. The mutation operator, on the other hand, inverts a bit in a chromosome. Mutation is what mathematically guarantees that the global optimum can be reached.

• The stopping criterion indicates that the solution is sufficiently close to the optimum. In this paper, the maximum number of generations is chosen as the stopping criterion.

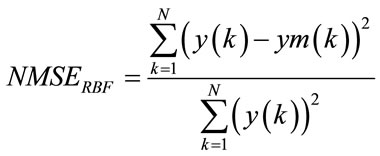

Figure 2 shows the organization of the GA used to find the optimal parameters of the hidden layer.

The parameters obtained by the GA (the centers and the widths) are also used in the following equation:

(26)

and the synaptic weights are calculated using the gradient descent method:

(27)
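The hybrid scheme described above (GA for the centers and widths, gradient descent for the synaptic weights) can be sketched as follows. Several choices are simplifying assumptions and not the paper's settings: a real-coded chromosome with Gaussian mutation is used in place of bit inversion on a binary chromosome, the fitness is the mean square error after a short weight training, and the population size, probabilities and data are illustrative.

```python
import math
import random


def phi(x, c, s):
    return math.exp(-(x - c) ** 2 / (2.0 * s * s))


def fit_weights(data, centers, widths, eta=0.2, epochs=20):
    """Gradient descent on the output weights only (hidden layer fixed)."""
    weights = [0.0] * len(centers)
    for _ in range(epochs):
        for x, t in data:
            phis = [phi(x, c, s) for c, s in zip(centers, widths)]
            err = t - sum(w * p for w, p in zip(weights, phis))
            weights = [w + eta * err * p for w, p in zip(weights, phis)]
    return weights


def mse(data, weights, centers, widths):
    return sum((t - sum(w * phi(x, c, s) for w, c, s in zip(weights, centers, widths))) ** 2
               for x, t in data) / len(data)


def fitness(ind, data, n):
    centers, widths = ind[:n], ind[n:]
    return mse(data, fit_weights(data, centers, widths), centers, widths)  # lower is better


def tournament(pop, scores, k=2):
    contenders = random.sample(range(len(pop)), k)
    return pop[min(contenders, key=lambda i: scores[i])]


def ga_optimize(data, n=4, pop_size=10, generations=10, p_cross=0.8, p_mut=0.1):
    pop = [[random.uniform(0, 1) for _ in range(n)] +
           [random.uniform(0.1, 0.5) for _ in range(n)] for _ in range(pop_size)]
    best, best_score = None, float("inf")
    for _ in range(generations):            # stopping criterion: generation count
        scores = [fitness(ind, data, n) for ind in pop]
        for ind, score in zip(pop, scores):
            if score < best_score:
                best, best_score = ind[:], score
        children = []
        while len(children) < pop_size:
            a, b = tournament(pop, scores)[:], tournament(pop, scores)[:]
            if random.random() < p_cross:   # one-point crossover
                cut = random.randrange(1, 2 * n)
                a, b = a[:cut] + b[cut:], b[:cut] + a[cut:]
            for child in (a, b):
                for g in range(2 * n):
                    if random.random() < p_mut:
                        child[g] += random.gauss(0, 0.05)
                        if g >= n:          # keep widths strictly positive
                            child[g] = max(child[g], 0.05)
                children.append(child)
        pop = children[:pop_size]
    return best[:n], best[n:], best_score


random.seed(1)
data = [(i / 20, math.sin(math.pi * i / 20)) for i in range(21)]
centers, widths, score = ga_optimize(data)
baseline = sum(t * t for _, t in data) / len(data)   # error of an all-zero model
```

Because the gradient descent step only handles the linear output weights, each fitness evaluation is cheap, which is the motivation for splitting the optimization in two.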

4. Comparative Study of Models

The effectiveness of the suggested methods, applied to the identification of the behavior of two nonlinear time-varying systems, is demonstrated by simulation experiments.

Figure 2. Organization of genetic algorithm.

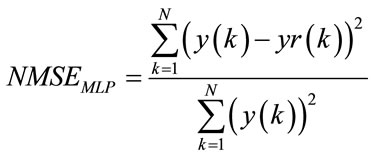

The performance of the MLP and RBF models is evaluated by the Normalized root Mean Square Error between the system output and the model output, referred to below as the NMSE:

(28)

and

(29)
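A normalized error of this kind can be computed as in the sketch below. The normalization is an assumption: the sum of squared errors is divided by the sum of squared system outputs, and the root version takes the square root of that ratio. The function names are illustrative.

```python
import math


def nmse(y_system, y_model):
    """Sum of squared errors divided by the sum of squared system outputs (assumed form)."""
    num = sum((a - b) ** 2 for a, b in zip(y_system, y_model))
    den = sum(a ** 2 for a in y_system)
    return num / den


def nrmse(y_system, y_model):
    """Root of the normalized error above."""
    return math.sqrt(nmse(y_system, y_model))


# Illustrative values only
y_system = [1.0, 2.0, 3.0, 4.0]
y_model = [1.1, 1.9, 3.2, 3.9]
error = nmse(y_system, y_model)
```

Normalizing by the output energy makes the figure comparable between systems whose outputs have different magnitudes.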

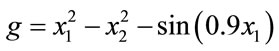

4.1. Nonlinear Time-Varying System

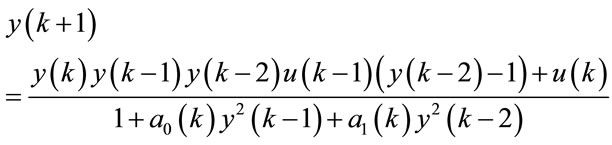

We consider the nonlinear time-varying system described by the following input-output model:

(30)

with:

(31)

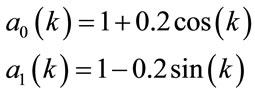

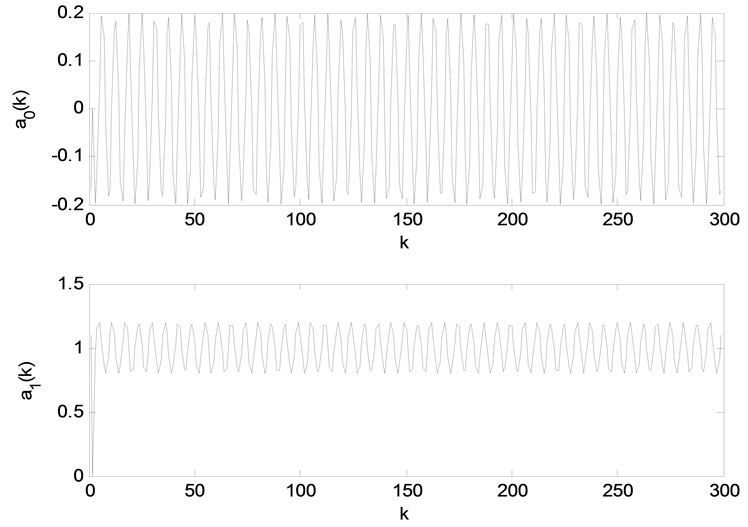

The trajectories of a0(k) and a1(k) are given in figure 3.

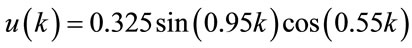

The input is a sinusoidal signal defined by the following equation:

(32)

Figure 3. a0(k) and a1(k) trajectories.

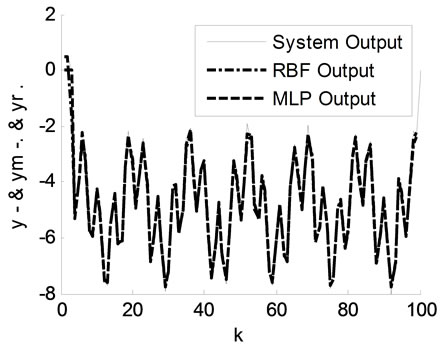

Figure 4 presents the responses of the time-varying system, the MLP model and the RBF model optimized by the GD method. In this simulation, the MLP parameters are  ,  ,  and  . The obtained  is  . The RBF parameters are  ,  and  . The obtained  is  .

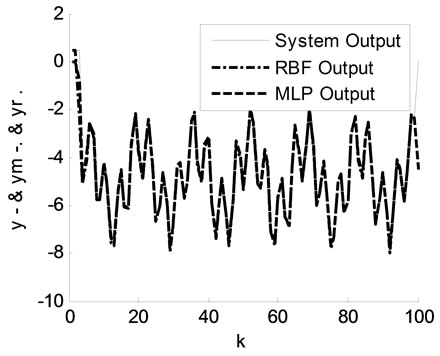

Figure 5 illustrates the responses of the time-varying system, the MLP model and the RBF model optimized by the GA. In this simulation the same MLP parameters are taken, while the RBF parameters are  ,  ,  and  . The obtained  is  .

In these two figures (4 and 5), it is clear that the responses of the MLP and RBF models follow the system response despite the variation of the parameters.

On the one hand, the MLP model is obtained only after several trials of parameters and of training, and the large number of MLP parameters increases the difficulty of its use. On the other hand, the simplicity of the RBF makes the modeling simple and requires much less training time.

In figure 4, the RBF model optimized by the gradient descent method requires an expensive training time and depends on three different learning rates, whereas in figure 5 the hidden-layer parameters of the RBF model (the centers and the widths) are found separately by the GA and the synaptic weights by the GD method; hence this model is faster than the previous one.

Noise Effect of Time-Varying Nonlinear System

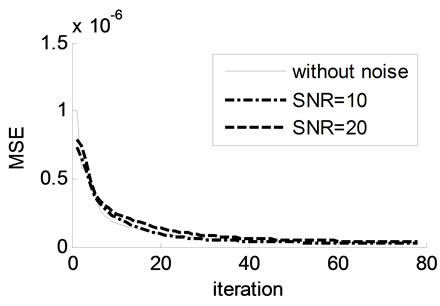

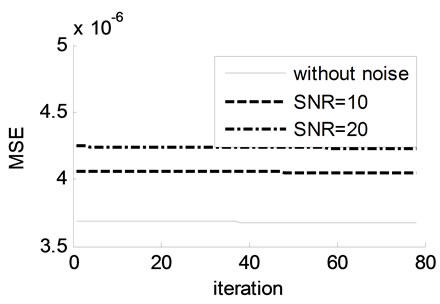

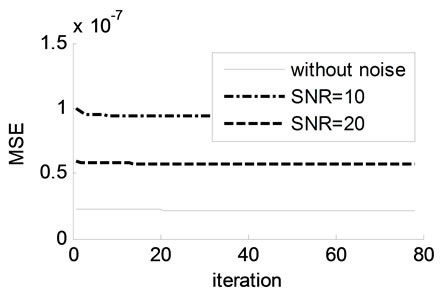

To validate the quality of the proposed algorithms, white noise is added. The influence of the noise on the modeling is quantified by the Signal-to-Noise Ratio (SNR). Figures 6(a)-(c) present the evolution of the MSE for different SNR values for both models.

(33)

where the two mean terms are respectively the output average value and the noise average value.
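One common way to compute such a ratio is sketched below, under the assumption that the SNR is the ratio of the centered energy of the output to the centered energy of the noise, each centered on its own average value. The function name `snr` and the test signal are illustrative.

```python
import math
import random


def snr(output, noise):
    """Ratio of centered output energy to centered noise energy (assumed definition)."""
    y_mean = sum(output) / len(output)
    v_mean = sum(noise) / len(noise)
    signal_energy = sum((y - y_mean) ** 2 for y in output)
    noise_energy = sum((v - v_mean) ** 2 for v in noise)
    return signal_energy / noise_energy


random.seed(0)
clean = [math.sin(0.1 * k) for k in range(200)]      # illustrative output
noise = [random.gauss(0.0, 0.1) for _ in clean]      # white noise, std 0.1
noisy = [y + v for y, v in zip(clean, noise)]
ratio = snr(clean, noise)
ratio_db = 10.0 * math.log10(ratio)
```

Scaling the standard deviation of the noise up or down is a simple way to sweep the SNR and observe how the modeling error evolves.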

Figure 4. The responses of time-varying system, MLP model and the optimised RBF model by GD.

Figure 5. The responses of time-varying system, MLP model and the optimised RBF model by GA.


Figure 6. Evolution of MSE of different SNR: (a) MLP; (b) Optimized RBF model with GD method; (c) Optimized RBF model with GA.

In figures 6(a)-(c), we first remark that the error decreases as the SNR increases, and then that the lowest MSE is obtained when the GA is used (figure 6(c)). Finally, in all these figures, the responses of the MLP and RBF models follow the response of the time-varying system despite the variation of the parameters and the added noise.

4.2. Chemical Reactor

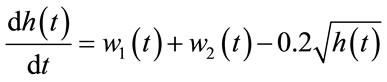

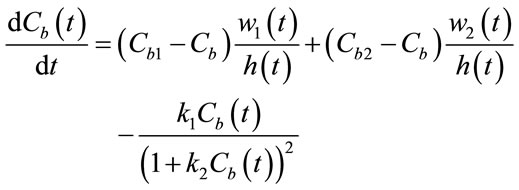

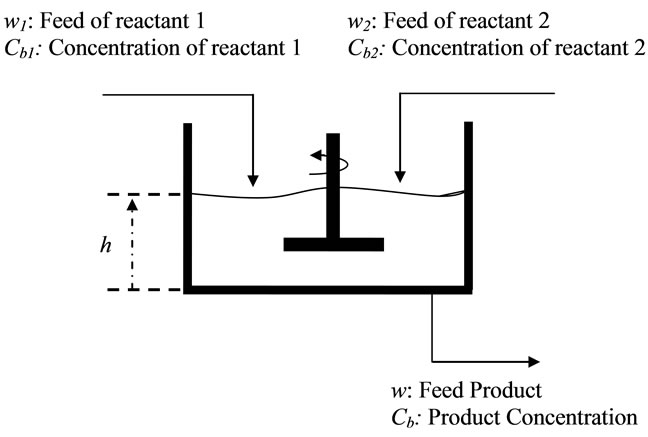

To test the effectiveness of the MLP and RBF models, we apply them to a Continuous Stirred Tank Reactor (CSTR), a slowly time-varying nonlinear system used to carry out chemical reactions [39-41]. The input-output data are used in discrete time. The physical equations describing the process are (34) and (35):

(34)

(35)

In these equations, the variables are the height of the mixture in the reactor, the feeds of reactant 1 and reactant 2 with their respective concentrations, and the feed of the reaction product with its concentration. The reactant consumption rates are assumed to be constant. The temperature in the reactor is assumed constant and equal to the ambient temperature. One reactant feed and one concentration are the inputs of the process, and a single variable represents its output. A diagram of the reactor is given in figure 7.

For the purpose of the simulations the CSTR model of the reactor provided with Simulink-Matlab is used.
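For readers without access to that environment, a reactor of this kind can be simulated with a few lines of code. The sketch below assumes a widely used two-state CSTR benchmark form (liquid height and product concentration) with forward Euler integration; the model form, the constants and every name in it are illustrative assumptions and are not taken from this paper.

```python
import math


def cstr_step(h, cb, w1, dt, w2=0.1, cb1=24.9, cb2=0.1, k1=1.0, k2=1.0):
    """One forward Euler step of an assumed two-state CSTR benchmark model."""
    dh = w1 + w2 - 0.2 * math.sqrt(h)                  # level balance
    dcb = ((cb1 - cb) * w1 / h + (cb2 - cb) * w2 / h   # mixing of the two feeds
           - k1 * cb / (1.0 + k2 * cb) ** 2)           # consumption by the reaction
    return h + dt * dh, cb + dt * dcb


def simulate(w1_sequence, h0=10.0, cb0=20.0, dt=0.05):
    """Return the final height and the concentration trace for a feed sequence."""
    h, cb = h0, cb0
    trace = []
    for w1 in w1_sequence:
        h, cb = cstr_step(h, cb, w1, dt)
        trace.append(cb)
    return h, trace


# Constant feed of reactant 1, long enough to reach steady state
h_final, cb_trace = simulate([1.0] * 20000)
```

Sampling `cb_trace` at a fixed period gives the discrete-time input-output data on which a neural model can be trained.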

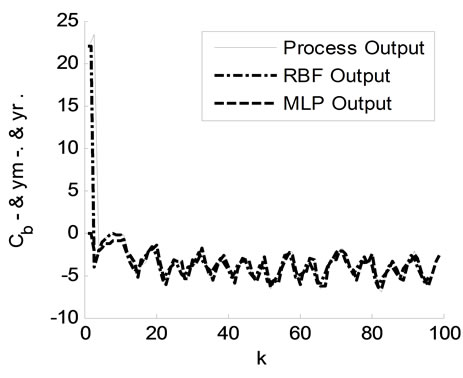

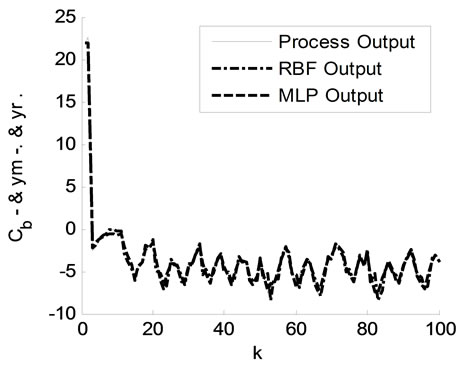

Figure 8 presents the responses of the chemical reactor, the MLP model and the RBF model optimized by the gradient descent method. Figure 9 illustrates the responses of the chemical reactor, the MLP model and the RBF model optimized by genetic algorithms.

In figures 8 and 9, the responses of the optimized RBF model and of the MLP model follow the response of the chemical reactor. In figure 8, the MLP model is built with 5 neurons in the input layer, 25 neurons in the first hidden layer, 22 neurons in the second hidden layer and a learning rate equal to 0.4. The RBF model, however, has only 3 layers, and its single hidden layer contains 11 neurons. The parameters used in the GD method are  ,  and  . In contrast, in figure 9, the parameters of the RBF model are optimized separately: the centers and the widths using the GA, and the synaptic weights using gradient descent. These parameters are  ,  ,  and  .

Figure 7. Chemical reactor diagram.

Figure 8. The responses of process, MLP model and the optimized RBF model by GD.

Figure 9. The responses of process, MLP model and the optimized RBF model by GA.

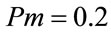

The NMSE is given in the table below:

From this table, the NMSE obtained with the RBF model optimized by genetic algorithms is lower than that obtained with the gradient descent method, which indicates that evolutionary algorithms give good accuracy for modeling dynamical systems.

5. Conclusion

This paper has presented a study and a comparison of two system modeling techniques: the multilayer perceptron model and the radial basis function neural network model. These two approaches are applied to a class of nonlinear systems with time-varying parameters. It has been shown that the MLP architecture depends on various parameters and requires a long training time, whereas the RBF model depends only on the synaptic weights and on the centers and widths of its basis functions. In this paper, the RBF model is optimized by the gradient descent method and by genetic algorithms, and each optimized RBF model is compared with the multilayer perceptron. The mean square error is used to evaluate the performance of both models and the influence of additive noise on the identification quality. These models have been tested on the modeling of a chemical reactor, with successful results. The RBF model optimized by genetic algorithms showed better performance than the one optimized by the gradient descent method.

REFERENCES

[1] V. T. S. Elanayar and C. S. Yung, “Radial Basis Function Neural Network for Approximation and Estimation of Nonlinear Stochastic Dynamic,” IEEE Transactions on Neural Networks, Vol. 5, No. 4, 1994, pp. 594-603. doi:10.1109/72.298229

[2] P. Borne, M. Benrejeb and J. Haggege, “Les Réseaux de Neurones. Présentation et Applications,” Editions Ophrys, Paris, 2007.

[3] S. Chabaa, A. Zeroual and J. Antari, “Identification and Prediction of Internet Traffic Using Artificial Neural Networks,” Journal of Intelligent Learning Systems & Applications, Vol. 2, No. 3, 2010, pp. 147-155.

[4] A. Errachdi, I. Saad and M. Benrejeb, “On-Line Identification Method Based on Dynamic Neural Network,” International Review of Automatic Control, Vol. 3, No. 5, 2010, pp. 474-479.

[5] H. Vijay and D. K. Chaturvedi, “Parameters Estimation of an Electric Fan Using ANN,” Journal of Intelligent Learning Systems & Applications, Vol. 2, No. 1, 2010, pp. 43-48.

[6] A. Mishra and Zaheeruddin, “Design of Hybrid Fuzzy Neural Network for Function Approximation,” Journal of Intelligent Learning Systems & Applications, Vol. 2, No. 2, 2010, pp. 97-109.

[7] A. Errachdi, I. Saad and M. Benrejeb, “Internal Model Control for Nonlinear Time-Varying System Using Neural Networks,” 11th International Conference on Sciences and Techniques of Automatic Control & Computer Engineering, Monastir, 19-21 December 2010, pp. 1-13.

[8] Y. Tan, “Time-Varying Time-Delay Estimation for Nonlinear Systems Using Neural Networks,” International Journal of Applied Mathematics and Computer Science, Vol. 14, No. 1, 2004, pp. 63-68.

[9] R. Sollacher and H. Gao, “Towards Real-World Applications of Online Learning Spiral Recurrent Neural Networks,” Journal of Intelligent Learning Systems & Applications, Vol. 1, No. 1, 2009, pp. 1-27.

[10] S. H. Ling, “A New Neural Network Structure: Node-to-Node-Link Neural Network,” Journal of Intelligent Learning Systems & Applications, Vol. 2, No. 1, 2010, pp. 1-11.

[11] J. C. Garcia Infante, J. Jesús Medel Juárez and J. C. Sánchez García, “Evolutive Neural Net Fuzzy Filtering: Basic Description,” Journal of Intelligent Learning Systems & Applications, Vol. 2, No. 1, 2010, pp. 12-18.

[12] K. N. Sujatha and K. Vaisakh, “Implementation of Adaptive Neuro Fuzzy Inference System in Speed Control of Induction Motor Drives,” Journal of Intelligent Learning Systems & Applications, Vol. 2, No. 2, 2010, pp. 110-118.

[13] R. H. Middleton and G. C. Goodwin, “Adaptive Control of Time-Varying Linear Systems,” IEEE Transactions on Automatic Control, Vol. 33, No. 2, 1988, pp. 150-155. doi:10.1109/9.382

[14] F. Giri, M. Saad, J. M. Dion and L. Dugard, “Pole Placement Direct Adaptive Control for Time-Varying Ill-Modeled Plants,” IEEE Transactions on Automatic Control, Vol. 35, 1990, pp. 723-726. doi:10.1109/9.53553

[15] K. S. Tsakalis and P. A. Ioannou, “Linear Time-Varying Systems: Control and Adaptation,” Prentice-Hall, Upper Saddle River, 1993.

[16] R. Marino and P. Tomei, “Adaptive Control of Linear Time-Varying Systems,” Automatica, Vol. 39, No. 4, 2003, pp. 651-659. doi:10.1016/S0005-1098(02)00287-X

[17] R.-H. Chi, S.-L. Sui and Z.-S. Hou, “A New Discrete-Time Adaptive ILC for Nonlinear Systems with Time-Varying Parametric Uncertainties,” ACTA Automatica Sinica, Vol. 34, No. 7, 2008, pp. 805-808. doi:10.3724/SP.J.1004.2008.00805

[18] M. R. Berthold, “A Time Delay Radial Basis Function Network for Phoneme Recognition,” IEEE Rerthold Intel Corporation, Santa Clara, 1994, pp. 4470-4472.

[19] M. Zarouan, J. Haggège and M. Benrejeb, “Anomalies de Fonctionnement de Systèmes Dynamiques et Modélisation par Réseaux de Neurones des Non-Linearités: Cas de la Démultiplication de Fréquence,” Premières Journées Scientifiques des Jeunes Chercheurs en Genie Electrique et Informatique, 23-24 March 2001, Sousse-Tunisie, pp. 123-128.

[20] J. Haggège, M. Zarouan and M. Benrejeb, “Anomalies de Fonctionnement et Modélisation de Systèmes Dynamiques par Réseaux de Neurones: Cas du Phénomène de Saut,” Premières Journées Scientifiques des Jeunes Chercheurs en Genie Electrique et Informatique, 23-24 March 2001, Sousse-Tunisie, pp. 134-138.

[21] S. Allali and M. Benrejeb, “Application des Réseaux de Neurones Multicouches pour le Choix Optimal des Machines Outils,” 4ème Conférence Internationale JTEA, Hammamet, 12-14 March 2006.

[22] H. Bouziane, B. Messabih and A. Chouarfia, “Prédiction de la Structure 2D des Protéines par les Réseaux de Neurones,” Communications of the IBIMA, Vol. 6, 2008, pp. 201-207.

[23] J. Park and I. Sandberg, “Universal Approximation Using Radial-Basis-Function Networks,” Neural Computation, Vol. 3, No. 2, 1991, pp. 246-257. doi:10.1162/neco.1991.3.2.246

[24] A. Sifaoui, A. Abdelkrim, S. Alouane and M. Benrejeb, “On New RBF Neural Network Construction Algorithm for Classification,” SIC, Vol. 18, No. 2, 2009, pp. 103-110.

[25] A. Golbabai, M. Mammadov and S. Seifollahi, “Solving a System of Nonlinear Integral Equations by an RBF Network,” Computers & Mathematics with Applications, Vol. 57, No. 10, 2009, pp. 1651-165. doi:10.1016/j.camwa.2009.03.038

[26] X. Chengying and C. S. Yung, “Interaction Analysis for MIMO Nonlinear Systems Based on a Fuzzy Basis Function Network Model,” Fuzzy Sets and Systems, Vol. 158, No. 18, 2007, pp. 2013-2025. doi:10.1016/j.fss.2007.02.012

[27] S. S. Chiddarwar and N. R. Babu, “Comparison of RBF and MLP Neural Networks to Solve Inverse Kinematic Problem for 6R Serial Robot by a Fusion Approach,” Engineering Applications of Artificial Intelligence, Vol. 23, No. 7, 2010, pp. 1-10.

[28] R. P. Brent, “Fast Training Algorithms for Multilayer Neural Net,” IEEE Transactions on Neural Networks, Vol. 5, No. 6, 1991, pp. 989-993.

[29] M. T. Hagan and M. Menhaj, “Training Feedforward Networks with Marquardt Algorithm,” IEEE Transactions on Neural Networks, Vol. 5, No. 6, 1994, pp. 989-993. doi:10.1109/72.329697

[30] R. A. Jacobs, “Increased Rates of Convergence through Learning Rate Adaptation,” Neural Networks, Vol. 1, No. 4, 1988, pp. 295-307. doi:10.1016/0893-6080(88)90003-2

[31] D. C. Park, M. A. El Sharkawi and R. J. Marks II, “An Adaptively Trained Neural Network,” IEEE Transactions on Neural Networks, Vol. 2, No. 3, 1991, pp. 334-345. doi:10.1109/72.97910

[32] M. Schoenauer and Z. Michalewicz, “Evolutionary Computation,” Control and Cybernetics, Vol. 26, No. 3, 1997, pp. 307-338.

[33] A. Errachdi, I. Saad and M. Benrejeb, “Neural Modeling of Multivariable Nonlinear System. Variable Learning Rate Case,” Intelligent Control and Automation, Vol. 2, No. 3, 2011, pp. 165-175.

[34] W. L. Cheol and C. S. Yung, “Growing Radial Basis Function Networks Using Genetic Algorithm and Orthogonalization,” International Journal of Innovative Computing, Information and Control ICIC International, Vol. 5, No. 11A, 2009, pp. 3933-3948.

[35] G. Lin, H. De-Shuang and Z. Wenbo, “Combining Genetic Optimization with Hybrid Learning Algorithm for Radial Basis Function Neural Networks,” Electronics Letters, Vol. 39, No. 22, 2003, pp. 1600-1601.

[36] T. Renato and O. M. J. Luiz, “Selection of Radial Basis Functions via Genetic Algorithms in Pattern Recognition Problems,” 10th Brazilian Symposium on Neural Networks, Salvador, 26-30 October 2008, pp. 71-176.

[37] M. L. Huang and Y. H. Hung, “Combining Radial Basis Function Neural Network and Genetic Algorithm to Improve HDD Driver IC Chip Scale Package Assembly Yield,” Expert Systems with Applications, Vol. 34, No. 1, 2008, pp. 588-595. doi:10.1016/j.eswa.2006.09.030

[38] R. Niranjan and G. Ranjan, “Filter Design Using Radial Basis Function Neural Network and Genetic Algorithm for Improved Operational Health Monitoring,” Applied Soft Computing, Vol. 6, No. 2, 2006, pp. 154-169. doi:10.1016/j.asoc.2004.11.002

[39] C. Junghui and H. Tien-Chih, “Applying Neural Networks to On-Line Updated PID Controllers for Nonlinear Process Control,” Journal of Process Control, Vol. 14, No. 2, 2004, pp. 211-230. doi:10.1016/S0959-1524(03)00039-8

[40] J. Van Gorp, J. Schoukens and R. Pintelon, “Learning Neural Networks with Noisy Inputs Using the Errors in Variables Approach,” IEEE Transactions on Neural Networks, Vol. 11, No. 2, 2000, pp. 402-414. doi:10.1109/72.839010

[41] H. Demuth, M. Beale and M. Hagan, “Neural Network Toolbox 5,” User’s Guide, The MathWorks, Natick, 2007.

Nomenclature

: process output,

: process input,

: unknown function,

: output delay,

: input delay,

: output of MLP,

: input vector of (MLP or RBF),

: number of hidden layers,

: number of nodes of input layer,

: number of nodes of hidden layer,

: number of nodes of output layer,

: synaptic weights of MLP,

: activation function,

: learning rate,

: regularization coefficient,

: output of RBF,

: hidden radial basis function,

: hidden center,

: hidden width,

: synaptic weights of RBF,

: crossover probability,

: mutation probability,

: generation size,

: white noise,

: number of observations,

SNR: Signal-to-Noise Ratio.