Journal of Software Engineering and Applications

Vol. 5, No. 7 (2012), Article ID: 19725, 8 pages, DOI: 10.4236/jsea.2012.57052

Aspects of Replayability and Software Engineering: Towards a Methodology of Developing Games


Lane Department of Computer Science & Electrical Engineering, West Virginia University, Morgantown, USA.

Email: kralljoe@gmail.com, tim@menzies.us

Received April 13th, 2012; revised May 10th, 2012; accepted 21st, 2012

Keywords: Games; Entertainment; Design; Software; Engineering; Replayability

ABSTRACT

One application of software engineering is the vast and widely popular video game entertainment industry. The success of a video game depends on how well the player base receives it. Among the factors behind a video game’s success, we are interested in studying one known as Replayability. Working towards a software-engineering-oriented game design methodology, we collect player opinions on Replayability via surveys and provide methods to analyze the data. We believe these results, together with our software engineering techniques, can help game designers produce entertaining games with longer-lasting appeal.

1. Introduction

The video game entertainment industry has grown enormously in the recent past, exceeding $25 billion in annual revenue according to the ESA (Entertainment Software Association) in 2011 [1]. Everyone involved in creating video games must take their roles very seriously in light of the industry they hope to empower. Perhaps the most important role is that of the software engineer. Much of game development is based on experience, and the risk of releasing a game product is high. Project failures aside, only 20% of released video games are successful in the sense of turning a profit [2]. This is a strikingly low number, and we hope to see it improved through the software engineering techniques described in this paper.

To improve this number, we study the factors that determine success. Two factors of interest are Replayability and Playability, which we discuss in detail in Section 2.

The following issue has tremendous economic significance. Gaming vendors across the world compete for the attention of audiences who have access to many vendors. These vendors are, to say the least, very interested in how to attract and retain market share through devoted, repeat customers. We believe that Replayability is essential to attracting such a loyal player base, and is therefore important to study.

The main result of this paper is as follows: games are different. That is, regarding Replayability, we find that effects which hold for general classes of games do not hold for specific games. To put it another way, lessons learned about Replayability for one group of games may not hold for others.

This result has considerable practical significance: we do not yet know how to reason about general classes of games (perhaps because we do not yet know how best to cluster the space of all games). Regardless, our practical message is clear: we may need to reason about each new game differently.

This raises the issue of how to reason about games. In this paper we propose the following:

1) Gather data.

2) Classify data.

3) Find differences between data and classes.

Our third point is somewhat tricky since it requires summarizing some quite complex statistics. In this paper, we propose JDK Diagrams¹, in which two nodes are linked if they are statistically indistinguishable. As shown in Section 5, JDK Diagrams offer a very succinct visualization of some quite complex statistical reasoning.

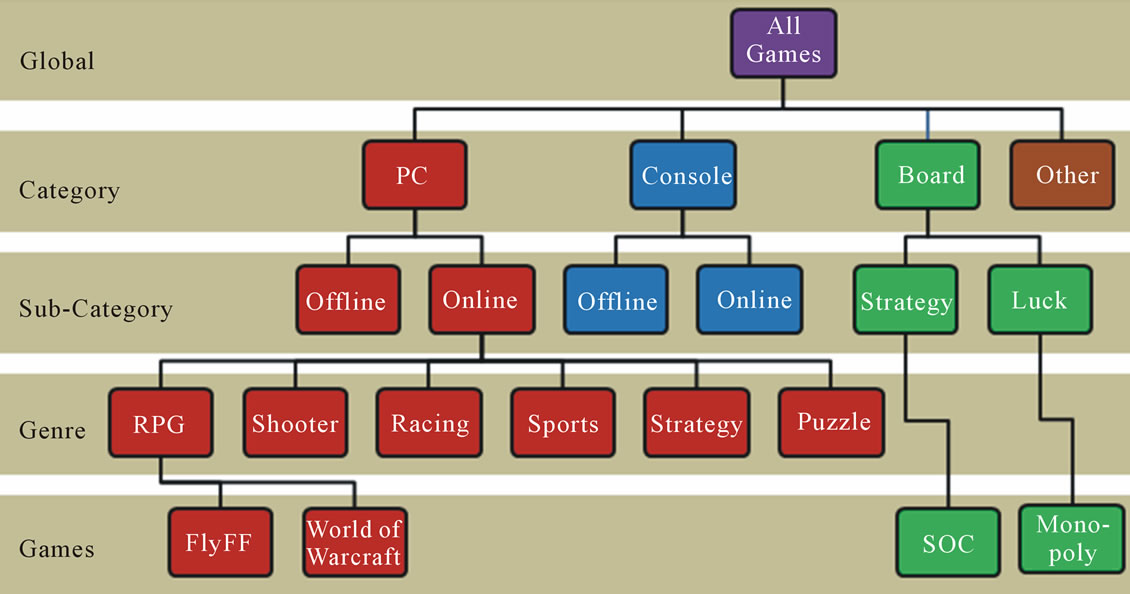

In this paper, we begin an investigation on the existence of ecological effects between two categories (nodes from Figure 1) of games. It would be of utmost interest to find out that effects which work for the global category of all games is what works for any individual game— such a relationship is an ecological effect between those two categories, but it would be extremely naïve to believe it exists. We are more interested in finding such relationships for categories of games such as the categories

Figure 1. Visualization of gaming categories. Each node is a category of gaming, and the leaves are instances of games.

that we will describe in section 4.

The remainder of this paper is organized as follows. In Section 2 we provide background on entertainment in games and express our views on game play. In Section 3 we address our core concept: Replayability. Section 4 presents our methods for data collection and the survey instrumentation used. In Section 5 we analyze the gaming data using our JDK Diagrams and argue for the existence of different ecological effects. In Section 6 we boldly propose a game design methodology that uses our data to make “better” games. Finally, in Section 7 we draw our conclusions and suggest future directions for our research.

Note: Our data and instrumentation are publicly available on the web; the two links are listed at the end of the references section. They point to our resulting datasets and to a generic games survey that we developed to gather player opinions on Replayability.

2. Background

The following subsections provide background on our views of games, which the remainder of this paper builds on.

2.1. Playability and Replayability

Two important concepts, or factors of success, in the release of video games are Playability and Replayability; the latter is discussed widely, for example in [3-6]. These two concepts describe how well the player base receives the game, e.g. how enjoyable it is. However, such concepts touch on the field of cognitive psychology. One concept that recurs in this field is immersion, the subject of many papers [7-11], sometimes referred to instead as mediated experience or presence.

Immersion gives the user a sense of being in the entertainment world rather than the real world. When immersed in the virtual world as though it were real, a person’s mind drifts easily and time seems to pass quickly.

An idea that accommodates immersion in games was proposed long ago by Johan Huizinga in Homo Ludens: the Magic Circle [12]. It is worth mentioning, however, that Salen and Zimmerman, authors of Rules of Play [10], are often credited with first applying the idea of the magic circle to games. This imaginary sphere of sorts is a mental construct that players develop when they play video games. Inside the magic circle, the game world is believable and the player is immersed in the virtual world. Players enter and exit the circle often, so we are often interested in preventing distractions. To be more precise with our terminology, and as an overview:

• Immersion is the process of developing and entering into the magic circle.

• Distraction is the process of exiting the circle and breaking the provided illusion.

Distractions are usually caused by disruptions to game play such as complex interfaces, difficult controls or annoying graphics and sound. Distractions may also be caused by software runtime issues, network delay or uncontrollable physical distractions in the player environment.

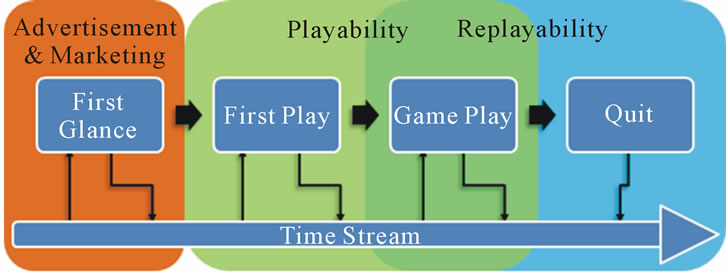

We say that if distractions are limited, then immersion is possible. In other words, Playability is what allows players to immerse themselves in a game. Research on Playability is one of the three main areas of game studies shown in Figure 2, along with Advertising & Marketing, and Replayability. We feel these three areas are among the most important areas of games for software engineers to study.

Figure 2. Game play stages.

In contrast to Playability, we describe Replayability as a measure of In-Game Retention (IGR). To reiterate our terminology:

• Playability is the property of any activity that indicates whether the activity can yield enjoyment. That is, it is a binary measure of whether a game can be enjoyed or not.

• Replayability is a quantifiable measure of the enjoyability of a game. That is, it measures how long a person can enjoy a game before it becomes boring.

Consider the simple activity of bouncing a tennis ball off of a wall and catching it. Playability then depends on how the ball bounces back. If the wall is smooth and the ball can bounce, then the activity is playable. However, how long can a person bounce the ball before they become bored? Replayability describes the answer to this question. Video games must possess both of these qualities.

2.2. Stages of Game Play

Figure 2 distinguishes three main areas of research focus for game developers. It also represents stages of game play: phases that the player cycles through when playing any video game. By showing where and why players quit, these stages also give a general idea of what game developers should study. Below we describe each in detail.

Stage 1: First Glance. In this first stage, a person becomes aware of a certain game title and decides whether to purchase it. Factors such as box art, game name, renown and player reviews (as well as personal taste in games) affect that decision. Vendors want their game to get many first glances, so advertising and marketing strategies matter for releasing a successful game.

Stage 2: First Play. In the second stage, players have already made the purchase and begin playing the game for the first time. Before playing, the player forms expectations of the game. One major expectation is the game’s playability. If the game cannot limit distractions (as defined earlier), then the game cannot be fun, and the player will not pass this stage. Other expectations concern the quality of graphics, total play hours, and much else. Another very important expectation is Replayability: players hope that their game will last a while.

First Play may not seem important if the gamer has already purchased the game, but this could not be more wrong. If players are disappointed in their purchase (i.e. their expectations are not met), the game’s renown suffers. As players write reviews and discuss games socially, the game, and other releases by the same vendor, may struggle to sell. So First Play is indeed very important to the success of a release.

Stage 3: Game Play. In this stage, which may begin any time after the game starts, we face the question of how to keep a player in the game. Playability remains a great concern, but Replayability is most important. A virtual world is only so vast; players eventually become so accustomed to its environment that the freshness of being somewhere exciting is lost. The goal of Replayability is to delay Stage 4 as long as possible. As such, Replayability will often make or break a game.

Stage 4: Quit. This stage may sound undesirable, but it is necessary to all forms of entertainment, and especially to games. All players eventually reach this stage, whether by completing the game, exhausting its value, or reaching an impasse with their expectations of the game. They then enter what we call the Time Stream. Here the game is not being played, so that what once lost its luster and freshness may become replayable again. In a sense, what has become boring is given a chance to “recharge” back into an enjoyable experience.

3. Aspects of Replayability

After we experience something for the first time, often we would like to experience it once more. If so, then we say the experience is replayable. We aim to use Replayability in a quantifiable way, by breaking it into six aspects.

For adventure games, Lucian Smith breaks Replayability into four aspects: Mastery, Impact, Completion and Experience [3]. In our studies, we add two more: Social and Challenge. These additions help accommodate a wider variety of games. We describe each aspect in detail below.

1) Playing for Social. We play for social reasons, whether face-to-face or over the internet with strangers. Social reasons can be diverse; being social is itself sometimes a form of entertainment, as it lets us reach out, talk to others and make new friends (or enemies).

2) Playing for Challenge. We play games because we see them as challenges in life. Overcoming these often frustrating obstacles produces euphoria, as described by Zillmann’s Excitation Transfer theory [13] (fun is amplified by many failures before success). We then often turn that euphoria into bragging rights.

3) Playing for Experience. The reasons we watch movies or read books also apply to why we play games. This is perhaps the most nebulous of all aspects: we play games because they appeal to us in genuinely unique ways that provide long-lasting, memorable experiences.

4) Playing for Mastery. We play games because we want to master all that the game has to offer. We play competitively and want to become the best. Mastery is about pushing oneself towards success, as is heavily emphasized in sports and other forms of competition all over the world. After all, gaming is a sport, too.

5) Playing for Impact. Impact, sometimes called Random, is about control: we play games because we enjoy some level of control over events in the game. When events are predetermined, we lose this control. Without the free will to do as the player wishes, the effect of Impact is lost.

6) Playing for Completion. We play games because we enjoy completing them and relish the accomplishments associated with uncovering every part of a game. Story-driven games rely particularly on the Completion aspect, as do movies and books. We play, watch or read because we want to know what happens next.

4. Research Methods and Data

This section discusses our instrumentation for data collection. We deployed several surveys via online web forums to collect player opinions regarding the Aspects of Replayability in games. As noted previously, our data is publicly available for the purposes of reproducibility.

In this paper we discuss surveys about four categories of games: two are specific games, and the other two are general categories of games. In the future we will gather data for many other categories as well. We chose these categories based on personal interest and popularity; they are listed below.

• FlyFF = Fly For Fun, a popular free-to-play Massively Multiplayer Online Role Playing Game (MMORPG).

• SOC = The Settlers of Catan is a popular board game developed by Klaus Teuber.

• All-BG = All Board Games; the general category consisting of all games played via table-top settings with a game “board” of sorts.

• All-G = All Games; the global category consisting of all games across all general categories of gaming. We may also refer to this category as the Frattesi category, named after the first author of [14].

Our fourth gaming category comes from a study by Frattesi et al. [14], in which data was collected to generalize across all kinds of games. Our instrumentation is based on their methods. Mastery was omitted from their study; of the remaining aspects, Frattesi et al. report that Experience is dominant, i.e. it was rated most important and all other Aspects of Replayability scored lower. Note, however, that their sample was dominated by a few games: Call of Duty (COD), League of Legends (LoL), and Halo accounted for at least one-third of it. We expect this skews the data towards the categories of these dominant games, which encourages us to study these games in the future.

Basic demographics were sampled, along with standard gaming data such as how often respondents played games. This kind of information helps filter out some bias in the results. Survey questions regarding the Aspects of Replayability were presented as statements (with definitions included), and respondents were asked to indicate how much they agreed with each statement on a five-point Likert Scale. An example is as follows:

“Rate how much you agree using the scale below. Mastery: You have a goal of becoming the best and mastering every concept and skill within the game. In other words, you play for Mastery.”

Each survey was deployed on public forums across the World Wide Web (WWW) for periods of roughly one week. Our surveys received 242, 113, and 111 individual respondents for FlyFF, SOC, and All-BG, respectively. The Frattesi dataset reported 256 respondents.

Key threats to validity concern whether forum communities represent a random sample. Our data only samples players who visit online forums. While FlyFF is an online game whose community interacts through its forums, the threat is of higher concern for board games, since board gamers do not need the internet to play. One suggestion is to survey public gatherings of board game groups to collect opinions directly.

5. Analysis

For the purpose of statistical analysis, we reorganized our data (including the Frattesi data) into a single dataset. The samples of this dataset come from the responses to each survey and are organized into three columns: Category (X1), Aspect (X2) and Score (Y). Category refers to a category of gaming, and Aspect refers to one of the Aspects of Replayability. Score is a respondent’s response, mapped into the [0, 1] range (0 = strongly disagree, 1 = strongly agree); it represents that player’s opinion on the importance of that Aspect of Replayability for that category. In other words, the score is a measure of Replayability for that category and aspect.
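The reorganization above can be sketched in Python. This is a minimal sketch, not the authors’ tooling: it assumes each survey is available as a list of per-respondent answers (aspect name to Likert response 1 to 5), and it assumes the five Likert points are mapped onto [0, 1] with equal spacing as (k - 1) / 4, which the text implies but does not spell out. The names `likert_to_unit` and `to_long_format` are hypothetical.

```python
from typing import Dict, List, Tuple

LIKERT_POINTS = 5  # five-point scale: 1 = strongly disagree, 5 = strongly agree

Row = Tuple[str, str, float]  # (Category X1, Aspect X2, Score Y)


def likert_to_unit(k: int) -> float:
    """Map a Likert response onto [0, 1], assuming equal spacing: 1 -> 0.0, 5 -> 1.0."""
    if not 1 <= k <= LIKERT_POINTS:
        raise ValueError(f"Likert response out of range: {k}")
    return (k - 1) / (LIKERT_POINTS - 1)


def to_long_format(surveys: Dict[str, List[Dict[str, int]]]) -> List[Row]:
    """Flatten per-category survey answers into one (Category, Aspect, Score) table.

    `surveys` maps a category (e.g. "FlyFF") to its respondents; each respondent
    maps aspect names to Likert responses. Aspects a survey never asked about
    (such as Mastery in the Frattesi data) are simply absent and produce no rows.
    """
    rows: List[Row] = []
    for category, respondents in surveys.items():
        for answers in respondents:
            for aspect, k in answers.items():
                rows.append((category, aspect, likert_to_unit(k)))
    return rows
```

Keeping the data in this long (one row per response) shape makes it easy to group by category, by aspect, or by the category:aspect pairs used in the rest of the analysis.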

We are interested in the differences in scores between categories for each of the Aspects of Replayability. Our analysis of this dataset proceeds in five steps:

1) Check for evidence of differences.

2) Identify specific differences.

3) Visualize differences.

4) Quantify differences.

5) Analyze differences.

Step 1: Check for evidence of differences. As a first step, we perform an Analysis of Variance (ANOVA) test on the dataset at the 95% level of confidence. This tells us whether differences among the samples in the dataset exist and are worth studying further; in our case, it indicates that they do. Here, a “difference” means a statistically significant difference that affects the response variable (Y). If the ANOVA test found no differences in our data, there would be little use in analyzing the data further.
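For readers who want to reproduce Step 1, the sketch below computes a one-way ANOVA F statistic over groups of scores. It assumes the ANOVA treats each category:aspect pair as one group (the text does not say whether a one-way or two-way design was used), and the function name `one_way_anova` is hypothetical. Turning F into a p-value at the 95% level requires the F distribution, e.g. from a table or from SciPy’s `scipy.stats.f.sf(F, df_between, df_within)`; that step is left out to keep the sketch dependency-free.

```python
from statistics import fmean
from typing import Dict, List, Tuple


def one_way_anova(groups: Dict[str, List[float]]) -> Tuple[float, int, int]:
    """One-way ANOVA over named groups; returns (F, df_between, df_within)."""
    means = {g: fmean(ys) for g, ys in groups.items()}
    all_scores = [y for ys in groups.values() for y in ys]
    grand_mean = fmean(all_scores)
    k, n_total = len(groups), len(all_scores)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(ys) * (means[g] - grand_mean) ** 2 for g, ys in groups.items())
    # Within-group sum of squares: spread of each score around its own group mean.
    ss_within = sum((y - means[g]) ** 2 for g, ys in groups.items() for y in ys)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Toy groups keyed "Category:Aspect"; the scores are made up for illustration.
    toy = {"SOC:Social": [1.0, 0.75, 1.0], "SOC:Mastery": [0.25, 0.5, 0.25]}
    print(one_way_anova(toy))
```

A large F relative to the critical value for (df_between, df_within) is what licenses moving on to Step 2.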

Step 2: Identify specific differences. Our next step is to use a multiple comparison test to uncover the specifics of these differences. A number of multiple comparison methods are available, varying in power and error rate. Toward the more conservative (lower error) end of the spectrum, we use Tukey’s Honestly Significant Difference (Tukey’s HSD) [15]. This procedure is simpler than some other methods in that it uses a single critical difference. Since our groups have unequal sizes, we instead use a slight variant called the Tukey-Kramer Method [16]. Groups are pairs of category and aspect, such as FlyFF:Mastery. With four categories and six aspects, and with the Frattesi:Mastery group omitted, there are 4 × 6 − 1 = 23 groups. The Tukey-Kramer Method is summarized by the formula below: the difference between two groups is significant only if it is greater than or equal to the critical difference on the right side of Equation (1). We apply this procedure at the 95% level of confidence.

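$$\left|\bar{Y}_i - \bar{Y}_j\right| \geq q_{\alpha;\,k,\,N-k}\sqrt{\frac{MSE}{2}\left(\frac{1}{n_i} + \frac{1}{n_j}\right)} \qquad (1)$$

where $\bar{Y}_i$ and $n_i$ are the mean score and size of group $i$, $k = 23$ is the number of groups, $N$ is the total number of responses, $MSE$ is the mean squared error (pooled within-group variance) from the ANOVA, and $q_{\alpha;\,k,\,N-k}$ is the critical value of the studentized range distribution at $\alpha = 0.05$.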

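A note on multiple comparison methods: if we consider an ordered list of means such that A > B > C > D, then we do not need to make every possible comparison. The difference |A – C| is smaller than |A – D|, so if |A – D| is insignificant, then |A – C| will also be insignificant. Strictly, this shortcut relies on a single critical difference, as in Tukey’s HSD with equal group sizes; under Tukey-Kramer the critical difference varies with the sizes of the two groups being compared.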
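Equation (1) translates directly into code. The following is a hedged sketch, assuming the studentized range critical value `q_crit` has already been looked up for the chosen α, number of groups k and error degrees of freedom N − k (recent SciPy versions also provide `scipy.stats.studentized_range`). The function name `tukey_kramer` and its return layout are hypothetical. It compares every pair rather than using the ordered-means shortcut, because under Tukey-Kramer each pair has its own critical difference.

```python
from itertools import combinations
from math import sqrt
from statistics import fmean
from typing import Dict, List, Tuple

Result = Tuple[str, str, float, float, bool]  # (g_i, g_j, |mean diff|, critical diff, significant)


def tukey_kramer(groups: Dict[str, List[float]], q_crit: float) -> List[Result]:
    """Pairwise Tukey-Kramer comparisons following Equation (1).

    q_crit is q(alpha; k, N - k), supplied by the caller (e.g. from a table).
    """
    means = {g: fmean(ys) for g, ys in groups.items()}
    n_total, k = sum(len(ys) for ys in groups.values()), len(groups)
    # MSE: pooled within-group variance, i.e. the ANOVA's mean squared error.
    mse = sum((y - means[g]) ** 2 for g, ys in groups.items() for y in ys) / (n_total - k)
    results: List[Result] = []
    for g_i, g_j in combinations(groups, 2):
        diff = abs(means[g_i] - means[g_j])
        # Right side of Equation (1): depends on this pair's group sizes.
        crit = q_crit * sqrt(mse / 2 * (1 / len(groups[g_i]) + 1 / len(groups[g_j])))
        results.append((g_i, g_j, diff, crit, diff >= crit))
    return results
```

For instance, with three groups of three scores each, the tabulated value q ≈ 4.34 (k = 3, 6 error degrees of freedom, α = 0.05) would be passed as `q_crit`.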

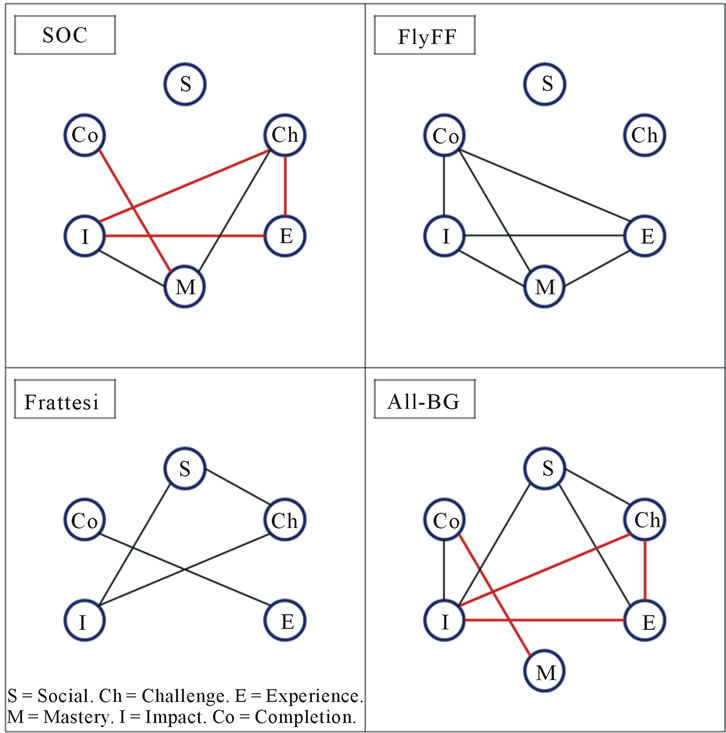

Step 3: Visualize differences. We visualize the Tukey-Kramer results by constructing simple vertex-and-edge graphs that we call JDK Diagrams (“Just Don’t Kare”), in which each vertex represents one of the Aspects of Replayability. An edge lies between two vertices if and only if the difference between them (as given by the Tukey-Kramer result) is insignificant; i.e. we just do not care about their difference. Each category of games yields a different JDK Diagram. Figure 3 shows each of these as an undirected graph, with each vertex labeled using our Schemico acronym (Social, Challenge, Experience, Mastery, Impact, Completion).
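Building a JDK Diagram from the Tukey-Kramer output is a simple filter. The sketch below assumes groups are named "Category:Aspect" (as in FlyFF:Mastery) and that each comparison is given as a (group_i, group_j, significant) triple; the function name `jdk_edges` is hypothetical. Edges are stored as frozensets so that the graph is undirected.

```python
from typing import FrozenSet, Iterable, Set, Tuple

Comparison = Tuple[str, str, bool]  # (group_i, group_j, significant)
Edge = FrozenSet[str]


def jdk_edges(comparisons: Iterable[Comparison], category: str) -> Set[Edge]:
    """Edges of the JDK Diagram for one category of games.

    An undirected edge joins two aspects of the same category if and only if
    their difference was NOT significant ("just don't care").
    Comparisons across different categories are ignored.
    """
    edges: Set[Edge] = set()
    for group_i, group_j, significant in comparisons:
        cat_i, aspect_i = group_i.split(":")
        cat_j, aspect_j = group_j.split(":")
        if cat_i == cat_j == category and not significant:
            edges.add(frozenset((aspect_i, aspect_j)))
    return edges
```

If a pairwise routine reports richer tuples (for example the mean difference and critical difference as well), only the two group names and the significance flag need to be passed in.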

Step 4: Quantify differences. JDK Diagrams not only visualize the results of the Tukey-Kramer method; they also provide a way to reason about the existence of ecological effects between categories of gaming. Based on how similar two JDK Diagrams are, we can infer whether ecological effects exist.

To compare graphs and discover how similar they are, we utilize a non-standard, simple metric of our own design as follows. Two graphs A and B are called similar if the expression  is true, where

is true, where  is defined as the number of common edges of A and B, and where

is defined as the number of common edges of A and B, and where  is defined as the number of distinct edges of A and B, and

is defined as the number of distinct edges of A and B, and  is some threshold value between 0 and 1. The value

is some threshold value between 0 and 1. The value

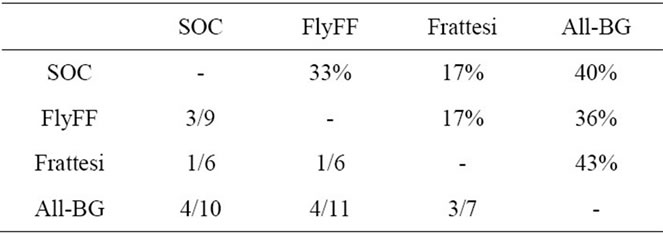

is called the similarity level of the two graphs. We provide the results of this metric applied to each JDK Diagram in Table 1.

is called the similarity level of the two graphs. We provide the results of this metric applied to each JDK Diagram in Table 1.
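Under one reading of the definition, in which the distinct edges of A and B are all edges appearing in either graph, the similarity level is the size of the intersection of the two edge sets over the size of their union (the Jaccard index of the edge sets). The sketch below assumes that reading and a non-strict comparison with the threshold; treating two edgeless diagrams as identical is likewise an assumption. The names `similarity_level` and `similar` are hypothetical.

```python
from typing import FrozenSet, Set

Edge = FrozenSet[str]  # an undirected edge between two aspect labels


def similarity_level(a: Set[Edge], b: Set[Edge]) -> float:
    """Common edges of A and B divided by the distinct edges across A and B."""
    distinct = a | b
    if not distinct:
        return 1.0  # assumption: two edgeless diagrams count as identical
    return len(a & b) / len(distinct)


def similar(a: Set[Edge], b: Set[Edge], threshold: float) -> bool:
    """True if the similarity level reaches the threshold t (0 <= t <= 1)."""
    return similarity_level(a, b) >= threshold
```

For example, two diagrams that share 2 of their 5 distinct edges have a similarity level of 0.4, which just meets a 40% threshold.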

Step 5: Analyze differences. Finally, with a simple table of numbers to look at, we can analyze the gaming data easily. The most similar pairs of JDK Diagrams are All-BG versus Frattesi and All-BG versus SOC. If we set the threshold at 40%, then ecological effects exist for these two pairs of categories. Whether ecological effects exist here depends entirely on how high or low the threshold is. Since 40% is a low value, it may be difficult to accept that there are ecological effects; compared to the other numbers in Table 1, however, 40% is fairly high. We will need to investigate these categories further to learn why the similarity level is so low, but we can already reason about possible causes. In Figure 4 we propose what these differences are and how they affect replay in board games, and we discuss them below.

Figure 3. JDK Diagrams for each category. Highlighted in dark red are edges in common between the All-BG and SOC categories.

Table 1. Similarity levels between JDK Diagrams. Percentages are shown above the diagonal, and fractions below it.

Board games can differ along two dimensions: number of players and intensity of play. In terms of intensity, very competitive games must be based on strategy, while less competitive games are based more on luck. When there are many players (more than two), the Social aspect is more important. When the game is more competitive, the Mastery aspect is more important.
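The two rules above can be encoded as a tiny lookup. This is a hypothetical sketch: it assumes both dimensions are binary (more than two players or not; competitive or not), which yields four classes of board games, and it only reports which aspect each rule says matters more. It is not a reproduction of Figure 4, and the function name `emphasized_aspects` is hypothetical.

```python
from typing import List


def emphasized_aspects(num_players: int, competitive: bool) -> List[str]:
    """Aspects that the two board-game rules say matter more.

    - more than two players -> Social matters more;
    - competitive (strategy-based) rather than luck-based -> Mastery matters more.
    The two binary dimensions give four classes of board games.
    """
    aspects: List[str] = []
    if num_players > 2:
        aspects.append("Social")
    if competitive:
        aspects.append("Mastery")
    return aspects
```

Under these rules, a four-player strategy game emphasizes both Social and Mastery, while a two-player luck-based game emphasizes neither.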

6. Proposing a Design Methodology

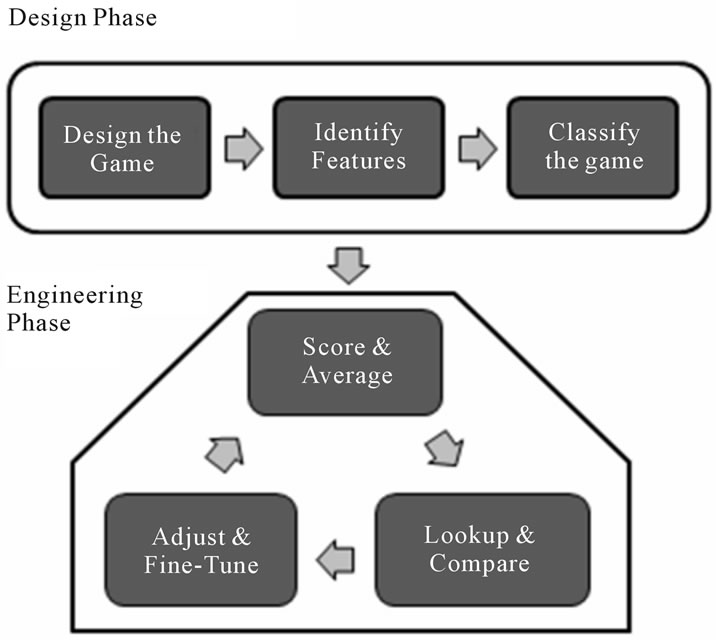

In the previous section, we attempted to show that ecological effect exists between some categories such as between SOC and All-BG. We believe there may be other ecological effects for other neighboring categories of games, such as FlyFF versus All-MMORPG. Here we propose a game design methodology (see Figure 5) to improve the success of a game’s release, which greatly depends on the existence of ecological effects.

1) List the Game’s Features. Beginning with a very basic idea of the game, plan ahead by outlining the key features contained within. We define a feature as anything that the player can do or experience as sensation within the game.

2) Estimate Scores for the Game’s Features. Estimate each feature by guessing how important they are for each of the six Aspects of Replayability, assigning values between 0 and 1, where 0 is “strongly disagree that the feature is important” and 1 is “strongly agree”.

3) Average the Feature Scores. Compute the mathematical average of scores for each aspect and feature. It

Figure 4. Expanding classes of board games into four categories based on effects to Aspects of Replayability.

Figure 5. Game design methodology.

may be necessary to weight some features based on how often they are experienced during the game.

4) Determine the Game’s Category. Estimate which category of gaming the game falls into, e.g. Competitive Board Game.

5) Look Up Scores for that Category. Using learned results and previously analyzed datasets, look up the scores for that category to discover player opinions regarding Replayability.

6) Adjust the Game. Compare the averaged estimated scores with the scores looked up in Step 5. This is where the game is fine-tuned: tweak its features, then re-estimate and re-average their scores, until they match the scores looked up in Step 5.
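Steps 3 and 6 involve simple arithmetic, sketched below under stated assumptions: feature scores and weights are designer estimates (Step 2); an aspect missing from a feature’s scores counts as 0; weights default to 1, giving a plain average; and the category scores from Step 5 are passed in as a dictionary, for example the mean Score per aspect for the chosen category. All names and the toy numbers are hypothetical, not results from our data.

```python
from typing import Dict, Optional

ASPECTS = ("Social", "Challenge", "Experience", "Mastery", "Impact", "Completion")

FeatureScores = Dict[str, Dict[str, float]]  # feature -> {aspect: score in [0, 1]}


def average_feature_scores(
    features: FeatureScores, weights: Optional[Dict[str, float]] = None
) -> Dict[str, float]:
    """Step 3: (weighted) average of the feature scores for each aspect."""
    weights = weights or {}
    norm = sum(weights.get(f, 1.0) for f in features)
    if norm == 0:
        raise ValueError("need at least one feature with positive weight")
    return {
        aspect: sum(weights.get(f, 1.0) * scores.get(aspect, 0.0)
                    for f, scores in features.items()) / norm
        for aspect in ASPECTS
    }


def adjustment_gaps(estimated: Dict[str, float], target: Dict[str, float]) -> Dict[str, float]:
    """Step 6: target minus estimate; a positive gap suggests adding features for that aspect."""
    return {a: target[a] - estimated[a] for a in ASPECTS if a in target}


if __name__ == "__main__":
    # Hypothetical features of a competitive board game and made-up category scores.
    features = {
        "trading": {"Social": 1.0, "Mastery": 0.5},
        "dice rolls": {"Impact": 0.5},
    }
    estimated = average_feature_scores(features, weights={"trading": 3.0})
    print(adjustment_gaps(estimated, target={"Social": 0.8, "Mastery": 0.6}))
```

The loop of Step 6 then amounts to changing features, recomputing `average_feature_scores`, and checking whether the gaps have shrunk.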

A game can be tuned so that more of its features contribute to certain Aspects of Replayability. Below we discuss this in more detail; this discussion also further details each of the Aspects of Replayability.

1) Designing for Social: Games should cater to players’ need to socialize with other gamers. Interaction between players must be emphasized. Features in the game should promote social play, such as teaming together and player versus player (PvP) combat.

2) Designing for Completion: Games should lure the player into wanting to complete either the game or parts of it. Typically, compelling stories or attractive game play are what drive a player. Games that have many features waiting at the end often keep players in the game until they have experienced them all.

3) Designing for Experience: It is not easy to provide guidelines for designing experience. There are no set recipes for writing a good book or movie, and games built for experience are no different. This is why we say this aspect is perhaps the most nebulous of all. To design for experience, the game must be unique and memorable. Superb stories, rich virtual cultures and vast worlds are often the most memorable qualities of games.

4) Designing for Challenge: Designing for challenge means providing features that make gamers feel that only the elite can accomplish them. This level of challenge is often made available in post-content material. As a premise of playability, a game must be neither too difficult nor too easy. Designing for Challenge is a task of fitting the game into the appropriate Flow Zone [17,18], where the appropriate level of challenge is much higher for the very challenging post-content.

5) Designing for Mastery: Games must provide features such as leveling up, upgrading equipment, and “training” so that players can master the game as fully as possible. Competitive games boast a drive towards mastery. In successful competitive games, the heights of mastery must be incredibly difficult to attain.

6) Designing for Impact: Randomness is often a key element within games. However, when a game is too random, the player may feel out of control of its events. When designing for impact, make the player feel completely in control of their fate, in that different actions almost always change the outcome of the game. Multiple endings and nonlinearity are two examples of good impact features.

7. Conclusions

In this paper we have provided a viewpoint on studying games from a software engineering perspective and have contributed the following.

1) A method of studying games.

2) Gaming datasets.

3) A method of analyzing our gaming datasets.

4) A methodology of using learned knowledge via our methods to develop games.

We have proposed a generalized method of studying games by collecting data, classifying the data and analyzing it. We have also proposed a way to analyze the data (JDK Diagrams report a Tukey-Kramer analysis where linked nodes show ranges that are statistically indistinguishable). Using JDK Diagrams, we have analyzed data from board games and MMORPGs, and from a prior study by Frattesi et al. In essence, we are trying to show a method of applying techniques of software engineering to the game development process.

This paper urges some caution in research. In our view, researchers need to take more care not to generalize their results prematurely. In analyzing our data, we have found no ecological effects between instances of games (SOC/FlyFF) and All-G. Although it would be a very good result if such an ecological effect existed (we could apply what works for All-G, and it should work for any game), we instead turn to showing the existence of ecological effects between categories and instances of games. We have shown that there is a higher level of similarity for some pairs, such as SOC and All-BG. Since SOC is a member of All-BG, this result is useful if we accept that ecological effects exist here. But since the level of similarity is still quite low, we may need to deepen the categorization of such games further.

We believe that our results can be used to guide the development of games. Such a methodology would help designers fine-tune their design plans and create more enjoyable, more successful games by changing or adding features so that the game better matches player expectations in terms of the Aspects of Replayability.

We are interested in what other categories of games exist, and in further categorizing games such that data for different games in the same category are as similar as possible. Genres such as Shooter and Action may not be the best basis for categories, since we wish to find ecological effects between games and their categories. In the future, we propose work in the following areas:

1) Discover or refine categories of games.

2) Collect more data.

3) Learn how to collect data faster and better.

4) Further refine how we analyze gaming data.

5) Further refine our design methodology.

REFERENCES

[1] Entertainment Software Association, “2011 Sales, Demographic and Usage Data: Essential Facts about the Computer and Video Game Industry,” 2012. http://www.theesa.com/facts/pdfs/ESA_EF_2011.pdf

[2] Computerandvideogames.com, “News: 4% Report Is False, Says Forbes Interviewee,” 2012. http://www.computerandvideogames.com/202401/4-report-is-false-says-forbes-interviewee/

[3] L. Smith, “Essay on Replayability,” Spod Central, 2012. http://spodcentral.org/~lpsmith/IF/Replay.summary.html

[4] C. Wells, “Replayability in Video Games,” Neolegacy.net, 2012. http://www.neolegacy.net/replayability-in-video-games

[5] E. Adams, “Replayability Part 1: Narrative,” Gamasutra, 2012. http://www.gamasutra.com/view/feature/3074/replayability_part_one_narrative.php

[6] E. Adams, “Replayability Part 2: Game Mechanics,” Gamasutra, 2012. http://www.gamasutra.com/view/feature/3059/replayability_part_2_game_.php

[7] M. Lombard and T. Ditton, “At the Heart of It All: The Concept of Presence,” Journal of Computer-Mediated Communication, Vol. 3, No. 2, 1997.

[8] E. Brown and P. Cairns, “A Grounded Investigation of Game Immersion,” Proceedings of the CHI Conference on Extended Abstracts on Human Factors in Computing Systems, Vienna, 24-29 April 2004, pp. 1297-1300. doi:10.1145/985921.986048

[9] L. Ermi and F. Mäyrä, “Fundamental Components of the Gameplay Experience: Analysing Immersion,” Proceedings of the DiGRA Conference on Changing Views: Worlds in Play, Vancouver, 16-20 June 2005.

[10] K. Salen and E. Zimmerman, “Rules of Play: Game Design Fundamentals,” The MIT Press, Cambridge, 2004.

[11] A. McMahan, “Immersion, Engagement, and Presence: A Method for Analyzing 3-D Video Games,” The Video Game Theory Reader, Routledge, London, 2003, pp. 67-86.

[12] J. Huizinga, “Homo Ludens: A Study of the Play Element in Culture,” Beacon Press, Boston, 1955.

[13] D. Zillmann, “Transfer of Excitation in Emotional Behavior,” 1983.

[14] T. Frattesi, D. Griesbach, J. Leith, T. Shaffer and J. DeWinter, “Replayability of Video Games,” IQP, Worcester Polytechnic Institute, Worcester, 2011.

[15] S. Dowdy, S. Wearden and D. Chilko, “Statistics for Research,” 3rd Edition, Wiley-Interscience, New York, 2004.

[16] R. Griffiths, “Multiple Comparison Methods for Data Review of Census of Agriculture Press Releases,” Proceedings of the Survey Research Methods Section, American Statistical Association, Boston, 1992, pp. 315-319.

[17] M. Csikszentmihalyi, “Flow: The Psychology of Optimal Experience,” Harper Perennial, London, 1990.

[18] J. Chen, “Flow in Games (and Everything Else),” Communications of the ACM, Vol. 50, No. 4, 2007, pp. 31-34. doi:10.1145/1232743.1232769

NOTES

¹ Short for “Just Don’t Kare”, named after the first author.