Advances in Pure Mathematics

Vol. 06 No. 04 (2016), Article ID: 64966, 11 pages

10.4236/apm.2016.64017

A Novel Approach to Probability

Oded Kafri

Kafri Nihul Ltd., Tel Aviv, Israel

Copyright © 2016 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 16 December 2015; accepted 21 March 2016; published 24 March 2016

ABSTRACT

When $P$ indistinguishable balls are randomly distributed among $L$ distinguishable boxes, and we consider the dense system ($P > L$), our natural intuition tells us that the box with the average number of balls $P/L$ has the highest probability and that none of the boxes is empty; in reality, however, the probability of an empty box is always the highest. This stands in contrast to the sparse system ($P \ll L$), e.g. the energy distribution in a gas, in which the average value has the highest probability. Here we show that when we postulate that all possible configurations of balls in the boxes have equal probabilities, a realistic “long tail” distribution is obtained. When applied to sparse systems, this formalism converges to distributions in which the average is preferred. We calculate some of the distributions resulting from this postulate and obtain most of the known distributions in nature, namely: Zipf’s law, Benford’s law, particle energy distributions, and more. Further generalization of this novel approach yields not only much better predictions for elections, polls, market share distribution among competing companies and so forth, but also a compelling probabilistic explanation for Planck’s famous empirical finding that the energy of a photon is $h\nu$.

Keywords:

Probability, Statistics, Benford’s Law, Zipf’s Law, Planck’s Law, Configurational Entropy

1. Introduction

Probability theory is an important branch of mathematics because of its great economic significance. Since our knowledge about the future is limited, the most effective decisions rely on statistics.

Usually it is assumed that similar underlying events should have a similar resultant expectation value. From the law of large numbers [1] we expect that the average of a large number of trials will approach the expected value. From the central limit theorem [2] we expect that the deviations from the average will be distributed as a Gaussian curve (the normal distribution).

Accordingly, when pollsters try to predict the results of a poll, e.g. an election, they treat each party individually. They estimate its expected number of seats from a small sample. Then they combine the results of all the parties and obtain the expected distribution of seats among the parties. The a priori assumption of any poll is that, if people vote randomly, the seats are distributed equally among the parties.

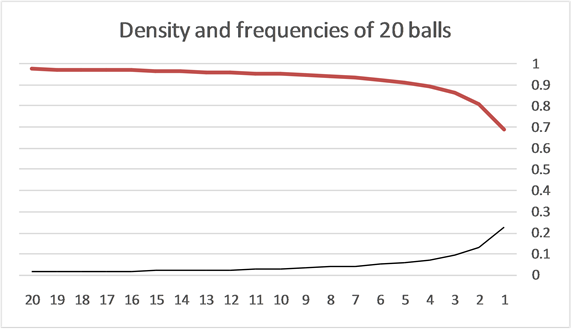

Based on the second law of thermodynamics, we suggest an alternative assumption that should be made, namely, that any configuration of seats among the parties should have an equal probability. When we calculate the distribution of seats under this assumption, we obtain an unequal distribution of seats among parties which is very similar to that obtained in real elections, see Figure 3. Nevertheless, each of the parties has an equal a priori probability to win each of the unequal number of seats of the distribution.

To generalize the analysis we call each party a box, and the available seats in parliament, balls. In statistical physics a box is called a state, and a possible configuration of the balls in the boxes is called a microstate. The logarithm of the number of microstates is called entropy [3] . The second law of thermodynamics states that a system in equilibrium has maximum entropy. Maximum entropy exists when all the microstates have an equal probability and all the states have an equal probability.

We distinguish between two kinds of statistical systems:

Dense systems, namely, systems in which the number of balls is bigger than the number of boxes.

Sparse systems, in which the number of balls is much smaller than the number of boxes and/or no more than one ball can exist in a box.

For example, elections are dense systems, since there are more seats than parties. Similarly, a best-sellers list is dense, as the number of readers is greater than the number of books. In wealth distribution there are more dollar bills than people; there are more surfers per second than sites on the internet, more listeners than songs, and so on. It seems that many human social activities can be described by dense-system statistics.

On the other hand, lotteries are sparse systems, since the number of winners is smaller than the number of tickets sold. Sparse-system statistics are very useful for describing distributions in space, because in many cases two objects cannot occupy the same space, e.g. two buildings cannot coexist in the same area. Similarly, two atoms or molecules cannot coexist in the same state.

In order to visualize the difference between the classic approach and the new approach to probability, let us look at 12 balls distributed randomly in 3 distinct boxes. The average is 4, so most people will assume that the best guess is to find 4 balls in any randomly chosen box, and that the second-best guesses are 3 and 5 balls. Yet in reality, if you have to gamble, your best bet is an empty box.

The guess of 4 balls is based on the law of large numbers, and the guesses of 3 and 5 balls are based on the central limit theorem. However, the probability of finding 4 balls in a box is only 10%, 3 balls 11%, 1 ball 13%, and the most probable outcome is an empty box, with 14% probability. These probabilities were calculated according to the new approach, and they reflect the known fact that it is more probable to be poor than to be rich.

The reason for this counterintuitive result is found in the microstates of the system. For example, there are only 3 different configurations in which a box with 12 balls exists, namely (12, 0, 0), (0, 12, 0) and (0, 0, 12). These configurations contain only 3 full boxes with 12 balls, while the number of empty boxes is double that, namely 6. Empty boxes are found in many other configurations as well. This is the intuitive explanation of why the expected number of boxes having n balls increases monotonically as n decreases.
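The counts above can be checked by brute force. The following Python sketch (the function names are ours, chosen for illustration) enumerates every microstate of 12 indistinguishable balls in 3 distinguishable boxes, gives each microstate the same weight, and tallies how often a box holds n balls:

```python
from collections import Counter
from fractions import Fraction


def microstates(balls, boxes):
    """Yield every arrangement of `balls` indistinguishable balls in `boxes`
    distinguishable boxes as a tuple of occupation numbers."""
    if boxes == 1:
        yield (balls,)
        return
    for first in range(balls + 1):
        for rest in microstates(balls - first, boxes - 1):
            yield (first,) + rest


def box_occupancy_probabilities(balls, boxes):
    """Weight every microstate equally and return (number of microstates,
    {n: probability that a randomly chosen box holds n balls})."""
    counts = Counter()
    n_states = 0
    for state in microstates(balls, boxes):
        n_states += 1
        counts.update(state)  # one tally per box, keyed by its ball count
    total = n_states * boxes  # total number of box appearances
    return n_states, {n: Fraction(counts[n], total) for n in range(balls + 1)}


n_states, probs = box_occupancy_probabilities(12, 3)
print("microstates:", n_states)
for n in (0, 1, 3, 4, 12):
    print(f"P(box holds {n:2d} balls) = {float(probs[n]):.1%}")
```

It finds 91 microstates and probabilities of about 14.3%, 13.2%, 11.0%, 9.9% and 1.1% for 0, 1, 3, 4 and 12 balls, in line with the rounded figures quoted above. Exhaustive enumeration is only practical for small systems, which is why entropy maximization is used instead.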

It is not easy to count the number of appearances of boxes with n balls in all the microstates, as it requires tedious combinatorial calculations: the number of microstates grows very quickly. Moreover, the numbers are specific to each choice of the numbers of balls and boxes. However, entropy maximization, a technique first used by Planck, yields approximations that have proven good. Entropy maximization gives the best solution to these problems because entropy is defined at equilibrium, where the number of microstates is maximal and each microstate has an equal probability.

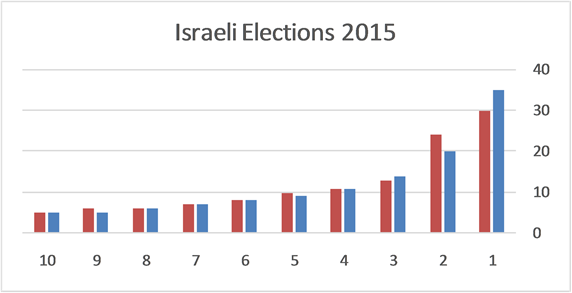

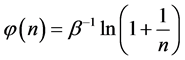

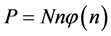

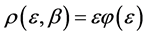

From the requirement of an equal probability to each microstate, we can derive the relative number of boxes  that have n balls. We call

that have n balls. We call  frequency, and the balls distribution function is calculated by

frequency, and the balls distribution function is calculated by .

.

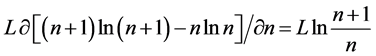

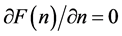

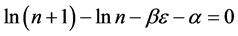

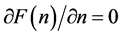

The relation between n and  is derived by maximizing the entropy, using the Lagrange multipliers technique.

is derived by maximizing the entropy, using the Lagrange multipliers technique.

We can extend the theory further by giving the balls a “property”, i.e. by letting the balls carry energy, assets, links, etc. In this case the property distribution calculations are similar to the calculations of the energy distribution among particles in statistical mechanics.

These kinds of probabilities are of great importance in statistics, econometrics, information theory and physics. Here we address the calculation of these probabilities in a unified way. In statistical mechanics this kind of analysis is called maximum entropy [4], in information theory the Shannon limit [5], and in classical physics thermodynamic equilibrium [3].

As shown hereafter, for an integer number of balls in a box this analysis yields Zipf’s law [6] [7], Benford’s law [8] - [10], the distribution of votes in elections, Planck’s law [11], the Bose-Einstein distribution [12] [13], and the Fermi-Dirac and Maxwell-Boltzmann distributions [14] - [16].

When the balls have a property, a chemical potential usually appears in the distribution functions.

It should be noted that maximum entropy techniques have been used in probability theory and in econometrics [17] [18]. However, the entropy expression used in these publications, namely the Boltzmann-Gibbs entropy [19], is an approximation for infinitely small $P/L$, as opposed to the examples discussed in this paper, in which $P > L$. The Boltzmann-Gibbs entropy is applicable to sparse systems and yields the Maxwell-Boltzmann distribution, in which the boxes with the average number of balls have the highest probability. For example, in an ideal gas the largest number of molecules have the average energy. Similarly, the largest number of people have the average height. This contrasts with the example of 12 balls distributed in 3 boxes, in which the highest probability is to find an empty box, and with the wealth distribution, in which it is easier to find a poor man than a rich man.
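To make the sparse/dense contrast concrete, the sketch below compares two per-box entropies as functions of the occupancy n = P/L. The first is the Stirling form of the logarithm of Planck’s count (P+L-1)!/(P!(L-1)!), divided by L; the second is the Stirling form of ln(L^P/P!)/L, the classical dilute-limit (Maxwell-Boltzmann) counting. Treating the latter as a stand-in for the sparse-limit entropy discussed above is our assumption, and the function names are hypothetical.

```python
import math


def configurational_entropy_per_box(n):
    """Stirling form of ln[(P + L - 1)! / (P! (L - 1)!)] divided by L, n = P / L."""
    return (1 + n) * math.log(1 + n) - n * math.log(n)


def dilute_entropy_per_box(n):
    """Stirling form of ln(L**P / P!) divided by L, n = P / L.

    Classical dilute-limit (Maxwell-Boltzmann) counting; used here as an
    assumed stand-in for the sparse-limit entropy."""
    return n * (1 - math.log(n))


for n in (0.001, 0.01, 0.1, 1.0, 4.0):
    s_conf = configurational_entropy_per_box(n)
    s_dil = dilute_entropy_per_box(n)
    print(f"n = {n:<6} configurational = {s_conf:9.5f}  dilute = {s_dil:9.5f}")
```

For n = 0.001 the two expressions agree to better than 0.01%, while at n = 4 (the 12-balls-in-3-boxes case) the dilute expression even turns negative, which illustrates why it cannot describe dense systems.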

2. Entropy, Frequency and Distribution for Integer Number of Balls

The model consists of identical, indistinguishable balls and identical but distinguishable boxes. The balls do not interact with each other and are distributed randomly among the boxes in a way that maximizes the entropy of the system under various constraints. We show how various statistical laws and distributions are derived using this model.

3. Dense Systems

Here there is no inherent limit on the number of balls in a box: it can be any number up to the total number of balls, or any limit that we artificially impose.

We consider two constraints: the first is the total number of balls P in the boxes, and the second is the total number of boxes L. When the balls have a property, the constraint on the number of balls is replaced by a constraint on the total amount of the property.

When the balls are pure logical entities, like numbers, we say that the balls have no chemical potential. A reader of a book or a seat in an election has no chemical potential. When the balls consist of a measurable quantity, the balls have a chemical potential. For example, balls of a given weight or a given amount of energy have a chemical potential. Similarly, balls with assets have a chemical potential.

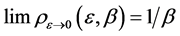

Suppose that we have P identical indistinct balls and L identical distinct boxes. The number of the distinguishable ways to arrange the balls in the boxes (microstates) is given by the number of the configurations as was first suggested by Planck,

(1)

(1)
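Planck’s count can be checked against direct enumeration for small cases. In this Python sketch (helper names are ours), `planck_count` evaluates $(P+L-1)!/(P!\,(L-1)!)$ with `math.comb`, and `brute_force_count` counts the occupation vectors $(n_1, \dots, n_L)$ with non-negative entries summing to P.

```python
import math
from itertools import product


def planck_count(P, L):
    """(P + L - 1)! / (P! (L - 1)!): ways to put P indistinguishable balls
    into L distinguishable boxes."""
    return math.comb(P + L - 1, L - 1)


def brute_force_count(P, L):
    """Count occupation vectors (n_1, ..., n_L) with n_i >= 0 summing to P."""
    return sum(1 for occ in product(range(P + 1), repeat=L) if sum(occ) == P)


for P, L in [(12, 3), (5, 4), (3, 5), (0, 2)]:
    assert planck_count(P, L) == brute_force_count(P, L)
print(planck_count(12, 3))  # 91 microstates for 12 balls in 3 boxes
```

The brute-force side grows as (P+1)^L, so it is only a check for tiny systems; the closed form is what makes larger cases tractable.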

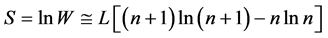

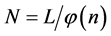

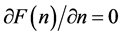

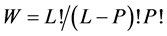

We designate  and apply the Stirling formula

and apply the Stirling formula , we obtain [11] an expression for the entropy S,

, we obtain [11] an expression for the entropy S,

(2)

(2)
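The accuracy of the Stirling step can be checked numerically. The sketch below assumes the entropy takes the form $S = L[(1+n)\ln(1+n) - n\ln n]$ with n = P/L, and compares it with the exact logarithm of $(P+L-1)!/(P!\,(L-1)!)$, computed through `math.lgamma` so that large factorials never overflow.

```python
import math


def exact_entropy(P, L):
    """ln[(P + L - 1)! / (P! (L - 1)!)] via log-gamma: lgamma(k + 1) = ln k!."""
    return math.lgamma(P + L) - math.lgamma(P + 1) - math.lgamma(L)


def stirling_entropy(P, L):
    """L[(1 + n) ln(1 + n) - n ln n] with n = P / L (Stirling approximation)."""
    n = P / L
    return L * ((1 + n) * math.log(1 + n) - n * math.log(n))


for P, L in [(12, 3), (120, 10), (1000, 1000), (10**6, 10**4)]:
    s_exact = exact_entropy(P, L)
    s_approx = stirling_entropy(P, L)
    rel_err = (s_approx - s_exact) / s_exact
    print(f"P={P:>7} L={L:>5}  exact={s_exact:12.3f}  "
          f"Stirling={s_approx:12.3f}  rel.err={rel_err:+.2%}")
```

For 12 balls in 3 boxes the approximation (about 7.51) overshoots $\ln 91 \approx 4.51$ by roughly two thirds, whereas for P = L = 1000 the relative error is about 0.3%.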

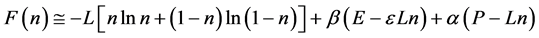

We designate the relative number of the boxes with n balls by  and therefore the number the different groups of boxes having n balls is

and therefore the number the different groups of boxes having n balls is , or,

, or,

(3)

(3)

where L and P of Equation’s (3) are the two constraints of the system. The left one is the constraint on the total number of boxes L, and the right one is the constraint on the total number of balls P.
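As a consistency check on the two constraints, the sketch below uses the exact ensemble-averaged fraction of boxes that hold n balls when all microstates are equally likely. By a standard stars-and-bars argument (our addition, not a formula from the paper), fixing one box at n balls leaves $\binom{P-n+L-2}{L-2}$ microstates out of $\binom{P+L-1}{L-1}$. Summed over n, these fractions give 1 (all L boxes), and their mean is P/L (all P balls).

```python
from fractions import Fraction
from math import comb


def exact_box_fraction(n, P, L):
    """Ensemble-averaged fraction of boxes holding exactly n balls when all
    microstates are equally likely (requires L >= 2).

    Fixing one box at n balls leaves P - n balls for the other L - 1 boxes."""
    return Fraction(comb(P - n + L - 2, L - 2), comb(P + L - 1, L - 1))


def constraint_sums(P, L):
    """Return (sum of rho(n), sum of n * rho(n)) over n = 0..P."""
    rho = [exact_box_fraction(n, P, L) for n in range(P + 1)]
    box_sum = sum(rho)                                # L * box_sum should equal L
    ball_sum = sum(n * r for n, r in enumerate(rho))  # L * ball_sum should equal P
    return box_sum, ball_sum


for P, L in [(12, 3), (20, 20), (120, 10)]:
    box_sum, ball_sum = constraint_sums(P, L)
    assert box_sum == 1 and L * ball_sum == P
    print(f"P={P}, L={L}: sum rho = {box_sum}, L * sum n*rho = {L * ball_sum}")
```

Exact fractions are used so that the two constraints hold with equality rather than up to rounding.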

Example 1 The Distribution of Balls in the Boxes: Zipf’s Law and Planck-Benford Law

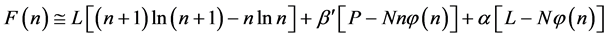

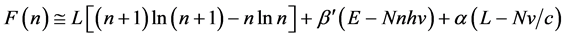

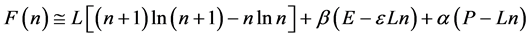

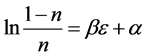

To write the Lagrange function we substitute the entropy from Equation (2) and add the constraints for P and L from Equation (3). The Lagrange equation

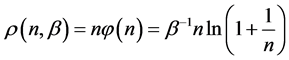

where

Since

where we designate

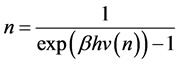

Equation (5) is the probabilistic Planck equation. It should be noted here that L is canceled out in the derivation and we remain with knowledge only about the boxes with balls. Where

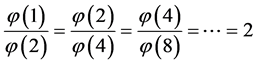

which is Zipf’s law. From Equation (5) it is seen that Zipf’s law is obtained when the number of balls in a box n is large. Zipf’s law is known for its outcome that $\rho(n)/\rho(2n) = 2$, which was first found in texts in many languages [6]: the most frequent word appears in long texts twice as many times as the second most frequent word, the second most frequent word appears twice as many times as the fourth most frequent word, and so forth.

Equation (5) can be written also in a different way, namely,

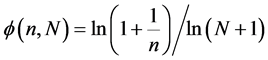

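To obtain the normalized relative frequency of a box with n balls, we divide the frequency by the sum of the frequencies of all the boxes with balls,

$$\rho_N(n) = \frac{\rho(n)}{\sum_{n=1}^{N}\rho(n)},$$

which yields

$$\rho_N(n) = \frac{\ln(1 + 1/n)}{\ln(1 + N)} \qquad (7)$$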

Choosing the value of N reflects a difficulty that arises from the subjective nature of entropy. If we go back to our example of 12 balls distributed in 3 boxes, N may be 3 or 12, depending on the question that we ask. If we want to know the distribution of balls in the 3 boxes, then $N = 3$.

We call Equation (7) the Planck-Benford law [3] [20]. It is worth noting that in Equation (7) the Lagrange multipliers cancel out in the normalization. This makes the law universal, as it is independent of the total number of balls and the total number of boxes. This is the reason why Benford’s law and Zipf’s law were discovered so many years ago. However, the Lagrange multiplier
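The normalization can be sketched in a few lines of Python. The code assumes Equation (7) reads $\rho_N(n) = \ln(1 + 1/n)/\ln(1 + N)$ for $n = 1, \dots, N$ (the form that reduces to Benford’s law at N = 9); the function name is ours. Because $\sum_{n=1}^{N}\ln(1+1/n) = \ln(N+1)$ telescopes, the frequencies sum to 1 for any N, and since $\ln(1 + 1/n) \approx 1/n$ for large n, the ratio $\rho(n)/\rho(2n)$ approaches Zipf’s value of 2.

```python
import math


def planck_benford(n, N):
    """Assumed form of Equation (7): ln(1 + 1/n) / ln(1 + N), for 1 <= n <= N."""
    if not 1 <= n <= N:
        raise ValueError("n must satisfy 1 <= n <= N")
    return math.log1p(1 / n) / math.log1p(N)


N = 12
freqs = [planck_benford(n, N) for n in range(1, N + 1)]
# The logarithms telescope: the sum of ln((n + 1) / n) for n = 1..N is ln(N + 1).
print(f"sum over n = 1..{N}: {sum(freqs):.12f}")
print(f"rho_12(1) = {freqs[0]:.3f}, rho_12(12) = {freqs[-1]:.3f}")

# Zipf limit: ln(1 + 1/n) ~ 1/n for large n, so rho(n) / rho(2n) -> 2.
big_N = 10**6
for n in (1, 10, 1000):
    print(n, planck_benford(n, big_N) / planck_benford(2 * n, big_N))
```

With N = 12 it gives about 27% for n = 1 and 3% for n = 12, the values quoted in the Discussion; the printed Zipf ratios rise from about 1.71 toward 2 as n grows.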

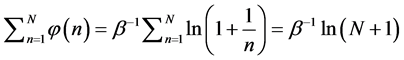

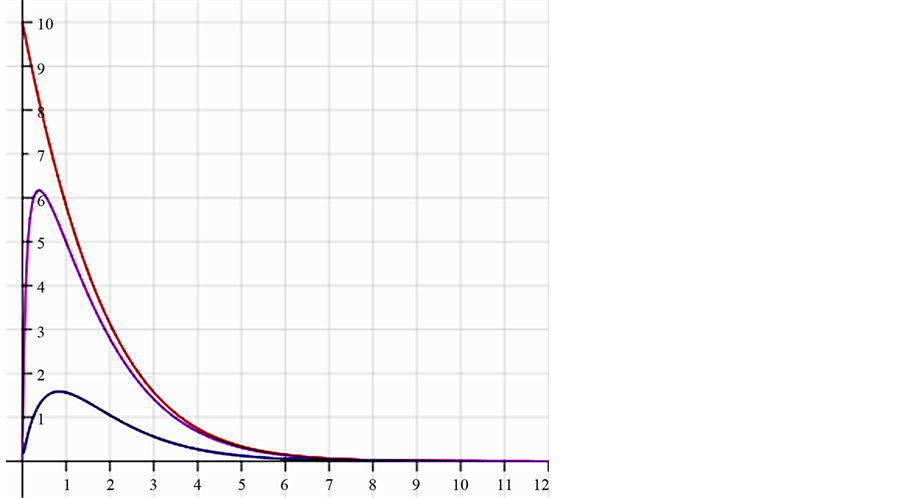

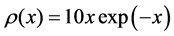

In Figure 1, the density distribution function

Zipf found his ratio of word frequencies in 1949 [6] [7]. He attributed the phenomenon to psychological arguments of efficiency. It is shown here that the reason is probability.

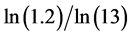

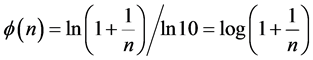

Example 2 Applications of Planck-Benford Law: Benford’s Law

When we substitute $N = 9$ into Equation (7), we obtain

$$\rho_9(n) = \frac{\ln(1 + 1/n)}{\ln 10} = \log_{10}\left(1 + \frac{1}{n}\right) \qquad (9)$$

Namely, Benford’s law is the maximum entropy distribution when the boxes (digits) may have 1, 2, 3, 4, 5, 6, 7, 8 or 9 balls in a box, see Figure 2. The Planck-Benford distribution excludes the empty boxes (the digit zero), as was explained previously, and this is why Benford’s law is erroneously attributed to the first digit, which is never 0. It is worth noting that Zipf’s law and Benford’s law are about normalized frequencies and are not density distributions. Usually we are interested in the density distribution function

Figure 1. The distribution of 20 balls in 20 boxes. The X axis is the number of balls in a box and the Y axis is the probability/density. The lower black curve is the normalized relative probability of the boxes with n balls [Equation (7)]. It is seen that boxes with a small number of balls are the most probable. The upper orange curve is the density distribution

Figure 2. The normalized relative frequencies of boxes with n balls where

It should be noted that N is the number of distinguishable boxes that have different numbers of balls. The number of boxes in the ensemble, which is the total number of digits in the file, is not relevant. Benford’s law was found in the normalized relative frequencies of occurrence of the first digits in logarithmic tables by Newcomb, who also guessed Equation (9) empirically in 1881 [8]. Later, Benford found it to hold in many random decimal data sets in 1938 [9].
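As an illustration (our choice of data set, not one used in the paper), the leading digits of the powers of two $2^1, \dots, 2^{1000}$ form a standard Benford-conforming sequence. The sketch compares their observed first-digit frequencies with Benford’s law, taken here as $\log_{10}(1 + 1/d)$, i.e. the Planck-Benford law with N = 9; the helper names are ours.

```python
import math
from collections import Counter


def benford(d):
    """Benford's law: probability that the leading decimal digit is d (1..9)."""
    return math.log10(1 + 1 / d)


def leading_digit(x):
    """First decimal digit of a positive integer."""
    return int(str(x)[0])


K = 1000
counts = Counter(leading_digit(2**k) for k in range(1, K + 1))
for d in range(1, 10):
    print(f"digit {d}: observed {counts[d] / K:.3f}  Benford {benford(d):.3f}")
```

The observed frequency of a leading 1 is close to $\log_{10} 2 \approx 0.301$, and the other digits track the law similarly; note that only the digits 1 to 9 appear, matching the exclusion of empty boxes.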

Example 3 Applications of Planck-Benford Law: Prior Distributions of Polls

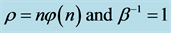

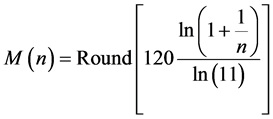

Equation (7) may be useful for predicting the prior distribution of polls. For example, in the Israeli parliament there were (eventually) 10 parties competing for 120 seats in the March 2015 election. We can use Equation (7) to find the equilibrium distribution of seats among the ten parties. This problem is different from Benford’s law. Here, the number of different boxes is equal to the number of boxes. In Benford’s law we ask what the normalized frequency of boxes having n balls is; in polls we ask how the seats will be divided among the parties. Here we take $N = 10$, the number of parties. Therefore, the number of seats of the nth party is calculated by

$$120\,\rho_{10}(n) = 120\,\frac{\ln(1 + 1/n)}{\ln 11}$$

Figure 3 shows that the distribution of seats in the Israeli parliament follows the Planck-Benford law, which indicates that no major forgery was made. It is worth noting that no free parameter was used in this calculation.
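The seat calculation is easy to reproduce. This sketch assumes the formula $120\,\ln(1 + 1/n)/\ln 11$ for the party ranked n, i.e. the Planck-Benford law with N = 10; the function name is ours, and it returns fractional seats rather than applying any rounding rule.

```python
import math


def planck_benford_seats(seats, parties):
    """Prior seat allocation: the party ranked n gets
    seats * ln(1 + 1/n) / ln(1 + parties)."""
    norm = math.log1p(parties)
    return [seats * math.log1p(1 / n) / norm for n in range(1, parties + 1)]


prediction = planck_benford_seats(120, 10)
for rank, s in enumerate(prediction, start=1):
    print(f"party {rank:2d}: {s:5.1f} seats")
print(f"total: {sum(prediction):.1f}")
```

The predicted allocations are about 34.7, 20.3, 14.4, 11.2, 9.1, 7.7, 6.7, 5.9, 5.3 and 4.8 seats, and they add up to exactly 120 because the logarithms telescope. Converting them to whole seats would need an additional apportionment rule (e.g. largest remainder), which is a separate modeling choice.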

Example 4 Planck Law and Planck-Einstein Assumption

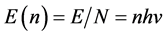

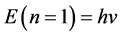

We have derived the probabilistic Planck equation, Equation (5), for pure logical entities that we call balls. Now let us assume that each ball has an amount of energy

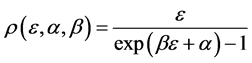

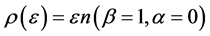

which yields the famous Planck equation,

The present probabilistic outcome of the Planck-Einstein assumption that $\varepsilon = h\nu$

When the modes have the same energy, a smaller number of balls in a box means more energy per ball, and since boxes with a smaller number of balls appear more frequently, the frequency of the radiation is higher. This means that Planck’s empirical assumption that the higher the frequency, the higher the photon energy, which he invoked to obtain the blackbody radiation formula, namely $\varepsilon = h\nu$,

Figure 3. The distribution of 120 seats among 10 parties. The right blue bars are the Planck-Benford distribution and the left orange bars are the actual distribution of seats.
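For comparison with the textbook form, the sketch below evaluates Planck’s mean occupation number of a radiation mode, $\bar n = 1/(e^{h\nu/kT} - 1)$. This is the standard physics result, not a reconstruction of the paper’s own intermediate equations, and it uses the exact SI values of h and k. In the limit $h\nu \ll kT$ it behaves as $kT/(h\nu)$, inversely proportional to frequency, which parallels the 1/n (Zipf) behaviour that the text obtains for large n.

```python
import math

H = 6.62607015e-34   # Planck constant, J s (exact SI value)
K_B = 1.380649e-23   # Boltzmann constant, J/K (exact SI value)


def planck_occupancy(nu, T):
    """Mean number of photons in a mode of frequency nu (Hz) at temperature T (K)."""
    x = H * nu / (K_B * T)
    return 1.0 / math.expm1(x)  # expm1 keeps precision when x is small


T = 300.0
for nu in (1e9, 1e11, 1e12, 1e13, 1e14):
    n_bar = planck_occupancy(nu, T)
    classical = K_B * T / (H * nu)  # Rayleigh-Jeans limit, valid for h*nu << k*T
    print(f"nu = {nu:.0e} Hz  n = {n_bar:.4g}  kT/(h nu) = {classical:.4g}")
```

At 300 K the two columns agree closely in the microwave range and separate sharply in the infrared, where the occupancy falls off exponentially.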

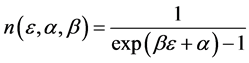

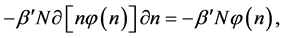

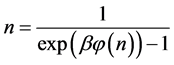

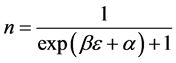

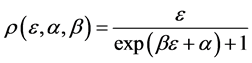

Example 5 Balls with Property: Bose-Einstein Distribution

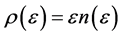

Now let us assume that the balls are static and have a simple property

We ask that

and obtain

and the property distribution function

Equation (13) is the Bose-Einstein distribution. We saw that photons, which are eventually pure energy, behave like balls in boxes. However, objects in which the number of balls and their energy are constrained independently have a chemical potential.

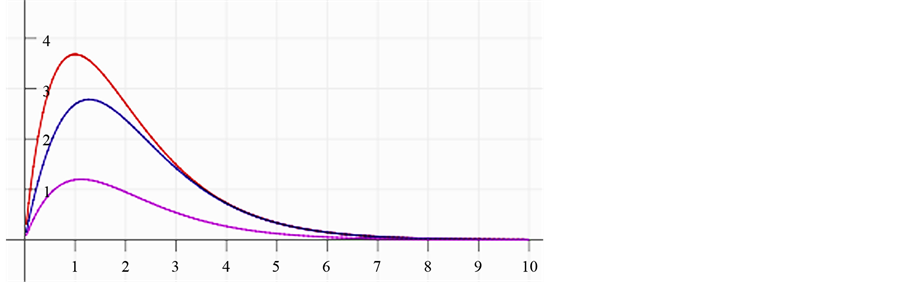

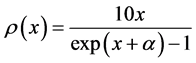

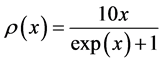

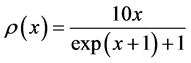

In Figure 4 we provide three plots of Equation (13), for

In the plots the functions are multiplied by an arbitrary factor of 10, namely the curves are

The upper plot of Figure 1, the upper plot of Figure 4 and the blackbody radiation plot that appears in textbooks look entirely different. These differences deserve an explanation. In Figure 1 we plotted

In most textbooks the energy flux of the radiation is plotted, and therefore the density function is multiplied by the number of radiation modes in a given volume. The number of modes N in a given volume is proportional to

Figure 4. The upper red curve is the Planck distribution, and the purple and blue curves below are Bose-Einstein distributions with finite chemical potentials.

In the lower graphs of Figure 4 we see that the chemical potential has a dramatic effect: it makes the monotonically decreasing Planck function similar to the Maxwell-Boltzmann function.

Bose-Einstein distribution was suggested by Bose in 1924 [12] and by Einstein in 1925 [13] .

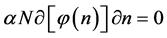

4. Sparse Systems

Example 6 Fermi-Dirac and Maxwell-Boltzmann Distributions

Now we look at a system in which no more than one ball can exist in a box. The number of configurations is given by

$$\Omega = \frac{L!}{P!\,(L-P)!}$$
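With at most one ball per box, a configuration is just a choice of P occupied boxes out of L, so the count is the binomial coefficient $L!/(P!\,(L-P)!)$. The sketch below (helper names are ours) verifies this by enumerating the sets of occupied boxes, and also shows that for $P \ll L$ the count approaches $L^P/P!$, the classical dilute-limit counting.

```python
import math
from itertools import combinations


def sparse_count(P, L):
    """Ways to place P indistinguishable balls in L distinguishable boxes
    with at most one ball per box: L! / (P! (L - P)!)."""
    return math.comb(L, P)


def sparse_brute_force(P, L):
    """Enumerate the sets of occupied boxes directly."""
    return sum(1 for _ in combinations(range(L), P))


for P, L in [(0, 5), (2, 5), (3, 10), (10, 10)]:
    assert sparse_count(P, L) == sparse_brute_force(P, L)

# For P << L the count approaches L**P / P!, the dilute-limit counting.
P, L = 3, 10**6
print(sparse_count(P, L) / (L**P / math.factorial(P)))
```

The printed ratio is within a few parts per million of 1, which is the regime in which the sparse statistics reduce to the classical form.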

We ask that

We obtain

When

We see in Figure 5 that the three curves are very similar and can easily be confused.
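The similarity can be illustrated with the standard textbook occupancies, assumed here rather than taken from the equations of this section, written as functions of $x = (\varepsilon - \mu)/kT$: Fermi-Dirac $1/(e^x + 1)$, Bose-Einstein $1/(e^x - 1)$ and Maxwell-Boltzmann $e^{-x}$. Their ratios differ from 1 by roughly $e^{-x}$, so once x is a few units the curves nearly coincide.

```python
import math


def fermi_dirac(x):
    """Mean occupancy 1 / (e^x + 1), with x = (energy - mu) / kT."""
    return 1.0 / (math.exp(x) + 1.0)


def bose_einstein(x):
    """Mean occupancy 1 / (e^x - 1); defined for x > 0."""
    return 1.0 / math.expm1(x)


def maxwell_boltzmann(x):
    """Mean occupancy e^(-x)."""
    return math.exp(-x)


for x in (0.5, 1, 2, 3, 5, 8):
    fd, mb, be = fermi_dirac(x), maxwell_boltzmann(x), bose_einstein(x)
    print(f"x = {x:<4} FD = {fd:.5f}  MB = {mb:.5f}  BE = {be:.5f}")
```

For every positive x the Fermi-Dirac value lies below and the Bose-Einstein value above the Maxwell-Boltzmann one; the gap is large only near x = 0.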

The Maxwell-Boltzmann distribution was first suggested by Maxwell in 1860 [14]. The Fermi-Dirac distribution was suggested by Fermi and Dirac in 1926 [15] [16].

5. Discussion

The formalism of maximizing the entropy using the Lagrange multipliers technique is widely used in statistical mechanics to find thermal equilibrium energy distributions, e.g. [4]. An ensemble with a fixed number of particles, a fixed energy and a fixed volume is called a microcanonical ensemble. Here we strip the energy and volume of their physical identities and are left only with balls and boxes obeying the statistical second law, namely, equal probabilities for the boxes and equal probabilities for all the configurations of the balls in the boxes. An equal probability for every box yields an equal distribution of particles in the boxes. However, an equal distribution is not a maximum entropy solution. In nature, a statistical system tends to reach the equilibrium distribution, in which entropy is maximal. To maximize the entropy, one needs to add the requirement of equal probability for the microstates.

Zipf’s law was found by Zipf in the frequency of words in texts in many languages. Benford’s law, also known as the “first digit law”, was found in the first digits of logarithmic tables (Benford’s law is true for any digit in a random decimal file, regardless of its location). Many scientific papers have been published about these distributions, which are, in fact, merely different outcomes of the old Planck law. Benford’s law is used for fraud detection in tax reports [21]. Similarly, the Planck-Benford law described in this article can be used for fraud detection in elections and surveys, in addition to its predictive value. Moreover, if different companies compete in the same market, we can check whether there is monopolistic power that distorts the equilibrium distribution obtained from the Planck-Benford law. If this theory were applied in economics, each corporation would be able to calculate the equilibrium distribution of salaries among its employees. If the compensation distribution fits equilibrium, the employees will probably accept the salary gaps. If the salary distribution fits equilibrium, then

A surprising result of this theory is that by adding a property to the balls we obtain a chemical potential. In physics, the chemical potential is known as the change in potential energy due to a chemical reaction or phase transition. In fact, the chemical potential is an outcome of the constraint on the number of boxes. Increasing

It should be mentioned that Planck made the historic assumption [11] that the energy of a photon is quantized; namely, the energy of a radiation mode is $nh\nu$.

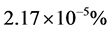

It is important to note that in all the derivations presented in this paper we use Stirling formula. The Stirling formula yields inaccurate values for small numbers. In the example of 12 balls distributed randomly in 3 boxes

Figure 5. The upper red distribution is the Maxwell-Boltzmann, the blue distribution below is the Fermi-Dirac without chemical potential, and the purple distribution is the Fermi-Dirac with a chemical potential. All these distributions look similar.

that was presented previously, the exact combinatorial calculation shows that the empty box has the highest probability (14%), the second most probable is a box with one ball (13%), and the least expected is a box with 12 balls (1%). However, using Planck-Benford law we obtain 27% for box with 1 ball, 3% for box with 12 balls and no value for empty boxes. The reason for this discrepancy is that we have not constrained the number of boxes in the entropy maximization process. The Planck-Benford law is independent of the number of boxes. If we use combinatorial calculation for 12 balls in 3 boxes, or in 4 boxes or in 100 boxes we will obtain different values for any number of boxes. Planck-Benford law is universal in this aspect, however we give up the knowledge of the probability of empty boxes and accurate values for small number of boxes and balls.
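The dependence on the number of boxes can be seen directly. This sketch computes the exact probability that a given box holds n balls, $\binom{P-n+L-2}{L-2}/\binom{P+L-1}{L-1}$ (a stars-and-bars formula we add for illustration), for 12 balls in 3, 4 and 100 boxes, and compares it with the Planck-Benford value $\ln(1+1/n)/\ln 13$ for N = 12, which does not involve L at all. The function names are ours.

```python
import math
from fractions import Fraction


def exact_box_probability(n, P, L):
    """Exact probability that a given box holds n balls when all
    (P + L - 1)! / (P! (L - 1)!) microstates are equally likely (L >= 2)."""
    return Fraction(math.comb(P - n + L - 2, L - 2), math.comb(P + L - 1, L - 1))


def planck_benford(n, N):
    """ln(1 + 1/n) / ln(1 + N); independent of the number of boxes."""
    return math.log1p(1 / n) / math.log1p(N)


P = 12
for L in (3, 4, 100):
    row = ", ".join(f"n={n}: {float(exact_box_probability(n, P, L)):.1%}"
                    for n in (0, 1, 12))
    print(f"exact, L={L:3d}: {row}")
print("Planck-Benford (N=12): "
      + ", ".join(f"n={n}: {planck_benford(n, 12):.1%}" for n in (1, 12)))
```

For 3 boxes it recovers the 14%, 13% and 1% figures quoted above; for 4 and 100 boxes the exact probability of an empty box becomes 20% and about 89%, while the Planck-Benford values stay at about 27% and 3%.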

6. Summary

We present a simple and effective way to calculate many real-life probabilities and distributions such as votes in election polls, market share distributions among competing companies, wealth distributions among populations and many others. By superseding classic probability theory with the maximum entropy principle we are able to calculate these complex distributions easily, and empirical real-life results strongly corroborate this novel approach. In addition, it is shown here that this new approach yields the well-known distributions of Zipf’s Law (with Pareto 80:20 law being its outcome), Benford’s Law (which is the unequal distribution of digits in random data files), as well as energy distributions among particles in physics. This paper unifies everyday probability calculations done by surveyors, gamblers and economists with the calculations done by physicists, therefore enabling the applications of the methodology of statistical physics in economics and the life sciences.

Acknowledgements

We want to thank H. Kafri and Y. Kafri for many comments. Special thanks to Alex E. Kossovsky for many comments and especially for the combinatorial calculations of balls and boxes.

Cite this paper

Oded Kafri (2016) A Novel Approach to Probability. Advances in Pure Mathematics, 6, 201-211. doi: 10.4236/apm.2016.64017

References

- 1. Stark, P.B. The Law of Large Numbers. http://stat-www.berkeley.edu/~stark/Java/lln.htm

- 2. Stein, C. (1972) A Bound for the Error in the Normal Approximation to the Distribution of a Sum of Dependent Random Variables. Proceedings of the Sixth Berkeley Symposium on Mathematical Statistics and Probability, 583-602.

- 3. See, for example, Kafri, O. and Kafri, H. (2013) Entropy—God’s Dice Game. CreateSpace, 208-210. http://www.entropy-book.com/

- 4. Tribus, M. and Levine, R.D., Eds. (1979) The Maximum Entropy Formalism. MIT Press, Cambridge.

- 5. Shannon, C.E. (1948) A Mathematical Theory of Communication. Bell System Technical Journal, 27, 379-423, 623-656.

- 6. Zipf, G.K. (1949) Human Behavior and the Principle of Least-Effort. Addison-Wesley, Boston.

- 7. Gabaix, X. (1999) Zipf’s Law for Cities: An Explanation. The Quarterly Journal of Economics, 114, 739-767. http://dx.doi.org/10.1162/003355399556133

- 8. Newcomb, S. (1881) Note on the Frequency of Use of the Different Digits in Natural Numbers. American Journal of Mathematics, 4, 39-40. http://dx.doi.org/10.2307/2369148

- 9. Benford, F. (1938) The Law of Anomalous Numbers. Proceedings of the American Philosophical Society, 78, 551-572.

- 10. Kossovsky, A.E. (2015) Benford’s Law. World Scientific Publishing, Singapore.

- 11. Planck, M. (1901) On the Law of Distribution of Energy in the Normal Spectrum. Annalen der Physik, 4, 553-562. http://dx.doi.org/10.1002/andp.19013090310

- 12. Bose, S.N. (1924) Plancks Gesetz und Lichtquantenhypothese. Zeitschrift für Physik, 26, 178. http://dx.doi.org/10.1007/BF01327326

- 13. Einstein, A. (1925) Quantentheorie des einatomigen idealen Gases. Sitzungsberichte der Preussischen Akademie der Wissenschaften, 1, 3.

- 14. Maxwell, J.C. (1860) Illustrations of the Dynamical Theory of Gases. Part I. On the Motions and Collisions of Perfectly Elastic Spheres. Philosophical Magazine Series 4, 19, 19-32.

- 15. Fermi, E. (1926) Sulla quantizzazione del gas perfetto monoatomico. Rendiconti Lincei, 3, 145-149. (In Italian)

- 16. Dirac, P.A.M. (1926) On the Theory of Quantum Mechanics. Proceedings of the Royal Society, Series A, 112, 661-677. http://dx.doi.org/10.1098/rspa.1926.0133

- 17. Ullah, A. (1996) Entropy, Divergence and Distance Measures with Econometric Applications. Journal of Statistical Planning and Inference, 49, 141.

- 18. http://en.wikipedia.org/wiki/Maximum_entropy_probability_distribution

- 19. Beck, C. (2009) Generalised Information and Entropy Measures in Physics. Contemporary Physics, 50, 495-510. http://dx.doi.org/10.1080/00107510902823517

- 20. Kafri, O. (2009) The Distributions in Nature and Entropy Principle. http://arxiv.org/abs/0907.4852

- 21. Nigrini, M. (1996) A Taxpayer Compliance Application of Benford’s Law. The Journal of the American Taxation Association, 18, 72-91.

- 22. Kafri, O. (2014) Money, Information and Heat in Networks Dynamics. Mathematical Finance Letters, 2014, Article ID: 4.

- 23. Kafri, O. (2014) Follow the Multitude—A Thermodynamic Approach. Natural Science, 6, 528-531. http://dx.doi.org/10.4236/ns.2014.67051