Advances in Pure Mathematics

Vol.06 No.01(2016), Article ID:62839,9 pages

10.4236/apm.2016.61002

Wavelet-Based Density Estimation in Presence of Additive Noise under Various Dependence Structures

N. Hosseinioun

Statistics Department, Payame Noor University, 19395-4697 Tehran, Iran

Copyright © 2016 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 4 December 2015; accepted 16 January 2016; published 19 January 2016

ABSTRACT

We study the following model: $Y = X + \varepsilon$. The aim is to estimate the distribution of X when only $Y_1, \ldots, Y_n$ are observed. In the classical model, the distribution of $\varepsilon$ is assumed to be known, and this is often considered an important drawback of this simple model. Indeed, in most practical applications, the distribution of the errors cannot be perfectly known. In this paper, the author constructs wavelet estimators and analyzes their asymptotic mean integrated squared error for additive noise models under certain dependence conditions: the strong mixing case, the β-mixing case and the ρ-mixing case. Under mild conditions on the family of wavelets, the estimator is shown to be $L^2$-consistent and fast rates of convergence are established.

Keywords:

Additive Noise, Density Estimation, Dependent Sequence, Rate of Convergence, Wavelet

1. Introduction

In practical situations, direct data are not always available. One of the classical models is described as follows:

$$Y_i = X_i + \varepsilon_i, \quad i = 1, \ldots, n,$$

where $X_i$ stands for the random samples with unknown density $f_X$ and $\varepsilon_i$ denotes the i.i.d. random noise with density g. Estimating the density $f_X$ is a deconvolution problem. Among the nonparametric methods of deconvolution, one can find estimation by model selection (e.g. Comte, Rozenholc and Taupin [1]), wavelet thresholding (e.g. Fan and Koo [2]), kernel smoothing (e.g. Carroll and Hall [3]), and spline deconvolution or spectral cut-off (e.g. Johannes [4]); Meister [5] focuses on the effect of noise misspecification. However, a problem frequently encountered is that the proposed estimator is not everywhere positive, and therefore is not a valid probability density.
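The deconvolution structure of the additive model can be checked numerically. The following is a minimal sketch, assuming a standard normal signal X and standard Laplace noise independent of X; both distributions are illustrative assumptions, and the helper names `laplace` and `empirical_cf` are hypothetical. It compares the empirical characteristic function of Y = X + ε with the product of the two theoretical characteristic functions, exp(-t^2/2) and 1/(1 + t^2).

```python
import cmath
import math
import random

random.seed(0)
n = 20000


def laplace():
    # Standard Laplace draw: an Exp(1) magnitude with a random sign.
    magnitude = random.expovariate(1.0)
    return magnitude if random.random() < 0.5 else -magnitude


# Assumed setting (not taken from the paper): X ~ N(0, 1), noise ~ Laplace(0, 1).
x = [random.gauss(0.0, 1.0) for _ in range(n)]
eps = [laplace() for _ in range(n)]
y = [xi + ei for xi, ei in zip(x, eps)]  # only these values would be observed


def empirical_cf(sample, t):
    # Empirical characteristic function (1/n) * sum_i exp(i * t * s_i).
    return sum(cmath.exp(1j * t * s) for s in sample) / len(sample)


ts = [0.0, 0.5, 1.0, 1.5, 2.0]
errors = []
for t in ts:
    # Independence of X and the noise makes the characteristic function factorise.
    product = math.exp(-t * t / 2.0) / (1.0 + t * t)
    errors.append(abs(empirical_cf(y, t) - product))

max_error = max(errors)
print(max_error)
```

Recovering the characteristic function of X from that of Y requires dividing by the noise factor 1/(1 + t^2), which amplifies sampling error at large |t|. This is why the decay of the Fourier transform of g enters the assumptions on the model.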

Sometimes, this problem can be circumvented by repeated observations of the same variable of interest, each time with an independent error. This is the model of panel data (see for example Li and Vuong [6], Delaigle, Hall and Meister [7], or Neumann [8] and references therein). On the other hand, there are many application fields where it is not possible to take repeated measurements of the same variable. In that case, information about the error distribution can be drawn from an additional experiment: a training set is used by experimenters to estimate the noise distribution. If $\varepsilon$ is thought of as a measurement error due to the measuring device, then preliminary calibration measurements can be obtained in the absence of any signal X (this is often called the instrument line shape of the measuring device).

Odiachi and Prieve [9] study the effect of additive noise in Total Internal Reflection Microscopy (TIRM) experiments. This is an optical technique for monitoring Brownian fluctuations in the separation between a single microscopic sphere and a flat plate in an aqueous medium. See Carroll and Hall [3], Devroye [10], Fan [11], Liu and Taylor [12], Masry [13], Stefanski and Carroll [14], Zhang [15], Hesse [16], Cator [17] and Delaigle and Gijbels [18] for mainly kernel methods, Koo [19] for a spline method, Efromovich [20] for a particular strategy in the supersmooth case and Meister [5] for the effect of noise misspecification.

In this paper, we extend Geng and Wang [21] (Theorems 4.1 and 4.2) to certain dependent settings. More precisely, we prove that the linear wavelet estimator attains the standard rate of convergence, i.e. the optimal one with additive noise, under more realistic and standard dependence conditions: polynomial strong mixing dependence, β-mixing dependence and ρ-mixing dependence. The properties of the wavelet basis allow us to apply sharp probabilistic inequalities which improve the performance of the considered linear wavelet estimator.

The organization of the paper is as follows. The assumptions on the model and the estimation procedure are presented in Section 2. Section 3 is devoted to a general result on our linear wavelet estimator. Applications are set in Section 4, while technical proofs are collected in Section 5.

2. Estimation Procedure

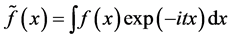

The Fourier transform of an integrable function is defined as follows:

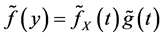

It is well known that  for . Let N be a positive integer. We assume that there exist constants  and

One can easily find an example

which is the Laplace density and

We consider an orthonormal wavelet basis generated by dilations and translations of a father Daubechies-type wavelet and a mother Daubechies-type wavelet of the family db2N (see [22]). Further details on wavelet theory can be found in Daubechies [22] and Meyer [23]. For any

With appropriate treatments at the boundaries, there exists an integer

forms an orthonormal basis of

where

with the usual modifications if

Tsybakov [24] . We define the linear wavelet estimator

where

Such an estimator is standard in nonparametric estimation via wavelets. For a survey on wavelet linear estimators in various density models, we refer to [25] . Note that by Plancherel formula, we have

In 1999, Pensky and Vidakovic [26] investigated Meyer wavelet estimation over Sobolev spaces and

Our work is related to the paper of Geng and Wang [21] , since our estimator is similar and we borrow a useful Lemma from that study. Geng and Wang [21] prove that, under mild conditions on the family of wavelets, the estimators are shown to be

3. Optimality Results

The main result of the paper is the upper bound for the mean integrated squared error (MISE) of the wavelet estimator

We refer to [24] and [30] for a detailed coverage of wavelet theory in statistics. The asymptotic performance of our estimator is evaluated by determining an upper bound of the MISE over Besov balls. It is obtained as sharp as possible and coincides with the one related to the standard i.i.d. framework.
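To make the notions of a linear wavelet estimator and its integrated squared error concrete, here is a minimal sketch under simplifying assumptions that differ from the paper's setting: the Haar father wavelet replaces the Daubechies family, the observations are noise-free (no deconvolution step), and the data follow a Beta(2, 2) density on [0, 1]. All names (`alpha_hat`, `f_hat`, `f_true`, `ise`) are hypothetical. The coefficients are the empirical means of $\phi_{j,k}(X_i)$ with $\phi_{j,k}(x) = 2^{j/2}\,\mathbf{1}\{k \le 2^j x < k + 1\}$.

```python
import random

random.seed(1)
n, j = 5000, 4            # sample size and resolution level (illustrative)
n_bins = 2 ** j           # number of Haar scaling functions on [0, 1)
scale = 2 ** (j / 2)      # normalising factor of phi_{j,k}

# Assumed noise-free sample from the Beta(2, 2) density 6x(1 - x).
samples = [random.betavariate(2, 2) for _ in range(n)]

# alpha_hat[k] = (1/n) * sum_i phi_{j,k}(X_i)
counts = [0] * n_bins
for s in samples:
    counts[min(int(s * n_bins), n_bins - 1)] += 1
alpha_hat = [scale * c / n for c in counts]


def f_hat(x):
    # Linear estimator: sum_k alpha_hat[k] * phi_{j,k}(x); one term is non-zero.
    k = min(int(x * n_bins), n_bins - 1)
    return scale * alpha_hat[k]


def f_true(x):
    return 6.0 * x * (1.0 - x)


# Midpoint-rule approximation of the integrated squared error on [0, 1].
grid = [(m + 0.5) / 4096 for m in range(4096)]
ise = sum((f_hat(x) - f_true(x)) ** 2 for x in grid) / len(grid)
mass = sum(f_hat(x) for x in grid) / len(grid)
print(ise, mass)
```

The quantity `ise` is the integrated squared error for one sample; averaging it over many independent samples would approximate the MISE. The level `j` trades approximation error, which decreases with `j`, against variance, which grows roughly like $2^j / n$.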

Theorem 3.1. Consider

a) there exist constants

b) for any

Let

Naturally, the rate of convergence in Theorem 3.1 is obtained to be as sharp as possible.

4. Applications

The three following subsections investigate separately the strong mixing case, the ρ-mixing case and the β-mixing case, which occur in a large variety of applications.

4.1. Application to the Strong Mixing Dependence

We define the m-th strong mixing coefficient of

where

Applications of strong mixing can be found in [15], [31] and [32]. Among the various mixing conditions used in the literature, α-mixing has many practical applications. Many stochastic processes and time series are known to be α-mixing. Under certain weak assumptions, autoregressive and, more generally, bilinear time series models are strongly mixing with exponentially decaying mixing coefficients. The α-mixing dependence is reasonably weak; it is satisfied by a wide variety of models including Markov chains, GARCH-type models and discretely observed diffusions.
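As a rough illustration of this kind of weak dependence, the sketch below simulates a Gaussian AR(1) process $X_t = a X_{t-1} + e_t$ and compares its sample autocorrelations with the geometric values $a^m$. The coefficient `a`, the sample size and the helper name `autocorr` are assumptions made for the example. The autocorrelation is only a proxy for the decay of dependence; the α-mixing coefficient itself is not computed here.

```python
import random

random.seed(2)
a, n = 0.5, 50000   # AR coefficient and sample size (illustrative choices)

# Simulate X_t = a * X_{t-1} + e_t with standard normal innovations.
x = [0.0] * n
for t in range(1, n):
    x[t] = a * x[t - 1] + random.gauss(0.0, 1.0)

mean = sum(x) / n
centered = [v - mean for v in x]
var = sum(v * v for v in centered) / n


def autocorr(m):
    # Sample autocorrelation at lag m.
    return sum(centered[t] * centered[t + m] for t in range(n - m)) / (n * var)


lags = [1, 2, 3, 4, 5]
acf = {m: autocorr(m) for m in lags}
# For a stationary AR(1) process the theoretical autocorrelation at lag m is a**m.
max_gap = max(abs(acf[m] - a ** m) for m in lags)
print(acf, max_gap)
```

The dependence between observations m steps apart shrinks geometrically with m, which matches the exponential decay mentioned above for autoregressive models.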

Proposition 4.1. Consider the strong mixing case as defined above. Suppose that there exist two constants

then

4.2. Application to the ρ-Mixing Dependence

Let

where

Proposition 4.2. Consider the ρ-mixing case as defined above. Furthermore, there exist two constants

then

4.3. Application to the β-Mixing Dependence

Let

where the supremum is taken over all finite partitions

Full details can be found in e.g. [29] [31] [33] and [34] .

Proposition 4.3. Consider the β-mixing case as defined above. Furthermore, there exist two constants

then

5. Proofs

In this section, we investigate the results of Section 3 under the assumptions of Section 4.

Moreover, C denotes any constant that does not depend on l, k and n.

Proof of Theorem 3.1. Since we set

Following the lines of Geng and Wang [21], with the Plancherel formula, it is easy to see that

where

and

on the other hand, it follows from the stationarity of

where

For the upper bound of

By (6) and inequality obtained in Lemma 6 in [2] , we have,

Therefore

It follows from (5) that

Therefore, combining (7) to (11), we obtain

On the other hand, as we define

It follows from (13) and (14) and the assumption on

Now the proof of Theorem 3.1 is complete.

Proof of Proposition 4.1. We apply the Davydov inequality for strongly mixing processes (see [29]); for any

Since we have

therefore

Now the proof is finished by (14), (15) and (16).

Proof of Proposition 4.2. Applying the covariance inequality for ρ-mixing processes (see Doukhan [29]), we have

Hence by the same technique we use in (8), we obtain

Proof of Proposition 4.3. Since

where b is a function such that

Cite this paper

N.Hosseinioun, (2016) Wavelet-Based Density Estimation in Presence of Additive Noise under Various Dependence Structures. Advances in Pure Mathematics,06,7-15. doi: 10.4236/apm.2016.61002

References

- 1. Comte, F., Rozenholc, Y. and Taupin, M.-L. (2006) Penalized Contrast Estimator for Density Deconvolution. The Canadian Journal of Statistics, 34, 431-452. http://dx.doi.org/10.1002/cjs.5550340305

- 2. Fan, J. and Koo, J.Y. (2002) Wavelet Deconvolution. IEEE Transactions on Information Theory, 48, 734-747. http://dx.doi.org/10.1109/18.986021

- 3. Carroll, R.J. and Hall, P. (1988) Optimal Rates of Convergence for Deconvolving a Density. Journal of the American Statistical Association, 83, 1184-1186. http://dx.doi.org/10.1080/01621459.1988.10478718

- 4. Johannes, J. (2009) Deconvolution with Unknown Error Distribution. Annals of Statistics, 37, 2301-2323. http://dx.doi.org/10.1214/08-AOS652

- 5. Meister, A. (2004) On the Effect of Misspecifying the Error Density in a Deconvolution. Canadian Journal of Statistics, 32, 439-449.

- 6. Li, T. and Vuong, Q. (1998) Nonparametric Estimation of the Measurement Error Model Using Multiple Indicators. Journal of Multivariate Analysis, 65, 139-165. http://dx.doi.org/10.1006/jmva.1998.1741

- 7. Delaigle, A., Hall, P. and Meister, A. (2008) On Deconvolution with Repeated Measurements. Annals of Statistics, 36, 665-685. http://dx.doi.org/10.1214/009053607000000884

- 8. Neumann, M.H. (2007) Deconvolution from Panel Data with Unknown Error Distribution. Journal of Multivariate Analysis, 98, 1955-1968. http://dx.doi.org/10.1016/j.jmva.2006.09.012

- 9. Odiachi, P. and Prieve, D. (2004) Removing the Effects of Additive Noise in TIRM. Journal of Colloid and Interface Science, 270, 113-122. http://dx.doi.org/10.1016/S0021-9797(03)00548-4

- 10. Devroye, L. (1989) Consistent Deconvolution in Density Estimation. Canadian Journal of Statistics, 17, 235-239. http://dx.doi.org/10.2307/3314852

- 11. Fan, J. (1991) On the Optimal Rates of Convergence for Nonparametric Deconvolution Problems. Annals of Statistics, 19, 1257-1272. http://dx.doi.org/10.1214/aos/1176348248

- 12. Liu, M.C. and Taylor, R.L. (1989) A Consistent Nonparametric Density Estimator for the Deconvolution Problem. Canadian Journal of Statistics, 17, 427-438. http://dx.doi.org/10.2307/3315482

- 13. Masry, E. (1991) Multivariate Probability Density Deconvolution for Stationary Random Processes. IEEE Transactions on Information Theory, 37, 1105-1115. http://dx.doi.org/10.1109/18.87002

- 14. Stefanski, L. and Carroll, R.J. (1990) Deconvoluting Kernel Density Estimators. Statistics, 21, 169-184. http://dx.doi.org/10.1080/02331889008802238

- 15. Zhang, C.-H. (1990) Fourier Methods for Estimating Mixing Densities and Distributions. Annals of Statistics, 18, 806-831. http://dx.doi.org/10.1214/aos/1176347627

- 16. Hesse, C.H. (1999) Data-Driven Deconvolution. Journal of Nonparametric Statistics, 10, 343-373. http://dx.doi.org/10.1080/10485259908832766

- 17. Cator, E.A. (2001) Deconvolution with Arbitrarily Smooth Kernels. Statistics & Probability Letters, 54, 205-214. http://dx.doi.org/10.1016/s0167-7152(01)00083-9

- 18. Delaigle, A. and Gijbels, I. (2004) Bootstrap Bandwidth Selection in Kernel Density Estimation from a Contaminated Sample. Annals of the Institute of Statistical Mathematics, 56, 19-47. http://dx.doi.org/10.1007/BF02530523

- 19. Koo, J.-Y. (1999) Logspline Deconvolution in Besov Space. Scandinavian Journal of Statistics, 26, 73-86. http://dx.doi.org/10.1111/1467-9469.00138

- 20. Efromovich, S. (1997) Density Estimation for the Case of Super-Smooth Measurement Error. Journal of the American Statistical Association, 92, 526-535. http://dx.doi.org/10.1080/01621459.1997.10474005

- 21. Geng, Z. and Wang, J. (2015) The Mean Consistency of Wavelet Density Estimators. Journal of Inequalities and Applications, 2015, 111. http://dx.doi.org/10.1186/s13660-015-0636-1

- 22. Daubechies, I. (1992) Ten Lectures on Wavelets. CBMS-NSF Regional Conference Series in Applied Mathematics. http://dx.doi.org/10.1137/1.9781611970104

- 23. Meyer, Y. (1992) Wavelets and Operators. Cambridge University Press, Cambridge.

- 24. Härdle, W., Kerkyacharian, G., Picard, D. and Tsybakov, A. (1997) Wavelets, Approximation and Statistical Applications. Springer, New York.

- 25. Tsybakov, A.B. (2009) Introduction to Nonparametric Estimation. Springer, Berlin.

- 26. Pensky, M. and Vidakovic, B. (1999) Adaptive Wavelet Estimator for Nonparametric Density Deconvolution. The Annals of Statistics, 27, 2033-2053.

- 27. Lounici, K. and Nickl, R. (2011) Global Uniform Risk Bounds for Wavelet Deconvolution Estimators. The Annals of Statistics, 39, 201-231. http://dx.doi.org/10.1214/10-AOS836

- 28. Li, R. and Liu, Y. (2014) Wavelet Optimal Estimations for a Density with Some Additive Noises. Applied and Computational Harmonic Analysis, 36, 2.

- 29. Doukhan, P. (1994) Mixing: Properties and Examples. Lecture Notes in Statistics, 85, Springer Verlag, New York.

- 30. Antoniadis, A. (1997) Wavelets in Statistics: A Review (with Discussion). Journal of the Italian Statistical Society, Series B, 6, 97-144. http://dx.doi.org/10.1007/BF03178905

- 31. Bradley, R.C. (2005) Basic Properties of Strong Mixing Conditions. A Survey and Some Open Questions. Probability Surveys, 2, 107-144.

- 32. Fryzlewicz, P. and Rao, S.S. (2011) Mixing Properties of ARCH and Time-Varying ARCH Processes. Bernoulli, 17, 320-346. http://dx.doi.org/10.3150/10-BEJ270

- 33. Carrasco, M. and Chen, X. (2002) Mixing and Moment Properties of Various GARCH and Stochastic Volatility Models. Econometric Theory, 18, 17-39. http://dx.doi.org/10.1017/S0266466602181023

- 34. Volkonskii, V.A. and Rozanov, Y.A. (1959) Some Limit Theorems for Random Functions I. Theory of Probability and Its Applications, 4, 178-197. http://dx.doi.org/10.1137/1104015