Advances in Linear Algebra & Matrix Theory

Vol.05 No.03(2015), Article ID:59314,13 pages

10.4236/alamt.2015.53008

A Subspace Iteration for Calculating a Cluster of Exterior Eigenvalues

Achiya Dax

Hydrological Service, Jerusalem, Israel

Email: dax20@water.gov.il

Copyright © 2015 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 25 June 2015; accepted 29 August 2015; published 1 September 2015

ABSTRACT

In this paper we present a new subspace iteration for calculating eigenvalues of symmetric matrices. The method is designed to compute a cluster of k exterior eigenvalues. For example, k eigenvalues with the largest absolute values, the k algebraically largest eigenvalues, or the k algebraically smallest eigenvalues. The new iteration applies a Restarted Krylov method to collect information on the desired cluster. It is shown that the estimated eigenvalues proceed monotonically toward their limits. Another innovation regards the choice of starting points for the Krylov subspaces, which leads to fast rate of convergence. Numerical experiments illustrate the viability of the proposed ideas.

Keywords:

Exterior Eigenvalues, Symmetric Matrices, Subspace Iterations, Interlacing, Restarted Krylov Methods

1. Introduction

In this paper we present a new subspace iteration for calculating a cluster of k exterior eigenvalues of a given symmetric matrix, . Other names for such eigenvalues are “peripheral eigenvalues” and “extreme eigenvalues”. As with other subspace iterations, the method is best suited for handling large sparse matrices in which a matrix-vector product needs only

. Other names for such eigenvalues are “peripheral eigenvalues” and “extreme eigenvalues”. As with other subspace iterations, the method is best suited for handling large sparse matrices in which a matrix-vector product needs only  flops. Another underlying assumption is that

flops. Another underlying assumption is that  is considerably smaller than n. Basically there are two types of subspace iterations for solving such problems. The first category regards “block” versions of the Power method that use frequent orthogonalizations. The eigenvalues are extracted with the Rayleigh-Ritz procedure. This kind of method is also called “orthogonal iterations” and “simultaneous iterations”. The second category uses the Rayleigh-Ritz process to achieve approximation from a Krylov subspace. The Restarted Lanczos method turns this approach into a powerful tool. For detailed discussions of these topics see, for example, [1] -[19] .

is considerably smaller than n. Basically there are two types of subspace iterations for solving such problems. The first category regards “block” versions of the Power method that use frequent orthogonalizations. The eigenvalues are extracted with the Rayleigh-Ritz procedure. This kind of method is also called “orthogonal iterations” and “simultaneous iterations”. The second category uses the Rayleigh-Ritz process to achieve approximation from a Krylov subspace. The Restarted Lanczos method turns this approach into a powerful tool. For detailed discussions of these topics see, for example, [1] -[19] .

The new iteration applies a Krylov subspace method to collect information on the desired cluster. Yet it has an additional flavor: it uses an interlacing theorem to improve the current estimates of the eigenvalues. This enables the method to gain speed and accuracy.

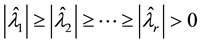

If G happens to be a singular matrix, then it has zero eigenvalues, and any orthonormal basis of Null (G) gives the corresponding eigenvectors. However, in many practical problems we are interested only in non-zero eigenvalues. For this reason the coming definitions of the term “a cluster of k exterior eigenvalues” do not include zero eigenvalues. Let r denote the rank of G and assume that k < r. Then G has r non-zero eigenvalues that can be ordered to satisfy

(1.1)

(1.1)

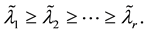

or

(1.2)

(1.2)

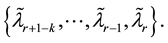

The new algorithm is built to compute one of the following four types of target clusters that contain k extreme eigenvalues.

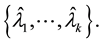

A dominant cluster

(1.3)

(1.3)

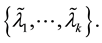

A right-side cluster

(1.4)

(1.4)

A left-side cluster

(1.5)

(1.5)

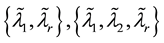

A two-side cluster is a union of a right-side cluster and a left-side cluster. For example,  , and so forth.

, and so forth.

Note that although the above definitions refer to clusters of eigenvalues, the algorithm is carried out by computing the corresponding k eigenvectors of G. The subspace that is spanned by these eigenvectors is called the target space. The restriction of the target cluster to include only non-zero eigenvalues means that the target space is contained in Range(G). For this reason the search for the target space is restricted to Range(G).

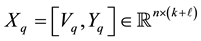

Let us turn now to describe the basic iteration of the new method. The qth iteration,  , is composed of the following five steps. The first step starts with a matrix

, is composed of the following five steps. The first step starts with a matrix  that contains “old” information on the target space, a matrix

that contains “old” information on the target space, a matrix  that contains “new” information, and a matrix

that contains “new” information, and a matrix  that includes all the known information. The matrix Xq has

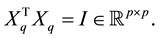

that includes all the known information. The matrix Xq has  orthonormal columns. That is

orthonormal columns. That is

(Typical values for  are

are

Step 1: Eigenvalues extraction. First compute the Rayleigh quotient matrix

Then compute k eigenpairs of Sq which correspond to the target cluster. (For example, if it is desired to compute a right-side cluster of G, then compute a right-side cluster of Sq.) The corresponding k eigenvectors of Sq are assembled into a matrix

which is used to compute the related matrix of Ritz vectors,

Step 2: Collecting new information. Compute a matrix

Step 3: Discard redundant information. Orthogonalize the columns of Bq against the columns of

Step 4: Build an orthonormal basis. Compute a matrix,

whose columns form an orthonormal basis of

Step 5: Define

which ensures that

The above description is aimed to clarify the purpose of each step. Yet there might be better ways to carry out the basic iteration. The restriction of the search to Range(G) is important when handling low-rank matrices. However, if G is known to be a non-singular matrix, then there is no need to impose this restriction.

The plan of the paper is as follows. The interlacing theorems that support the new method are given in the next section. Let

2. Interlacing Theorems

In this section we establish a useful property of the proposed method. We start with two well-known interlacing theorems, e.g., [6] [7] [19] .

Theorem 1 (Cauchy interlace theorem) Let

Let the symmetric matrix

denote the eigenvalues of H. Then

and

In particular, for

Corollary 2 (Poincaré separation theorem) Let the matrix

The next theorem seems to be new. It sharpens the above results by removing zero eigenvalues.

Theorem 3 Assume that the non-zero eigenvalues of G satisfy (1.2) where

Let the matrix

and

Proof. Let the matrix

Let us return now to consider the qth iteration of the new method,

and let the eigenvalues of the matrix

be denoted as

Then the Ritz values which are computed at Step 1 are

and these values are the eigenvalues of the matrix

Similarly,

are the eigenvalues of the matrix

Therefore, since the columns of

On the other hand from Theorem 3 we obtain that

Hence by combining these relations we see that

for

Assume now that the algorithm is aimed at computing a cluster of k left-side eigenvalues of G,

Then similar arguments show that

for

Recall that a two-sides cluster is the union of a right-side cluster and a left-side one. In this case the eigenvalues of Sq that correspond to the right-side satisfy (2.9) while eigenvalues of Sq that correspond to the left-side satisfy (2.10). A similar situation occurs in the computation of a dominant cluster, since a dominant cluster is either a right-side cluster, a left-side cluster, or a two-sides cluster.

3. The Krylov Information Matrix

It is left to explain how the information matrices are computed. The first question to answer is how to define the starting matrix X1. For this purpose we consider a Krylov subspace that is generated by the vectors

where

The main question is how to define the information matrix

which is needed in Step 2. Following the Krylov subspace approach, the columns of

where

The ability of a Krylov subspace to approximate a dominant subspace is characterized by the Kaniel-Paige- Saad (K-P-S) bounds. See, for example, ([5] , pp. 552-554), ( [7] , pp. 242-247), ( [13] , pp. 272-274), and the references therein. One consequence of these bounds regards the angle between

where vector of ones.

Another consequence of the K-P-S bounds is that a larger Krylov subspace gives better approximations. This suggests that using (3.2)-(3.3) with a larger

A different argument that supports the last observation comes from the interlacing theorems: Consider the use of (3.2)-(3.3) with two values of

4. Treating a Non-Peripheral Cluster

A cluster of eigenvalues is called peripheral if there exists a real number,

A second difficulty comes from the following phenomenon. To simplify the discussion we concentrate on a left-side cluster of a positive semi-definite matrix that has several zero eigenvalues. In this case the target cluster is composed from the k smallest non-zero eigenvalues of G. Then, once the columns of

One way to overcome the last difficulty is to force

Step 3*: As before, the step starts by orthogonalizing the columns of

A second possible remedy is to force

Step 5*: Compute the matrices

whose columns form an orthonormal basis of

In the experiments of Section 8, we have used Step 3* to compute a left-side cluster of Problem B, and Step 5* was used to compute a left-side cluster of Problem C. However the above modifications are not always helpful, and there might be better ways to correct the algorithm.

5. Acceleration Techniques

In this section we outline some possible ways to accelerate the rate of convergence. The acceleration is carried out in Step 2 of the basic iteration, by providing a “better” information matrix,

5.1. Power Acceleration

In this approach the columns of

where

5.2. Using a Shift

The shift operation is carried out by replacing (5.1) with

where

Assume first that G is a positive definite matrix and that we want to compute a left-side cluster (a cluster of the smallest eigenvalues). Then (5.1) is replaced by (5.2), where

A similar tactic is used for calculating a two-sides cluster. In this case the shift is computed by the rule

In other words, the shift estimates the average value of the largest and the smallest (algebraically) eigenvalues of G. A more sophisticated way to implement the above ideas is outlined below.

5.3. Polynomial Acceleration

Let the eigenvalues of G satisfy (2.1) and let the real numbers

define the monic polynomial

Then the eigenvalues of the matrix polynomial

are determined by the relations

Moreover, the matrices G and

The idea here is to choose the points

As with orthogonal iterations, the use of Chebyshev polynomials enables effective implementation of this idea. In this method there is no need in the numbers

5.4. Inverse Iterations

This approach is possible only in certain cases, when G is invertible and the matrix-vector product

In practice

The use of (5.4) is helpful for calculating small eigenvalues of a positive definite matrix. If other clusters are needed then

6. Orthogonal Iterations

In this section we briefly examine the similarity and the difference between the new method and the Orthogonal Iterations method. The last method is also called Subspace Iterations and Simultaneous Iterations, e.g., [1] [4] [5] [7] -[10] [13] -[15] . It is aimed at computing a cluster of k dominant eigenvalues, as defined in (1.3). The simplest way to achieve this goal is, perhaps, to apply a “block” version of the Power method on an

where

Step 1: Compute the product matrix

Step 2: Compute the Rayleigh quotient matrix

and its k dominant eigenvalues

Step 3: Compute a matrix

Let the eigenvalues of G satisfy (1.1). Then for

where

If at the end of Step 2 the matrix

A comparison of the above orthogonal iteration with the new iteration shows that both methods need about the same amount of computer storage, but the new method doubles the computational effort per iteration. The adaptation of orthogonal iterations to handle other peripheral clusters requires the shift operation. Another difference regards the rate of convergence. In orthogonal iterations the rate is determined by the ratio

7. Restarted Lanczos Methods

The current presentation of the new method is carried out by applying the Krylov information matrix (3.2)-(3.4). This version can be viewed as a “Restarted Krylov method”. The Restarted Lanczos method is a sophisticated implementation of this approach that harnesses the Lanczos algorithm to reduce the computational effort per iteration. As before, the method is aimed at computing a cluster of

The qth iteration,

which have been obtained by applying

Step 1: Compute the eigenvalues of

denote the computed eigenvalues, where the first

Step 2: Compute a

such that

Step 3: The above QR factorization is used to build a new

This pair of matrices has the property that it can be obtained by applying

Step 4: Continue

The IRLM iterations are due to Sorensen [11] . The name “Implicitly restarted” refers to the fact that the starting vector (which initiates the restarted Lanczos process) is not computed. For detailed description of this iteration, see ( [1] , pp. 67-73), and [11] . See also [13] [15] .

A different implementation of the Restarted Lanczos idea, the Thick-Restarted Lanczos (TRLan) method, was proposed by Wu and Simon [17] . The qth iteration,

Step 1: Compute

which is used to compute the related matrix of Ritz vectors,

Step 2: Let

and

Step 3: The vector

Step 4: Continue

that has mutually orthonormal columns and satisfy

where

with

Step 5: Use a sequence of Givens rotations to complete the reduction of

For detailed description of the above iteration see [17] [18] . Both IRLM and TRLan are carried out with reorthogonalization of the Lanczos vectors. The TRLan method computes the Ritz vectors while IRLM avoids this computation. Yet the two methods are known to be mathematically equivalent.

One difference between the new method and the Restarted Lanczos approach lies in the computation of the Rayleigh quotient matrix. In our method this computation requires additional

A second difference lies in the starting vector of the

A third difference arises when using acceleration techniques. Let us consider for example the use of power acceleration with

The new method can be viewed as generalization of the Restarted Lanczos approach. The generalization is carried out by replacing the Lanczos process with standard orthogonalization. This simplifies the algorithm and clarifies the main reasons that lead to fast rate of convergence. One reason is that each iteration builds a new Krylov subspace, using an improved starting vector. A second reason comes from the orthogonality requirement: The new Krylov subspace is orthogonalized against the current Ritz vectors. It is this orthogonalization that ensures successive improvement. (The Restarted Lanczos algorithms achieve these tasks in implicit ways.)

8. Numerical Experiments

In this section we describe some experiments that illustrate the behavior of the proposed method. The test matrices have the form

where

The term “random orthonormal” means that

Type A matrices, where

Type B matrices, where

Type C matrices, where

Type D matrices, where

and

The difference between the computed Ritz values and the desired eigenvalues of

The figures in Tables 1-4 provide the values of

The new method is implemented as described in Section 3. It starts by orthonormalizing a random Krylov matrix of the form (3.1). The information matrix,

Table 1. Computing a dominant cluster with the new iteration.

Table 2. Computing a dominant cluster with orthogonal iterations.

Table 3. Computing a left-side cluster with the new iteration.

Table 4. The use of Power acceleration to compute a dominant cluster of Type A matrix.

Table 1 describes the computation of dominant clusters with the new method. The reading of this table is simple: We see, for example, that after performing 6 iterations on a Type B matrix,

Another observation stems from the first rows of these tables: We see that a random Krylov matrix gives a better start than a random starting matrix.

The ability of the new method to compute a left-side cluster is illustrated in Table 3. Note that the Type B matrix and the Type C matrix are positive semidefinite matrices, in which the left-side cluster is composed from the smaller non-zero eigenvalues of

The merits of Power acceleration are demonstrated in Table 4. On one hand it enables us to use a smaller

9. Concluding Remarks

The new method is based on a modified interlacing theorem which forces the Rayleigh-Ritz approximations to move monotonically toward their limits. The current presentation concentrates on the Krylov information matrix (3.2)-(3.4), but the method can use other information matrices. The experiments that we have done are quite encouraging, especially when calculating peripheral clusters. The theory suggests that the method can be extended to calculate certain non-peripheral clusters, but in this case we face some difficulties due to rounding errors. Further modifications of the new method are considered in [3] .

Cite this paper

AchiyaDax, (2015) A Subspace Iteration for Calculating a Cluster of Exterior Eigenvalues. Advances in Linear Algebra & Matrix Theory,05,76-89. doi: 10.4236/alamt.2015.53008

References

- 1. Bai, Z., Demmel, J., Dongarra, J., Ruhe, A. and van der Vorst, H. (1999) Templates for the Solution of Algebraic Eigenvalue Problems: A Practical Guide. SIAM, Philadelphia.

- 2. Bauer, F.L. (1957) Das Verfahren der Treppeniteration und verwandte Verfahren zur Losung algebraischers Eigenwertprobleme. Zeitschrift für angewandte Mathematik und Physik ZAMP, 8, 214-235.

http://dx.doi.org/10.1007/BF01600502 - 3. Dax, A. Restarted Krylov Methods for Calculating Exterior Eigenvalues of Large Matrices. Tech. Rep., Hydrological Service of Israel, in Preparation.

- 4. Demmel, J.W. (1997) Applied Numerical Linear Algebra. SIAM, Philadelphia.

http://dx.doi.org/10.1137/1.9781611971446 - 5. Golub, G.H. and Van Loan, C.F. (1983) Matrix Computations. Johns Hopkins University Press, Baltimore.

- 6. Horn, R.A. and Johnson, C.R. (1985) Matrix Analysis. Cambridge University Press, Cambridge.

http://dx.doi.org/10.1017/CBO9780511810817 - 7. Parlett, B.N. (1980) The Symmetric Eigenvalue Problem. Prentice-Hall, Englewood Cliffs.

- 8. Reinsch, C.H. (1971) Simultaneous Iteration Method for Symmetric Matrices. In: Wilkinson, J.H. and Reinsch, C.H., Eds., Handbook for Automatic Computation (Linear Algebra), Springer-Verlag, New York, 284-302.

- 9. Rutishauer, H. (1969) Computational Aspects of F. L. Bauer’s Simultaneous Iteration Method. Numerische Mathematik, 13, 4-13.

http://dx.doi.org/10.1007/BF02165269 - 10. Rutishauser, H. (1970) Simultaneous Iteration Method for Symmetric Matrices. Numerische Mathematik, 16, 205-223.

http://dx.doi.org/10.1007/BF02219773 - 11. Sorensen, D.C. (1992) Implicit Application of Polynomial Filters in a k-Step Arnoldi Method. SIAM Journal on Matrix Analysis and Applications, 13, 357-385.

http://dx.doi.org/10.1137/0613025 - 12. Stewart, G.W. (1969) Accelerating the Orthogonal Iteration for the Eigenvalues of a Hermitian Matrix. Numerische Mathematik, 13, 362-376.

http://dx.doi.org/10.1007/BF02165413 - 13. Stewart, G.W. (2001) Matrix Algorithms, Volume II: Eigensystems. SIAM, Philadelphia.

- 14. Trefethen, L.N. and Bau III, D. (1997) Numerical Linear Algebra. SIAM, Philadelphia.

http://dx.doi.org/10.1137/1.9780898719574 - 15. Watkins, D.S. (2007) The Matrix Eigenvalue Problem: GR and Krylov Subspace Methods. SIAM, Philadelphia.

http://dx.doi.org/10.1137/1.9780898717808 - 16. Wilkinson, J.H. (1965) The Algebraic Eigenvalue Problem. Clarendon Press, Oxford.

- 17. Wu, K. and Simon, H. (2000) Thick-Restarted Lanczos Method for Large Symmetric Eigenvalue Problems. SIAM Journal on Matrix Analysis and Applications, 22, 602-616.

http://dx.doi.org/10.1137/S0895479898334605 - 18. Yamazaki, I., Bai, Z., Simon, H., Wang, L. and Wu, K. (2010) Adaptive Projection Subspace Dimension for the Thick-Restart Lanczos Method. ACM Transactions on Mathematical Software, 37, 1-18.

http://dx.doi.org/10.1145/1824801.1824805 - 19. Zhang, F. (1999) Matrix Theory: Basic Results and Techniques. Springer-Verlag, New York.

http://dx.doi.org/10.1007/978-1-4757-5797-2