Paper Menu >>

Journal Menu >>

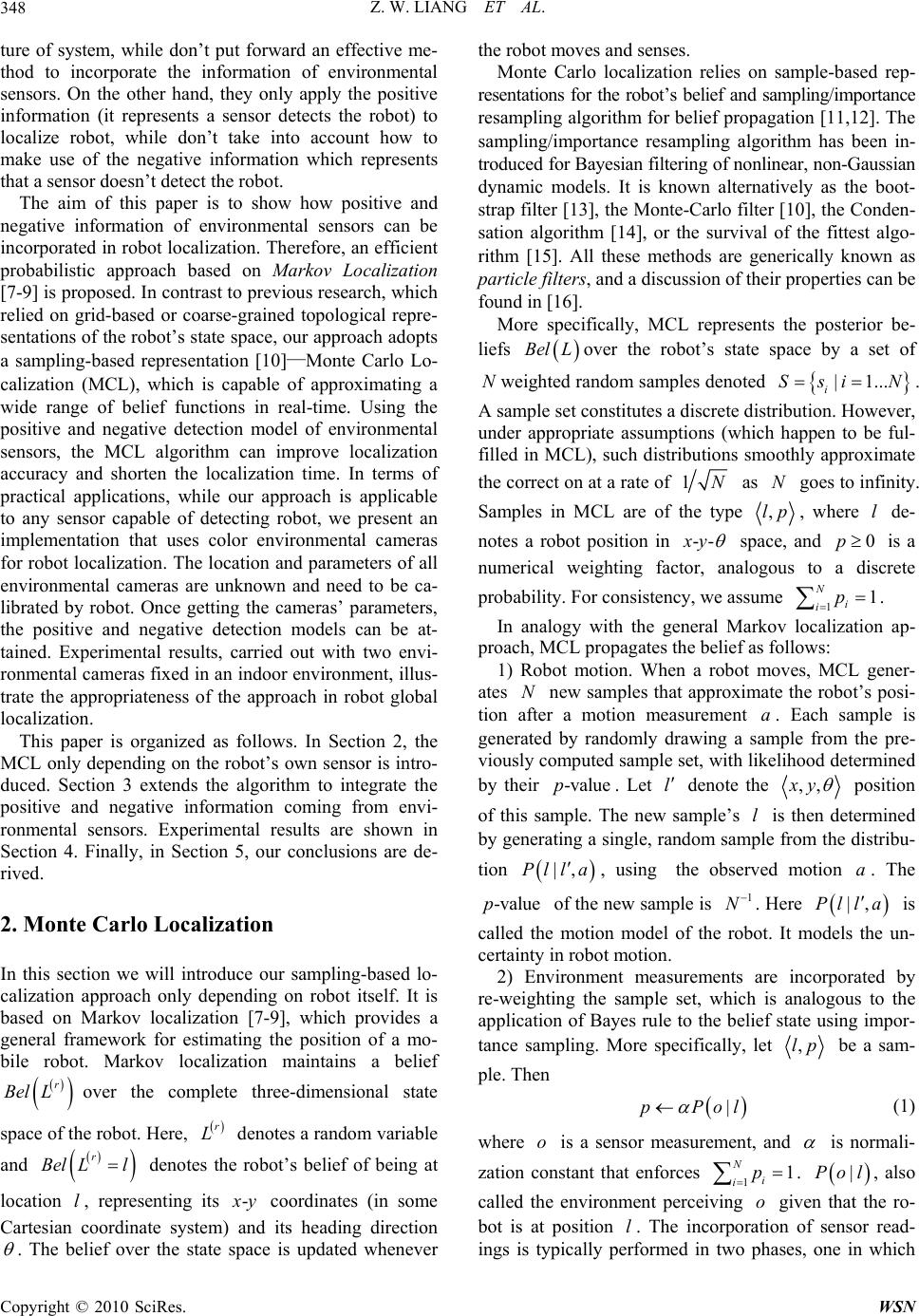

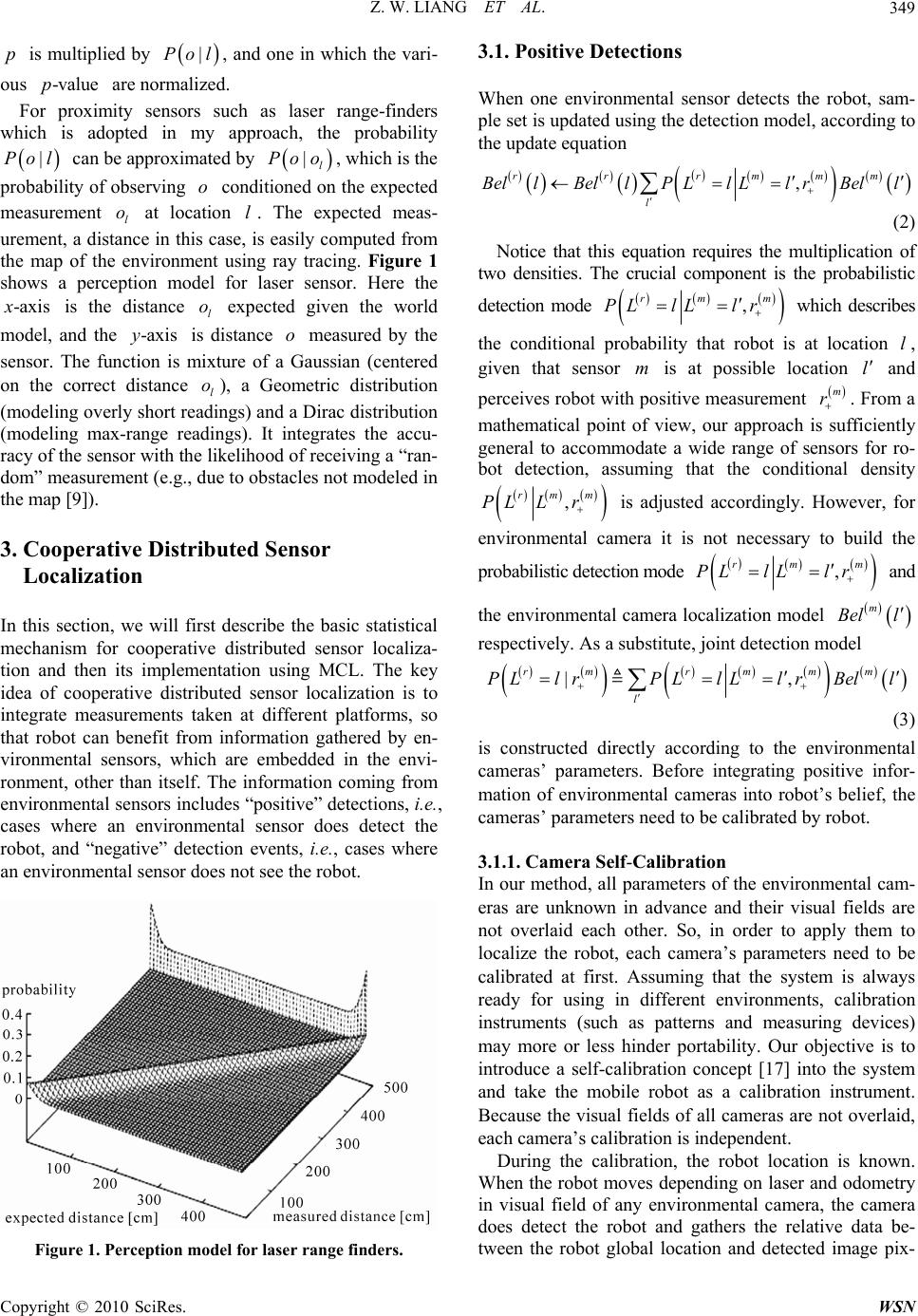

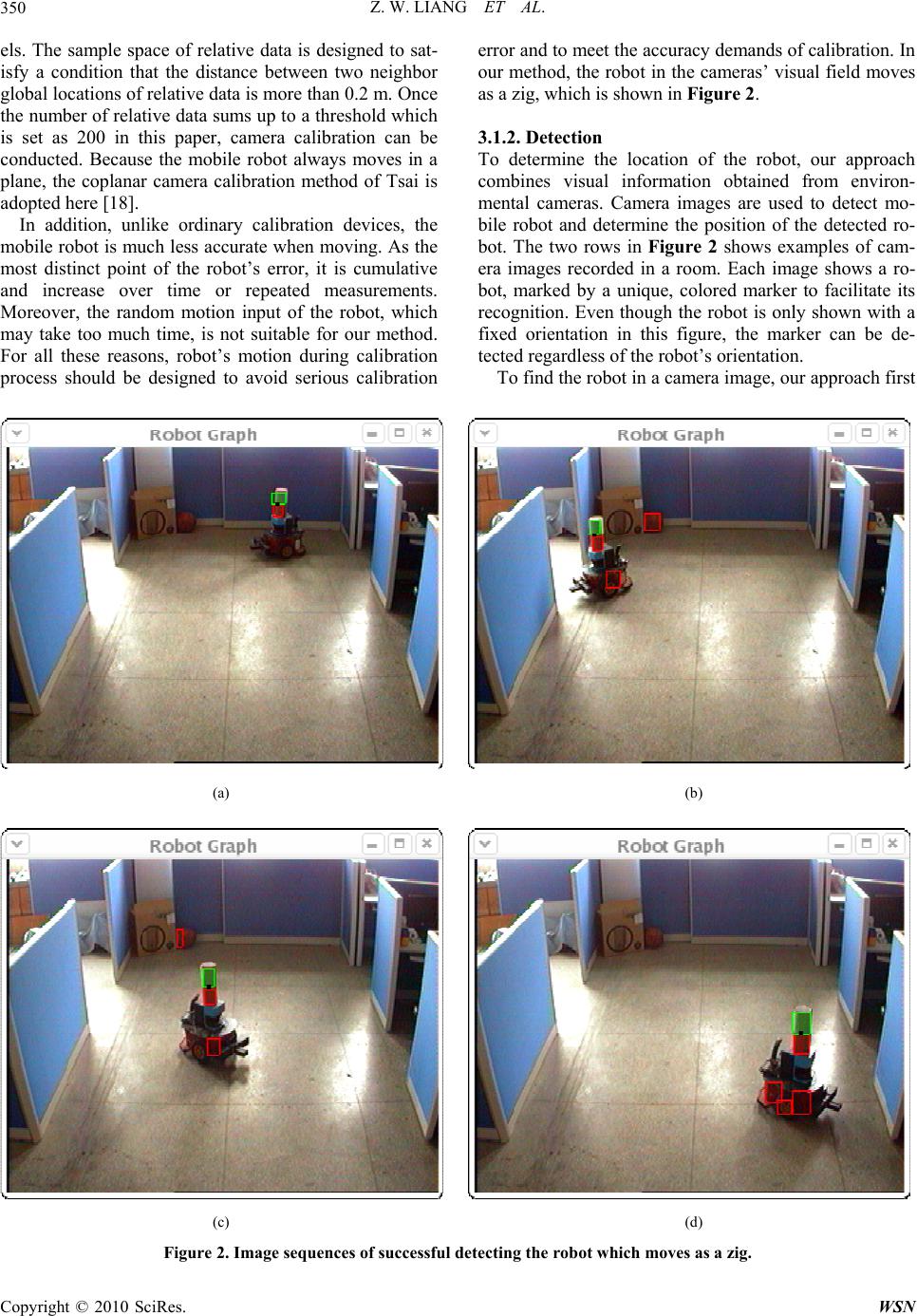

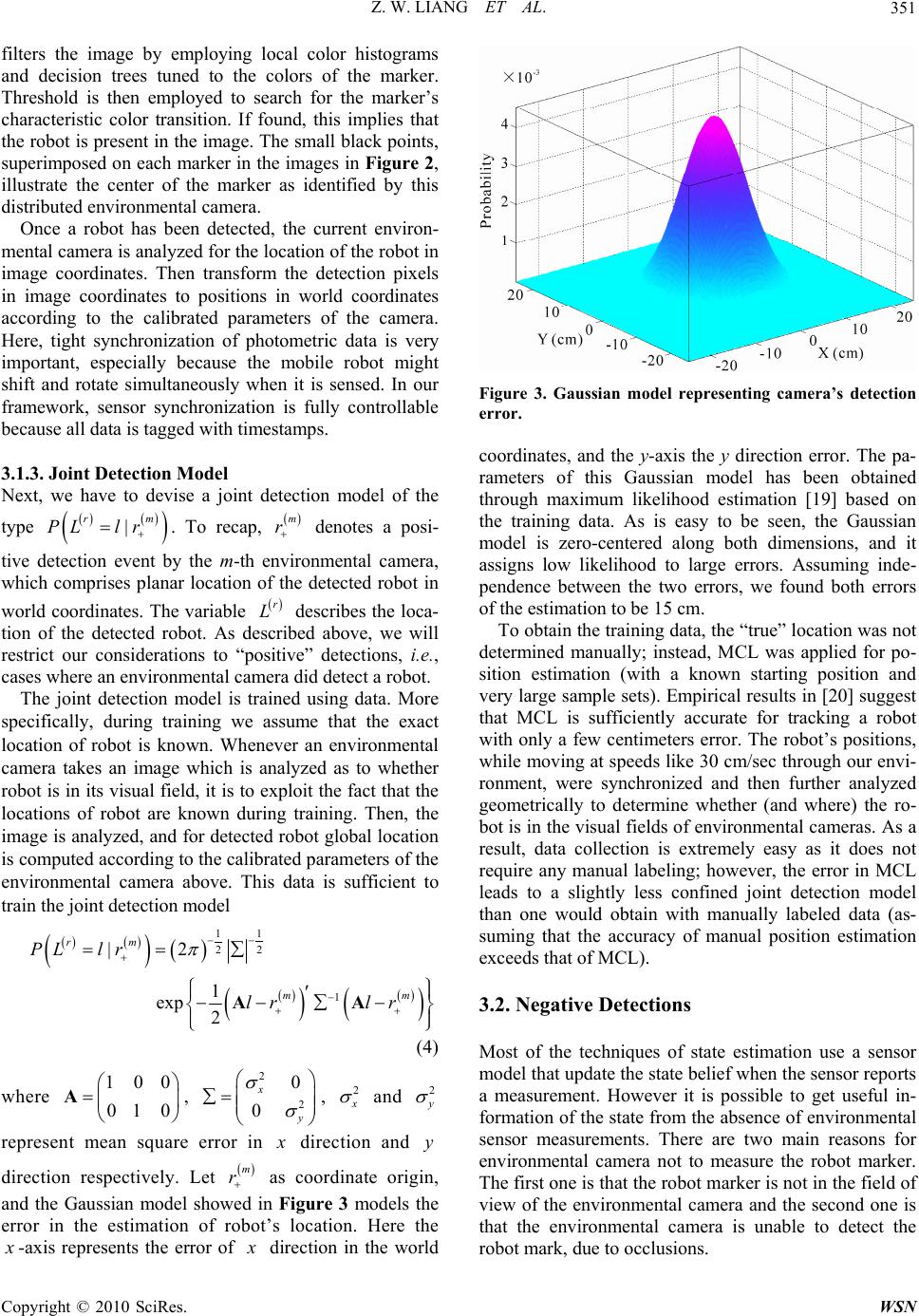

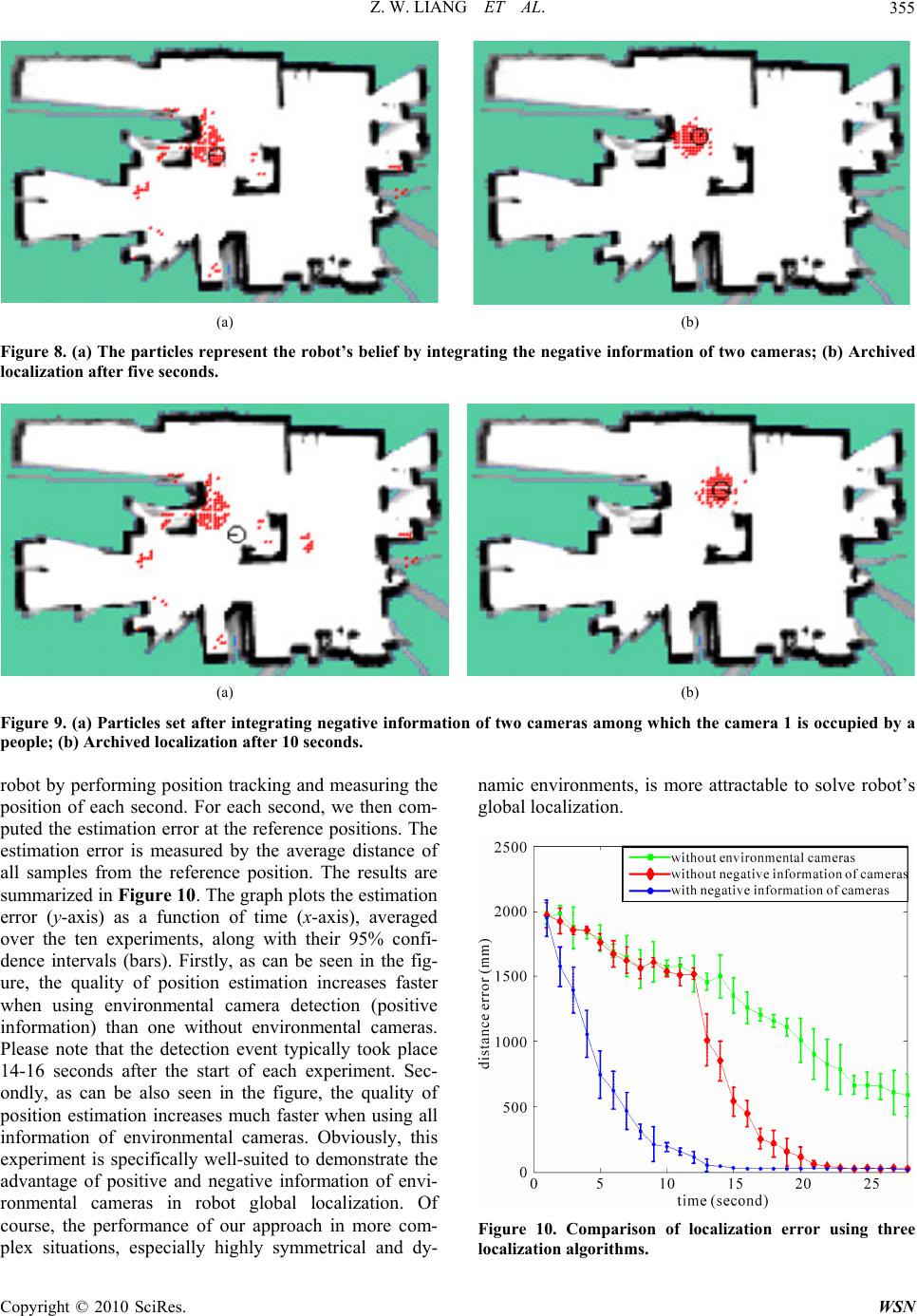

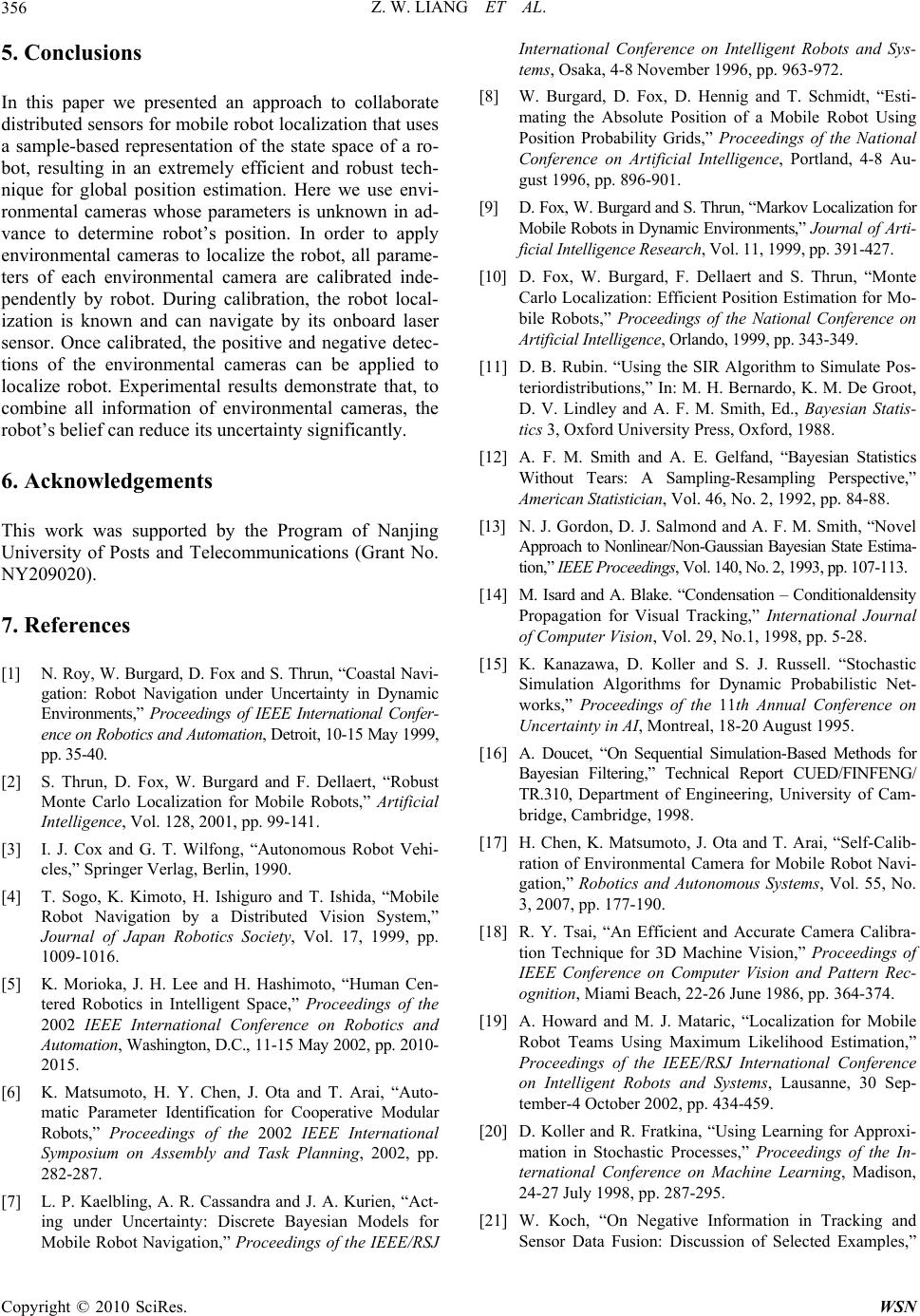

Wireless Sensor Network, 2010, 2, 347-357 doi:10.4236/wsn.2010.24046 Published Online May 2010 (http://www.SciRP.org/journal/wsn) Copyright © 2010 SciRes. WSN Cooperative Distributed Sensors for Mobile Robot Localization Zhiwei Liang, Songhao Zhu College of Automation, Nanjing University of Post s an d T e l ecom mu nic ations, Nanjing, China E-mail: lzhw_ly@hotmail.com Received December 24, 2009; revised February 22, 2010; accepted March 17, 2010 Abstract This paper presents a probabilistic algorithm to collaborate distributed sensors for mobile robot localization. It uses a sample-based version of Markov localization—Monte Carlo Localization (MCL), capable of local- izing mobile robots in an any-time fashion. During robot localization given a known environment model, MCL method is employed to update robot’s belief whichever information (positive or negative) attained from environmental sensors. Meanwhile, an implementation is presented that uses color environmental cam- eras for robot detection. All the parameters of each environmental camera are unknown in advance and need be calibrated independently by robot. Once calibrated, the positive and negative detection models can be built up according to the parameters of environmental cameras. A further experiment, obtained with the real robot in an indoor office environment, illustrates it has drastic improvement in global localization speed and accuracy using our algorithm. Keywords: Monte Carlo Localization, Environmental Camera, Positive Information, Negative Information 1. Introduction Mobile robot localization is the problem of estimating a robot’s pose (location, orientation) relative to its envi- ronment. The localization problem is a key problem in mobile robotics. There are two classes of localization problem, position tracking and global localization. In position tracking, a robot knows its initial position [1] and only needs to reduce uncertainty in the odometer reading. If the initial position is no t known or the robo t is kidnapped to somewhere, the problem is one of global localization, i.e., the mobile robot has to estimate its global position th rough a sequence of sensing actions [2]. In recent years, a flurry of publications on localization document the importance of the problem. Occasionally, it has been referred to as “the most fundamental problem to providing a mobile robot with autonomous capabili- ties” [3]. So far, virtually all existing work addresses localiza- tion only using sensors onboard mobile robot. However, in robot navigation, the robot cannot always determine its unique situation only by local sensing information since the sensors are prone to errors and a slight change of the robot’s situation deteriorates the sensing results. Along with the rapid development of computer networks and multimedia technology, research on how to make an ‘intelligent’ environment for the robot to fulfill the same functions makes sense, especially in home environment. In this case, various sensors are embedded into the envi- ronment (environmental sensors), and communication between the robot and environmental sensors is utilized. Sogo et al. [4] proposed a distributed vision system for navigating mobile robots in a real world setting. To ob- tain robustness and flexibility, the system consisted of redundant vision agents connected to a computer net- work. These agents provided information for robots by organizing the communication between vision agents. Morioka et al. [5] defined the space in which many vi- sion sensors and intelligent devices are distributed as an intelligent space. Mobile robots exist in this space as physical agents that provide humans with services. A concept called a distributed modular robot system was also proposed in [6]. In that robot system, a modular ro- bot was defined as a mono-functional robot (either a sensor or an actuator) with a radio communication unit and a processing unit. Such robots were usually small and could be easily attached to operational objects or dispersed into the environment. A modular robot system for object transportation was developed by using several distributed-placed camera modules and wheel modules. All studies mentioned above mostly focus on the struc-  Z. W. LIANG ET AL. 348 ture of system, while don’t put forward an effective me- thod to incorporate the information of environmental sensors. On the other hand, they only apply the positive information (it represents a sensor detects the robot) to localize robot, while don’t take into account how to make use of the negative information which represents that a sensor doesn’t detect the robot. The aim of this paper is to show how positive and negative information of environmental sensors can be incorporated in robo t localization. Therefore, an efficient probabilistic approach based on Markov Localization [7-9] is proposed. In contrast to previous research, which relied on grid-based or coarse-grained topological repre- sentations of the robot’s state space, our approach adopts a sampling-based representation [10]—Monte Carlo Lo- calization (MCL), which is capable of approximating a wide range of belief functions in real-time. Using the positive and negative detection model of environmental sensors, the MCL algorithm can improve localization accuracy and shorten the localization time. In terms of practical applications, while our approach is applicable to any sensor capable of detecting robot, we present an implementation that uses color environmental cameras for robot localization . The location and parameters of all environmental cameras are unknown and need to be ca- librated by robot. Once getting the cameras’ parameters, the positive and negative detection models can be at- tained. Experimental results, carried out with two envi- ronmental cameras fixed in an indoor enviro nment, illus- trate the appropriateness of the approach in robot global localization. This paper is organized as follows. In Section 2, the MCL only depending on the robot’s own sensor is intro- duced. Section 3 extends the algorithm to integrate the positive and negative information coming from envi- ronmental sensors. Experimental results are shown in Section 4. Finally, in Section 5, our conclusions are de- rived. 2. Monte Carlo Localization In this section we will introduce our sampling-based lo- calization approach only depending on robot itself. It is based on Markov localization [7-9], which provides a general framework for estimating the position of a mo- bile robot. Markov localization maintains a belief over the complete three-dimensional state space of the robot. Here, denotes a random variable and denotes the robot’s belief of being at location , representing its r Bel L BelL l r L rl - x y coordinates (in some Cartesian coordinate system) and its heading direction . The belief over the state space is updated whenever the robot moves and senses. Monte Carlo localization relies on sample-based rep- resentations for the robot’s belief and sampling/importance resampling algorithm for belief propagation [11,12]. The sampling/importance resampling algorithm has been in- troduced for Bayesian filtering of nonlinear, non-Gaussian dynamic models. It is known alternatively as the boot- strap filter [13], the Monte-Carlo filter [10], the Conden- sation algorithm [14], or the survival of the fittest algo- rithm [15]. All these methods are generically known as particle filters, and a discussion of their properties can be found in [16]. More specifically, MCL represents the posterior be- liefs BelL over the robot’s state space by a set of weighted random samples denoted . A sample set constitutes a discrete distribution. However, under appropriate assumptions (which happen to be ful- filled in MCL), such distributions smoothly approximate the correct on at a rate of N |1... i Ssi N 1N as goes to infinity. Samples in MCL are of the type N ,lp, where de- notes a robot position in l -- x y space, and is a numerical weighting factor, analogous to a discrete probability. For cons istency, we assume 0 1 p 1i p N i . In analogy with the general Markov localization ap- proach, MCL propagates the belief as follows: 1) Robot motion. When a robot moves, MCL gener- ates new samples that approximate the robot’s posi- tion after a motion measurement . Each sample is generated by randomly drawing a sample from the pre- viously computed sample set, with lik elihood determined by their . Let Na -valuepl denote the ,, x y position of this sample. The new sample’s is then determined by generating a single, random sample from the distribu- tion l |,laPl , usingthe observed motion . The of the new sample is . Here a -vaplue 1 N Pl a|,l is called the motion model of the robot. It models the un- certainty in robot motion. 2) Environment measurements are incorporated by re-weighting the sample set, which is analogous to the application of Bayes rule to the belief state using impor- tance sampling. More specifically, let ,lp be a sam- ple. Then |pPo l (1) where is a sensor measurement, and o is normali- zation constant that enforces 1. 1 N i ip |Pol, also called the environment perceiving given that the ro- bot is at position . The incorporation of sensor read- ings is typically performed in two phases, one in which o l Copyright © 2010 SciRes. WSN  Z. W. LIANG ET AL.349 p Po -ax is multiplied by , and one in which the vari- ous are normalized. |Pol o l o xis l o -valuep |l xis For proximity sensors such as laser range-finders which is adopted in my approach, the probability can be approximated by , which is the probability of observing conditioned on the expected measurement at location . The expected meas- urement, a distance in this case, is easily computed from the map of the environment using ray tracing. Figure 1 shows a perception model for laser sensor. Here the is the distance expected given the world model, and the is distance o measured by the sensor. The function is mixture of a Gaussian (centered on the correct distance ), a Geometric distribution (modeling overly short readings) and a Dirac distribution (modeling max-range readings). It integrates the accu- racy of the sensor with the likelihood of receiving a “r an- dom” measurement (e.g., due to obstacles not modeled in the map [9]). |l Poo l o y l -a 3. Cooperative Distributed Sensor Localization In this section, we will first describe the basic statistical mechanism for cooperative distributed sensor localiza- tion and then its implementation using MCL. The key idea of cooperative distributed sensor localization is to integrate measurements taken at different platforms, so that robot can benefit from information gathered by en- vironmental sensors, which are embedded in the envi- ronment, other than itself. The information coming from environmental sensors includes “positive” detections, i.e., cases where an environmental sensor does detect the robot, and “negative” detection events, i.e., cases where an environmental sensor does not see the robot. Figure 1. Perception model for laser range finders. 3.1. Positive Detections When one environmental sensor detects the robot, sam- ple set is updated using the detection model, according to the update equation , r rr mmm l B el lBel lPLlLlrBell (2) Notice that this equation requires the multiplication of two densities. The crucial component is the probabilistic detection mode , rm PL lLlr m which describes the conditional probability that robot is at location , given that sensor is at possible location ll m and perceives robot with po sitive measurement . From a mathematical point of view, our approach is sufficiently general to accommodate a wide range of sensors for ro- bot detection, assuming that the conditional density m r , rmm PL Lr is adjusted accordingly. However, for environmental camera it is not necessary to build the probabilistic detection m ode m , m lr Bel rm PL lL and the environmental camera localization model l respectively. As a substitute, joint detection model |, rr mmm l PL lrPL lLlrBell m (3) is constructed directly according to the environmental cameras’ parameters. Before integrating positive infor- mation of environmental cameras into robot’s belief, the cameras’ parameters need to be calibrated by robot. 3.1.1. Camera Self-Calibration In our method, all parameters of the environmental cam- eras are unknown in advance and their visual fields are not overlaid each other. So, in order to apply them to localize the robot, each camera’s parameters need to be calibrated at first. Assuming that the system is always ready for using in different environments, calibration instruments (such as patterns and measuring devices) may more or less hinder portability. Our objective is to introduce a self-calibration concept [17] into the system and take the mobile robot as a calibration instrument. Because the visual fields of all cameras are not overlaid, each camera’s calibration is independent. During the calibration, the robot location is known. When the robot moves depending on laser and odometry in visual field of any environmental camera, the camera does detect the robot and gathers the relative data be- tween the robot global location and detected image pix- Copyright © 2010 SciRes. WSN  Z. W. LIANG ET AL. Copyright © 2010 SciRes. WSN 350 els. The sample space of relative data is designed to sat- isfy a condition that the distance between two neighbor global locations of relative data is more than 0.2 m. Once the number of relative data sums up to a threshold which is set as 200 in this paper, camera calibration can be conducted. Because the mobile robot always moves in a plane, the coplanar camera calibration method of Tsai is adopted here [18]. In addition, unlike ordinary calibration devices, the mobile robot is much less accurate when moving. As the most distinct point of the robot’s error, it is cumulative and increase over time or repeated measurements. Moreover, the random motion input of the robot, which may take too much time, is not suitable for our method. For all these reasons, robot’s motion during calibration process should be designed to avoid serious calibration error and to meet the accuracy demands of calibration. In our method, the robot in the cameras’ visual field moves as a zig, which is shown in Figure 2. 3.1.2. Detection To determine the location of the robot, our approach combines visual information obtained from environ- mental cameras. Camera images are used to detect mo- bile robot and determine the position of the detected ro- bot. The two rows in Figure 2 shows examples of cam- era images recorded in a room. Each image shows a ro- bot, marked by a unique, colored marker to facilitate its recognition. Even though the robot is only shown with a fixed orientation in this figure, the marker can be de- tected regardless of the robot’s orientation. To find the robot in a camera image, our approach first (a) (b) (c) (d) Figure 2. Image sequences of successful detecting the robot which moves as a zig.  Z. W. LIANG ET AL. Copyright © 2010 SciRes. WSN 351 m filters the image by employing local color histograms and decision trees tuned to the colors of the marker. Threshold is then employed to search for the marker’s characteristic color transition. If found, this implies that the robot is present in the image. The small black points, superimposed on each marker in the images in Figure 2, illustrate the center of the marker as identified by this distributed environmental camera. Once a robot has been detected, the current environ- mental camera is analyzed for the location of the robot in image coordinates. Then transform the detection pixels in image coordinates to positions in world coordinates according to the calibrated parameters of the camera. Here, tight synchronization of photometric data is very important, especially because the mobile robot might shift and rotate simultaneously when it is sensed. In our framework, sensor synchronization is fully controllable because all data is tagged with timestamps. 3.1.3. J oint Detecti on Model Next, we have to devise a joint detection model of the type . To recap, denotes a posi- tive detection event by the m-th environmental camera, which comprises planar location of the detected robot in world coordinates. The variable describes the loca- tion of the detected robot. As described above, we will restrict our considerations to “positive” detections, i.e., cases where an environmental camera did detect a robot. | r PL lr m r r L The joint detection model is trained using data. More specifically, during training we assume that the exact location of robot is known. Whenever an environmental camera takes an image which is analyzed as to whether robot is in its visual field, it is to exploit the fact that the locations of robot are known during training. Then, the image is analyzed, and for detected robot global location is computed according to the calibrated parameters of the environmental camera above. This data is sufficient to train the joint detection model 1 1 2 2 1 |2 1 exp 2 rm m PL lr lr lr AA m (4) where , 100 01 0 A2 2 0 0 x y , Figure 3. Gaussian model representing camera’s detection error. coordinates, and the y-axis the y direction error. The pa- rameters of this Gaussian model has been obtained through maximum likelihood estimation [19] based on the training data. As is easy to be seen, the Gaussian model is zero-centered along both dimensions, and it assigns low likelihood to large errors. Assuming inde- pendence between the two errors, we found both errors of the estimation to be 15 cm. To obtain the training data, the “true” location was not determined manually; instead, MCL was applied for po- sition estimation (with a known starting position and very large sample sets). Empirical results in [20] suggest that MCL is sufficiently accurate for tracking a robot with only a few centimeters error. The robot’s positions, while moving at speeds like 30 cm/sec through our envi- ronment, were synchronized and then further analyzed geometrically to determine whether (and where) the ro- bot is in the visual fields of environmental cameras. As a result, data collection is extremely easy as it does not require any manual labeling; however, the error in MCL leads to a slightly less confined joint detection model than one would obtain with manually labeled data (as- suming that the accuracy of manual position estimation exceeds that of MCL). 3.2. Negative Detections Most of the techniques of state estimation use a sensor model that update the state belief when the sensor reports a measurement. However it is possible to get useful in- formation of the state from the absence of environmental sensor measurements. There are two main reasons for environmental camera not to measure the robot marker. The first one is that the robot marker is not in the field of view of the environmental camera and the second one is that the environmental camera is unable to detect the robot mark, due to occlusions. 2 x and 2 y represent mean square error in x direction and direction respectively. Let as coordinate origin, and the Gaussian model showed in Figure 3 models the error in the estimation of robot’s location. Here the y m r x -axis represents the error of x direction in the world  Z. W. LIANG ET AL. 352 This situation of no detecting a robot mark can be modeled by considering the environmental camera field of view and by using an obstacle detection to identify occlusions as shown: |,,obs rrmrm BelL lBelL lTrL l vm m m (5) where , represents the negative information of envi- ronmental sensor, describes the visibility area of the sensor and represents the occlusion area. 0 and ob s |,,obs1 or obs m mrm m m ll Tr Llll v vv m r-thm m v obs m The negative information has been applied to target tracking using the event of not detecting a target as evi- dence to update the probability density function [21]. In that work negative information means that the target is not located in the visual area of the sensor and since the target is known to exist it is certainly outside the area. In robot localization domain, the work of Hoffmann et al. [22] on negative information in ML considers negative information the absence of landmark sensor measurements. Occlusions are identified using a visual sensor that scans colors of the ground to determine if there is free area or obstacle. The environment is soccer field in green with white lines. So, if a different color is identified, it means that an obstacle could be occluding the visibility of a landmark. In the cooperative distributed sensor localization problem for mobile robot, negative information can also mean the absence of detections (in the case that an envi- ronmental sensor does not detect the robot), which con- figures a lack of group information. In this case, the neg- ative detection measurement can provide the useful in- formation that the robot is not located in the visibility area of an environmental sensor. In some cases, it can be essential information as it could improve the pose belief of the robot in short time. Our contribution in this paper is the proposal of a neg- ative detection model and its incorporation into MCL approach based on distributed sensors. Consider an en- vironmental camera, within a known environmental and its field of view as shown in Figure 4(a). If the envi- ronmental camera does not detect the robot, negative information is reported, which states that the robot is not in the visibility area of the camera, as depicted in Figure 4(a). The information gathered from Figure 4(a) is true if we consider that there are no occlusions. In order to ac- count for occlusions it is necessary to sense the environ- ment to identify free areas or occupied areas. For envi- ronmental cameras, we apply background subtraction approach described in [23] to detect the occupied areas. If it is identified as an occupied area it means that the robot could b e occluded by an obstacle. In this case, it is possible to use geometric inference to determine which part of the visual area can be used as negative detection information, as shown in Figure 4(b). 3.3. Cooperative Distributed Sensor Localization According to above positive and negative information, the cooperative distributed sensor localization algorithm for robot is summarized in Table 1. (a) (b) Figure 4. (a) Negative information; (b) Occlusion in the field of view. Copyright © 2010 SciRes. WSN  Z. W. LIANG ET AL.353 Table 1. MCL algorithm to cooperate distributed sensors. for each location do /*initialize the belief*/ l 0 rr B ellP Ll end for forever do if the robot receives new laser inputs do /*apply the laser perception model*/ o for each location do l rr B el lPolBel l end for end if if the robot receives new odometry readings do /*apply the motion model*/ a for each location do l , rr l B el lPlalBel l end for end if if the robot receives positive information from the-th environmental sensor do /*apply the positive detection model*/ m for each location do l , rr rmmm l B el lBel lPLlLlrBell end for end if if the robot receives negative information from the -th environmental sensor do /*apply the negative detection mode l* / m for each location do l |,,obs rrmrm BelLl BelLlTrLl v m end for end if end forever 4. Experimental Results In this section we present experiments conducted with real robot. The mobile robot used is Pioneer3 DX, which is equipped with a laser sensor. In whole experiments, the number of samples is fixed to 400. Figure 5(a) shows our experimental setup along with a part of the occupancy grid map used for position estimation, and that two cameras are fixed on the wall applied to detect and localize the robot. Figure 5(a) also shows the visual fields of the two environmental cameras (the purple rec- tangle regions) and the path from A to C taken by Pio- neer 3 DX with laser sensor, which was in the process of global localization. Figure 5(b) represents the uncertain belief of the robot on point A f rom scratch. N In order to evaluate the benefits of collaborative dis- tributed sensor localization for robot, three different types of experiment are performed using the above de- ployment. The first one is that the robot performs global localization by using the positive information of envi- ronmental cameras, and the field of each camera is not occupied. The second one is to use positiv e and negative information of environmental cameras whose fields of view are not occupied for robot localization. Compared with the second on e, the only difference of the last one is that visual area of camera one is partly occupied. 4.1. No Occlusions and Only Using Positive Information Before robot passes point B (shown in Figure 6(a)), the robot is still highly uncertain about its exact location only depending on its onboard laser sensor. The key event, illustrating the utility of cooperation in localiza- tion, is a detection event. More specifically, the envi- ronmental camera 1 detects the robot as it mo ves through its visual field (see Figure 7). Using the joint detection model described in Section 3, the robot integrates the positive information into its current belief. The effect of this integration on rob ot’s belief is sh own in Figure 6(b). As this figure illustrates, this single incident almost completely resolves the uncertainty in robot’s belief and shortens the time of robot global localization effectively. 4.2. No Occlusions and Using All Information It can be seen from Figure 8(a) that the particles existing in the visibility area of two cameras are disappeared due to using the negative information. After five seconds, the effect of this integration on robot’s belief is shown in Copyright © 2010 SciRes. WSN  Z. W. LIANG ET AL. 354 A B C v (1) v (2) Camera 1 Camera 2 (a) (b) Figure 5. (a) Experimental setup; (b) The sample cloud represents the robot’s belief on point A from scratch. (a) (b) Figure 6. (a) Sample set before passing point B; (b) Achieved localization by integrating the positive information of camera 1. Figure 7 . D ete cti on ev ent of cam era 1 on robot pa ssin g po int B. Figure 8(b). Compared with experiment one, Localiza- tion results obtained with negative detection information into robot global localization are more accurate and pro- vide the ability to localize robot more quickly. 4.3. Occlusions and Using All Information In this experiment, we take into account the camera one being occupied by a people. The camera one applies background subtraction approach described in [23] to detect the occupied areas. The detection result is shown in Figure 4(b). Due to the occlusion, the particles exist- ing in the occupied areas are still reserved (see Figure 9(a)). After ten seconds, the effect of robot’s belief is described in Figure 9(b). From the experiment, it can be seen that though the camera one is partly occupied, the accuracy of the localization is still greatly improved us- ing the negative detection information compared with experiment one. 4.4. Localization Error Analysis In the case of no occlusions, we conducted ten times for the first two experiments and compared the performance to conventional MCL for robot which ignores environ- mental cameras’ detections. To measure the performance o localization we determined the true locations of the t Copyright © 2010 SciRes. WSN  Z. W. LIANG ET AL.355 (a) (b) Figure 8. (a) The particles represent the robot’s belief by integrating the negative information of two cameras; (b) Archived localization after five seconds. (a) (b) Figure 9. (a) Particles set after integrating negative information of two cameras among which the camera 1 is occupied by a people; (b) Archived localization after 10 seconds. robot by performing position tracking and measuring the position of each second. For each second, we then com- puted the estimation erro r at the reference positions. Th e estimation error is measured by the average distance of all samples from the reference position. The results are summarized in Figure 10. The graph plots the estimation error (y-axis) as a function of time (x-axis), averaged over the ten experiments, along with their 95% confi- dence intervals (bars). Firstly, as can be seen in the fig- ure, the quality of position estimation increases faster when using environmental camera detection (positive information) than one without environmental cameras. Please note that the detection event typically took place 14-16 seconds after the start of each experiment. Sec- ondly, as can be also seen in the figure, the quality of position estimation increases much faster when using all information of environmental cameras. Obviously, this experiment is specifically well-suited to demonstrate the advantage of positive and negative information of envi- ronmental cameras in robot global localization. Of course, the performance of our approach in more com- plex situations, especially highly symmetrical and dy- namic environments, is more attractable to solve robot’s global localization. Figure 10. Comparison of localization error using three localization algorithms. Copyright © 2010 SciRes. WSN  Z. W. LIANG ET AL. 356 5. Conclusions In this paper we presented an approach to collaborate distributed sensors for mobile robo t localization that uses a sample-based representation of the state space of a ro- bot, resulting in an extremely efficient and robust tech- nique for global position estimation. Here we use envi- ronmental cameras whose parameters is unknown in ad- vance to determine robot’s position. In order to apply environmental cameras to localize the robot, all parame- ters of each environmental camera are calibrated inde- pendently by robot. During calibration, the robot local- ization is known and can navigate by its onboard laser sensor. Once calibrated, the positive and negative detec- tions of the environmental cameras can be applied to localize robot. Experimental results demonstrate that, to combine all information of environmental cameras, the robot’s belief can reduce its uncertainty significantly. 6. Acknowledgements This work was supported by the Program of Nanjing University of Posts and Telecommunications (Grant No. NY209020). 7. References [1] N. Roy, W. Burgard, D. Fox and S. Thrun, “Coastal Navi- gation: Robot Navigation under Uncertainty in Dynamic Environmen ts,” Proceedings of IEEE International Confer- ence on Robotics and Automation, Detroit, 10-15 May 1999, pp. 35-40. [2] S. Thrun, D. Fox, W. Burgard and F. Dellaert, “Robust Monte Carlo Localization for Mobile Robots,” Artificial Intelligence, Vol. 128, 2001, pp. 99-141. [3] I. J. Cox and G. T. Wilfong, “Autonomous Robot Vehi- cles,” Springer Verlag, Berlin, 1990. [4] T. Sogo, K. Kimoto, H. Ishiguro and T. Ishida, “Mobile Robot Navigation by a Distributed Vision System,” Journal of Japan Robotics Society, Vol. 17, 1999, pp. 1009-1016. [5] K. Morioka, J. H. Lee and H. Hashimoto, “Human Cen- tered Robotics in Intelligent Space,” Proceedings of the 2002 IEEE International Conference on Robotics and Automation, Washington, D.C., 11-15 May 2002, pp. 201 0- 2015. [6] K. Matsumoto, H. Y. Chen, J. Ota and T. Arai, “Auto- matic Parameter Identification for Cooperative Modular Robots,” Proceedings of the 2002 IEEE International Symposium on Assembly and Task Planning, 2002, pp. 282-287. [7] L. P. Kaelbling, A. R. Cassandra and J. A. Kurien, “Act- ing under Uncertainty: Discrete Bayesian Models for Mobile Robot Navigation,” Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Sys- tems, Osaka, 4-8 November 1996, pp. 963-972. [8] W. Burgard, D. Fox, D. Hennig and T. Schmidt, “Esti- mating the Absolute Position of a Mobile Robot Using Position Probability Grids,” Proceedings of the National Conference on Artificial Intelligence, Portland, 4-8 Au- gust 1996, pp. 896-901. [9] D. Fox, W. Burgard and S. Thrun, “Markov Localization for Mobile Ro bots i n Dyna mic E nviron ments, ” Journal of Arti- ficial Intelligence Research, Vol. 11, 1999, pp. 391-427. [10] D. Fox, W. Burgard, F. Dellaert and S. Thrun, “Monte Carlo Localization: Efficient Position Estimation for Mo- bile Robots,” Proceedings of the National Conference on Artificial Intelligence, Orlando, 1999, pp. 343-349. [11] D. B. Rubin. “Using the SIR Algorithm to Simulate Pos- teriordistributions,” In: M. H. Bernardo, K. M. De Groot, D. V. Lindley and A. F. M. Smith, Ed., Bayesian Statis- tics 3, Oxford University Press, Oxford, 1988. [12] A . F. M. Smith and A. E. Gelfand, “Bayesian Statistics Without Tears: A Sampling-Resampling Perspective,” American Statistician, Vol. 46, No. 2, 1992, pp. 84-88. [13] N. J. Gordon, D. J. Salmond and A. F. M. Smith, “Novel Approach to Nonlinear/Non-Gaussian Bayesian State Estima- tion,” IEEE Proceedings, Vol. 140, No. 2, 1993, pp. 1 07-113. [14] M. Isard and A. Blake. “Condensation – Conditionaldensity Propagation for Visual Tracking,” International Journal of Computer Vision, Vol. 29, No.1, 1998, pp. 5-28. [15] K. Kanazawa, D. Koller and S. J. Russell. “Stochastic Simulation Algorithms for Dynamic Probabilistic Net- works,” Proceedings of the 11th Annual Conference on Uncertainty in AI, Montreal, 18-20 August 1995. [16] A. Doucet, “On Sequential Simulation-Based Methods for Bayesian Filtering,” Technical Report CUED/FINFENG/ TR.310, Department of Engineering, University of Cam- bridge, Cambridge, 1998. [17] H. Chen, K. Matsumoto, J. Ota and T. Arai, “Self-Calib- ration of Environmental Camera for Mobile Robot Navi- gation,” Robotics and Autonomous Systems, Vol. 55, No. 3, 2007, pp. 177-190. [18] R. Y. Tsai, “An Efficient and Accurate Camera Calibra- tion Technique for 3D Machine Vision,” Proceedings of IEEE Conference on Computer Vision and Pattern Rec- ognition, Miami Beach, 22-26 June 1986, pp. 364-374. [19] A. Howard and M. J. Mataric, “Localization for Mobile Robot Teams Using Maximum Likelihood Estimation,” Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, 30 Sep- tember-4 October 2002, pp. 434-459. [20] D. Koller and R. Fratkina, “Using Learning for Approxi- mation in Stochastic Processes,” Proceedings of the In- ternational Conference on Machine Learning, Madison, 24-27 July 1998, pp. 287-295. [21] W. Koch, “On Negative Information in Tracking and Sensor Data Fusion: Discussion of Selected Examples,” Copyright © 2010 SciRes. WSN  Z. W. LIANG ET AL. Copyright © 2010 SciRes. WSN 357 7th International Conference on Information Fusion (FU- SION 2004), Stockholm, 28 June-1 July 2004, pp. 91-98. [22] J. Hoffmann, M. Spranger, D. Gohring and M. Jungel, “Making Use of What you don’t See: Negative Information in Markov Localization,” Proceedings of the IEEE/RSJ In- ternational Conference on Intelligent Robots and Systems, Edmonton, 2-6 August 2005, pp. 854-859. [23] A. Mittal and N. Paragios, “Motion-Based Background Subtraction Using Adaptive Kernel Density Estimation,” Proceedings of the 2004 IEEE Computer Society Confer- ence on Computer Vision and Pattern Recognition, Vol. 2, Washington, D.C., 27 June-2 July 2004, pp. 302-309. |