Journal of Signal and Information Processing

Vol. 4, No. 2 (2013), Article ID: 31296, 4 pages, DOI: 10.4236/jsip.2013.42029

Medical Image Fusion Based on Wavelet Multi-Scale Decomposition


1College of Information Engineering, Southwest University of Science and Technology, Mianyang, China; 2College of Life Science and Engineering, Southwest University of Science and Technology, Mianyang, China.

Email: zhuhuiping@swust.edu.cn

Copyright © 2013 Huiping Zhu et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Received January 9th, 2013; revised February 11th, 2013; accepted February 22nd, 2013

Keywords: Wavelet Transform; Image Fusion; Regional Variance Improvement; Fusion Rule

ABSTRACT

This paper describes a method that uses the wavelet transform to decompose source medical images from different modalities into multi-scale information. CT and MRI images are fused with a coefficient fusion rule that combines regional-variance-based selection with weighted averaging of the wavelet coefficients. The results indicate that this method outperforms weighted maximum fusion (WMF), local energy fusion (LEF) and regional variance fusion (RVF) in overall fusion quality, detail preservation and target distortion.

1. Introduction

Medical image fusion generally refers to registering and superimposing images of the same lesion area acquired by two or more different medical imaging devices. This combines complementary information and increases the amount of information available, making clinical diagnosis and treatment more accurate and complete.

The wavelet transform can decompose an image into approximation and detail images that represent different structures, which makes it easy to extract the structural and detail information of the original image [1-3]. Because it allows perfect reconstruction, it has become an active research topic in medical image fusion. The wavelet transform is regarded as a breakthrough beyond Fourier analysis. Known as the "mathematical microscope", it is localized in both the spatial and frequency domains and performs multi-scale analysis through dilation and translation operations. The earliest studies of the wavelet transform in image fusion concerned the fusion of thermal and visible images; medical image fusion is now also a major application area [4-6].

Head CT and MRI images are studied in this paper. Using the multi-wavelet transform with different choices of fusion operator, the weighted maximum fusion rule, the local energy fusion rule and the regional variance fusion rule are presented, together with a rule based on regional-variance selection and weighted-average fusion proposed in this paper. Experimental results show that the proposed rule achieves a good fusion effect.

2. Principle of Wavelet Decomposition and Reconstruction

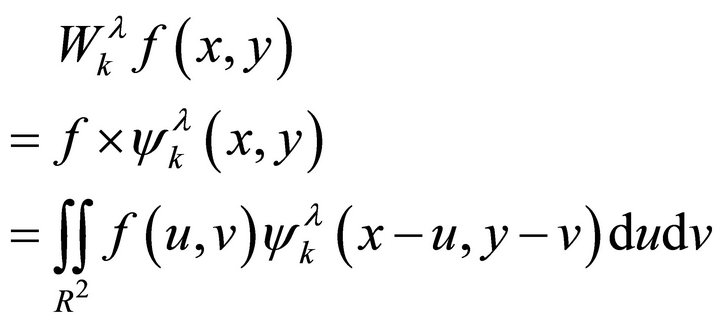

The Mallat algorithm is used to decompose and reconstruct the image signal. Suppose $f(x, y)$ is a two-dimensional image, analysed with a two-dimensional multi-resolution analysis:

(1)

where k denotes the decomposition scale, λ indexes the three high-frequency (horizontal, vertical and diagonal) components, and $\psi^{\lambda}(x, y)$ is the 2D generating wavelet function, constructed from the scaling function and the wavelet function.

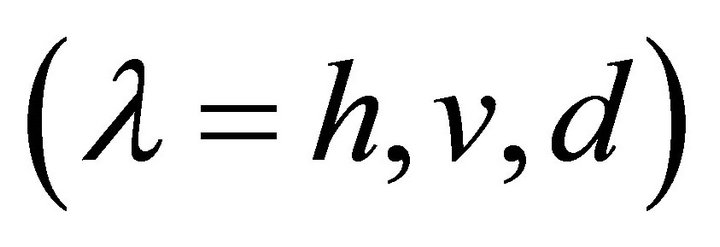

Then, based on the 2D Mallat decomposition algorithm, the wavelet coefficients are defined as follows:

$$
\begin{aligned}
C_{j+1} &= H_r H_c\, C_j, & D^{1}_{j+1} &= H_r G_c\, C_j,\\
D^{2}_{j+1} &= G_r H_c\, C_j, & D^{3}_{j+1} &= G_r G_c\, C_j
\end{aligned}
\tag{2}
$$

Each set of wavelet coefficients can be regarded as a sub-image: $C_{j+1}$ is the low-frequency sub-image, and $D^{1}_{j+1}$, $D^{2}_{j+1}$ and $D^{3}_{j+1}$ correspond to the horizontal, vertical and diagonal high-frequency components of the image. The low-frequency sub-image reflects the average characteristics of the original image, while the high-frequency sub-images reflect its horizontal, vertical and diagonal edge features. The purpose of wavelet decomposition is to separate different image characteristics into different frequency bands. The image is reconstructed by the inverse wavelet transform, with the reconstruction algorithm defined as follows:

$$
C_j = H_r^{*} H_c^{*}\, C_{j+1} + H_r^{*} G_c^{*}\, D^{1}_{j+1} + G_r^{*} H_c^{*}\, D^{2}_{j+1} + G_r^{*} G_c^{*}\, D^{3}_{j+1}
\tag{3}
$$

where $C_j$ is the approximation at decomposition level $j$ ($C_0$ being the original image), $H$ and $G$ are the conjugate low-pass and high-pass filters, the subscripts $r$ and $c$ denote filtering along the rows and the columns, and $H^{*}$ and $G^{*}$ are the conjugate transposes of $H$ and $G$.
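A minimal sketch of one level of the 2D Mallat decomposition and its inverse, assuming the Haar filters (the paper does not state which wavelet it uses) and an image with even height and width. The function names `dwt2` and `idwt2` are hypothetical. Rows are filtered first with the low-pass filter H and the high-pass filter G, then the columns; the inverse undoes these steps with the transposed filters, so the round trip recovers the input up to floating-point error.

```python
import math

SQRT2 = math.sqrt(2.0)


def analyze_1d(v):
    """Haar analysis of one sequence: low-pass (H) and high-pass (G) outputs, downsampled by 2."""
    half = len(v) // 2
    low = [(v[2 * i] + v[2 * i + 1]) / SQRT2 for i in range(half)]
    high = [(v[2 * i] - v[2 * i + 1]) / SQRT2 for i in range(half)]
    return low, high


def synthesize_1d(low, high):
    """Haar synthesis: upsample and apply the transposed filters; inverts analyze_1d."""
    out = []
    for lo, hi in zip(low, high):
        out.extend([(lo + hi) / SQRT2, (lo - hi) / SQRT2])
    return out


def transpose(m):
    return [list(col) for col in zip(*m)]


def analyze_columns(m):
    """Apply analyze_1d down every column; returns (low-pass rows, high-pass rows)."""
    pairs = [analyze_1d(col) for col in transpose(m)]
    return transpose([lo for lo, _ in pairs]), transpose([hi for _, hi in pairs])


def synthesize_columns(low, high):
    """Inverse of analyze_columns."""
    cols = [synthesize_1d(lo, hi) for lo, hi in zip(transpose(low), transpose(high))]
    return transpose(cols)


def dwt2(img):
    """One decomposition level on an image with even height and width.

    Returns (C, D1, D2, D3): C = H on rows, H on columns (approximation);
    D1 = H on rows, G on columns; D2 = G on rows, H on columns; D3 = G on both.
    """
    rows = [analyze_1d(r) for r in img]
    row_low = [lo for lo, _ in rows]
    row_high = [hi for _, hi in rows]
    c, d1 = analyze_columns(row_low)
    d2, d3 = analyze_columns(row_high)
    return c, d1, d2, d3


def idwt2(c, d1, d2, d3):
    """Inverse of dwt2: undo the column filtering, then the row filtering."""
    row_low = synthesize_columns(c, d1)
    row_high = synthesize_columns(d2, d3)
    return [synthesize_1d(lo, hi) for lo, hi in zip(row_low, row_high)]
```

Because the Haar filters are orthonormal, `idwt2(*dwt2(img))` returns `img` up to rounding, and each of the four output bands has half the height and width of the input.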

3. Medical Image Fusion Method

Slice images of the same body part acquired by different medical devices have similar low-frequency components but different high-frequency components. Because fusing the high-frequency components is the key to medical image fusion, it is important to treat the high- and low-frequency components separately in image processing, applying different fusion operators and fusion rules to each.

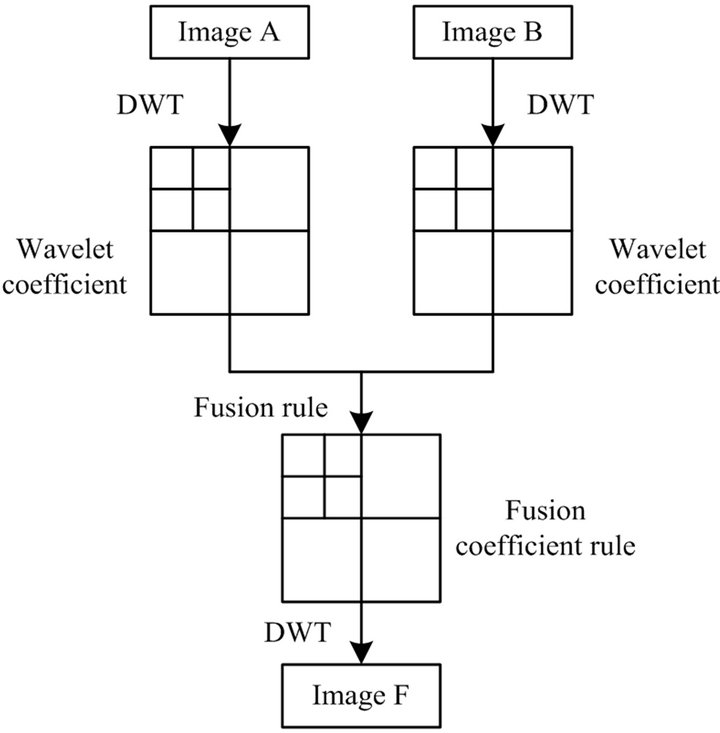

The process of image fusion based on wavelet multi-scale decomposition is shown in Figure 1. The fusion process is as follows:

1) Decompose source images A and B by a K-level wavelet decomposition, obtaining 3K + 1 sub-images from each.

Figure 1. Image fusion process based on wavelet transform.
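The count of 3K + 1 sub-images follows from recursing on the approximation band: each level replaces one approximation with a new approximation plus three detail bands. The sketch below illustrates this with an orthonormal Haar step written directly on 2 × 2 blocks (equivalent to filtering the rows and then the columns with the Haar filters). The Haar choice, the coefficient layout and all function names are assumptions for illustration, and the image size must be divisible by 2^K.

```python
def haar_level(img):
    """One orthonormal 2D Haar level computed on 2 x 2 blocks.

    Returns (C, D1, D2, D3), each half the height and width of img.
    """
    h, w = len(img) // 2, len(img[0]) // 2
    c = [[0.0] * w for _ in range(h)]
    d1 = [[0.0] * w for _ in range(h)]
    d2 = [[0.0] * w for _ in range(h)]
    d3 = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            a, b = img[2 * i][2 * j], img[2 * i][2 * j + 1]
            p, q = img[2 * i + 1][2 * j], img[2 * i + 1][2 * j + 1]
            c[i][j] = (a + b + p + q) / 2.0   # low-pass on rows and columns
            d1[i][j] = (a + b - p - q) / 2.0  # low-pass on rows, high-pass on columns
            d2[i][j] = (a - b + p - q) / 2.0  # high-pass on rows, low-pass on columns
            d3[i][j] = (a - b - p + q) / 2.0  # high-pass on both
    return c, d1, d2, d3


def wavedec2(img, levels):
    """K-level decomposition: [C_K, (D1, D2, D3) at level K, ..., (D1, D2, D3) at level 1]."""
    details = []
    approx = img
    for _ in range(levels):
        approx, d1, d2, d3 = haar_level(approx)
        details.append((d1, d2, d3))
    return [approx] + details[::-1]


def count_subimages(coeffs):
    """One approximation plus three detail sub-images per level: 3K + 1."""
    return 1 + 3 * (len(coeffs) - 1)
```

For an 8 × 8 image and K = 3, `wavedec2` yields one 1 × 1 approximation and nine detail bands, that is, 10 sub-images.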

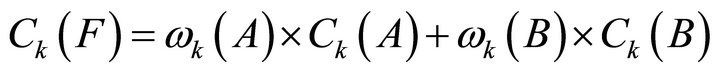

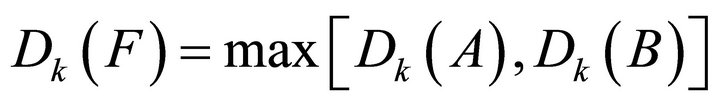

3.1. Weighted Maximum-Fusion Rule

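After the wavelet transform, the low-frequency coefficient matrix of the fused image is obtained as a weighted average of the corresponding low-frequency coefficients of the images to be fused, while each high-frequency coefficient, in each direction, is obtained by selecting the larger of the corresponding coefficients. The fused image is then obtained by applying the inverse transform to the fused wavelet coefficient matrices. The corresponding algorithm is:

$$
C_F(x,y) = \alpha\, C_A(x,y) + \beta\, C_B(x,y)
\tag{4}
$$

$$
D^{\lambda}_{F,j}(x,y) =
\begin{cases}
D^{\lambda}_{A,j}(x,y), & \lvert D^{\lambda}_{A,j}(x,y)\rvert \ge \lvert D^{\lambda}_{B,j}(x,y)\rvert \\
D^{\lambda}_{B,j}(x,y), & \text{otherwise}
\end{cases}
\tag{5}
$$

where $C_A$, $C_B$ and $C_F$ are the low-frequency coefficients of images A, B and the fused image F, $D^{\lambda}_{A,j}$, $D^{\lambda}_{B,j}$ and $D^{\lambda}_{F,j}$ are their high-frequency coefficients in direction $\lambda$ at level $j$, and $\alpha$ and $\beta$ are the weighting coefficients.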
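A sketch of the weighted maximum rule of Eqs. (4) and (5) on plain nested lists. It assumes equal weights (0.5 and 0.5) by default and compares high-frequency coefficients by absolute value, a common convention for "selecting the larger value" in wavelet fusion. The coefficient layout `[C, (D1, D2, D3), ...]` and the function names are hypothetical.

```python
def fuse_low_weighted(ca, cb, alpha=0.5, beta=0.5):
    """Weighted average of two low-frequency coefficient matrices."""
    return [[alpha * a + beta * b for a, b in zip(ra, rb)] for ra, rb in zip(ca, cb)]


def fuse_high_max_abs(da, db):
    """Keep, at each position, the coefficient with the larger absolute value (ties go to A)."""
    return [[a if abs(a) >= abs(b) else b for a, b in zip(ra, rb)] for ra, rb in zip(da, db)]


def fuse_wmf(coeffs_a, coeffs_b, alpha=0.5, beta=0.5):
    """Apply both rules to coefficient lists laid out as [C, (D1, D2, D3), ...] (hypothetical layout)."""
    fused = [fuse_low_weighted(coeffs_a[0], coeffs_b[0], alpha, beta)]
    for bands_a, bands_b in zip(coeffs_a[1:], coeffs_b[1:]):
        fused.append(tuple(fuse_high_max_abs(da, db) for da, db in zip(bands_a, bands_b)))
    return fused
```

The fused list keeps the same layout as its inputs, so it can be passed level by level to an inverse transform to obtain the fused image.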

3.2. Local Energy-Fusion Rule

Wavelet fusion based on local energy uses different local feature operators for the high-frequency and low-frequency parts. In the high-frequency part, the local energy of the image serves as the fusion operator and the match degree of the local energies serves as the fusion criterion: after the match degree is compared with a given threshold and the local energies of the images to be fused are compared, an extremum (selection) fusion method is adopted. In the low-frequency part, a weighted local mean method is used.

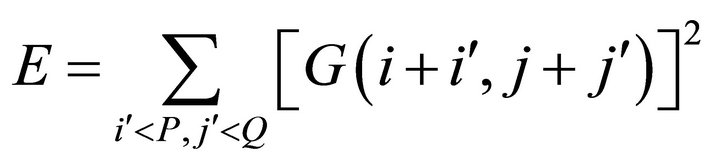

The local energy $E$ and the local mean $\mu$ are local characteristics of the image. The local energy of the area centred at $(x, y)$ in image $G$ is defined as follows:

$$
E_G(x,y) = \sum_{m=-(P-1)/2}^{(P-1)/2} \; \sum_{n=-(Q-1)/2}^{(Q-1)/2} \big[G(x+m,\, y+n)\big]^2
\tag{6}
$$

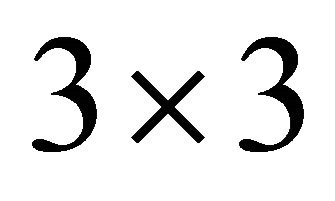

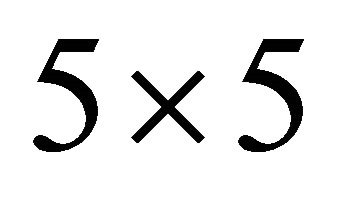

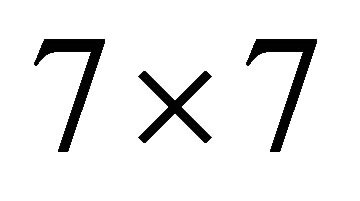

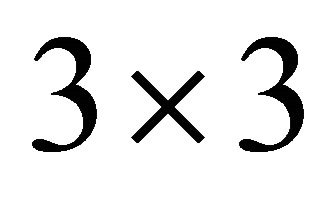

P and Q define the size of the local area (the window around the centre pixel).
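A sketch of the local energy over a P × Q window, assuming the energy is the unweighted sum of squared values, that P and Q are odd, and that positions outside the sub-image contribute zero. `local_energy` is a hypothetical name; it can be applied to any high-frequency sub-image stored as nested lists.

```python
def local_energy(g, p=3, q=3):
    """Sum of squared values of g in a P x Q window centred at each (x, y).

    x indexes rows and y columns; P and Q are assumed odd, and positions
    outside the array contribute nothing (equivalent to zero padding).
    """
    rows, cols = len(g), len(g[0])
    hp, hq = p // 2, q // 2
    energy = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            energy[x][y] = float(sum(
                g[i][j] ** 2
                for i in range(max(0, x - hp), min(rows, x + hp + 1))
                for j in range(max(0, y - hq), min(cols, y + hq + 1))))
    return energy
```

For example, in a 3 × 3 sub-image whose only non-zero entries are 1 at the top-left and 2 at the bottom-right, the centre pixel's 3 × 3 window sees both, giving an energy of 1 + 4 = 5.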

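The local mean $\mu_G(x, y)$ is defined as follows:

$$
\mu_G(x,y) = \frac{1}{PQ} \sum_{m=-(P-1)/2}^{(P-1)/2} \; \sum_{n=-(Q-1)/2}^{(Q-1)/2} G(x+m,\, y+n)
\tag{7}
$$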
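A corresponding sketch of the local mean, assuming it is the plain average over the P × Q window. Near the borders this version averages only the pixels that fall inside the image, which is an assumption rather than something the paper specifies. `local_mean` is a hypothetical name.

```python
def local_mean(g, p=3, q=3):
    """Mean of g over a P x Q window centred at each (x, y); x indexes rows.

    Near the borders only in-image pixels are averaged (an assumption).
    """
    rows, cols = len(g), len(g[0])
    hp, hq = p // 2, q // 2
    out = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            window = [g[i][j]
                      for i in range(max(0, x - hp), min(rows, x + hp + 1))
                      for j in range(max(0, y - hq), min(cols, y + hq + 1))]
            out[x][y] = sum(window) / len(window)
    return out
```

On the 3 × 3 image with rows 1 2 3, 4 5 6, 7 8 9, the centre value is 45 / 9 = 5.0 and the top-left corner averages its four in-image neighbours to (1 + 2 + 4 + 5) / 4 = 3.0.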

The match degree of the corresponding local energies in the two images is defined as follows:

(8)
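The paper's exact match-degree formula and threshold value are not recoverable here, so the sketch below swaps in the well-known match measure of Burt and Kolczynski, 2 Σ A·B / (E_A + E_B) over the window, together with their selection-or-weighting rule. Below the threshold the coefficient with the larger local energy is kept (the extremum step); above it the two coefficients are averaged with weights that favour the higher-energy image. The threshold of 0.7, the 3 × 3 window and all names are hypothetical.

```python
def _window(rows, cols, x, y, p, q):
    """In-image (i, j) positions of the P x Q window centred at (x, y)."""
    hp, hq = p // 2, q // 2
    return [(i, j)
            for i in range(max(0, x - hp), min(rows, x + hp + 1))
            for j in range(max(0, y - hq), min(cols, y + hq + 1))]


def fuse_lef_high(da, db, threshold=0.7, p=3, q=3):
    """Fuse two high-frequency sub-images with a Burt-Kolczynski style match/energy rule."""
    rows, cols = len(da), len(da[0])
    fused = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            win = _window(rows, cols, x, y, p, q)
            ea = sum(da[i][j] ** 2 for i, j in win)  # local energy of A
            eb = sum(db[i][j] ** 2 for i, j in win)  # local energy of B
            cross = sum(da[i][j] * db[i][j] for i, j in win)
            # Match degree lies in [-1, 1]; treat two all-zero windows as a perfect match.
            match = 2.0 * cross / (ea + eb) if ea + eb > 0 else 1.0
            a, b = da[x][y], db[x][y]
            if match < threshold:
                # Poor match: extremum step, keep the higher-energy coefficient.
                fused[x][y] = a if ea >= eb else b
            else:
                # Good match: weighted average leaning towards the higher-energy image.
                w_min = 0.5 - 0.5 * (1.0 - match) / (1.0 - threshold)
                w_max = 1.0 - w_min
                fused[x][y] = w_max * a + w_min * b if ea >= eb else w_min * a + w_max * b
    return fused
```

The weights move continuously from pure selection (w_min = 0 at match = threshold) to an equal average (w_min = 0.5 at match = 1), which avoids abrupt switching between the two regimes.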

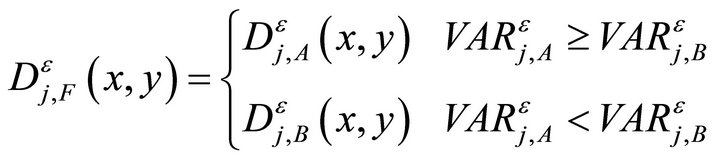

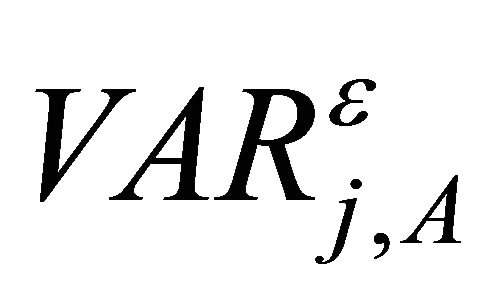

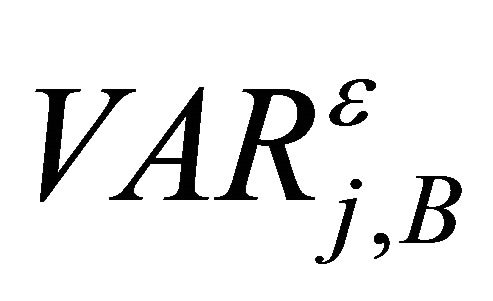

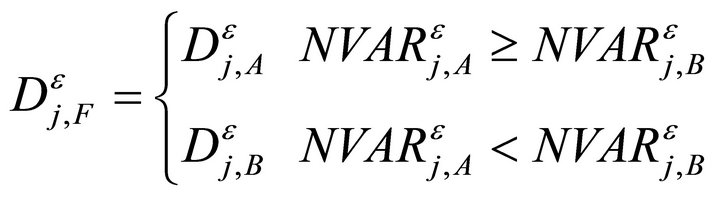

3.3. Regional Variance Fusion Rule

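The regional variance fusion rule is as follows: after the multi-wavelet transform, each wavelet coefficient of the fused image F is taken from whichever of images A and B has the larger variance over a local area centred on the pixel currently being processed:

$$
D^{\lambda}_{F,j}(x,y) =
\begin{cases}
D^{\lambda}_{A,j}(x,y), & \mathrm{VAR}^{\lambda}_{A,j}(x,y) \ge \mathrm{VAR}^{\lambda}_{B,j}(x,y) \\
D^{\lambda}_{B,j}(x,y), & \text{otherwise}
\end{cases}
\tag{9}
$$

where $D^{\lambda}_{A,j}$, $D^{\lambda}_{B,j}$ and $D^{\lambda}_{F,j}$ are the wavelet coefficients of A, B and F in direction $\lambda$ at decomposition level $j = 1, \ldots, N$, and $\mathrm{VAR}^{\lambda}_{A,j}$ and $\mathrm{VAR}^{\lambda}_{B,j}$ are the local variances of source images A and B in direction $\lambda$ at level $j$.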

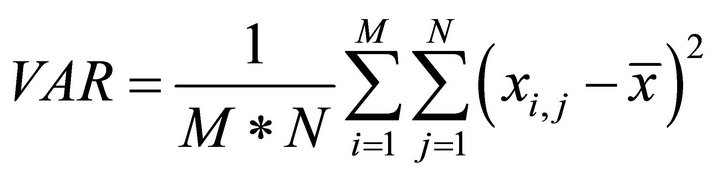

VAR is defined as follows:

$$
\mathrm{VAR} = \frac{1}{MN} \sum_{m=1}^{M} \sum_{n=1}^{N} \big[x(m,n) - \bar{x}\big]^2
\tag{10}
$$

where M and N are the numbers of rows and columns of the local area, $x(m, n)$ is the gray value of a pixel in the current local area, and $\bar{x}$ is the average gray value of the pixels in the current local area.
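A sketch of Eqs. (9) and (10) on one pair of high-frequency sub-images. `local_variance` computes the population variance (dividing by the number of pixels in the window) over an M × N window, computed here on the sub-band coefficients themselves, with the window clipped at the image border as an assumption. `fuse_rvf` then keeps the coefficient from whichever image has the larger local variance. Both names are hypothetical.

```python
def local_variance(g, win_rows=3, win_cols=3):
    """Population variance of g over a win_rows x win_cols window centred at each pixel."""
    rows, cols = len(g), len(g[0])
    hr, hc = win_rows // 2, win_cols // 2
    out = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            window = [g[i][j]
                      for i in range(max(0, x - hr), min(rows, x + hr + 1))
                      for j in range(max(0, y - hc), min(cols, y + hc + 1))]
            mean = sum(window) / len(window)
            out[x][y] = sum((v - mean) ** 2 for v in window) / len(window)
    return out


def fuse_rvf(da, db, win_rows=3, win_cols=3):
    """Keep, at each pixel, the coefficient whose local variance is larger (ties go to A)."""
    va = local_variance(da, win_rows, win_cols)
    vb = local_variance(db, win_rows, win_cols)
    return [[a if sa >= sb else b for a, b, sa, sb in zip(ra, rb, rva, rvb)]
            for ra, rb, rva, rvb in zip(da, db, va, vb)]
```

As a worked value, a 3 × 3 window over the 2 × 2 block 1, 2, 3, 4 sees all four values, whose mean is 2.5 and variance (2.25 + 0.25 + 0.25 + 2.25) / 4 = 1.25.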

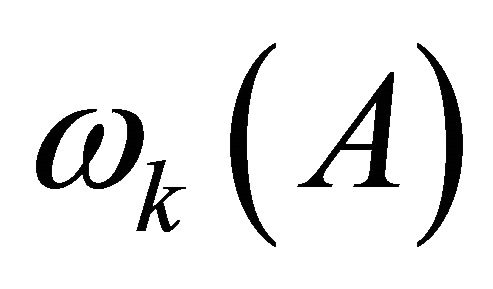

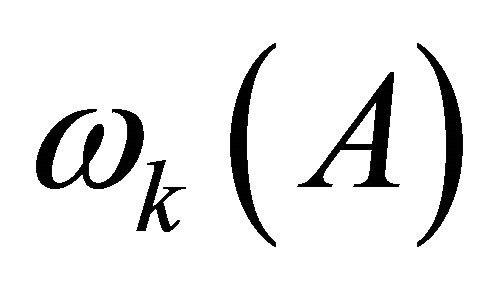

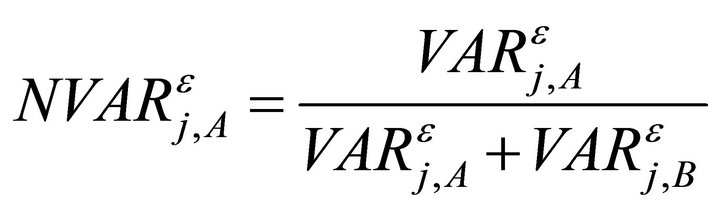

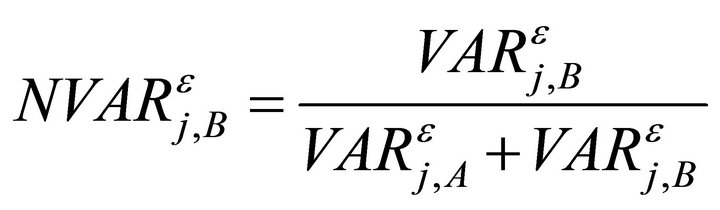

3.4. Rule Based on Regional Variance and Weighted Average Fusion

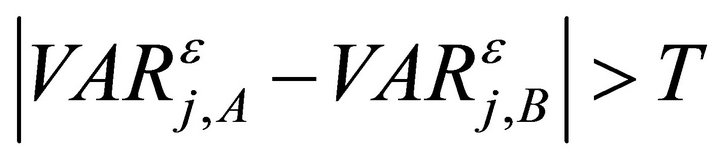

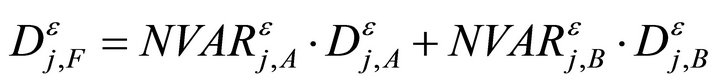

The regional variance fusion rule takes the coefficient of the pixel with the larger local variance as the fused coefficient, so one image's coefficient simply overwrites the other's. When the local variances of corresponding pixels in the two images differ only slightly, some useful information is lost and the fused image is distorted. To address this, this paper presents an improved fusion method based on local variance. The fusion rules are as follows:

First, obtain the high-frequency sub-images of source images A and B at decomposition levels $j = 1, \ldots, N$; then, for each direction $\lambda$, record the local variance of each pixel as $\mathrm{VAR}^{\lambda}_{A,j}$ and $\mathrm{VAR}^{\lambda}_{B,j}$.

The normalization functions are as follows:

(11)

(12)
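The exact normalization functions are not recoverable from the text. One plausible form, assumed here, divides each local variance by the sum of the two, so the normalized values add up to 1 and the larger one always lies between 0.5 and 1, which is consistent with the stated range for the threshold T. The function names are hypothetical.

```python
def normalize_variances(var_a, var_b):
    """Assumed normalization: each variance divided by their sum (0.5/0.5 if both are zero)."""
    total = var_a + var_b
    if total <= 0:
        return 0.5, 0.5
    return var_a / total, var_b / total


def normalize_variance_maps(map_a, map_b):
    """Apply normalize_variances pixel by pixel to two local-variance maps."""
    pairs = [[normalize_variances(a, b) for a, b in zip(ra, rb)] for ra, rb in zip(map_a, map_b)]
    return ([[p[0] for p in row] for row in pairs],
            [[p[1] for p in row] for row in pairs])
```

With this form, local variances of 3.0 and 1.0 normalize to 0.75 and 0.25, so any T in the 0.5 to 1 range separates pixels where one image clearly dominates from pixels where the two are comparable.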

Define T as the threshold, generally between 0.5 and 1.

If

Then

(13)

If

Then

(14)

On each decomposition level and direction, when the normalized local variances of images A and B differ significantly, indicating that one image carries more detail information than the other, the coefficient of the pixel with the larger local variance is taken as the fused wavelet coefficient. When the normalized local variances of the two images are close, both images are rich in detail, and a weighted-average fusion operator is used to determine the fused wavelet coefficient. This keeps the detail features of the image signal clear, avoids loss of information, reduces noise and preserves consistency.
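The complete improved rule can be sketched under stated assumptions: local variances over a window, normalization by their sum, and a threshold T (0.7 here, an arbitrary value in the stated 0.5 to 1 range). If either normalized variance reaches T, that image's coefficient is selected; otherwise the coefficients are averaged with the normalized variances as weights. The exact conditions are assumptions, since they are not recoverable from the text, and `fuse_rv_weighted` is a hypothetical name.

```python
def local_variance(g, win_rows=3, win_cols=3):
    """Population variance of g over a window centred at each pixel (clipped at borders)."""
    rows, cols = len(g), len(g[0])
    hr, hc = win_rows // 2, win_cols // 2
    out = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            window = [g[i][j]
                      for i in range(max(0, x - hr), min(rows, x + hr + 1))
                      for j in range(max(0, y - hc), min(cols, y + hc + 1))]
            mean = sum(window) / len(window)
            out[x][y] = sum((v - mean) ** 2 for v in window) / len(window)
    return out


def fuse_rv_weighted(da, db, threshold=0.7, win_rows=3, win_cols=3):
    """Select when one normalized variance reaches the threshold, else weight-average."""
    va = local_variance(da, win_rows, win_cols)
    vb = local_variance(db, win_rows, win_cols)
    fused = []
    for ra, rb, rva, rvb in zip(da, db, va, vb):
        row = []
        for a, b, sa, sb in zip(ra, rb, rva, rvb):
            total = sa + sb
            # Normalized variances (assumed form); equal weights if both are zero.
            na, nb = (sa / total, sb / total) if total > 0 else (0.5, 0.5)
            if na >= threshold:
                row.append(a)  # A clearly carries more detail here
            elif nb >= threshold:
                row.append(b)  # B clearly carries more detail here
            else:
                row.append(na * a + nb * b)  # comparable detail: weighted average
        fused.append(row)
    return fused
```

When both sub-images have the same local variance, each normalized value is 0.5, below T, so the rule averages them equally instead of discarding one, which is the case the plain regional variance rule handles poorly.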

4. Experimental Results

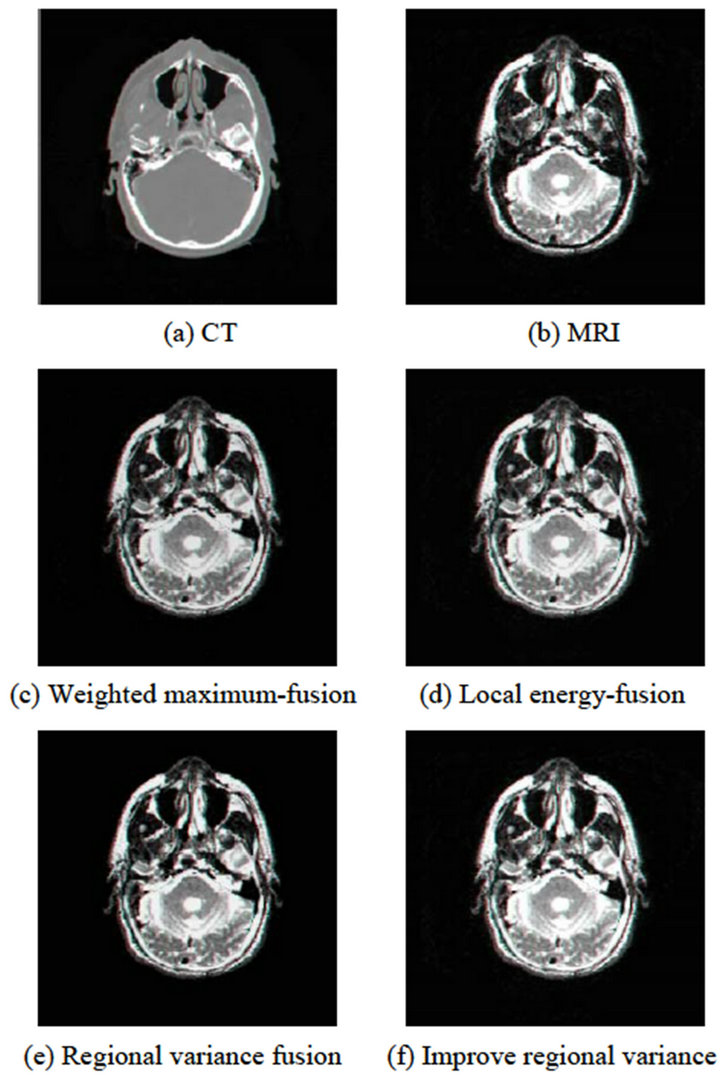

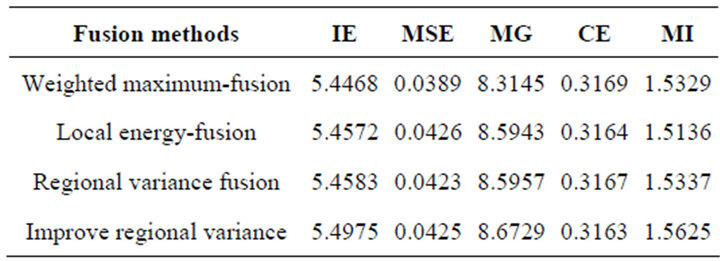

The CT and MRI images used in this experiment have already been registered. The fusion results are shown in Figure 2. Information entropy (IE), mean square deviation (MSE), average gradient (MG), cross-entropy (CE) and mutual information (MI) are used for a comprehensive quantitative evaluation. The evaluation principle is that, within the same set of fusion experiments, a fusion method performs better the larger its IE, MSE, MG and MI and the smaller its CE.

Figure 2. The fusion results of CT and MRI images.

Table 1. Comparison of four fusion methods.
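A sketch of the three no-reference measures for an 8-bit gray image stored as nested lists of integers. It assumes IE is the Shannon entropy (in bits) of the gray-level histogram, that the "mean square deviation" is the standard deviation of the gray levels (consistent with larger values being better), and that the average gradient uses forward differences, averaging sqrt((dx^2 + dy^2) / 2) over the image. These are common definitions in the fusion literature, not formulas taken from the paper, and the function names are hypothetical.

```python
import math
from collections import Counter


def _pixels(img):
    return [v for row in img for v in row]


def information_entropy(img):
    """IE: Shannon entropy (bits) of the gray-level histogram."""
    pixels = _pixels(img)
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())


def standard_deviation(img):
    """Spread of gray levels around the mean (read here as the 'mean square deviation')."""
    pixels = _pixels(img)
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((v - mean) ** 2 for v in pixels) / len(pixels))


def average_gradient(img):
    """AG: mean of sqrt((dx^2 + dy^2) / 2) using forward differences."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for i in range(rows - 1):
        for j in range(cols - 1):
            dx = img[i][j + 1] - img[i][j]
            dy = img[i + 1][j] - img[i][j]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))
```

For the 2 × 2 checkerboard with rows 0 255 and 255 0, two equally frequent gray levels give an entropy of exactly 1 bit.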

The performance results of the different methods are shown in Table 1. Figure 2 and Table 1 show that the best result is obtained by the fusion method based on regional-variance selection and weighted averaging of wavelet coefficients: the fused image contains more detail and more information, target-edge distortion is avoided, and clarity is improved.
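The reference-based measures in Table 1 can be sketched in the same style. Mutual information is computed from the joint gray-level histogram of a source image and the fused image, and the fusion score is assumed to be MI(A, F) + MI(B, F). Cross-entropy is taken in the form common in the fusion literature, the sum of p log2(p / q) over the gray-level histograms p of a source and q of the fused image, averaged over the two sources; a small epsilon guards against gray levels absent from the fused image. These combinations and the function names are assumptions.

```python
import math
from collections import Counter


def _histogram(img):
    pixels = [v for row in img for v in row]
    n = len(pixels)
    return {level: count / n for level, count in Counter(pixels).items()}


def mutual_information(src, fused):
    """MI (bits) between two images from their joint gray-level histogram."""
    pairs = [(s, f) for rs, rf in zip(src, fused) for s, f in zip(rs, rf)]
    n = len(pairs)
    joint = Counter(pairs)
    p_src = Counter(s for s, _ in pairs)
    p_fus = Counter(f for _, f in pairs)
    mi = 0.0
    for (s, f), count in joint.items():
        p_sf = count / n
        mi += p_sf * math.log2(p_sf / ((p_src[s] / n) * (p_fus[f] / n)))
    return mi


def cross_entropy(src, fused, eps=1e-12):
    """Sum of p * log2(p / q) over gray levels; eps stands in for levels missing from fused."""
    p, q = _histogram(src), _histogram(fused)
    return sum(pv * math.log2(pv / q.get(level, eps)) for level, pv in p.items())


def fusion_mi(a, b, fused):
    """Assumed overall score: information the fused image shares with both sources."""
    return mutual_information(a, fused) + mutual_information(b, fused)


def fusion_ce(a, b, fused):
    """Assumed overall score: mean cross-entropy from each source to the fused image."""
    return (cross_entropy(a, fused) + cross_entropy(b, fused)) / 2.0
```

A fused image identical to a source shares all of that source's entropy with it and has zero cross-entropy to it, while a constant fused image shares no information with any source.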

5. Conclusion

Wavelet decomposition provides a multi-scale, multi-resolution basis for image fusion. The fusion method proposed in this paper, based on regional-variance selection and weighted averaging of wavelet coefficients, outperforms WMF, LEF and RVF in fusion quality, detail preservation and target distortion.

REFERENCES

- S. G. Mallat, "A Theory for Multiresolution Signal Decomposition: The Wavelet Representation," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 11, No. 7, 1989, pp. 674-693. doi:10.1109/34.192463

- T. N. T. Goodman and S. L. Lee, "Wavelets of Multiplicity r," Transactions of the American Mathematical Society, Vol. 342, No. 1, 1994, pp. 307-324.

- H. Li, B. S. Manjunath and S. K. Mitra, “Multisensor Image Fusion Using the Wavelet Transform,” Graphical Models and Image Processing, Vol. 57, No. 3, 1995, pp. 235-245. doi:10.1006/gmip.1995.1022

- C. Y. Wen and J. K. Chen, “Multi-Resolution Image Fusion Technique and Its Application to Forensic Science,” Forensic Science International, Vol. 140, No. 2, 2004, pp. 217-232. doi:10.1016/j.forsciint.2003.11.034

- W. J. Wang, P. Tang and C. G. Zhu, “A Wavelet Transform Based Image Fusion Method,” Journal of Image and Graphics, Vol. 6A, No. 11, 2001, pp. 1130-1134.

- G. Piella, “New Quality Measures for Image Fusion,” The 7th International Conference on Information Fusion, 2004, pp. 542-546.