Open Journal of Philosophy 2012. Vol.2, No.1, 32-37
Published Online February 2012 in SciRes (http://www.SciRP.org/journal/ojpp)
http://dx.doi.org/10.4236/ojpp.2012.21005
Copyright © 2012 SciRes. 32

Free Will and Advances in Cognitive Science

Leonid Perlovsky1,2
1Harvard University, Charlestown, USA
2AFRL, WPAFB, USA
Email: leonid@seas.harvard.edu

Received August 10th, 2011; revised September 20th, 2011; accepted September 25th, 2011

Freedom of will is fundamental to morality, to the intuition of self, and to the normal functioning of society. However, science does not provide a clear logical foundation for this idea. This paper considers the fundamental argument against free will, so-called reductionism, and why it seems to force a logical choice of dualism over monism. The paper then summarizes unexpected conclusions from recent discoveries in cognitive science. Classical logic turns out not to be a fundamental mechanism of the mind; it is replaced by dynamic logic. Mathematical and experimental evidence is considered at a conceptual level. Dynamic logic counters the logical arguments for reductionism. The contemporary science of mind is not reducible; free will can be scientifically accepted along with scientific monism.

Keywords: Free Will; Cognitive Science; Philosophy; Logic; Dynamic Logic; Reductionism; Mind

Introduction

Most contemporary philosophers and scientists do not believe that freedom of will exists (Bering, 2010). Scientific arguments against the reality of free will can be summarized as follows (Wikipedia, 2010a). The scientific method is fundamentally monistic: spiritual events, states, and processes (the mind) are to be explained by the laws of matter, from material states and processes in the brain. The basic premise of science is causality: future states are determined by current states according to the laws of physics. If physical laws are deterministic, there is no free will, since determinism is the opposite of freedom.
If physical laws contain probabilistic elements or quantum indeterminacy, there is no free will either, since indeterminism and randomness are also the opposite of freedom (Lim, 2008; Bielfeldt, 2009).

Free will, however, has a fundamental position in many cultures. Morality and judicial systems are based on free will. Denying free will threatens to destroy the entire social fabric of society (Rychlak, 1983; Glassman, 1983). Free will is also a fundamental intuition of self. Most people on Earth would rather part with science than with the idea of free will (Bering, 2010). Most people, including many philosophers and scientists, refuse to accept that their decisions are governed by the same laws of nature as a rock by the roadside or a leaf blown by the wind (e.g., Libet, 1999; Velmans, 2003). Yet reconciling scientific causality with freedom of will remains an unsolved problem.

Monism and Dualism

The above arguments assume scientific monism: spiritual states of the mind are produced by material processes in the brain. It seems that scientific monism, which accepts the unity of matter and spirit, fundamentally contradicts freedom of will. This monist position denying free will was accepted by B. Spinoza, among many great thinkers (Wikipedia, 2010b). Other great thinkers could not accept this conclusion; they rejected monism and chose dualism: spiritual and material substances are different in principle and governed by different laws. Among the dualists are R. Descartes (Wikipedia, 2010c) and D. Chalmers (Wikipedia, 2010d).

Rejecting monism and accepting dualism (of matter and spirit) also contradicts the fundamentals of our culture. The dualistic position attempts to separate the laws of spirit and matter; however, there is no scientific law to accomplish this. Therefore dualism cannot serve as a foundation of science.
The only basis for separating the laws of spirit and matter, it seems, is to accept as material whatever is currently explained by science, and to declare spiritual whatever seems unexplainable. Any hypothesis attempting such a separation of spirit and matter at any moment in history would have been falsified by science many times over. The monistic view that spirit and matter are of the same substance is not only the basic foundation of science; it also corresponds to the fundamental theological positions of most world religions. The set of issues involving free will, monism and dualism, science, religions, and cultural traditions is difficult to reconcile (e.g., Chalmers, 1995; Velmans, 2008). The main difficulty is sometimes summarized as reductionism: if the highest spiritual values could be scientifically reduced to biological explanations, they would eventually be reduced to chemistry and then to physics, and there would be no difference between the laws governing the mind and spiritual values on the one hand, and a leaf blown by the wind on the other.

Reductionism and Logic

Physical biology has explained the molecular foundations of life, DNA and proteins. Cognitive science has explained many mental processes by material processes in the brain. Yet molecular biology is far from mathematical models relating processes in the mind to DNA and proteins. Cognitive science only approaches some foundations of perception and the simplest actions (Perlovsky, 2006a). Nobody has yet been able to scientifically reduce the highest spiritual processes and values to the laws of physics. All the reductionist arguments and difficulties of free will discussed above, when applied to the highest spiritual processes, have not been based on mathematical predictive models with experimentally verifiable predictions, which are the essence and hallmark of science. All of these arguments and doubts were based on logical arguments.
Logic has been considered a fundamental aspect of science since its very beginning, and fundamental to human reason for more than two thousand years. Yet no scientist will consider a logical argument sufficient in the absence of predictive scientific models confirmed by experimental observations. In the 1930s the mathematical logician Gödel (1934) discovered fundamental deficiencies of logic. These deficiencies are well known to scientists and are considered among the most fundamental mathematical results of the twentieth century. Nevertheless, logical arguments continue to exert a powerful influence on scientists and non-scientists alike.

Let me repeat that most scientists do not believe in free will. This rejection of fundamental cultural values and of an intuition of self, without scientific evidence, seems a glaring contradiction. Surely there must be equally fundamental psychological reasons, most likely acting unconsciously. The rest of the paper analyzes these reasons and demonstrates that the doubts discussed above are indeed unfounded. To understand the new arguments we will look into recent developments in cognitive science and in mathematical models of the mind.

Recent Cognitive Theories and Data

Attempts to develop mathematical models of the mind (computational intelligence) have for decades encountered irresolvable problems related to computational complexity. All the approaches developed, including artificial intelligence, pattern recognition, neural networks, fuzzy logic and others, faced a complexity of computations in which the number of operations exceeded the number of all elementary interactions in the universe (Bellman, 1961; Minsky, 1975; Winston, 1984; Perlovsky, 1998, 2001, 2006a, 2006b).
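The scale of this combinatorial complexity can be illustrated with simple arithmetic. The following Python sketch assumes, as a simplification not stated in the paper, that recognition must consider every way of assigning N sensory signals to M candidate representations, which gives M^N alternatives. The function name `association_count`, the sizes (10 models, 100 signals), and the yardstick of about 10^80 atoms in the observable universe are illustrative assumptions, not figures from the cited works.

```python
import math


def association_count(num_models: int, num_signals: int) -> int:
    """Count the ways to assign each bottom-up signal to one candidate
    model (top-down representation): num_models ** num_signals.

    Hypothetical simplification, for illustration only.
    """
    return num_models ** num_signals


# Illustrative sizes (assumptions): 10 candidate models, 100 signals.
count = association_count(num_models=10, num_signals=100)

# Yardstick (assumption): about 10**80, a commonly quoted order-of-magnitude
# estimate of the number of atoms in the observable universe.
ATOMS_IN_UNIVERSE = 10 ** 80

print(f"about 10^{math.log10(count):.0f} associations")
print("exceeds yardstick:", count > ATOMS_IN_UNIVERSE)
```

Even these modest sizes give about 10^100 alternatives, far beyond the yardstick, which illustrates why exhaustively testing associations does not scale.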
A mathematical analysis of this complexity problem related it to the difficulties of logic demonstrated by Gödel (1934): complexity turned out to be a manifestation of Gödelian incompleteness in finite systems such as computers or brains (Perlovsky, 1996, 2001). The difficulties of computational intelligence thus turned out to be related to one of the most fundamental mathematical results of the 20th century.

A different type of logic was necessary to overcome the difficulty of complexity. Dynamic logic is a process-logic, a process “from vague to crisp”: from vague statements, conditions, and models to crisp ones (Perlovsky, 1987, 1989, 2001, 2006a, 2006b, 2010b; Perlovsky & McManus, 1991). Dynamic logic is not a collection of static statements (such as “this is a chair” or “if A then B”); it is a dynamic logic-process. Dynamic logic has been applied to a number of engineering problems that could not be solved for decades because of the mathematical difficulties of complexity (Perlovsky, 1989, 1994, 2001, 2004, 2007a, 2007b, 2007c, 2007d, 2010b, 2010d; Perlovsky, Chernick, & Schoendorf, 1995; Perlovsky, Schoendorf, Burdick, & Tye, 1997; Perlovsky et al., 1997; Tikhanoff et al., 2006; Perlovsky & Deming, 2007; Deming & Perlovsky, 2007; Perlovsky & Kozma, 2007; Perlovsky & Mayorga, 2008; Perlovsky & McManus, 1991). These engineering breakthroughs became possible because dynamic logic mathematically models perception and cognition in the brain-mind. A basic property of dynamic logic is that it describes perception and cognition as processes in which vague (fuzzy) mental representations evolve into crisp representations. More generally, dynamic logic describes the interaction between bottom-up and top-down signals (to simplify, signals from the sense organs and signals from memory).
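The “vague to crisp” process can be illustrated with a small Python sketch. It is only loosely in the spirit of dynamic logic and is not the author’s published algorithm: it assumes one-dimensional signals, Gaussian models with equal weights, and a shared variance that is reduced by a fixed schedule. The names `vague_to_crisp`, `sigma2_start`, `decay`, and `sigma2_min`, and the data values, are hypothetical. Under these assumptions the procedure amounts to a deterministically annealed Gaussian-mixture fit: association weights stand in for the matching of bottom-up signals to top-down models, and the shrinking variance stands in for increasing crispness.

```python
import math


def vague_to_crisp(signals, initial_means, sigma2_start=25.0, decay=0.8,
                   sigma2_min=0.01, iterations=60):
    """Fit 1-D Gaussian models to signals, starting vague (large variance)
    and ending crisp (small variance).

    Hypothetical sketch: equal model weights and a shared variance reduced
    on a fixed schedule are assumptions, not the paper's formulation.
    """
    means = list(initial_means)
    sigma2 = sigma2_start
    for _ in range(iterations):
        # Association weight of each signal (bottom-up) to each model (top-down).
        assoc = []
        for x in signals:
            log_sim = [-(x - m) ** 2 / (2.0 * sigma2) for m in means]
            peak = max(log_sim)  # subtract the max to avoid underflow
            sims = [math.exp(v - peak) for v in log_sim]
            total = sum(sims)
            assoc.append([s / total for s in sims])
        # Each model moves toward the signals associated with it.
        for k in range(len(means)):
            weights = [row[k] for row in assoc]
            weight_sum = sum(weights)
            if weight_sum > 0.0:
                means[k] = sum(w * x for w, x in zip(weights, signals)) / weight_sum
        # Reduce vagueness on a fixed schedule (assumption).
        sigma2 = max(sigma2 * decay, sigma2_min)
    return means, sigma2


# Hypothetical data: two clusters of signals, near 0 and near 5.
signals = [-0.2, -0.1, 0.0, 0.1, 0.2, 4.8, 4.9, 5.0, 5.1, 5.2]
final_means, final_sigma2 = vague_to_crisp(signals, initial_means=[1.0, 4.0])
print(final_means, final_sigma2)
```

In early iterations, with a large variance, every signal is weakly associated with every model, so both models are pulled toward the middle of the data; as the variance shrinks, the associations become nearly all-or-nothing and the two models settle on the two clusters, near 0 and 5.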
Mental representations in memory, the sources of top-down signals, are vague; during perception and cognition they interact with bottom-up signals and evolve into crisp mental representations; the crispness of the final states corresponds to the crispness of the bottom-up representations, e.g., retinal images of objects in front of our eyes. The initial vague representations and the dynamic logic process from vague to crisp are unconscious; only the final states, in which top-down representations match patterns in bottom-up signals, are available to consciousness and are mentally perceived as approximately logical states.

During recent decades much has become known about the neural mechanisms of the mind-brain, especially about mechanisms of perception at the lower levels of the mental hierarchy (Grossberg, 1988). This foundation makes it possible to verify the vagueness of initial states of mental representations. It is known that visual imagination is created by top-down signals. If one closes one’s eyes and imagines an object seen just a second ago, this imagination gives an idea of the properties of the mental representation of the object. The imagined object is vague compared with the object perceived with open eyes. When we open our eyes, it seems that we immediately perceive the object crisply and consciously. However, it is known that it takes approximately 160 ms to perceive the object crisply and consciously; therefore the neural mechanisms acting during these 160 ms are unconscious. This crude experimental verification of dynamic logic predictions was confirmed in detailed neuroimaging experiments (Bar et al., 2006; Perlovsky, 2009c). Mental representations in memory are vague and less conscious with closed eyes; with open eyes they are not conscious at all, because open eyes mask the vagueness of initial mental states from our consciousness. Dynamic logic mathematically models the psychological theory of Perceptual Symbol Systems (Barsalou, 1999; Perlovsky & Ilin, 2010b).
In this theory, symbols in the brain are processes simulating experiences, and they are mathematically modeled by dynamic logic processes.

Hierarchy of the Mind

The mind is organized into an approximate hierarchy. At the lower levels of the hierarchy we perceive sensory features. We do not normally experience free will with regard to the functioning of our sensory systems. Higher up, the mind perceives objects; still higher, situations and abstract concepts. Each higher level contains more general and more abstract mental representations. These representations are built (learned) on top of lower-level representations, and correspondingly, representations at every higher level are vaguer and less conscious (Perlovsky, 2006a, 2006c, 2006d, 2007b, 2007c, 2008, 2010a, 2010c; Mayorga & Perlovsky, 2008). For example, at a lower level the mind may perceive objects such as a computer, a chair, a desk, and bookshelves with books; each object is perceived due to a representation that organizes perceptual features into a unified object; at this low level, perception mechanisms function autonomously and mostly unconsciously, and free will is not experienced. At a higher level, the mind perceives a situation, say a professor’s office, which is perceived due to a corresponding representation as an organized whole made up of objects. We experience free will about, say, moving objects and arranging furniture in our office. At still higher levels the mind cognizes the ideas of a university, or of a system of education, due to representations at corresponding levels. And even if we appreciate that an individual’s ability to change the educational system might be limited, still we experience free will to think about it.
Whereas in everyday mundane experience we know that our freedom is limited in many ways, still, at higher levels of the mind we experience intuitions or ideas of free will and of a self possessing free will. Many people doubt that free will exists, for the reasons of scientific causality and reducibility discussed above. Therefore I recall that even at the level of simple object perception, mental representations (in the absence of actual objects) are vague and barely conscious. Higher up, on top of several vague and less conscious levels of the hierarchy, the contents of representations are vague and unconscious. However, believing in free will, despite severe limitations of our freedom in real life, consciously or unconsciously, is extremely important for individual survival, for achieving higher goals, and for the evolution of cultures (Glassman, 1987; Bielfeldt, 2009).

In the animal kingdom, “belief in free will” acts instinctively; the animal psyche is unified. Similarly, this question did not arise in the minds of our early progenitors. In the human mind, for hundreds of thousands of years belief in free will directed the actions of early humans unconsciously. An intuition of free will is a recent cultural achievement. For example, in Homer’s Iliad only the gods possess free will; 100 years later, in the Odyssey, Ulysses demonstrates a great deal of free will (Jaynes, 1976). Clearly, conscious contemplation of free will is a cultural construct. It became necessary with the evolution and differentiation of consciousness and culture. The majority of cultures existing today have well-developed ideas about free will, and religious and educational systems for instilling these ideas in the minds of each new generation. But does free will really exist?
To answer this question, and even to understand the meaning of “really,” we will now consider how ideas exist in culture, and how the existence of ideas in cultural consciousness differs from ideas in individual cognition (cultural consciousness refers to what is conscious in culture: in its texts, practices, etc.).

Language and Cognition

Cultures accumulate knowledge and transmit it from generation to generation mostly through language. Mechanisms of interaction between language and cognition (Perlovsky, 2004, 2007e, 2009a, 2009b; Fontanari & Perlovsky, 2007, 2008a, 2008b; Perlovsky & Ilin, 2010a, 2010b) explain why language is acquired in childhood, whereas higher cognition takes much longer. How are correct connections between words and objects learned among the multitude of incorrect ones (no amount of experience would be sufficient to overcome the computational complexity of learning these connections)? Why does human-level cognition not evolve in animals without language? What, exactly, are the similarities and differences between language and cognition? According to the cited references, these and other properties of cognition-language interaction are explained by the mechanism of the dual model hierarchy (Figure 1). This figure illustrates the dual hierarchy of the mind: a cognitive hierarchy from sensory signals, to objects, to situations, to abstract concepts, and a parallel hierarchy of language from words to phrases, from concrete to abstract meanings.

Figure 1. The dual hierarchy of language and cognition. Language learning is grounded in surrounding language at all levels of the hierarchy. Learning of embodied cognitive models is grounded in direct experience of sensory-motor perceptions only at the lower levels. At higher levels, their learning from experience has to be guided by the contents of language models. Language representations are crisp after about the age of five; cognitive representations gradually acquire crisper content throughout life and at high levels remain vague and unconscious.

The dual model, along with dynamic logic, suggests that a newborn brain contains separate placeholders for future representations of language and cognitive contents. Initial contents are vague and nonspecific. The newborn mind has no image-representations, say, for chairs, or sound-representations for the English word chair. Yet connections between placeholders for future cognitive and language representations are inborn. Inborn connections between cognitive and language brain areas are not surprising: Arbib (2005) suggested that such connections existed, due to the mechanism of mirror neurons, millions of years before the language ability evolved. Due to these inborn connections, word and object representations are acquired correctly connected: as one part of the dual model (a word or object representation) is learned and becomes crisper and more specific, the other part of the dual model is learned in correspondence with the first one.

Objects that are directly observed can be learned without language (as in animals). However, abstract ideas cannot be directly observed; they cannot be learned from experience as useful combinations of objects, because of the computational complexity of such learning. Therefore, cognitive representations of abstract ideas can be learned from experience only with guidance from language.

Language can be learned from surrounding language without real-life experience, because it exists ready-made in the surrounding language at all levels of the mind hierarchy. This is the reason language is acquired in childhood, whereas learning the corresponding cognitive representations requires much experience. Learning language can proceed fast because it is grounded in surrounding language at all hierarchical levels.
But cognition is grounded in direct experience only at the bottom levels of perception. At higher levels of abstract ideas, learning cognitive representations from experience is guided by already learned language representations. Abstract ideas that do not exist in language (in culture or in personal language) usually cannot be perceived or cognized, and their existence is not noticed until they are first learned in language.

Language grounds and supports the learning of the corresponding cognitive representations, similar to the eye supporting the learning of an object representation in the open-closed eye experiment. Language serves as inner mental eyes for abstract ideas. The fundamental difference, however, is that language “eyes” cannot be closed at will. The crisp and conscious language eyes mask vague and barely conscious cognitive representations; therefore we cannot perceive them. If we do not have the necessary experience, our cognitive representations are vague and unconscious, and language representations are taken for this abstract knowledge. This is obvious with children, but it also persists through life. Because language contains a wealth of cultural information, we are capable of reasonable judgments even without direct life experience.

This discussion is directly relevant to Maimonides’ interpretation of the Original Sin (Levine & Perlovsky, 2008): Adam was expelled from paradise because he did not want to think, but ate from the tree of knowledge to acquire existing knowledge ready-made. In terms of Figure 1, he acquired language knowledge from surrounding language, but not cognitive representations from his own experience.
This discussion is also directly relevant to the difference between the much-discussed (Nobel Prize 2002) irrational heuristic decision-making discovered by Tversky & Kahneman (1974, 1981) and decision-making based on personal experience and careful thinking, grounded in learning and driven by the knowledge instinct (Levine & Perlovsky, 2008; Perlovsky, Bonniot-Cabanac, & Cabanac, 2010). In cases where life experience is insufficient and cognitive representations are vague, crisp and conscious language representations substitute for the cognitive ones. This substitution is smooth and unconscious, so that (without specific scientific training) we do not notice whether we speak from real-life experience or from language-based knowledge (heuristics). Language-based knowledge accumulates millennial wisdom and can be very good, but it is not the same as personal cognitive knowledge combining cultural wisdom with life experience.

It might sound tautological that we are conscious only of what is conscious, and unconscious of what is unconscious. But it is not a tautology that we have no idea of nearly 99% of our mind’s functioning. Our consciousness jumps from one tiny conscious and logical island in our mind to another, across an ocean of the vague unconscious, yet our consciousness keeps “us” sure that we are conscious all the time, and that logic is a fundamental mechanism of perception and cognition. Because of this property of consciousness, even after Gödel most scientists have remained sure that logic is the main mechanism of the mind.

Return now to the question: does free will really exist? According to this paper, the question of whether free will exists, in the sense of resolving the free-will vs. determinism debate, exists in classical logic, but it does not exist as a fundamental scientific question.
Because of the properties of mental representations near the top of the mind hierarchy, this question cannot be answered within classical logic. How can the question about free will be answered within the developed theory of the mind? Free will does not exist in inanimate matter. First, free will exists as a cultural concept. The contents of this concept include all related discussions in cultural texts, literature, poetry, art, and cultural norms. This cultural knowledge provides the basis for developing corresponding language representations in individual minds; language representations are mostly conscious. Clearly, individuals differ in how much cultural content they acquire from surrounding language and culture. The dual model suggests that, based on this personal language representation of free will, every individual develops his or her personal cognitive representation of this idea, which assembles his or her related experiences in real life, language, thinking, and acting into a coherent whole.

Conclusion

The contents of the cognitive representation of free will determine the personal thinking, responsibility, will, and actions that one exercises in his or her life. Clearly, due to a hierarchy of vague representations, the concept of free will is far removed from the physical laws controlling molecular interactions. Therefore logical arguments about reducibility are plainly wrong. Logic is not a fundamental mechanism of the mind.

Mathematical details of the corresponding cognitive models, supporting experimental evidence, and future directions of experimental and theoretical research are discussed in the cited references. Among these directions for future research is the experimental verification of the interaction between language and cognition.
Psychological and neuroimaging experiments should be used to confirm that language and cognitive representations are neurally connected before either of them becomes crisp; that high-level abstract ideas first become conscious and crisp in language, and then gradually become conscious and crisp in cognition; that language representations are crisp and conscious long before cognitive representations become equally crisp and conscious; that the higher up in the mental hierarchy, the vaguer and less conscious are cognitive representations; and that many abstract cognitive representations remain vague and unconscious throughout life, even though people can fluently talk about them. Some of these ideas are being experimentally tested and have received partial support.

This paper addressed a fundamental philosophical issue: how one could scientifically accept the idea of free will, while humans are collections of atoms and molecules having no freedom. For centuries this consideration has been propelled by logic toward the idea of reductionism, which logically denies the possibility of free will. We explained the belief in logic among many scientists and philosophers, even those well familiar with Gödelian theory, by fundamental properties of consciousness: we are conscious only of logical or near-logical states of the mind. We resolved this difficulty by pointing out that logic, although prominent in consciousness, is not a fundamental mechanism of the mind. Dynamic logic, proven experimentally, models the human mind as an approximate hierarchy of vaguer and vaguer representations. This model eliminates the logical arguments of reductionism (supporting those scientists who denied it earlier) and supports the agreement between free will, scientific monism, and science.

REFERENCES

Arbib, M. (2005). From monkey-like action recognition to human language: An evolutionary framework for neurolinguistics. Behavioral and Brain Sciences, 28, 105-167. doi:10.1017/S0140525X05000038
Bar, M. et al. (2006). Top-down facilitation of visual recognition. PNAS, 103, 449-454. doi:10.1073/pnas.0507062103
Barsalou, L. (1999). Perceptual symbol systems. Behavioral and Brain Sciences, 22, 577-660.
Bellman, R. (1961). Adaptive control processes. Princeton, NJ: Princeton University Press.
Bering, J. (2010). Scientists say free will probably doesn’t exist, but urge: “Don’t stop believing!” Scientific American Mind. URL (last checked 6 April 2010). http://www.scientificamerican.com/blog/post.cfm?id=scientists-say-free-will-probably-d-2010-04-06
Bielfeldt, D. (2009). Freedom and neurobiology: Reflections on free will, language, and political power. Zygon, 44, 999-1002. doi:10.1111/j.1467-9744.2009.01048.x
Chalmers, D. J. (1995). Facing up to the problem of consciousness. Journal of Consciousness Studies, 2, 200-219.
Deming, R., & Perlovsky, L. (2007). Concurrent multi-target localization, data association, and navigation for a swarm of flying sensors. Information Fusion, 8, 316-330. doi:10.1016/j.inffus.2005.11.001
Fontanari, J., & Perlovsky, L. (2007). Evolving compositionality in evolutionary language games. IEEE Transactions on Evolutionary Computation, 11, 758-769. doi:10.1109/TEVC.2007.892763
Fontanari, J., & Perlovsky, L. (2008a). How language can help discrimination in the neural modeling fields framework. Neural Networks, 21, 250-256. doi:10.1016/j.neunet.2007.12.007
Fontanari, J., & Perlovsky, L. (2008b). A game theoretical approach to the evolution of structured communication codes. Theory in Biosciences, 127, 205-214. doi:10.1007/s12064-008-0024-1
Glassman, R. (1983). Free will has a neural substrate: Critique of Joseph F. Rychlak’s Discovering free will and personal responsibility. Zygon, 18, 17-82. doi:10.1111/j.1467-9744.1983.tb00498.x
Gödel, K. (1934). Kurt Gödel collected works, I. In S. Feferman et al. (Ed.), Cambridge, MA: Oxford University Press.
Grossberg, S. (1988). Neural networks and natural intelligence. Cambridge, MA: MIT Press.
Jaynes, J. L. (1976). The origin of consciousness in the breakdown of the bicameral mind. Boston, MA: Houghton Mifflin Co.
Levine, D., & Perlovsky, L. (2008). Neuroscientific insights on biblical myths: Simplifying heuristics versus careful thinking: Scientific analysis of millennial spiritual issues. Zygon, Journal of Science and Religion, 43, 797-821.
Libet, B. (1999). Do we have free will? Journal of Consciousness Studies, 6, 47-57.
Lim, D. (2008). Did my neurons make me do it? Philosophical and neurobiological perspectives on moral responsibility and free will. Zygon, 43, 748-753. doi:10.1111/j.1467-9744.2008.00953.x
Mayorga, R., & Perlovsky, L. (Eds.) (2008). Sapient systems. London: Springer.
Minsky, M. (1975). A framework for representing knowledge. In P. H. Winston (Ed.), The psychology of computer vision. New York: McGraw-Hill Book.
Perlovsky, L. (1987). Multiple sensor fusion and neural networks. DARPA Neural Network Study.
Perlovsky, L. (1989). Cramér-Rao bounds for the estimation of normal mixtures. Pattern Recognition Letters, 10, 141-148. doi:10.1016/0167-8655(89)90079-2
Perlovsky, L. (1994). A model based neural network for transient signal processing. Neural Networks, 7, 565-572. doi:10.1016/0893-6080(94)90113-9
Perlovsky, L. (1996). Gödel theorem and semiotics. Proceedings of the Conference on Intelligent Systems and Semiotics ’96, Gaithersburg, 2, 14-18.
Perlovsky, L. (1998). Conundrum of combinatorial complexity. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20, 666-670. doi:10.1109/34.683784
Perlovsky, L. (2001). Neural networks and intellect: Using model based concepts. New York: Oxford University Press.
Perlovsky, L. (2004). Integrating language and cognition. IEEE Connections, 2, 8-12.
Perlovsky, L. (2006a). Toward physics of the mind: Concepts, emotions, consciousness, and symbols. Physics of Life Reviews, 3, 23-55. doi:10.1016/j.plrev.2005.11.003
Perlovsky, L. (2006b). Neural networks, fuzzy models and dynamic logic. In R. Köhler, & A. Mehler (Eds.), Aspects of automatic text analysis (Festschrift in Honor of Burghard Rieger) (pp. 363-386). Heidelberg: Springer. doi:10.1007/978-3-540-37522-7_17
Perlovsky, L. (2006c). Music—The first principle. Musical Theater. http://www.ceo.spb.ru/libretto/kon_lan/ogl.shtml
Perlovsky, L. (2006d). Joint evolution of cognition, consciousness, and music. Lectures in Musicology, School of Music. Columbus: Ohio State University.
Perlovsky, L. (2007a). Neural dynamic logic of consciousness: The knowledge instinct. In R. Kozma, & L. Perlovsky (Eds.), Neurodynamics of higher-level cognition and consciousness. Heidelberg: Springer-Verlag. doi:10.1007/978-3-540-73267-9_5
Perlovsky, L. (2007b). Cognitive high level information fusion. Information Sciences, 177, 2099-2118. doi:10.1016/j.ins.2006.12.026
Perlovsky, L. (2007c). Modeling field theory of higher cognitive functions. In A. Loula, R. Gudwin, & J. Queiroz (Eds.), Artificial cognition systems (pp. 64-105). Hershey, PA: Idea Group.
Perlovsky, L. (2007d). Evolution of languages, consciousness, and cultures. IEEE Computational Intelligence Magazine, 2, 25-39. doi:10.1109/MCI.2007.385364
Perlovsky, L. (2007e). Symbols: Integrated cognition and language. In R. Gudwin, & J. Queiroz (Eds.), Semiotics and intelligent systems development (pp. 121-151). Hershey, PA: Idea Group.
Perlovsky, L. (2008). Sapience, consciousness, and the knowledge instinct (Prolegomena to a physical theory). In R. Mayorga, & L. I. Perlovsky (Eds.), Sapient systems. London: Springer.
Perlovsky, L. (2009a). Language and cognition. Neural Networks, 22, 247-257. doi:10.1016/j.neunet.2009.03.007
Perlovsky, L. (2009b). Language and emotions: Emotional Sapir-Whorf hypothesis. Neural Networks, 22, 518-526. doi:10.1016/j.neunet.2009.06.034
Perlovsky, L. (2009c). “Vague-to-crisp” neural mechanism of perception. IEEE Transactions on Neural Networks, 20, 1363-1367. doi:10.1109/TNN.2009.2025501
Perlovsky, L. (2010a). Intersections of mathematical, cognitive, and aesthetic theories of mind. Psychology of Aesthetics, Creativity, and the Arts, 4, 11-17. doi:10.1037/a0018147
Perlovsky, L. (2010b). Neural mechanisms of the mind, Aristotle, Zadeh, & fMRI. IEEE Transactions on Neural Networks, 21, 718-733. doi:10.1109/TNN.2010.2041250
Perlovsky, L. (2010c). The mind is not a kludge. Skeptic, 15, 50-55.
Perlovsky, L. (2010d). http://www.leonid-perlovsky.com
Perlovsky, L., Bonniot-Cabanac, M.-C., & Cabanac, M. (2010). Curiosity and pleasure. WebmedCentral Psychology, 1, WMC001275.
Perlovsky, L., Chernick, J. l., & Schoendorf, W. (1995). Multi-sensor ATR and identification friend or foe using MLANS. Neural Networks, 8, 1185-1200. doi:10.1016/0893-6080(95)00078-X
Perlovsky, L., & Deming, R. (2007). Neural networks for improved tracking. IEEE Transactions on Neural Networks, 18, 1854-1857. doi:10.1109/TNN.2007.903143
Perlovsky, L., & Ilin, R. (2010a). Neurally and mathematically motivated architecture for language and thought. Special issue “Brain and language architectures: Where we are now?” The Open Neuroimaging Journal, 4, 70-80. doi:10.2174/1874440001004020070
Perlovsky, L., & Ilin, R. (2010b). Grounded symbols in the brain, computational foundations for perceptual symbol system. WebmedCentral Psychology, 1, WMC001357.
Perlovsky, L., & Kozma, R. (Eds.) (2007). Neurodynamics of higher-level cognition and consciousness. Heidelberg: Springer-Verlag.
Perlovsky, L., & Mayorga, R. (2008). Preface. In R. Mayorga, & L. I. Perlovsky (Eds.), Sapient systems. London: Springer.
Perlovsky, L., & McManus, M. (1991). Maximum likelihood neural networks for sensor fusion and adaptive classification. Neural Networks, 4, 89-102. doi:10.1016/0893-6080(91)90035-4
Perlovsky, L., Plum, C., Franchi, P., Tichovolsky, E., Choi, D., & Weijers, B. (1997). Einsteinian neural network for spectrum estimation. Neural Networks, 10, 1541-1546. doi:10.1016/S0893-6080(97)00081-6
Perlovsky, L., Schoendorf, W., Burdick, B., & Tye, D. (1997). Model-based neural network for target detection in SAR images. IEEE Transactions on Image Processing, 6, 203-216. doi:10.1109/83.552107
Rychlak, J. (1983). Free will as transcending the unidirectional neural substrate. Zygon, 18, 439-442. doi:10.1111/j.1467-9744.1983.tb00527.x
Tikhanoff, V., Fontanari, J., Cangelosi, A., & Perlovsky, L. (2006). Language and cognition integration through modeling field theory: Category formation for symbol grounding. Book Series in Computer Science, 4131. Heidelberg: Springer.
Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185, 1124-1131. doi:10.1126/science.185.4157.1124
Tversky, A., & Kahneman, D. (1981). The framing of decisions and the psychology of choice. Science, 211, 453-458. doi:10.1126/science.7455683
Velmans, M. (2003). Preconscious free will. Journal of Consciousness Studies, 10, 42-61.
Velmans, M. (2008). Reflexive monism. Journal of Consciousness Studies, 15, 5-50.
Wikipedia (2010a). Free will. http://en.wikipedia.org/wiki/Free_will
Wikipedia (2010b). Baruch Spinoza. http://en.wikipedia.org/wiki/Baruch_Spinoza
Wikipedia (2010c). René Descartes. http://en.wikipedia.org/wiki/Rene_Descartes
Wikipedia (2010d). David Chalmers. http://en.wikipedia.org/wiki/David_Chalmers
Winston, P. (1984). Artificial intelligence (2nd ed.). Reading, MA: Addison-Wesley.

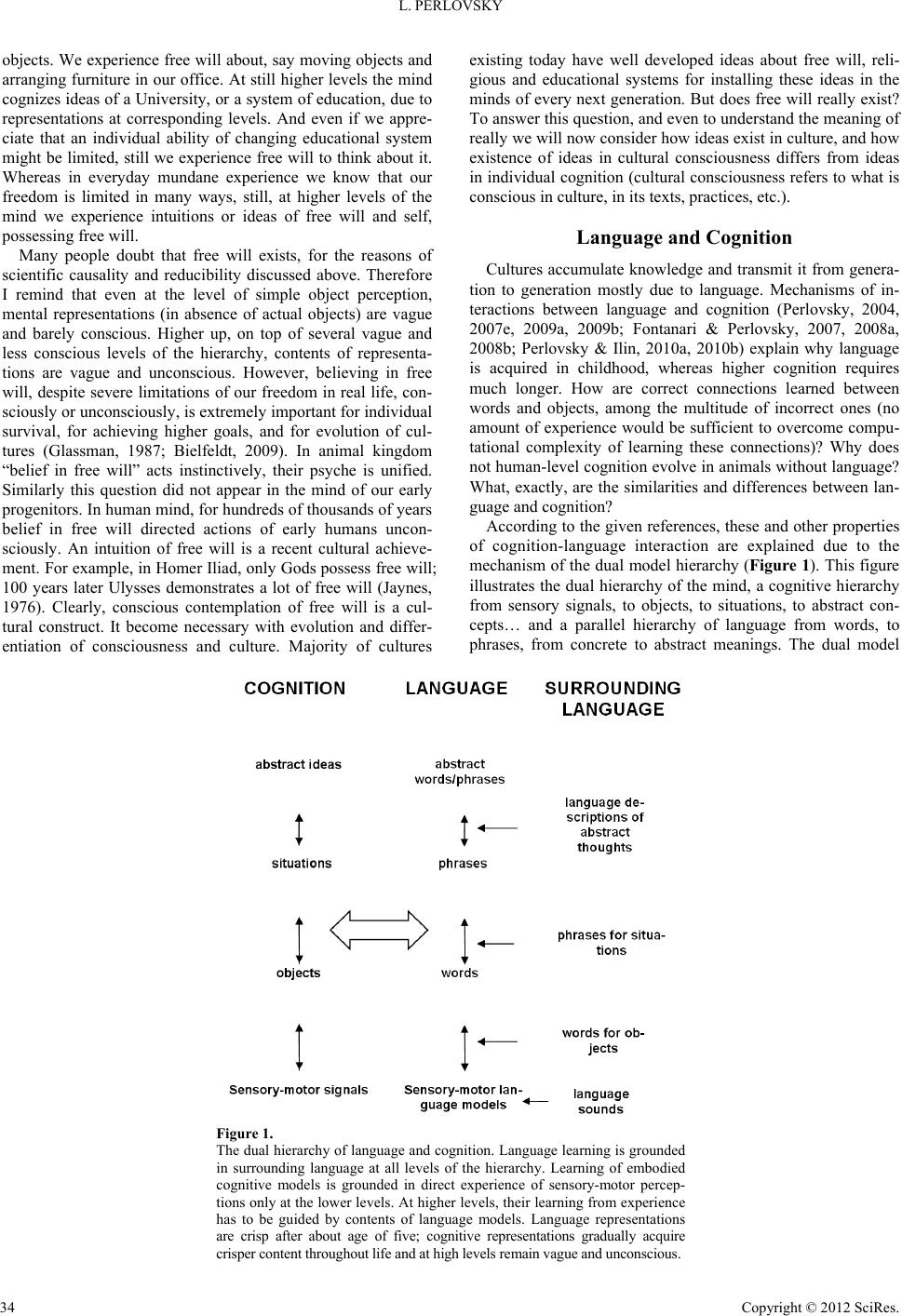
