Applied Mathematics

Vol.05 No.19(2014), Article ID:51266,11 pages

10.4236/am.2014.519293

Stochastic Process Optimization Technique

Hiroaki Yoshida1, Katsuhito Yamaguchi2, Yoshio Ishikawa1

1College of Science and Technology, Nihon University, Chiba, Japan

2Junior College, Nihon University, Chiba, Japan

Email: yoshida@eme.cst.nihon-u.ac.jp

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 9 September 2014; revised 29 September 2014; accepted 10 October 2014

ABSTRACT

The conventional optimization methods were generally based on a deterministic approach, since their purpose is to find out an accurate solution. However, when the solution space is extremely narrowed as a result of setting many inequality constraints, an ingenious scheme based on experience may be needed. Similarly, parameters must be adjusted with solution search algorithms when nonlinearity of the problem is strong, because the risk of falling into local solution is high. Thus, we here propose a new method in which the optimization problem is replaced with stochastic process based on path integral techniques used in quantum mechanics and an approximate value of optimal solution is calculated as an expected value instead of accurate value. It was checked through some optimization problems that this method using stochastic process is effective. We call this new optimization method “stochastic process optimization technique (SPOT)”. It is expected that this method will enable efficient optimization by avoiding the above difficulties. In this report, a new optimization method based on a stochastic process is formulated, and several calculation examples are shown to prove its effectiveness as a method to obtain approximate solution for optimization problems.

Keywords:

Optimization, Stochastic Process, Path Integral, Expected Value, Quantum Mechanics

1. Introduction

Optimization methods are useful to system design, and especially the applications to engineering problems are expected. In order to obtain the accurate optimal solution, generally the conventional optimization methods were based on a deterministic approach. However, when design variables have many inequality restriction conditions, in order to search for a solution efficiently, it is necessary to adjust a parameter with trial and error. Moreover, if the problem is complex, the risk of falling into a local solution is also high.

Our method obtains an approximate value of an optimal solution using stochastic process. The optimization methods using stochastic process like simulated annealing (SA) [1] are found. However, in these methods, stochastic process is used as a means of searching for an accurate solution efficiently. Our method is based on probability theory, and essentially differs from the concepts of the conventional optimization methods. The purpose of the conventional optimization is to obtain the accurate optimal solution, but that of our method is to obtain the approximate value of the optimal solution.

We showed that the approximate value of the optimal solution could be obtained using stochastic process [2] [3] . And even if it was an approximate value of the optimal solution obtained by this method, it was shown that it is useful in engineering [4] . Furthermore, it was shown that this method can apply to general engineering optimization problems containing static design variables and dynamic design variables [5] .

As mentioned above, it was shown that the approximate value of the optimal solution can be obtained by this method using stochastic process. The aim of this paper is not to show the results of the applications of our method, but to bring our original idea to a wide reading audience.

Thus, we propose a new method in which an optimization problem is replaced with a stochastic process based on path integral techniques used in quantum mechanics and an approximate value of an optimal solution is calculated as an expected value instead of an accurate value. We call this new optimization method the “stochastic process optimization technique (SPOT)”. It is expected that this method will enable efficient optimization by avoiding the above difficulties.

Since expected values are stochastic average values, a stochastic approach is not appropriate to obtain an accurate solution as a deterministic approach. However, its characteristics are simple algorithms and the fewer number of manual parameters. The resultant solution is approximate enough to a global solution of engineering problems whose solution spaces are expected to be relatively smooth. The approximate solution can be an initial value to obtain an accurate solution with a deterministic process.

In this paper, a new optimization method is formulated based on a stochastic process. Some calculation examples are introduced to show how effective the approximate solutions are for optimization problems. The examples are a simple benchmark test, a classic line of swiftest descent problem, and an optimized glide problem of a hang glider as an introductory engineering optimization problem.

2. Path Integral and Optimization

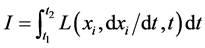

According to the principle of least action, Newtonian motion of a mass point makes the action integral minimum:

, (1)

, (1)

where  is the Lagrange function. Also, the Euler-Lagrange equation obtained with variation of

is the Lagrange function. Also, the Euler-Lagrange equation obtained with variation of  is equal to an equation of Newtonian motion. On the other hand, the motion of a particle is determined stochastically according to the quantum mechanics. A motion on the classical mechanics, which keeps

is equal to an equation of Newtonian motion. On the other hand, the motion of a particle is determined stochastically according to the quantum mechanics. A motion on the classical mechanics, which keeps  minimum, occurs at the “highest possibility”. However, other motions can occur at certain probabilities. This results in some particular phenomena, such as the tunnel effect, which never occur on the classical mechanics.

minimum, occurs at the “highest possibility”. However, other motions can occur at certain probabilities. This results in some particular phenomena, such as the tunnel effect, which never occur on the classical mechanics.

The well-known simulated annealing (SA) method adopts such a quantum mechanical approach to the solution of optimization problems. SA introduces a probability distribution that reaches its maximum at an optimal value. This method numerically searches the optimal value (peak of the probability distribution) with a temperature parameter using the probability distribution that reaches its maximum of an optimal value instead of directly obtaining a variational problem of a performance index . This method searches solution spaces in the large, which include solutions that never obtained with the variational method. Therefore, one advantage is a low probability of reaching local solutions. On the other hand, there are some disadvantages. For example, the operation of a cooling schedule, which is a temperature parameter, is difficult. In addition, it may take much time in calculation to converge a solution.

. This method searches solution spaces in the large, which include solutions that never obtained with the variational method. Therefore, one advantage is a low probability of reaching local solutions. On the other hand, there are some disadvantages. For example, the operation of a cooling schedule, which is a temperature parameter, is difficult. In addition, it may take much time in calculation to converge a solution.

R. Feynman constructed the “path integral” theory in 1948 and showed that it is equivalent to the quantum mechanics. The theory provides a countless number of paths that meet given boundary conditions, and the prob- ability (probability amplitude) of each particle taking its path is expressed in the form of multiple integral [6] . A stochastic behavior is the nature of quantum mechanics. It is therefore impossible to predict a measured physical quantity deterministically under a certain condition. Expected values only can be predicted. Thus, the expected value of a physical quantity is expressed in the form of multiple integral in the path integral method. Furthermore, the multiple integral calculation of an expected value can fit into numerical calculation with the Monte Carlo method.

In this paper, we propose a new numerical calculation method for optimization problems based on the quantum mechanics rather than SA. It means that we show a method of obtaining an approximate solution of an optimal solution as an expected value. An expected value is predicted to be located close to an optimal solution in a continuous probability distribution. This method can thus be applied to various engineering optimal problems.

The introduced probability distribution  here is basically the same as that in SA, and to obtain the minimum of performance function

here is basically the same as that in SA, and to obtain the minimum of performance function , the following equation is set:

, the following equation is set:

. (2)

. (2)

In SA, the peak of  is searched with

is searched with . On the other hand, we automatically generate a state variable of various time histories at probability

. On the other hand, we automatically generate a state variable of various time histories at probability  as finite value

as finite value . According to the generated frequency, the stochastic average (expected value) of the quantity to be obtained is calculated.

. According to the generated frequency, the stochastic average (expected value) of the quantity to be obtained is calculated.

3. Formulation of SPOT

According to the path integral method, the path of motion for a naturally stochastic particle such as photon and electron is obtained from an expected value as a path that keeps the action integral  minimum. We replace the action integral

minimum. We replace the action integral  with a performance index to recognize the motion of a general object as a stochastic process. Thus, we formulate the path integral method for a naturally stochastic particle in physics as an optimal method of obtaining a function that keeps the performance function

with a performance index to recognize the motion of a general object as a stochastic process. Thus, we formulate the path integral method for a naturally stochastic particle in physics as an optimal method of obtaining a function that keeps the performance function  minimum. Note that since this performance function is stochastic, an optimal solution is obtained as an expected value, which results in an approximate solution instead of an accurate solution.

minimum. Note that since this performance function is stochastic, an optimal solution is obtained as an expected value, which results in an approximate solution instead of an accurate solution.

According to the path integral method, the action integral  described in the above section is replaced with a performance function to define probability distribution

described in the above section is replaced with a performance function to define probability distribution

where

where

Here, the summation for the paths is the integral for all the countless paths (multiple integral), which for example connect each point

The horizontal axis and vertical axis show time and vertical positions, respectively. The better performance values the paths have, in other words, the closer to the accurate solution (red line) the values are, the higher the density of the paths is. Also, there are some paths that never exist with a deterministic method. The optimal solution is the expected value of all the paths. In this case, that solution is an approximate solution of an accurate solution. The expected solution that is thus obtained shows a path of the highest probability for move of a stochastic behavioral particle, such as an electron, between two points. When those paths are replaced with arbitrary functions, approximate solutions that make the performance function values minimum are obtained.

This idea can be extended to obtain an expected value of a m-dimensional functional that consists of

Figure 1. Paths generated by a probability distribution.

Figure 2. Time history of a variable.

Note that the subscript

The integral range is from

Note that since Equation (5) shows the multiple integral, the Monte Carlo method can be applied to actual numerical calculations.

4. Calculation Algorithm for SPOT

First, a performance index is determined, and if necessary, variables are discretized for numerical calculation. Next, the values of all variables are randomly chosen to generate solutions. Generation probabilities of variables should agree with the probability distribution of Equation (3). Thus, a solution with a fine performance value is generated at a high frequency, and a solution with a poor performance index value is generated at a low frequency. Also, the algorithm is based on the Markov process so that future solutions will have no dependency on the former solutions. Lastly, expected values are calculated using all the solutions generated with a probability distribution to obtain an approximate solution.

The procedure of calculation is shown in Figure 3.

1) The values of all the variables are generated randomly to obtain initial solutions.

2) Time point

3) The performance index value of the newly generated solution is calculated.

4) The adoption or rejection of this solution is decided to generate solutions depending on the probability distribution of Equation (3).

The procedure from Steps 2) to 4) is a pure explanation of the Metropolis method [7] to merely conduct the multiple integral. This method has an advantage of no necessity to obtain the normalization constant:

5) The expected value is calculated.

6) If the final condition is met, the calculation ends. If not, it returns to the generation of solutions.

The final condition can be a certain range of variation of an expected value. However, in this paper, the final condition is the number of iterations,

Equation (3) for probability distribution in this paper is the same as that in SA. SA is a deterministic approach to search only optimal solution at

Figure 3. Flow chart of calculation.

5. Calculation Example

This section describes examples of numerical calculation with SPOT to demonstrate the validity. First, SPOT is applied to a problem where the minimum value of a multi-peaked function is obtained without staying at other minimal values and the minimum value is always only one with no dependency on the initial value. Next, we apply SPOT to a problem of obtaining the minimum value of a functional to show the validity in a physical optimization problem. Lastly, we apply SPOT to an engineering optimal problem on the glide of a hang glider to show the high applicability of an approximate solution by SPOT.

We already applied SPOT to some engineering problems [8] [9] .

5.1. Minimization Problem of Bivariate Function

First, we apply SPOT to a problem of obtaining the minimum value of a bivariate function expressed in Equation (6). Here, performance function

Figure 4(a) shows the solution space of Equation (6). The set of

This example is a problem for obtaining the minimum value of a bivariate function. Therefore, the variables are not discretized, but the values of two variables are generated according to the probability distribution (3) defined by the performance values to obtain an expected value. The closed dots in Figure 4(a) show multiple initial values chosen to check the dependency on the initial values. Also, the cross marks in Figure 4(b) show the minimum values obtained with this method.

Figure 4. (a) Function space; (b) Contour lines of solution space.

If calculation starts with any initial value marked in the closed dot, the resultant values do not stay at any of the many minimal values in the solution space, but all the values concentrate near the minimum value. Since every solution generated in each calculation is obtained stochastically with this method, the calculation results are not influenced by the initial value in principle. However in reality, the number of calculations is finite. Therefore, the expected value as the minimum value may have a variation. The minimum values in Figure 4(b) have a little variation though it may be invisible. This variation is caused by the limited number of calculations.

Note that it took about 18 seconds to perform one million times of calculations on a 1.4 GHz Pentium 4 machine.

5.2. Line of Swiftest Descent Problem

Next, we applied SPOT to a problem on brachistochrone as one of the classical optimization problems to obtain a function. This problem is demonstrated to obtain a mass point path that shows the brachistochrone between a fixed start point and an end point at different altitudes under the uniform gravitation field. Here, performance function

The boundary conditions are as follows:

Initial condition:

In this problem, we discretized the vertical axis

Figure 5 shows the paths obtained with SPOT in the closed dots and the analytic solution in the solid line. It took about 438 seconds on a Pentium 4 machine to perform 20 million times of iterations.

SPOT is to obtain an approximate solution of an optimal solution. However, the approximate solutions agree well with the analytic solutions. Thus, this method can be applied to general optimization problems where performance functions are expressed in functional.

5.3. Optimal Glide Problem of Hang Glider

Lastly, we apply SPOT to a problem of a hang glider that starts the fall and glide at an altitude of 12 m toward the ground to obtain its flight operation (time history of angle of attack) for the maximum down range (as shown in Figure 6).

This problem was firstly solved by Suzuki et al. [10] and their results were used to check the validity of the results of SPOT.

This problem is based on the former problem of [10] and the specifications of the hang glider used here are based on those of the optimal glider obtained in [10] . The shape of the hang glider is shown in Figure 7.

Figure 5. Line of swiftest descent.

Figure 6. Flight trajectory optimization.

Figure 7. Top view of the hang glider.

Note that the performance index is the reciprocal number of the flight distance to set this problem to a minimal value problem. Also, the maximum value of the load factor is set to the restriction condition. If this condition exceeds the limitation during the flight, a penalty is added to the performance index value.

The equation of motion is as follows:

The main conditions are shown as follows:

Performance index:

Initial condition:

Final condition:

Restriction condition:

Specifications of hang glider:

The performance index value is the reciprocal number of the down range at the point where the hung glider reaches at an altitude of 2 m. First, the control variable, which is the angle of attack in this case, is discretized on the axis of time. Next, values of angle of attack at each time are specified with random numbers. The equations of motion are numerically calculated according to the time history of angle of attack to obtain the flight path. Then, the performance index value is determined as a reciprocal number of the down range. Therefore, the performance index values are obtained when the series of calculations ends with the final conditions being met.

Figure 8(a) and Figure 8(b) show the relation between the down range and the altitude, and that between the down range and the angle of attack, respectively.

This result may be an approximate solution close to the former optimization [9] . Note that it took about 15 hours on a Pentium 4 (1.4 GHz) machine to obtain the calculation result in this example that set the number of iterations to 3 millions.

Figure 8. (a) Altitude vs. down range; (b) Angle of attack vs. down range.

6. Discussion

We propose a new optimization method with a stochastic process called SPOT, and then demonstrate numerical calculations.

Generally, when proposing a new method, comparison of performance between the conventional method and a new method should be performed. However, since SPOT is the only method of obtaining the approximate value of the optimal solution, there is no sense in comparing them. Also fair comparison of computation time could not be performed.

As a result, SPOT has the following characteristics:

1) Advantages of Stochastic Process

SPOT results in an expected value from all the solutions generated by calculations. One of the advantages is to avoid influences from the initial value (a start point of the calculation) to the final expected value. The reason is that the generation of solutions during the calculation is a simple stochastic process, and the initial value is only the first-generated solution. As shown in Section 6.1, the calculation results all do not stay at local minimal values even though the calculation starts with any initial value, but the resultant values concentrate near the minimum value. Another advantage is to update an expected value from optimal solutions separately obtained under the same condition. This enables a parallel calculation and the recycling of the former calculation results. This characteristic is unique to SPOT and advantageous to other numerical calculation methods. For example, the calculation of a bivariate function, as shown in Section 6.1, gives the results starting with the multiple initial points as different minimum values. (The cross marks actually show almost the same point.) On the other hand, it is possible to obtain an expected value with all these expected values.

Also, SPOT seems to use asymptotic calculation in the algorithm. However, the former solutions are not used to generate the next solution. This means that the series of calculation is independent and thus the calculation time can easily be estimated with the number of calculations. Therefore, it is easy to estimate the calculation cost.

2) Independency on Experience

Another characteristic is that SPOT has only fluctuation parameter,

Also, the smaller the fluctuation parameter during calculation, the shorter the calculation time is, though this characteristic is not mentioned in this paper.

3) Restriction Conditions

In the conventional calculation approaches that start with initial solutions to search better performance index values, it may be difficult to generate an initial solution itself and thus to start the numerical calculation if the solution space is narrowed with restriction conditions. However, since the generation of solutions during calculation in our method is a stochastic process, it is possible to perform the calculation regardless of the presence of solutions.

Also, if there are restriction conditions on parameters, which are not the fluctuation parameter:

Next, in case of restriction conditions on state variables, when a solution generated during calculation is trapped on the restriction, the solution may be abandoned. However, expected values may approach but do not reach the restriction surfaces using only parameter values within constraint ranges and abandoning solutions that break the restrictions. Parameters and solutions that break the restrictions can be applied to a calculation using a penalty method to approach the expected value toward the restriction surface more closely. However, if results with this method are used as initial solutions of deterministic approaches, there is no necessity to make a device.

4) Scope of SPOT

The characteristics of SPOT are to obtain expected values of solution spaces with multiple integral. Thus, there is no problem if the solution spaces have restrictions as mentioned above. However, SPOT is not appropriate to be used for problems that have no restriction on the infinite solution spaces. Also, if a problem has a large solution space and a sharp peak on the performance index value, this method can be applied in principle. However, it may be difficult to obtain an approximate solution with high accuracy in the finite number of calculations. However, it is rare to have infinite range of a variable in an engineering problem, and the upper and lower limits can generally be set. Also, the fluctuation of a performance index value to that of a variable is expected to be smooth. Furthermore, the accuracy required for a variable is finite. Therefore, it is possible to obtain an approximate value for practical use with the finite number of calculations.

7. Conclusions

We propose a new optimization method based on the path integral called SPOT, and thus perform a numerical calculation on an engineering optimization problem. As a result, we obtain an approximate value of an optimal solution with high accuracy. In addition, this stochastic method has advantages on numerical calculation to other methods.

Also, SPOT has more effective features of optimization than the conventional methods on optimization problems of an unknown system or narrow solution space that leads to difficulty finding a feasible solution.

References

- Kirkpatrick, S., Gelatt Jr., C.D. and Vecchi, M.P. (1983) Optimization by Simulated Annealing. Science, 220, 671-680. http://dx.doi.org/10.1126/science.220.4598.671

- Terasaki, M., Kondo, M., Yoshida, H., Yamaguchi, K. and Ishikawa, Y. (2004) Integrated Optimization of Airplane Design and Flight Trajectory by New Optimization Method Using a Stochastic Process. In: CONPUTATIONAL MECHANICS WCCM VI in Conjunction with APCOM’04, Tsinghua University Press & Springer-Verlag, Beijing, 328.

- Yoshida, H., Konno, T., Yamaguchi, K. and Ishikawa, Y. (2005) New Optimization Method Using Stochastic Process Based on Path Integral. Journal of the Japan Society for Computational Methods in Engineering, 5, 145-150. (in Japanese)

- Yoshida, H., Yamaguchi, K. and Ishikawa, Y. (2006) Application of a New Optimization Method for System Design. Joint 3rd International Conference on Soft Computing and Intelligent Systems (SCIS) and 7th International Symposium on Advanced Intelligent Systems (ISIS), Tokyo, 20-24 September 2006, 1542-1547.

- Yoshida, H., Yamaguchi, K. and Ishikawa, Y. (2007) Design Tool Using a New Optimization Method Based on a Stochastic Process. Transactions of the Japan Society for Aeronautical and Space Sciences, 49, 220-230. http://dx.doi.org/10.2322/tjsass.49.220

- Feynman, R.P. and Hibbs, A.R. (1965) Quantum Mechanics and Path Integrals. McGraw-Hill, New York.

- Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H. and Teller, E. (1953) Equation of State Calculations by Fast Computing Machines. Journal of Chemical Physics, 21, 1087-1092. http://dx.doi.org/10.1063/1.1699114

- Nakane, M., Kobayashi, D., Yoshida, H. and Ishikawa, Y. (2009) Feasibility Study on Single Stage to Orbit Space Plane with RBCC Engine. 16th AIAA/DLR/DGLR International Space Planes and hypersonic Systems and Technologies Conference, Bremen, AIAA 2009-7331. http://dx.doi.org/10.2514/6.2009-7331

- Ishimori, Y., Nakane, M., Ishikawa, Y., Yoshida, H. and Yamaguchi, K. (2011) Feasibility Study for Spaceplane’s Concepts in Ascent Phase Using the Optimization Method. Journal of the Japan Society for Aeronautical and Space Sciences, 59, 291-297. (in Japanese) http://dx.doi.org/10.2322/jjsass.59.291

- Suzuki, S. and Kawamura, N. (1996) Simultaneous Optimization of Sailplane Design and Its Flight Trajectory. Journal of Aircraft, 33, 567-571. http://dx.doi.org/10.2514/3.46982

Nomenclature