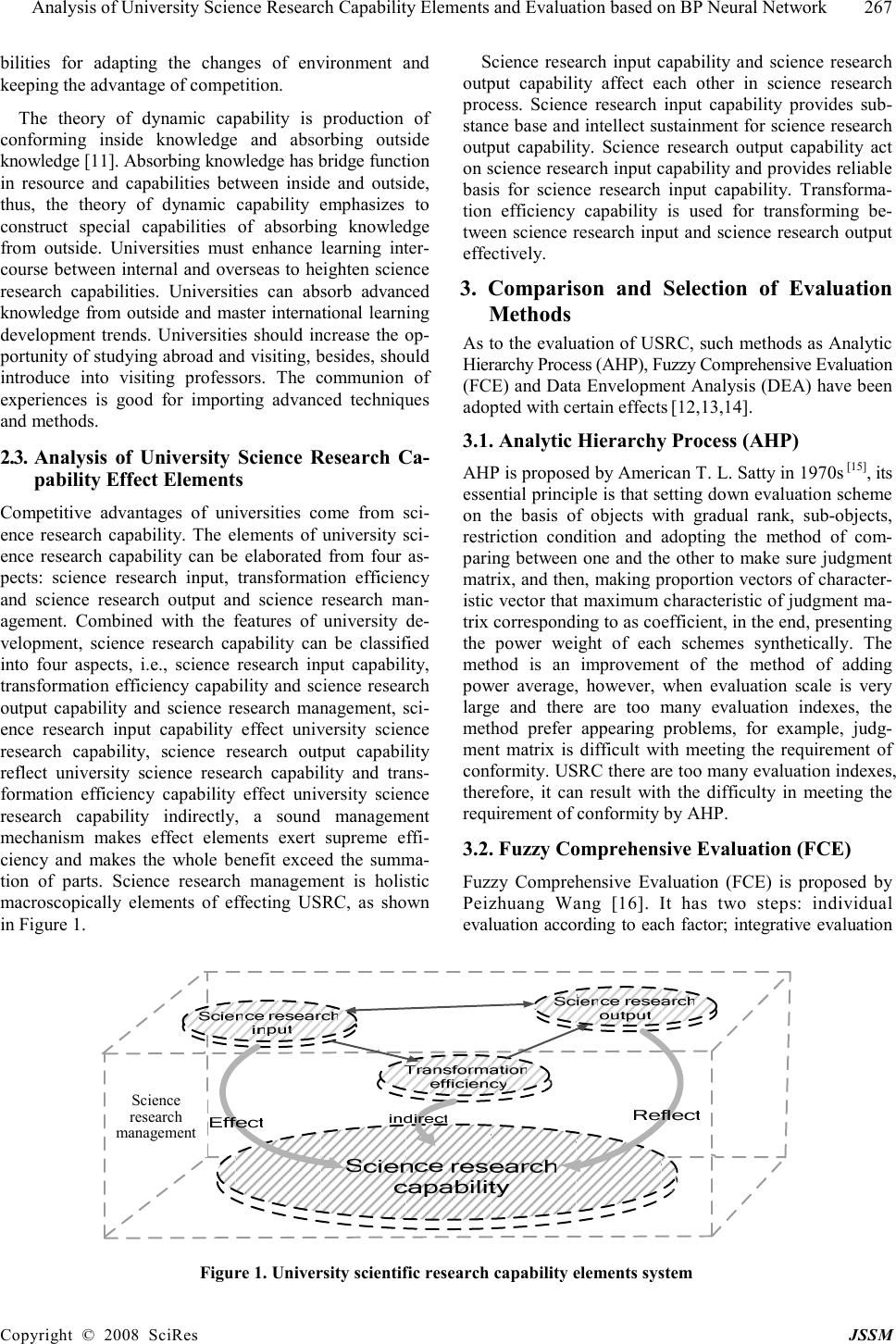

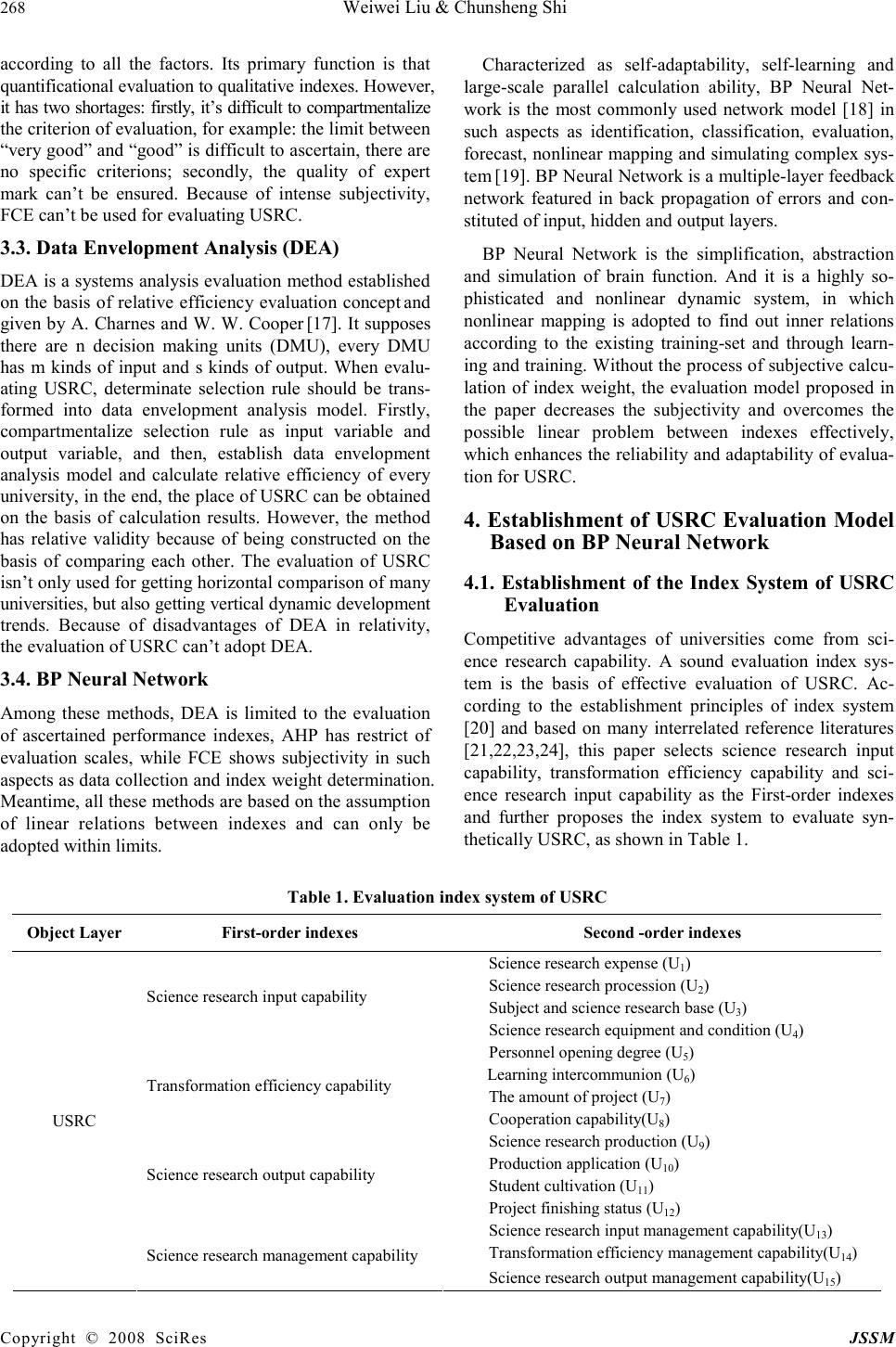

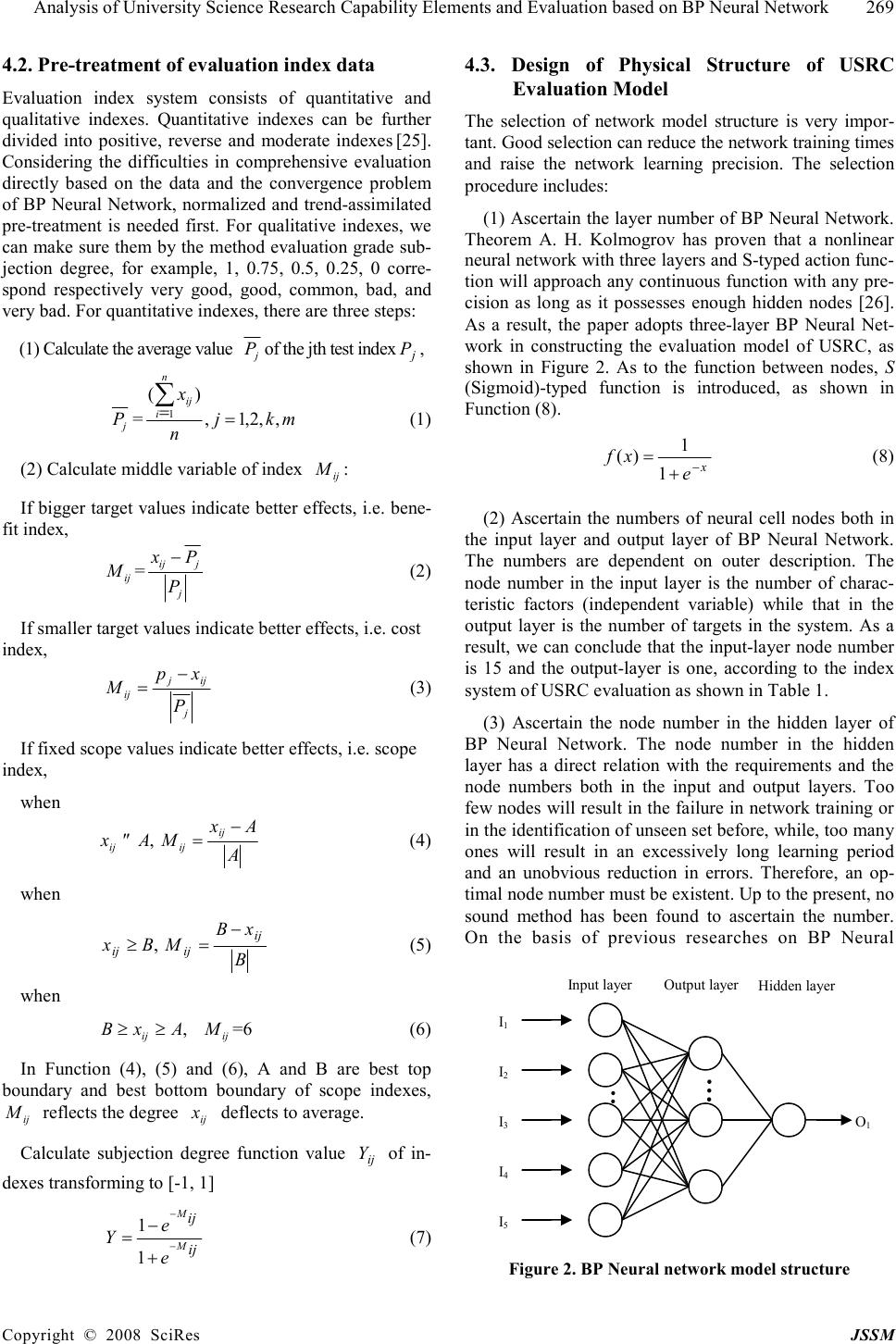

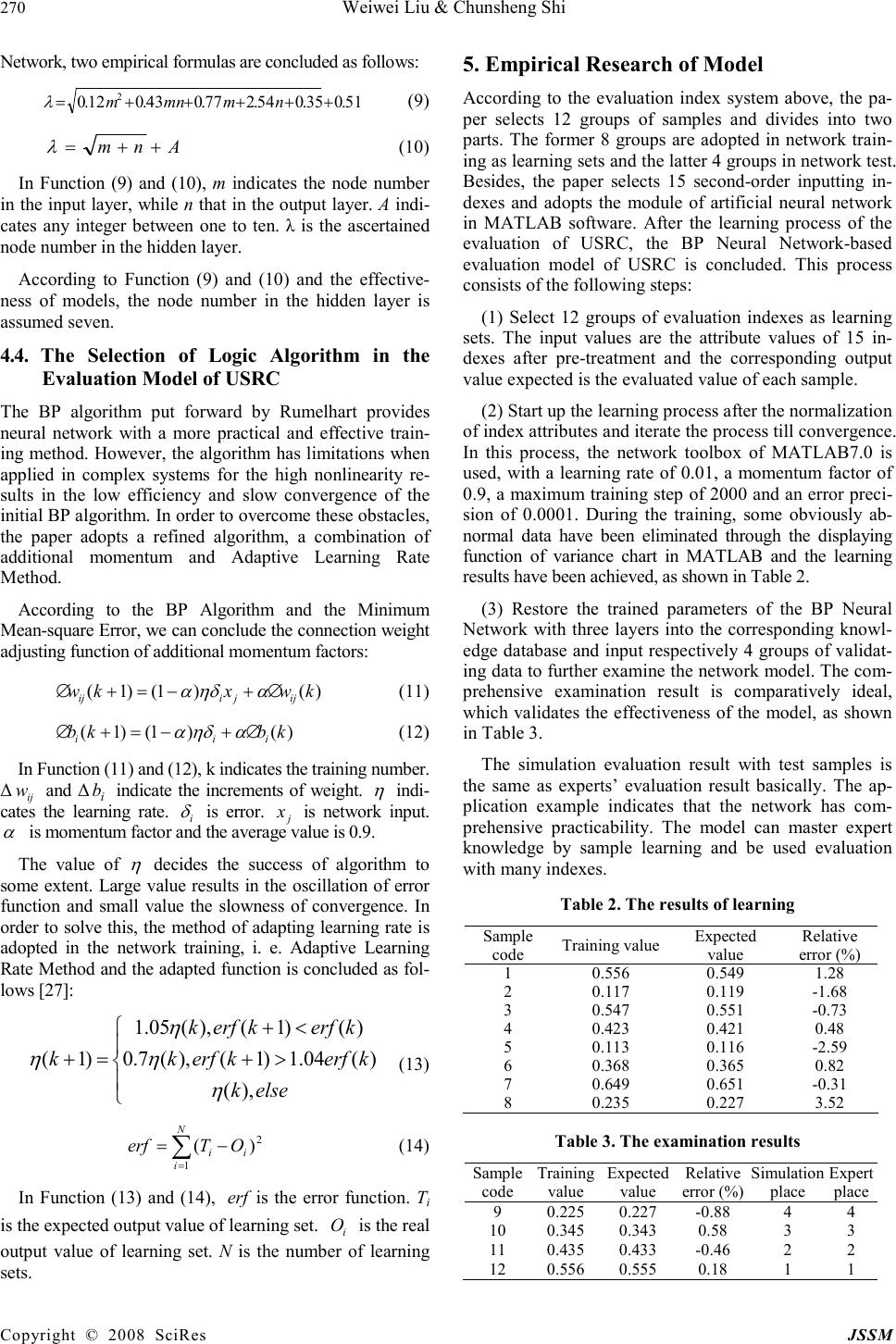

J. Serv. Sci. & Management, 2008, 1: 266-271. Published Online December 2008 in SciRes (www.SciRP.org/journal/jssm). Copyright © 2008 SciRes JSSM

Analysis of University Science Research Capability Elements and Evaluation based on BP Neural Network

Weiwei Liu & Chunsheng Shi
School of Management, Harbin Institute of Technology
Email: challenge10760@163.com

Received October 28th, 2008; revised December 5th, 2008; accepted December 14th, 2008.

ABSTRACT

After analyzing the elements that affect University Science Research Capability (USRC) on the basis of dynamic capability theory, this paper studies the evaluation of USRC by combining the nature of university science research with the highly self-organizing, self-adaptive and self-learning characteristics of the Back Propagation (BP) neural network. An evaluation index system for USRC is constructed, and a three-layer BP neural network with a 15-7-1 structure is presented to evaluate USRC. The work provides a BP neural network-based methodology for evaluating USRC from multiple inputs.

Keywords: BP neural network, science research, capability evaluation

1. Introduction

The capabilities of a university essentially determine its competitive advantage and management performance [1]. It is therefore worthwhile for universities to accumulate, develop, evaluate and utilize their capabilities. Science research capability is now at the core of a university and is an important indicator of a strong university, so universities pay close attention to cultivating and enhancing their capabilities, especially their science research capability. It is thus necessary to analyze and evaluate the elements of university science research capability. A number of studies have evaluated competitive capabilities [2,3,4,5,6], but few have addressed the evaluation of science research capability in universities.
To fill this gap, the paper first investigates the elements of university science research capability based on dynamic capability theory, then constructs a BP neural network evaluation model, and finally applies the model in a simulation evaluation, aiming to provide both theoretical and empirical perspectives on the cultivation of university science research capability.

2. Analysis of USRC Based on the Theory of Dynamic Capability

2.1. The Theory of Dynamic Capability

The theory of dynamic capability was first proposed by Teece, Pisano and Shuen in "Firm Capability, Resource and Strategic concept" [7], and was gradually developed and refined by the same authors in "Dynamic Capabilities and Strategic Management" [8]. They defined dynamic capability as the ability to integrate, build and reconfigure internal and external competences to address rapidly changing environments. The definition has two aspects: "dynamic" means that an enterprise must renew its capabilities to adapt to a changing environment, and "capability" means that strategic management plays a key role in that renewal. Some scholars argue that capability can be defined as the aggregate of an enterprise's knowledge, and that the capability which changes capabilities is technological knowledge [9].

2.2. Analysis of USRC Based on the Characteristics of Dynamic Capability

The theory of dynamic capability grew out of resource-based theory and absorbs many viewpoints of core capability theory, so it shares characteristics of core capability such as value and uniqueness. However, dynamic capability is the capability that changes capabilities, and its essential difference from core capability lies in its nature of exploitation [10].
University science research activities cannot be separated from their resource base; the products of university science research have value in application and dissemination; and the science research characteristics of each university differ from those of others, so an advantage in science research competition rests on uniqueness. Universities should continually renew their science research capabilities to adapt to environmental change and keep their competitive advantage.

Dynamic capability is the product of integrating internal knowledge and absorbing external knowledge [11]. Absorbed knowledge bridges internal and external resources and capabilities, so the theory of dynamic capability emphasizes building specific capabilities for absorbing knowledge from outside. Universities must strengthen academic exchange at home and abroad to raise their science research capabilities, absorb advanced knowledge from outside and keep up with international research trends. They should increase opportunities for study and visits abroad and should also invite visiting professors; such exchanges of experience help import advanced techniques and methods.

2.3. Analysis of the Elements Affecting University Science Research Capability

The competitive advantages of universities come from science research capability. The elements of university science research capability can be elaborated from four aspects: science research input, transformation efficiency, science research output and science research management.
Combined with the features of university development, science research capability can be classified into four aspects: science research input capability, transformation efficiency capability, science research output capability and science research management capability. Science research input capability affects USRC directly, science research output capability reflects USRC, and transformation efficiency capability affects USRC indirectly. A sound management mechanism lets each element work at its highest efficiency and makes the whole exceed the sum of its parts, so science research management is the holistic, macro-level element affecting USRC, as shown in Figure 1.

Science research input capability and science research output capability affect each other in the research process. Input capability provides the material base and intellectual support for output capability, while output capability feeds back on input capability and provides a reliable basis for it. Transformation efficiency capability determines how effectively science research input is transformed into science research output.

Figure 1. University scientific research capability elements system

3. Comparison and Selection of Evaluation Methods

Methods such as the Analytic Hierarchy Process (AHP), Fuzzy Comprehensive Evaluation (FCE) and Data Envelopment Analysis (DEA) have been applied to the evaluation of USRC with some success [12,13,14].

3.1. Analytic Hierarchy Process (AHP)

AHP was proposed by the American scholar T. L. Saaty in the 1970s [15]. Its essential principle is to arrange the evaluation problem as a hierarchy of objectives, sub-objectives, constraints and alternatives; to build judgment matrices by pairwise comparison; to take the normalized eigenvector corresponding to the maximum eigenvalue of each judgment matrix as the weight coefficients; and finally to synthesize the overall weight of each alternative. The method improves on the simple weighted-average method. However, when the evaluation scale is large and there are many evaluation indexes, problems tend to arise; for example, it becomes difficult for the judgment matrices to satisfy the consistency requirement. Because the evaluation of USRC involves many indexes, AHP would have difficulty meeting this requirement.

3.2. Fuzzy Comprehensive Evaluation (FCE)

Fuzzy Comprehensive Evaluation (FCE) was proposed by Peizhuang Wang [16]. It has two steps: an individual evaluation for each factor, followed by an integrated evaluation across all factors. Its main strength is the quantitative evaluation of qualitative indexes. It has two shortcomings, however. First, it is difficult to partition the evaluation criteria: the boundary between "very good" and "good", for example, is hard to define, and no specific criteria exist. Second, the quality of expert scoring cannot be guaranteed. Because of this strong subjectivity, FCE is not suitable for evaluating USRC.

3.3. Data Envelopment Analysis (DEA)

DEA is a systems-analysis evaluation method based on the concept of relative efficiency, proposed by A. Charnes and W. W. Cooper [17].
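For reference, the relative-efficiency idea behind DEA can be stated with the ratio form of the basic CCR model (Charnes, Cooper and Rhodes). The notation below is our own and is not taken from the paper: for n DMUs with m inputs and s outputs, DMU_0 is the unit under evaluation, x_{ij} is input i and y_{rj} is output r of DMU j, and v_i, u_r are the weights the model is free to choose.

```latex
\begin{aligned}
\max_{u,\,v}\quad & h_0 = \frac{\sum_{r=1}^{s} u_r\, y_{r0}}{\sum_{i=1}^{m} v_i\, x_{i0}} \\
\text{s.t.}\quad & \frac{\sum_{r=1}^{s} u_r\, y_{rj}}{\sum_{i=1}^{m} v_i\, x_{ij}} \le 1, \qquad j = 1, \ldots, n, \\
& u_r \ge 0,\quad v_i \ge 0, \qquad r = 1, \ldots, s;\; i = 1, \ldots, m.
\end{aligned}
```

Because every ratio is capped by the best-performing units in the same sample, a score of h_0 = 1 means "efficient relative to these peers" rather than efficient in any absolute sense; this is the relativity on which the objection to DEA rests.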
DEA assumes that there are n decision making units (DMUs), each with m kinds of input and s kinds of output. To evaluate USRC, the selection criteria must be transformed into a DEA model: first, the criteria are partitioned into input variables and output variables; then the DEA model is established and the relative efficiency of each university is calculated; finally, the standing of each university's USRC is obtained from the results. However, because the method is built on comparisons among the units themselves, its efficiency scores are only relative. The evaluation of USRC should support not only horizontal comparison among universities but also vertical tracking of their development trends over time. Because of this relativity, DEA is not adopted for evaluating USRC.

3.4. BP Neural Network

Among these methods, DEA is limited to evaluation with fixed performance indexes, AHP is restricted by evaluation scale, and FCE is subjective in aspects such as data collection and the determination of index weights. Moreover, all of these methods assume linear relations between indexes and can only be applied within limits.

Characterized by self-adaptability, self-learning and large-scale parallel computation, the BP neural network is one of the most widely used network models [18] in areas such as identification, classification, evaluation, forecasting, nonlinear mapping and the simulation of complex systems [19]. It is a multi-layer feedforward network trained by the back propagation of errors and consisting of input, hidden and output layers.

The BP neural network is a simplification, abstraction and simulation of brain function. It is a highly sophisticated nonlinear dynamic system that uses nonlinear mapping to discover the inner relations in an existing training set through learning and training.
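To make the forward pass and the back propagation of errors concrete, the following is a minimal pure-Python sketch of a three-layer network with sigmoid units, trained by plain full-batch gradient descent on the squared error. It is illustrative only: the class name `ThreeLayerBP`, the uniform(-0.5, 0.5) weight initialization, the 2-4-1 size and the toy data are assumptions, and the paper's improved training rule (momentum plus adaptive learning rate) is deliberately left out.

```python
import math
import random


def sigmoid(z: float) -> float:
    """S-shaped (logistic) activation f(z) = 1 / (1 + e^-z)."""
    return 1.0 / (1.0 + math.exp(-z))


class ThreeLayerBP:
    """Hypothetical input -> hidden -> single-output network trained by back propagation."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # w1[h][i] connects input i to hidden node h; w2[h] connects hidden node h to the output
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        """Feedforward pass: return (hidden activations, network output)."""
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        o = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, o

    def sse(self, data) -> float:
        """Sum of squared errors over (x, target) pairs."""
        return sum((t - self.forward(x)[1]) ** 2 for x, t in data)

    def train_epoch(self, data, lr: float) -> None:
        """One full-batch gradient-descent step on 0.5 * SSE."""
        g_w1 = [[0.0] * len(row) for row in self.w1]
        g_b1 = [0.0] * len(self.b1)
        g_w2 = [0.0] * len(self.w2)
        g_b2 = 0.0
        for x, t in data:
            h, o = self.forward(x)
            # Output error term, then the error propagated back to each hidden node
            delta_o = (t - o) * o * (1.0 - o)
            delta_h = [delta_o * w * hj * (1.0 - hj) for w, hj in zip(self.w2, h)]
            for j, hj in enumerate(h):
                g_w2[j] += delta_o * hj
                g_b1[j] += delta_h[j]
                for i, xi in enumerate(x):
                    g_w1[j][i] += delta_h[j] * xi
            g_b2 += delta_o
        # The delta terms already point along the negative gradient, so updates add them
        for j in range(len(self.w2)):
            self.w2[j] += lr * g_w2[j]
            self.b1[j] += lr * g_b1[j]
            for i in range(len(self.w1[j])):
                self.w1[j][i] += lr * g_w1[j][i]
        self.b2 += lr * g_b2


# Example on hypothetical toy data: target = 0.2 + 0.6 * x0 * x1 (a nonlinear mapping)
data = [([a / 2, b / 2], 0.2 + 0.6 * (a / 2) * (b / 2)) for a in range(3) for b in range(3)]
net = ThreeLayerBP(n_in=2, n_hidden=4)
print("SSE before:", net.sse(data))
for _ in range(500):
    net.train_epoch(data, lr=0.5)
print("SSE after:", net.sse(data))
```

Plain gradient descent keeps the two phases visible: the forward pass computes activations layer by layer, and the backward pass reuses those activations to push the output error back through `w2` before any weight is changed.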
Because the BP neural network does not require subjective calculation of index weights, the evaluation model proposed in this paper reduces subjectivity and avoids assuming linear relations among the indexes, which improves the reliability and adaptability of USRC evaluation.

4. Establishment of the USRC Evaluation Model Based on BP Neural Network

4.1. Establishment of the Index System of USRC Evaluation

A sound evaluation index system is the basis of effective USRC evaluation. Following the principles for establishing index systems [20] and drawing on related literature [21,22,23,24], this paper selects science research input capability, transformation efficiency capability, science research output capability and science research management capability as the first-order indexes and proposes the index system for the comprehensive evaluation of USRC shown in Table 1.

Table 1. Evaluation index system of USRC

| Object layer | First-order index | Second-order indexes |
|---|---|---|
| USRC | Science research input capability | Science research expense (U1); Science research team (U2); Disciplines and science research bases (U3); Science research equipment and conditions (U4) |
| | Transformation efficiency capability | Personnel openness (U5); Academic exchange (U6); Number of projects (U7); Cooperation capability (U8) |
| | Science research output capability | Science research products (U9); Application of products (U10); Student cultivation (U11); Project completion status (U12) |
| | Science research management capability | Science research input management capability (U13); Transformation efficiency management capability (U14); Science research output management capability (U15) |

4.2. Pre-treatment of Evaluation Index Data

The evaluation index system contains both quantitative and qualitative indexes. Quantitative indexes can be further divided into positive, reverse and moderate indexes [25]. Because comprehensive evaluation directly on the raw data is difficult, and to help the BP neural network converge, the data are first normalized and made to point in the same direction (trend-assimilated).

For qualitative indexes, values are assigned by grade membership: for example, 1, 0.75, 0.5, 0.25 and 0 correspond to very good, good, average, poor and very poor, respectively.

For quantitative indexes, there are three steps.

(1) Calculate the average value $\bar{P}_j$ of the jth index $P_j$:

$$\bar{P}_j = \frac{1}{n}\sum_{i=1}^{n} x_{ij}, \qquad j = 1, 2, \ldots, m \tag{1}$$

(2) Calculate the middle variable $M_{ij}$ of each index value.

If larger values are better (benefit index):

$$M_{ij} = \frac{x_{ij} - \bar{P}_j}{\bar{P}_j} \tag{2}$$

If smaller values are better (cost index):

$$M_{ij} = \frac{\bar{P}_j - x_{ij}}{\bar{P}_j} \tag{3}$$

If values within a fixed range are best (scope index):

$$M_{ij} = \frac{x_{ij} - A}{A}, \qquad x_{ij} \le A \tag{4}$$

$$M_{ij} = \frac{B - x_{ij}}{B}, \qquad x_{ij} \ge B \tag{5}$$

$$M_{ij} = 6, \qquad A \le x_{ij} \le B \tag{6}$$

In Equations (4), (5) and (6), A and B are the lower and upper bounds of the optimal range of a scope index. $M_{ij}$ reflects how far $x_{ij}$ deviates from the average (or from the optimal range).

(3) Calculate the membership function value $Y_{ij}$, which maps each index into (-1, 1):

$$Y_{ij} = \frac{1 - e^{-M_{ij}}}{1 + e^{-M_{ij}}} \tag{7}$$

4.3. Design of the Physical Structure of the USRC Evaluation Model

The choice of network structure is very important: a good choice reduces the number of training iterations and improves learning precision. The selection procedure is as follows.

(1) Determine the number of layers of the BP neural network. According to the theorem of A. N. Kolmogorov, a three-layer nonlinear neural network with S-shaped activation functions can approximate any continuous function to any precision, provided it has enough hidden nodes [26].
Accordingly, the paper adopts a three-layer BP neural network to construct the USRC evaluation model, as shown in Figure 2. The sigmoid (S-shaped) function of Equation (8) is used as the activation function between nodes:

$$f(x) = \frac{1}{1 + e^{-x}} \tag{8}$$

(2) Determine the numbers of nodes in the input and output layers. These depend on the external description of the problem: the number of input nodes equals the number of characteristic factors (independent variables), and the number of output nodes equals the number of targets of the system. According to the USRC index system in Table 1, the input layer therefore has 15 nodes and the output layer has one.

(3) Determine the number of nodes in the hidden layer. This number depends directly on the requirements of the problem and on the numbers of input and output nodes. Too few hidden nodes may cause training to fail or leave the network unable to recognize samples it has not seen before, while too many lead to an excessively long learning period without an obvious reduction in error. An optimal number therefore exists, but no sound method for determining it has yet been found. Previous research on BP neural networks offers two empirical formulas:

$$\lambda = \sqrt{0.43mn + 0.12n^2 + 2.54m + 0.77n + 0.35} + 0.51 \tag{9}$$

$$\lambda = \sqrt{m + n} + A \tag{10}$$

In Equations (9) and (10), m is the number of input nodes, n is the number of output nodes, A is an integer between 1 and 10, and λ is the resulting number of hidden nodes. With m = 15 and n = 1, Equation (9) gives λ ≈ 7.28 and Equation (10) gives λ = 4 + A. Based on these values and on model effectiveness, the hidden layer is given seven nodes.

Figure 2. BP neural network model structure (input nodes I1, I2, ..., hidden layer, output node O1)

4.4. The Selection of the Learning Algorithm in the USRC Evaluation Model

The BP algorithm proposed by Rumelhart provides neural networks with a practical and effective training method. However, when it is applied to complex systems, strong nonlinearity makes the original BP algorithm inefficient and slow to converge. To overcome these obstacles, the paper adopts an improved algorithm that combines additional momentum with the adaptive learning rate method.

From the BP algorithm and the minimum mean-square error criterion, the weight and bias adjustment rules with an additional momentum factor are:

$$\Delta w_{ij}(k+1) = (1 - \alpha)\,\eta\,\delta_i\,x_j + \alpha\,\Delta w_{ij}(k) \tag{11}$$

$$\Delta b_i(k+1) = (1 - \alpha)\,\eta\,\delta_i + \alpha\,\Delta b_i(k) \tag{12}$$

In Equations (11) and (12), k is the training iteration, $\Delta w_{ij}$ and $\Delta b_i$ are the weight and bias increments, η is the learning rate, $\delta_i$ is the error term of node i, $x_j$ is the input to the connection, and α is the momentum factor, typically set to 0.9.

The value of η largely determines whether the algorithm succeeds: a large value makes the error function oscillate, and a small value slows convergence. To address this, the adaptive learning rate method is used in network training, with the following adjustment rule [27]:

$$\eta(k+1) = \begin{cases} 1.05\,\eta(k), & \mathrm{erf}(k+1) < \mathrm{erf}(k) \\ 0.7\,\eta(k), & \mathrm{erf}(k+1) > 1.04\,\mathrm{erf}(k) \\ \eta(k), & \text{otherwise} \end{cases} \tag{13}$$

$$\mathrm{erf} = \sum_{i=1}^{N} (T_i - O_i)^2 \tag{14}$$

In Equations (13) and (14), erf is the error function, $T_i$ is the expected output of the ith learning sample, $O_i$ is its actual output, and N is the number of learning samples.

5. Empirical Research of the Model

Based on the evaluation index system above, the paper selects 12 groups of samples and divides them into two parts: the first 8 groups are used as learning sets for network training, and the last 4 groups are used for network testing.
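Before the empirical run, the pre-treatment and training formulas of Sections 4.2 to 4.4 can be transcribed into small helper functions. The Python below is a hedged sketch of our own, not the authors' MATLAB implementation: every function name is hypothetical, values exactly at A or B are treated as inside the optimal range (the paper's ranges for Equations (4) to (6) overlap at the bounds), and the in-range value M_ij = 6 is kept as printed. Equation (7) is computed as tanh(M/2), which is algebraically identical to (1 - e^-M)/(1 + e^-M).

```python
import math


def deviations(column, kind, bounds=None):
    """Middle variable M_ij for one index column, following Eqs. (1)-(6).

    kind is "benefit" (larger is better), "cost" (smaller is better) or
    "scope" (values inside [A, B] are best; pass bounds=(A, B)).
    """
    mean = sum(column) / len(column)                      # Eq. (1): column average
    if kind == "benefit":
        return [(x - mean) / mean for x in column]        # Eq. (2)
    if kind == "cost":
        return [(mean - x) / mean for x in column]        # Eq. (3)
    if kind == "scope":
        a, b = bounds
        result = []
        for x in column:
            if x < a:
                result.append((x - a) / a)                # Eq. (4): below the range
            elif x > b:
                result.append((b - x) / b)                # Eq. (5): above the range
            else:
                result.append(6.0)                        # Eq. (6): inside [A, B], value as printed
        return result
    raise ValueError(f"unknown index kind: {kind!r}")


def membership(m: float) -> float:
    """Eq. (7): (1 - e^-M) / (1 + e^-M), written as tanh(M / 2) to avoid overflow."""
    return math.tanh(m / 2.0)


def hidden_nodes(m: int, n: int, a: int):
    """Candidate hidden-layer sizes from Eq. (9) and Eq. (10), with 1 <= a <= 10."""
    if not 1 <= a <= 10:
        raise ValueError("A must be an integer between 1 and 10")
    eq9 = math.sqrt(0.43 * m * n + 0.12 * n * n + 2.54 * m + 0.77 * n + 0.35) + 0.51
    eq10 = math.sqrt(m + n) + a
    return eq9, eq10


def momentum_increment(prev_inc: float, eta: float, delta: float, x: float = 1.0,
                       alpha: float = 0.9) -> float:
    """Eq. (11) for a weight; with the default x = 1.0 it is Eq. (12) for a bias."""
    return (1.0 - alpha) * eta * delta * x + alpha * prev_inc


def adapt_learning_rate(eta: float, erf_new: float, erf_old: float) -> float:
    """Eq. (13): grow eta by 5% after an improvement, cut it to 70% after a >4% rise."""
    if erf_new < erf_old:
        return 1.05 * eta
    if erf_new > 1.04 * erf_old:
        return 0.7 * eta
    return eta


def sum_squared_error(targets, outputs) -> float:
    """Eq. (14): the paper's erf, the sum of (T_i - O_i)^2 over the learning samples."""
    return sum((t - o) ** 2 for t, o in zip(targets, outputs))


# Example: pre-treat a hypothetical benefit index and size the hidden layer
raw_benefit = [2.0, 4.0, 6.0]
print([membership(mij) for mij in deviations(raw_benefit, "benefit")])
print(hidden_nodes(m=15, n=1, a=3))
```

The choice a = 3 in the example is only the value that makes Equation (10) agree with the seven hidden nodes adopted in Section 4.3; the function itself accepts any A from 1 to 10.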
In addition, the 15 second-order indexes are used as inputs, and the artificial neural network module of MATLAB is used. After learning, the BP neural network-based USRC evaluation model is obtained. The process consists of the following steps:

(1) Select the evaluation indexes of the 8 training groups as learning sets. The input values are the attribute values of the 15 indexes after pre-treatment, and the expected output is the evaluation value of each sample.

(2) Start the learning process after normalizing the index attributes, and iterate until convergence. The neural network toolbox of MATLAB 7.0 is used, with an initial learning rate of 0.01, a momentum factor of 0.9, a maximum of 2000 training epochs and an error goal of 0.0001. During training, some obviously abnormal data were eliminated using MATLAB's variance chart display, and the learning results in Table 2 were obtained.

(3) Store the trained parameters of the three-layer BP neural network in the corresponding knowledge base and input the 4 groups of validation data to further examine the network model. The overall examination result is fairly good, which supports the effectiveness of the model, as shown in Table 3.

The simulated evaluation of the test samples is essentially consistent with the experts' evaluation. This application example indicates that the network is practical: the model can capture expert knowledge by learning from samples and can be used for evaluation with many indexes.

Table 2. The results of learning

| Sample code | Training value | Expected value | Relative error (%) |
|---|---|---|---|
| 1 | 0.556 | 0.549 | 1.28 |
| 2 | 0.117 | 0.119 | -1.68 |
| 3 | 0.547 | 0.551 | -0.73 |
| 4 | 0.423 | 0.421 | 0.48 |
| 5 | 0.113 | 0.116 | -2.59 |
| 6 | 0.368 | 0.365 | 0.82 |
| 7 | 0.649 | 0.651 | -0.31 |
| 8 | 0.235 | 0.227 | 3.52 |

Table 3. The examination results

| Sample code | Training value | Expected value | Relative error (%) | Simulated rank | Expert rank |
|---|---|---|---|---|---|
| 9 | 0.225 | 0.227 | -0.88 | 4 | 4 |
| 10 | 0.345 | 0.343 | 0.58 | 3 | 3 |
| 11 | 0.435 | 0.433 | -0.46 | 2 | 2 |
| 12 | 0.556 | 0.555 | 0.18 | 1 | 1 |

6. Conclusions

Based on dynamic capability theory, this paper analyzes the elements affecting USRC, constructs a USRC evaluation index system that reflects the characteristics of universities, and presents a three-layer BP neural network with a 15-7-1 structure to evaluate USRC, using a comprehensive evaluation method built on the highly self-organizing, self-adaptive and self-learning BP neural network. The method builds a comprehensive evaluation model that combines quantitative and qualitative indexes and is closer to the way humans reason. Satisfactory results were obtained in a simulation test. The advantages of the method are that it reduces the subjectivity and randomness of traditional evaluation methods and improves the precision and objectivity of the results; it matches the empirical situation better as the number of training samples increases; and it avoids assuming linear relations among the indexes, improving the reliability and adaptability of the evaluation. Compared with traditional USRC evaluation methods, the BP neural network-based method is therefore more practical.

A BP neural network can learn from arbitrary sample parameters and construct different evaluation models. After successful learning, it can produce reliable evaluation results on practical test samples, and its analysis becomes more accurate as training samples accumulate, so the method has broad applicability.

REFERENCES

[1] V. M. Giménez and J. L. Martínez, "Cost efficiency in the university: A departmental evaluation model," [J]. Economics of Education Review, Vol. 25, pp. 543-553, 2006.
[2] P. Boccardelli and M. G. Magnusson, "Dynamic capabilities in early-phase entrepreneurship," [J]. Knowledge and Process Management, Vol. 13, pp. 162-174, 2006.
[3] D. J. Teece, "Explicating dynamic capabilities: The nature and microfoundations of (sustainable) enterprise performance," [J]. Strategic Management Journal, Vol. 28, pp. 1319-1350, 2007.
[4] C. E. Helfat and M. A. Peteraf, "The dynamic resource-based view: Capability lifecycles," [J]. Strategic Management Journal, Vol. 24, pp. 997-1010, 2003.
[5] B. McEvily and A. Marcus, "Embedded ties and the acquisition of competitive capabilities," [J]. Strategic Management Journal, Vol. 26, pp. 1033-1055, 2005.
[6] D. Hussey, "Company analysis: Determining strategic capability," [J]. Strategic Change, Vol. 11, pp. 43-52, 2002.
[7] G. S. Wang, "Enterprise theory: Capability theory," [M]. China Economic Publishing House, pp. 69-70, 2006.
[8] D. J. Teece, G. Pisano, and A. Shuen, "Dynamic capabilities and strategic management," [J]. Strategic Management Journal, Vol. 18, No. 7, pp. 509-533, 1997.
[9] J. W. Dong, J. Z. Huang, and Z. H. Chen, "An intellectual model of the evolution of dynamic capabilities and a relevant case study of a Chinese enterprise," [J]. Management World, No. 4, pp. 117-127, 2004.
[10] J. Z. Huang and L. W. Tan, "From capability to dynamic capability: Transformation of the points of stratagem," [J]. Economy Management, No. 22, pp. 13-17, 2002.
[11] K. M. Eisenhardt and J. A. Martin, "Dynamic capabilities: What are they?" [J]. Journal of Evolutionary Economics, No. 3, pp. 519-523, 2000.
[12] T. M. Crimmins, J. E. de Steiguer, and D. Dennis, "AHP as a means for improving public participation: A pre-post experiment with university students," [J]. Forest Policy and Economics, Vol. 7, pp. 501-514, May 2005.
[13] A. Golec and E. Kahya, "A fuzzy model for competency-based employee evaluation and selection," [J]. Computers & Industrial Engineering, Vol. 52, pp. 143-161, February 2007.
[14] J. C. Glass, G. McCallion, and D. G. McKillop, "Implications of variant efficiency measures for policy evaluations in UK higher education," [J]. Socio-Economic Planning Sciences, Vol. 40, No. 2, pp. 119-142, June 2006.
[15] L. Bodin and S. I. Gass, "On teaching the analytic hierarchy process," [J]. Computers & Operations Research, pp. 1487-1497, 2003.
[16] D. Du, Q. H. Pang, and Y. Wu, "Modern synthesis evaluation methods and cases choiceness," [M]. Tsinghua University Press, pp. 34-40, 2008.
[17] H. Hao and J. F. Zhong, "System analysis and evaluation methods," [M]. Economic Science Press, pp. 185-239, 2007.
[18] S. L. Sun, "The application study of supplier evaluation model in supply chain," [J]. Modernization of Management, Vol. 1, pp. 62-64, 2007.
[19] F. S. Wang and S. Z. Peng, "Study on the measurement of the activity-based value output of industry value chain based on BP neural network," [J]. Journal of Harbin Institute of Technology, Vol. 1, pp. 91-93, 2006.
[20] Y. J. Guo, "Theory, method and application of comprehensive evaluation," [M]. Beijing: Science Press, pp. 14-29, 2007.
[21] W. Z. Zhu, "The establishment of systematized evaluation indexes to university science research capacity," [J]. Journal of Anhui University of Technology and Science, Vol. 18, pp. 40-44, 2003.
[22] J. Q. Cai and L. M. Cheng, "Research on evaluation of university science research capability," [J]. R & D Management, Vol. 10, pp. 76-80, 1998.
[23] X. Min, C. R. Dai, and H. Bin, "Research on evaluation of university science research capability based on theory of fuzzy mathematics," [J]. Science and Technology Management Research, Vol. 8, pp. 185-187, 2006.
[24] B. Liu and X. L. Wang, "Research on evaluation method of university science research capability," [J]. Science of Science and Management of S. & T., Vol. 12, pp. 83-85, 2003.
[25] Y. H. Li and Y. Q. Hu, "Comprehensive evaluation based on BP artificial neural network on enterprises' core competence in the old industrial base," [J]. Commercial Research, Vol. 5, pp. 34-36, 2006.
[26] L. C. Jiao, "Systemic theory of neural network," [M]. Xi'an: Xidian University Press, 1996.
[27] Y. F. Wu and Z. Z. Li, "Application of improved BP nerve network model in forecasting dam safety monitoring values," [J]. Design of Hydroelectric Power Station, Vol. 2, pp. 21-24, 2002.