Applied Mathematics

Vol.05 No.21(2014), Article ID:52232,6 pages

10.4236/am.2014.521322

On Accelerated Singular Value Thresholding Algorithm for Matrix Completion

Li Wang, Jianfeng Hu*, Chuanzhong Chen

School of Mathematics and Statistics, Hainan Normal University, Haikou, China

Email: wlyoume@163.com, *lakerhjf@163.com, czchen@hainnu.edu.cn

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 16 October 2014; revised 8 November 2014; accepted 16 November 2014

ABSTRACT

An accelerated singular value thresholding (SVT) algorithm was introduced for matrix completion in a recent paper [1] , which applies an adaptive line search scheme and improves the convergence rate from O(1/N) for SVT to O(1/N2), where N is the number of iterations. In this paper, we show that it is the same as the Nemirovski’s approach, and then modify it to obtain an accelerate Nemirovski’s technique and prove the convergence. Our preliminary computational results are very favorable.

Keywords:

Matrix Completion, Singular Value Thresholding, Nemirovski’s Line Search Scheme, Adaptive Line Search

1. Introduction

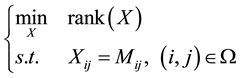

In many practical problems of interest, such as recommender system [2] -[4] , one would like to recover a matrix from a small sampling of its entries. These problems can be formulated as the following matrix completion problem:

(1)

(1)

where  is the given incomplete data matrix, Ω is the set of locations corresponding to the observed entries. The problem (1) is NP-hard because the rank function is non-convex and discontinuous. However, it is known that the rank of a matrix is equal to the number of nonvanishing singular values, and the nuclear norm

is the given incomplete data matrix, Ω is the set of locations corresponding to the observed entries. The problem (1) is NP-hard because the rank function is non-convex and discontinuous. However, it is known that the rank of a matrix is equal to the number of nonvanishing singular values, and the nuclear norm  stand for the sum of the singular values. Thus, the rank minimization problem (1) can be relaxed as following convex program problem:

stand for the sum of the singular values. Thus, the rank minimization problem (1) can be relaxed as following convex program problem:

(2)

(2)

and Candès and Recht [5] proved that under suitable conditions, the program (1) and (2) are formally equivalent in the sense that they have exactly the same unique solution.

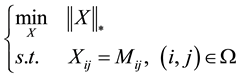

Now, there are many algorithms for solving the model (2), such as singular value thresholding (SVT) algorithm [6] , accelerated proximal gradient (APG) algorithm [7] and so on. The singular value thresholding (SVT) algorithm solves the following problem:

(3)

(3)

where PΩ denotes the orthogonal projector onto the span of matrices vanishing outside of Ω so that the  th component of

th component of  is equal to

is equal to  if

if  and zero otherwise. In [6] , Cai et al. showed that the optimal solution to the problem (3) converges to that of (2) as

and zero otherwise. In [6] , Cai et al. showed that the optimal solution to the problem (3) converges to that of (2) as .

.

However, SVT has only a global convergence rate of , where N is the number of iterations. Then Liu Jun et al. [1] proposed an accelerated SVT algorithm by considering the Lagrange dual problem of (1.3) as follows:

, where N is the number of iterations. Then Liu Jun et al. [1] proposed an accelerated SVT algorithm by considering the Lagrange dual problem of (1.3) as follows:

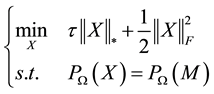

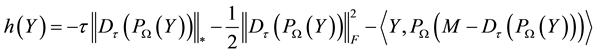

(4)

(4)

where ,

,  is the soft-thresholding op-

is the soft-thresholding op-

erator [1] , and  is convex and continuously differentiable with Lipschitz continuous gradient.

is convex and continuously differentiable with Lipschitz continuous gradient.

In the accelerated SVT algorithm, an adaptive line search scheme was adopted based on Nemirovski’s technique [8] . For reference purpose, in the following we list the related key steps of the algorithm in [1] :

Step 7: compute ;

;

Step 8: if

Step 9: go to step 14;

Step 10: else;

Step 11:

Step 12: end if;

Step 13: end while;

Step 14: set

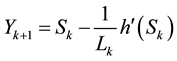

Comparing with Nemirovski’s scheme for updating Lk, i.e.

2. Main Procedure

First, we declare that the value of ω (in step 14) is always not bigger than 2, this means that it is just the same as Nemirovski’s line search scheme.

Since

where L is the Lipschitz gradient constant [10] .

Define function

which along with

From (5), (6) and (7), we have

and

It follows that

that is

Combining (8), (9),

Then, we make a few improvement based on the original algorithm to obtain a revised algorithm. The overall steps can be organized as follows:

Algorithm 1 The Modified ASVT Algorithm

Step 1: Input:

Step 2: Output:

Step 3: for

Step 4: compute

Step 5: compute

Step 6: while 1 do;

Step 7: compute

Step 8: if

Step 9:

Step 10: else;

Step 11:

Step 12: end if;

Step 13: end while;

Step 14: set

Step 15: end for.

The convergence of algorithm 1 is given by the following theorem. And in this algorithm, the upper bounds of the order of convergence have nothing to do with the initial value L1, which means that the modified scheme improved and accelerated the Nemirovski’s technique.

Theorem 1 For large enough k, the approximate solution Yk obtained by the modified algorithm satisfies

where Rh is the distance from the starting point to the optimal solution set.

Proof. According to the convergence of Nemirovski’s algorithm ([8] Theorem

where

Suppose that there exists a positive integer k such that

and then

So we have a recurrence relation of the form

Obviously, as

3. Computational Results

In this section, numerical experiments with MATLAB were performed to compare the performance of the Nemirovski’s technique algorithm and further to gain an insight into the behavior of our approach on synthetic dataset.

Code Ne-SVT and M-ASVT, based on the Nemirovski’s technique SVT method and our modified algorithm, respectively. Specific problems as follows: similar to the paper [1] , we generate m × n matrices M of rank r by randomly selecting two matrices of ML and MR, with the dimension of m × r and r × n, respectively. Each having independent identically distributed Gaussian entries, and setting

where X is the computed solution of an algorithm, then there is using the “error” to evaluate the quality of the algorithm. In addition, we set

Firstly, we compare the relative error between Ne-SVT and M-ASVT for solving the randomly generated low rank matrix problem. The initial parameters as follows: m = 100, n = 50, r = 5, p = 0.9, so meaning that 90% entries are observed. We will recover the other 10% entries by running Ne-SVT and M-ASVT separately. Table 1 reports the relative reconstruction error of different methods after 30, 60, 90 and 120 iterations. We can observe that the convergence rate of M-ASVT is really faster than that of the other, which is consistent with our analysis, and the further shows we can see Figure 1.

Then we can still test these algorithms with different settings on the following different low rank matrix completion problems: 1) Fix the matrix size

Finally, Table 2 shows the comparative results of randomly generated matrix completion problems, in which we choose error < 10−6 as the stop condition. Clearly, we can know that the more smaller τ, the more bigger p,

Table 1. Relative error comparison between Ne-SVT and M-ASVT.

Figure 1. Convergence rate of Ne-SVT and M-ASVT on synthetic data.

Table 2. Comparisons between Ne-SVT and M-ASVT on the synthetic dataset with different settings.

the more smaller rank r and the more bigger the size, then the more smaller the error and the more better efficiently. And we can observe that the M-ASVT performance surpasses Ne-SVT in all cases.

4. Conclusion

In this paper, we point out that the ASVT algorithm [1] is essentially the same as the Nemirovski’s approach, then modify it to obtain the accelerate Nemirovski’s approach and prove the convergence. We also give the comparative results of the convergence rate of Ne-SVT and M-ASVT. The preliminary computational results show that our approach is really more efficient. We empirically choose

Acknowledgements

The research is supported by Natural Science Foundation of Hainan Province of China (No. 114006).

References

- Hu, Y., Zhang, D. and Liu, J. (2012) Accelerated Singular Value Thresholding for Matrix Completion. Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Beijing, 12-16 August 2012, 298-306. http://dx.doi.org/10.1145/2339530.2339581

- Koren, Y. (2008) Factorization Meets the Neighborhood: A Multifaceted Collaborative Filtering Model. Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Las Vegas, 24-27 August 2008, 426-434. http://dx.doi.org/10.1145/1401890.1401944

- Koren, Y. (2010) Collaborative Filtering with Temporal Dynamics. Communications of the ACM, 53, 89-97. http://dx.doi.org/10.1145/1721654.1721677

- Steck, H. (2010) Training and Testing of Recommender Systems on Data Missing Not at Random. Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Washington DC, 25-28 July 2010, 713-722.

- Candès, E.J. and Recht, B. (2009) Exact Matrix Completion via Convex Optimization. Foundations of Computational Mathematics, 9, 717-772. http://dx.doi.org/10.1007/s10208-009-9045-5

- Cai, J.F., Candès, E.J. and Shen, Z. (2010) A Singular Value Thresholding Algorithm for Matrix Completion. SIAM Journal on Optimization, 20, 1956-1982. http://dx.doi.org/10.1137/080738970

- Toh, K.C. and Yun, S. (2010) An Accelerated Proximal Gradient Algorithm for Nuclear Norm Regularized Least Squares Problems. Pacific Journal of Optimization, 6, 615-640.

- Nemirovski, A. (1994) Lecture Notes on Efficient Methods in Convex Programming. http://www2.isye.gatech.edu/~nemirovs/Lect_EMCO.pdf

- Liu, J., Chen, J. and Ye, J. (2009) Large-Scale Sparse Logistic Regression. Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Paris, 28 June-1 July 2009, 547-556. http://dx.doi.org/10.1145/1557019.1557082

- Nesterov, Y. (2003) Introductory Lectures on Convex Optimization: A Basic Course. Kluwer Academic Publishers, Norwell.

NOTES

*Corresponding author.