Applied Mathematics

Vol.09 No.06(2018), Article ID:85751,15 pages

10.4236/am.2018.96052

A Poisson Solver Based on Iterations on a Sylvester System

Michael B. Franklin, Ali Nadim

Institute of Mathematical Sciences, Claremont Graduate University, Claremont, CA, USA

Copyright © 2018 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: June 1, 2018; Accepted: June 26, 2018; Published: June 29, 2018

ABSTRACT

We present an iterative scheme for solving Poisson’s equation in 2D. Using finite differences, we discretize the equation into a Sylvester system, , involving tridiagonal matrices A and B. The iterations occur on this Sylvester system directly after introducing a deflation-type parameter that enables optimized convergence. Analytical bounds are obtained on the spectral radii of the iteration matrices. Our method is comparable to Successive Over-Relaxation (SOR) and amenable to compact programming via vector/array operations. It can also be implemented within a multigrid framework with considerable improvement in performance as shown herein.

Keywords:

Poisson’s Equation, Sylvester System, Multigrid

1. Introduction

Poisson’s equation , an elliptic partial differential equation [1] , was first published in 1813 in the Bulletin de la Société Philomatique by Siméon-Denis Poisson. The equation has since found wide utility in applications such as electrostatics [2] , fluid dynamics [3] , theoretical physics [4] , and engineering [5] . Due to its expansive applicability in the natural sciences, analytic and efficient approximate solution methods have been sought for nearly two centuries. Analytic solutions to Poisson’s equation are unlikely in most scientific applications because the forcing or boundary conditions on the system cannot be explicitly represented by means of elementary functions. For this reason, numerical approximations have been developed, dating back to the Jacobi method in 1845. The linear systems arising from these numerical approximations are solved either directly, using methods like Gaussian elimination, or iteratively. To this day, there are applications solved by direct solvers and others solved by iterative solvers, depending largely on the structure and size of the matrices involved in the computation. The 1950s and 1960s saw an enormous interest in relaxation type methods, prompted by the studies on optimal relaxation and work by Young, Varga, Southwell, Frankel and others. The books by Varga [6] and Young [7] give a comprehensive guide to iterative methods used in the 1960s and 1970s, and have remained the handbooks used by academics and practitioners alike [8] .

The Problem Description

The Poisson equation on a rectangular domain is given by

(1)

where is to be solved in the 2D domain , and is the forcing function. Typical boundary conditions for this equation are either Dirichlet, where the value of is specified on the boundary, or Neumann, where the value of the normal derivative is specified on the boundary. These are given mathematically as,

(2)

where is the outward unit normal along and and are the function values specified by Dirichlet or Neumann boundary conditions. It is also possible to have mixed boundary conditions along the boundary, where some edges have Dirichlet and some have Neumann, so long as the problem is well-posed. Furthermore, edges could be subject to Robin boundary conditions of the form . Any numerical scheme designed to solve the Poisson equation should be robust in its ability to incorporate any form of boundary condition into the solver. A detailed discussion of boundary condition implementation is given in the Appendix. Discretizing (1) using central differences with equal grid size leads to an rectangular array of unknown U, such that (assuming that a and b are both integer multiples of h). This discretization leads to a linear system of the form , the Sylvester equation, which can be solved either directly or iteratively. The direct method utilizes the Kronecker product approach [9] , given by

(3)

where and are appropriately ordered column vectors obtained from the arrays U and F, and K is a sparse matrix. The Kronecker product, , of any two matrices P and Q is a partitioned matrix whose ijth partition contains matrix Q multiplied by component of P. Due to the potentially large size of the system given in (3), direct solvers are not the preferred solution approach. Specifically addressed here is an iterative approach to solving the Sylvester equation,

(4)

where is the array of interior unknowns (not including the known boundary values when Dirichlet boundary conditions are given) with and . Operator matrices A and B are given by the finite difference approximation to the second derivative,

(5)

For an array of unknowns , the operator matrices are of dimension , and . The matrix structure of U should be modified such that the first index i corresponds to the x-direction, and the second index j corresponds to the y-direction. With this orientation, multiplying by the matrix A on the left approximates the second derivative in the x-direction, and multiplying by the matrix B on the right approximates the second derivative in the y-direction.

2. Sylvester Iterative Scheme

Examining (4) it might seem natural to move one term to the right-hand side of the equation to achieve an iterative scheme such as:

(6)

However, this scheme diverges, and an alternative approach is required to iterate on the Sylvester system. An appropriate method is to break up the iterative scheme into two “half-steps’’ as follows

1) First half-step:

(7)

2) Second half-step:

(8)

where is some intermediate solution between steps k and . Rearranging, this leads to

(9)

The iterative scheme (9) is similar to the Alternating Direction Implicit (ADI) formulation [10] , where Poisson’s equation is reformulated to have pseudo-time dependency,

(10)

which achieves the solution to Equation (4) when it reaches steady-state. This method is separated into two half-steps, the first time step going from time treating the x-direction implicitly and the y-direction explicitly. The second time step then goes from time , treating the y-direction implicitly and the x-direction explicitly. The two half-steps are,

(11)

which leads to

(12)

This iteration procedure looks nearly identical to our Sylvester iterations given in (9) with replaced by the unknown parameters 1/α and 1/β. However in our formulation, there is no pseudo-time dependency introduced. Instead, the eigenvalues of our operator matrices A and B are deflated to produce an iterative scheme that optimally converges, and finding the values of the parameters α and β becomes an optimization problem.

Convergence

After the Sylvester Equation (4) is modified into the iterative system (9), the iterative scheme can be written as a single step by substituting the expression for the intermediate solution into the second step of the iterative process; this yields the single update equation for given by

(13)

Assuming that an exact solution exists that exactly satisfies the linear system (4), i.e. , we define the error between the kth iteration and the exact solution as

(14)

Finding an update equation for the error is done by subtracting the error at the kth step from the error at the step, noting that the expressions involving the forcing F disappear, we arrive at

(15)

where the matrices P and Q are given by

(16)

Denoting the m eigenvalues of by , and the n eigenvalues of by , the corresponding deflated eigenvalues of the iteration matrices P and Q are

(17)

A sufficient condition for convergence of the iterative process is achieved if the spectral radii of both iteration matrices P and Q are less than one,

(18)

The error at each consecutive iteration is decreased by the product of and ,

(19)

where is the initial error. Often in practical applications, the exact solution is not known, so the error cannot be computed directly. In this case, the preferred measure in iterative schemes is given by the residual, which measures the difference of the left and right hand sides of the linear system being solved. This will be further discussed in the Results section.

3. Finding Optimal Parameters α and β

Finding α and β is an optimization problem for achieving the fastest convergence rate of the Sylvester iterative scheme (9). Given the operator matrices A and B and their respective eigenvalues and , it seems feasible to find optimal values of α and β to minimize the spectral radii of the iteration matrices P and Q given in Equations (17). From (15) the error is found by multiplying by P on the left, and Q on the right, thus the convergence is governed by the spectral radii of both P and Q.

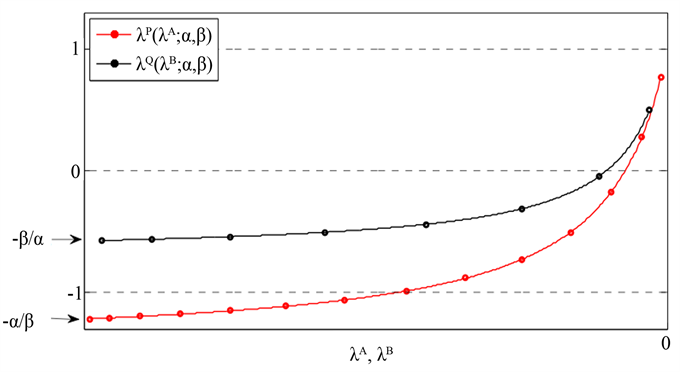

Figure 1 shows the eigenvalues of P and Q for arbitrary m and n, given by Equation (17), plotted vs. the eigenvalues of A and B for some parameters α and β. It can be seen that as or get large in magnitude, the values of or approach and , respectively. This implies that if ,

Figure 1. Eigenvalues and vs. and for given constant and . In this figure, , so , and the scheme will diverge.

the high frequency eigenvalues of P and Q, in magnitude, will be greater than one, thus convergence condition (18) will not be satisfied. This provides the restriction for convergence that,

(20)

This optimal value of will henceforth be called . It is important to note that the operator or with the larger dimension

(21)

has a larger range of eigenvalues. Figure 1 shows , (i.e. ) so it can be seen that and . This property of the eigenvalues is important when calculating an expression for c, which will soon prove to be a highly useful parameter for an adaptive approach to smoothing. Letting in (17) gives the following expression for the eigenvalues of P and Q:

(22)

Finding the optimal parameter is done by considering the error reduction of Sylvester iterations on an arbitrary initial condition U0. Assume that U0 can be decomposed into its constituent error (Fourier) modes, ranging from low frequency (smooth) to high frequency (oscillatory) modes. Given that U0 contains error modes of all frequencies, the most conservative method would be to choose such that the spectral radii and are minimized over the full range of frequencies. This ensures that all modes of error are efficiently relaxed, and convergence is governed by the product of spectral radii.

Referring to the lower curve in Figure 2, the conservative method of

Figure 2. Eigenvalues and illustrating the quantities involved in computing the optimal parameters and , and their respective optimal smoothing regions and . Note that the upper curve illustrates the method of determining in the multigrid formulation, while the lower curve is for determining in conservative Sylvester iterations.

determining would be to set and according to (22),

(23)

Noting that eigenvalues for all dimensions collapse onto the curves shown in Figure 2, this conservative approach “locks in’’ the value of the larger operator’s spectral radius, thus providing an upper bound for convergence. Figure 2 shows , so , so convergence will be limited by . Equation (23) can then be solved for giving

(24)

where absolute values are introduced as a reminder that are negative. This value of the parameter most uniformly smooths all frequencies for any arbitrary containing all frequency modes of error. It can be seen that the spectral radii of P and Q shown in Figure 2 occur at either endpoint, and the minimum amplitude occurs near the intersection of the curve with the axis. Varying the parameter in (22) controls the intersection point, and thus creates an effective “optimal smoothing region’’, denoted . Modes of error associated with this optimal smoothing region will be damped fastest, which makes Sylvester iterations highly adaptive in nature. This adaptive nature of Sylvester iterations lends itself nicely to a multigrid formulation.

The Sylvester multigrid formulation is based on the philosophy that most iterative schemes, including Sylvester iterations, relax high frequency modes fastest, leaving low frequency components relatively unchanged [11] . On all grids traversed by a multigrid V-cycle, the high frequency modes are eliminated fastest by finding the optimal parameter value such that , as shown in the upper curve of Figure 2. The height is essentially the distance above the axis associated with the approximate “middle’’ eigenvalue in the range of or . This equality gives the following optimal parameter value for the Sylvester multigrid method

(25)

where, if , the minimum (i.e., most negative) middle eigenvalue is chosen to shrink the optimal smoothing region such that high frequencies are smoothed most effectively. This choice of optimal parameter can be observed in Figure 2 to drastically decrease the magnitude of and associated with high frequencies which significantly enhance relaxation in accordance with the multigrid philosophy.

To find analytical expressions for and , it is necessary to have values for and . For Dirichlet boundary conditions, analytical expressions for and are derived below, but for Neumann boundary conditions numerical approaches are necessary to find and . The operator matrices A and B are each of tridiagonal form,

(26)

Tridiagonal matrices with constant diagonals, such as A and B for Dirichlet boundary conditions, have analytical expressions for their eigenvalues given by

(27)

where p is the arbitrary dimension of the matrix [12] . Neumann boundary conditions alter the upper and lower diagonals of A or B, thus there is no analytical form of eigenvalues for Neumann boundary conditions. Using (5) and (27) gives the following analytic form of the eigenvalues of the tridiagonal matrices A and B,

(28)

which achieves minimum and maximum values given by

(29)

respectively. Using (24), (25), and (29) the analytic expressions for optimal parameters for both conservative and multigrid approaches are given by

(30)

where again . Having expressions for and allows and to be found analytically using (22) which subsequently allows the spectral radii of the iteration matrices P and Q to be calculated. Knowing the spectral radii of the iteration matrices P and Q is highly advantageous, as it allows for an analysis of the Sylvester iterative scheme.

4. Analysis

The analysis of standard Sylvester iterations can be performed and describes the error reduction with each consecutive iteration using (19). Having the optimal parameters given by (30) and eigenvalues of P and Q in (22), the spectral radii can be calculated to be

(31)

Rewriting the last expression of (19), we see that

(32)

If we want to reduce our error to and we wish to know how many iterations it will take to achieve this error reduction, using (32) we set , and solving for k, we find it will take

(33)

iterations to reduce the error by . Here log can be with respect to any base, as long as the same one is used in both the numerator and denominator; e.g., the natural log can be used. Recall that the exact solution of (4) is only an approximate solution of the differential Equation (1) we are actually solving. Due to this, we can only expect accuracy of the truncation error of the approximation. With an method, differs from on the order of so we cannot achieve better accuracy than this no matter how well we solve the linear system. Thus, it is practical to take to be something proportional to the expected global error, e.g. for some fixed C [12] .

To calculate the order of work required asymptotically as , (i.e. ) using (33) and our choice for , we see that

(34)

The expressions for and in (31) contain several cosine terms which can be Taylor expanded about different values. Cosines with arguments like can be expanded about or depending on the form of x, namely

(35)

where, from (31), the form of x is something like or , which clearly approach one or zero, respectively, in the limit that . Using these expansions, along with the fact that for to simplify the spectral radii, we arrive at the following

(36)

when . Since , (34) combined with (36) gives the following order of work needed for convergence to within :

(37)

where only linear terms are used from (36), and the latter simplified expression can be deduced by using the property that for . Note that when , the order of work for Sylvester iterations is , which is comparable to the work necessary for the Successive Over-Relaxation (SOR) algorithm to solve Poisson’s equation [12] . This will be our basis for comparison in the Results section for standard Sylvester iterations.

5. Results

Problems solved by Sylvester iterations can, in general, be written shorthand as , where is a linear operator. In the case of Poisson’s equation, is the Laplacian operator. As an error measure, the discrete norm of the residual, , can be measured at each iteration. This number provides the stopping criterion for our iterative schemes, namely the iterations are run until

(38)

where is the initial residual, and tol is the tolerance. The tolerance is set to machine precision to illustrate the asymptotic convergence rate,

(39)

however, in practice, the discretization error is the best accuracy that can be expected. These numerical results were run using MATLAB on a 1.5 GHz Mac PowerPC G4. The model problem that is solved is given by

(40)

where , and whose exact solution

(41)

is known so errors can be computed [11] . This model problem is used to show performance of both standard and multigrid Sylvester iterations. In all cases, the initial guess of the iterative scheme is a normalized random array and can be assumed to contain all modes of error.

5.1. Standard Sylvester Iterations

For comparison, standard Sylvester iterations were tested against Successive Over-Relaxation (SOR) with Chebyshev acceleration (see e.g., [13] ). In SOR with Chebyshev acceleration, one uses odd-even ordering of the grid and changes the relaxation parameter at each half-step, which converges to the optimal relaxation parameter. The results are shown in Table 1. It is important to note that SOR iterations involve no matrix inversions, whereas Sylvester iterations do, thus the CPU time measure might not be an appropriate gauge for this particular comparison. From these results, it is clear that standard Sylvester iterations are comparable to SOR, and in most cases, converge to within tolerance in fewer iterations than SOR. An unfortunate artifact of standard iterative schemes is that as system size increases, so does the spectral radii governing the convergence. This can be observed in Table 1, as asymptotic convergence rates for each method and steadily increase, thus requiring higher numbers of iterations to solve within tolerance. The number of Sylvester iterations required to converge is consistent with the predicted number of iterations given by Equation (33) letting .

5.2. Multigrid Sylvester Iterations

In multigrid Sylvester iterations, the performance of the -cycle using Sylvester iterations is compared to that using the traditional Gauss-Seidel (GS)

Table 1. Sylvester iterations vs. successive over-relaxation (SOR).

Table 2. Multigrid sylvester iterations vs. multigrid gauss-seidel (GS) iterations.

iterations. The parameter represents the number of smoothing iterations done on each level of the downward branch of the V-cycle, while represents the number done on the upward branch. In practice, common choices are , so our performance is based on the -cycle [14] . In each case, the V-cycle descends to the coarsest grid having gridwidth , and in the Sylvester implementation, the value of is calculated to smooth high frequencies most effectively on each grid traversed by the cycle. The results are shown in Table 2. It can be seen that the asymptotic convergence rates and reach steady values independent of the gridwidth h. This is characteristic of multigrid methods, and enables the optimality of the multigrid method. It is clear when comparing the CPU times of the Sylvester multigrid formulation in Table 2 with standard Sylvester iterations in Table 1 that the multigrid framework is substantially faster (e.g., 30 times faster than standard iterations for a grid of size ). It can also be seen that the asymptotic convergence rates are such that , thus convergence is met in fewer cycles using Sylvester smoothing versus Gauss-Seidel smoothing.

6. Conclusion

Sylvester iterations provide an alternative iterative scheme to solve Poisson’s equation that is comparable to SOR in the number of iterations necessary to converge, namely converging to discretization accuracy within iterations. The true benefit of the Sylvester iterations, however, comes from its adaptive ability to smooth any range of error frequencies, thus being a perfect candidate for smoothing in a multigrid framework. Multigrid -cycles using Sylvester smoothing have an asymptotic convergence rate of (versus for Gauss-Seidel smoothing) and indicate significant improvement in efficiency over standard Sylvester iterations.

Cite this paper

Franklin, M.B. and Nadim, A. (2018) A Poisson Solver Based on Iterations on a Sylvester System. Applied Mathematics, 9, 749-763. https://doi.org/10.4236/am.2018.96052

References

- 1. Douglass, C., Hasse, G. and Langer, U. (2003) A Tutorial on Elliptic PDE Solvers and Their Parallelization. Society for Industrial and Applied Math, Philadelphia. https://doi.org/10.1137/1.9780898718171

- 2. Feig, M., Onufriev, A., Lee, M.S., Im, W., Case, D.A. and Brooks III, C.L. (2003) Performance Comparison of Generalized Born and Poisson Methods in the Calculation of Electrostatic Solvation Energies for Protein Structures. Journal of Computational Chemistry, 25, 265-284. https://doi.org/10.1002/jcc.10378

- 3. Ravoux, J.F., Nadim, A. and Haj-Hariri, H. (2003) An Embedding Method for Bluff Body Flows: Interactions of Two Side-by-Side Cylinder Wakes. Theoretical and Computational Fluid Dynamics, 16, 433-466. https://doi.org/10.1007/s00162-003-0090-4

- 4. Trellakis, A., Galick, A.T., Pacelli, A. and Ravaioli, U. (1997) Iteration Scheme for the Solution of the Two-Dimensional Schrodinger-Poisson Equations in Quantum Structures. Journal of Applied Physics, 81, 7880-7884. https://doi.org/10.1063/1.365396

- 5. Saraniti, M., Rein, A., Zandler, G., Vogl, P. and Lugli, P. (1996) An Efficient Multigrid Poisson Solver for Device Simulations. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 15, 141-150. https://doi.org/10.1109/43.486661

- 6. Varga, R.S. (1962) Matrix Iterative Analysis. Prentice-Hall, New Jersey.

- 7. Young, D.M. (1971) Iterative Solution of Large Linear Systems. Academic Press, New York.

- 8. Brezinski, C. and Wuytack, L. (2001) Numerical Analysis: Historical Developments in the 20th Century. Elsevier Science B.V., Netherlands.

- 9. Van Loan, C.F. (2000) The Ubiquitous Kronecker Product. Journal of Computational and Applied Mathematics, 123, 85-100. https://doi.org/10.1016/S0377-0427(00)00393-9

- 10. Peaceman, D.W. and Rachford, H.H. (1955) The Numerical Solution of Parabolic and Elliptic Differential Equations. Journal of the Society for Industrial and Applied Mathematics, 3, 28-41. https://doi.org/10.1137/0103003

- 11. Briggs, W.L., Henson, V.E. and McCormick, S.F. (2000) A Multigrid Tutorial. 2nd Edition, Society for Industrial and Applied Math, Philadelphia. https://doi.org/10.1137/1.9780898719505

- 12. LeVeque, R.J. (2007) Finite Difference Methods for Ordinary and Partial Differential Equations: Steady-State and Time-Dependent Problems. Society for Industrial and Applied Math, Philadelphia. https://doi.org/10.1137/1.9780898717839

- 13. Press, W., Vetterling, W., Teukolsky, S. and Flannery, B. (1992) Numerical Recipes in Fortran. 2nd Edition, Cambridge University Press, New York.

- 14. Trottenberg, U., Oosterlee, C. and Schuller, A. (2001) Multigrid. Elsevier Academic Press, London.

Appendix

1) Boundary condition implementation

Solving the Poisson equation using Sylvester iterations lends itself nicely to boundary condition implementation. Dirichlet boundary conditions of the form

(1)

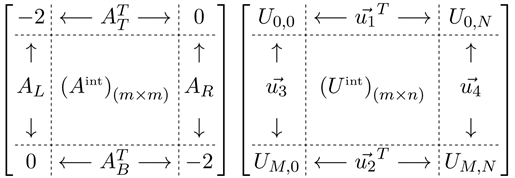

where and are functions describing the edges of U, can be implemented as follows. The unknown values in the Sylvester system given in Equation (4) are the array of interior values, where . It is possible to incorporate Dirichlet boundary conditions directly into this interior system by examining the partitioned matrix product, for example AU, given by

(2)

(2)

with UB taking an analogous partitioned form. Multiplying through by associated with the operator matrices A and B, the partitioned Sylvester system for internal unknowns gives

(3)

where all matrix-vector products are outer products. Note that the product AU incorporates Dirichlet boundary conditions in the x-direction, and UB incorporates Dirichlet boundary conditions in the y-direction. Combining the partitioned systems incorporating both A and B matrix multiplications and boundary conditions yields

(4)

which is an linear system for .

For Neumann boundary conditions, the edge at which the condition is imposed becomes part of the internal unknowns in the Sylvester system. As an example, consider a Neumann boundary condition given by

(5)

Staying within the finite difference formulation of derivatives and letting , this condition can be discretized and approximated with the central difference approximation, which yields

(6)

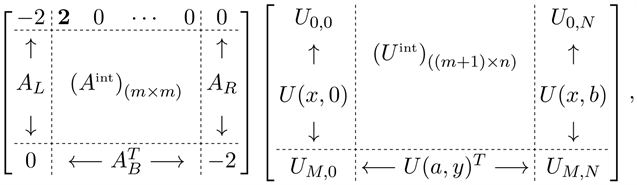

For a Neumann condition along the edge , the row vector described in (2) becomes a part of the internal array of unknowns . In order to implement this finite difference on the edge, we need to introduce a ghost layer with index , and pair Equation (6) to the second derivative operator AU for . This gives

(7)

which leads to the following partitioned form of AU,

(8)

(8)

where the additional term is taken to the right hand side of the Sylvester system such that for . Comparing (8) to (2), the size of changes from to , and is changed from 1 to 2 (shown boldface in (8)), and the right hand side is slightly modified along that edge. Similarly, any edge with a Neumann condition can be handled in this fashion. It is clear that both Dirichlet and Neumann boundary conditions are very simple to implement in the Sylvester iteration method, and only slightly modify the structure of the arrays involved.