Open Journal of Statistics

Vol.06 No.03(2016), Article ID:67748,17 pages

10.4236/ojs.2016.63045

Inverse Problem for a Time-Series Valued Computer Simulator via Scalarization

Pritam Ranjan1, Mark Thomas2, Holger Teismann2, Sujay Mukhoti1

1OM & QT, Indian Institute of Management Indore, Indore, India

2Department of Mathematics & Statistics, Acadia University, Wolfville, Canada

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 25 April 2016; accepted 25 June 2016; published 28 June 2016

ABSTRACT

For an expensive to evaluate computer simulator, even the estimate of the overall surface can be a challenging problem. In this paper, we focus on the estimation of the inverse solution, i.e., to find the set(s) of input combinations of the simulator that generates a pre-determined simulator output. Ranjan et al. [1] proposed an expected improvement criterion under a sequential design framework for the inverse problem with a scalar valued simulator. In this paper, we focus on the inverse problem for a time-series valued simulator. We have used a few simulated and two real examples for performance comparison.

Keywords:

Calibration, Computer Experiments, Contour Estimation, Gaussian Process Model, Non-Stationary Process, Sequential Design

1. Introduction

Experimentation with computer simulators has gained much popularity in the last two-three decades for applications where actual physical experiment is either too expensive, time consuming, or even infeasible. The applications range from drug discovery, medicine, agriculture, industrial experiments, engineering, manu- facturing, nuclear research, climatology, astronomy, green energy to business and social behavioural research. A computer simulator (or computer model), built with the help of an application area expert, is often a mathe- matical model implemented in C/C++/Java/etc. which aims to mimic the underlying true physical phenomenon. Thus, the ultimate objective of the (unobservable) experiment with the true physical process (or phenomenon) can be fulfilled via the computer simulators.

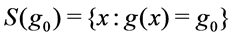

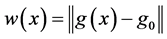

In this paper, we are interested in the inverse problem for deterministic simulators, i.e., find the input(s) of the simulator that corresponds to a pre-specified output . That is, if

. That is, if  represents the simulator response for an input

represents the simulator response for an input , then the objective is to find

, then the objective is to find . The inverse problem is also referred to as the history matching problem or (pre-)calibration problem, contour (or iso-surface) estimation, etc. Ranjan et al. [1] proposed a sequential design framework for efficient estimation of the inverse solution when the simulator returned a scalar response, whereas the simulator under consideration in this paper gave time-series response. That is, we are interested in a functional inverse problem.

. The inverse problem is also referred to as the history matching problem or (pre-)calibration problem, contour (or iso-surface) estimation, etc. Ranjan et al. [1] proposed a sequential design framework for efficient estimation of the inverse solution when the simulator returned a scalar response, whereas the simulator under consideration in this paper gave time-series response. That is, we are interested in a functional inverse problem.

This research is motivated by two real-life applications. The first application comes from the apple farming industry in the Annapolis valley, Nova Scotia, Canada, where the objective is to find a suitable set of parameters of the two-delay blowfly (TDB) model that corresponds to the reality. TDB model simulates population growth of European red mites which infest on apple leaves and diminish the quality of crop ( [2] ). The data collection for the true population growth of these mites is very expensive, as the field expert would have to periodically count the mites on the leaves of apple trees in multiple orchards. That is, the inverse problem or equivalently, the history matching problem is to find the inputs of the TDB model that generates growth curve close to the actual data collected from a specific apple orchard in Nova Scotia, Canada.

The second application focuses on the calibration of a simulator which projects the inflation rate of a country over a period of time. Inflation or increase in overall price level is a key metric in determining the economic and financial health of a country, and the central banks are the key policy makers involved in such projections or setting up a target (http://www.imf.org/external/pubs/ft/fandd/basics/target.htm). To steer the actual inflation towards the target, the central banks control its driver, the interbank interest rate. We use a computer model called Chair-The-Fed (CTF), designed by the Federal Reserve System (referred as the Fed) of United States of America (USA), which simulates inflation rates for a given interbank interest rate over a period of time. The underlying inverse problem (also known as the pre-calibration problem) is to find out an interbank interest rate (the input of the CTF model) that leads to inflation rates closest to the target.

Though the computer models are cheaper/feasible alternatives of the unobservable/expensive physical pro- cesses, realistic simulators of complex physical phenomena can also be computationally demanding, i.e., one run may take from seconds/minutes to days/months. In such a scenario, a statistical metamodel or surrogate is often used to emulate the outputs of the simulator and draw inference based on the emulated surrogate. In computer experiment literature, Gaussian process (GP) model is perhaps the most popular class of statistical surrogate because of its flexibility, closed form predictors, and ability to incorporate various uncertainties in model specification ( [3] [4] ).

Given that the simulator is expensive (computationally or otherwise), one has to be very careful in selecting the input points while training the surrogate. One efficient method is to use a sequential design framework that exploits the overall objective. For instance, [5] developed an expected improvement (EI)-based design scheme for estimating global minimum, and in the same spirit [1] proposed another EI criterion for estimating a pre-specified contour. See [6] for a brief review. Since the simulator under consideration returns time-series response, the sequential approach with standard GP model by [1] cannot directly be used.

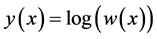

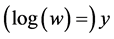

We propose scalarizing this functional inverse problem by first computing the Euclidean distance,  for every x and then find the global minimum of

for every x and then find the global minimum of  using the EI-based sequential approach with GP model proposed by [5] . Examples in Section 4 illustrates that among all realizations of GP that emulate

using the EI-based sequential approach with GP model proposed by [5] . Examples in Section 4 illustrates that among all realizations of GP that emulate , a few (in fact numerous) realizations would give negative

, a few (in fact numerous) realizations would give negative  for x near the global minimum, which is unacceptable as

for x near the global minimum, which is unacceptable as  is the Euclidean distance. To alleviate this theoretical glitch, we first propose building a non-stationary surrogate of

is the Euclidean distance. To alleviate this theoretical glitch, we first propose building a non-stationary surrogate of  via Bayesian Additive Regression Tree (BART) model ( [7] ) and then find the global minimum of

via Bayesian Additive Regression Tree (BART) model ( [7] ) and then find the global minimum of  using the EI-based scheme as in [8] .

using the EI-based scheme as in [8] .

The rest of the article is organized as follows: Section 2 presents a brief review of the statistical surrogates and the sequential design scheme. Section 3 discusses the functional inverse problem, the scalarization step and the resultant scalar inverse problem. In Section 4, we present the results on the performance comparison of y-inverse and w-inverse via both test functions and two real applications: calibration of TDB model, and CTF model. Finally Section 5 concludes the paper with a few remarks.

2. Review

In this section, we briefly review the GP model, key features of BART model as a non-stationary surrogate for computer models, and the EI-based sequential design scheme for estimating the global minimum of w- and  -surface. For this section, we assume that the simulator returns scalar response

-surface. For this section, we assume that the simulator returns scalar response  for d- dimensional input

for d- dimensional input .

.

2.1. Gaussian Process Models

Sacks et al. [3] suggested using realization of a GP for emulating deterministic computer simulator outputs. Since then several variations have been proposed for building surrogates of expensive computer models (see [4] , [9] ). The simplest version of a GP model with n training points,  , is given by

, is given by

where

ponent of the GP model, which makes it very flexible, is the correlation structure. Gaussian correlation is perhaps the most popular because of its properties like smoothness and usage in other areas like machine learning and geostatistics, whereas, both power-exponential and Matern can be thought of as generalizations of the Gaussian correlation. The power-exponential correlation is given by

where

where

(mean squared error) is

It turns out that the actual implementation of both methods (MLE and Bayesian) suffer from numerical instability in computing the determinant and inverse of R. The problem of numerical instability is certainly more pronounced for GP models with Gaussian correlation as compared to other power-exponential and Matern correlation. See [10] for more details. Popular implementations of the GP model like mlegp [11] , GPfit ( [12] ), GPmfit [13] , and DiceKriging ( [14] ) use some sort of numerical fix to overcome the computational instability issue. We used GPfit in R for all implementations of the GP model.

If the process is believed to be non-stationary (e.g.,

2.2. BART Model

Chipman et al. [7] proposed BART for approximating the conditional mean of the response given the data using a sum of regression trees. In our context, the computer simulator output can be emulated using the BART model as

where

The “ensemble” of m such tree models in (5) makes the BART model very flexible. It is capable of incor- porating higher-dimensional interactions, by adaptively choosing the structure and individual rules of the Tj’s. Furthermore, many individual trees

The model fitting is done via a Markov Chain Monte Carlo (MCMC) Bayesian backfitting algorithm. Each

iteration of the this algorithm generates one draw from the posterior distribution of

where

For applying BART to our deterministic computer experiment, we relax the default prior,

In this paper we use the freely available R package BayesTree for implementing all BART models. Recently, [16] have developed a computationally more efficient surrogate model equipped with parallel computing func- tionality.

2.3. Sequential Design

Given the fixed budget of n simulator evaluations, a naive method of estimating a pre-specified feature of interest (FOI) would be to first choose n input (training) points in a space-filling manner, build (train) the surrogate, and then estimate the FOI from this fitted emulator. Popular space-filling designs in computer experiment applications are Latin hypercube designs (LHDs) with space-filling properties like maximin, mini- mum pairwise coordinate correlation, orthogonal arrays, etc.

It has been shown via numerous illustrations in the literature that any reasonably designed sequential sampling scheme outperforms the naive one-shot design approach. The key steps of a sequential design framework is summarized as follows:

1) Choose an initial set of points

2) Obtain the vector of corresponding simulator outputs

3) Fit a statistical surrogate using the data D and response vector

4) Estimate the feature of interest (FOI) from the trained (fitted) surrogate.

5) If (the budget is exhausted, or a stopping criterion has met), then exit, else continue.

6) a) Find a new input point (or set of points)

b) Obtain

c) Append

7) Go back to Step 3 (refit the surrogate with the updated data).

For the surrogate in Step 3, we consider both the GP model and BART, and the FOI is the global minimum. The most important part of this sequential framework is Step 6(a). Though one can easily come up with a merit- based criterion, proposing a good one, that can lead to the global minimum in the fewest number of follow-up runs, is not easy.

Jones et al. [5] proposed the most popular sequential design criterion in computer experiment literature called the expected improvement (EI) with the objective of finding the global minimum of an expensive deterministic com- puter simulator. The proposed improvement at an untried point

where

the expected value of

where

Inspired by [5] , a host of EI criteria have been proposed for estimating different FOIs. See [6] for a recent review on such merit-based design criteria. Finding the optimal follow-up design point also depends on the accuracy of EI optimization. It turns out that the EI surfaces are typically multi-modal, and the location and number of prominent modes/peaks changes from iteration-to-iteration. Popular optimization techniques used for EI optimization include, genetic algorithm ( [1] ), particle swarm optimization [13] , multistart newton-based methods ( [12] ), and branch-and-bound algorithms [17] . Thus, one should be careful in choosing the follow-up points to achieve the optimal improvement at every step.

3. Inverse Problem for Time-Series Response

In this paper, we assume that the computer simulator is deterministic, takes a d-dimensional input

The key idea is to propose a scalarization strategy that transforms the functional inverse problem to a minimization problem for a scalar-valued simulator. That is, for every

then find the global minimum of

It is important to note that the predicted realizations of

One possibility is to fit a log-GP model, i.e., fit a GP model to

Next we use simulated and real-life examples to compare the performance of the EI-BART method for minimizing

4. Results

In this section, we consider the functional inverse problems for one test function with slight variations that enables 1-, 2- and 3-(dimensional) inputs, TDB model with six-dimensional inputs, and CTF model with 1-dimensional input. For all examples, we use both EI-BART and EI-GP approaches with the same sequential settings, i.e., same

Example 1. Suppose the simulator

Let the true field data correspond to

Figure 2 illustrates results for EI-GP implementation with

It is clear from Figure 2(d) that the global minimum was located very quickly in this sequential procedure, which was expected given the simplicity of the test function. Figure 2(b) also shows that many realizations of the GP model that emulate the training data would give negative value of

Figure 3 shows EI-BART illustration with the same sequential settings as in Figure 2 for finding the minimum of

Both EI-BART and EI-GP find the global minimum, but EI-GP exhibit much faster convergence. This is also expected as BART is typically a bit more data-hungry than the GP models. Further note from Figure 3(c) that

Figure 1. A few computer model outputs and the true field data for Example 1.

Figure 2. Sequential optimization of

Example 2. Consider the same test function as in Example 1, with a small modification that would allow two-dimensional inputs

Let the true field data correspond to

For this inverse problem, we used

Figure 5(d) shows that a decent value of the global minimum has been found after 9 - 10 follow-up trials. Of course, the efficiency can perhaps be improved by exploring different

Figure 3. Sequential optimization of

EI-BART requires a few more points to attain the same accuracy level (see Figure 6). The estimated

Example 3. Again we consider the same base example (as in Example 1) with a slight twist to the simulator to allow a three-dimensional input

Furthermore, we assume that the true field data correspond to

As the input dimension grows, we have increased the initial design size and the overall budget to

Figure 4. A few model outputs and the true field data for the simulator in Example 2.

Figure 5. Sequential optimization of

Figure 6. Sequential optimization of

leading by a small margin, but EI-BART is a theoretically more correct methodology to follow.

Under EI-GP, the final estimate of

Example 4. Annapolis Valley in Nova Scotia, Canada is popular for its apple farming. Unfortunately, apple orchards are susceptible to the infestation of pests. Of particular interest is the Panonychus ulmi (Koch) or European red mite (ERM).

Growth cycle of a mite consists of three stages (1) egg (2) juvenile and (3) adult. These mites start their lives

Figure 7. A few computer model outputs and the true data for Example 3.

Figure 8. Running estimate of

as eggs that are laid in the late summer months of the previous year. Once the temperature rises to a sufficient level the following spring, these eggs hatch and emerge as larvae which further grow to juveniles followed by egg-laying adults. During the summer, adult female ERM lay eggs that hatch during the same season due to the warmer climate. Finally, in mid-to-late August, ERM lay eggs and the cycle repeats itself.

For deeper understanding of the dynamics of ERM population growth, data collection and analysis is important, but the field data collection from apple orchards is very expensive, as the field experts would have to physically go to the orchard on multiple occasions and count the number of mites (in different stages) from the leves of trees. Tiesmann et al. (2009) proposed a two-delay blowfly (TDB) model that tries to mimic the population growth of these mites. Figure 10 presents a few TDB models output along with the corresponding field data collected with significant effort for the juvenile group.

Though there are several parameters of this TDB model, the following six parameters turned out to be very

Figure 9. Comparison of the best solution found under EI-GP and EI-BART for Example 3.

influential:

・

・

・

・

・

・ Season―average number of days on which adults switch to laying winter eggs.

The TDB model returns the population growth of all three stages of mite, we focus only on the growth cycle of “juveniles” in this paper. A feasible range of x was elicited by the experts for running the TDB model. The main objective of this study is to use the proposed sequential strategy to efficiently calibrate the TDB model so that it returns realistic outputs. That is, find x (six-dimensional) such that

As in the earlier examples, we apply both EI-BART and EI-GP with

Figure 10. A few realisation of juvenile growth curves from the TDB model, and the true average field data collected from the Annapolis valley, NS, Canada.

Figure 11. Running estimate of

From Figure 11 it is clear than EI-GP outperforms EI-BART. (

Example 5. Inflation and unemployment are two key tools to measure the financial health of a country. Typically central bank of a country, like the Federal Reserve System (referred to as the Fed) in the United States of America (USA), is mandated to minimize unemployment rate and stabilize prices of goods and services. Central banks aim to do so by controlling the interbank borrowing rate, i.e. the rate of interest at which banks and credit institutions can raise fund overnight from other similar institutions. Decision on interest rate is thus a

Figure 12. Best TDB model runs obtained via the two sequential procedure.

crucial component of monitory policy for a country.

The monetary policy making body of the Fed, Federal Open Market Committee (FOMC), announces pro- jected inflation (measured using personal consumption expenditures) and unemployment rates based on the analysis of its members for the current year as well as next two years. In this paper, we focus on the inflation rates. Figure 13 presents the projected inflation rate from the January meeting announcements of FOMC for each year during 2006-2015 (see https://www.federalreserve.gov/monetarypolicy/fomccalendars.htm and https://www.federalreserve.gov/monetarypolicy/fomc_historical.htm).

Several interesting theories and models have been proposed thus far to understand the rates projected by the Fed (e.g., [18] [19] ). We focus on the simulator called Chair-the-Fed (CTF)

(http://sffed-education.org/chairthefed/WebGamePlay.html), wherein one can select the interbank borrowing rate (i.e., funds rate, the input-x) and observe the simulated inflation rates for the next 10 time points (years). The CTF model allows x to vary between 0 and 20 with an increment of 0.25. Figure 13 depicts a few simulated model runs overlay with the projected rates. Assuming that the Fed funds rate remains static for the next 10 time points, one can play the game (i.e., run the CTF model) and generate a set of inflation fund rates. Our main objective is to find the fund rate (x) which generates the inflation rates curve closest to the target (projected values) set by FOMC.

Since the CTF simulator is only one-dimensional, we started the sequential approach in both EI-GP and EI- BART with only

Figure 13. A few realisation of the inflation rates curve from the CTF model (blue dashed curves), and the true projected rates set by the Fed and FOMC (red solid curve).

Figure 14. Running estimate of

Figure 15. Best Chair-The-Fed (CTF) model runs obtained via the two se- quential procedure (EI-GP and EI-BART). The solid (red) curve denotes the truth (target inflation rates curve) and the dashed (blue) curve represents the best match found by the CTF model.

minimum with relatively fewer additional model evaluations as compared to the number points needed by EI-GP.

The discrepancy in the target inflation rates curve and the best match produced by CTF model is perhaps attributed to the discreteness in the x-space (CTF allows inputs in the interval (0.20) with a jump of 0.25), or the fact that x is not allowed to vary with time, or perhaps additional input variables have to be included to get a closer match. Furthermore, higher efficiency can perhaps be achieved by exploring the right

5. Concluding Remarks

In this paper, we focused on an inverse problem for deterministic computer simulator with time-series (or functional) outputs. Our main focus was on reducing the complexity of the problem from time-series response to scalar by scalarization,

The efficiency (minimizing the number of simulator runs) is achieved by using EI-based sequential design scheme and surrogate-based approach. It is explained in Section 3 that the most popular choice of surrogate in computer experiment (GP model) is theoretically inappropriate, however, as illustrated through multiple ex- amples, EI-GP approach works equally well for finding the inverse solution. Even if we ignore this theoretical glitch, there is no clear winner between EI-GP and EI-BART.

There are several interesting issues that should be investigated further and we wish to work on it in our future research endeavours. A few of them are listed as follows: 1) A more thorough comparison between the two methods (EI-GP and EI-BART) should be conducted. For instance, we should use a variety of test functions, repeat the simulations to average out the effect of initial design choice, find optimal (

Acknowledgements

Preliminary work was done by Corey Hodder during his BSc honours thesis at Acadia University, NS, Canada. Thanks to the Acadia Centre for Mathematical Modeling and Computation (ACMMaC) facility for providing access to clusters for conducting simulations. The authors also thank the reviewers for their comments.

Cite this paper

Pritam Ranjan,Mark Thomas,Holger Teismann,Sujay Mukhoti, (2016) Inverse Problem for a Time-Series Valued Computer Simulator via Scalarization. Open Journal of Statistics,06,528-544. doi: 10.4236/ojs.2016.63045

References

- 1. Ranjan, P., Bingham, D. and Michailidis, G. (2008) Sequential Experiment Design for Contour Estimation from Complex Computer Codes. Technometrics, 50, 527-541.

http://dx.doi.org/10.1198/004017008000000541 - 2. Teismann, H., Karsten, R., Hammond, R., Hardman, J. and Franklin, J. (2009) On the Possibility of Counter-Productive Intervention: The Population Mean for Blowflies Models Can Be an Increasing Function of the Death Rate. Journal of Biological Systems, 47, 739-757.

http://dx.doi.org/10.1142/S0218339009003009 - 3. Sacks, J., Welch, W.J., Mitchell, T.J. and Wynn, H.P. (1989) Design and Analysis of Computer Experiments. Statistical Science, 4, 409-423.

http://dx.doi.org/10.1214/ss/1177012413 - 4. Santner, T.J., Williams, B.J. and Notz, W.I. (2003) The Design and Analysis of Computer Experiments. Springer Verlag, New York.

http://dx.doi.org/10.1007/978-1-4757-3799-8 - 5. Jones, D., Schonlau, M. and Welch, W. (1998) Efficient Global Optimization of Expensive Black-Box Functions. Journal of Global Optimization, 13, 455-492.

http://dx.doi.org/10.1023/A:1008306431147 - 6. Bingham, D., Ranjan, P. and Welch, W. (2014) Sequential Design of Computer Experiments for Optimization, Estimating Contours, and Related Objectives. Statistics in Action: A Canadian Outlook, 109-124.

- 7. Chipman, H.A., George, E.I. and McCulloch, R.E. (2010) BART: Bayesian Additive Regression Trees. Annals of Applied Statistics, 4, 266-298.

http://dx.doi.org/10.1214/09-AOAS285 - 8. Chipman, H., Ranjan, P. and Wang, W. (2012) Sequential Design for Computer Experiments with a Flexible Bayesian Additive Model. Canadian Journal of Statistics, 40, 663-678.

http://dx.doi.org/10.1002/cjs.11156 - 9. Rasmussen, C.E. and Williams, C.K.I. (2006) Gaussian Processes for Machine Learning. The MIT Press, Cambridge.

- 10. Ranjan, P., Haynes, R. and Karsten, R. (2011) A Computationally Stable Approach to Gaussian Process Interpolation of Deterministic Computer Simulation Data. Technometrics, 53, 366-378.

http://dx.doi.org/10.1198/TECH.2011.09141 - 11. Dancik, G.M. and Dorman, K.S. (2008) mlegp: Statistical Analysis for Computer Models of Biological Systems Using R. Bioinformatics, 24, 1966-1967.

http://dx.doi.org/10.1093/bioinformatics/btn329 - 12. MacDonald, K.B., Ranjan, P. and Chipman, H. (2015) GPfit: An R Package for Fitting a Gaussian Process Model to Deterministic Simulator Outputs. Journal of Statistical Software, 64, 1-23.

http://dx.doi.org/10.18637/jss.v064.i12 - 13. Butler, A., Haynes, R., Humphries, T.D. and Ranjan, P. (2014) Efficient Optimization of the Likelihood Function in Gaussian Process Modeling. Computational Statistics and Data Analysis, 73, 40-52.

http://dx.doi.org/10.1016/j.csda.2013.11.017 - 14. Roustant, O., Ginsbourger, D. and Deville, Y. (2012) DiceKriging, DiceOptim: Two R Packages for the Analysis of Computer Experiments by Kriging-Based Metamodeling and Optimization. Journal of Statistical Software, 51, 1-55.

http://dx.doi.org/10.18637/jss.v051.i01 - 15. Gramacy, R.B. and Lee, H.K.H. (2008) Bayesian Treed Gaussian Process Models with an Application to Computer Modeling. Journal of the American Statistical Association, 103, 1119-1130.

http://dx.doi.org/10.1198/016214508000000689 - 16. Pratola, M.T., Chipman, H.A., Gattiker, J.R., Higdon, D.M., McCulloch, R. and Rust. W.N. (2014) Parallel Bayesian Additive Regression Trees. Journal of Computational and Graphical Statistics, 23, 830-852.

http://dx.doi.org/10.1080/10618600.2013.841584 - 17. Franey, M., Ranjan, P. and Chipman, H. (2011) Branch and Bound Algorithms for Maximizing Expected Improvement Functions. Journal of Statistical Planning and Inference, 141, 42-55.

http://dx.doi.org/10.1016/j.jspi.2010.05.011 - 18. Svensson, L.E. (1997) Inflation Forecast Targeting: Implementing and Monitoring Inflation Targets. European Economic Review, 41, 1111-1146.

http://dx.doi.org/10.1016/S0014-2921(96)00055-4 - 19. Huang, K.X. and Liu, Z. (2005) Inflation Targeting: What Inflation Rate to Target? Journal of Monetary Economics, 52, 1435-1462.

http://dx.doi.org/10.1016/j.jmoneco.2004.08.008