Applied Mathematics

Vol. 3 No. 10A (2012), Article ID: 24106, 6 pages. DOI: 10.4236/am.2012.330188

Infinite Horizon LQ Zero-Sum Stochastic Differential Games with Markovian Jumps

1School of Management, Guangdong University of Technology, Guangzhou, China

2School of Economics & Commerce, Guangdong University of Technology, Guangzhou, China

Email: huainian258@163.com

Received June 22, 2012; revised July 22, 2012; accepted July 30, 2012

Keywords: Stochastic Systems; Differential Games; Markovian Jumps; Stochastic H∞ Control

ABSTRACT

This paper studies a class of continuous-time two-person zero-sum stochastic differential games characterized by a linear Itô differential equation with state-dependent noise and Markovian parameter jumps. Under the assumption of stochastic stabilizability, a necessary and sufficient condition for the existence of the optimal control strategies is presented in terms of a system of coupled algebraic Riccati equations, using stochastic optimal control theory. Furthermore, the stochastic H∞ control problem for stochastic systems with Markovian jumps is discussed as an immediate application, and an illustrative example is presented.

1. Introduction

Stochastic control problems governed by Itô differential equations have become a popular research topic in past decades. Recently, the stochastic H∞ control problem with state- and control-dependent noise was considered [1,2]. It has attracted much attention and has been widely applied in various fields. In particular, stochastic H2/H∞ control with state-dependent noise has been addressed [3,4]. Linear quadratic differential games and their applications have also been widely investigated in the literature, and examples of differential games in economics and management science can be found e.g. in [5-9]. These results are mainly based on deterministic systems. However, to the best of our knowledge, few results have been obtained for stochastic differential games with Markovian jumps.

In this paper, stochastic zero-sum games for linear quadratic systems governed by Itô differential equations with state-dependent noise and Markovian jumps are addressed. This class of systems has important applications in engineering practice, since such systems can be used to represent random failure processes in manufacturing systems, electric power systems and so on; see [10-18]. In particular, stability and robust stabilization for such perturbed systems were investigated extensively in [13,15,17]. A bounded real lemma for Markovian jump stochastic systems was derived in [13]. The optimal filtering problem for such systems was studied in [11], while [12,14,16] addressed the linear quadratic regulator problem. The goal of this paper is to develop the differential game theory for stochastic Itô systems with Markovian jumps. A necessary and sufficient condition for the existence of optimal control strategies is developed in terms of a system of coupled algebraic Riccati equations (AREs), which can be viewed as an extension of the existing results of [19]. Finally, the stochastic H∞ control problem with Markovian jumps is treated as an application of the theory, and an illustrative example is presented.

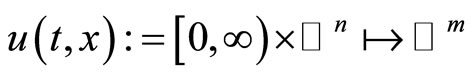

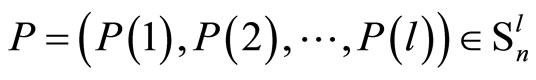

For convenience, we will make use of the following notations in this paper:

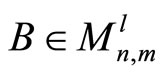

AT: transpose of a matrix or vector A; A−1: inverse of a matrix or vector A; A > 0 (A ≥ 0): positive definite (positive semidefinite) symmetric matrix A; χA: indicator function of a set A; : space of all

: space of all  with A (i) being n × m matrix,

with A (i) being n × m matrix, ;

; ;

; : space of all n × n symmetric matrices;

: space of all n × n symmetric matrices; : space of all

: space of all  with A(i) being n × n symmetric matrix,

with A(i) being n × n symmetric matrix, ;

;

means

means

for

for ;

; : space of all n-dimensional real vectors with usual 2-norm | • |.

: space of all n-dimensional real vectors with usual 2-norm | • |.

2. Definitions and Preliminaries

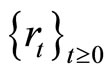

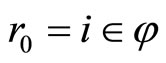

Throughout this paper, let  be a given filtered probability space where there exists a standard one dimensional Wiener process

be a given filtered probability space where there exists a standard one dimensional Wiener process , and a right continuous homogeneous Markov chain

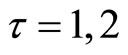

, and a right continuous homogeneous Markov chain  with state space

with state space . We assume that

. We assume that  is independent of

is independent of  and has the following transition probability:

and has the following transition probability:

(1)

(1)

where  for

for  and

and . Ft stands for the smallest σ-algebra generated by process

. Ft stands for the smallest σ-algebra generated by process , rs,

, rs,  , i.e.

, i.e. .

.
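To make the transition law (1) concrete, the following Python sketch samples one path of a homogeneous continuous-time Markov chain from its rate matrix, using exponential holding times. The function name `simulate_markov_chain`, the 0-based state indexing and the two-state rate matrix are illustrative assumptions, not part of the paper.

```python
import numpy as np

def simulate_markov_chain(generator, r0, horizon, rng):
    """Sample one path of a homogeneous continuous-time Markov chain.

    generator[i, j] plays the role of pi_ij (off-diagonal entries >= 0,
    each row summing to zero). States are indexed 0..l-1 here, not 1..l.
    Returns the jump times (starting at 0.0) and the state entered at each.
    """
    generator = np.asarray(generator, dtype=float)
    times, states = [0.0], [r0]
    t, i = 0.0, r0
    while True:
        rate = -generator[i, i]            # total exit rate of state i
        if rate <= 0.0:                    # absorbing state: no more jumps
            break
        t += rng.exponential(1.0 / rate)   # exponential holding time
        if t >= horizon:
            break
        probs = generator[i].copy()
        probs[i] = 0.0
        probs /= rate                      # jump law pi_ij / (-pi_ii)
        i = int(rng.choice(len(probs), p=probs))
        times.append(t)
        states.append(i)
    return np.array(times), np.array(states)

# Hypothetical two-state rate matrix (illustrative numbers only).
Pi = np.array([[-2.0, 2.0],
               [3.0, -3.0]])
times, states = simulate_markov_chain(Pi, r0=0, horizon=5.0,
                                      rng=np.random.default_rng(0))
```

Sampling holding times exactly avoids the $o(\Delta)$ error of a fixed-step approximation, at the cost of producing jump times that do not lie on a regular grid.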

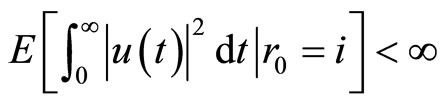

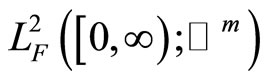

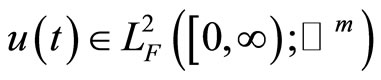

By  denote the space of all measureable functions

denote the space of all measureable functions , which is Ft -measurable for every

, which is Ft -measurable for every , and

, and ,

, . Obviously,

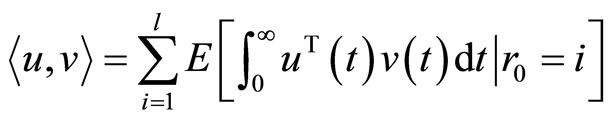

. Obviously, is a Hilbert space with the inner product

is a Hilbert space with the inner product

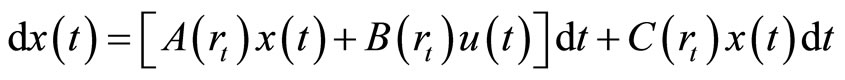

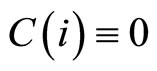

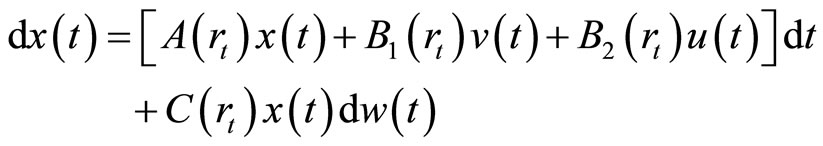

Consider the following linear stochastic controlled system with Markovian jumps

(2)

(2)

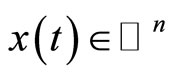

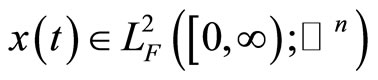

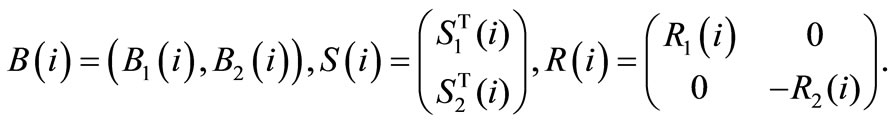

where  and

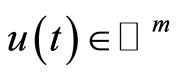

and  are the state and control input, respectively. The coefficients

are the state and control input, respectively. The coefficients  and

and  with A(i), B(i), C(i),

with A(i), B(i), C(i),  , being constant matrices.

, being constant matrices.
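A path of such a jump system can be approximated numerically. The sketch below is a minimal Euler-Maruyama scheme, assuming that system (2) has the form $dx = [A(r_t)x + B(r_t)u]\,dt + C(r_t)x\,dW$ and that the control is a mode-dependent linear feedback $u = K(r_t)x$. The mode is updated on the time grid with the first-order probabilities of the transition law (1). The function name, the 0-based mode indexing and all numerical values are hypothetical.

```python
import numpy as np

def simulate_jump_sde(A, B, C, K, Pi, x0, r0, dt, n_steps, rng):
    """Euler-Maruyama path of dx = (A(r)x + B(r)u)dt + C(r)x dW, u = K(r)x.

    A, B, C, K are lists of per-mode matrices; modes are indexed 0..l-1.
    Pi is the rate matrix (pi_ij); dt must satisfy 1 + pi_ii*dt >= 0.
    """
    l = len(A)
    x = np.array(x0, dtype=float)
    r = r0
    xs = np.empty((n_steps + 1, x.size))
    rs = np.empty(n_steps + 1, dtype=int)
    xs[0], rs[0] = x, r
    for k in range(n_steps):
        u = K[r] @ x
        dW = rng.normal(0.0, np.sqrt(dt))      # scalar Wiener increment
        x = x + (A[r] @ x + B[r] @ u) * dt + (C[r] @ x) * dW
        # First-order mode transition: pi_ij*dt off-diagonal, 1 + pi_ii*dt diagonal.
        p = Pi[r] * dt
        p[r] += 1.0
        r = int(rng.choice(l, p=p))
        xs[k + 1], rs[k + 1] = x, r
    return xs, rs

# Hypothetical two-mode scalar example (illustrative numbers only).
A = [np.array([[-1.0]]), np.array([[0.5]])]
B = [np.array([[1.0]]), np.array([[1.0]])]
C = [np.array([[0.2]]), np.array([[0.4]])]
K = [np.array([[0.0]]), np.array([[-2.0]])]
Pi = np.array([[-1.0, 1.0], [2.0, -2.0]])
xs, rs = simulate_jump_sde(A, B, C, K, Pi, x0=[1.0], r0=0, dt=1e-3,
                           n_steps=2000, rng=np.random.default_rng(1))
```

Averaging $|x(t)|^2$ over many such paths gives a rough empirical check of mean-square behaviour, although it is no substitute for an analytical criterion.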

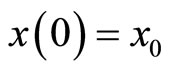

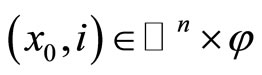

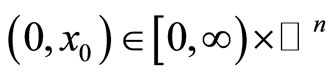

It is well known that for any  and

and , there exists a unique solution

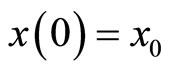

, there exists a unique solution  of (1) with initial condition

of (1) with initial condition ,

, . Next, we first introduce the definition of stochastic stabilizability which is an essential assumption in this paper.

. Next, we first introduce the definition of stochastic stabilizability which is an essential assumption in this paper.

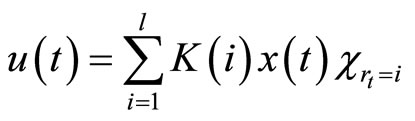

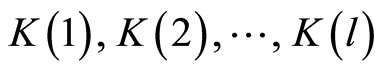

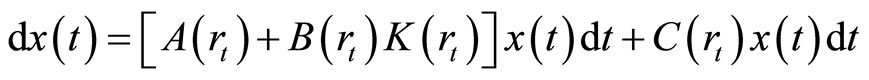

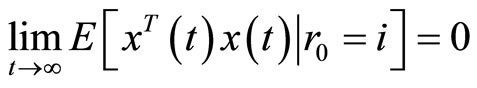

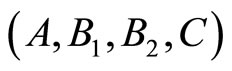

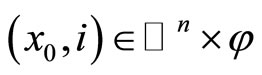

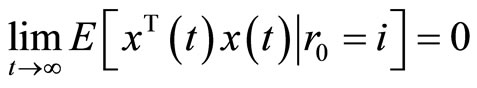

Definition 1. System (2) or (A, B, C) is called stochastic stabilizable (in mean-square sense), if there exists a feedback control  with

with being constant matrices, such that for any initial state

being constant matrices, such that for any initial state ,

,  , the closed-loop system

, the closed-loop system

is asymptotically mean-square stable, i.e.

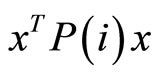
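Whether a given set of gains $K(i)$ stabilizes the system in the mean-square sense can be checked numerically. The sketch below uses a standard second-moment argument, which is not taken from the paper: assuming the closed loop reads $dx = A_{cl}(r_t)x\,dt + C(r_t)x\,dW$ with $A_{cl}(i) = A(i) + B(i)K(i)$, the matrices $X_j(t) = E[x(t)x^T(t)\chi_{\{r_t = j\}}]$ satisfy the linear equations $\dot X_j = A_{cl}(j)X_j + X_jA_{cl}^T(j) + C(j)X_jC^T(j) + \sum_i \pi_{ij}X_i$, so mean-square stability is equivalent to this linear system being Hurwitz. Function names and test values are hypothetical.

```python
import numpy as np

def second_moment_matrix(A_cl, C, Pi):
    """Matrix of the linear ODE satisfied by the stacked vec(X_j),
    where X_j(t) = E[x x^T 1{r_t = j}]; modes are indexed 0..l-1."""
    l = len(A_cl)
    n = A_cl[0].shape[0]
    I = np.eye(n)
    big = np.zeros((l * n * n, l * n * n))
    for j in range(l):
        rows = slice(j * n * n, (j + 1) * n * n)
        for i in range(l):
            cols = slice(i * n * n, (i + 1) * n * n)
            big[rows, cols] += Pi[i, j] * np.eye(n * n)   # inflow from mode i
        # vec(AX + XA^T + CXC^T) = (I(x)A + A(x)I + C(x)C) vec(X)
        big[rows, rows] += (np.kron(I, A_cl[j]) + np.kron(A_cl[j], I)
                            + np.kron(C[j], C[j]))
    return big

def is_mean_square_stable(A_cl, C, Pi):
    """True if every eigenvalue of the second-moment matrix has negative real part."""
    eigs = np.linalg.eigvals(second_moment_matrix(A_cl, C, Pi))
    return bool(np.max(eigs.real) < 0.0)

# Hypothetical scalar example: mode 0 is unstable on its own, mode 1 is stable.
A_cl = [np.array([[1.0]]), np.array([[-5.0]])]
C0 = [np.zeros((1, 1)), np.zeros((1, 1))]
fast = np.array([[-10.0, 10.0], [10.0, -10.0]])
slow = np.array([[-0.1, 0.1], [0.1, -0.1]])
stable_fast = is_mean_square_stable(A_cl, C0, fast)
stable_slow = is_mean_square_stable(A_cl, C0, slow)
```

In this example the same pair of modes is mean-square stable under fast switching and unstable under slow switching, which illustrates why the rates $\pi_{ij}$ enter the stabilizability condition.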

Now we give two lemmas which are important in our subsequent analysis. For system (2), by applying Itô's formula to $x^T(t)P(r_t)x(t)$, we immediately obtain the following result.

Lemma 1. Suppose $P = (P(1), \ldots, P(l)) \in \mathcal{S}^l_n$ is given. Then for system (2) with initial condition $(x_0, i)$ and any $T > 0$, we have

$$E\left\{\int_0^T \Big[x^T(t)\Big(P(r_t)A(r_t) + A^T(r_t)P(r_t) + C^T(r_t)P(r_t)C(r_t) + \sum_{j=1}^{l}\pi_{r_t j}P(j)\Big)x(t) + 2u^T(t)B^T(r_t)P(r_t)x(t)\Big]dt \,\Big|\, r_0 = i\right\} = E\left[x^T(T)P(r_T)x(T) \mid r_0 = i\right] - x_0^TP(i)x_0. \tag{3}$$
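The identity of Lemma 1 comes from a generalized Itô formula for diffusions modulated by a Markov chain. The following is only a sketch of that computation, assuming that system (2) reads $dx = [A(r_t)x + B(r_t)u]\,dt + C(r_t)x\,dW$ and that every $P(i)$ is symmetric. The horizon $T$ and the jump martingale $M$ are symbols introduced here for illustration, and the arrangement of terms in the authors' Equation (3) may differ.

```latex
\begin{align*}
d\big[x^T P(r_t)\,x\big]
 &= \Big\{ x^T\Big[P(r_t)A(r_t) + A^T(r_t)P(r_t) + C^T(r_t)P(r_t)C(r_t)
      + \sum_{j=1}^{l}\pi_{r_t j}\,P(j)\Big]x
      + 2\,u^T B^T(r_t)P(r_t)\,x \Big\}\,dt \\
 &\quad + 2\,x^T P(r_t)C(r_t)\,x\,dW(t) + dM(t), \\[4pt]
E\big[x^T(T)P(r_T)x(T)\big] - x_0^T P(i)\,x_0
 &= E\int_0^T \Big\{ x^T\Big[P A + A^T P + C^T P C
      + \sum_{j=1}^{l}\pi_{r_t j}\,P(j)\Big]x + 2\,u^T B^T P\,x \Big\}\,dt .
\end{align*}
```

The first line collects the drift of $x^TP(i)x$ for a frozen mode together with the term $\sum_j \pi_{ij}x^TP(j)x$ contributed by the jumps of $r_t$. The second line follows by integrating over $[0, T]$ and taking expectations, provided the stochastic integral and $M$ are true martingales, so that both have zero mean.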

Lemma 2 [4]. For system (2), $(A, B, C)$ is stochastically stabilizable if and only if (iff) the following Lyapunov-type equation

(4)

has a unique positive semidefinite solution.

3. Problem Formulation

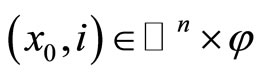

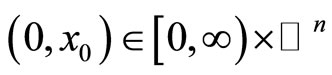

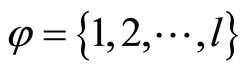

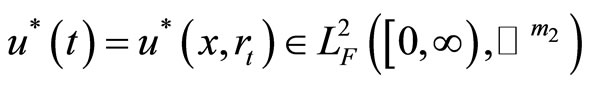

Fix . Let

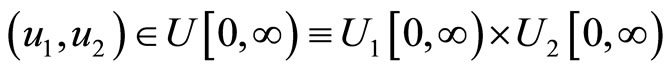

. Let  be the set of the

be the set of the  -valued, square integrable processes adapted with the σ-field generated by

-valued, square integrable processes adapted with the σ-field generated by , rt,

, rt,  , respectively. Associated with each

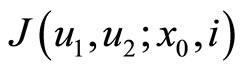

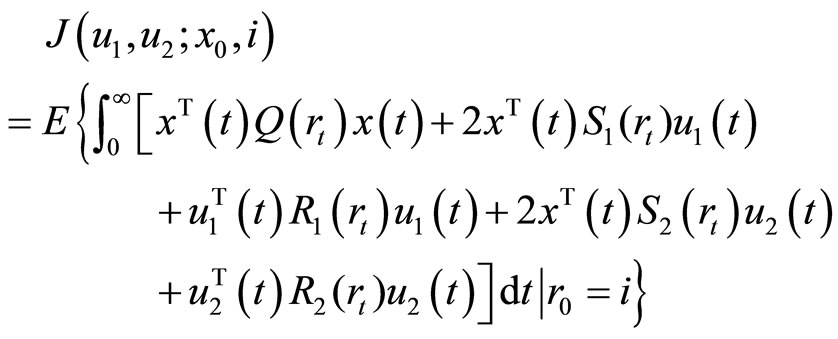

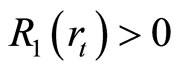

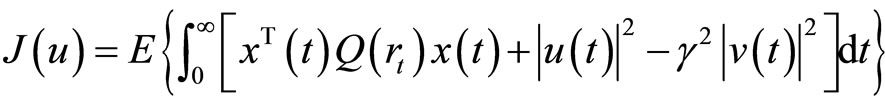

, respectively. Associated with each  is a quadratic cost functional

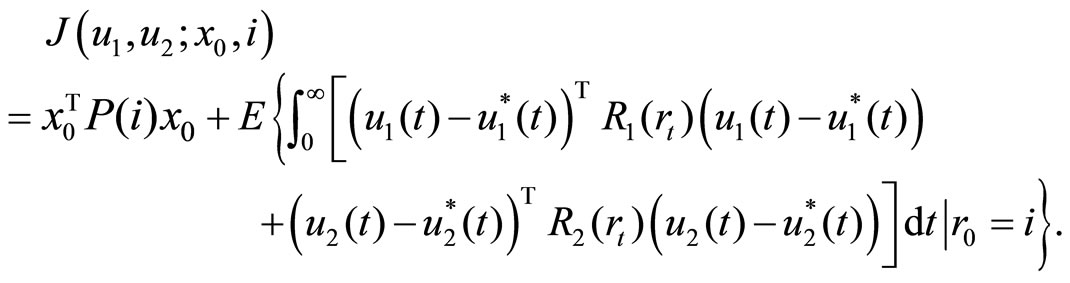

is a quadratic cost functional :

:

(5)

(5)

where ,

,  ,

,  ,

,  ,

,  ,

,  represents the expectation of the enclosed random variable,

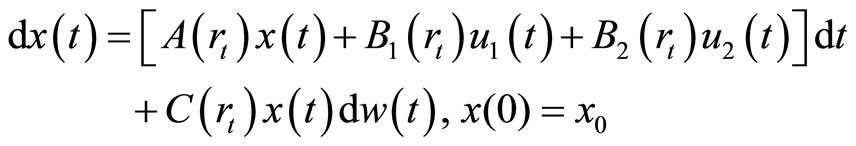

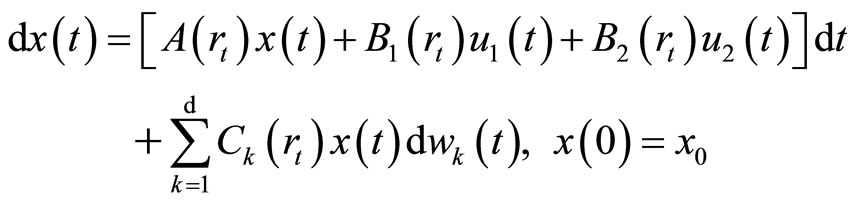

represents the expectation of the enclosed random variable,  is the solution to the following linear stochastic differential equation with statedependent noise and Markovian parameter jumps

is the solution to the following linear stochastic differential equation with statedependent noise and Markovian parameter jumps

(6)

(6)

In (5) and (6),  , etc. whenever

, etc. whenever . Now we consider the following zero-sum differential game problem.

. Now we consider the following zero-sum differential game problem.

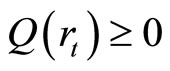

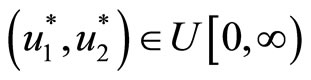

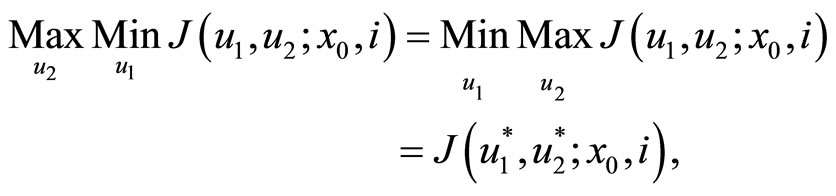

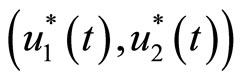

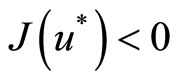

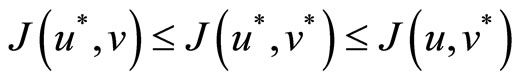

Problem 1. Given a system described by (6), find , such that

, such that

(3)

(3)

or equivalently,

That is, there are two players for the differential game. Player 1 chooses control  to minimize the objective J, while Player 2 chooses control

to minimize the objective J, while Player 2 chooses control  to maximize J. Now we introduce a new type of coupled algebraic Riccati equations associated with the problem 1.

to maximize J. Now we introduce a new type of coupled algebraic Riccati equations associated with the problem 1.

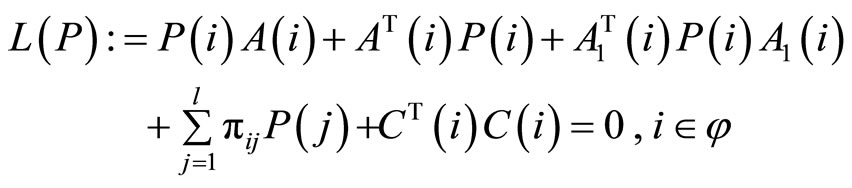

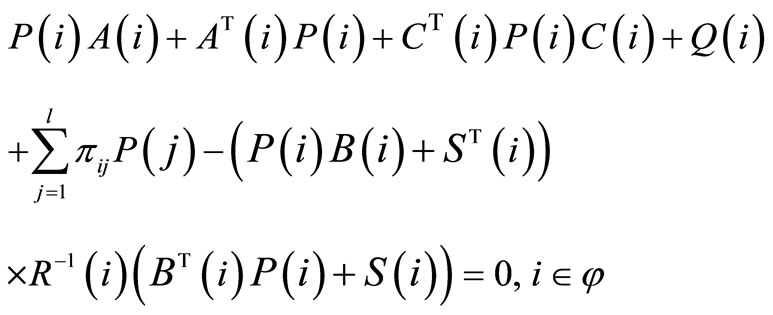

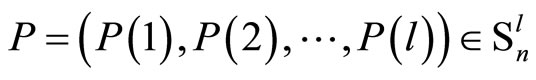

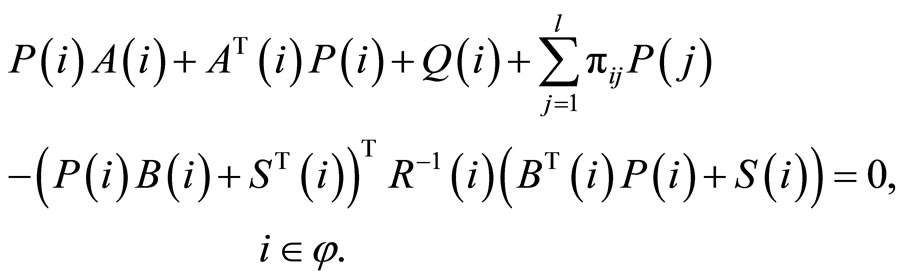

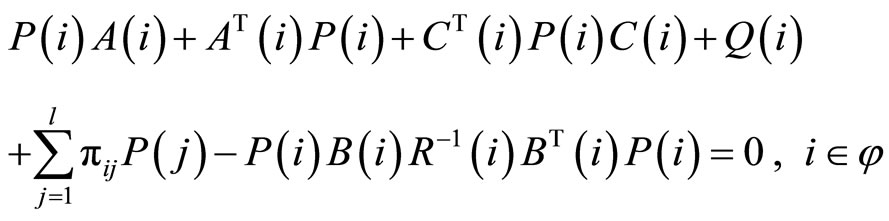

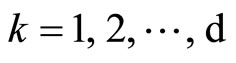

Definition 2. The following system of algebraic equations

(7)

(7)

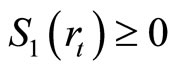

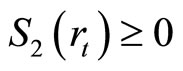

with

is called a system of coupled algebraic Riccati equations (AREs).

In the next section, we will give our main results of this paper.

4. Main Results

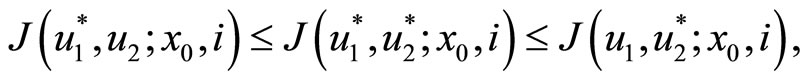

In this section, we will show that the solvability of the AREs (7) is sufficient and necessary for the existence of the optimal control strategies of problem 1.

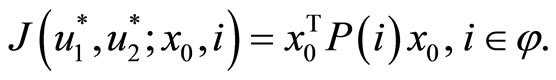

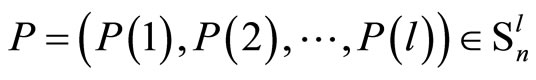

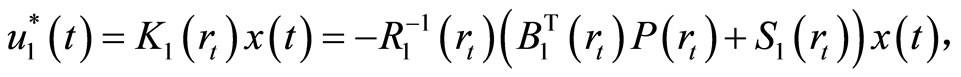

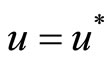

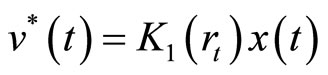

Theorem 1. Suppose  is stochastic stabilizable, problem 1 has a pair of solutions

is stochastic stabilizable, problem 1 has a pair of solutions  with respect to the initial

with respect to the initial  , where

, where  and

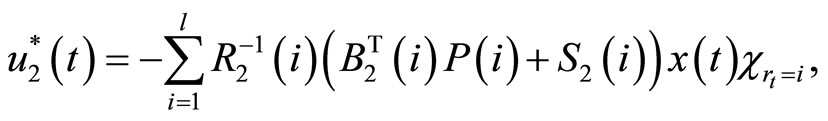

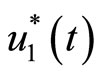

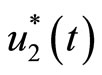

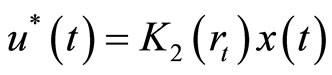

and  are the following feedback strategies

are the following feedback strategies

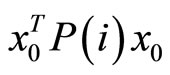

respectively, iff the AREs (7) admits a solution . In this case

. In this case

1)

2)

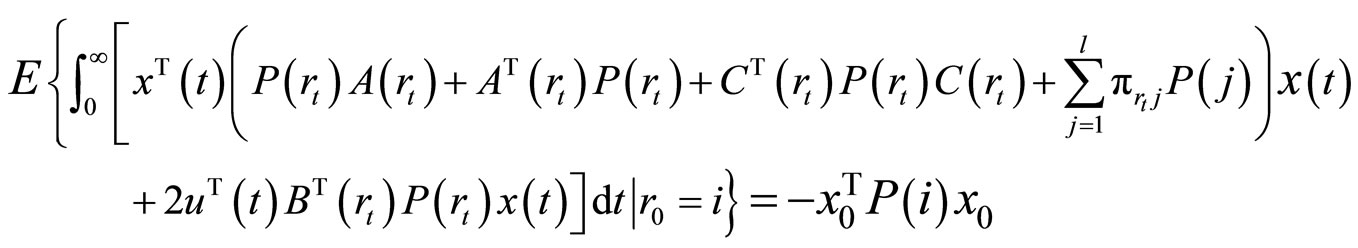

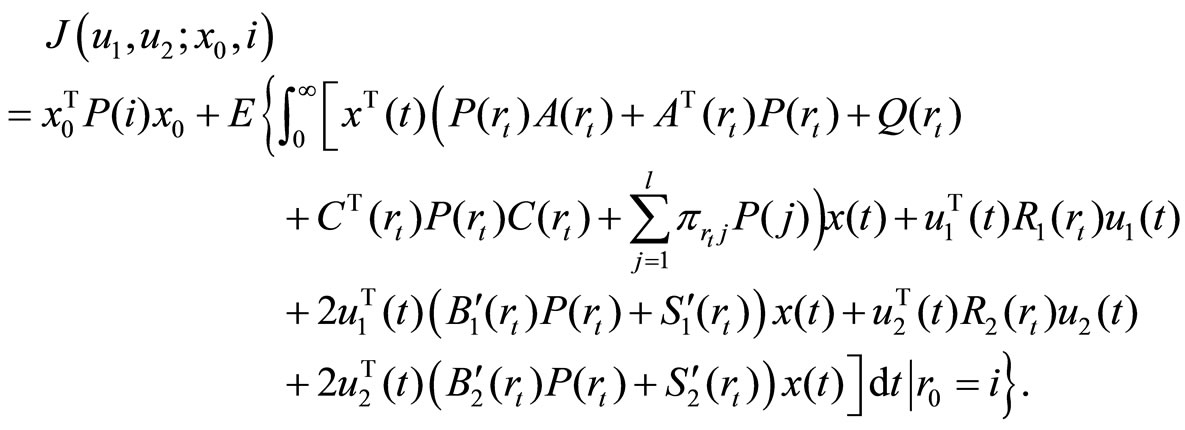

Proof. Sufficiency: Let

be a solution of the AREs (7). According to lemma 1, we have

be a solution of the AREs (7). According to lemma 1, we have

By a series of simple computation together with (7), the cost function  can be expressed as following

can be expressed as following

Thus,  is minimized by the control strategies

is minimized by the control strategies  and

and  with the optimal value being

with the optimal value being .

.

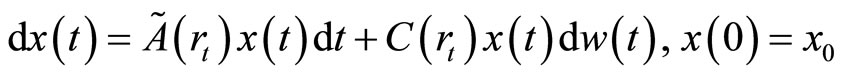

Necessity: Let

be the optimal control strategies to problem 1. Implement  and

and  in (6), then

in (6), then

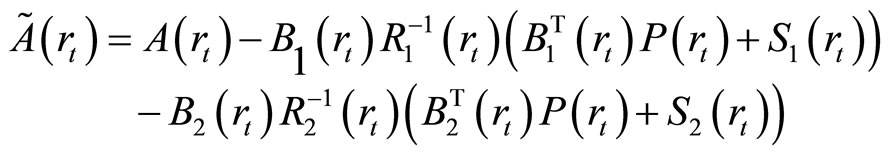

where

According to lemma 2 and the stochastic optimal control theory, we can easily obtain the conclusion that the AREs (7) admit a solution  .

.

So this completes the proof of Theorem 1.

Remark 1. It is interesting to see the specialization of our results to the deterministic case (i.e. the noise coefficient vanishes for every mode). The corresponding AREs are

(8)

which can be viewed as an extension of the results of [12].

Remark 2. From Theorem 1 we can see that deriving the optimal control strategies for this type of differential game reduces to solving the coupled algebraic Riccati equations (7). This conclusion coincides with the results presented in [4], etc.

Remark 3. The coupled algebraic Riccati equations (7) may be solved by a standard numerical method such as the LMI method [20], or by an iterative algorithm similar to the one presented in [21].
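Whatever solver is used, a candidate solution can be validated by substituting it back into the equations. The sketch below does this for an assumed, commonly used form of game-theoretic coupled Riccati equations, $A^T(i)P(i) + P(i)A(i) + C^T(i)P(i)C(i) + Q(i) + \sum_j \pi_{ij}P(j) - P(i)[B_1(i)R^{-1}(i)B_1^T(i) - \gamma^{-2}B_2(i)B_2^T(i)]P(i) = 0$. This form, the names `Q`, `R`, `B1`, `B2`, `gamma` and the numbers are assumptions made for illustration; they are not claimed to reproduce the AREs (7) of the paper.

```python
import numpy as np

def game_are_residuals(P, A, C, Q, B1, R, B2, gamma, Pi):
    """Residual of an assumed coupled game-type ARE for each mode i:
    A'P + PA + C'PC + Q + sum_j pi_ij P(j) - P (B1 R^-1 B1' - B2 B2'/gamma^2) P.
    All arguments except gamma and Pi are lists of per-mode matrices."""
    l = len(P)
    residuals = []
    for i in range(l):
        S = (B1[i] @ np.linalg.solve(R[i], B1[i].T)
             - B2[i] @ B2[i].T / gamma**2)
        coupling = sum(Pi[i, j] * P[j] for j in range(l))
        residuals.append(A[i].T @ P[i] + P[i] @ A[i]
                         + C[i].T @ P[i] @ C[i]
                         + Q[i] + coupling - P[i] @ S @ P[i])
    return residuals

# Hypothetical single-mode scalar check: a = -1, c = 0, q = r = b1 = b2 = 1, gamma = 2.
# The equation reduces to 0.75 p^2 + 2 p - 1 = 0, whose positive root is used below.
p_star = (-2.0 + np.sqrt(7.0)) / 1.5
one = np.array([[1.0]])
res = game_are_residuals(P=[np.array([[p_star]])], A=[-one], C=[0.0 * one],
                         Q=[one], B1=[one], R=[one], B2=[one],
                         gamma=2.0, Pi=np.array([[0.0]]))
```

A small residual norm is necessary but not sufficient: an iterative scheme should also confirm that the candidate is the stabilizing solution, for instance with a mean-square stability test on the resulting closed loop.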

5. Application to Stochastic H∞ Control

Now we apply the theory developed above to some problems related to stochastic H∞ control. First, we state the stochastic H∞ control problem with Markovian jumps; then we demonstrate the usefulness of the theory in the study of stochastic H∞ control.

Consider the following controlled system:

(9)

(9)

with the cost functional

(10)

(10)

where  is a right continuous Markov process on a given probability space

is a right continuous Markov process on a given probability space  and the state space

and the state space  and the transition probability described by (1); here

and the transition probability described by (1); here  is a standard one dimensional Wiener processes. In (9) and (10),

is a standard one dimensional Wiener processes. In (9) and (10),  is the state vector,

is the state vector,  is the input control and

is the input control and  is the vector of the exogenous disturbances.

is the vector of the exogenous disturbances.

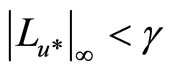

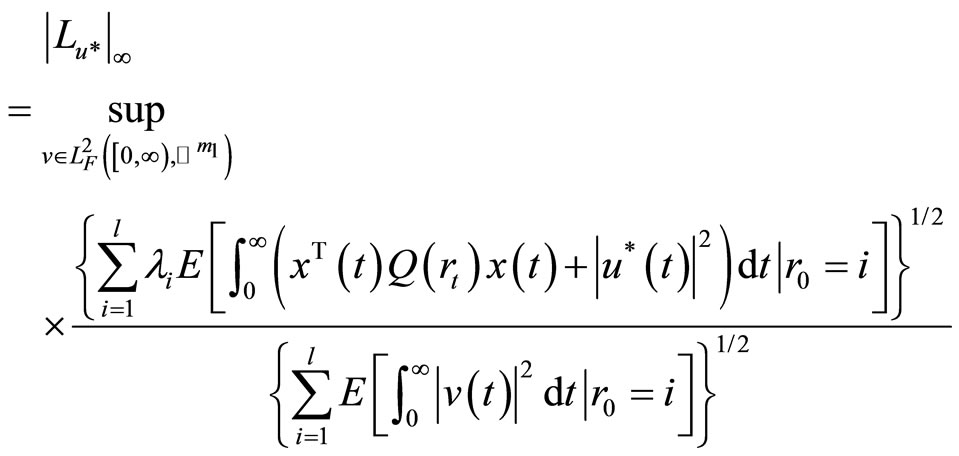

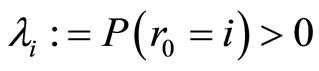

The following definition is parallel with the definition 2 presented in [22].

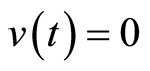

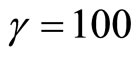

Definition 3. Given disturbance attenuation level γ > 0, the state feedback strategy  is said to be an H∞ control for system (9), if for

is said to be an H∞ control for system (9), if for ,

,  ,

,  , we have

, we have

1)  stabilizes system (9) internally, i.e. when

stabilizes system (9) internally, i.e. when ,

,  , the state trajectory of (9) with any initial value

, the state trajectory of (9) with any initial value  satisfies

satisfies

2)  with

with

where  for all

for all .

.

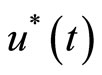

Generally speaking, the H∞ control problem described by (9) and (10) is to find a control  such that

such that  for arbitrary exogenous disturbances

for arbitrary exogenous disturbances . As stated in [23], if we view

. As stated in [23], if we view  and

and  in the stochastic H∞ control problem as two control strategies of players P1 and P2 from the viewpoint of game theory, the H∞ control problem can be converted into solving a stochastic game problem, while

in the stochastic H∞ control problem as two control strategies of players P1 and P2 from the viewpoint of game theory, the H∞ control problem can be converted into solving a stochastic game problem, while  is in fact the saddle point of this game, e.g.

is in fact the saddle point of this game, e.g.

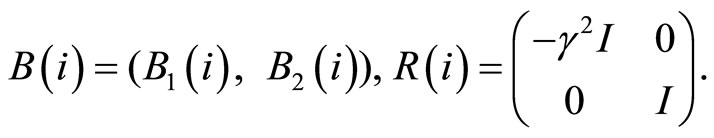

According to Theorem 1 discussed in Section 4, the following results can be obtained straightly:

Theorem 2. For system described by (9), the stochastic H∞ control admits a pair of solutions  with

with ,

,  , iff the following AREs

, iff the following AREs

(11)

(11)

with

has a solution , where

, where

.

.

In this case,  is an H∞ control for system (9), and

is an H∞ control for system (9), and  is the corresponding worst case disturbance.

is the corresponding worst case disturbance.

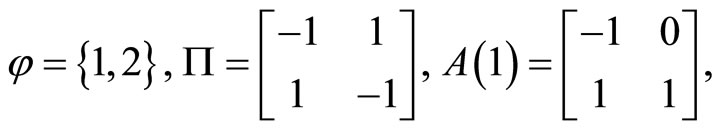

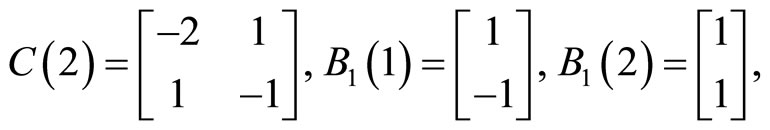

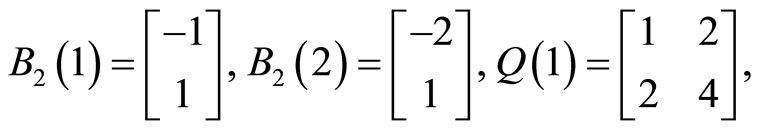

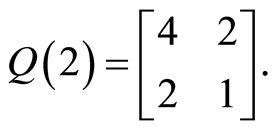

Illustrative example: Consider system (9) with the coefficients as follows:

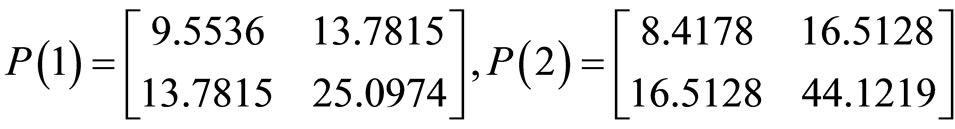

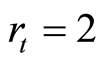

Set , solving (11) via using the algorithm proposed in [21], we have

, solving (11) via using the algorithm proposed in [21], we have

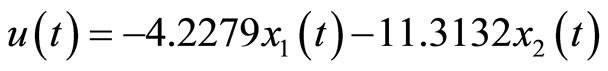

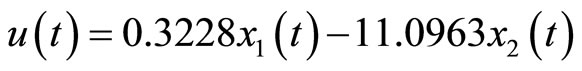

Therefore, the H∞ control is given by  while

while ;

;  while

while .

.

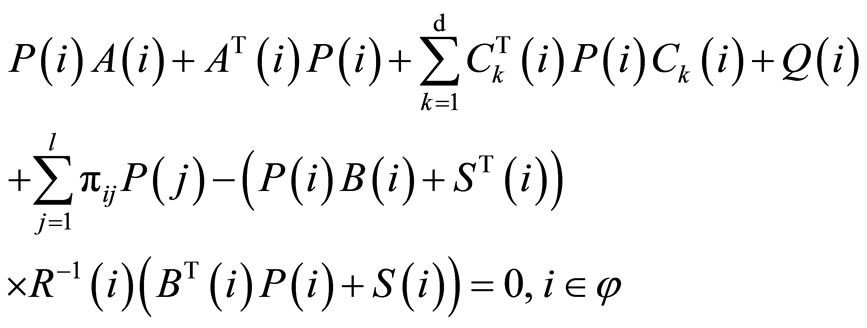

Remark 4. Although we restrict ourselves to single noise stochastic systems throughout the paper, our main theorem still hold for multiple multiplicative noise case. For example, if we replace (6) with

(12)

(12)

where ,

,  , being independent, one dimensional Wiener processes, then Theorem 1 still holds with AREs (7) replaced by

, being independent, one dimensional Wiener processes, then Theorem 1 still holds with AREs (7) replaced by

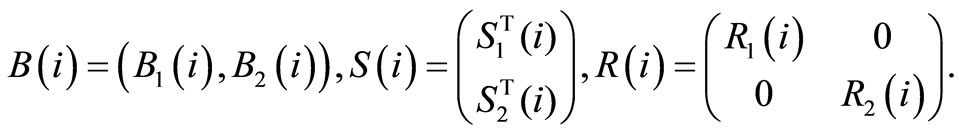

(13)

(13)

with

6. Conclusion

This paper has investigated linear quadratic zero-sum stochastic differential games with state-dependent noise and Markovian jump parameters over an infinite time horizon. Necessary and sufficient conditions for the existence of the optimal control strategies have been obtained and are expressed as a system of coupled algebraic Riccati equations. The results obtained in this paper extend the existing results of [19]. Throughout this paper, we have focused only on zero-sum LQ differential games for stochastic systems. We believe that nonzero-sum LQ differential games also have important applications, and further study of that case is warranted.

7. Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant No. 71171061 and by the Natural Science Foundation of Guangdong Province under Grant No. S2011010004970.

REFERENCES

- V. A. Ugrinovskii, “Robust H∞ Control in the Presence of Stochastic Uncertainty,” International Journal of Control, Vol. 71, No. 2, 1998, pp. 219-237. doi:10.1080/002071798221849

- D. Hinrichsen and A. J. Pritchard, “Stochastic H∞,” SIAM Journal on Control and Optimization, Vol. 36, No. 5, 1998, pp. 1504-1538. doi:10.1137/S0363012996301336

- B.-S. Chen and W. H. Zhang, “Stochastic H2/H∞ Control with State-Dependent Noise,” IEEE Transactions on Automatic Control, Vol. 49, No. 1, 2004, pp. 45-57. doi:10.1109/TAC.2003.821400

- Y. L. Huang, W. H. Zhang and G. Feng, “Infinite Horizon H2/H∞ Control for Stochastic Systems with Markovian Jumps,” Automatica, Vol. 44, No. 3, 2008, pp. 857-863. doi:10.1016/j.automatica.2007.07.001

- T. Basar and G. J. Olsder, “Dynamic Noncooperative Game Theory,” 2nd Edition, SIAM, Philadelphia, 1999.

- E. J. Dockner, S. Jorgensen, N. Van Long and G. Sorger, “Differential Games in Economics and Management Science,” Cambridge University Press, Cambridge, 2000. doi:10.1017/CBO9780511805127

- A. Friedman, “Differential Games,” Dover Publications, New York, 2006.

- S. Jorgensen and G. Zaccour, “Differential Games in Marketing,” International Series in Quantitative Marketing, Kluwer Academic Publishers, London, 2004.

- T. L. Friesz, “Dynamic Optimization and Differential Games,” Springer, New York, 2009.

- E. K. Boukas, Q. Zhang and G. Yin, “Robust Production and Maintenance Planning in Stochastic Manufacturing Systems,” IEEE Transactions on Automatic Control, Vol. 40, No. 6, 1995, pp. 1098-1102. doi:10.1109/9.388692

- T. Björk, “Finite Dimensional Optimal Filters for a Class of Itô-Processes with Jumping Parameters,” Stochastics, Vol. 4, No. 2, 1980, pp. 167-183. doi:10.1080/17442508008833160

- D. D. Sworder, “Feedback Control of a Class of Linear Systems with Jump Parameters,” IEEE Transactions on Automatic Control, Vol. 14, No. 1, 1969, pp. 9-14. doi:10.1109/TAC.1969.1099088

- V. Dragan and T. Morozan, “Stability and Robust Stabilization to Linear Stochastic Systems Described by Differential Equations with Markovian Jumping and Multiplicative White Noise,” Stochastic Analysis and Applications, Vol. 20, No. 1, 2002, pp. 33-92. doi:10.1081/SAP-120002421

- V. Dragan and T. Morozan, “The Linear Quadratic Optimization Problems for a Class of Linear Stochastic Systems with Multiplicative White Noise and Markovian Jumping,” IEEE Transactions on Automatic Control, Vol. 49, No. 5, 2004, pp. 665-675. doi:10.1109/TAC.2004.826718

- M. D. Fragoso and N. C. S. Rocha, “Stationary Filter for Continuous-Time Markovian Jump Linear Systems,” SIAM Journal on Control and Optimization, Vol. 44, No. 3, 2006, pp. 801-815. doi:10.1137/S0363012903436259

- X. Li, X. Y. Zhou and M. A. Rami, “Indefinite Stochastic Linear Quadratic Control with Markovian Jumps in Infinite Time Horizon,” Journal of Global Optimization, Vol. 27, No. 2-3, 2003, pp. 149-175. doi:10.1023/A:1024887007165

- X. R. Mao, G. G. Yin and C. G. Yuan, “Stabilization and Destabilization of Hybrid Systems of Stochastic Differential Equations,” Automatica, Vol. 43, No. 2, 2007, pp. 264-273. doi:10.1016/j.automatica.2006.09.006

- A. S. Willsky, “A Survey of Design Methods for Failure Detection in Dynamic Systems,” Automatica, Vol. 12, No. 6, 1976, pp. 601-611. doi:10.1016/0005-1098(76)90041-8

- M. McAsey and L. Mou, “Generalized Riccati Equations Arising in Stochastic Games,” Linear Algebra and Its Applications, Vol. 416, No. 2-3, 2006, pp. 710-723. doi:10.1016/j.laa.2005.12.011

- M. A. Rami and X. Y. Zhou, “Linear Matrix Inequalities, Riccati Equations, and Indefinite Stochastic Linear Quadratic Controls,” IEEE Transactions on Automatic Control, Vol. 45, No. 6, 2000, pp. 1131-1143. doi:10.1109/9.863597

- V. Dragan and I. Ivanov, “A Numerical Procedure to Compute the Stabilising Solution of Game Theoretic Riccati Equations of Stochastic Control,” International Journal of Control, Vol. 84, No. 4, 2011, pp. 783-800. doi:10.1080/00207179.2011.578261

- Z. W. Lin, Y. Lin and W. H. Zhang, “A Unified Design for State and Output Feedback H∞ Control of Nonlinear Stochastic Markovian Jump Systems with State and Disturbance-Dependent Noise,” Automatica, Vol. 45, No. 12, 2009, pp. 2955-2962. doi:10.1016/j.automatica.2009.09.027

- W. H. Zhang and B.-S. Chen, “State Feedback H∞ Control for a Class of Nonlinear Stochastic Systems,” SIAM Journal on Control and Optimization, Vol. 44, No. 6, 2006, pp. 1973-1991. doi:10.1137/S0363012903423727