Open Journal of Statistics

Vol.08 No.01(2018), Article ID:82716,33 pages

10.4236/ojs.2018.81012

Simulated Minimum Hellinger Distance inference methods for Count Data

Andrew Luong, Claire Bilodeau, Christopher Blier-Wong

École d’actuariat, Université Laval, Québec, Canada

Copyright © 2018 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: January 22, 2018; Accepted: February 25, 2018; Published: February 28, 2018

ABSTRACT

In this paper, we consider simulated minimum Hellinger distance (SMHD) inferences for count data. We consider grouped and ungrouped data and emphasize SMHD methods. The approaches extend the methods based on the deterministic version of the Hellinger distance for count data. The methods are general: they only require that random samples can be drawn from the discrete parametric family, and they can be used as alternatives to estimation using the probability generating function (pgf) or to methods based on matching moments. Whereas this paper focuses on count data, goodness-of-fit tests based on the simulated Hellinger distance can also be applied to continuous distributions when continuous observations are grouped into intervals, as in the case of the traditional Pearson statistics. Asymptotic properties of the SMHD methods are studied, and the methods appear to preserve the good efficiency and robustness properties of the deterministic version.

Keywords:

Breakdown Points, Robustness, Power Mixture, Esscher Transform, Mixture Discrete Distributions, Chi-Square Test Statistics

1. Introduction

1.1. New Distribution Created Using Probability Generating Functions

Nonnegative discrete parametric families of distributions are useful for modeling count data. Many of these families have neither closed form probability mass functions (pmfs) nor closed form formulas to express the pmf recursively. Their pmfs can only be expressed using an infinite series representation, but their corresponding Laplace transforms have a closed form and, in many situations, are relatively simple. Probability generating functions are often used for discrete distributions, but Laplace transforms are equivalent and can also be used. In this paper, we use Laplace transforms, but they will be converted to probability generating functions (pgfs) whenever we need to link with results which already appear in the literature. We begin with a few examples to illustrate the situation often encountered when new distributions are created.

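Example 1 (Discrete stable distributions) The random variable $X$ follows a discrete positive stable law if its probability generating function and Laplace transform are given respectively as

$$P_X(s) = E\left(s^X\right) = \exp\left(-\lambda(1-s)^{\alpha}\right), \quad \lambda>0,\ 0<\alpha\le 1,$$

and

$$\varphi_X(t) = E\left(e^{-tX}\right) = \exp\left(-\lambda\left(1-e^{-t}\right)^{\alpha}\right).$$

The distribution was introduced by Christoph and Schreiber [1].

It is easy to see that $\varphi_X(t) = P_X(e^{-t})$.

The Poisson distribution can be obtained by fixing $\alpha = 1$. The distribution is infinitely divisible and displays long tail behavior. The recursive formula for its mass function has been obtained; see expression (8) given by Christoph and Schreiber [1].

Now if we allow $\lambda$ to be a random variable $\Lambda$ with an inverse Gaussian distribution whose Laplace transform is denoted by $\kappa_\Lambda(s) = E\left(e^{-s\Lambda}\right)$, a mixed nonnegative discrete stable distribution can be created with Laplace transform given by

$$\varphi(t) = E\left[\exp\left(-\Lambda\left(1-e^{-t}\right)^{\alpha}\right)\right] = E\left[\varphi_1(t)^{\Lambda}\right],$$

where $\varphi_1(t) = \exp\left(-\left(1-e^{-t}\right)^{\alpha}\right)$ is the Laplace transform of the discrete stable distribution with $\lambda = 1$. The resulting Laplace transform,

$$\varphi(t) = \kappa_\Lambda\left(-\log\varphi_1(t)\right) = \kappa_\Lambda\left(\left(1-e^{-t}\right)^{\alpha}\right),$$

is the Laplace transform of a nonnegative infinitely divisible (ID) distribution.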

We can see that it is not always straightforward to find the recursive formula for the pmf of a nonnegative count distribution. Even when it is available, it might still be complicated to use numerically for inference, whereas the Laplace transform or pgf can have a relatively simple representation.
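When only the pgf is available in closed form, pmf values can still be computed numerically. The Python sketch below is our own illustration, not a method used in this paper: it recovers $p_0, \ldots, p_{K-1}$ from a pgf by a discrete Fourier inversion of the Cauchy integral on a circle of radius $r<1$, applied to the discrete stable pgf of Christoph and Schreiber, $P(s) = \exp(-\lambda(1-s)^{\alpha})$. The function names, the rule used to pick $r$ and the number of points are assumptions. With $\alpha = 1$ the result should reproduce the Poisson pmf.

```python
import numpy as np


def pmf_from_pgf(pgf, n_terms, n_points=1024, radius=None):
    """Approximate p_0, ..., p_{n_terms-1} from a pgf P(s) = sum_k p_k s^k.

    Trapezoidal rule on the circle |s| = radius < 1 (a discrete Fourier
    inversion of the Cauchy integral). The aliasing error is of order
    radius**n_points.
    """
    if radius is None:
        radius = 1e-12 ** (1.0 / n_points)  # aliasing error about 1e-12
    idx = np.arange(n_points)
    s = radius * np.exp(2j * np.pi * idx / n_points)
    # fft: sum_j P(s_j) exp(-2*pi*i*j*k/N) = N * (p_k r^k + aliased terms)
    coeffs = np.fft.fft(pgf(s)) / n_points
    k = np.arange(n_terms)
    return coeffs[:n_terms].real / radius ** k


def discrete_stable_pgf(lam, alpha):
    """pgf P(s) = exp(-lam * (1 - s)**alpha), lam > 0, 0 < alpha <= 1."""
    return lambda s: np.exp(-lam * (1.0 - s) ** alpha)


if __name__ == "__main__":
    p = pmf_from_pgf(discrete_stable_pgf(lam=2.0, alpha=0.7), n_terms=10)
    print(np.round(p, 4))
```

Because $|1-s| > 0$ on a circle of radius $r<1$, the principal branch of $(1-s)^{\alpha}$ is well defined there; the price is the factor $r^{-k}$, which amplifies rounding errors only mildly for moderate $k$.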

We can observe that the new distribution is obtained by using the inverse Gaussian distribution as a mixing distribution. This is also an example of the use of a power mixture (PM) operator to obtain a new distribution. The PM operator will be further discussed in Section 1.2.

From a statistical point of view, when neither a closed form pmf nor a recursive formula for the pmf exists, maximum likelihood estimation can be difficult to implement.

The power mixture operator was introduced by Abate and Whitt [2] (1996) as a way to create new distributions from an infinitely divisible (ID) distribution together with a mixing distribution using Laplace transforms (LT). We review it in the next section, after giving the definition of an ID distribution.

Definition 1.1.3. A nonnegative random variable X is infinitely divisible if, for every positive integer $n$, its Laplace transform can be written as

$$\varphi(t) = \left[\varphi_n(t)\right]^n,$$

where $\varphi_n(t)$ is also the Laplace transform of a random variable. In many situations, $\varphi$ and $\varphi_n$ belong to the same parametric family. See Panjer and Willmott [3] (1992, p42) for this definition.

Abate and Whitt [2] (1996) introduced the power mixture (PM) operator for ID distributions and also some other operators. To the operators already developed by them, we add the Esscher transform operator and the shift operator. All operators considered are discussed below.

1.2. Operational calculus on Laplace Transforms

1.2.1. Power Mixture (PM) Operator

Suppose that $X_t$ is an infinitely divisible nonnegative discrete random variable such that its Laplace transform can be expressed as $\varphi_{X_t}(s) = \left[\varphi_X(s)\right]^t$, $t>0$, where $\varphi_X$ is the Laplace transform of $X$, which is nonnegative and infinitely divisible as well. The power mixture (PM) with mixing distribution function $F_Y$ and Laplace transform $\kappa_Y$ of a nonnegative random variable $Y$ is defined as the Laplace transform

$$\varphi(s) = \int_0^{\infty}\left[\varphi_X(s)\right]^t\,dF_Y(t) = \kappa_Y\left(-\log\varphi_X(s)\right).$$

Furthermore, if $Y$ is infinitely divisible, then the distribution with Laplace transform $\varphi$ is also infinitely divisible. The random variable $Y$ with distribution $F_Y$ can be discrete or continuous but needs to be ID. This is the PM method for creating new parametric families, i.e., using the PM operator. The PM method can be viewed as a form of continuous compounding. The ID property can be dropped, but as a result the new distribution created using the PM operator need not be ID. For the traditional compounding methods, see Klugman et al. [4] (p141-148). Abate and Whitt [2] also mentioned other methods.

Example 2 (Generalized negative binomial) The generalized negative binomial (GNB) distribution introduced by Gerber [5] can be viewed as a power variance function distribution mixture of a Poisson distribution. The power variance function distribution introduced by Hougaard [6] is obtained by tilting the positive stable distribution using a parameter $\theta$. It is a three-parameter continuous nonnegative distribution with Laplace transform given by

$$\kappa_Y(s) = \exp\left\{-\frac{\delta}{\alpha}\left[(\theta+s)^{\alpha}-\theta^{\alpha}\right]\right\},$$

where $\delta>0$, $\theta\ge 0$ and $0<\alpha<1$ for the tilted positive stable case.

Gerber [5] used a different parameterization and named this distribution generalized gamma. It is also called the positive tempered stable distribution in finance.

Let $\varphi_\lambda(t) = \exp\left(-\lambda(1-e^{-t})\right) = \left[\varphi_1(t)\right]^{\lambda}$ be the Laplace transform of a Poisson distribution with rate $\lambda$. The Laplace transform of the GNB distribution can be represented as

$$\varphi(t) = \kappa_Y\left(-\log\varphi_1(t)\right) = \kappa_Y\left(1-e^{-t}\right) = \exp\left\{-\frac{\delta}{\alpha}\left[(\theta+1-e^{-t})^{\alpha}-\theta^{\alpha}\right]\right\}.$$

The corresponding pgf can be expressed as

$$P(s) = \exp\left\{-\frac{\delta}{\alpha}\left[(\theta+1-s)^{\alpha}-\theta^{\alpha}\right]\right\}.$$

Up to parameterization, the pgf is given by expression (21) in the paper by Gerber [5]. The GNB distribution is infinitely divisible. If stochastic processes are used instead of distributions, the distribution can also be derived by considering a Poisson process subordinated to a generalized gamma process and obtaining the new distribution as the distribution of the increments of the resulting process. See section 6 of Abate and Whitt [2] (p92-93). See Zhu and Joe [7] for other distributions which are related to the GNB distribution.

Note that, if the mixing random variable $Y$ of the PM operator is discrete, the power mixture is the Laplace transform of a random variable expressible as a random sum. A random sum is also called a stopped sum in the literature; see chapter 9 of Johnson et al. [8] (p343-403). The Neyman type A distribution given below is an example of the distribution of a random sum.

Example 3 Let $X = \sum_{i=1}^{Y} X_i$, where, conditional on $Y$, the $X_i$'s are independent and identically distributed, each following a Poisson distribution with rate $\mu$, and $Y$ follows a Poisson distribution with rate $\lambda$. Using the power mixture operator, we conclude that the LT of $X$ is

$$\varphi_X(t) = \exp\left\{-\lambda\left[1-\exp\left(-\mu\left(1-e^{-t}\right)\right)\right]\right\},$$

and the pgf is

$$P_X(s) = \exp\left\{\lambda\left[e^{\mu(s-1)}-1\right]\right\}.$$

Properties and applications of the Neyman type A distribution have been studied by Johnson et al. [8] (p368-378). The mean and variance of $X$ are given respectively by $\lambda\mu$ and $\lambda\mu(1+\mu)$. From these expressions, the moment (MM) estimators have closed form expressions; see section (4.1) for comparisons between MM estimators and SMHD estimators in a numerical study. For applications often the parameter is smaller than the parameter .
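The closed form moment estimators follow from solving $\bar{x} = \lambda\mu$ and $s^2 = \lambda\mu(1+\mu)$, which gives $\hat{\mu} = s^2/\bar{x} - 1$ and $\hat{\lambda} = \bar{x}/\hat{\mu}$ (valid only when $s^2 > \bar{x}$). The Python sketch below, writing $\lambda$ for the rate of $Y$ and $\mu$ for the rate of each $X_i$ (our labels), simulates Neyman type A data through the two-step random-sum representation and computes these estimators; the function names and parameter values are hypothetical.

```python
import numpy as np


def rneyman_a(n, lam, mu, rng):
    """Draw n Neyman type A variates X = X_1 + ... + X_Y, where
    Y ~ Poisson(lam) and, given Y, the X_i are iid Poisson(mu).
    Given Y = y the sum is Poisson(mu * y), so two Poisson draws suffice."""
    y = rng.poisson(lam, size=n)
    return rng.poisson(mu * y)


def neyman_a_moment_estimates(x):
    """Method of moments: mean = lam*mu, variance = lam*mu*(1 + mu)."""
    xbar = x.mean()
    s2 = x.var(ddof=1)
    if s2 <= xbar:
        raise ValueError("sample variance must exceed the sample mean")
    mu_hat = s2 / xbar - 1.0
    lam_hat = xbar / mu_hat
    return lam_hat, mu_hat


if __name__ == "__main__":
    rng = np.random.default_rng(2018)
    x = rneyman_a(10_000, lam=2.0, mu=1.5, rng=rng)
    print(neyman_a_moment_estimates(x))
```

Simulating from this distribution is trivial even though its pmf has no simple closed form, which is the situation in which simulated methods such as SMHD are attractive.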

1.2.2. Esscher transform operator

By tilting the density function using the Esscher transform, the Esscher transform operator can be defined and, provided the tilting parameter introduced is identifiable, new distributions can be created from existing ones.

Let X be the original random variable with Laplace transform $\varphi(t)$. The Esscher transform operator, which can be viewed as a tilting operator with tilting parameter $c$, is defined as

$$E_c\,\varphi(t) = \frac{\varphi(t+c)}{\varphi(c)}.$$
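In pmf terms, the Esscher transform with tilting parameter $c>0$ turns a pmf $p(x)$ into $p_c(x) = e^{-cx}p(x)/\varphi(c)$, whose Laplace transform is $\varphi(t+c)/\varphi(c)$. Because $e^{-cx}\le 1$ for $x\ge 0$, one can sample from $p_c$ by acceptance-rejection: draw $X$ from $p$ and accept it with probability $e^{-cX}$. The Python sketch below assumes $c>0$ and uses a Poisson($\lambda$) base distribution purely as a check, since tilting Poisson($\lambda$) gives Poisson($\lambda e^{-c}$); the function and argument names are hypothetical.

```python
import numpy as np


def esscher_sample(base_sampler, c, size, rng, batch=10_000):
    """Draw `size` variates from the Esscher-tilted pmf
    p_c(x) proportional to exp(-c*x) * p(x), for c > 0 and x >= 0.

    Acceptance-rejection: propose X ~ p and accept with probability
    exp(-c*X) <= 1; accepted values have pmf p_c.
    """
    if c <= 0:
        raise ValueError("this sketch requires c > 0")
    out = []
    n_done = 0
    while n_done < size:
        x = base_sampler(batch, rng)
        keep = rng.uniform(size=batch) < np.exp(-c * x)
        out.append(x[keep])
        n_done += int(keep.sum())
    return np.concatenate(out)[:size]


if __name__ == "__main__":
    rng = np.random.default_rng(7)
    lam, c = 4.0, 0.5
    poisson_base = lambda n, g: g.poisson(lam, size=n)
    y = esscher_sample(poisson_base, c, 50_000, rng)
    print(y.mean(), lam * np.exp(-c))  # tilted Poisson is Poisson(lam*exp(-c))
```

The acceptance rate equals $\varphi(c)$, so large tilting parameters make the sampler slower; for $c<0$ a different envelope would be needed.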

1.2.3. Shift operator

Let $\varphi(t)$ be the Laplace transform of a positive continuous random variable X. The Laplace transform of $Y = X + c$ is given by $e^{-ct}\varphi(t)$. So, we can define the shift operator as

$$S_c\,\varphi(t) = e^{-ct}\varphi(t).$$

In some cases, even when the pmf of Y has a closed form, the maximum likelihood (ML) estimators might be attained at the boundary of the parameter space, so that they might not have the usual optimality properties.

Note that, parallel to the closed form pgf expressions for these new discrete distributions, it is often simple to simulate from the new distributions if we can simulate from the original distribution before the operators are applied. For example, consider the new distribution obtained by using the Esscher operator. It suffices to simulate from the distribution before the operator is applied and to use the acceptance-rejection method to obtain a sample from the Esscher transformed distribution. The situation is similar for new distributions created by the PM operator. If we can draw one observation from the mixing distribution of Y, which gives a realized value t, and if it is not difficult to draw one observation from the distribution whose LT is the t-th power of the Laplace transform of X, then combining these two steps yields one observation from the new distribution created by the PM operator. Consequently, simulated methods of inference offer alternatives to inference methods based on matching selected points of the empirical pgf with its model counterpart, or to other related methods; see Doray et al. [9] for regression methods using selected points of the pgfs. For these methods there is some arbitrariness in the choice of points, which makes them difficult to apply. Techniques that match a continuum of points are more involved numerically; see Carrasco and Florens [10]. The new methods also avoid the arbitrariness in the choice of points needed for the regression methods and for the k-L procedures proposed by Feuerverger and McDunnough [11] when characteristic functions are used instead of probability generating functions, and they are in general more robust than methods based on matching moments (MM). We reach the same conclusions for another class of distributions, namely mixture distributions created by other mixing mechanisms; see Klugman et al. [4], Nadarajah and Kotz [12], Nadarajah and Kotz [13].
These distributions might not have closed form pmfs, or their pmfs are expressible only using special functions such as the confluent hypergeometric functions. For these models, likelihood methods might also be difficult to implement.
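The two-step simulation for the PM operator can be sketched as follows. As an assumed example, take $X$ to be Poisson with rate 1, so that the distribution whose LT is the $t$-th power of that of $X$ is Poisson with rate $t$, and take an inverse Gaussian mixing variable $Y$; the result is the Poisson-inverse Gaussian distribution, with mean $m$ and variance $m + m^3/\text{shape}$. NumPy's `Generator.wald(mean, scale)` draws inverse Gaussian variates; the function names and parameter values are hypothetical.

```python
import numpy as np


def power_mixture_sample(mixing_sampler, conditional_sampler, size, rng):
    """Two-step simulation from a power mixture.

    Step 1: draw realized values t from the mixing distribution of Y.
    Step 2: given t, draw from the distribution whose LT is [phi_X(s)]**t.
    """
    t = mixing_sampler(size, rng)
    return conditional_sampler(t, rng)


if __name__ == "__main__":
    rng = np.random.default_rng(11)
    m, shape = 2.0, 3.0  # inverse Gaussian mean and shape (assumed values)
    ig = lambda n, g: g.wald(m, shape, size=n)
    # X ~ Poisson(1) is ID and [phi_X(s)]**t is the LT of Poisson(t)
    pois_t = lambda t, g: g.poisson(t)
    x = power_mixture_sample(ig, pois_t, 100_000, rng)
    # Poisson-inverse Gaussian: mean m, variance m + m**3 / shape
    print(x.mean(), x.var(), m, m + m**3 / shape)
```

Any pair of samplers can be plugged in, which is what makes simulated inference available even when the pmf of the power mixture is not tractable.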

This leads us to look for alternative methods such as the simulated minimum Hellinger distance (SMHD) methods for count data. We shall consider grouped and ungrouped count data. With grouped data, the approach leads to simulated chi-square type statistics, similar to the traditional Pearson statistics, which can be used for model testing with discrete or continuous models. For model testing with continuous distributions, continuous observations grouped into intervals are reduced to count data, and with SMHD methods we do not need to integrate the model density functions over the intervals: it suffices to simulate from the continuous model and use the sample distribution function of the simulated sample to estimate the interval probabilities. These features widen the scope of application of simulated methods.
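A minimal sketch of how interval probabilities can be estimated from a simulated sample without integrating a density. The interval cut points, the Student t model used as an example and the helper name are assumptions: the intervals are $(-\infty, c_1), [c_1, c_2), \ldots, [c_{k-1}, \infty)$, and each estimated probability is the proportion of simulated values falling in the interval. Applying the same function to the observed data gives the sample proportions.

```python
import numpy as np


def interval_proportions(values, cuts):
    """Proportions of `values` in (-inf, c_1), [c_1, c_2), ..., [c_{k-1}, inf)."""
    cuts = np.asarray(cuts, dtype=float)
    idx = np.searchsorted(cuts, values, side="right")  # interval index 0..k-1
    counts = np.bincount(idx, minlength=len(cuts) + 1)
    return counts / len(values)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cuts = [-1.0, 0.0, 1.0]
    sim = rng.standard_t(df=5, size=50_000)  # a model we only simulate from
    print(interval_proportions(sim, cuts))
```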

We briefly describe the classical minimum Hellinger distance methods introduced by Simpson [14], Simpson [15] for estimation with count data in the next section, and we shall develop inference methods based on a simulated version of this distance following Pakes and Pollard [16] (1989), who developed an elegant asymptotic theory for estimators obtained by minimizing a simulated objective function expressible as the Euclidean norm of a random vector of functions. As an example, they showed that the unweighted simulated minimum chi-square estimators satisfy the regularity conditions for being consistent and having an asymptotic normal distribution; see Pakes and Pollard [16] (p1048). They work with properties of some special classes of sets to check the regularity conditions of their Theorem 3.3. Meanwhile, Newey and McFadden [17] (p2187) work with properties of random functions and introduce a stochastic version of the classical equicontinuity property of real analysis. In this paper, we also extend the notion of continuity of real analysis to a version which only holds in probability for a sequence of random functions, which we call continuity in probability; it is similar to the notion of continuity with probability one discussed by Newey and McFadden [17] (p2132) in their Theorem 2.6. We also use the property that the compact domains under consideration shrink as the sample size increases to verify the conditions of Theorem 3.3 given by Pakes and Pollard [16] (1989) for SMHD methods using grouped data, and the conditions of Theorem 7.1 of Newey and McFadden [17] (p2185) for ungrouped data. This approach appears to be new and simpler than other approaches which have been used in the literature to establish asymptotic normality for estimators using simulations; previous approaches are very general but also more complicated to apply.
A similar notion of continuity in probability has been introduced in the literature on stochastic processes.

It is worth mentioning that simulated methods of inference are relatively recent. In advanced econometrics textbooks, such as the book by Davidson and MacKinnon [18], only section 9.6 is devoted to simulated methods of inference, where the authors discuss the method of simulated moments (MSM). The simulated version of the HD methods will be referred to as version S and the original, deterministic version as version D in this paper. We briefly review the Hellinger distance and chi-square distance below and subsequently develop simulated inference methods for grouped and ungrouped count data using the Hellinger distance.

1.3. Hellinger and Chi-Square Distance Estimation

Assume that we have a random sample of n independent and identically distributed

(iid) nonnegative observations from a pmf with and

is the vector of parameters of interest,

is the vector of the true parameters. If the data are grouped into

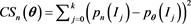

disjoint intervals  so that they form a partition of the nonnegative real line, the unweighted chi-square distance is defined to be

so that they form a partition of the nonnegative real line, the unweighted chi-square distance is defined to be

,

,

where  is the proportion of the sample which fall into the interval

is the proportion of the sample which fall into the interval  and

and  is the probability of an observation which fall into

is the probability of an observation which fall into  under the pmf

under the pmf . If

. If  has no closed form expression but we can draw a sample of size

has no closed form expression but we can draw a sample of size  from this distribution then clearly

from this distribution then clearly  can be estimated

can be estimated

by  using the simulated sample of size U which is the proportion of observations of the simulated sample which has taken a value in

using the simulated sample of size U which is the proportion of observations of the simulated sample which has taken a value in . To illustrate their theory Pake and Pollard [16] (p1047-1048) considered simulated estimators obtained by minimizing with respect to

. To illustrate their theory Pake and Pollard [16] (p1047-1048) considered simulated estimators obtained by minimizing with respect to  the objective function

the objective function

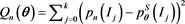

and show that the estimators satisfy the regularity conditions of their Theorem 3.1 and 3.3 which lead to conclude that the simulated estimators are consistent and have an asymptotic normal distribution. As we already know, a weighted version can be more efficient, if we attempt a version S for the Pearson’s chi square distance,

,

,

and since the denominator of the summand involves , it is numerically not easy to introduce a version S. Clearly, if

, it is numerically not easy to introduce a version S. Clearly, if , the version S of this

, the version S of this

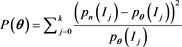

distance will run into numerical difficulties. The traditional and deterministic version of the Hellinger distance as given by

(1)

(1)

is more appropriate for a version S and it is already known that it generates minimum HD estimators which are as efficient as the minimum chi-square estimators or maximum likelihood (ML) estimators for grouped data, see

Cressie-Read divergence measure with

Note that

so that

Since the objective function remains bounded and this property continues to hold for the ungrouped data case, this suggests that SMHD methods could preserve some of the nice robustness properties of version D.

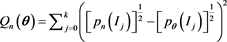

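For ungrouped data, it is equivalent to having grouped data but using intervals of unit length, $I_x = [x, x+1)$, $x = 0, 1, 2, \ldots$, so that the Hellinger distance becomes

$$\sum_{x=0}^{\infty}\left(f_n^{1/2}(x)-f_\theta^{1/2}(x)\right)^2 = 2-2\sum_{x=0}^{\infty}f_n^{1/2}(x)\,f_\theta^{1/2}(x),$$

where $f_n(x)$ is the proportion of observations equal to $x$ and $f_\theta(x)$ is the model pmf. Note that for a given data set the sum on the RHS of the above expression only has a finite number of nonzero terms, as $f_n(x) = 0$ for every $x$ larger than the largest observation.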
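Because $\sum_x f_n(x) = \sum_x f_\theta(x) = 1$, the ungrouped Hellinger distance $\sum_x (f_n^{1/2}(x) - f_\theta^{1/2}(x))^2$ equals $2 - 2\sum_x f_n^{1/2}(x) f_\theta^{1/2}(x)$, where only observed values contribute to the last sum. The sketch below uses hypothetical helper names and a Poisson model pmf computed by the recursion $p(x) = p(x-1)\lambda/x$; it evaluates the distance through the finite sum and returns a value in $[0, 2]$.

```python
import numpy as np


def poisson_pmf(lam, x_max):
    """Poisson pmf on 0..x_max via p(x) = p(x-1) * lam / x."""
    ratios = np.r_[1.0, lam / np.arange(1, x_max + 1)]
    return np.exp(-lam) * np.cumprod(ratios)


def hellinger_ungrouped(data, model_pmf):
    """sum_x (f_n(x)**0.5 - f(x)**0.5)**2 = 2 - 2 * (sum over observed x only).

    `data` are nonnegative integers; `model_pmf` must cover 0..max(data).
    """
    counts = np.bincount(data)
    f_n = counts / counts.sum()
    observed = counts > 0
    bc = np.sum(np.sqrt(f_n[observed] * model_pmf[: len(counts)][observed]))
    return 2.0 - 2.0 * bc


if __name__ == "__main__":
    rng = np.random.default_rng(4)
    data = rng.poisson(3.0, size=300)
    print(hellinger_ungrouped(data, poisson_pmf(3.0, 200)))
```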

The version D for ungrouped data has been investigated by Simpson [14], Simpson [15], who also showed that the MHD estimators have a high breakdown point of at least 50% and are first-order as efficient as the ML estimators. For the Poisson case, the ML estimator is the sample mean, which has a zero breakdown point and is consequently far less robust than the HD estimators; yet the HD estimators are first-order as efficient as the ML estimator. This feature makes HD estimators attractive. For the notion of finite sample breakdown point as a measure of robustness, see Hogg et al. [20] (p594-595) and Kloke and McKean [21] (p29), and for the notion of asymptotic breakdown point for large samples, see Maronna et al. [22] (p58).

Simpson [14], Simpson [15] extended the works of Beran [23] for continuous distributions to discrete distributions. Beran [23] appears to be the first to introduce a weaker form of robustness not based on a bounded influence function, and he showed that efficiency can be achieved by robust estimators not based on influence functions. Also, see Lindsay [24] for discussions on the robustness of Hellinger distance estimators. Simulated versions extending some of the seminal works of Simpson will be introduced in this paper.

SMHD methods appear to be useful for actuarial studies when discrete risk models need to be fitted; see chapter 9 of Panjer and Willmott [3] (p292-238) for fitting discrete risk models using ML methods. The SMHD methods appear to be useful in other fields as well, especially when count data need to be analyzed with efficiency and robustness but the pmfs of the models do not have closed form expressions. To minimize the objective functions and obtain SMHD estimators, the derivative-free simplex (Nelder-Mead) algorithm can be used, and R already has built-in functions, such as optim, to implement this minimization.
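As an illustration of the simplex approach, the Python sketch below computes an ungrouped SMHD estimate of a Poisson rate. It is our own minimal version, not the authors' code, and relies on SciPy's `minimize(..., method="Nelder-Mead")` and `scipy.stats.poisson.ppf`. The simulated sample of size $U$ is generated by inversion from one fixed vector of uniforms, so the same random numbers are reused for every trial value of $\lambda$; the sample sizes, seed and the 5% contamination at the value 50 are assumptions chosen for the example.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import poisson


def smhd_poisson(data, sim_size=20_000, seed=123):
    """Ungrouped SMHD estimate of a Poisson rate (illustrative sketch).

    Objective: 2 - 2 * sum_x sqrt(f_n(x) * f_s(x)), where f_s is the pmf of a
    simulated Poisson(lam) sample of size `sim_size` obtained by inversion
    from a fixed vector of uniforms (common random numbers across lam).
    """
    data = np.asarray(data)
    u = np.random.default_rng(seed).uniform(1e-12, 1.0 - 1e-12, size=sim_size)
    f_n = np.bincount(data) / data.size

    def objective(params):
        lam = params[0]
        if lam <= 0:
            return 2.0  # largest possible Hellinger distance
        sim = poisson.ppf(u, lam).astype(int)
        f_s = np.bincount(sim, minlength=f_n.size) / sim_size
        return 2.0 - 2.0 * np.sum(np.sqrt(f_n * f_s[: f_n.size]))

    start = max(float(np.median(data)), 0.5)
    res = minimize(objective, x0=[start], method="Nelder-Mead")
    return res.x[0]


if __name__ == "__main__":
    rng = np.random.default_rng(2018)
    x = rng.poisson(3.0, size=500)
    x_out = x.copy()
    x_out[:25] = 50  # 5% gross outliers
    print("SMHD:", smhd_poisson(x), smhd_poisson(x_out))
    print("mean:", x.mean(), x_out.mean())
```

With a fixed set of uniforms the objective is piecewise constant in $\lambda$, which a derivative-free method such as Nelder-Mead tolerates. In the contaminated run, the outliers receive essentially no weight because the simulated pmf is zero at 50, whereas the sample mean (the ML estimator) is pulled far away from 3.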

1.4. Outlines of the paper

In this paper, we develop unified simulated methods of inference for grouped and ungrouped count data using HD distances; the paper is organized as follows. Asymptotic properties of SMHD methods are developed in Section 2, where consistency and asymptotic normality are shown in section 2.2. Based on these asymptotic properties, consistency of the SMHD estimators holds in general, but high efficiency of the SMHD estimators can only be guaranteed if the Fisher information matrix of the parametric model exists, a situation which is similar to likelihood estimation. One can also view the estimators as fully efficient within the class of simulated estimators obtained by replacing the model pmf with a simulated version. Chi-square goodness-of-fit test statistics are constructed in Section 2.3. The ungrouped case, which can be seen as grouped data with infinitely many intervals of unit length, is treated in section 3, where the ungrouped SMHD estimators are shown to have good efficiencies. The breakdown point for the SMHD estimators remains at least 50%.

Numerical studies are included in section 4. First, we consider the Neyman type A distribution and compare the efficiencies of the SMHD estimators with those of the moment (MM) estimators; simulation results appear to confirm the theoretical results, showing that the SMHD estimators are more efficient than the MM estimators based on matching the first two empirical moments with their model counterparts for a selected range of parameters. The Poisson distribution is considered next, and the study shows that, despite being less efficient than the ML estimator, the SMHD estimators retain high efficiency and are far more robust than the ML estimator in the presence of outliers, just as in the deterministic case shown by Simpson [14] (p805). More work is needed in this direction in general, and for assessing the performance of SMHD estimators and comparing it with that of other traditional estimators in various parametric models in finite samples.

2. SMHD Methods for Grouped data

2.1. Introduction

Pakes and Pollard [16] have developed a very elegant and general theory for establishing consistency and asymptotic normality of estimators obtained by minimizing the length of a random function taking values in a Euclidean space, i.e., by minimizing

or

where

Theorem 3.3 given in Pakes and Pollard [16] (p1038-1043). It is very general and it is clearly applicable for both versions D and S for Hellinger distance with grouped data. Let

and for HD distance, version D, let

and for version S, let

which can be reexpressed as

In general, the intervals

with

for continuous distributions with support on the entire real line, as used in financial studies, we might let

Let

of the true parameters is denoted by

2.2. Asymptotic properties

2.2.1. Consistency

We define MHD estimators as given by the vector

Theorem 1 (Consistency)

Under the following conditions

a)

b)

c)

Theorem 3.1 states condition b) as

An expression is

Therefore, for both versions of

Asymptotic normality is more complicated in general. For the grouped case, Theorem 3.3 given by Pakes and Pollard [16] (p1040) can be used to establish asymptotic normality for both versions of Hellinger distance estimators. We shall rearrange results of Theorem 3.3 under Theorem 2 and Corollary 1 given in the next section to make it easier to apply for HD estimation using both versions.

Since the proofs have been given by the authors, we only discuss here the ideas of their proofs to make it easier to follow the results of Theorem 2 and Corollary 1 in Section (2.2.2).

For both versions,

not differentiable for version S, the traditional Taylor expansion argument cannot be used to establish asymptotic normality of estimators obtained by minimizing

Let

Under these circumstances, it suffices to work with

This condition is used to formulate Theorem 2 below and is slightly more stringent than condition iii) of their Theorem 3.3, but it is less technical and sufficient for SMHD estimation. Clearly, for SMHD estimation

2.2.2. Asymptotic normality

In this section, we state Theorem 2, which is essentially Theorem 3.3 of Pakes and Pollard [16] (p1040-1043) with condition 4) of Theorem 2, given by expression (9), replacing condition (iii) of their Theorem 3.3; condition 4) implies condition (iii) since it is more stringent. We also comment on the conditions needed to verify asymptotic normality for the HD estimators based on Theorem 2.

Theorem 2

Let

Under the following conditions:

1) The parameter space Ω is compact,

2)

3)

4)

5)

6)

Then, we have the following representation which will give the asymptotic distribution of

or equivalently, using equality in distribution,

The proofs of these results follow from the results used to prove Theorem 3.3 given by Pakes and Pollard [16] (p1040-1043). For expression (13) or expression (14) to hold, in general only condition 5) of Theorem 2 is needed and there is no need to assume that

Corollary 1.

Let

The matrices

We observe that verifying condition 4) of Theorem 2, for the Hellinger distance or in general, involves technicalities. Condition 4) holds for version D, so we only need to verify it for version S. Note that verifying condition 4) is equivalent to verifying

and for version S of Hellinger distance estimation, let

and for the grouped case, it is given by

We need to verify that we have the sequence of functions

Note that

We shall outline the approach by first defining the notion of continuity in probability and let

Now as

details of these arguments are given in the technical appendices TA1.1 and TA1.2 at the end of the paper.

The notion of continuity in probability has been used in a similar context in the literature on stochastic processes (see Gusak et al. [25]); it is introduced in the next paragraph, and we also make a few assumptions, summarized in Assumption 1 and Assumption 2 below. A related notion, continuity with probability one, has been mentioned by Newey and McFadden [17] in their Theorem 2.6, as noted earlier. They also commented that this notion can be used for establishing asymptotic properties of the simulated estimators introduced by Pakes [26]. Pakes [26] also used pseudo random numbers to estimate probability frequencies for some models. For SMHD estimation, we extend a standard result of analysis, which states that a continuous function attains its supremum on a compact set, to a version which holds in probability. This approach seems to be new and simpler than the use of the more general stochastic equicontinuity condition given in section 2.2 of Newey and McFadden [17] (p2136-2138) to establish uniform convergence in probability of a sequence of random functions. Our approach uses the fact that as

used previously by other approaches to establish

Definition 1 (Continuity in probability)

A sequence of random functions

result of continuity in real analysis. It is also well known that the supremum of a continuous function on a compact domain is attained at a point of the compact domain; see Davidson and Donsig [27] (p81) or Rudin [28] (p89) for this classical result. The equivalent property for a random function which is only continuous in probability is that its supremum is attained, in probability, at a point of the compact domain. The compact domain we study here

is given by

might be more precise to use the term sequence of random functions rather than random function here, since the random function depends on n.

Below are the assumptions we need to make to establish asymptotic normality for SMHD estimators and they appear to be reasonable.

Assumption 1

1) The pmf of the parametric model has the continuity property with

2) The simulated counterpart has the continuity in probability property with

3)

In general, condition 2) will be satisfied if condition 1) holds, and we implicitly assume that the same seed is used for obtaining the simulated samples across different values of
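The role of a common seed can be seen numerically. In the sketch below, an illustration under assumed values rather than part of the paper's derivations, the simulated Poisson pmf is computed at $\lambda$ and $\lambda + \delta$ either from the same uniforms (via inversion) or from two independent sets of uniforms. With common random numbers the $L_1$ distance between the two simulated pmfs stays close to that between the model pmfs, whereas independent draws add simulation noise of order $U^{-1/2}$. The helper names are hypothetical.

```python
import numpy as np
from scipy.stats import poisson


def simulated_pmf(lam, u, x_max):
    """Simulated Poisson pmf on 0..x_max from uniforms u by inversion
    (values above x_max are lumped into the last cell)."""
    sim = poisson.ppf(u, lam).astype(int)
    return np.bincount(np.minimum(sim, x_max), minlength=x_max + 1) / u.size


def l1_change(lam, delta, sim_size=10_000, common=True, x_max=30):
    """L1 distance between simulated pmfs at lam and lam + delta."""
    rng = np.random.default_rng(42)
    u1 = rng.uniform(1e-12, 1.0 - 1e-12, size=sim_size)
    # common random numbers: reuse u1; otherwise draw fresh uniforms
    u2 = u1 if common else rng.uniform(1e-12, 1.0 - 1e-12, size=sim_size)
    diff = simulated_pmf(lam, u1, x_max) - simulated_pmf(lam + delta, u2, x_max)
    return np.abs(diff).sum()


if __name__ == "__main__":
    print("common seed :", l1_change(3.0, 0.01, common=True))
    print("fresh seeds :", l1_change(3.0, 0.01, common=False))
```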

Definition 2 (Differentiability in probability)

The sequence of random functions

differentiability in real analysis for nonrandom function.

A similar notion of differentiability in probability has been used in the stochastic processes literature, see Gusak et al. [25] (p33-34); a more stringent notion, differentiability in quadratic mean, has also been used to study the local asymptotic normality (LAN) property for a parametric family, see Keener [29] (p326). The notion of differentiability in probability will be used in section 3 with Theorem 7.1 of Newey and McFadden [17] to establish asymptotic normality for the SMHD estimators in the ungrouped case. We make the following assumption for

Assumption 2

This assumption appears to be reasonable; it can be checked by using limit operations as in real analysis with

Since regularity conditions for Theorem 2 and its corollary can be met and they are justified in TA1.1 and TA1.2 in the Appendices, we proceed here to find the asymptotic covariance matrix

Since

from the sample given by the data, so we can focus on version D and make the adjustment for version S. We need the asymptotic covariance matrix

vector

Recall that from properties of the multinomial distribution, the covariances of

and

The covariance matrix of

and the asymptotic covariance matrix of

We then have the vector of HD estimators version D and S given respectively by

so

with

Therefore for version S,

the simulated sample size is

Note that for version D, the HD estimators are as efficient as the minimum chi-square estimators or ML estimators based on grouped data. The overall asymptotic relative efficiency (ARE) between versions D and S for HD estimation is

simply ARE =
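A heuristic way to see how simulation affects efficiency, assuming that the simulated sample of size $U$ is drawn independently of the $n$ observations and that the simulated probabilities behave like the model probabilities near $\theta_0$ (our assumptions, not a restatement of the paper's expressions): the difference between the vector of sample proportions $\mathbf{p}_n$ and the vector of simulated proportions $\mathbf{p}^s_{\theta_0}$ over the $k$ intervals has covariance matrix

```latex
\operatorname{Cov}\left(\mathbf{p}_n-\mathbf{p}^s_{\theta_0}\right)
  = \left(\frac{1}{n}+\frac{1}{U}\right)\Sigma_{\theta_0}
  = \frac{1}{n}\left(1+\frac{n}{U}\right)\Sigma_{\theta_0},
\qquad
\Sigma_{\theta_0} = \operatorname{diag}(\mathbf{p}_{\theta_0})
  - \mathbf{p}_{\theta_0}\mathbf{p}_{\theta_0}'.
```

compared with $\Sigma_{\theta_0}/n$ when the exact probabilities are used. Since the estimators are, to first order, linear functions of this difference (the square-root transformation only contributes a fixed Jacobian through the delta method), the asymptotic covariance matrix is inflated by the factor $1 + n/U$, which suggests ARE $= (1+n/U)^{-1} = U/(n+U)$; for example, $U = 10n$ would give $10/11 \approx 0.91$.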

An estimate for the covariance matrix

The asymptotic covariance matrix of

For ungrouped data and for version D, it is equivalent to choose

and

Section 3 may be skipped by practitioners whose main interest is in applications of the results.

2.3. Chi-Square Goodness of Fit Test Statistics

2.3.1. Simple Hypothesis

In this section, the Hellinger distance

H0: data comes from a specified distribution with distribution

Version S is of interest since it allows testing goodness of fit for discrete or continuous distributions without closed form pmfs or density functions; all we need is to be able to simulate from the specified distribution. We shall justify the asymptotic chi-square distributions given by expression (23) and expression (24) below.
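For the simple hypothesis, a heuristic form of the test can be sketched as follows, assuming the simulated sample is independent of the data. With $k$ intervals, $4n \cdot \mathrm{HD}$ is approximately $\chi^2_{k-1}$ for version D (the Hellinger distance being the Cressie-Read statistic with $\lambda=-1/2$). Replacing the model probabilities by simulated proportions from a sample of size $U$ inflates the variance by $1 + n/U$, which suggests the statistic $T = 4\,\mathrm{HD}/(1/n + 1/U)$. This calibration is our own illustration and may differ in form from expressions (23) and (24); the helper names and the grouping in the demo are assumptions, and SciPy's `chi2.sf` gives the upper-tail probability.

```python
import numpy as np
from scipy.stats import chi2


def hellinger_grouped(p, q):
    """sum_j (sqrt(p_j) - sqrt(q_j))**2 for two probability vectors."""
    return np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)


def simulated_hd_gof(data_props, sim_props, n, sim_size):
    """Heuristic simulated HD test of a simple hypothesis.

    Returns (T, p-value) with T = 4 * HD / (1/n + 1/U), referred to a
    chi-square distribution with k - 1 degrees of freedom.
    """
    hd = hellinger_grouped(data_props, sim_props)
    t_stat = 4.0 * hd / (1.0 / n + 1.0 / sim_size)
    df = len(data_props) - 1
    return t_stat, chi2.sf(t_stat, df)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    cuts = np.array([1, 2, 3, 5])  # groups {0}, {1}, {2}, {3, 4}, {5, ...}
    n, U = 400, 20_000
    data = rng.poisson(2.0, size=n)
    sim = rng.poisson(2.0, size=U)  # simulated sample under H0
    props = lambda v: np.bincount(np.searchsorted(cuts, v, side="right"), minlength=5) / v.size
    print(simulated_hd_gof(props(data), props(sim), n, U))
```

Letting $U \to \infty$ recovers the version D statistic $4n \cdot \mathrm{HD}$.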

Note that

and

for version D. For version S,

Using standard results for distribution of quadratic forms and the property of the operator trace of a matrix with

2.3.2. Composite hypothesis

Just as the chi-square distance, the Hellinger distance

H0: data comes from a parametric model

for version D and for version S,

where

which can be reexpressed as

or

With

by noting

using

For version S,

and

with

3. SMHD Methods for Ungrouped Data

For the classical version D with ungrouped data, Simpson [14] (p806) in the proof of his Theorem 2 has shown that we have equality in probability of the following expression by letting

be the vector of partial derivatives with respect to

with

score functions with covariance matrix

For version D, we then have

or equivalently

Therefore, we can conclude that

the result of Theorem 2 given by Simpson [14] (p804), which shows that the MHD estimators are as efficient as the maximum likelihood (ML) estimators.

For version S with ungrouped data, it is more natural to use Theorem 7.1 of Newey and McFadden [17] (p2185-2186) to establish asymptotic normality for SMHD estimators. The ideas behind Theorem 7.1 can be summarized as follows. In case of the objective function

with its equivalent expression given by expression (3).

Also, if

The vector

If the remainder of the approximation is small, we also have

Before defining the remainder term

as

For the approximation to be valid, we define

and require

proofs of Theorem 7.1 given by Newey and McFadden. The following Theorem 3 is essentially Theorem 7.1 of Newey and McFadden, restated for estimators obtained by minimizing rather than maximizing an objective function, and it requires

more stringent than the original condition v) of their Theorem 7.1. We also require compactness of the parameter space

Theorem 3

Suppose that

1)

2)

3)

4)

5)

Regularity conditions 1)-3) of Theorem 3 can easily be checked. Condition 4) follows from expression (27) established by Simpson [14]. Condition 5) might be the most difficult to check as it involves technicalities; it is verified in TA2 of the Appendices. Assuming all conditions can be verified, we apply Theorem 3 to SMHD estimation with

The objective function

the matrix of second derivative of

and it can be seen that

by performing limit operations to find derivatives as in real analysis and using Assumption 1 and Assumption 2. Therefore, using condition 4) of Theorem 3 and expression (27), we have the following equality in distribution

which is similar to the grouped case.
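A minimal sketch of SMHD estimation with ungrouped data for a Poisson model is given below. It assumes that the simulated sample of size U = tau*n is generated by inverting the Poisson distribution function at one fixed set of uniforms, so that the same seed is used for every value of lambda. Minimizing over a grid is our own simplification, since the simulated objective is piecewise constant in lambda; all names are hypothetical.

```python
import numpy as np
from math import lgamma

def poisson_from_uniforms(u, lam):
    """Invert the Poisson cdf at fixed uniforms u (common random numbers)."""
    top = int(lam + 12.0 * np.sqrt(lam) + 20.0)   # truncation; mass beyond is negligible
    k = np.arange(top + 1)
    cdf = np.cumsum(np.exp(-lam + k * np.log(lam) - np.array([lgamma(i + 1) for i in k])))
    return np.searchsorted(cdf, u, side="left")   # smallest k with F(k) >= u

def smhd_objective(x, y):
    """sum_x (sqrt(f_n(x)) - sqrt(f_U(x)))^2 over the union of both supports."""
    size = int(max(x.max(), y.max())) + 1
    fx = np.bincount(x, minlength=size) / len(x)
    fy = np.bincount(y, minlength=size) / len(y)
    return float(np.sum((np.sqrt(fx) - np.sqrt(fy)) ** 2))

def smhd_poisson(x, tau=20, seed=0, grid_size=400):
    """SMHD estimate of a Poisson mean by grid search, with U = tau * n."""
    x = np.asarray(x)
    u = np.random.default_rng(seed).uniform(size=tau * len(x))  # same draws for every lam
    grid = np.linspace(0.05, x.max() + 1.0, grid_size)
    values = [smhd_objective(x, poisson_from_uniforms(u, lam)) for lam in grid]
    return float(grid[int(np.argmin(values))])

x = np.random.default_rng(7).poisson(3.0, size=500)
lam_smhd, lam_ml = smhd_poisson(x), x.mean()
```

With uncontaminated data the SMHD estimate and the ML estimate (the sample mean) should be close, in line with the efficiency result above.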

Now with

One might want to define the extended Cramér-Rao lower bound for simulated method estimators to be

other simulated methods, it can be interpreted as the adjustment factor incurred when estimators are obtained by minimizing a simulated version of the objective function, in which the model distribution is replaced by the sample distribution of a simulated sample; see, for example, Pakes and Pollard [16] (p1048) for simulated minimum chi-square estimators. Clearly,
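Assuming, as for the simulated estimators of Pakes and Pollard [16], that the adjustment factor takes the form 1 + 1/tau when the simulated sample size is U = tau*n, the loss of efficiency relative to version D can be tabulated directly. The snippet is only an illustration of that assumed factor, using the Poisson model, for which the inverse Fisher information per observation is lambda; the function names are hypothetical.

```python
def smhd_variance_factor(tau):
    """Assumed inflation (1 + 1/tau) of the inverse Fisher information, U = tau * n."""
    if tau <= 0:
        raise ValueError("tau must be positive")
    return 1.0 + 1.0 / tau

def efficiency_vs_version_d(tau):
    """Asymptotic efficiency of version S relative to version D, i.e. tau / (1 + tau)."""
    return 1.0 / smhd_variance_factor(tau)

# Poisson model: asymptotic variance of sqrt(n) * (lam_hat - lam) is lam * (1 + 1/tau).
lam = 2.0
for tau in (1, 5, 20, 100):
    print(tau, lam * smhd_variance_factor(tau), round(efficiency_vs_version_d(tau), 3))
```

Under this assumption the efficiency loss vanishes as tau grows, which is why a large simulated sample is preferable when computing time allows.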

We close this section by showing the asymptotic breakdown point

see Simpson [14] (p805-806), assuming that only the original data set may be contaminated and that there is no contamination coming from the simulated samples. This assumption appears to be reasonable since we control the simulation procedures. We focus only on the strict parametric model, and the setup is less general than the one considered by Theorem 3 of Simpson [14] (p805), which also includes distributions near the parametric model.

Let

Now with the same seed used across

but

as

With

using the inequality

The last inequality follows from the assumption that

Using

probability under the true model, which is similar to version D. The only difference is that here we have an inequality in probability. From this result, we might conclude that the SMHD estimators preserve the robustness properties of version D, and that the loss of asymptotic efficiency compared with version D can be minimized if

4. Numerical Issues

4.1. Methods to Approximate Probabilities

Once the parameters are estimated, probabilities can be estimated. When recursive formulas exist, Panjer's method can be used; see Chapter 9 of Klugman et al. [4]. Otherwise, we might need to approximate probabilities by simulation or by analytic methods.
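For a compound Poisson model such as the Neyman Type A distribution, with pgf exp(lambda*(exp(phi*(z - 1)) - 1)), the Panjer recursion with a = 0 and b = lambda gives g_0 = exp(lambda*(f_0 - 1)) and g_k = (lambda/k) sum_{j=1..k} j f_j g_{k-j}, where f is the cluster-size pmf. A minimal sketch, with hypothetical function names:

```python
import numpy as np
from math import exp, lgamma

def panjer_compound_poisson(lam, severity, kmax):
    """P(S = k), k = 0..kmax, where S = Y_1 + ... + Y_N, N ~ Poisson(lam) and the
    Y_i are iid on {0, 1, ...} with pmf `severity` (Panjer: a = 0, b = lam)."""
    f = np.zeros(kmax + 1)
    m = min(len(severity), kmax + 1)
    f[:m] = severity[:m]
    g = np.zeros(kmax + 1)
    g[0] = exp(lam * (f[0] - 1.0))                     # pgf of N evaluated at f_0
    for k in range(1, kmax + 1):
        j = np.arange(1, k + 1)
        g[k] = lam / k * np.sum(j * f[j] * g[k - j])   # g_k = (lam/k) sum_j j f_j g_{k-j}
    return g

def poisson_pmf(mu, kmax):
    k = np.arange(kmax + 1)
    return np.exp(-mu + k * np.log(mu) - np.array([lgamma(i + 1) for i in k]))

# Neyman Type A as a compound Poisson: Poisson(lam) clusters of Poisson(phi) size.
lam, phi = 2.0, 1.5
g = panjer_compound_poisson(lam, poisson_pmf(phi, 60), 60)
```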

In this section, we discuss some methods for approximating probabilities

See Butler [35] (p8-9) for details of the saddlepoint approximation. It can be described as using

The saddlepoint
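A minimal sketch of the first-order saddlepoint approximation to a probability mass, p(x) approx exp(K(s) - s*x) / sqrt(2*pi*K''(s)) with s solving K'(s) = x, where K is the cumulant generating function (see Butler [35]). Finding the saddlepoint by bisection is our own choice; x = 0 is excluded because no saddlepoint exists there, and P(X = 0) is available directly from the pgf anyway. The names are hypothetical.

```python
from math import exp, factorial, pi, sqrt

def saddlepoint_pmf(x, K, K1, K2):
    """First-order saddlepoint approximation to P(X = x) for x > 0.
    K, K1, K2: cumulant generating function and its first two derivatives."""
    if x <= 0:
        raise ValueError("x must lie in the interior of the support (x > 0)")
    lo, hi = -1.0, 1.0
    while K1(lo) > x:          # K1 is increasing: widen the bracket to the left
        lo *= 2.0
    while K1(hi) < x:          # ... and to the right
        hi *= 2.0
    for _ in range(200):       # bisection for the saddlepoint equation K1(s) = x
        mid = 0.5 * (lo + hi)
        if K1(mid) < x:
            lo = mid
        else:
            hi = mid
    s = 0.5 * (lo + hi)
    return exp(K(s) - s * x) / sqrt(2.0 * pi * K2(s))

# Poisson check: the approximation equals the pmf with x! replaced by Stirling's formula.
lam = 3.0
K = lambda s: lam * (exp(s) - 1.0)
K1 = lambda s: lam * exp(s)
K2 = lambda s: lam * exp(s)
ratios = [saddlepoint_pmf(x, K, K1, K2) / (exp(-lam) * lam**x / factorial(x)) for x in range(2, 11)]

# Neyman Type A with pgf exp(lam_a * (exp(phi * (z - 1)) - 1)), so K(s) = log G(e^s).
lam_a, phi = 2.0, 1.5
K_a = lambda s: lam_a * (exp(phi * (exp(s) - 1.0)) - 1.0)
K1_a = lambda s: lam_a * phi * exp(s) * exp(phi * (exp(s) - 1.0))
K2_a = lambda s: K1_a(s) * (1.0 + phi * exp(s))
p5_approx = saddlepoint_pmf(5, K_a, K1_a, K2_a)
```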

If the cumulant generating function does not exist, an alternative method based on the characteristic function, as described by Abate and Whitt [36] (p32), can be used.
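When the transform can be evaluated on the unit circle, a simple discrete Fourier inversion recovers the pmf: evaluating the pgf at the N-th roots of unity and applying an FFT gives sum_m p_{k+mN}, that is, p_k up to an aliasing error. This sketch shows only the basic lattice-inversion idea; Abate and Whitt [36] refine it to control the aliasing error, which is omitted here. The function name is hypothetical.

```python
import numpy as np
from math import exp, factorial

def pmf_from_pgf(pgf, N=1024):
    """Approximate P(X = k), k = 0, ..., N-1, from a pgf G(z) = E[z^X]
    that accepts complex numpy arrays."""
    z = np.exp(2j * np.pi * np.arange(N) / N)      # N-th roots of unity
    # fft gives sum_j G(z_j) z_j^(-k) = N * sum_{m >= 0} p_{k + mN}
    p = np.fft.fft(pgf(z)).real / N
    return np.clip(p, 0.0, None)                    # remove tiny negative round-off

lam, phi = 2.0, 1.5
p_nta = pmf_from_pgf(lambda z: np.exp(lam * (np.exp(phi * (z - 1.0)) - 1.0)), N=256)
p_poi = pmf_from_pgf(lambda z: np.exp(3.0 * (z - 1.0)), N=256)
```

Choosing N well beyond the range where the pmf is non-negligible keeps the aliasing error below rounding error.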

4.2. A Limited Simulation Study

4.2.1. Neyman Type A Distribution

As an illustration, we choose the Neyman Type A distribution, with the method of moments (MM) estimators for

by Johnson et al. [8]. The MM estimators are given by

with the sample mean and variance given respectively by
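Writing lambda and phi for the two parameters (the paper's notation may differ), the Neyman Type A distribution has mean lambda*phi and variance lambda*phi*(1 + phi), so matching moments gives phi_tilde = s^2/x_bar - 1 and lambda_tilde = x_bar/phi_tilde, which requires s^2 > x_bar. A minimal sketch, assuming the unbiased sample variance and simulating Neyman Type A counts as a Poisson number of Poisson(phi) clusters; the names are hypothetical.

```python
import numpy as np

def rneyman_a(rng, lam, phi, size):
    """Neyman Type A draws: N ~ Poisson(lam) clusters, X | N ~ Poisson(phi * N)."""
    return rng.poisson(phi * rng.poisson(lam, size=size))

def neyman_a_mm(x):
    """MM estimators from mean = lam * phi and variance = lam * phi * (1 + phi)."""
    x = np.asarray(x, dtype=float)
    mean, var = x.mean(), x.var(ddof=1)
    if var <= mean:
        raise ValueError("MM estimators need sample variance > sample mean")
    phi_mm = var / mean - 1.0
    return mean / phi_mm, phi_mm

rng = np.random.default_rng(123)
x = rneyman_a(rng, 2.0, 1.5, size=20000)
lam_mm, phi_mm = neyman_a_mm(x)
```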

For the range of parameter values, we let

efficiency used is the ratio

the mean square error of the estimator inside the parentheses. The mean square error of an estimator

The ratio ARE can be estimated using simulated data; the estimates are displayed in Table A. Due to limited computing facilities, we only draw
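The MSE of an estimator can be estimated from simulated replications as the average squared deviation from the true value, which equals squared bias plus variance. A minimal sketch, assuming ARE is oriented as MSE_2/MSE_1 (the orientation used in Table A is not recoverable here). The toy comparison of the sample variance and the sample mean as estimators of a Poisson mean is chosen only because the answer is known, Var(x_bar)/Var(s^2), about 0.197 for lambda = 2 and n = 50; it is not one of the comparisons in Table A, and the names are hypothetical.

```python
import numpy as np

def mse(estimates, true_value):
    """Monte Carlo MSE: average squared deviation, i.e. squared bias + variance."""
    estimates = np.asarray(estimates, dtype=float)
    return float(np.mean((estimates - true_value) ** 2))

def are(estimates_1, estimates_2, true_value):
    """Relative efficiency of estimator 1 w.r.t. estimator 2 as MSE_2 / MSE_1
    (assumed orientation; values above 1 favour estimator 1)."""
    return mse(estimates_2, true_value) / mse(estimates_1, true_value)

# Toy check: sample variance vs sample mean as estimators of a Poisson mean.
lam, n, reps = 2.0, 50, 4000
samples = np.random.default_rng(11).poisson(lam, size=(reps, n))
means = samples.mean(axis=1)
variances = samples.var(axis=1, ddof=1)
ratio = are(variances, means, lam)
```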

4.2.2. Poisson Distribution

For the Poisson model with parameter

using the ratio

model, the information matrix exists, so we can check the efficiency and robustness of the SMHD estimator and compare it with the ML estimator, which is the sample mean. Since there is only one parameter to estimate, we are able to fix

Table A. Asymptotic relative efficiencies between MM estimators and SMHD estimators

Asymptotic relative efficiency between MLE

Asymptotic relative efficiency between MLE

U = 10000 for the simulated sample size from the Poisson model without slowing down the computations. Overall, the SMHD estimators appear to perform very well for the range of parameters often encountered in actuarial studies; the observed asymptotic efficiencies range from 0.7 to 1.1. We also study a contaminated Poisson model (

the SMHD estimator vs the ML estimator in the presence of contamination. The sample mean loses its efficiency and becomes very biased. The results are given at the bottom of Table A, which shows that the
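A minimal sketch of this comparison, assuming a hypothetical contamination in which 50 of 1000 observations come from a Poisson distribution with mean 20 (the contamination model used for Table A is not reproduced here). The SMHD estimator minimizes the simulated Hellinger distance over a grid of lambda values, with the uniforms fixed once so that the same seed is used for every lambda; grid search and all names are our own choices.

```python
import numpy as np
from math import lgamma

def poisson_from_uniforms(u, lam):
    """Poisson draws by inverting the cdf at fixed uniforms u (same seed for every lam)."""
    top = int(lam + 12.0 * np.sqrt(lam) + 20.0)        # truncation point of the support
    k = np.arange(top + 1)
    cdf = np.cumsum(np.exp(-lam + k * np.log(lam) - np.array([lgamma(i + 1) for i in k])))
    return np.searchsorted(cdf, u, side="left")         # smallest k with F(k) >= u

def smhd_poisson(x, tau=20, seed=0, grid_size=400):
    """Grid-search minimizer of sum_x (sqrt(f_n(x)) - sqrt(f_U(x; lam)))^2, U = tau * n."""
    x = np.asarray(x)
    u = np.random.default_rng(seed).uniform(size=tau * len(x))
    grid = np.linspace(0.05, x.max() + 1.0, grid_size)
    best, best_val = grid[0], np.inf
    for lam in grid:
        y = poisson_from_uniforms(u, lam)
        size = int(max(x.max(), y.max())) + 1
        fx = np.bincount(x, minlength=size) / len(x)
        fy = np.bincount(y, minlength=size) / len(y)
        val = np.sum((np.sqrt(fx) - np.sqrt(fy)) ** 2)
        if val < best_val:
            best, best_val = lam, val
    return float(best)

# Hypothetical contamination: 950 Poisson(2) counts plus 50 Poisson(20) outliers.
rng = np.random.default_rng(2018)
x = np.concatenate([rng.poisson(2.0, size=950), rng.poisson(20.0, size=50)])
lam_ml = x.mean()          # ML estimator under the Poisson model
lam_smhd = smhd_poisson(x)
```

The outliers fall in cells where the fitted Poisson(2) model, and hence the simulated sample, has essentially no mass, so they barely affect the Hellinger objective, whereas they pull the sample mean toward 2.9.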

5. Conclusion

More simulation experiments are needed to further study the performance of the SMHD estimators versus commonly used estimators across various parametric models; we do not have the computing facilities to carry out such large-scale studies, and most of the computations were carried out on a single laptop computer. So far, the simulation results confirm the theoretical asymptotic results, which show that SMHD estimators can have high efficiency for parametric models with finite Fisher information matrices and are robust when the data are contaminated; the latter feature might not be shared by ML estimators.

Acknowledgements

The help received from the editorial staff of OJS, which led to an improved presentation of the paper, is gratefully acknowledged.

Cite this paper

Luong, A., Bilodeau, C. and Blier-Wong, C. (2018) Simulated Minimum Hellinger Distance Inference Methods for Count Data. Open Journal of Statistics, 8, 187-219. https://doi.org/10.4236/ojs.2018.81012

References

- 1. Christoph, G. and Schreiber, K. (1998) Discrete Stable Random Variables. Statistics and Probability Letters, 37, 243-247. https://doi.org/10.1016/S0167-7152(97)00123-5

- 2. Abate, J. and Whitt, W. (1996) An Operational Calculus for Probability Distributions via Laplace Transforms. Advances in Applied Probability, 28, 75-113. https://doi.org/10.2307/1427914

- 3. Panjer, H. and Willmot, G.E. (1992) Insurance Risk Models. Society of Actuaries, Chicago, IL.

- 4. Klugman, S.A., Panjer, H.H. and Willmot, G.E. (2012) Loss Models: From Data to Decisions. Wiley, New York.

- 5. Gerber, H.U. (1991) From the Generalized Gamma to the Generalized Negative Binomial Distribution. Insurance: Mathematics and Economics, 10, 303-309. https://doi.org/10.1016/0167-6687(92)90061-F

- 6. Hougaard, P. (1986) Survival Models for Heterogeneous Populations Derived from Stable Distributions. Biometrika, 73, 387-396. https://doi.org/10.1093/biomet/73.2.387

- 7. Zhu, R. and Joe, H. (2009) Modelling Heavy Tailed Count Data Using a Generalized Poisson Inverse Gaussian Family. Statistics and Probability Letters, 79, 1695-1703. https://doi.org/10.1016/j.spl.2009.04.011

- 8. Johnson, N.L., Kotz, S. and Kemp, A.W. (1992) Univariate Discrete Distributions. 2nd Edition, Wiley, New York.

- 9. Doray, L.G., Jiang, S.M. and Luong, A. (2009) Some Simple Methods of Estimation for the Parameters of the Discrete Stable Distributions with Probability Generating Functions. Communications in Statistics, Simulation and Computation, 38, 2004-2007. https://doi.org/10.1080/03610910903202089

- 10. Carrasco, M. and Florens, J.-P. (2000) Generalization of GMM to a Continuum Moments Conditions. Econometric Theory, 16, 797-834. https://doi.org/10.1017/S0266466600166010

- 11. Feuerverger, A. and McDunnough, P. (1981) On the Efficiency of Empirical Characteristic Function Procedure. Journal of the Royal Statistical Society, Series B, 43, 20-27.

- 12. Nadarajah, S. and Kotz, S. (2006a) Compound Mixed Poisson Distribution I. Scandinavian Actuarial Journal, 2006, 141-162. https://doi.org/10.1080/03461230600783384

- 13. Nadarajah, S. and Kotz, S. (2006b) Compound Mixed Poisson Distribution II. Scandinavian Actuarial Journal, 2006, 163-181. https://doi.org/10.1080/03461230600715253

- 14. Simpson, D.G. (1987) Minimum Hellinger Distance Estimation for the Analysis of Count Data. Journal of the American Statistical Association, 82, 802-807. https://doi.org/10.1080/01621459.1987.10478501

- 15. Simpson, D.G. (1989) Hellinger Deviance Tests: Efficiency, Breakdown Points and Examples. Journal of the American Statistical Association, 84, 107-113. https://doi.org/10.1080/01621459.1989.10478744

- 16. Pakes, A. and Pollard, D. (1989) Simulation and the Asymptotics of Optimization Estimators. Econometrica, 57, 1027-1057. https://doi.org/10.2307/1913622

- 17. Newey, W.K. and McFadden, D. (1994) Large Sample Estimation and Hypothesis Testing. In: Engle, R.F. and McFadden, D., Eds., Handbook of Econometrics, Vol. 4, North Holland, Amsterdam.

- 18. Davidson, R. and MacKinnon, J.G. (2004) Econometric Theory and Methods. Oxford University Press, Oxford.

- 19. Cressie, N. and Read, T. (1984) Multinomial Goodness of Fit Tests. Journal of the Royal Statistical Society, Series B, 46, 440-464.

- 20. Hogg, R.V., McKean, J.W. and Craig, A.T. (2013) Introduction to Mathematical Statistics. 7th Edition, Pearson, Boston, MA.

- 21. Kloke, J. and McKean, J.W. (2015) Nonparametric Statistical Methods Using R. CRC Press, New York.

- 22. Maronna, R.A., Martin, R.D. and Yohai, V.G. (2006) Robust Statistics: Theory and Methods. Wiley, New York. https://doi.org/10.1002/0470010940

- 23. Beran, R. (1977) Minimum Hellinger Distance Estimates for Parametric Models. Annals of Statistics, 5, 445-463. https://doi.org/10.1214/aos/1176343842

- 24. Lindsay, B.G. (1994) Efficiency versus Robustness: The Case for Minimum Hellinger Distance and Related Methods. Annals of Statistics, 22, 1081-1114. https://doi.org/10.1214/aos/1176325512

- 25. Gusak, D., Kukush, A., Kulik, A., Mishura, Y. and Pilipenko, A. (2010) Theory of Stochastic Processes with Applications to Financial Mathematics and Risk Theory. Springer, New York.

- 26. Pakes, A. (1986) Patents as Options: Some Estimates of the Value of Holding European Patent Stocks. Econometrica, 54, 755-784. https://doi.org/10.2307/1912835

- 27. Keener, R.W. (2016) Theoretical Statistics. Springer, New York.

- 28. Davidson, K.R. and Donsig, A.P. (2009) Real Analysis and Applications. Springer, New York.

- 29. Rudin, W. (1976) Principles of Mathematical Analysis. McGraw-Hill, New York.

- 30. Luong, A. and Thompson, M.E. (1987) Minimum Distance Methods Based on Quadratic Distance for Transforms. Canadian Journal of Statistics, 15, 239-251. https://doi.org/10.2307/3314914

- 31. Greenwood, P.E. and Nikulin, M.S. (1996) A Guide to Chi-Squared Testing. Wiley, New York.

- 32. Lehmann, E.L. (1999) Elements of Large-Sample Theory. Springer, New York. https://doi.org/10.1007/b98855

- 33. Luong, A. (2016) Cramér-Von Mises Distance Estimation for Some Positive Infinitely Divisible Parametric Families with Actuarial Applications. Scandinavian Actuarial Journal, 2016, 530-549. https://doi.org/10.1080/03461238.2014.977817

- 34. Tsay, R.S. (2010) The Analysis of Financial Time Series. 3rd Edition, Wiley, New York. https://doi.org/10.1002/9780470644560

- 35. Butler, R.W. (2007) Saddlepoint Approximations with Applications. Cambridge University Press, Cambridge. https://doi.org/10.1017/CBO9780511619083

- 36. Abate, J. and Whitt, W. (1992) The Fourier-Series Method for Inverting Transforms of Probability Distributions. Queueing Systems, 10, 5-87. https://doi.org/10.1007/BF01158520

- 37. Devroye, L. (1993) A Triptych of Discrete Distributions Related to the Stable Laws. Statistics and Probability Letters, 18, 349-351. https://doi.org/10.1016/0167-7152(93)90027-G

Appendices

Technical Appendix 1 (TA1)

TA 1.1

In this technical appendix, we shall show that a sequence of random functions

TA 1.2

In this technical appendix, we shall show that the sequence of functions

The first two terms of the RHS of the above equation are bounded in probability since they have limiting distributions, and by the Cauchy-Schwarz inequality this implies that the third term is also bounded in probability. Now, using the conditions of Assumption 1 of Section 2.2.2 and, implicitly, the assumption that the same seed is used across different values of

and

From the above property, it is clear that

belongs to

The justifications for the ungrouped case use the same type of arguments together with Theorem 7.1 of Newey and McFadden [17]; they are given in TA2.

Technical Appendix 2 (TA2)

In this technical appendix we shall verify the condition

hence the use of the dominated convergence theorem (DCT) is justified. Therefore,

Now if we define