Journal of Signal and Information Processing

Vol. 3 No. 1 (2012) , Article ID: 17674 , 6 pages DOI:10.4236/jsip.2012.31011

A New Method for Fastening the Convergence of Immune Algorithms Using an Adaptive Mutation Approach

![]()

1Electrical and Electronics Engineering Department, Faculty of Engineering, Assiut University, Assiut, Egypt; 2Communication Engineering Department, Hijjawi Faculty for Engineering Technology, Yarmouk University, Irbid, Jordan.

Email: zahhad@yahoo.com

Received December 28th, 2010; revised November 29th, 2011; accepted December 12th, 2011

Keywords: Adaptive Mutation; Immune Algorithm; Convergence; Traditional Mutation

ABSTRACT

This paper presents a new adaptive mutation approach for fastening the convergence of immune algorithms (IAs). This method is adopted to realize the twin goals of maintaining diversity in the population and sustaining the convergence capacity of the IA. In this method, the mutation rate (pm) is adaptively varied depending on the fitness values of the solutions. Solutions of high fitness are protected, while solutions with sub-average fitness are totally disrupted. A solution to the problem of deciding the optimal value of pm is obtained. Experiments are carried out to compare the proposed approach to traditional one on a set of optimization problems. These are namely: 1) an exponential multi-variable function; 2) a rapidly varying multimodal function and 3) design of a second order 2-D narrow band recursive LPF. Simulation results show that the proposed method efficiently improves IA’s performance and prevents it from getting stuck at a local optimum.

1. Introduction

Finding an appropriate set of parameter values for evolutionary algorithms (EAs) has been a longstanding major challenge of the evolutionary computation community. Such difficulty has directed researchers’ attention towards devising an automated ways of controlling EAs parameters [1]. Immune Algorithm (IA) is one of recently EAs that based on the mechanism of the amalgamation between antigen and antibody in biologic immune system [2]. IAs have been used widely and successfully in many computational intelligence areas including medical data processing, biomedical and satellite image processing, spectrum analysis and design of digital filters [3-6]. The performance of IAs depends on many factors, such as selection schemes and control parameters. In spite of the research carried out up to date, there are no general rules on how the control parameters of IA can be selected. In literature [5-8], the choice these parameters is still left to the user to be determined statically prior to the execution of the IA. Mutation rate (pm) is considered to be one of the most sensitive control parameters that an immune algorithm works with. This is due to the fact that it increases the diversity in population. The choice of mutation rate is essentially a tradeoff between conservatism and exploration [9].

In this paper we investigate an efficient technique for multi-modal function optimization using IAs. We recommend the use of adaptive probability of mutation to realize the twin goals of maintaining diversity in the population and sustaining the convergence capacity of the IA. In adaptive mutation approach, the value of  is varied depending on the fitness values of the solutions. The fitness values of the solution in relation to an objective function to be optimized. During each generation, the fitness of each solution is evaluated, and solutions are selected for reproduction based on their fitness. Selection embodies the principle of survival of the fittest. Good solutions are selected for reproduction while bad solutions are eliminated. The goodness of a solution is determined from its fitness value. The adaptation probability of mutation provides a solution to the problem of choosing the optimal value of the probability of mutation in IA.

is varied depending on the fitness values of the solutions. The fitness values of the solution in relation to an objective function to be optimized. During each generation, the fitness of each solution is evaluated, and solutions are selected for reproduction based on their fitness. Selection embodies the principle of survival of the fittest. Good solutions are selected for reproduction while bad solutions are eliminated. The goodness of a solution is determined from its fitness value. The adaptation probability of mutation provides a solution to the problem of choosing the optimal value of the probability of mutation in IA.

The paper is organized as follows. Section 2 describes definition of the optimization problem. The proposed method for fastening the convergence of IA is described in Section 3. Section 4 presents the three experiments that we have conducted to compare the performance of the adaptive and traditional mutation approaches on IA. Section 5 shows the results and discussion of the experiments. Finally, Section 6 offers some conclusions.

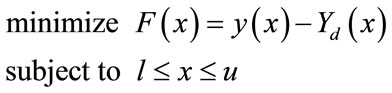

2. Problem Definition

The global optimization problem is considered as [10, 11]:

(1)

(1)

where,  is the objective function,

is the objective function,  is the optimizing function and

is the optimizing function and  is the desired function. The vector

is the desired function. The vector  is a variable vector and

is a variable vector and  and

and  define the feasible solution space. Since the IAs mimic the antigen-antibody reaction of the immune system in mammals. The antigen and the antibody in the IA are equivalent to the objective function and the feasible solution for a conventional optimization method, respectively. The antibodies (chromosomes) population is generated either by using binary coding representation or real coding representation. In the binary coding representation, each variable (gene) is encoded as a binary string and the resulting strings are concatenated to form single antibody [12]. However, in the real coding representation, each antibody is encoded as a vector of floating point numbers (i.e.

define the feasible solution space. Since the IAs mimic the antigen-antibody reaction of the immune system in mammals. The antigen and the antibody in the IA are equivalent to the objective function and the feasible solution for a conventional optimization method, respectively. The antibodies (chromosomes) population is generated either by using binary coding representation or real coding representation. In the binary coding representation, each variable (gene) is encoded as a binary string and the resulting strings are concatenated to form single antibody [12]. However, in the real coding representation, each antibody is encoded as a vector of floating point numbers (i.e. , where

, where  is a random value), with the same length as the vector of decision variables. This representation is accurate and efficient because it is closest to the real design space, and the string length represents the number of design variables. The hyper-mutation mechanism of the clonal proliferation and mutation mechanism play a critical role in creating diverse antibodies based on minimization of the difference between the optimizing and desired functions. The diversity is measured between the antibodies, and it is increased to prevent local optimization during the optimal search [6].

is a random value), with the same length as the vector of decision variables. This representation is accurate and efficient because it is closest to the real design space, and the string length represents the number of design variables. The hyper-mutation mechanism of the clonal proliferation and mutation mechanism play a critical role in creating diverse antibodies based on minimization of the difference between the optimizing and desired functions. The diversity is measured between the antibodies, and it is increased to prevent local optimization during the optimal search [6].

In optimizing functions, it is important that the IA should be able to converge to the optimal value in as few generations as possible. The convergence speed heavily depends on its main control parameters: population size, replication rate, mutation rate, clonal rate and hypermutation rate [13]. Mutation rate is considered to be one of the most sensitive control parameters that an immune algorithm works with, because it increases the diversity in population to prevent the solution to get stuck at a local optimal value during the optimization search. So, the adaptive mutation approach is considered here.

3. Adaptive Mutation Approach

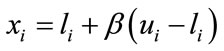

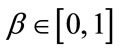

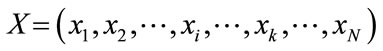

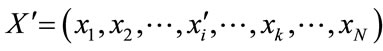

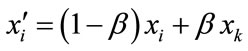

Mutation alters one or more genes (unknown variables) depending on the mutation rate. For a given antibody , if the gene xi is selected for mutation depending on mutation rate

, if the gene xi is selected for mutation depending on mutation rate  and the other xk is randomly selected to join in, the resulting offspring antibody becomes

and the other xk is randomly selected to join in, the resulting offspring antibody becomes , where the new gene

, where the new gene  is

is  and β is selected randomly in the range [0,1]. The choice of

and β is selected randomly in the range [0,1]. The choice of  is essentially a tradeoff between conservatism and exploration [9]. It has been well established in IA literature [8] that when the value of

is essentially a tradeoff between conservatism and exploration [9]. It has been well established in IA literature [8] that when the value of  is selected nearby the range of [0.02, 0.05], the time spent on searching is relatively short and the searching process is stable as well as the search efficiency is better [13]. In the proposed approach, we aim at achieving this trade-off between conservatism and exploration in a different manner, by varying the

is selected nearby the range of [0.02, 0.05], the time spent on searching is relatively short and the searching process is stable as well as the search efficiency is better [13]. In the proposed approach, we aim at achieving this trade-off between conservatism and exploration in a different manner, by varying the  adaptively in response to the fitness values of the solutions;

adaptively in response to the fitness values of the solutions;  is increased when the population tends to get stuck at a local optimum and is decreased when the population is scattered in the solution space. So, it is essential to be able to identify whether the IA is converging to an optimum solutions or it diverges. The average of fitness value (

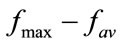

is increased when the population tends to get stuck at a local optimum and is decreased when the population is scattered in the solution space. So, it is essential to be able to identify whether the IA is converging to an optimum solutions or it diverges. The average of fitness value ( ) of the population in relation to maximum fitness value (

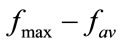

) of the population in relation to maximum fitness value (![]() ) of the population is used to detect the conversance of IA.

) of the population is used to detect the conversance of IA.  is likely to be less for a population that has converged to an optimum solution than that for a population scattered in the solution space. Therefore the difference in the maximum and average fitness values (

is likely to be less for a population that has converged to an optimum solution than that for a population scattered in the solution space. Therefore the difference in the maximum and average fitness values ( ) is used as a yardstick for detecting the convergence of IA. The value of

) is used as a yardstick for detecting the convergence of IA. The value of  is varied depending on the value of

is varied depending on the value of  to prevent premature convergence of the IA to a local optimum. Since

to prevent premature convergence of the IA to a local optimum. Since  has to be increased when the IA converge to a local optimum,

has to be increased when the IA converge to a local optimum,  will have to be varied inversely with

will have to be varied inversely with . The expression that we have chosen for

. The expression that we have chosen for  is of the form:

is of the form:

(2)

(2)

From Equation (2), it can be noticed that  do not depend on the fitness value of any particular solution, and have the same value for all the solutions of the population. Consequently, solutions with high fitness values as well as solutions with low fitness values are subjected to the same levels of mutation. When a population converges to a globally optimal solution (or even a locally optimal solution),

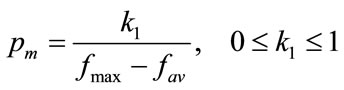

do not depend on the fitness value of any particular solution, and have the same value for all the solutions of the population. Consequently, solutions with high fitness values as well as solutions with low fitness values are subjected to the same levels of mutation. When a population converges to a globally optimal solution (or even a locally optimal solution),  increases and may cause the disruption of the near-optimal solutions. To overcome this problem and to preserve good solutions of the population

increases and may cause the disruption of the near-optimal solutions. To overcome this problem and to preserve good solutions of the population  should have lower value for high fitness solutions and higher value for low fitness solutions. While the high fitness solutions aid in the convergence of the IA, the low fitness solutions prevent the IA from getting stuck at a local optimum. The value of

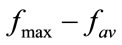

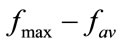

should have lower value for high fitness solutions and higher value for low fitness solutions. While the high fitness solutions aid in the convergence of the IA, the low fitness solutions prevent the IA from getting stuck at a local optimum. The value of  should depend not only on

should depend not only on , but also on the fitness value f of the solution. The closer f is to

, but also on the fitness value f of the solution. The closer f is to![]() , the smaller

, the smaller  should be, i.e.,

should be, i.e.,  should vary directly as

should vary directly as . So, the expression in Equation (2) is modified to become:

. So, the expression in Equation (2) is modified to become:

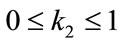

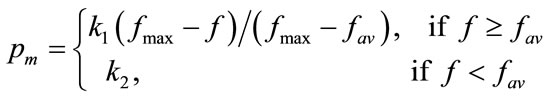

(3)

(3)

Note that  is zero for the solution with the maximum fitness. Also

is zero for the solution with the maximum fitness. Also  for a solution with

for a solution with . For solutions with sub-average fitness values (i.e. f < fav),

. For solutions with sub-average fitness values (i.e. f < fav),  might assume value larger than 1.0. To prevent the overshooting of

might assume value larger than 1.0. To prevent the overshooting of  beyond 1.0, we also have the following constraints,

beyond 1.0, we also have the following constraints,

(4)

(4)

where,

For convenience, the expression for  is given as:

is given as:

(5)

(5)

The values of  and

and  are in the range [0,1] and are selected to prevent the IA from getting stuck at a local optimum. For solutions with sub-average fitness the search space is searched for the region containing the global optimum solution. Such solutions need to be completely disrupted, and for this purpose the value of

are in the range [0,1] and are selected to prevent the IA from getting stuck at a local optimum. For solutions with sub-average fitness the search space is searched for the region containing the global optimum solution. Such solutions need to be completely disrupted, and for this purpose the value of  is selected to be 0.5. But, the value of

is selected to be 0.5. But, the value of  is selected by 1/L as state in [14], where L is the encoding string length.

is selected by 1/L as state in [14], where L is the encoding string length.

4. Experiments

This section presents the three experiments that we have conducted to compare the performance of the adaptive and traditional mutation approaches on IA. For this purpose we have employed three test problems with varying complexities.

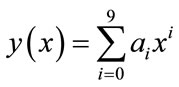

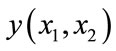

Experiment 1: This experiment considers the optimization of the exponential function shown in Figure 1 and described by the following Equation [13]:

(6)

(6)

with the following desired specified values  at x=

at x= .

.

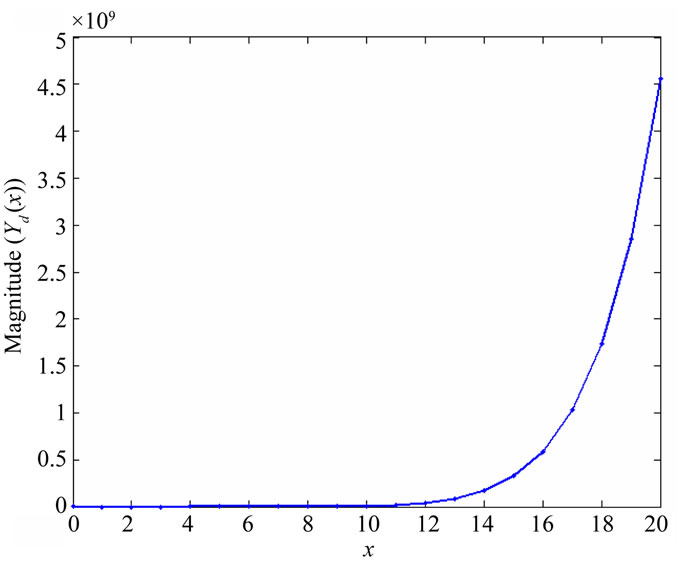

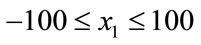

Experiment 2: This experiment considers the rapidly varying multimodal function of two variables described by Equation (7). This function is symmetric about the origin with the barrier height between adjacent minima approaching zero as the global optimum is approached and is considered in [15] to test the performance of adaptive genetic algorithm. Figure 2 illustrates the function  in biand uni-dimensional plots for

in biand uni-dimensional plots for

Figure 1 Desired specifications of the function y(x) (experiment 1).

(a)

(a) (b)

(b)

Figure 2. The function y(x1,x2) (experiment 2). (a) Bi-dimensional plot; (b) Uni-dimensional plot.

and

and .

.

(7)

(7)

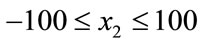

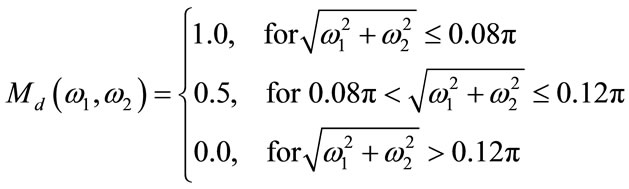

Experiment 3: This experiment considers the design of a second order 2-D narrow-band recursive LPF with magnitude and group delay specifications. The specified magnitude  is shown in Figure 3 [7,13]. Namely, it is given by Equation (8) with the additional constant group delay

is shown in Figure 3 [7,13]. Namely, it is given by Equation (8) with the additional constant group delay  over the passband

over the passband

![]() and the design space is [-3 3]. To solve this problem, the frequency samples are taken at

and the design space is [-3 3]. To solve this problem, the frequency samples are taken at  in the ranges

in the ranges  , and

, and .

.

(8)

(8)

5. Results and Discussion

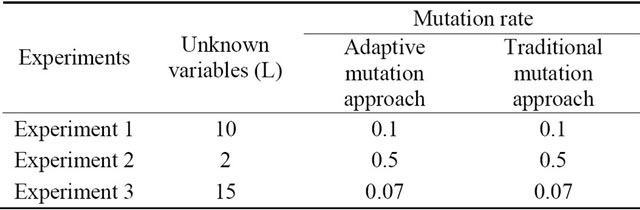

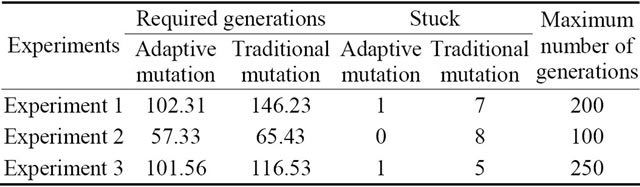

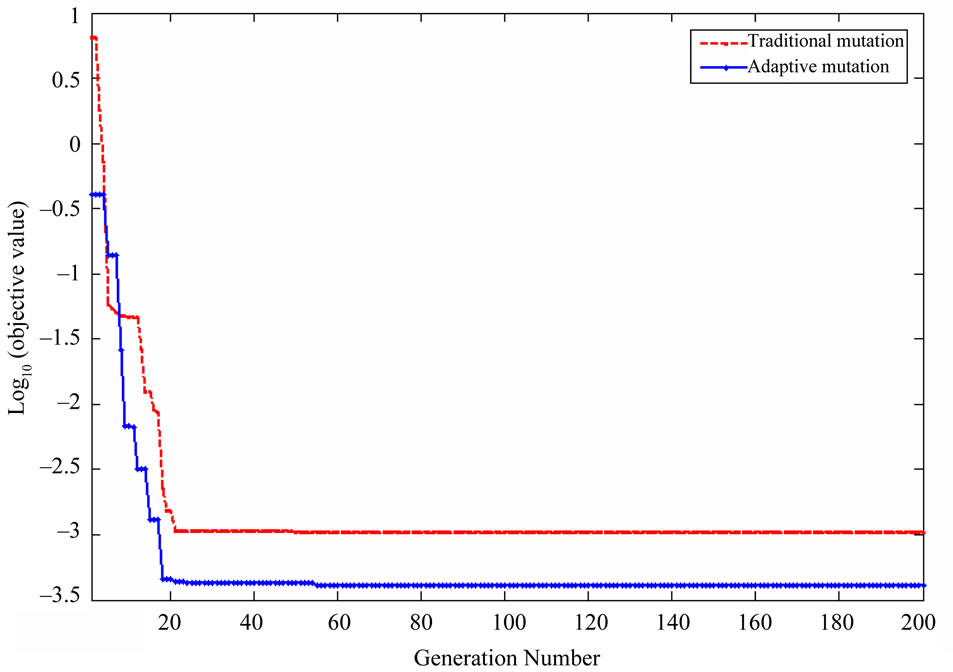

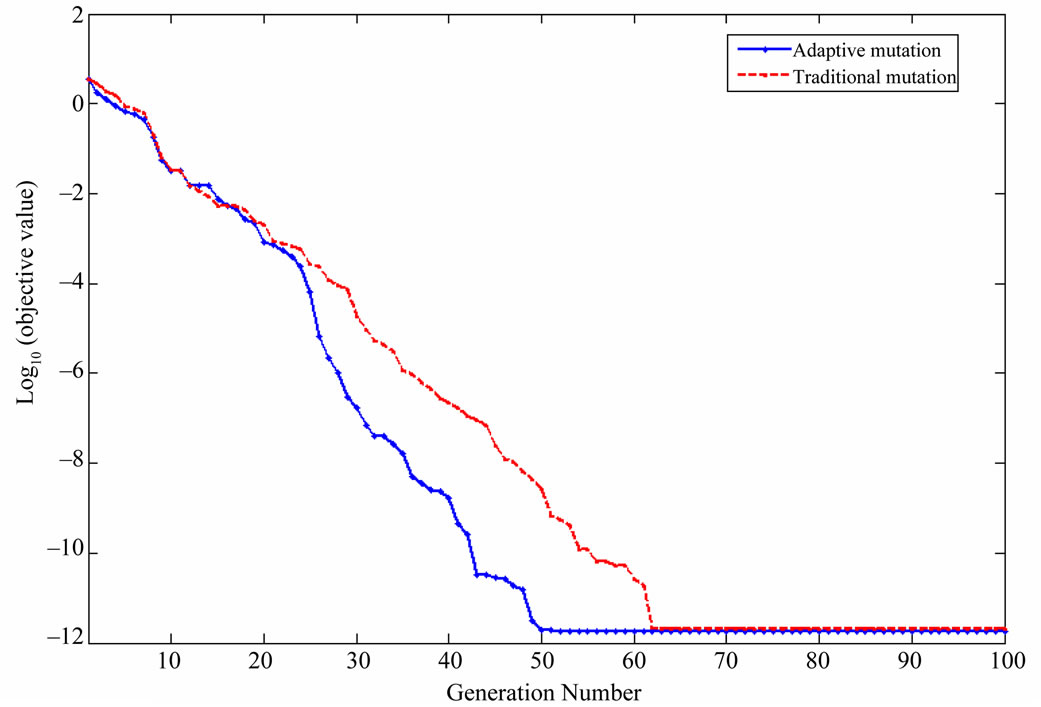

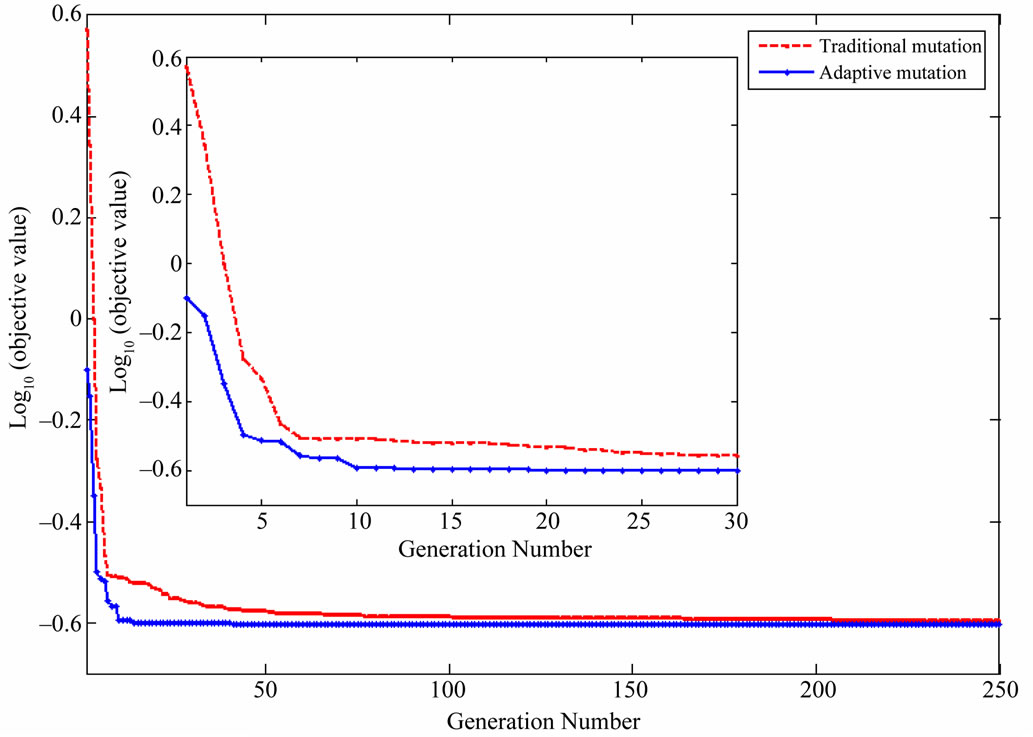

For the three experiments [16] considered, the program implementation for each chosen approach is run 30 times. The population size is set to 100 for all experiments and the values of mutation rate for all experiments are given in Table 1. Table 2 gives the average number of generations required by each approach for attaining a solution, the number of instances (out of 30 trials) for which the IA have gotten stuck at a local optimum, and the maximum number of generations. Figures 4, 5 and 6 show the objective value of the three experiments respectively that obtained using adaptive and traditional muta-

Figure 3. Desired amplitude response  of the 2-D narrow-band LPF (experiment 3).

of the 2-D narrow-band LPF (experiment 3).

Table 1. The values of mutation rate for all experiments.

Table 2. Comparison between adaptive and traditional mutation approaches for IA.

Figure 4. The objective values of experiment 1.

Figure 5. The objective values of experiment 2.

Figure 6. The objective values of experiment 3.

tion approaches. From Table 2 and Figures 4, 5 and 6 can be noticed that the adaptive mutation approach improves the performance of the IA and give better results than traditional mutation approach.

6. Conclusion

In this paper, we adopt an efficient adaptive mutation approach to provide a solution to the problem of finding the optimal value of pm for immune algorithms. In this approach, the value of pm is varied depending on the fitness values of the solutions. The proposed approach decreases the value of pm for high fitness solutions to sustain the convergence capacity of the IA and increases the value of pm for low fitness solutions to maintain diversity in the population. This method not only improves the convergence of IA, but also prevents the IA from getting stuck at a local optimum. Simulation results show that the proposed mutation approach efficiently improves IA’s performance.

REFERENCES

- F. Vafaee and P. C. Nelson, “A Genetic Algorithm That Incorporates an Adaptive Mutation Based on an Evolutionary Model,” International Conference on Machine Learning and Applications, Miami Beach, 13-15 December 2009, pp. 101-107. doi:10.1109/ICMLA.2009.101

- W. Zhang and Y. Liu, “Reactive Power Optimization Based on PSO in a practical Power System,” Proceedings of the International Multi-Conference of Engineers and Computer Scientists, Vol. 2, 2008.

- M. Abo-Zahhad, S. M. Ahmed, N. Sabor and A. F. AlAjlouni, “Design of Two-Dimensional Recursive Digital Filters with Specified Magnitude and Group Delay Characteristics Using Taguchi-based Immune Algorithm,” International Journal of Signal and Imaging Systems Engineering, Vol. 3, No. 3, 2010, pp. 222-235. doi:10.1504/IJSISE.2010.038018

- V. Cutello, G. Nicosia, M. Romeo and P. S. Oliveto, “On the Convergence of Immune Algorithm,” IEEE Symposium on Foundations of Computational Intelligence, Honolulu, 1-5 April 2007, pp. 409-415.

- J. T. Tsai, W. H. Ho, T. K. Liu and J. H. Chou, “Improved Immune Algorithm for Global Numerical Optimization and Job-Shop Scheduling Problems,” Applied Mathematics and Computation, Vol. 194, No. 2, 2007, pp. 406-424. doi:10.1016/j.amc.2007.04.038

- J. T. Tsai and J. H. Chou, “Design of Optimal Digital IIR Filters by Using an Improved Immune Algorithm,” IEEE Transactions on Signal Processing, Vol. 54, No. 12, 2006, pp. 4582-4596. doi:10.1109/TSP.2006.881248

- J. T. Tsai, W. H. Ho and J. H. Chou, “Design of Two-Dimensional Recursive Filters by Using Taguchi Immune Algorithm,” IET Signal Process, Vol. 2, No. 2, 2008, pp. 110-117.

- G. Zilong, W. Sun’an and Z. Jian, “A Novel Immune Evolutionary Algorithm Incorporating Chaos Optimization,” Pattern Recognition Letter, Vol. 27, No. 1, 2006, pp. 2-8. doi:10.1016/j.patrec.2005.06.014

- R. Myers and E. R. Hancock, “Genetic Algorithm Parameter Sets for Line Labelling,” Pattern Recognition Letters, Vol. 1827, No. 11, 1997, pp. 1363-1371. doi:10.1016/S0167-8655(97)00111-6

- R. Danchick, “Accurate Numerical Partials with Applications to Optimization,” Applied Mathematics and Computation, Vol. 183, No. 1, 2006, pp. 551-558. doi:10.1016/j.amc.2006.05.083

- J. T. Tsai, T. K. Liu and J. H. Chou, “Hybrid TaguchiGenetic Algorithm for Global Numerical Optimization,” IEEE Transactions on Evolutionary Computation, Vol. 8, No. 4, 2004, pp. 365-377. doi:10.1109/TEVC.2004.826895

- Z. Michalewiz, “Genetic Algorithm and Data Structure,” 3rd Edition, Springer-Verlag, Berlin, 1996.

- M. Abo-Zahhad, S. M Ahmed, N. Sabor and A. F. AlAjlouni, “The Convergence Speed of Single-And MultiObjective Immune Algorithm Based Optimization Problems,” Signal Processing: An International Journal (SPIJ), Vol. 4, No. 5, 2010, pp. 247-266.

- G. Ochoa, “Setting the Mutation Rate: Scope and Limitations of the 1/L Heuristic,” Proceedings of the Genetic and Evolutionary Computation Conference, Morgan Kaufmann, 9-13 July 2002, pp. 315-322.

- M. Srinivas and L. M. Patnaik, “Adaptive Probabilities of Crossover and Mutation in Genetic Algorithms,” IEEE Transactions on Systems, Vol. 24, No. 4, 1994, pp. 656- 667.

- M. Abo-Zahhad, S. M. Ahmed, N. Sabor and A. F. AlAjlouni, “Digital Filters Design Educational Software Based on Immune, Genetic and Quasi-Newton Line Search Algorithms,” International Journal of Innovation and Learning, Vol. 9, No. 1, 2011, pp. 35-62. doi:10.1504/IJIL.2011.037191