Open Journal of Statistics

Vol. 06, No. 04 (2016), Article ID: 70070, 10 pages

10.4236/ojs.2016.64057

CBPS-Based Inference in Nonlinear Regression Models with Missing Data

Donglin Guo1,2*, Liugen Xue1, Haiqing Chen1

1 College of Applied Sciences, Beijing University of Technology, Beijing, China

2 School of Mathematics and Information Science, Shangqiu Normal University, Shangqiu, China

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 20 June 2016; accepted 22 August 2016; published 25 August 2016

ABSTRACT

In this article, we study nonlinear regression models with missing responses, with the aim of improving the doubly robust estimator. Based on the covariate balancing propensity score (CBPS), we obtain estimators for the regression coefficients and the population mean and prove that they are asymptotically normal. In simulation studies, the proposed estimators perform better than the usual augmented inverse probability weighted estimators.

Keywords:

Nonlinear Regression Model, Missing at Random, Covariate Balancing Propensity Score, GMM, Augmented Inverse Probability Weighted

1. Introduction

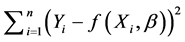

Consider the nonlinear regression model

$$Y = g(X, \beta) + \varepsilon, \qquad (1)$$

where $Y$ is a scalar response variate, $X$ is a $d \times 1$ vector of covariates, $\beta$ is a $p \times 1$ vector of unknown regression parameters, $g(\cdot, \cdot)$ is a known function that is nonlinear with respect to $\beta$, and $\varepsilon$ is a random statistical error with $E(\varepsilon \mid X) = 0$. In general, $d$ is different from $p$. The model has been studied by many authors, such as Jennrich [1], Wu [2], and Crainiceanu and Ruppert [3].

Missing data are frequently encountered in statistical studies, and ignoring them can lead to biased estimation and misleading conclusions. Inverse probability weighting (Horvitz and Thompson [4]) and imputation are the two main methods for dealing with missing data. Since Scharfstein et al. [5] noted that the augmented inverse probability weighted (AIPW) estimator of Robins et al. [6] is doubly robust, many estimators with the double robustness property have been proposed; see Tan [7], Kang and Schafer [8], and Cao et al. [9]. An estimator is doubly robust in the sense that it is consistent if either the outcome regression model or the propensity score model is correctly specified. AIPW estimators have been advocated for routine use (Bang and Robins [10]). For model (1), in the absence of missing data, the weighted least squares estimator of $\beta$ can be obtained by minimizing the corresponding weighted sum of squared residuals. In the presence of missing data, this method cannot be applied directly, so we use the AIPW method to study model (1).
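To make model (1) and the missing-response setting concrete, the following minimal sketch simulates data in which a scalar covariate X is always observed and the response Y is observed only when an indicator δ equals 1, with P(δ = 1 | X, Y) depending on X alone (missing at random). The mean function g(x, β) = β0 exp(β1 x), the uniform covariate, the Gaussian errors and the logistic missingness model are all hypothetical choices made for illustration; they are not taken from the paper.

```python
import numpy as np

def expit(t):
    """Logistic function 1 / (1 + exp(-t))."""
    return 1.0 / (1.0 + np.exp(-t))

def g(x, beta):
    """Hypothetical nonlinear mean function g(x, beta) = beta0 * exp(beta1 * x)."""
    return beta[0] * np.exp(beta[1] * x)

def simulate(n, beta, gamma, sigma=0.5, seed=0):
    """Draw (X_i, Y_i, delta_i), i = 1, ..., n, with Y missing at random given X."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 1.0, size=n)                  # covariate, always observed
    y = g(x, beta) + rng.normal(0.0, sigma, size=n)    # mean-zero errors independent of x
    pi = expit(gamma[0] + gamma[1] * x)                 # P(delta = 1 | X): does not involve Y
    delta = rng.binomial(1, pi)                         # 1 = observed, 0 = missing
    y_obs = np.where(delta == 1, y, np.nan)             # missing responses stored as NaN
    return x, y_obs, delta, pi

x, y_obs, delta, pi = simulate(1000, beta=(1.0, 1.5), gamma=(0.5, 1.0))
print(f"observed fraction: {delta.mean():.3f}")
```

Storing missing responses as NaN makes it hard to use them by accident: any estimator that touches an unobserved Y without weighting it by δ will propagate NaN.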

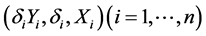

Throughout this paper, we assume that the $X$'s are completely observed and that $Y$ is missing at random (MAR; Rubin [11]). Thus, the data actually observed are independent and identically distributed $\{(X_i, Y_i, \delta_i),\ i = 1, \ldots, n\}$, where $\delta_i = 1$ indicates that $Y_i$ is observed and $\delta_i = 0$ indicates that $Y_i$ is missing.

If

In this paper, we construct estimators for

The rest of this paper is organized as follows. In Section 2, based on the CBPS and the AIPW methods, the estimators for the regression parameter

2. Construction of Estimators

The most popular choice of

where

2.1. CBPS-Based Estimator for the Propensity Score

Based on

Assuming that

where

Equation (5) ensures that the first moment of each covariate is balanced, and the weights based on the CBPS are robust even when the propensity score model is misspecified. The key idea behind the CBPS is that the propensity score model simultaneously determines the missingness mechanism and the covariate balancing weights; see Imai and Ratkovic [17]. The sample analogue of the covariate balancing moment condition given in Equation (5) is

According to Imai and Ratkovic [17], the CBPS is said to be just-identified when the number of moment conditions equals the number of parameters. If we use only the covariate balancing conditions given in Equation (6), the CBPS is just-identified. If we combine Equation (6) with the score condition given in Equation (4), the CBPS is overidentified because the number of moment conditions exceeds the number of parameters.
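The two CBPS variants can be sketched as GMM problems. The sketch below assumes a logistic propensity model π(X; γ) = expit(X̃ᵀγ), with X̃ the covariates plus an intercept, and uses the balancing moments (δ_i/π_i − 1)X̃_i and the logistic score moments (δ_i − π_i)X̃_i; because the paper's Equations (4)-(6) are not reproduced above, these forms are assumptions following the usual CBPS construction for missing responses. In the just-identified case the moment equations are solved exactly (damped Newton steps); in the overidentified case a two-step GMM estimator (Hansen [20]) is used, with the inverse sample covariance of the moments as second-step weight. That weight choice is an assumption; Imai and Ratkovic [17] use a model-based covariance instead.

```python
import numpy as np

def expit(t):
    return 1.0 / (1.0 + np.exp(-t))

def moments(gamma, Xt, delta, overidentified):
    """Per-observation moment contributions: balancing only, or score + balancing."""
    pi = expit(Xt @ gamma)
    balance = (delta / pi - 1.0)[:, None] * Xt            # assumed balancing condition
    if not overidentified:
        return balance
    score = (delta - pi)[:, None] * Xt                    # logistic score condition
    return np.hstack([score, balance])

def jacobian(gamma, Xt, delta, overidentified):
    """Derivative of the averaged moments with respect to gamma."""
    n = Xt.shape[0]
    eta = Xt @ gamma
    pi = expit(eta)
    # 1/pi = 1 + exp(-eta), so d(delta/pi)/d(gamma) = -delta * exp(-eta) * Xt
    d_balance = -(Xt * (delta * np.exp(-eta))[:, None]).T @ Xt / n
    if not overidentified:
        return d_balance
    d_score = -(Xt * (pi * (1.0 - pi))[:, None]).T @ Xt / n
    return np.vstack([d_score, d_balance])

def gmm(Xt, delta, W, gamma, overidentified, max_iter=200, tol=1e-10):
    """Minimise Q(gamma) = gbar' W gbar with damped Gauss-Newton steps."""
    def Q(gm):
        gbar = moments(gm, Xt, delta, overidentified).mean(axis=0)
        return gbar @ W @ gbar
    for _ in range(max_iter):
        gbar = moments(gamma, Xt, delta, overidentified).mean(axis=0)
        G = jacobian(gamma, Xt, delta, overidentified)
        step = -np.linalg.solve(G.T @ W @ G, G.T @ W @ gbar)
        t = 1.0
        # Halve the step until Q does not increase (a NaN value also triggers halving).
        while not (Q(gamma + t * step) <= Q(gamma)) and t > 1e-8:
            t /= 2.0
        gamma = gamma + t * step
        if np.max(np.abs(t * step)) < tol:
            break
    return gamma

def cbps(Xt, delta, overidentified=False):
    """Just-identified (balancing only) or overidentified two-step GMM CBPS."""
    k = Xt.shape[1]
    n_mom = 2 * k if overidentified else k
    gamma = gmm(Xt, delta, np.eye(n_mom), np.zeros(k), overidentified)
    if overidentified:
        g = moments(gamma, Xt, delta, True)
        W = np.linalg.inv(g.T @ g / Xt.shape[0])        # second-step weight matrix
        gamma = gmm(Xt, delta, W, gamma, True)
    return gamma

# Usage on simulated data with a correctly specified logistic missingness model.
rng = np.random.default_rng(1)
n = 5000
x = rng.standard_normal(n)
Xt = np.column_stack([np.ones(n), x])                    # intercept plus covariate
delta = rng.binomial(1, expit(0.5 + 1.0 * x))
gamma_just = cbps(Xt, delta)                             # balancing conditions only
gamma_over = cbps(Xt, delta, overidentified=True)        # score + balancing conditions
print(gamma_just, gamma_over)
```

With a square Jacobian the Gauss-Newton step reduces to an ordinary Newton step, so one routine covers both cases: the just-identified fit drives the balancing moments to zero, while the overidentified fit can only minimise a weighted norm of all the moments.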

Combining Equation (6) with the score condition given in Equation (4), we obtain the following equation:

Let

It is easy to show that, under some regularity conditions,

Theorem 1. Suppose that

2.2. Estimator for the Regression Parameter

To apply the AIPW method, we borrow the idea of Seber and Wild [21] and define the least squares estimator of

where

where

evaluated at

we stop the above iterative algorithm and obtain the least squares estimator of

Although the complete case method is simple to implement, simply excluding the missing data may lead to misleading conclusions. In this section, we introduce an AIPW method based on the CBPS to overcome the problems of the complete case method.
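The iterative least squares algorithm in this section can be sketched as a Gauss-Newton iteration, which we assume is the method borrowed from Seber and Wild [21]. The sketch below minimises a weighted sum of squares, Σ w_i {Y_i − g(X_i, β)}². Weights w_i = δ_i give the complete case estimator, and weights w_i = δ_i/π_i give the inverse probability weighted part of an AIPW-type fit. The augmentation term of the authors' estimator is not reproduced here. The mean function g(x, β) = β0 exp(β1 x), the starting value and the stopping rule are hypothetical choices.

```python
import numpy as np

def g(x, beta):
    """Hypothetical mean function g(x, beta) = beta0 * exp(beta1 * x)."""
    return beta[0] * np.exp(beta[1] * x)

def g_grad(x, beta):
    """n x p matrix of partial derivatives of g with respect to beta."""
    e = np.exp(beta[1] * x)
    return np.column_stack([e, beta[0] * x * e])

def gauss_newton(x, y, w, beta0, tol=1e-10, max_iter=100):
    """Minimise sum_i w_i * (y_i - g(x_i, beta))**2 by Gauss-Newton iterations.

    w_i = delta_i gives the complete case estimator, and w_i = delta_i / pi_i gives
    an inverse probability weighted version. Responses with w_i = 0 (the missing
    ones) are ignored, so they may be stored as NaN.
    """
    y = np.where(w > 0, y, 0.0)                   # neutralise NaN for missing responses
    beta = np.asarray(beta0, dtype=float)
    for _ in range(max_iter):
        r = y - g(x, beta)                        # current residuals
        D = g_grad(x, beta)                       # derivatives evaluated at current beta
        step = np.linalg.solve(D.T @ (w[:, None] * D), D.T @ (w * r))
        beta = beta + step
        if np.linalg.norm(step) <= tol * (1.0 + np.linalg.norm(beta)):
            break                                 # update negligible: stop iterating
    return beta

# Usage on simulated data with responses missing at random given x.
rng = np.random.default_rng(2)
n = 2000
x = rng.uniform(0.0, 1.0, size=n)
y = g(x, (1.0, 1.5)) + rng.normal(0.0, 0.3, size=n)
pi = 1.0 / (1.0 + np.exp(-(0.5 + x)))
delta = rng.binomial(1, pi)
y[delta == 0] = np.nan
beta_cc = gauss_newton(x, y, delta.astype(float), beta0=(0.8, 1.2))
beta_ipw = gauss_newton(x, y, delta / pi, beta0=(0.8, 1.2))
print(beta_cc, beta_ipw)
```

Each iteration solves the weighted normal equations of the linearised model. In practice, π_i would be replaced by a fitted propensity score, for example a CBPS fit.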

Denote

under the MAR condition. Hence

where

The following Theorem 2 gives the asymptotic normality of

Theorem 2. Suppose that Assumptions (A1)-(A4) in the Appendix hold. Then we have

where

To apply Theorem 2 to construct the confidence region of

Therefore, we have

and

We can construct the confidence interval of

2.3. Estimator for the Response Mean

It is of interest to estimate the mean of Y, say

Under the MAR condition, we have

In the following theorem, we state the asymptotic properties of

Theorem 3. Under Assumptions (A1)-(A4) in the Appendix, we have

where

Borrowing the method of Xue [15] , we can obtain the following consistent estimator of V:

where

By Theorem 3, the normal approximation based confidence interval of
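A common form of the AIPW estimator of the response mean θ = E(Y) averages the terms η_i = δ_i Y_i/π̂_i + (1 − δ_i/π̂_i) m̂_i. Here π̂_i is the fitted propensity score (for example from CBPS) and m̂_i is the fitted outcome regression, for example g(X_i, β̂). The following minimal sketch computes this estimator and a normal-approximation interval. The paper's variance V in Theorem 3 and its Xue [15]-type estimator are not reproduced. As an assumption, the sketch uses the plug-in sample variance of the η_i, which ignores the effect of estimating π and β. That effect is asymptotically negligible when both working models are correct.

```python
import numpy as np
from statistics import NormalDist

def aipw_mean(y, delta, pi_hat, m_hat):
    """AIPW estimate of theta = E(Y) and the per-observation terms eta_i."""
    y0 = np.where(delta == 1, y, 0.0)                  # missing y_i never enter
    eta = delta * y0 / pi_hat + (1.0 - delta / pi_hat) * m_hat
    return eta.mean(), eta

def normal_ci(eta, level=0.95):
    """Normal-approximation interval using the plug-in variance of eta_i (assumption)."""
    n = eta.size
    theta = eta.mean()
    v_hat = np.mean((eta - theta) ** 2)                # simple plug-in estimate of V
    z = NormalDist().inv_cdf(0.5 + level / 2.0)        # about 1.96 for level = 0.95
    half = z * np.sqrt(v_hat / n)
    return theta, v_hat, (theta - half, theta + half)

# Toy check: the outcome model reproduces every response exactly, so theta_hat = mean(Y).
y = np.array([1.0, np.nan, 3.0, np.nan])                # Y_2 and Y_4 are missing
delta = np.array([1, 0, 1, 0])
pi_hat = np.full(4, 0.5)
m_hat = np.array([1.0, 2.0, 3.0, 4.0])                  # fitted g(X_i, beta_hat)
theta_hat, eta = aipw_mean(y, delta, pi_hat, m_hat)
print(theta_hat)                                        # 2.5
print(normal_ci(eta))
```

The toy check illustrates one half of double robustness: when m̂_i equals Y_i, the weighting terms cancel observation by observation, whatever the values of π̂_i.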

3. Simulation Examples

We conducted simulation studies to examine the performance of the proposed estimation methods. The simulated data are generated from the model

When either or both models are misspecified, we follow the approach of Kang and Schafer [8] to examine whether our method improves the empirical performance of doubly robust estimators. As in Kang and Schafer [8], only the

are observed. If Y is expressed as

1) both outcome and propensity score models are correctly specified;

2) only the propensity score model is correct;

3) only the outcome model is correct;

4) both outcome and propensity score models are misspecified.
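The misspecification device referred to above can be illustrated with the original design of Kang and Schafer [8]. This is a sketch of their published population-mean example, not necessarily the authors' exact simulation model, which is not recoverable here. Four latent covariates Z are standard normal, Y is linear in Z, and the response probability is logistic in Z. Only nonlinear transformations X of Z are observed, so working models written in X are misspecified, while models written in Z are correct.

```python
import numpy as np

def expit(t):
    return 1.0 / (1.0 + np.exp(-t))

def kang_schafer(n, seed=0):
    """Kang and Schafer (2007) design: latent Z, observed transformed covariates X."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal((n, 4))
    y = 210.0 + z @ np.array([27.4, 13.7, 13.7, 13.7]) + rng.standard_normal(n)
    pi = expit(z @ np.array([-1.0, 0.5, -0.25, -0.1]))   # true response probability
    delta = rng.binomial(1, pi)                           # 1 = Y observed
    x = np.column_stack([
        np.exp(z[:, 0] / 2.0),
        z[:, 1] / (1.0 + np.exp(z[:, 0])) + 10.0,
        (z[:, 0] * z[:, 2] / 25.0 + 0.6) ** 3,
        (z[:, 1] + z[:, 3] + 20.0) ** 2,
    ])
    return z, x, y, delta, pi

z, x, y, delta, pi = kang_schafer(5000)
print(y.mean(), delta.mean(), y[delta == 1].mean())
```

The true mean of Y is 210, but respondents tend to have smaller Z1 and therefore smaller Y, so the complete-case mean is biased downwards. This is the kind of bias that the scenarios above are designed to expose.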

Due to the regression parameter

Table 1. Relative performance of the estimators for regression parameter based on different propensity score estimation methods when both models are correct.

Table 2. Relative performance of the estimators for regression parameter based on different propensity score estimation methods when only outcome model is correct.

Table 3. Relative performance of the doubly robust estimators based on different propensity score estimation methods for mean under the four different scenarios.

Remark: 1) Both models are correct; 2) Only propensity score model is correct; 3) Only outcome model is correct; 4) Both models are incorrect.

a) usual GLM method;

b) the just-identified CBPS estimation with the covariate balancing moment conditions and without the score condition (CBPS1);

c) the overidentified CBPS estimation with both the covariate balancing and score conditions (CBPS2);

d) the true propensity score, which does not need to be estimated (TRUE).
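The SD and MSE reported in the tables are Monte Carlo summaries over simulation replicates. A minimal sketch of these summaries follows. It assumes the SD is computed with divisor R (the number of replicates), so that MSE = bias² + SD² holds exactly. The "relative" comparisons between methods in the tables are not reproduced. The replicate values in the usage line are synthetic draws, not results from the paper.

```python
import numpy as np

def summarize(estimates, truth):
    """Monte Carlo bias, SD and MSE of a set of replicate estimates."""
    est = np.asarray(estimates, dtype=float)
    bias = est.mean() - truth
    sd = est.std()                        # divisor R (ddof = 0), so MSE = bias**2 + SD**2
    mse = np.mean((est - truth) ** 2)
    return {"bias": bias, "sd": sd, "mse": mse}

rng = np.random.default_rng(3)
replicates = 210.0 + rng.normal(0.5, 2.0, size=1000)   # synthetic stand-in for 1000 runs
print(summarize(replicates, truth=210.0))
```

Reporting bias together with SD makes it clear whether a large MSE comes from systematic error or from variability, which is the distinction between scenarios 3) and 4) above.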

From Table 1 and Table 2, we can see that SD and MSE of our estimators for

4. Concluding Remarks

We have proposed an improved estimation method for the parameters of interest in the nonlinear regression model with missing responses. The estimators based on the CBPS and AIPW methods have the following merits: 1) they avoid the “curse of dimensionality” and the need to select an optimal bandwidth; 2) when either the outcome regression model or the propensity score model is correctly specified, the proposed estimators perform as well as the estimators based on the true propensity score model in terms of SD and MSE; 3) when both the outcome regression and propensity score models are misspecified, the usual AIPW estimator can be severely biased, as mentioned in Section 1, but our method improves its performance and yields an improved estimator of the population mean. The simulation results show that the proposed method is feasible. Furthermore, with appropriate modifications, the proposed method can be extended to other models with missing responses; the details will be presented in future work.

Acknowledgements

We thank the Editor and the referee for their helpful comments, which greatly improved the presentation of the paper.

Cite this paper

Donglin Guo, Liugen Xue, Haiqing Chen (2016) CBPS-Based Inference in Nonlinear Regression Models with Missing Data. Open Journal of Statistics, 06, 675-684. doi: 10.4236/ojs.2016.64057

References

- 1. Jennrich, R.I. (1969) Asymptotic Properties of Nonlinear Least Squares Estimators. The Annals of Mathematical Statistics, 40, 633-643. http://dx.doi.org/10.1214/aoms/1177697731

- 2. Wu, C.F. (1981) Asymptotic Theory of Nonlinear Least Squares Estimation. The Annals of Statistics, 9, 501-513. http://dx.doi.org/10.1214/aos/1176345455

- 3. Crainiceanu, C.M. and Ruppert, D. (2004) Likelihood Ratio Tests for Goodness-of-Fit of a Nonlinear Regression Model. Journal of Multivariate Analysis, 100, 35-52. http://dx.doi.org/10.1016/j.jmva.2004.04.008

- 4. Horvitz, D.G. and Thompson, D.J. (1952) A Generalization of Sampling without Replacement from a Finite Universe. Journal of the American Statistical Association, 47, 663-685. http://dx.doi.org/10.1080/01621459.1952.10483446

- 5. Scharfstein, D.O., Rotnitzky, A. and Robins, J.M. (1999) Adjusting for Nonignorable Drop-Out Using Semiparametric Nonresponse Models. Journal of the American Statistical Association, 94, 1096-1120. http://dx.doi.org/10.1080/01621459.1999.10473862

- 6. Robins, J.M., Rotnitzky, A. and Zhao, L.P. (1994) Estimation of Regression Coefficients When Some Regressors Are Not Always Observed. Journal of the American Statistical Association, 89, 846-866. http://dx.doi.org/10.1080/01621459.1994.10476818

- 7. Tan, Z. (2006) A Distributional Approach for Causal Inference Using Propensity Scores. Journal of the American Statistical Association, 101, 1619-1637. http://dx.doi.org/10.1198/016214506000000023

- 8. Kang, J.D.Y. and Schafer, J.L. (2007) Demystifying Double Robustness: A Comparison of Alternative Strategies for Estimating a Population Mean from Incomplete Data. Statistical Science, 22, 523-539. http://dx.doi.org/10.1214/07-sts227

- 9. Cao, W., Tsiatis, A. and Davidian, M. (2009) Improving Efficiency and Robustness of the Doubly Robust Estimator for a Population Mean with Incomplete Data. Biometrika, 96, 723-734. http://dx.doi.org/10.1093/biomet/asp033

- 10. Bang, H. and Robins, J.M. (2005) Doubly Robust Estimation in Missing Data and Causal Inference Models. Biometrics, 61, 962-973. http://dx.doi.org/10.1111/j.1541-0420.2005.00377.x

- 11. Rubin, D.B. (1976) Inference and Missing Data. Biometrika, 63, 581-592. http://dx.doi.org/10.1093/biomet/63.3.581

- 12. Rosenbaum, P.R. and Rubin, D.B. (1983) The Central Role of the Propensity Score in Observational Studies for Causal Effects. Biometrika, 70, 41-55. http://dx.doi.org/10.1093/biomet/70.1.41

- 13. Wang, Q.H. and Rao, J.N.K. (2001) Empirical Likelihood for Linear Regression Models under Imputation for Missing Responses. The Canadian Journal of Statistics, 29, 597-608. http://dx.doi.org/10.2307/3316009

- 14. Wang, Q.H. and Rao, J.N.K. (2002) Empirical Likelihood-Based Inference in Linear Models with Missing Data. The Scandinavian Journal of Statistics, 29, 563-576. http://dx.doi.org/10.1111/1467-9469.00306

- 15. Xue, L.G. (2009) Empirical Likelihood for Linear Models with Missing Responses. Journal of Multivariate Analysis, 100, 1353-1366. http://dx.doi.org/10.1016/j.jmva.2008.12.009

- 16. Qin, Y. and Lei, Q. (2010) On Empirical Likelihood for Linear Models with Missing Responses. Journal of Statistical Planning and Inference, 140, 3399-3408. http://dx.doi.org/10.1016/j.jspi.2010.05.001

- 17. Imai, K. and Ratkovic, M. (2014) Covariate Balancing Propensity Score. Journal of the Royal Statistical Society, Series B, 76, 243-263. http://dx.doi.org/10.1111/rssb.12027

- 18. Imai, K. and Ratkovic, M. (2015) Robust Estimation of Inverse Probability Weights for Marginal Structural Models. Journal of the American Statistical Association, 110, 1013-1022. http://dx.doi.org/10.1080/01621459.2014.956872

- 19. Qin, J. and Zhang, B. (2007) Empirical-Likelihood-Based Inference in Missing Response Problems and Its Application in Observational Studies. Journal of the Royal Statistical Society, Series B, 69, 101-122. http://dx.doi.org/10.1111/j.1467-9868.2007.00579.x

- 20. Hansen, L.P. (1982) Large Sample Properties of Generalized Method of Moments Estimators. Econometrica, 50, 1029-1054. http://dx.doi.org/10.2307/1912775

- 21. Seber, G.A.F. and Wild, C.J. (2003) Nonlinear Regression. Wiley, New York.

- 22. Newey, W. and McFadden, D. (1994) Large Sample Estimation and Hypothesis Testing. In: Engle, R.F. and McFadden, D.L., Eds., Handbook of Econometrics, IV, North-Holland, Amsterdam, 2111-2245.

Appendix: Proofs of the Main Results

Throughout, let

(A1) For all X’s,

(A2)

(A3) 1) W is positive semi-definite and

3)

(A4)

To complete the proofs of Theorems 1-3, the following lemma is needed. If there is a function

Lemma 1 is the fundamental consistency result for extremum estimators. Its proof can be found in Newey and McFadden [22], so we omit it here.

Proof of Theorem 1. Similar to Theorem 2.6 in Newey and McFadden [22] , the proof of

McFadden [22], we have

Lemma 1 holds by

Proof of Theorem 2. Denote

To prove Theorem 2, we will verify the asymptotic normality of

we have

where

Under MAR assumption, we have

yields

From Theorem 5 in Wu [2], we know that

By Theorem 1,

According to the assumptions given in model (1), we have

Then, it follows from the central limit theorem that

Therefore, by (19) and Slutsky's theorem, the proof of Theorem 2 is complete.

Proof of Theorem 3. By direct calculation, we have

where

By the central limit theorem, we have

Similarly to the arguments of Qin and Lei [16], we have

For

NOTES

*Corresponding author.