Journal of Applied Mathematics and Physics

Vol.04 No.11(2016), Article ID:72413,34 pages

10.4236/jamp.2016.411207

High-Capacity Quantum Associative Memories

M. Cristina Diamantini1, Carlo A. Trugenberger2

1Nips Laboratory, INFN and Dipartimento di Fisica, University of Perugia, Perugia, Italy

2Swiss Scientific, Cologny, Switzerland

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: September 6, 2016; Accepted: November 27, 2016; Published: November 30, 2016

ABSTRACT

We review our models of quantum associative memories that represent the “quantization” of fully coupled neural networks like the Hopfield model. The idea is to replace the classical irreversible attractor dynamics driven by an Ising model with pattern-dependent weights by the reversible rotation of an input quantum state onto an output quantum state consisting of a linear superposition with probability amplitudes peaked on the stored pattern closest to the input in Hamming distance, resulting in a high probability of measuring a memory pattern very similar to the input. The unitary operator implementing this transformation can be formulated as a sequence of one-qubit and two-qubit elementary quantum gates and is thus the exponential of an ordered quantum Ising model with sequential operations and with pattern-dependent interactions, exactly as in the classical case. Probabilistic quantum memories, that make use of postselection of the measurement result of control qubits, overcome the famed linear storage limitation of their classical counterparts because they permit to completely eliminate crosstalk and spurious memories. The number of control qubits plays the role of an inverse fictitious temperature. The accuracy of pattern retrieval can be tuned by lowering the fictitious temperature under a critical value for quantum content association while the complexity of the retrieval algorithm remains polynomial for any number of patterns polynomial in the number of qubits. These models thus solve the capacity shortage problem of classical associative memories, providing a polynomial improvement in capacity. The price to pay is the probabilistic nature of information retrieval.

Keywords:

Quantum Information, Associative Memory, Quantum Pattern Recognition

1. Introduction

There is a growing consensus that the fundamental mechanism of human intelligence is simply pattern recognition, the retrieval of information based on content association, albeit repeated in ever increasing hierarchical structures [1] [2] . Correspondingly, pattern recognition in machine intelligence [3] has made enormous progress in the last decade or so and such systems are now to be found in applications ranging from medical diagnosis to facial and voice recognition in security and digital personal assistants, the latest addition to the family being self-driving cars. On the other side, the last two decades have seen the birth of, and an explosion of research in a new information- theoretic field: quantum information theory and quantum computation [4] [5] . This chapter deals with quantum pattern recognition, with particular emphasis on models that are both accessible to detailed analytical treatment and efficiently implementable within the framework of the quantum circuit model.

Pattern recognizers, which go also under the name of associative memories (or more precisely autoassociative memories), are fundamentally different than von Neumann or Turing machines [6] , which have grown into the ubiquitous computers that permeate our information society. Computation is not sequential but, rather, based on collective phenomena due to interactions among a large number of, typically redundant, elementary components. Information is not address-oriented, i.e. stored in look-up tables (random access memories, RAMs) but, rather, distributed in often very complex ways over the connections and interactions parameters. In traditional computers information is identified by a label and stored in a database indexed by these labels. Retrieval requires the exact knowledge of the relevant label, without which information is simply not accessible. This is definitely not how our own brain works. When trying to recognize a person from a blurred photo it is totally useless to know that it is the 16878th person you met in your life. Rather, the recognition process is based on our strong power of association with stored memories that resemble the given picture. Association is what we use every time we solve a crossword puzzle and is distinctive of the human brain.

The best known examples of pattern recognizers are neural networks [7] [8] and hidden Markov models [9] , the Hopfield model [10] (and its generalization to a bidirectional associative memory [11] ) being the paradigm, since it can be studied analytically in detail by the techniques of statistical mechanics [7] [8] [12] . The great advantage of these architectures is that they eliminate the extreme rigidity of RAM memories, which require a precise knowledge of the memory address and, thus, do not permit the retrieval of incomplete or corrupted inputs. In associative memories, on the contrary, recall of information is possible also on the basis of partial knowledge of its content, without knowing a precise storage location, which typically does not even exist. This is why they are also called “content-addressable memories”.

Unfortunately, classical associative memories suffer from a severe capacity shortage. When storing multiple patterns, these interfere with each other, a phenomenon that goes under the name of crosstalk. Above a critical number of patterns, crosstalk becomes so strong that a phase transition to a completely disordered spin glass phase [13] takes place. In this phase there is no relation whatsoever between the information encoded in the memory and the original patterns. For the Hopfield model, the critical threshold on the number p of patterns that can be stored in a network of n binary neurons is  [7] [8] . While various possible improvements can be envisaged, the maximum number of patterns remains linear in the number of neurons,

[7] [8] . While various possible improvements can be envisaged, the maximum number of patterns remains linear in the number of neurons, .

.

The power of quantum computation [4] [5] is mostly associated with the speed-up in computing time it can provide with respect to its classical counterpart, the paramount examples being Shor’s factoring algorithm [14] and Grover’s database search algorithm [15] . The efficiency advantage over classical computation is due essentially to the quantum superposition principle and entanglement, which allow for massively parallel information processing.

The bulk of the research effort in quantum computation has focused on the “quantization” of the classical sequential computer architecture, which has led to the quantum circuit model [4] [5] , in which information processing is realized by the sequential application of a universal set of elementary one- and two-qubit gates to typically highly entangled quantum states of many qubits. The computation is said to be efficient if the desired unitary evolution of the quantum state can be realized by the application of a polynomial number (in terms of the number of involved qubits) of these elementary quantum gates.

However, the question immediately arises if quantum mechanics can be applied successfully also to the collective information processing paradigm typical of machine intelligence algorithms and, specifically, if there are advantages in doing so. While this research has trailed the development of the quantum circuit model, it is presently experiencing a flurry of increased interest, so much so that last year NASA and Google have teamed up to found the Quantum Artificial Intelligence Laboratory, entirely dedicated to develop and advance machine intelligence quantum algorithms.

While speed has been the main focus of quantum computation, it can be shown that quantum mechanics also offers a way out from the impossibility of reconciling the association power of content-addressable memories with the requirement of large storage capacity. Indeed, one of us pointed out already in 2001 [16] [17] [18] [19] that storage capacity of associative memories can also be greatly enhanced by the quantum superposition principle. The key idea is to exploit the fundamental probabilistic nature of quantum mechanics. If one is willing to abandon the classical paradigm of one-off retrieval and sacrifice some speed by repeating the information retrieval step several times, then it is possible to store any desired polynomial number (in terms of the number of qubits) of patterns in a quantum associative memory and still tune the associative retrieval to a prescribed accuracy, a large advantage with respect to the classical linear limitation described above. Quantum entanglement permits to completely eliminate crosstalk and spurious memories in a tuneable probabilistic content association procedure with polynomial complexity for a polynomial number of stored patterns. Such probabilistic quantum associative memories can thus be implemented efficiently. Similar ideas in this direction were developed simultaneously in [20] [21] [22] .

In this chapter, we will review our own work on fundamental aspects of quantum associative memories and quantum pattern recognition. We will begin by a short survey of the main features of classical fully coupled neural networks like the Hopfield model and its generalizations, with a special emphasis on the capacity limitation and its origin. We will then describe the quantization of the Hopfield model [23] : the idea is to replace the classical irreversible dynamics that attracts input patterns to the closest minima of an energy function, representing the encoded memories, with a reversible unitary quantum evolution that amplifies an input quantum state to an output quantum state representing one of the stored memories at a given computational time t. In the classical model there is a complex phase diagram in terms of the two noise parameters, the temperature T and the disorder p/n with n the number of bits and p the number of stored patterns. It is, specifically the disorder due to an excessive loading factor p/n that prevents the storage of more than a critical number of patterns by causing the transition to a spin glass phase [13] , even at zero temperature. Correspondingly, in the quantum version there are quantum phase transitions due to both disorder and quantum fluctuations, the latter being encoded in the effective coupling Jt, with J being the energy parameter of the model and t being the computational time (throughout the review we will use units in which c = 1 and ). These are first examples of quantum collective phenomena typical of quantum machine intelligence. It turns out that, barring periodicity effects due to the unitary time evolution, the phase diagram for the quantum Hopfield model is not so different from its classical counterpart. Specifically, for small loading factors the quantum network has indeed associative power, a very interesting feature by itself, but the maximum loading factor is still limited to

). These are first examples of quantum collective phenomena typical of quantum machine intelligence. It turns out that, barring periodicity effects due to the unitary time evolution, the phase diagram for the quantum Hopfield model is not so different from its classical counterpart. Specifically, for small loading factors the quantum network has indeed associative power, a very interesting feature by itself, but the maximum loading factor is still limited to , above which there is a totally disordered spin glass phase, with no association power for any computational time. The transition to this quantum spin glass phase takes place when one tries to store a number of memories that is not anymore linearly independent.

, above which there is a totally disordered spin glass phase, with no association power for any computational time. The transition to this quantum spin glass phase takes place when one tries to store a number of memories that is not anymore linearly independent.

We then turn our attention to probabilistic quantum associative memories [16] [17] [18] [19] . The basic idea underlying their architecture is essentially the same as above, with one crucial difference: they exploit, besides a unitary evolution, a second crucial aspect of quantum mechanics, namely wave function collapse upon measurement [4] [5] . A generic (pure) quantum state is a superposition of basis states with complex coefficients. A measurement projects (collapses) the state probabilistically onto one of the basis states, the probability distribution being governed by the squared absolute values of the superposition coefficients. Probabilistic quantum associative memories involve, besides the memory register itself a certain number b of control qubits. The unitary evolution of the input state is again determined by a Hamiltonian that depends only on the stored patterns. Contrary to quantized Hopfield memories, however, this unitary evolution mixes the memory register and the control qubits. After having applied the unitary evolution to the initial input state, the control qubits are measured. Only if one obtains a certain specific result, one proceeds to measure the memory register. This procedure is called probabilistic postselection of the measurement result and guarantees that the memory register is in a superposition of the stored patterns such that the measurement probabilities are peaked on those patterns that minimize the Hamming distance to the input. A measurement of the memory register will thus associate input and stored patterns according to this probability distribution.

Of course, if we limit ourselves to a maximum number T of repetitions, there is a non-vanishing probability that the memory retrieval will fail entirely, since the correct control qubit state will never be measured. One can say that information retrieval in these quantum memories consists of two steps: recognition (the correct state of the control qubits has been obtained) and identification (the memory register is measured to give an output). Both steps are probabilistic and both the recognition efficiency and the identification accuracy depend on the distribution of the stored patterns: recognition efficiency is best when the number of stored patterns is large and the input is similar to a substantial cluster of them, while identification accuracy is best for isolated patterns which are very different from all other ones, both very intuitive features. Both recognition efficiency and identification accuracy can be tuned to prescribed levels by varying the repetition threshold T and the number b of control qubits.

The accuracy of the input-output association depends only on the choice of the number b of control qubits. Indeed, we will show that  plays the role of an effective temperature [19] . The lower t, the sharper is the corresponding effective Boltz- mann distribution on the states closest in Hamming distance to the input and the better becomes the identification. By averaging over the distribution of stored patterns with Hamming distance to the input above a threshold d one can eliminate the dependence on the stored pattern distribution and derive the effective statistical mechanics of quantum associative memories by introducing the usual thermodynamic potentials as a function of d and the effective temperature

plays the role of an effective temperature [19] . The lower t, the sharper is the corresponding effective Boltz- mann distribution on the states closest in Hamming distance to the input and the better becomes the identification. By averaging over the distribution of stored patterns with Hamming distance to the input above a threshold d one can eliminate the dependence on the stored pattern distribution and derive the effective statistical mechanics of quantum associative memories by introducing the usual thermodynamic potentials as a function of d and the effective temperature . In particular, the free energy

. In particular, the free energy  describes the average behaviour of the recall mechanism and provides concrete criteria to tune the accuracy of the quantum associative memory. By increasing b (lowering t), the associative memory undergoes a phase transition from a disordered phase with no correlation between input and output to an ordered phase with perfect input-output association encoded in the minimal Hamming distance d. This extends to quantum information theory the relation with Ising spin systems known in error-correcting codes [24] [25] [26] and in public key cryptography [27] .

describes the average behaviour of the recall mechanism and provides concrete criteria to tune the accuracy of the quantum associative memory. By increasing b (lowering t), the associative memory undergoes a phase transition from a disordered phase with no correlation between input and output to an ordered phase with perfect input-output association encoded in the minimal Hamming distance d. This extends to quantum information theory the relation with Ising spin systems known in error-correcting codes [24] [25] [26] and in public key cryptography [27] .

The recognition efficiency can be tuned mainly by varying the repetition threshold T: the higher T, the larger the number of input qubits that can be corrupted without affecting recognition. The crucial point is that the recognition probability is bounded from below by . For any number of patterns, thus, a repetition threshold T polynomial in n guarantees recognition with probability

. For any number of patterns, thus, a repetition threshold T polynomial in n guarantees recognition with probability . Due to the factor

. Due to the factor  in the numerator, whose origin is exclusively quantum mechanical, the number of repetitions required for efficient recognition would actually be polynomial even for a number of patterns exponential in n. The overall complexity of probabilistic associative quantum memories is thus bounded by the complexity

in the numerator, whose origin is exclusively quantum mechanical, the number of repetitions required for efficient recognition would actually be polynomial even for a number of patterns exponential in n. The overall complexity of probabilistic associative quantum memories is thus bounded by the complexity  of the unitary evolution operator. Any polynomial number of patterns

of the unitary evolution operator. Any polynomial number of patterns  can be encoded and retrieved efficiently in polynomial computing time. The absence of spurious memories leads to a substantial storage gain with respect to classical associative memories, the price to pay being the probabilistic nature of information recall.

can be encoded and retrieved efficiently in polynomial computing time. The absence of spurious memories leads to a substantial storage gain with respect to classical associative memories, the price to pay being the probabilistic nature of information recall.

2. The Classical Hopfield Model

Historically, the interest in neural networks [7] [8] has been driven by the desire to build machines capable of performing tasks for which the traditional sequential computer architecture is not well suited, like pattern recognition, categorization and generalization. Since these higher cognitive tasks are typical of biological intelligences, the design of these parallel distributed processing systems has been largely inspired by the physiology of the human brain.

The Hopfield model is one of the best studied and most successful neural networks. It was designed to model one particular higher cognitive function of the human brain, that of associative pattern retrieval or associative memory.

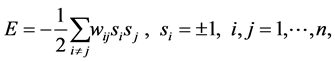

The Hopfield model consists of an assembly of n binary neurons ,

,  [28] , which can take the values ±1 representing their firing (+1) and resting (−1) states. The neurons are fully connected by symmetric synapses with coupling strengths

[28] , which can take the values ±1 representing their firing (+1) and resting (−1) states. The neurons are fully connected by symmetric synapses with coupling strengths  (

( ). Depending on the signs of these synaptic strengths, the couplings will be excitatory (>0) or inhibitory (<0). The model is characterised by an energy function

). Depending on the signs of these synaptic strengths, the couplings will be excitatory (>0) or inhibitory (<0). The model is characterised by an energy function

(1)

(1)

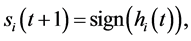

and its dynamical evolution is defined by the random sequential updating (in time t) of the neurons according to the rule

(2)

(2)

where

The synaptic coupling strengths are chosen according to the Hebb rule

where

It can be easily shown that the dynamical evolution (2) of the Hopfield model satis- fies exactly the requirement for an associative memory. This is because:

・ The dynamical evolution (2) minimizes the energy functional (1), i.e. this energy functional never increases when the network state is updated according to the evolution rule (2). Since the energy functional is bounded by below, this implies that the network dynamics must eventually reach a stationary point corresponding to a, possibly local, minimum of the energy functional.

・ The stored patterns

Actually, the second of these statements must be qualified. Indeed, the detailed behavior of the Hopfield model depends crucially upon the loading factor

For

For

For

3. Quantum Neural Networks and the Quantization of the Hopfield Model

In this section, we introduce a quantum information processing paradigm that is different from the standard quantum circuit model [23] . Instead of one- and two-qubit gates that are switched on and off sequentially, we will consider long-range interactions that define a fully-connected quantum neural network of qubits. This is encoded in a Hamiltonian that generates a unitary evolution in which the operator acting on one qubit depends on the collective quantum state of all the other qubits. Note that some of the most promising technologies for the implementation of quantum information processing, like optical lattices [29] and arrays of quantum dots [30] rely exactly on similar collective phenomena.

In mathematical terms, the simplest classical neural network model is a graph with the following properties:

・ A state variable

・ A real-valued weight

・ A state-space-valued transfer function

Directed graphs correspond to feed-forward neural networks [7] [8] while undirected graphs with symmetric weights contain feed-back loops. If the graph is complete one has fully-connected neural networks like the Hopfield model. Two types of dynamical evolution have been considered: sequential or parallel synchronous. In the first case the neurons are updated one at a time according to

while in the second case all neurons are updated at the same time. The simplest model is obtained when neurons become binary variables taking only the values

As we have seen in the previous section, the Hopfield model [10] is a fully-connected McCullogh-Pitts network in which the synaptic weights are symmetric quantities chosen according to the Hebb rule [7] [8]

and in which the the dynamics-defining function f is the sign function,

where n is the total number of neurons and

A quantum McCullogh-Pitts network can correspondingly be defined as a graph that satisfies:

・ A two-dimensional Hilbert space

・ A vector-valued weight

・ The synaptic potential becomes an operator

In case of feed-forward quantum networks on directed graphs only a subset of qubits is measured after the unitary evolution, in case of fully connected quantum networks with symmetric weights the state of the whole network is relevant.

The crucial difference with respect to classical neural networks concerns the interactions between qubits. In the classical model, the dynamics (5) induced by the transfer function is fully deterministic and irreversible, which is not compatible with quantum mechanics. A first generalization that has been considered is that of stochastic neurons, in which the transfer function determines only the probabilities that the classical state variables will take one of the two values:

It is known that two-level unitary gates are universal, i.e. every unitary matrix on an n-dimensional Hilbert space may be written as a product of two-level unitary matrices. However, an arbitrary unitary evolution cannot be implemented as a sequential succession of a discrete set of elementary gates, nor can it be approximated efficiently with a polynomial number of such gates [4] [5] . In general, quantum neural networks as defined above, have to be thought of as defined by Hamiltonians H that code hard-wired qubit interactions and generate a unitary evolution

We now describe a direct “quantization” of the Hopfield model in this spirit, i.e. by defining a quantum Hamiltonian that generalizes (7). At first sight one would be tempted to simply replace the classical spins

where

where

This corresponds to a “blank memory” in the sense that all possible states have the same probability of being recovered upon measurement. In the language of spin systems this is a state in which all spins are aligned in the x direction.

Inputs

where

Let us now specialize to the simplest case of one assigned memory

As in the classical case we shall analyze the model in the mean field approximation. In this case, the mean field represents the average over quantum fluctuations rather than thermal ones but the principle remains the same. The mean field model becomes exactly solvable and allows to derive self-consistency conditions on the average overlaps with the stored patterns. In the classical case, the mean field approximation is known to become exact for long-range interactions [31] .

In the quantum mean-field approximation operators are decomposed in a sum of their mean values in a given quantum state and fluctuations around it,

where

To this end we compute the average pattern overlaps

where

lap of the external stimulus with the stored memory.

Before we present the detailed solution of these equations, let us illustrate the mechanism underlying the quantum associative memory. To this end we note that, for

in the two-dimensional Hilbert spaces of each qubit i. The rotation parameter is exactly the same synaptic potential

This is the generalization to quantum probability amplitudes of the probabilistic formulation of classical stochastic neurons. Indeed, the probabilities for the qubit to be in its eigenstates ±1 after a time t, obtained by squaring the probability amplitudes, are given by

We shall now focus on a network without external inputs. In this case the equation for the average pattern overlaps has only the solution

For

The following picture of quantum associative memories emerges from the above construction. States of the network are generic linear superpositions of computational basis states. The network is prepared in the state

We will now turn to the more interesting case of a finite density

In case of a finite density of stored patterns, one cannot neglect the noise effect due to the infinite number of memories. This changes (13) to

As in the classical case we will assume that

is the spin-glass order parameter [13] . According to the central limit theorem one can now replace

The second order parameter r has to be evaluated self-consistently by a similar procedure starting from the equation analogous to Equation (16) for

where

In terms of these order parameters one can distinguish three phases of the network. First of all the value of m determines the presence (

For

Figure 1. The phase structure of quantum associative memories with finite density of stored patterns. P, F and SG denote (quantum) paramagnetic, ferromagnetic and spin-glass phases, respectively. F + SG denotes a mixed phase in which the memory retrieval solution is only locally stable.

network will be in a quantum spin glass state for all computation times (after the transition from the quantum paramagnet).

4. Probabilistic Quantum Memories

We have seen in the last section that crosstalk prevents the amplification of patterns stored in the weights of a simple quantum Hamiltonian like (8) when the loading factor exceeds a linear bound comparable with the classical one. In this section we show that this limit can be overcome by probabilistic quantum memories, which use postselection of the measurement results of certain control qubits [16] [17] [18] [19] . The price to pay is that such probabilistic memories require repetitions of the retrieval process and that there is non-vanishing probability that this fails entirely. When it is successful, however, it allows retrieval of the most appropriate pattern among a polynomial pool instead of a linear one.

4.1. Storing Patterns

Let us start by describing the elementary quantum gates [4] [5] that we will use in the rest of the paper. First of all there are the single-qbit gates represented by the Pauli matrices

Then, we will use extensively the two-qbit XOR (exclusive OR) gate, which performs a NOT on the second qbit if and only if the first one is in state

for

The construction of quantum memories relies, of course, on the fundamental fact that one can use entanglement to “store” an arbitrary number p of binary patterns

The idea of the memory architecture consists thus of two steps:

・ Generate the state

・ Given an input state

The quantum memory itself is the unitary operator M that codes the p patterns. It defines implicitly a Hamiltonian through the formal relation

In order to construct explicitly the quantum memory M we will start from an algorithm that loads sequentially the classical patterns into an auxiliary register, from which they are then copied into the actual memory register. A first version of such an algorithm was introduced in [35] . The simplified version that we present here is due to [16] [17] .

We shall use three registers: a first register p of n qbits in which we will subsequently feed the patterns

The idea of the storage algorithm is to separate this state into two terms, one corresponding to the already stored patterns, and another ready to process a new pattern. These two parts will be distinguished by the state of the second utility qbit

For each pattern

This simply copies pattern

The first of these operations makes all qbits of the memory register

This is the central operation of the storing algorithm. It separates out the new pattern to be stored, already with the correct normalization factor.

These two operations are the inverse of Equation (26) and restore the utility qbit

With the last operation,

one restores the third register m of the processing term, the second term in Equation (29) above, to its initial value

Any quantum state can be generically obtained by a unitary transformation of the initial state

To this end we introduce first the single-qbit unitary gates

where

We now introduce, in addition to the memory register proper, the same two utility qbits as before, also initially in the state

which loads pattern

From this construction it is easy to see that the memory operator M involves a number

While the memory construction we have presented here mirrors its classical counterpart, it is important to stress one notable difference. In classical associative memories, patterns are stored as minima of an energy landscape or, alternatively in the parameters of a dynamical evolution law [7] [8] . This is reflected verbatim in the construction of the unitary operator M in (34), which completely codes the patterns in a dynamical law, albeit reversible in the quantum case. In quantum mechanics, however, there is the possibility of shuffling some (but not all, as we will shortly see) information about the patterns from the unitary evolution law M onto a set of quantum states.

The ideal, most compressed quantum memory would indeed be the quantum superposition of patterns

This leaves state-dependent cloning [41] as the only viable option. State-dependent cloners are designed to reproduce only a finite number of states and this is definitely enough for our purposes. Actually the memory M in (34) is equivalent to a state- dependent cloner for the state

The cloning efficiencies of the probabilistic cloner of two states are bounded as follows [42] :

This bound can be made large by choosing

together with

and the bound for the cloning efficiencies would be very close to its maximal value 2 in both cases.

The quantum network for the probabilistic cloner of two states has been developed in [43] . It can be constructed exclusively out of the two simple distinguishability tranfer (D) and state separation (S) gates. As expected, these gates embody information about the two states to be cloned. Part of the memory, therefore, still resides in the cloning network. The pattern-dependence of the network cloner can be decreased by choosing a larger set of states in the pool that can be cloned, so that the cloner becomes more and more generic. On one side this decreases also the efficiency of the cloner, so that more repetitions are required, on the other side, since the clonable pool is limited to a set of linearly independent states, one can never eliminate completely the pattern-dependence of the cloning operator. This is why the original claim of an exponential capacity increase of quantum associative memories [16] [17] , based on probabilistic cloning of the state

4.2. Retrieving Patterns

Let us now assume we are given a binary input i that is a corrupted version of one of the patterns stored in the memory. The task of the retrieval algorithm is to “recognize” it, i.e. output the stored pattern that most resembles this input, where similarity is defined (here) in terms of the Hamming distance, the number of different bits between the two patterns, although other similarity measures [7] could also be incorporated.

The retrieval algorithm requires also three registers. The first register i of n qbits contains the input pattern; the second register m, also of n qbits, contains the memory

where

Let us now apply to this state the following combination of quantum gates:

As a result of the above operation the memory register qbits are in state

where

Consider now the following Hamiltonian:

where

Every term in the superposition (41) is an eigenstate of

where

In the final step we restore the memory gate to the state

The idea is now to repeat the above operations sequentially for all b control qbits

where

Note that one could also dispense with a register for the input but, rather, code also the input directly into a unitary operator. Indeed, the auxiliary quantum register for the input is needed only by the operator (40) leading from (39) to (41). The same result (apart from an irrelevant overall sign) can be obtained by applying

directly on the memory state

The end effect of the information retrieval algorithm represents thus a rotation of the memory quantum state in the enlarged Hilbert space obtained by adding b control qbits. The overall effect of this rotation is an amplitude concentration on memory states similar to the input, if there is a large number of

There are two ways of obtaining this projection. The first, and easiest one, is to simply repeat the above algorithm and measure the control register several times, until exactly the desired state for the control register is obtained. If the number of such repetitions exceeds a preset threshold T the input is classified as “non-recognized” and the algorithm is stopped. Otherwise, once

The second method is to first apply T steps of the amplitude amplification algorithm [44] rotating

By adding also the two utility qbits needed for the storing algorithm one can then obtain

The amplitude amplification rotation of

on the state

The expected number of repetitions needed to measure the desired control register state is

the probability of measuring

For

This shows that, independent of the number p of patterns, the threshold T for recognition can be set as a polynomial function of the number n of qubits. Note that this is entirely due to the factor

In general, the probability of recognition is determined by comparing (even) powers of cosines and sines of the distances to the stored patterns. It is thus clear that the worst case for recognition is the situation in which there is an isolated pattern, with the remaining patterns forming a tight cluster spanning all the largest distances to the first one. As a consequence, the threshold needed to recognize all patterns diminishes when the number of stored patterns becomes very large, since, in this case, the distribution of patterns becomes necessarily more homogeneous. Indeed, for the maximal number of stored patterns

Once the input pattern i is recognized, the measurement of the memory register yields the stored pattern

Clearly, this probability is peaked around those patterns which have the smallest Hamming distance to the input. The highest probability of retrieval is thus realized for that pattern which is most similar to the input. This is always true, independently of the number of stored patterns. In particular, contrary to classical associative memories, there are no spurious memories: the probability of obtaining as output a non-stored pattern is always zero. This is another manifestation of the fact that there are no restrictions on the loading factor p/n due to the information retrieval algorithm.

In addition to the threshold T, there is a second tunable parameter, namely the number b of control qbits. This new parameter b controls the identification efficiency of the quantum memory since, increasing b, the probability distribution

where

While the recognition efficiency depends on comparing powers of cosines and sines of the same distances in the distribution, the identification efficiency depends on comparing the (even) powers of cosines of the different distances in the distribution. Specifically, it is best when one of the distances is zero, while all others are as large as possible, such that the probability of retrieval is completely peaked on one pattern. As a consequence, the identification efficiency is best when the recognition efficiency is worst and vice versa.

The role of the parameter b becomes familiar upon a closer examination of Equation (53). Indeed, the quantum distribution described by this equation is equivalent to a canonical Boltzmann distribution with (dimensionless) temperature

with Z playing the role of the partition function.

The appearance of an effective thermal distribution suggests studying the average behaviour of quantum associative memories via the corresponding thermodynamic potentials. Before this can be done, however, one must deal with the different distributions of stored patterns characterizing each individual memory. The standard way to do this in similar classical problems is to average over the random distribution of patterns. Typically, one considers quenched averages in which extensive quantities, like the free energy are averaged over the disorder: this is the famed replica trick used to analyze spin glasses [13] . In the present case, however, the disorder cannot lead to spin-glass- like phases since there are no spurious memories: by construction, probabilistic quantum memories can output only one of the stored patterns. The only question is how accurate is the retrieval of the most similar pattern to the input as a function of the fictitious temperature

To do so we first normalize the pattern representation by adding (modulo 2) to all patterns, input included, the input pattern i. This clearly preserves all Hamming distances and has the effect of choosing the input as the state with all qbits in state

where

with all other

We now introduce the free energy

where we have chosen a normalization such that

The free energy describes the equilibrium of the system at effective temperature

Note that, with the normalization we have chosen in (59), the entropy S is always a negative quantity describing the deviation from its maximal value

By inverting Equation (56) with F substituting E one can also define an effective (relative) input/output Hamming distance

This corresponds exactly to representing the recognition probability of the average memory as

which can also be taken as the primary definition of the effective Hamming distance.

The function

A first hint about the general behaviour of the effective distance function

Choosing again the normalization in which

Figure 2. Effective input/output distance and entropy (rescaled to [0, 1]) for 1 Mb patterns and

Apart from a constant, this is the Hamiltonian of an infinite-range antiferromagnetic Ising model in presence of a magnetic field. The antiferromagnetic term favours configurations k with half the spins up and half down, so that

and leading to an effective distance

This value corresponds to a disordered phase with no correlation between input and output of the memory.

A numerical study of the thermodynamic potentials in (60) and (61) indeed confirms a phase transition from the ordered to the disordered phase as the effective temperature is raised. In Figure 2 we show the effective distance

The phase transition occurs around

Having described at length the information retrieval mechanism for complete, but possibly corrupted patterns, it is easy to incorporate also incomplete ones. To this end assume that only

so that the Hamming distances to the stored patterns are computed on the basis of the known qbits only. After this, the pattern recall process continues exactly as described above. This second possibility has the advantage that it does not introduce random noise in the similarity measure but it has the disadvantage that the operations of the memory have to be adjusted to the inputs.

Finally, it is fair to mention that the model of probabilistic quantum associative memory presented here has been criticised [45] on three accounts:

・ It has been claimed that the same result could have been obtained by storing only one of the p patterns in n classical bits and always using this single pattern as the same output independently of the input, provided the input has a Hamming distance to the unique stored pattern lower than a given threshold, otherwise the input would not be recognized.

・ It has been claimed that the Holevo theorem bounds the number of patterns that can be stored in a quantum associative memory.

・ It has been pointed out that the complexity of memory preparation prevents the efficient storing of patterns.

This criticism is wrong on the first two accounts and partially justified on the third [46] . It is true that both the quantum memory and the proposed equivalent classical prescription are based on probabilistic recognition and identification processes. In the proposed classical alternative, however the probabilities for both recognition and identification depend on one unique, fixed and random pattern whereas in the quantum memory, exactly due to its quantum character, these probabilities depend on the distribution of all stored patterns. These probabilities are such that an input different from most stored patterns is more difficult to recognize than an input similar to many stored memories and that the identification probability distribution can be peaked with any prescribed accuracy on the stored pattern most similar to the input. In the proposed classical alternative, given that only one single pattern can be stored on the n classical bits, the recognition or lack thereof depends on the distance to a randomly chosen pattern and the identification probability is a delta function peaked on this fixed random pattern. In other words there is no correlation whatsoever between input and output apart from the fact that they have Hamming distance below a certain threshold, a prescription that can hardly qualify as an associative memory: it would indeed be a boring world the one in which every stimulus would produce exactly the same response, if any response at all. Also, the Holevo theorem [34] does not impose any limitation on this type of probabilistic quantum memories. The Holevo theorem applies to the situation in which Alice codes information about a classical random variable in a quantum state and Bob tries to retrieve the value of this random variable by measurements on the received quantum state. In the present case Alice gives to Bob also corrupted or incomplete classical information about the random variable (the input) and Bob can use also a unitary transformation that encodes both the memories and the input (operator

4.3. Efficiency, Complexity and Memory Tuning

In this last section we would like to address the efficient implementation of probabilistic quantum memories in the quantum circuit model [4] [5] and their accuracy tuning.

We have stressed several times that all unitary operators involved in the memory preparation can be realized as a sequence of one- and two-qubit operators. It remains to prove that this is true also for pattern retrieval and that all these operators can be implemented in terms of a small set of universal gates. To this end we would like to point out that, in addition to the standard NOT, H (Hadamard), XOR, 2XOR (Toffoli) and nXOR gates [4] [5] we have introduced only the two-qbit gates

and the two-qbit controlled gate

It is then easy to check that

where c is the control qbit for which one is currently repeating the algorithm. Essentially, this means that one implements first

Using this representation for the Hamming distance operator one can count the total number of simple gates that one must apply in order to implement one step of the information retrieval algorithm. This is given by

where

for the simplest version of the algorithm, using memory preparation by M and an auxiliary input register.

The computation of the overall complexity is easier for the information retrieval algorithm which uses the amplitude amplification technique. In this case the initial memory is prepared only once by a product of the operators M, with complexity

As expected, the memory complexity (be it (72) or (73)) depends on both T and b, the parameters governing the recognition and identification efficiencies. The major limitation comes from the factor p representing the total number of stored patterns. Note however that, contrary to classical associative memories, one can efficiently store and retrieve any polynomial number of patterns due to the absence of spurious memories and crosstalk.

Let us finally show how one can tune the accuracy of the quantum memory. Suppose one would like to recognize on average inputs with up to 1% of corrupted or missing bits and identify them with high accuracy. The effective i/o Hamming distance

5. Conclusions

We would like to conclude this review by highlighting the fundamental reason why a probabilistic quantum associative memory works better than its classical counterpart and pointing out about some very intuitive features of the information retrieval process.

In classical associative memories, the information about the patterns to recall is typically stored in an energy function. When retrieving information, the input configuration evolves to the corresponding output, driven by the dynamics associated with the memory function. The capacity shortage is due to a phase transition in the statistical ensemble governed by the memory energy function. Spurious memories, i.e. spurious metastable minima not associated with any of the original patterns become important for loading factors p/n above a critical value and wash out completely the memory, a phenomenon that goes by the name of crosstalk. So, in the low p/n phase the memory works perfectly in the sense that it outputs always the stored pattern which is most similar to the input. For p/n above the critical value, instead, there is an abrupt transition to total amnesia caused by spurious memories.

Probabilistic quantum associative memories work better than classical ones since they are free from spurious memories. The easiest way to see this is in the formulation

All the information about the stored patterns is encoded in the unitary operator M. This generates a quantum state in which all components that do not correspond to stored patterns have exactly vanishing amplitudes.

An analogy with the classical Hopfield model [7] [8] can be established as follows. Instead of generating the memory state

Now, this same result can also be obtained by Grover’s algorithm, or better by its generalization with zero failure rate [47] . Here the state

where J rotates the amplitudes of the states corresponding to the patterns to be stored by a phase

The price to pay is the probabilistic nature of the information retrieval mechanism. As always in quantum mechanics, the dynamics determines only the evolution of probability distributions and the probabilistic aspect is brought in by the collapse of this probability distributions upon measurement. Therefore, contrary to the classical Hopfield model in the low p/n phase, one does not always have the absolute guarantee that an input is recognized and identified correctly as the stored pattern most similar to the input, even if this state has the highest probability of being measured. But, after all, this is a familiar feature of the most concrete example of associative memory, our own brain, and should thus not be so disturbing. Indeed, it is not only the probabilistic nature of information retrieval that is reminiscent of the behaviour of the human brain but also the properties of the involved probability distributions. These are such that inputs very similar to a cluster of stored patterns will be much easier to recognize than i.

Cite this paper

Diamantini M.C. and Trugenberger, C.A. (2016) High- Capacity Quantum Associative Memories. Journal of Applied Mathematics and Physics, 4, 2079-2112. http://dx.doi.org/10.4236/jamp.2016.411207

References

- 1. Hawkins, J. (with Blakeslee, S.) (2004) On Intelligence. Times Books.

- 2. Kurzweil, R. (2012) How to Create a Mind. Penguins Books, London.

- 3. Bishop, C.M. (2006) Pattern Recognition and Machine Learning. Springer Verlag, Singapore.

- 4. Nielsen, M.A. and Chuang, I.L. (2000) Quantum Computation and Quantum Information. Cambridge University Press, Cambridge.

- 5. Pittenger, A.O. (2000) An Introduction to Quantum Computing Algorithms. Birkhäuser, Boston.

- 6. Davis, M. (2000) Engines of Logic: Mathematicians and the Origin of the Computer. W. W. Norton Company, New York.

- 7. Müller, B. and Reinhardt, J. (1990) Neural Networks. Springer-Verlag, Berlin.

- 8. Kohonen, T. (1984) Self-Organization and Associative Memmory. Springer-Verlag, Berlin.

- 9. Rabiner, L.R. (1989) A Tutorial on Hidden Markov Models and Selected Applications in Speech Recognition. Proceedings of the IEEE, 77, 257-286.

https://doi.org/10.1109/5.18626 - 10. Hopfield, J.J. (1982) Neural Networks and Physical Systems with Emergent Collective Computational Abilities. Proceedings of the National Academy of Sciences of the United States of America, 79, 2554-2558.

https://doi.org/10.1073/pnas.79.8.2554 - 11. Kosko, B. (1988) Bidirectional Associative Memories. IEEE Transactions on Systems, Man, and Cybernetics, 18, 49-60.

https://doi.org/10.1109/21.87054 - 12. Nishimori, H. (2001) Statistical Physics of Spin Glasses and Information Processing. Oxford University Press, Oxford.

https://doi.org/10.1093/acprof:oso/9780198509417.001.0001 - 13. Mezard, M., Parisi, G. and Virasoro, M.A. (1987) Spin Glass Theory and Beyond. World Scientific, Singapore City.

- 14. Shor, P.W. (1997) Polynomial-Time Algorithms for Prime Factorization and Discrete Logarithms on a Quantum Computer. SIAM Journal of Computing, 26, 1484-1509.

- 15. Grover, L. (1997) Quantum Mechanics Helps in Searching for a Needle in a Haystack. Physical Review Letters, 79, 325.

https://doi.org/10.1103/PhysRevLett.79.325 - 16. Trugenberger, C.A. (2001) Probabilistic Quantum Memories. Physical Review Letters, 87, 067901.

https://doi.org/10.1103/PhysRevLett.87.067901 - 17. Ball, P. (2001) Brain Inspires New Memories. Nature News, August 6.

- 18. Trugenberger, C.A. (2002) Phase Transitions in Quantum Pattern Recognition. Physical Review Letters, 89, 277903.

https://doi.org/10.1103/PhysRevLett.89.277903 - 19. Trugenberger, C.A. (2002) Quantum Pattern Recognition. Quantum Information Processing, 1, 471.

https://doi.org/10.1023/A:1024022632303 - 20. Sasaki, M., Carlini, A. and Jozsa, R. (2001) Quantum Template Matching. Physical Review A, 64, 022317.

https://doi.org/10.1103/PhysRevA.64.022317 - 21. Sasaki, M and Carlini, A. (2003) Quantum Learning and Universal Quantum Matching Machine. Physical Review A, 66, 022303.

https://doi.org/10.1103/PhysRevA.66.022303 - 22. Schützhold, R. (2003) Pattern Recognition on a Quantum Computer. Physical Review A, 67, 062311.

https://doi.org/10.1103/PhysRevA.67.062311 - 23. Cristina Diamantini, M. and Trugenberger, C.A. (2006) Quantum Pattern Retrieval by Qubit Networks with Hebb Interactions. Physical Review Letters, 97, 130503.

https://doi.org/10.1103/PhysRevLett.97.130503 - 24. Sourlas, N. (1989) Spin-Glass Models as Error-Correcting Codes. Nature, 339, 693-695.

https://doi.org/10.1038/339693a0 - 25. Kanter, I. and Saad, D. (1999) Error-Correcting Codes That Nearly Saturate Shannon’s Bound. Physical Review Letters, 83, 2660.

https://doi.org/10.1103/PhysRevLett.83.2660 - 26. Kabashima, Y., Murayama, T. and Saad, D. (2000) Typical Performance of Gallager-Type Error-Correcting Codes. Physical Review Letters, 84, 1355.

https://doi.org/10.1103/PhysRevLett.84.1355 - 27. Kabashima, Y., Murayama, T. and Saad, D. (2000) Cryptographical Properties of Ising Spin Systems. Physical Review Letters, 84 2030.

https://doi.org/10.1103/PhysRevLett.84.2030 - 28. McCullogh, W.S. and Pitts, W. (1943) A Logical Calculus of the Ideas Immanent in Nervous Activity. The bulletin of Mathematical Biophysics, 5, 115-133.

https://doi.org/10.1007/BF02478259 - 29. Mandel, O., Greiner, M., Widera, A., Rom, T., Hänsch, T.W. and Bloch, I. (2003) Controlled Collisions for Multi-Particle Entanglement of Optically Trapped Atoms. Nature, 425, 937-940.

https://doi.org/10.1038/nature02008 - 30. Kane, B.E. (2003) A Silicon-Based Nuclear Spin Quantum Computer. Nature, 393, 133-137.

https://doi.org/10.1038/30156 - 31. Parisi, G. (1988) Statistical Field Theory. Addison-Wesley, Redwood City.

- 32. Sachdev, S. (1999) Quantum Phase Transitions. Cambridge University Press, Cambridge.

- 33. Barenco, A., Bennet, C., Cleve, R., DiVincenzo, D., Margolus, N., Shor, P., Sleator, T., Smolin, J. and Weinfurter, H. (1995) Elementary Gates for Quantum Computation. Physical Review A, 52, 3457.

https://doi.org/10.1103/PhysRevA.52.3457 - 34. Holevo, A.S. (1973) Bounds for the Quantity of Information Transmitted by a Quantum Communication Channel. Problems of Information Transmission, 9, 177-183.

- 35. Ventura, D. and Martinez, T. (1999) Initializing the Amplitude Distribution of a Quantum State. Foundations of Physics Letters, 12, 547-559.

https://doi.org/10.1023/A:1021695125245 - 36. Tanaka, Y., Ichikawa, T., Tada-Umezaki, M., Ota, Y. and Nakahara, M. (2011) Quantum Oracles in Terms of Universal Gate Set. International Journal of Quantum Information, 9, 1363-1381.

https://doi.org/10.1142/S0219749911008106 - 37. Wootters, W. and Zurek, W. (1982) A Single Quantum Cannot Be Cloned. Nature, 299, 802-803.

https://doi.org/10.1038/299802a0 - 38. Buzek, V. and Hillery, M. (1996) Quantum Copying: Beyond the No-Cloning Theorem. Physical Review A, 54, 1844.

https://doi.org/10.1103/PhysRevA.54.1844 - 39. Gisin, N. and Massar, S. (1997) Optimal Quantum Cloning Machines. Physical Review Letters, 79, 2153.

https://doi.org/10.1103/PhysRevLett.79.2153 - 40. Bruss, D., Ekert, A.K. and Macchiavello, C. (1998) Optimal Universal Quantum Cloning and State Estimation. Physical Review Letters, 81, 2598.

https://doi.org/10.1103/PhysRevLett.81.2598 - 41. Bruss, D., DiVincenzo, D.P., Ekert, A., Fuchs, C.A., Macchiavello, C. and Smolin, J.A. (1998) Optimal Universal and State-Dependent Quantum Cloning. Physical Review A, 57, 2368.

https://doi.org/10.1103/PhysRevA.57.2368 - 42. Duan, L.-M. and Guo, G.-C. (1998) Probabilistic Cloning and Identification of Linearly Independent Quantum States. Physical Review Letters, 80, 4999.

https://doi.org/10.1103/PhysRevLett.80.4999 - 43. Chefles, A. and Barnett, S. M. (1999) Strategies and Networks for State-Dependent Quantum Cloning. Physical Review A, 60, 136.

https://doi.org/10.1103/PhysRevA.60.136 - 44. Brassard, G., Hoyer, P., Mosca, M. and Tapp, A. Amplitude Amplification and Estimation, quant-ph/0005055.

- 45. Brun, T., Klauck, H., Nayak, A., Roetteler, M. and Zalka, Ch. (2003) Comment on “Probabilistic Quantum Memories”. Physical Review Letters, 91, 209801.

https://doi.org/10.1103/PhysRevLett.91.209801 - 46. Trugenberger, C.A. (2003) Trugenberger Replies. Physical Review Letters, 91, 209802.

https://doi.org/10.1103/PhysRevLett.91.209802 - 47. Long, G.L. (2001) Grover Algorithm with Zero Theoretical Failure Rate. Physical Review A, 64, 022307.

https://doi.org/10.1103/physreva.64.022307