Open Journal of Nursing

Vol.2 No.2(2012), Article ID:20435,4 pages DOI:10.4236/ojn.2012.22014

Development and psychometric evaluation of the radiographers’ competence scale


1School of Health Sciences, Jönköping University, Jönköping, Sweden

2Department of Clinical Neurophysiology, University Hospital Linköping, Linköping, Sweden

Email: *Bodil.Andersson@hhj.hj.se

Received 13 December 2011; revised 22 January 2012; accepted 15 February 2012

Keywords: competence scale; instrument development; management; nursing; psychometric evaluation; radiographer; RCS

ABSTRACT

Assessing the competence of registered radiographers’ clinical work is of great importance because of the recent change in nursing focus and rapid technological development. Self-assessment assists radiographers to validate and improve clinical practice by identifying their strengths as well as areas that may need to be developed. The aim of the study was to develop and psychometrically test a specially designed instrument, the Radiographers Competence Scale (RCS). A cross-sectional survey was conducted comprising 406 randomly selected radiographers from all over Sweden. The study consisted of two phases: the development of the instrument and the evaluation of its psychometric properties. The first phase included three steps: 1) construction of the RCS; 2) pilot testing of face and content validity; and 3) creation of a web-based 54-item questionnaire for testing the instrument. The second phase comprised psychometric evaluation of construct validity, internal consistency reliability and item reduction. The analysis reduced the initial 54 items of the RCS to 28 items. A logical two-factor solution was identified explaining 53.8% of the total variance. The first factor, labelled “Nurse initiated care”, explained 31.7% of the total variance. The second factor, labelled “Technical and radiographic processes”, explained 22.1% of the total variance. The scale had good internal consistency reliability, with a Cronbach’s alpha of 0.87. The RCS is a short, easy-to-administer scale for capturing radiographers’ competence levels and the frequency of using their competence. The scale was found to be valid and reliable. The self-assessment RCS can be used in management, patient safety and quality improvement to enhance the radiographic process.

1. INTRODUCTION

Competence in nursing practice is a challenging concept that is continually being debated and discussed [1,2]. Definitions used to describe competence vary and, in particular, the simultaneous use of the terms competence and performance gives rise to confusion [3-5]. While [5] offers a distinction between the two concepts, where competence is concerned with perceived skills, and performance with an actual situated behaviour that is measurable. Competence has also been described as being closely related to “being able to” and “having the ability to” do something [6]. Nevertheless, there is no agreement as to whether competence implies a greater level of ability or capacity than performance [6]. Benner [7] defined competence in general as the ability to perform a task with desirable outcomes.

In more recent nursing studies, the issue of competence has been explored in different ways. There is a general consensus that it is based on a combination of components that reflect knowledge, understanding and judgment, cognitive skills, technical and interpersonal skills and personal attitudes [2]. Among others, Meretoja et al. [8] provide details of a Nursing Competence Scale (NCS) used to measure the competence level of nursing professionals. The NCS is a self-assessment tool consisting of 73 items grouped into seven sub-scales used to assess registered nurses in medical and surgical work environments in a hospital setting. The NCS has strong validity and reliability. Another available instrument is the Competency Inventory for Registered Nurses (CIRN). This 58-item instrument was developed from a qualitative study based on the International Council of Nurses’ (ICN) framework. Liu et al. [9] identified strong evidence of internal consistency reliability, content and construct validity of the CIRN.

The examination of professional competence also includes the way of acting in a specific context, in this case a diagnostic radiology department, as people may not possess identical knowledge although they may work in the same field. Knowledge can be so deeply embedded that a registered radiographer with extensive experience may carry out his/her duties intuitively. This is known as “tacit knowledge” and is often difficult to assess [10]. A central premise of tacit knowledge is that “we know more than we can express” [11]. Benner [7] and Dreyfus et al. [12] describe five levels of professional development, “from novice to expert”, as the basis for achieving increased skills and competencies. Understanding and judging situations are the key skills in complex human activities [13]. Benner [7] described the nurse’s evidence-based knowledge as derived from actual nursing situations in an emergency context. She developed it even further by emphasizing a more holistic view of caring behaviour [14], which is often challenging due to complex technological advances in the health care services. Assessing clinical competence among registered radiographers is therefore of major importance because of the immense changes that have taken place in the past decade (i.e., the rapid technological development and change in nursing focus) at all diagnostic radiology departments. In most countries, registered nurses are responsible for patient care, while a radiological technologist or corresponding professional is in charge of the radiological equipment [15]. In Sweden, specially educated and registered radiographers have a unique position because they are responsible for the entire radiographic examination, including nursing actions as well as the medical technology, e.g. injections, catheterization and medical technical equipment [16]. In this paper, “radiographer” will be used to refer to these professionals.

The use of self-assessment tools allows radiographers to consider different aspects of nursing in their clinical work and helps them to improve their clinical competence [17]. Furthermore, the assessment of competence is also an ethical matter, as well as a quality of care concern [18]. Accordingly, competence assessment should be a core function in management, patient safety and quality improvement. Valid and reliable methods for assessing professional clinical competence are therefore essential. However, based on a review of the literature, no specific and reliable tool was identified to meet the specific needs of radiographers. Accordingly, the aim of the present study was to develop and psychometrically test a specially designed instrument, the Radiographers Competence Scale (RCS).

2. METHOD

2.1. Design

The design was a cross-sectional survey consisting of two phases: the development of the instrument and the evaluation of its psychometric properties. The first phase included three steps: 1) construction of the RCS; 2) pilot testing of the face and content validity; and 3) creation of a web-based questionnaire for testing the instrument. The second phase comprised psychometric evaluation of the construct validity and internal consistency reliability.

2.2. Phase I. Instrument Development

2.2.1. Step 1. Construction of the Radiographers Competence Scale

The development of the RCS was guided by the concept of Streiner and Norman [19]. The basis was a qualitative study exploring professional competence [16], from which two main areas (i.e., direct and indirect patient related areas) emerged. The first was broken down into four competencies focusing on the care provided in close proximity to the patient: guiding, performing the examination, providing support and being vigilant. The second area was likewise divided into four competencies focusing on the surrounding environment and activities: organization, ensuring quality, handling the image and collaboration with internal and external agencies.

When defining the construction of the RCS it was valuable that all involved were practising nurses and/or researchers. These individuals had different specialities, for example cardiovascular, geriatric, intensive, anaesthetic and radiographic nursing care. Experience of developing and psychometrically testing instruments was also considered a strength among members of the research group [20].

The initial version of the RCS consisted of 42 items in eight areas with between four and seven items per area, based on the categories and sub-categories reported in a qualitative study by Andersson et al. [16]. Each item represented a behaviour and was answered by means of a two-part scale, one part focusing on valuation of radiographer competence and the other on the frequency of its use. Valuation of the competence was measured on a 10-point scale (1 - 10) where 1 was the lowest and 10 the highest grade. The frequency of using the competence was measured by the following response alternatives: “never used”, “very seldom used”, “sometimes used”, “often used”, “very often used” and “always used”. In the present study, only the first part focusing on valuation of the competence was used for item reduction and reliability testing of the RCS.

2.2.2. Step 2. Pilot Test for Face and Content Validity

Pilot testing of the face and content validity was conducted in line with Lynn’s criteria [21] (i.e., content relevance, clarity, concreteness, understandability and readability of the scale). A strategically selected group comprising 16 participants with varying experiences of the field was used. The group comprised one third-year radiography student, six clinically experienced radiographers, four radiographers in management positions, three PhD students and two nursing researchers who were familiar with diagnostic radiology. The participants were asked to judge the relevance of the items, individually and as a set. A 4-point rating scale (from 1 “not relevant” to 4 “very relevant”) was used. In addition, the participants were requested to identify important areas not included in the instrument. Hence, after every set of items there was space for comments. Further assessment was undertaken regarding the items dealing with competencies and the association between the items and competencies. Finally, missing items or competencies and suggested additional items were also assessed.

Following analysis of the data, the 42-item version of the RCS was amended. As a result, it was proposed that 12 items concerning relatives, patient safety, vigilance, prioritizing and optimizing image quality should be added to the questionnaire. The mean value of the relevance of the items was judged to be 3.4 (range 2 - 4) with the lowest value (2) pertaining to understandability and readability of the items; “prioritization of patients”, “providing relief to the patient”, “intervening in life and death situations” and “independent reporting of medical images”. Furthermore, the face and content validity resulted in linguistic adjustments to enhance readability. The order of the competencies and items was also changed to ensure a more systematic and easy to use tool. The number of items in all competence areas was increased except for the first; “organization and leadership”. In line with the recommendations of Berk [22], four of the authors independently acted as experts when considering the logical consistency of the competencies and the number of items to be included in the RCS. Content and face validity were based on agreement between the four authors.

2.2.3. Step 3. Construction of a Web-Based Questionnaire

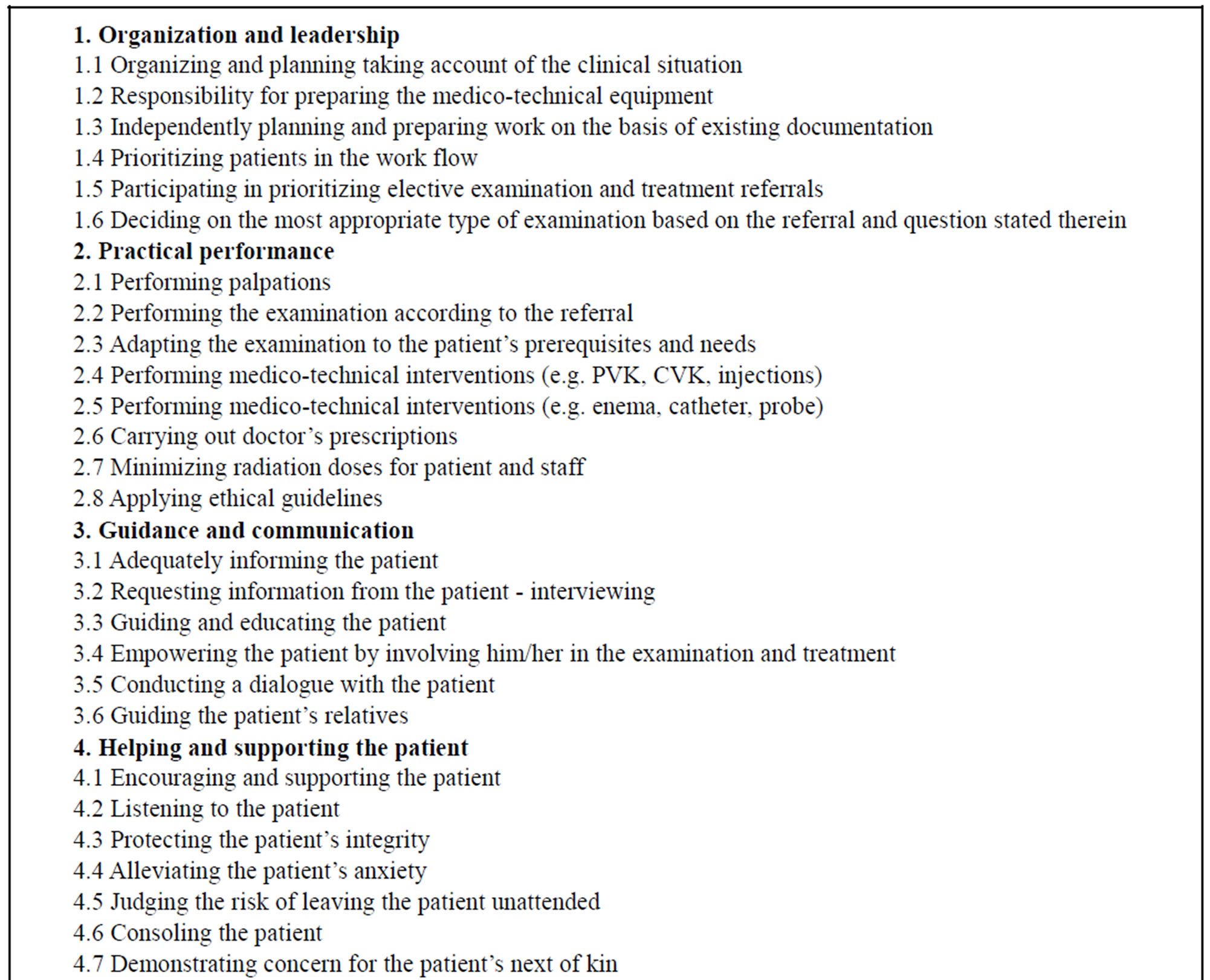

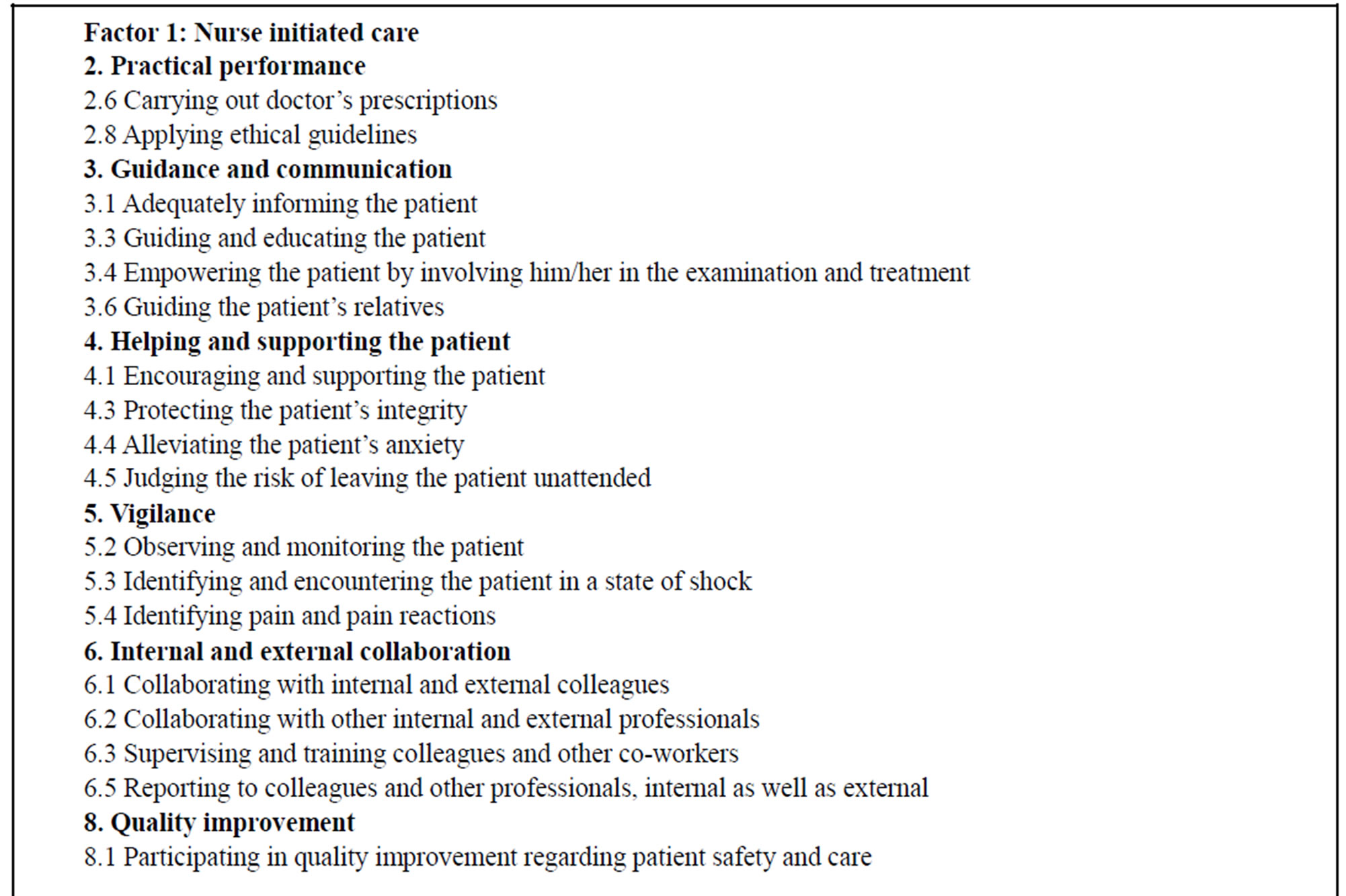

The amended pilot-tested 54-item version of the RCS was used to construct a web-based questionnaire. The RCS was divided into eight different competencies with six to eight items in each area (Figure 1). Every item had two levels, valuation of radiographic competence and frequency of its use, each of which was answered on a 10-point scale. After every section a space was provided for comments. The web-based questionnaire included instructions for participants and questions for obtaining demographic data, including age, sex, professional status, educational level and number of years in present position.

2.3. Phase II. Item Reduction and Psychometric Evaluation

2.3.1. Sample and Design

Radiographers from all over Sweden were identified from a register administered by the Swedish Association of Health Professionals (SAHP). The SAHP is a trade union and professional organization for radiographers, nurses, midwives and biomedical scientists. The inclusion criterion was clinically active participants currently working as radiographers. Of the 3592 Swedish radiographers listed in the SAHP, 2167 were members of the SAHP at the time of the study, of whom 1772 met the inclusion criteria. Using the register, a computer systematically generated a list of 500 radiographers who were invited to participate.

In late November 2010, a link to the web-based questionnaire comprising the RCS was e-mailed to the participants. An accompanying letter contained information about the study and stated that participation was voluntary and that confidentiality would be maintained at all times. Informed consent was obtained before the participant completed the questionnaire. The first reminder was sent after one week and a second after two weeks. This resulted in 200 responses, a response rate of 40%. As the number of responses was considered low, a new computer generated list of 500 participants was chosen from the SAHP register and a reminder sent after two weeks. A total of 1000 questionnaires were distributed, resulting in 406 responses (40.6%).

2.3.2. Item Reduction

All data were analysed using SPSS 18.0 for Windows (SPSS Inc., Chicago, Illinois, USA). The number of items was reduced in two phases. Firstly, corrected item-total correlations and Cronbach’s alpha if item deleted were calculated for the 54-item questionnaire [23-25]. Items with low correlations, i.e. ≤0.5, were removed one at a time and new item-total statistics calculated on each occasion. Secondly, repeated explorative factor analyses (Varimax type with Kaiser’s Normalization) were conducted on the remaining items [19,20]. One item was removed at a time from the factor with the lowest factor loading. A new factor analysis was performed each time an item was removed. According to Field [26], factor loadings of >0.50 were considered sufficient.
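The first reduction step can be illustrated with a minimal sketch. It assumes a small, made-up response matrix (the item names `a` to `d` and all scores are hypothetical and are not study data) and uses plain Python instead of SPSS, which was used in the study. The function names are illustrative only.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def corrected_item_total(responses, items):
    """Correlate each item with the sum of the other retained items."""
    result = {}
    for item in items:
        item_scores = [row[item] for row in responses]
        rest_scores = [sum(row[other] for other in items if other != item)
                       for row in responses]
        result[item] = pearson(item_scores, rest_scores)
    return result

def reduce_items(responses, items, threshold=0.5):
    """Remove the weakest item, one at a time, recomputing after each removal."""
    retained = list(items)
    while len(retained) > 2:
        correlations = corrected_item_total(responses, retained)
        weakest = min(correlations, key=correlations.get)
        if correlations[weakest] > threshold:
            break  # every remaining item exceeds the cut-off
        retained.remove(weakest)
    return retained

# Hypothetical ratings on the 1 - 10 valuation scale (five respondents, four items).
responses = [
    {"a": 2, "b": 3, "c": 2, "d": 9},
    {"a": 4, "b": 5, "c": 4, "d": 1},
    {"a": 6, "b": 6, "c": 7, "d": 8},
    {"a": 8, "b": 9, "c": 8, "d": 2},
    {"a": 9, "b": 10, "c": 10, "d": 7},
]

print(reduce_items(responses, ["a", "b", "c", "d"]))
```

In this toy data set, item `d` does not follow the pattern of the other three items, so it is the one that is dropped; the statistics are then recomputed on the remaining items, mirroring the one-at-a-time procedure described above.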

2.3.3. Construct Validity

Construct validity (i.e., to emphasize a clear and theoretically sound factor structure) was assessed using principal component analyses with Varimax rotation with Kaiser’s Normalization [19,20]. Initially, data were examined with Bartlett’s test of sphericity, as well as with the measure of sample adequacy in each variable and overall. The number of factors extracted was decided by the Kaiser criterion (eigenvalue > 1.0). Cattell’s scree test was also used to control for the number of tentative factors to be retained [27]. Pett et al. [25] recommend that a newly developed instrument should explain 60% of the total variance.

Figure 1. A description of the initial 54-item version of the RCS, the item reduction and items included in factor 1 and factor 2 of the validated 28-item version of the RCS.
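As a rough illustration of the Kaiser criterion and of how explained variance relates to eigenvalues, the sketch below assumes a principal component analysis of standardized items, in which the eigenvalues sum to the number of items. The eigenvalues shown are hypothetical and do not come from the study, and the function names are illustrative.

```python
def kaiser_retained(eigenvalues):
    """Keep components whose eigenvalue exceeds 1.0 (Kaiser criterion)."""
    return [value for value in eigenvalues if value > 1.0]

def percent_variance(eigenvalue, n_items):
    """Share of total variance for one component of standardized items."""
    return 100.0 * eigenvalue / n_items

# Hypothetical eigenvalues for a six-item scale (they sum to 6.0).
eigenvalues = [2.7, 1.5, 0.8, 0.5, 0.3, 0.2]

retained = kaiser_retained(eigenvalues)
explained = sum(percent_variance(value, len(eigenvalues)) for value in retained)

print(len(retained), "components retained")
print(round(explained, 1), "% of total variance explained")
```

In this made-up example two components pass the eigenvalue cut-off and together exceed the 60% guideline cited above; a scree plot would be inspected alongside this count rather than replaced by it.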

2.3.4. Internal Consistency Reliability

The internal consistency reliability was established using Cronbach’s alpha coefficient [19,20,23]. With regard to developing a new instrument, the lowest value for Cronbach’s alpha coefficient was set at >0.70 [24].
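For readers unfamiliar with the coefficient, a minimal sketch of Cronbach’s alpha follows. It uses the standard formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), on hypothetical ratings; the data and the function name are assumptions for illustration, and the study itself used SPSS.

```python
from statistics import variance

def cronbach_alpha(item_columns):
    """Cronbach's alpha from a list of item columns (one list of scores per item)."""
    k = len(item_columns)
    # Total score per respondent: sum across items, row by row.
    totals = [sum(scores) for scores in zip(*item_columns)]
    item_variance_sum = sum(variance(column) for column in item_columns)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))

# Hypothetical ratings: three items answered by five respondents.
item_columns = [
    [2, 4, 6, 8, 9],
    [3, 5, 6, 9, 10],
    [2, 4, 7, 8, 10],
]

alpha = cronbach_alpha(item_columns)
print(round(alpha, 2), "acceptable" if alpha > 0.70 else "below the 0.70 cut-off")
```

The comparison against 0.70 reflects the minimum value set for a newly developed instrument in this study.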

2.3.5. Floor and Ceiling Effects and Missing Data

The proportion of floor and ceiling effects (people obtaining minimum and maximum scores respectively) among the items was also examined as were missing data [19].
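A minimal sketch of how floor effects, ceiling effects and missing data might be tabulated for a single item is given below. The scores are hypothetical, `None` is an assumed marker for an unanswered item, and the 15% threshold is the one referred to in the results of this paper.

```python
def floor_ceiling_share(scores, minimum=1, maximum=10):
    """Proportion of answered scores at the lowest and highest scale points."""
    answered = [score for score in scores if score is not None]
    floor = sum(1 for score in answered if score == minimum) / len(answered)
    ceiling = sum(1 for score in answered if score == maximum) / len(answered)
    return floor, ceiling

def missing_share(scores):
    """Proportion of respondents who left the item unanswered (None)."""
    return sum(1 for score in scores if score is None) / len(scores)

# Hypothetical answers to one item on the 1 - 10 valuation scale.
scores = [1, 3, 5, 7, 8, 9, 10, 10, None, 6]

floor, ceiling = floor_ceiling_share(scores)
THRESHOLD = 0.15  # threshold taken from the results section; an assumption here

print("floor effect:", floor > THRESHOLD)
print("ceiling effect:", ceiling > THRESHOLD)
print("item response rate:", 1 - missing_share(scores))
```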

2.4. Ethical Considerations

This study was conducted in accordance with the principles outlined in the Declaration of Helsinki [28] and according to The Ethical Guidelines for Nursing Research in the Nordic Countries [29], as well as to The Swedish Law for Ethical Approval for Research on Humans [30] and The Official Secrets Act [31]. Subjects were informed that participation was voluntary, that the data would be treated confidentially and that they could withdraw from the study at any time. It was impossible to associate any specific answer with a given participant. Completing the questionnaires implied informed consent.

3. RESULT

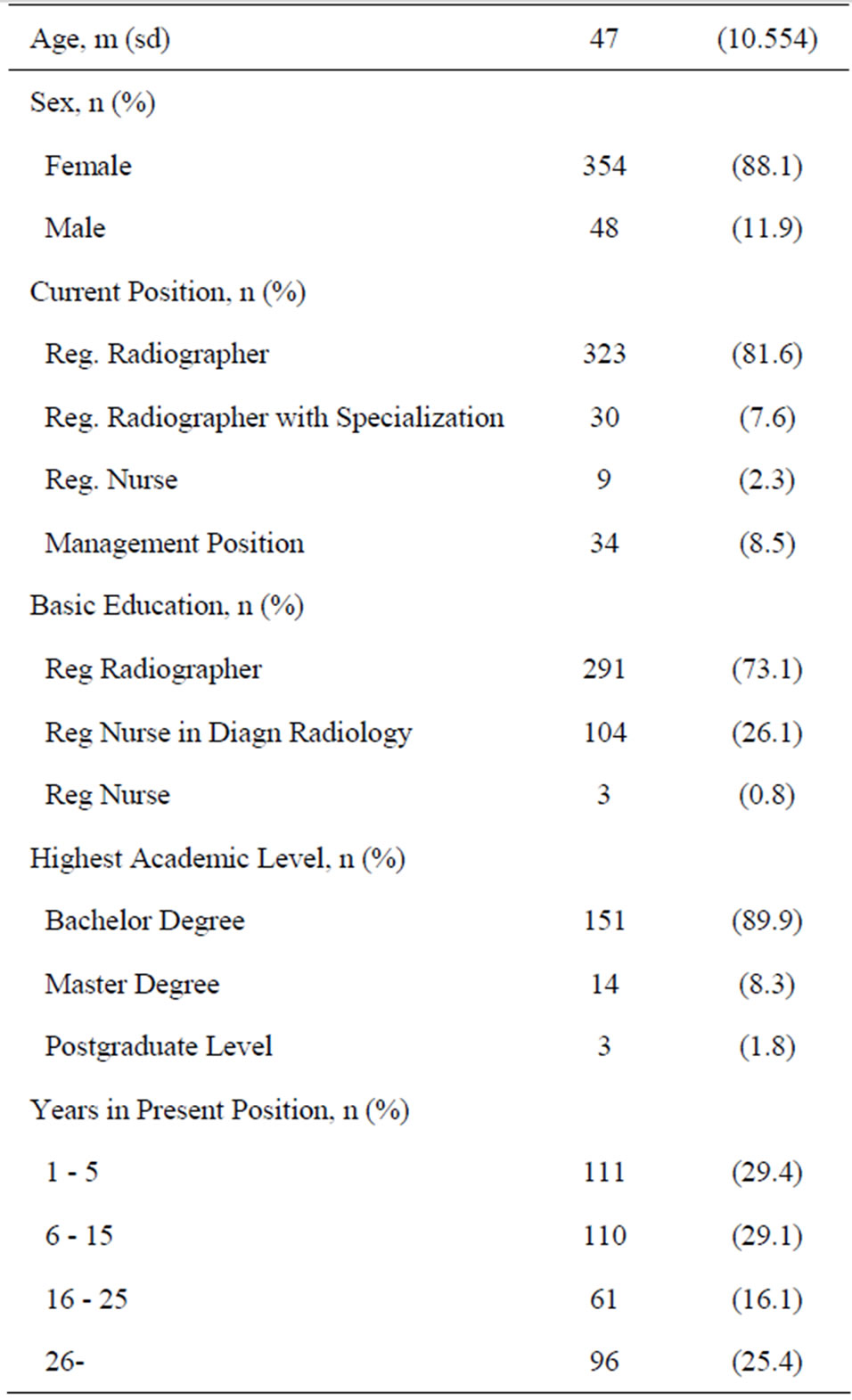

Valid questionnaires were obtained from 406 respondents with clinical experience in diagnostic radiology departments. The mean age of the participants was 47 years (SD 10.55; range 22 to 66 years), and 88% were women (Table 1).

3.1. Item Reduction of the RCS

In the first step, the use of corrected item-total correlation and Cronbach’s alpha if item deleted led to the removal of 12 items with low correlations (<0.5) from the 54-item scale. In the second step, several explorative factor analyses were performed on the remaining 42 items. Principal component analyses were conducted to obtain the solution with optimal scale variance. One item at a time was removed from the factor with the lowest loading which led to a further 14 items being removed.

Table 1. Characteristics of the sample (n = 406).

Figure 1 presents the 26 items that were removed from the eight competencies in the initial 54-item questionnaire.

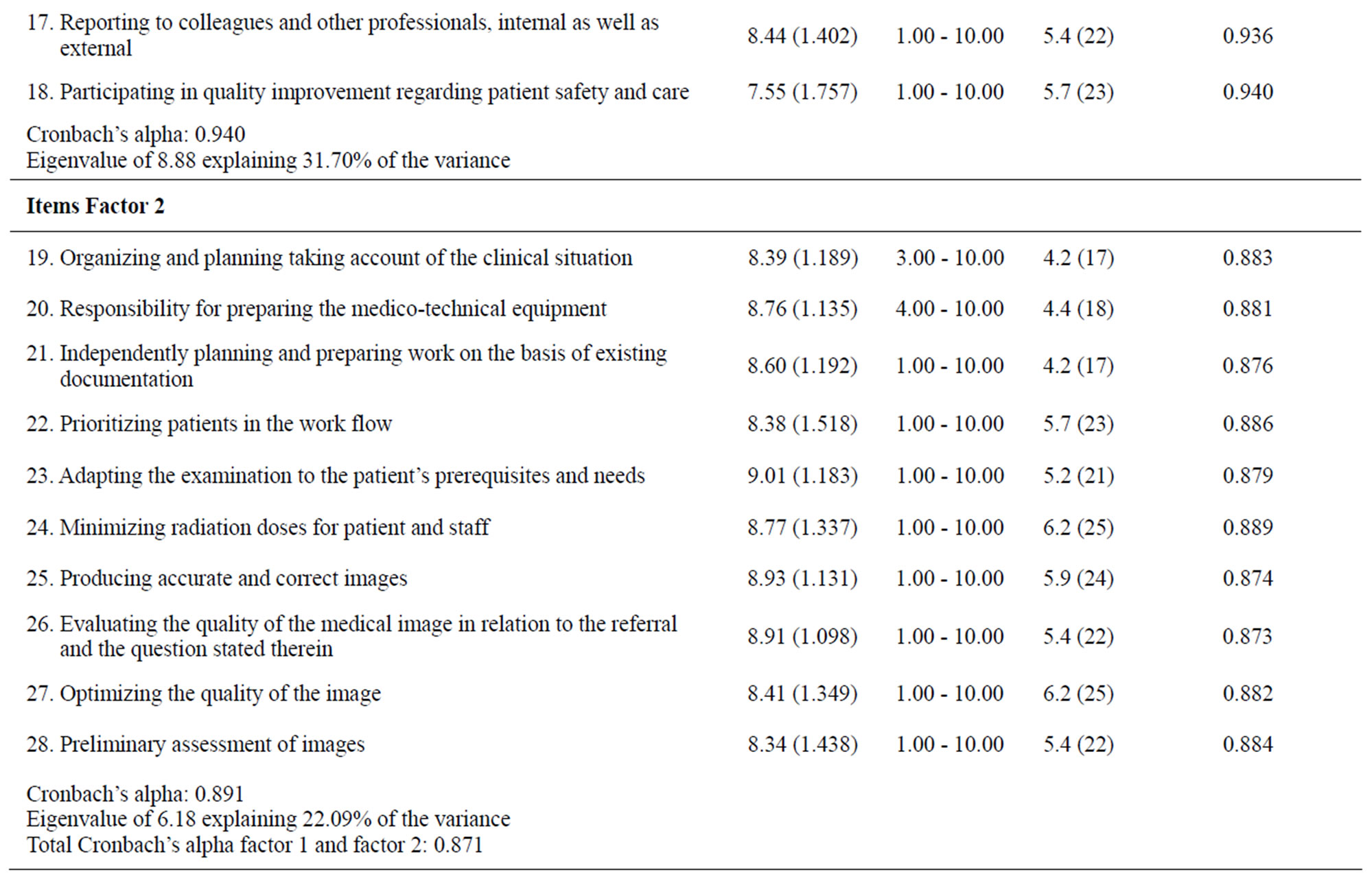

3.2. Validity of the RCS

A series of exploratory factor analyses was performed to investigate the complex interrelationships among the variables. As the factor structure decided by the Kaiser criteria was irrational (not demonstrated in detail here), the items were forced into a two factor solution. The items in the two identified factors had fairly good communality values of >0.40 with the exception of one; “optimizing of radiation doses to patient and personnel”, which had 0.34. There were 18 items in factor 1 and 10 items in factor 2 (Table 2). Factor 1 was labelled “Nurse initiated care” and factor 2 “Technical and radiographic processes”. The two factors appeared to be clearly defined and quite different from each other. The Scree plot supported the two-factor model, with two factors clearly above the “elbow” (Figure 2). The first factor explained 31.7% and the second 22.1% of the total variance. As indicated in Figure 1, the items in factor 1 had their origin in categories two, three, four, five, six and eight of the eight competencies. The items in factor 2 originated in categories one, two and seven. Only one category was found to belong to both factors 1 and 2.

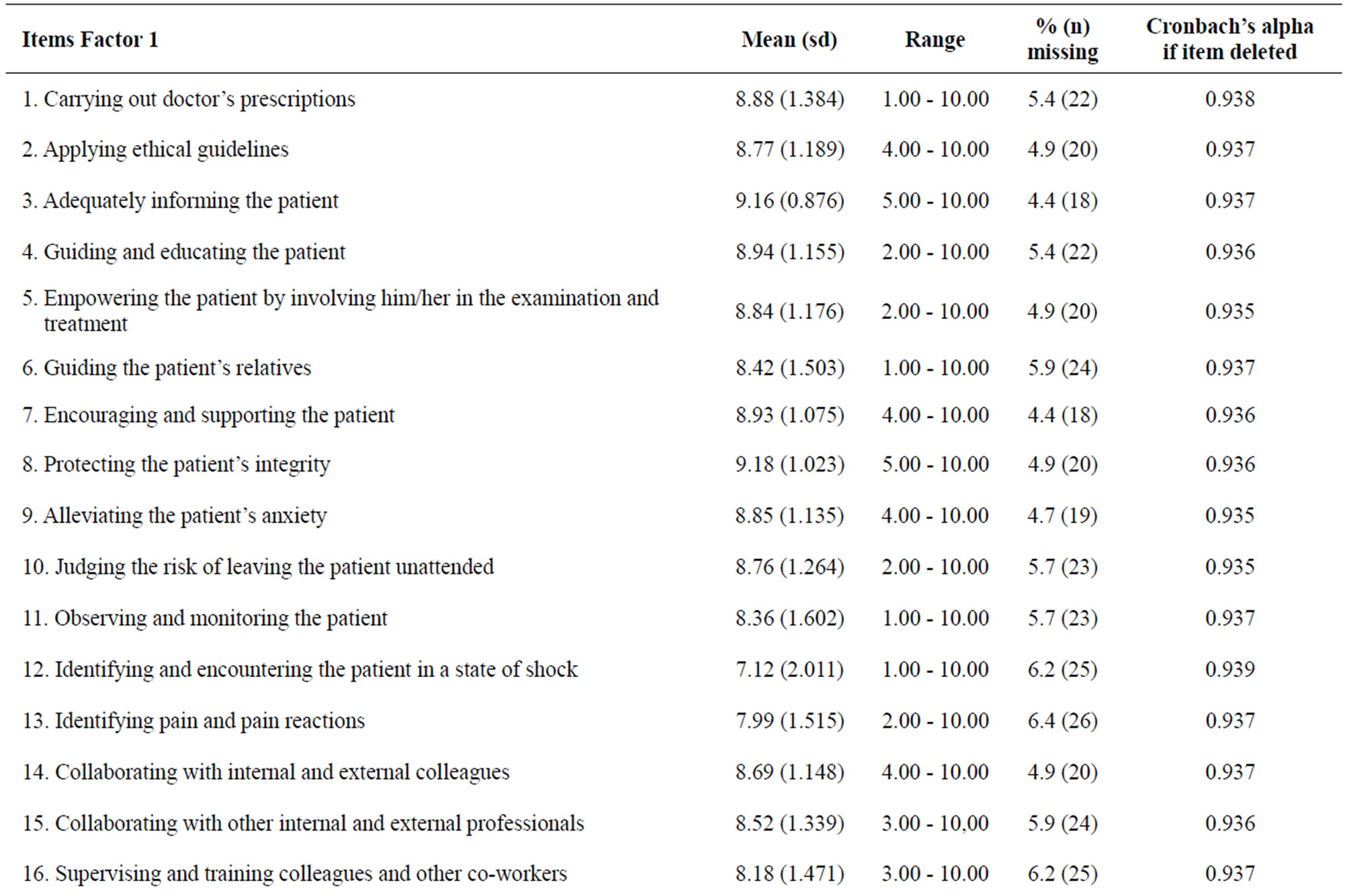

3.3. Reliability of the RCS

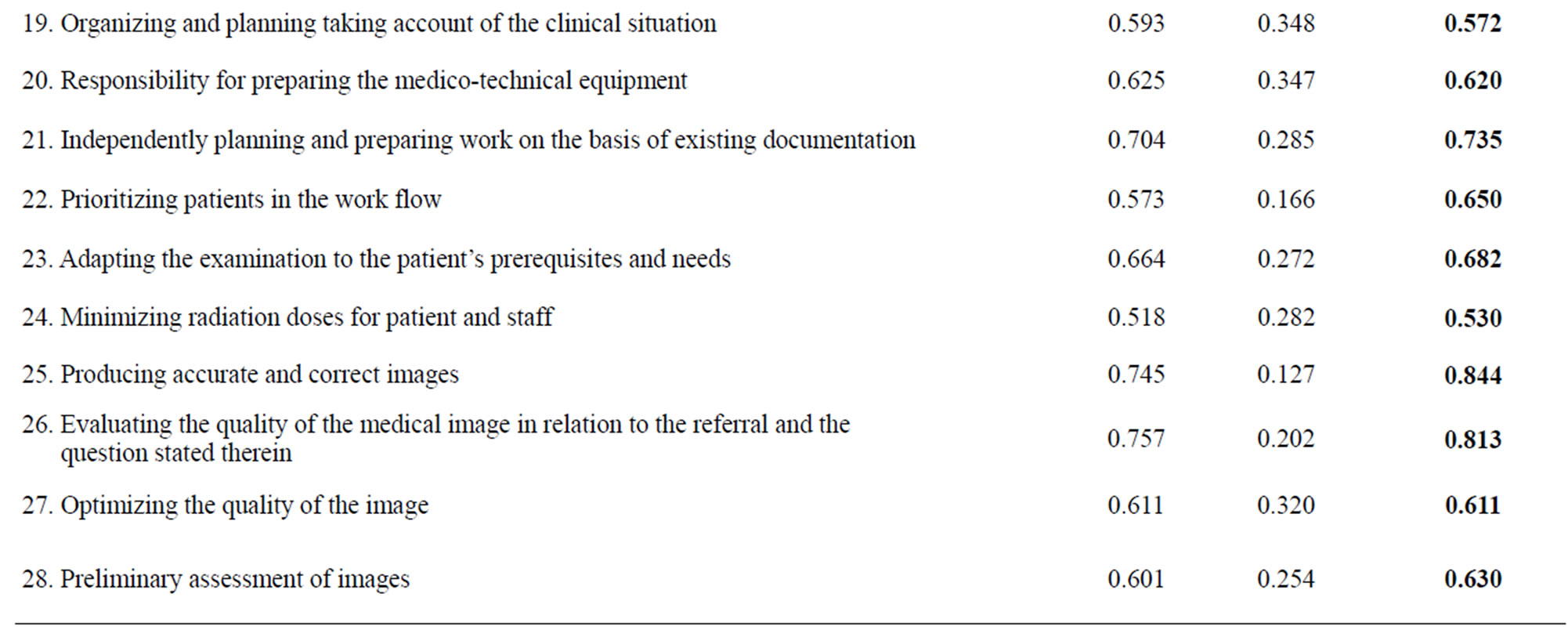

The Cronbach’s alpha coefficient was 0.87 for the 28-item scale. The coefficient for the first factor was 0.94 and for the second 0.89. The item-total correlations in the first factor varied between 0.54 and 0.77, and in the second between 0.52 and 0.76 (Table 3). The item with the lowest correlation in factor 1 was labelled “participating in quality improvement regarding patient safety and care”, while the item with the highest correlation was labelled “alleviating the patient’s anxiety”. In factor 2, the corrected item-total correlation varied from 0.52 to 0.78. The item with the lowest correlation was labelled “evaluating the quality of the medical image in relation to the referral and question stated therein”. The item with highest correlation was labelled “minimizing radiation doses for patient and staff” (Table 3).

Floor and Ceiling Effects as Well as Item Response Rates

As indicated in Table 3, the distribution of scores spanned the entire range (i.e., 1 - 10) with no floor and ceiling effect problems identified. The proportion of participants scoring at the floor or at the ceiling did not exceed 15% for any item. The response rate for each item in the 28-item instrument was high (range: 93.6% to 95.8%).

Table 2. Item-total correlation and factor loading. 2-factor solution. Factor 1 (number 1-18) and Factor 2 (number 19-28).

4. DISCUSSION

The purpose of this study was to develop and test the psychometric properties of a specific instrument, the RCS, aimed at measuring radiographers’ competence. There is, to the best of our knowledge, no specific and validated instrument for measuring such competence. By presenting a valid and reliable tool for measuring competence, this study contributes to the current knowledge base of radiographers’ competence. We found that the validated 28-item version of the RCS demonstrated satisfactory validity and reliability, suggesting that the instrument can be used to measure competence. Furthermore, the identified two factor solution appeared to capture the core of the radiographers’ competence in relation to the human being and the technology within the scope of a short encounter [16].

Table 3. Descriptive and reliability of items, 2-factor solution. Factor 1 (number 1-18) and Factor 2 (number 19-28).

Figure 2. A Scree plot with two factors above the “elbow”.

In the first phase of the study, face, content and construct validity were tested. Content validity is deemed the most important type of validity, as it ensures congruence between the research objective and the data collection tool [20]. The evidence of content validity in the pilot test of the RCS was based firstly on a study by Andersson et al. [16] and secondly on the judgments of 16 strategically selected specialists with experience in the diagnostic radiology field. Face validity was verified by assessing that the instrument really measured the intended concept [19,21]. Content relevance, clarity, concreteness, comprehensibility and readability of the scale were found to be adequate. The information obtained from the specialists was also used to modify items in the questionnaire. The final selection of items included in the web-based version of the RCS was based on agreement among the authors about the relevance of the items [21]. It is a strength to demonstrate that the theoretical construct behind the instrument is solid [25]. The instrument can thus be considered a fruitful symbiosis of an inductive and a deductive approach.

The second phase focused on reducing the initial 54-item RCS to the final 28-item version and involved two steps. Firstly, corrected item-total correlation and Cronbach’s alpha if item deleted reduced the number of items to 42. Low values indicate low discrimination ability for the specific item [24]. An acceptable level of item-total correlation for a newly developed instrument is >0.3, but >0.4 is preferable for an established instrument [20]. Twelve items had an item-total correlation of <0.5. One item labelled “issuing written reports on one’s own medical imaging” was removed because it had the lowest corrected item-total correlation (0.26). This may indicate that Swedish radiographers do not yet make their own reports as, for example, radiographers in the UK do [32,33]. Four items regarding involvement in research and development work were also removed from the scale due to having correlations of <0.50. The reason for this may be that research is not as important in the radiographers’ daily work as efficiency (i.e., the number of examinations). On the other hand, developments in diagnostic radiology have opened up additional areas for research and the corresponding need to implement the research and development findings in clinical practice [34]. Rather surprisingly, another two items dealing with medical technical procedures also had low correlations. As both these competencies are frequently used in the radiographers’ clinical work, the low correlations are difficult to explain.

In the second step of the process of reducing the items from the 42 to the final 28-item scale, an explorative factor analysis was used. Several factor solutions were examined and finally a two-factor solution was chosen, which presented two factors clearly above the elbow [27]. When scrutinizing the factor analysis procedure it is recommended that the number of participants should be considered in relation to the number of items in order to conduct a correct explorative factor analysis [19,20]. However, there are large differences in recommendations of an acceptable sample size when performing a factor analysis [25,35]. Some authors recommend five respondents per item [36] and others ten [24]. In our study, the recommended ratio of 5 respondents per item implied a sample of at least 270, which was more than fulfilled by our sample of 406. Furthermore, the Kaiser-Meyer-Olkin measures also indicated that our sample was adequate for a factor analysis, as values of >0.60 have been suggested as the minimum for a satisfactory factor analysis [36]. Low factor loadings indicate that an item measures something unrelated to the scale as a whole [19]. Cattell’s scree test was also used to control the number of tentative factors to be retained [27]. It is recommended to retain all factors above the elbow or break in the plot, as these factors contribute most to the explanation of the variance in the data [37]. We found that the factor analysis explained almost 54% of the total variance (i.e., 31.7% on factor 1 and 22.1% on factor 2), which can be considered relatively low in relation to the desired 60%. However, factor analytical procedures are based on both statistical procedures and theoretical assumptions [25]. The results (i.e., the construct validity) might therefore be deemed acceptable, since the two factors included items that were sound from a theoretical perspective [16].
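The respondents-per-item reasoning above amounts to simple arithmetic, sketched below for the two ratios mentioned (five and ten per item) and for both the initial 54-item and the final 28-item versions. The function name is illustrative; the item counts and the sample size of 406 are taken from the text.

```python
def required_sample(n_items, respondents_per_item):
    """Minimum sample size implied by a respondents-per-item rule of thumb."""
    return n_items * respondents_per_item

n_respondents = 406  # sample size reported in the study

checks = {}
for n_items in (54, 28):
    for ratio in (5, 10):
        needed = required_sample(n_items, ratio)
        checks[(n_items, ratio)] = (needed, n_respondents >= needed)
        print(f"{n_items} items, {ratio} per item: need {needed}, "
              f"{'met' if n_respondents >= needed else 'not met'}")
```

With 54 items the five-per-item rule gives the 270 respondents stated above, whereas the stricter ten-per-item rule would call for 540; for the final 28-item version both rules are satisfied by 406 respondents.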

Reliability can be assessed in terms of internal consistency which refers to the homogeneity of the instrument [19]. In well-established questionnaires, alpha is recommended to be >0.80 [35]. In this study >0.70 was used since RCS is a newly developed instrument. The results of the item analysis [24] were found to have good internal consistency reliability. The RCS as a whole had satisfactory internal consistency reliability. Cronbach’s alpha was considered high for the 28-item questionnaire, as well as for each of the two factors. Furthermore, no major problems were identified with floor and ceiling effects. The distribution of scores ranged over the whole span (i.e., 1 - 10) for all of the items.

No other specific instruments were identified that allow comparisons, but when comparing the RCS with the NCS [8] and a previous competence scale by Benner [7], the content of the first RCS factor, “Nurse initiated care”, can be compared with items in five of seven categories of these other two scales. The content in the second RCS factor, “Technical and radiographic processes”, can be related to items in categories described by both Meretoja et al. [8] and Benner [7]: therapeutic interventions, ensuring quality and work role. However, the unique focus of the RCS is on competence in a specific high technology setting, quite different from a medical or surgery ward, and the content of the items in the second factor reflects the fundamental elements of radiography. Another important aspect is the number of items included in a scale. The NCS includes 73 [8] and the CIRN 58 items [9]. It is crucial to have a simple, uncomplicated questionnaire, since many instruments are too time-consuming to administer in clinical practice [19]. Compared to other competence scales, the RCS seems to be a simple tool for use in clinical practice.

The use of a self-assessment tool allows radiographers to consider their clinical work and can contribute to a baseline for evaluation. The RCS could be used in areas such as management, patient safety and quality improvements to assist managers and administrators when planning and evaluating competence development related to radiographers’ clinical work situation. Moreover, the RCS could also be valuable for radiographers to reflect upon their own competence, role and possibilities for development. Competence assessment may be seen as a rewarding process as it provides information about less visible matters. The information obtained may be a help in the nursing process to safeguard or restore patients’ health.

There are some limitations to this study that need to be considered. One is the response rate of 40.6%. Polit and Beck [38] hold a response rate of 50% to be satisfactory. As the study employed anonymous return that implied consent, it was not possible to identify those who did not participate. One reason for the high drop-out could be that the SAHP membership register may not contain up-to-date private e-mail addresses of members and many employees are not allowed to answer private e-mails during working hours. Another might be the length of the original RCS, as the web-based 54-item version could be perceived as too comprehensive and time-consuming. Due to the low response rate, we distributed the questionnaire twice. However, most important is the number of respondents to each item and not the total number of respondents in this study. The response rate for each item was very high in the 28-item instrument. Furthermore, analysis of criterion-related validity was not possible due to the fact that no gold standard instrument could be identified. Moreover, no test-retest analysis was conducted, which could have strengthened reliability and revealed whether the RCS is valid in other limited samples (e.g., among radiographers working in an interventional radiology or an emergency radiology department).

In conclusion, the newly constructed RCS is a short, valid and reliable scale that can be easily administered to capture the competence of radiographers. Forthcoming studies will conduct further tests of the psychometric properties of the RCS among radiographers in different clinical settings in Sweden. The RCS might have the potential for use in comparative studies in various work environments as well as in different countries. Furthermore, longitudinal studies where changes in competencies can be measured over time are also needed as they lead to the development of radiography education and the content of the curriculum.

5. ACKNOWLEDGEMENTS

Our sincere thanks to the radiographers who participated in this study during the various phases of instrument development and testing for sharing their knowledge and competence related experiences and values. We would also like to express our thanks to Ulf Jakobsson, Associate Professor, nurse and statistician, for his contribution to the instrument development, to the Swedish Association of Health Professionals for supporting the study with the distribution of the questionnaire and Lund University for support and facilities.

NOTES

*Corresponding author.