Open Journal of Statistics

Vol.05 No.04(2015), Article ID:57440,5 pages

10.4236/ojs.2015.54034

On the Approximation of Maximum Deviation Spline Estimation of the Probability Density Gaussian Process

Mukhammadjon S. Muminov1*, Kholiqjon S. Soatov2

1Institute of Mathematics, National University of Uzbekistan, Tashkent, Uzbekistan

2Tashkent University of Information Technologies, Tashkent, Uzbekistan

Email: *m.muhammad@rambler.ru

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 23 March 2015; accepted 22 June 2015; published 26 June 2015

ABSTRACT

In this paper, the deviation of the spline estimator of an unknown probability density is approximated by a Gaussian process. The zeros of the infimum of the variance of the derivative of the approximating process are also found.

Keywords:

Spline-Estimator, Distribution Function, Gauss Process

1. Introduction

The present work is a continuation of [1], so we use the notation adopted there. We do not give a detailed review of the literature, because one is given in [1].

Let  be a simple sample from the parent population with the probability density  concentrated and continuous on the segment . Let  be a cubic spline interpolating values  at the points ,

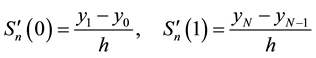

,  with the boundary conditions

where ,  ,  ,  as .
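The formulas defining the estimator did not survive in this copy, so the following is only a minimal sketch of the general idea of a spline density estimate, not the authors' exact construction. It assumes that the cubic spline interpolates the empirical distribution function at equally spaced knots on the segment, that natural boundary conditions (zero second derivative at both ends) are used in place of the paper's boundary conditions, and that the density estimate is the derivative of the spline. All function names (`empirical_cdf`, `natural_cubic_spline`, `spline_density_estimate`, `max_deviation`) are hypothetical, and the Beta(2, 2) density is an arbitrary test case.

```python
import bisect
import random


def empirical_cdf(sample):
    """Return the empirical distribution function of the sample."""
    data = sorted(sample)
    n = len(data)
    return lambda x: bisect.bisect_right(data, x) / n


def natural_cubic_spline(xs, ys):
    """Second derivatives M_k of the natural cubic spline through (xs, ys).

    Assumes equally spaced knots and M_0 = M_N = 0 (an assumption of this
    sketch; the paper's own boundary conditions are not reproduced here).
    """
    n = len(xs) - 1
    h = xs[1] - xs[0]
    # Interior equations: M_{k-1} + 4 M_k + M_{k+1} = 6 * (second difference) / h^2
    rhs = [6.0 * (ys[k - 1] - 2.0 * ys[k] + ys[k + 1]) / h ** 2 for k in range(1, n)]
    diag = [4.0] * (n - 1)
    # Thomas algorithm: forward elimination ...
    for i in range(1, n - 1):
        w = 1.0 / diag[i - 1]
        diag[i] -= w
        rhs[i] -= w * rhs[i - 1]
    # ... followed by back substitution.
    inner = [0.0] * (n - 1)
    for i in range(n - 2, -1, -1):
        upper = inner[i + 1] if i + 1 < n - 1 else 0.0
        inner[i] = (rhs[i] - upper) / diag[i]
    return [0.0] + inner + [0.0]


def spline_density_estimate(sample, a, b, num_intervals):
    """Derivative of the spline interpolating the empirical CDF on [a, b]."""
    xs = [a + k * (b - a) / num_intervals for k in range(num_intervals + 1)]
    cdf = empirical_cdf(sample)
    ys = [cdf(x) for x in xs]
    m = natural_cubic_spline(xs, ys)
    h = xs[1] - xs[0]

    def density(x):
        # Locate the knot interval [xs[k], xs[k + 1]] containing x.
        k = min(max(int((x - a) / h), 0), num_intervals - 1)
        left, right = x - xs[k], xs[k + 1] - x
        return ((-m[k] * right ** 2 + m[k + 1] * left ** 2) / (2.0 * h)
                + (ys[k + 1] - ys[k]) / h
                - (m[k + 1] - m[k]) * h / 6.0)

    return density


def max_deviation(estimate, true_density, a, b, grid_size=1000):
    """Maximum absolute deviation between estimate and density on a grid."""
    points = [a + i * (b - a) / grid_size for i in range(grid_size + 1)]
    return max(abs(estimate(x) - true_density(x)) for x in points)


# Illustration on a Beta(2, 2) sample, whose density 6x(1 - x) is
# continuous and concentrated on [0, 1].
random.seed(0)
sample = [random.betavariate(2, 2) for _ in range(2000)]
estimate = spline_density_estimate(sample, 0.0, 1.0, num_intervals=10)
deviation = max_deviation(estimate, lambda x: 6.0 * x * (1.0 - x), 0.0, 1.0)
print("maximum deviation on the grid:", deviation)
```

The quantity returned by `max_deviation` is a grid approximation of the maximum deviation whose limit behaviour is the subject of the theorems that follow. The number of knot intervals plays the role of a smoothing parameter, so in practice it would be chosen as a function of the sample size.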

Recall that

,

,

,

,

,

,

where

of Wiener processes.

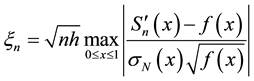

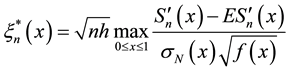

Denote by

and by

where

In the second section of this work, Theorems 2 and 3 are proved:

and

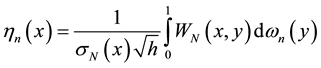

It is also established (Theorem 5) that

2. Formulation and Proof of the Main Results

The following statement holds.

Theorem 1. Let

The proof of this statement is straightforward, so we omit it.

Theorems 2 and 3 will be proved by the methods given in [2].

Theorem 2. Let

Then under our assumptions a) and b) concerning

Proof. By the main theorem of [1],

and for any

Set

Theorem 2 now follows from Theorem 1, relations (2) of [1], inequalities (3) and (4), and the fact that the random variables

Theorem 3. If the conditions of Theorem 2 hold and

where

Proof. From the interpolation condition

we have

It is easy to see that

at the interpolation points

where

Relation (5) implies that for arbitrary

It remains to choose

Relations

Theorem 4. The first-order mean-square derivatives of the Gaussian process

Let now

Theorem 5. 1) The variance of the mean-square derivative of the Gaussian process

2) If the variance also vanishes in the intervals

Proof. We begin as in [2]. Let

we get for

Substituting into (6)

and taking into account that

or

We find analogously

and also

Generalizing the obtained results, we have

Denote

implies

On the other hand,

where

Obviously,

i.e. at

The first part of Theorem 5 is proved.

We now pass to the proof of the second part. Both in the case of

is valid for

The explicit form of

Note, in this case

One can easily see that

The second part of Theorem 5 is proved.

Finally, Theorems 2 and 3 imply that the limit distributions of the random variables

coincide. However, the Gaussian process

interpolation points for the spline, and

to investigate the distribution of the maximum of

References

- Muminov, M.S. and Soatov, Kh. (2011) A Note on Spline Estimator of Unknown Probability Density Function. Open Journal of Statistics, 157-160. http://dx.doi.org/10.4236/ojs.2011.13019

- Khashimov, Sh.A. and Muminov, M.S. (1987) The Limit Distribution of the Maximal Deviation of a Spline Estimate of a Probability Density. Journal of Mathematical Sciences, 38, 2411-2421.

- Stechkin, S.B. and Subbotin, Yu.N. (1976) Splines in Computational Mathematics. Nauka, Moscow, 272 p.

- Hardy, G.H., Littlewood, J.E. and Pólya, G. (1948) Inequalities. IL, Moscow, 456 p. http://dx.doi.org/10.1007/BF01095085

- Berman, S.M. (1974) Sojourns and Extremes of Gaussian Processes. Annals of Probability, 2, 999-1026. http://dx.doi.org/10.1214/aop/1176996495

- Rudzkis, R.O. (1985) Probability of the Large Outlier of Nonstationary Gaussian Process. Lit. Math. Sb., XXV, 143-154.

- Azais, J.-M. and Wschebor, M. (2009) Level Sets and Extrema of Random Processes and Fields. Wiley, Hoboken, 290 p.

- Muminov, M.S. (2010) On Approximation of the Probability of the Large Outlier of Nonstationary Gauss Process. Siberian Mathematical Journal, 51, 144-161. http://dx.doi.org/10.1007/s11202-010-0015-6

NOTES

*Corresponding author.