Intelligent Information Management

Vol.2 No.10(2010), Article ID:2957,14 pages DOI:10.4236/iim.2010.210066

Adaptive System for Assigning Reliable Students’ Letter Grades

—A Computer Code

Department of Civil Engineering, College of Engineering Sciences

King Fahd University of Petroleum & Minerals, Dhahran, Kingdom of Saudi Arabia

E-mail: saghamdi@kfupm.edu.sa

Received March 19, 2010; revised June 10, 2010; accepted August 12, 2010

Keywords: Reliable Students’ Grades; Computer Code; Assigning Reliable Letter Grades

Abstract

The availability of automated evaluation methodologies that may reliably be used for determining students’ scholastic performance through assigning letter grades is of utmost practical importance to educators and students, and is of pivotal value to all stakeholders of the academic process. In particular, educators use letter grades as quantification metrics to monitor students’ intellectual progress within a framework of clearly specified learning objectives of a course. To students, grades may be used as predictive measures and motivating drives for success in a field of study. However, because numerous objective and subjective variables may be accounted for in a methodological process of assigning students’ grades, and since such a process is often tainted with personal philosophy and human psychology factors, it is essential that educators exercise extra care in maximizing the positive account of all objective factors and minimizing the negative ramifications of subjectively fuzzy factors. To this end, and in an attempt to make assigning students’ grades more reliable for assessing the true level of mastery of specified learning outcomes, this paper will: i) provide a review of previous works on the most common methods traditionally used for assigning students’ grades, with a short account of the virtues and/or vices of such methods, and ii) present a user-friendly computer code that may be easily adapted for the purpose of assigning students’ grades. This would relieve educators from the overwhelming concerns associated with the mechanistic aspects of determining educational metrics, and would allow them more time and focus to obtain reliable assessments of the true level of students’ mastery of learning outcomes by accounting for all possible evaluation components.

1. Introduction

Educators are entrusted to provide their best judgments on students’ intellectual progress and achievements within a specified construct of learning objectives and outcomes for a particular course. Such judgments are, however, more often than not tainted with personal philosophy and human psychology factors. Despite the numerous educational instruments (including homework assignments, quizzes, examinations, projects, etc.) that influence such judgments, they are ultimately reduced to assigning a letter grade that should have a high degree of reliability and must always be defensible in the event of filed grievances [1-3].

The use of grades as quantification metrics [4-6] to monitor students’ intellectual progress has traditionally taken one of three forms: 1) criterion grading, 2) normative grading, or 3) rubric grading. The most common methods, and some of the less common methods, of assigning students’ grades have been previously presented and discussed in the literature [7,8]. The various goals that assigning grades is expected to achieve (as a means for reward or penalty, for communication to others, or for prediction of future performance), and the reliability of these assignments in achieving the designated goals, have also been discussed by numerous other researchers [9-11].

The criterion-referenced grading process [12] is based on a preset grade-range criterion (as a percentage of the total possible points) assigned to each letter grade (e.g. a percentage score range of 76% to 79% may be assigned to the letter grade C+). The method compares the performance of a student against preset criteria and is highly dependent on the designated learning outcomes for a course. It is considered a more precise diagnostic tool for the faculty (educator) to pinpoint to students particular strengths and weaknesses. This method may, however, lead to: 1) grade-assignment biases towards the upper end or the lower end of a grade-scale, and 2) improved collaboration amongst students, as grades assigned for a given course would not be influenced by the individual performance of others in a students’ group. The method is sometimes referred to as a domain or mastery referencing procedure to assign grades to students.
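The preset-range idea can be illustrated with a minimal Python sketch (Python is used here only for illustration; it is not the FORTRAN code described in this paper). Only the C+ band of 76% to 79% comes from the text above; every other lower bound in `CRITERION_BANDS`, and the function name `criterion_grade`, are hypothetical choices made for the example.

```python
# Lower bounds (percent of total possible points) for each passing grade.
# Only the C+ band (76% up to the B bound) reflects the text; the other
# values are assumptions made for this illustration.
CRITERION_BANDS = [
    (95.0, "A+"),
    (90.0, "A"),
    (85.0, "B+"),
    (80.0, "B"),
    (76.0, "C+"),
    (70.0, "C"),
    (65.0, "D+"),
    (60.0, "D"),
]


def criterion_grade(percent):
    """Return the first letter grade whose preset lower bound the score reaches."""
    for lower_bound, letter in CRITERION_BANDS:
        if percent >= lower_bound:
            return letter
    return "F"  # below every passing bound


if __name__ == "__main__":
    for score in (97.5, 78.0, 59.9):
        print(score, criterion_grade(score))
```

Because the bounds are fixed before any score is seen, the grade of one student does not depend on the scores of the others, which is the property the paragraph above attributes to criterion referencing.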

The norm-referenced grading process [13] is based on the premise that students’ performances in a course follow a bell-shaped distribution that emulates a similar distribution of the learning outcomes designated for the course, and descriptive statistics are associated with this norm-based grading procedure. The statistical distribution of grades is centered on the mean score, and the standard deviation is used as an index of the dispersion of scores around the mean score [14]. The method compares the performance of a student against the performance of other classmates and is highly dependent on the course content, but it is considered a less precise diagnostic indicator for pinpointing to a student (learner) the particular weaknesses on which the student must concentrate to improve standing in a course. This method may further lead to: 1) a wider spread of grade assignments with less bias towards the upper end or the lower end of a grade-scale, and 2) an increased level of competition amongst students, as grades assigned to one student in a course would be influenced by the individual performance of others in a students’ group. The method is sometimes referred to as a norm or comparative referencing procedure to assign grades to students.
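The dependence of a norm-referenced result on the rest of the class can be seen in a short Python sketch (again an illustration only, not the paper's code). It expresses each score as a distance from the class mean in units of the standard deviation. The function name `standard_scores` is hypothetical, and the use of the population standard deviation (divisor N) is an assumption.

```python
from statistics import mean, pstdev


def standard_scores(scores):
    """Return each score's distance from the class mean, in standard deviations."""
    centre = mean(scores)
    spread = pstdev(scores)  # population form (divisor N): an assumption
    if spread == 0:
        # All scores are identical, so nobody is above or below the mean.
        return [0.0 for _ in scores]
    return [(score - centre) / spread for score in scores]


if __name__ == "__main__":
    class_a = [50, 60, 70]  # made-up scores
    class_b = [70, 80, 90]  # made-up scores
    print(standard_scores(class_a))
    print(standard_scores(class_b))
```

In this made-up data a score of 70 lies about 1.22 standard deviations above the mean of the first class and the same distance below the mean of the second, so the same raw score would be ranked very differently in the two groups.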

The rubric-referenced (generic) grading process [15] is used less often in assigning students’ grades and is based on values assigned to descriptive-scale indicators. The descriptive indicators for the letter grades (namely: the passing letter grades A+, A, B+, B, C+, C, D+, D, or the failing letter grade F) are clearly spelled out, with values attached to them that best suit the level of performance demonstrated by a student for the specified major and minor goals of a course. For instance, an achievement of 75 points out of a total possible 100 points may be assigned a letter grade C, indicating that “most major goals and minor goals of the course stated learning outcomes have been truly achieved”, while an achievement of 15 points out of a total possible 100 points may be assigned a letter grade F, indicating that “few goals of the course stated learning outcomes have been barely achieved”, etc.

2. Grading Systems Attributes, Anchoring, and Automation

For educators it is widely agreed that no single method of evaluation (i.e. assigning students’ grades) proves effective in all course formats, and a method of evaluating a student’s performance should be adapted to fit the course learning objectives and expected outcomes [16,17]. It is, however, expected that once a method of assigning students’ grades is selected and judged most suitable for the purpose, it should be characterized by key functional attributes including: 1) face and content validities, and 2) reliability and realistic expectations. To these ends, face validity, on one hand, requires that a grading procedure have clear and suitable metrics to measure the degree of relevance of the evaluation process to the course objectives, and such relevance must be transparent to the students as well. Content validity, on the other hand, should provide suitable analysis (or design) case-studies so that the method of evaluation conforms to the course objectives. An evaluation method (for assigning students’ grades) will further be termed reliable if it invariably produces, with little variation, the same results (students’ grades) for the same students. But since it is well known by educators (and students, for that matter) that under general circumstances a grade assigned to a student is not an absolute measure of performance and true level of achievement in a course, and is often an artifact of the educator and/or the competencies of the classmates enrolled in the course (e.g. for norm-referenced grading), every effort should be exercised to account for all evaluation factors in an objective format that endeavors to exclude subjective factors, so that the grades assigned are a true measure of students’ achievements.
The reliability of an evaluation procedure (to assign students’ grades) is also constrained not only by the realistic expectations of the tools used for the evaluation but also by the individual being evaluated. The realistic expectations would define the number and type of evaluation parameters that may be developed and administered by the educator within a most-suitable time-frame for a course, and should provide suitable considerations to the fact that a student is also enrolled in other courses.

Analysis of the two most commonly used grading systems (namely: the normative and criterion procedures) further indicates that while norm-referencing may be the most suitable procedure for assigning students’ grades, it requires using the unsatisfactory method of grading on a class-curve [15,18,19]. Therefore, to overcome the vices of either of the two grading systems, it has been recommended [20] to use various anchor measures that enable utilizing the virtues of norm-based grading without introducing the vices of class-curve-based grading.

It is therefore conspicuous that, due to the numerous variables that may enter into a process of evaluating students through suitably selected quantification metrics (using norm-referencing or domain-criteria-referencing), this process may invariably suffer from a reliability problem [2,21-24]. This may further result in unintended negative ramifications on the learning-educational process and may even set the whole evaluative process in a true reduced-reliability dilemma. This process is, therefore, often categorized by academicians as one of the least favorable and most daunting of the myriad activities undertaken by an educator. As such, there is a real need for an educator to account for all possible parameters that would influence the evaluation process and to properly weigh all relevant factors to increase the reliability of the procedure [14]. To this end, the literature includes scores of disparate previous attempts at automating the mechanistic aspects of the grading assignment process [25,26]. The development and adoption of an automated grading system would therefore be a relief for educators from the drudgery of the numeric processing that is typical of the grading-assignment process for medium-to-large classes or for multi-section courses with unified grading standards. It is believed that if the mechanistic aspects of the evaluative process are included within an automated and user-friendly procedure, educators’ efforts would be more meaningfully focused on ensuring more reliable evaluation of students’ mastery of the designated learning outcomes in a given course. The following sections of this paper present an adaptive [27] automated grading system designed as a FORTRAN computer code.
The code has been developed to automate the mechanistic aspects of the evaluative process, and it has been successfully tested and proven to be a reliable and practical tool for assigning students’ letter grades.

3. The Computer Code Taxonomy and Attributes

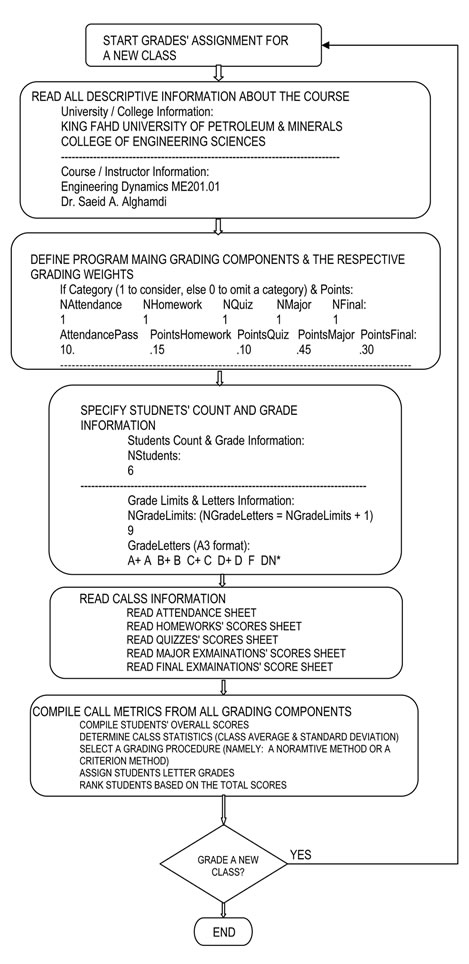

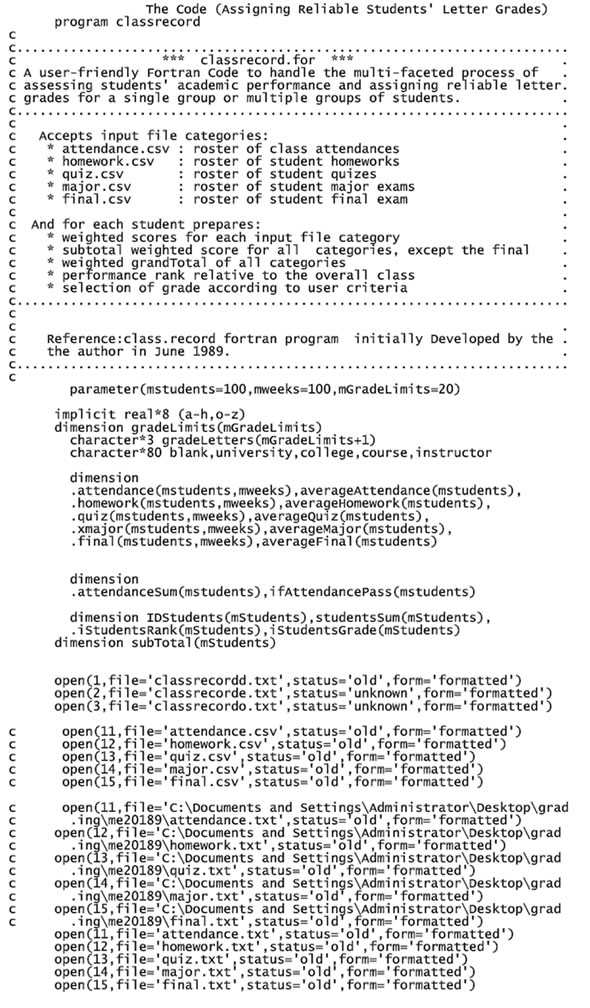

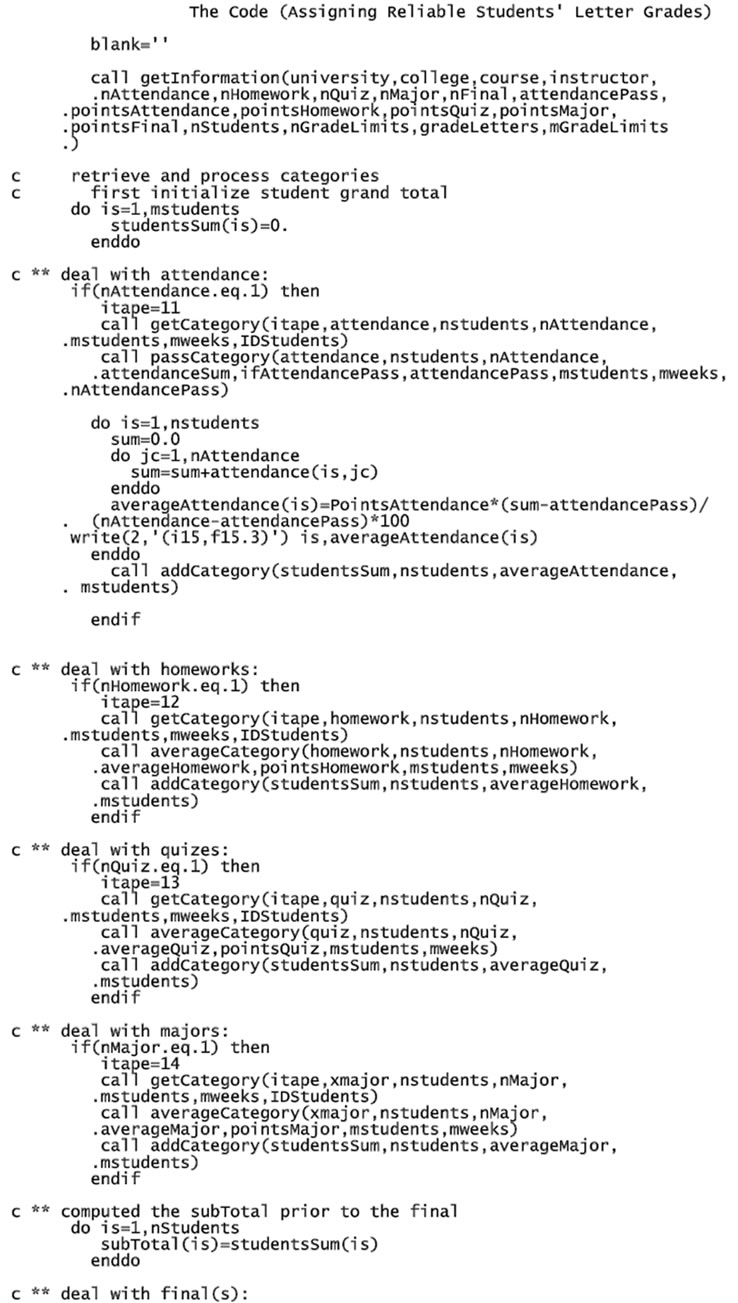

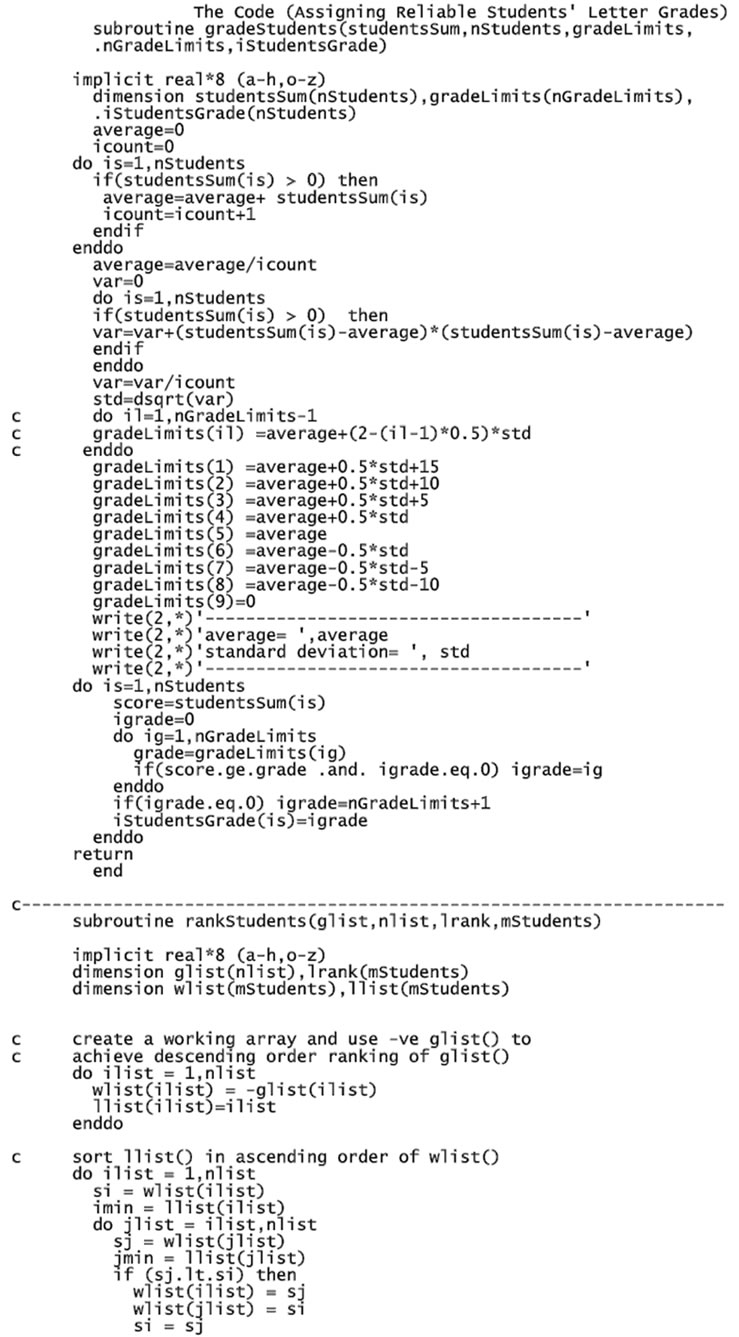

Based on the premise that an evaluative process (e.g. assigning students’ grades) would be far more reliable if the mechanistic aspects of determining evaluation metrics are automated, an algorithmic process for assigning letter grades to students has been automated through the development of a FORTRAN computer code “classrecord.for”. The overall taxonomy of the coding process is summarized in the flowchart shown in Figure 1. The code has several user-friendly features that make it easily adaptable to either a normative-referencing procedure or a domain-referencing procedure. A complete listing of the code is given in Appendix I-a, and further clarifying details of the code structure are available elsewhere [28]. Specifically, the coding procedure presented herein would enable the educator to:

1) Devise several input file categories that may include rosters for class attendance, students’ homework, students’ quizzes, students’ scores on major examinations, and students’ scores on the final examination (namely: attendance.csv; homework.csv; quiz.csv; major.csv; and final.csv, respectively).

2) Compute the evaluative metrics for a course by preparing weighted scores for each input file category entered for each student, computing pre-final (sub-total) weighted scores for all input categories, and determining weighted grand-total scores for all input categories.

3) Use the grand-total scores (course metrics), obtained from all input categories, to assign students’ grades by adapting the computer code to use a normative-referencing procedure or a domain-referencing procedure.
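Step 2 above can be sketched in a few lines of Python (an illustration only; the actual FORTRAN listing is in Appendix I-a). The category names follow the input files named in step 1, but the weights in `WEIGHTS`, the function name `weighted_scores`, and the sample points are all hypothetical. For simplicity the sketch works on in-memory values instead of reading the CSV files, whose layout is not specified here.

```python
# Hypothetical category weights (percent of the course grade); they sum to 100.
WEIGHTS = {"attendance": 5, "homework": 10, "quiz": 15, "major": 40, "final": 30}


def weighted_scores(earned, maximum, weights=WEIGHTS):
    """Return per-category weighted scores, the pre-final sub-total, and the grand total."""
    per_category = {
        category: weight * earned[category] / maximum[category]
        for category, weight in weights.items()
    }
    # Pre-final sub-total: every category except the final examination.
    pre_final = sum(v for c, v in per_category.items() if c != "final")
    grand_total = pre_final + per_category["final"]
    return per_category, pre_final, grand_total


# One student's raw points and the maximum points per category (made-up values).
earned = {"attendance": 12, "homework": 80, "quiz": 45, "major": 150, "final": 70}
maximum = {"attendance": 15, "homework": 100, "quiz": 60, "major": 200, "final": 100}

per_category, pre_final, grand_total = weighted_scores(earned, maximum)
print(per_category)
print(f"pre-final: {pre_final:.2f}, grand total: {grand_total:.2f}")
```

With these made-up values the pre-final sub-total is 53.25 and the grand total is 74.25 out of 100. The grand total is the course metric that step 3 would pass to the grade-assignment procedure.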

Compared to the method of using an ad hoc spread-sheet calculation, which invariably requires frequent redesigns of the spread-sheet [20], this code has the following main features:

1) It has simple text-input prepared in free-format;

2) It requires no prior programming knowledge on the part of the user;

3) It is highly valuable and easily adaptable for assigning students’ grades for multi-section courses and for providing detailed individual reports for each section [29];

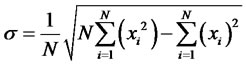

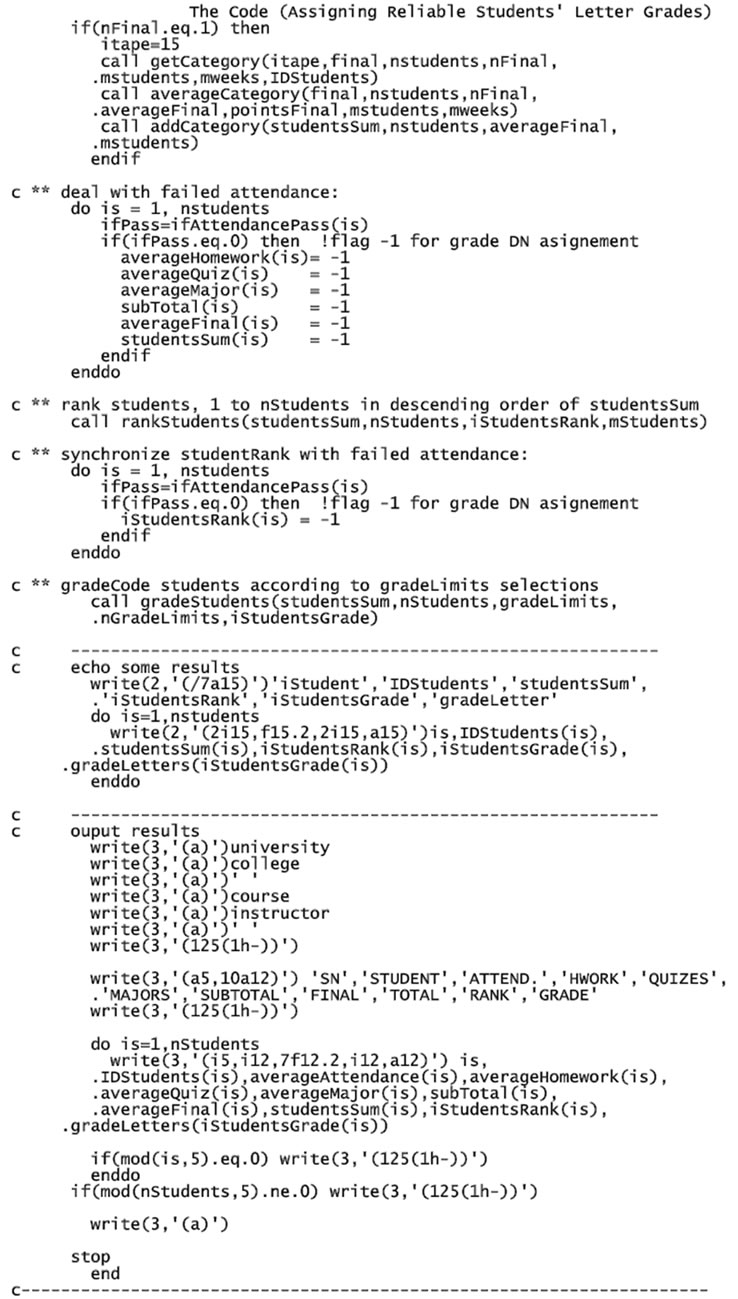

4) The computational mechanics for determining the cut-off lines (normally implemented in a normative-referencing process) are based on the class weighted average and the class(es) standard deviation: with N = number of students and $x_i$ = a student’s score, the code module “grade Students” computes the weighted course mean $\bar{x}$ and the standard deviation $\sigma$ (as a measure of dispersion of scores around the mean) such that, with

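$$\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i \tag{1}$$

$$\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i-\bar{x}\right)^{2}} \tag{2}$$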

Figure 1. Main steps of the grades’ assignment computer code.

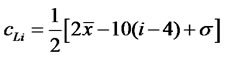

grades’ cut-off lines (defined as cLi) for the lower bounds of the ranges of the eight passing grades (namely: the letter grades A+, A, B+, B, C+, C, D+, D) are determined generically. For this purpose, the generic formulas for determining letter grades above the mean, for mean grades, and for grades below the mean are respectively given as

for i = 1, 2, 3, 4 (3)

for i = 5 (4)

for i = 6, 7, 8 (5)

in which the index values i = 1, 5, and 8 correspond to the lower bounds of the distinction grade A+, the above-average grade C+, and the barely passing grade D, respectively, and so on for the remaining grades. The output of the code presents the assigned letter grades in detailed and simple formats that are easily interpretable (as shown, for example, in Appendices I-a and I-b).
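The general shape of such a cut-off scheme can be sketched in Python (an illustration only, not the paper's FORTRAN code). Formulas (3) to (5) are not reproduced here, so the sketch assumes the common form cLi = mean + k_i × (standard deviation). The multipliers in `STEPS`, the function names, and the use of the population standard deviation (divisor N) are all hypothetical and are not taken from the paper. The only features carried over from the text are the eight passing grades, the index ordering i = 1 (A+) to i = 8 (D), and the placement of i = 5 (C+) at the mean.

```python
from statistics import mean, pstdev

LETTERS = ["A+", "A", "B+", "B", "C+", "C", "D+", "D"]  # i = 1 .. 8

# Hypothetical multipliers k_i, NOT taken from the paper: positive above the
# mean (i = 1..4), zero at the mean (i = 5), negative below it (i = 6..8).
STEPS = [2.0, 1.5, 1.0, 0.5, 0.0, -0.5, -1.0, -1.5]


def cutoff_lines(scores, steps=STEPS):
    """Return the lower-bound cut-off for each passing grade, highest first."""
    centre = mean(scores)
    spread = pstdev(scores)  # population form (divisor N): an assumption
    return [centre + k * spread for k in steps]


def assign_grades(scores, steps=STEPS):
    """Give each score the highest grade whose cut-off it reaches, else F."""
    cutoffs = cutoff_lines(scores, steps)
    grades = []
    for score in scores:
        letter = "F"
        for lower_bound, name in zip(cutoffs, LETTERS):
            if score >= lower_bound:
                letter = name
                break
        grades.append(letter)
    return grades


if __name__ == "__main__":
    totals = [40, 50, 60, 70, 80]  # made-up grand-total scores
    for total, letter in zip(totals, assign_grades(totals)):
        print(total, letter)
```

Keeping the multipliers in a single list is a design choice of this sketch: moving the cut-off lines then requires changing only that list, and a criterion-referenced variant could replace `cutoff_lines` with a function that returns fixed percentages.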

4. Code Utilization and Discussions

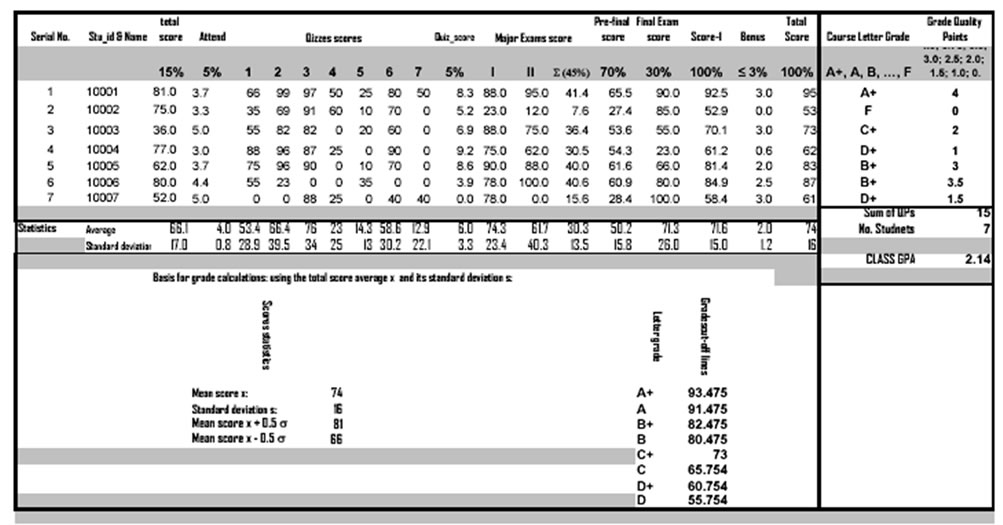

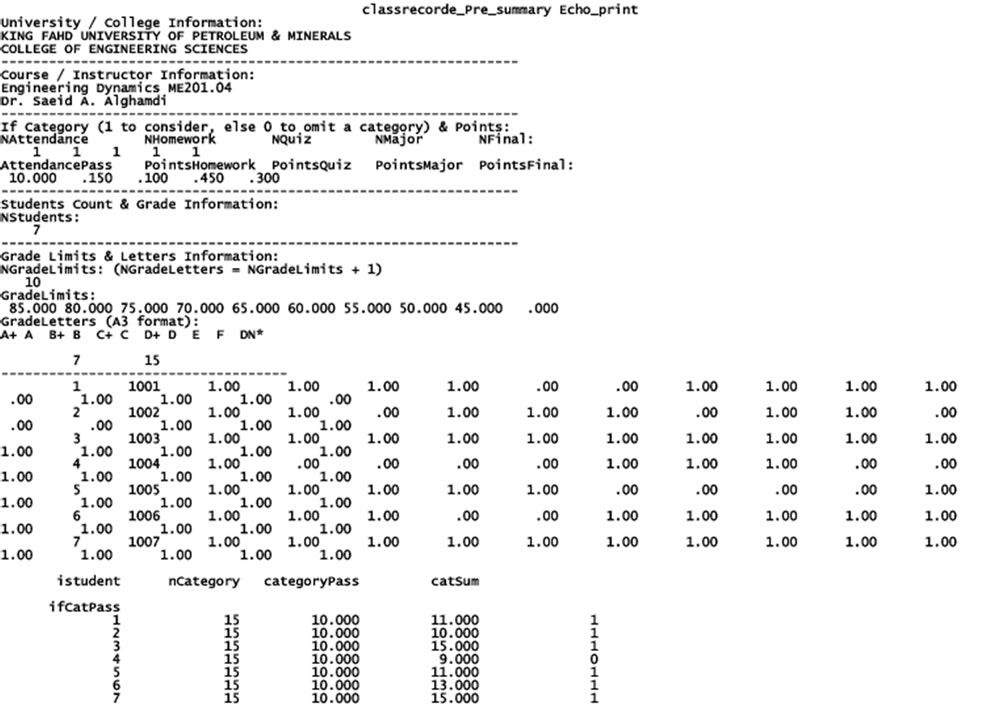

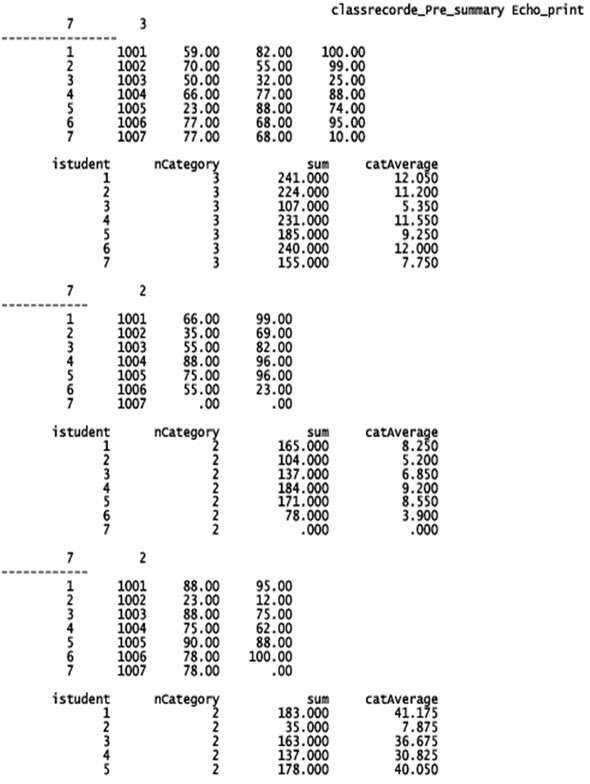

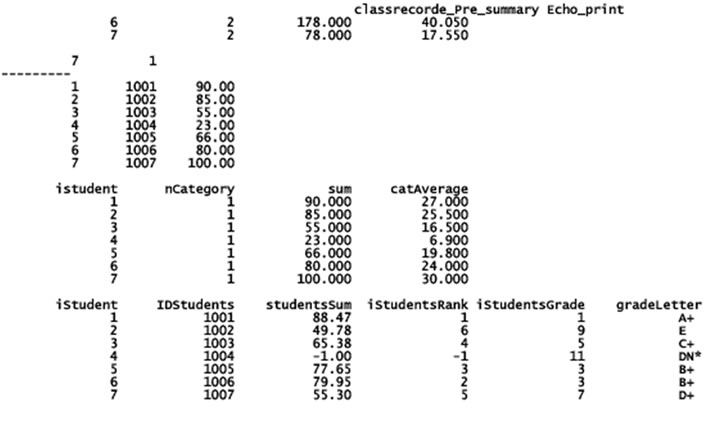

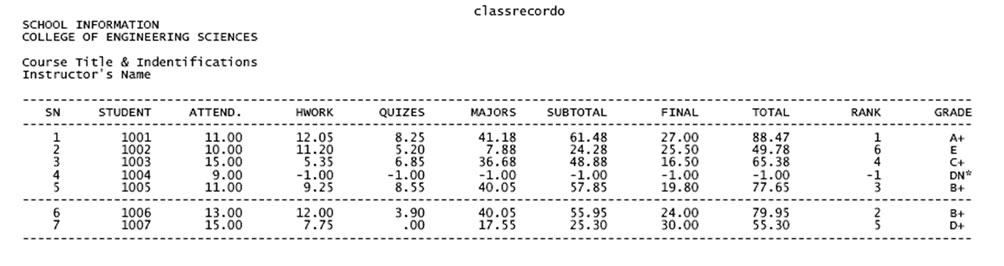

To demonstrate the ease this code would provide to educators who are frequently involved in evaluating students’ performance in a given course for assigning letter grades, the utilization of the code is shown herein for a class of only seven students. The output obtained from this code is further compared to the output obtained from an ad hoc Excel spread-sheet that has been prepared to determine the letter grades for the same group of students.

Sample input-control data and output-summary details of the code outputs obtained for a class of just eleven students are given in Appendix I-b. The code has also been utilized in a comparative study to determine students’ letter grades for another small class of only seven students. The letter grades assigned by the code are summarized in Appendices II-a and II-b, and are compared with the letter grades reported by an ad hoc spread-sheet calculation (shown in Appendix II-c). The comparison of the results (i.e. the letter grades assigned to the students in the class) obtained from the two automated procedures shows identical results, and as this has been repeatedly noted by the author on several occasions, it represents strong empirical evidence of the advantages and robustness of the code presented herein. These advantages would be more evident when reporting letter grades for classes with large student populations.

This shows that, while the results obtained are identical, the code presented herein has the advantages of being more general and user-friendly: the user is not presumed to have prior programming knowledge, and all the required inputs are entered merely as separate text sheets that can be easily prepared in free format to be read by the code.

In particular, the code has clear and distinct advantages for the grading assignment process of multi-section courses, as near-fair and less-disparate grade distributions are made more attainable. The code would make this easily achieved, as educators would be relieved from the overwhelming concerns that frequently accompany the mechanistic aspects of an academic evaluation process (determining the numerous evaluative metrics that may be included for a specific course). The utilization of the code would enable educators to have more time to focus on obtaining a rational assessment of students’ achievements within the framework of designated learning outcomes, and would help minimize the number of possible grievances that may be filed by some students for different subjective justifications.

5. Closure

It is an undeniable reality among educators and academicians that the process of assigning students’ grades is invariably influenced by numerous objective and subjective variables, and quite often it is tainted with the subjective influences of personal philosophy and human psychology factors. Based on this, and due to the vital importance of using grades as quantification metrics to monitor students’ intellectual progress within the framework of clearly specified learning objectives of a course, and since most students see grades as predictive measures and motivating drives for success in a field of study, it is essential that educators exercise extra care in maximizing the positive account of all objective factors and minimizing the negative ramifications of subjectively fuzzy factors. This can be easily achieved only if the mechanistic aspects of determining the students’ performance metrics are taken care of by an automated methodology.

For this purpose, the FORTRAN computer code presented in this paper has been tested by the author on several occasions to report students’ letter grades, and the results obtained have provided strong empirical evidence of the robustness of the code as an instrument for assigning students’ grades with relative ease. The code is capable of accounting for numerous parameters that may be deemed essential for the process of academic evaluation. Furthermore, compared to an ad hoc grading spread-sheet, the user-friendly features of the code make it easily adaptable for the purpose of assigning more reliable students’ grades that reflect the true level of students’ mastery of learning outcomes when all possible evaluation components are accounted for.

6. Acknowledgements

Initial versions of the program described herein were developed and tested using the computational facilities made available by King Fahd University of Petroleum and Minerals (KFUPM; Dhahran, KSA). The support provided by the staff of the Academic Computing Services (ACS) of the ITC-KFUPM is acknowledged with appreciation.

7. References

[1] E. J. Pedhazur and L. P. Schmelkin, “Measurement, Design and Analysis: An Integrated Approach,” Lawrence Erlbaum, Hillsdale, 1991.

[2] B. Thompson and T. Vacha-Haase, “Psychometrics is Datametrics: The Test is Not Reliable,” Educational and Psychological Measurement, Vol. 60, No. 2, 2000, pp. 174-195.

[3] L. M. Aleamoni, “Why is Grading Difficult? Note to the Faculty,” University of Arizona, Office of Instructional Research and Development, Tucson, 1978.

[4] D. A. Frisbie, N. A. Diamond and J. C. Ory, “Assigning Course Grades,” Office of Instructional Resources, University of Illinois, Urbana, 1979.

[5] W. Hornby, “Assessing Using Grade-Related Criteria: A Single Currency for Universities?” Assessment & Evaluation in Higher Education, Vol. 28, No. 4, 2003, pp. 435-454.

[6] B. Cheang, A. Kurnia, A. Lim and W.-C. Oon, “On Automated Grading of Programming Assignment in an Academic Institution,” Computers and Education, Vol. 41, No. 2, 2003, pp. 121-131.

[7] W. J. McKeachie, “College Grades: A Rational and Mild Defense,” AAUP Bulletin, Vol. 320, 1976.

[8] J. S. Terwilliger, “Classroom Standard Setting and Grading Practices,” Educational Measurement: Issues and Practices, Vol. 8, No. 2, 1989, pp. 15-19.

[9] G. R. Johnson, “Taking Teaching Seriously: A Faculty Handbook,” Texas A & M University, Center for Teaching Excellence, College Station, TX, 1988.

[10] J. E. Stice, “Grades and Test Scores: Do They Predict Adult Achievement?” Engineering Education, No. 390, 1979.

[11] K. E. Eble, “The Craft of Teaching,” 2nd Edition, Jossey-Bass, San Francisco, 1988.

[12] W. J. McKeachie, “The A B C’s of Assigning Grades,” In: W. J. McKeachie, Ed., “Teaching Tips: Strategies, Research, and Theory for College and University Teachers,” 9th Edition, D. C. Heath, Lexington, 1994.

[13] J. S. Terwilliger, “Assigning Grades to Students,” Scott, Foresman, Glenview, IL, 1971.

[14] M. J. Evans and J. S. Rosenthal, “Probability and Statistics—The Science of Uncertainty,” W. H. Freeman, 2004, p. 638.

[15] K. A. Smith, “Grading and Distributive Justice,” Proceedings ASEE/IEEE Frontiers in Education Conference, IEEE, New York, 1986, p. 421.

[16] R. L. Ebel, “Essentials of Educational Measurement,” 3rd Edition, Prentice Hall, Englewood Cliffs, 1979.

[17] C. Adelman, “Performance and Judgment: Essay on Principles and Practice in the Assessment of College Student Learning,” U.S. Department of Education, Washington, D. C., 1988.

[18] R. M. W. Travers, “Appraisal of the Teaching of the College Faculty,” Journal of Higher Education, Vol. 21, No. 41, 1950.

[19] R. L. Thorndike, “Applied Psychometrics,” Houghton Mifflin, Boston, 1982.

[20] G. S. Hanna and W. E. Cashin, “Matching Instruction Objectives, Subject Matter, Tests, and Score Interpretations (IDEA Paper 18),” Kansas State University, Center for Faculty Evaluation and Development, Manhattan, 1987.

[21] P. Elbow, “Embracing Contraries: Explorations in Learning and Teaching,” Oxford University Press, New York, 1986.

[22] J. D. Krumboltz and C. J. Yeh, “Competitive Grading Sabotages Good Teaching,” Phi Delta Kappan, Vol. 78, No. 4, 1996.

[23] T. R. Guskey, “Grading Policies That Work Against Standards and How to Fix Them,” NASSP Bulletin, Vol. 84, No. 620, 2000, pp. 20-29.

[24] J. M. Graham, “Congeneric and (Essentially) Tau-Equivalent Estimates of Score Reliability: What They Are and How to Use Them,” Educational and Psychological Measurement, Vol. 66, No. 6, 2006, pp. 930-944.

[25] I. Krunger, “A Computer Code to Assign Student Grades,” Educational and Psychological Measurement, No. 34, 1974, pp. 179-180.

[26] Microsoft Corporation, “Managing grade with Excel 2002,” 2002. http://www.microsoft.com/education/ManagingGrades.mspx

[27] E. Wilson, C. L. Karr and L. M. Freeman, “Flexible, Adaptive, Automatic Fuzzy-Based Grade Assigning System,” Proceedings Fuzzy Information Processing Society—NAFIPS, Conference of the North American, Vol. 60, 1998, pp. 174-195.

[28] S. A. Alghamdi, “Towards Automated and Reliable Evaluation of Students’ Scholastic Performance: A FORTRAN Computer Code,” Unpublished report, CE Department, King Fahd University of Petroleum & Minerals, Dhahran, KSA, 2008.

[29] S. R. Cheshier, “Assigning Grades More Fairly,” Engineering Education, Vol. 343, 1975, pp. 4-8.

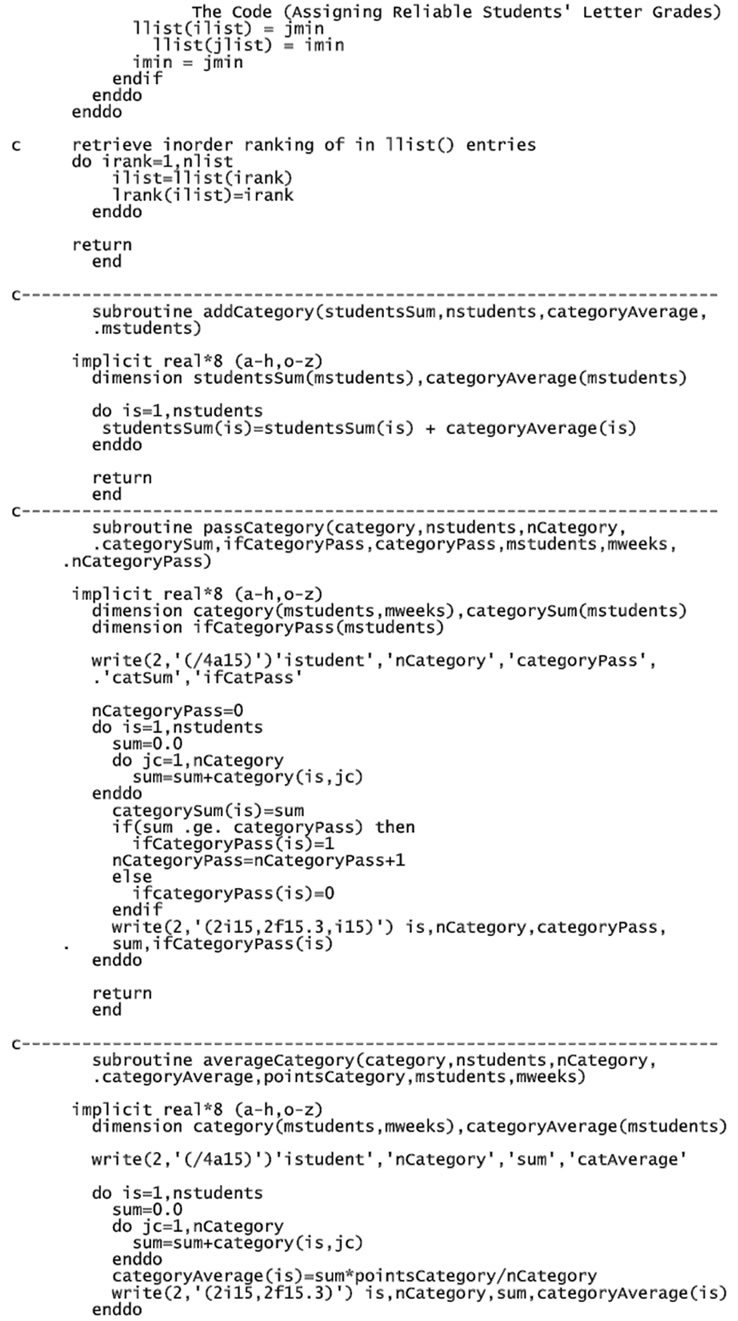

Appendix I-a: Listing of the code

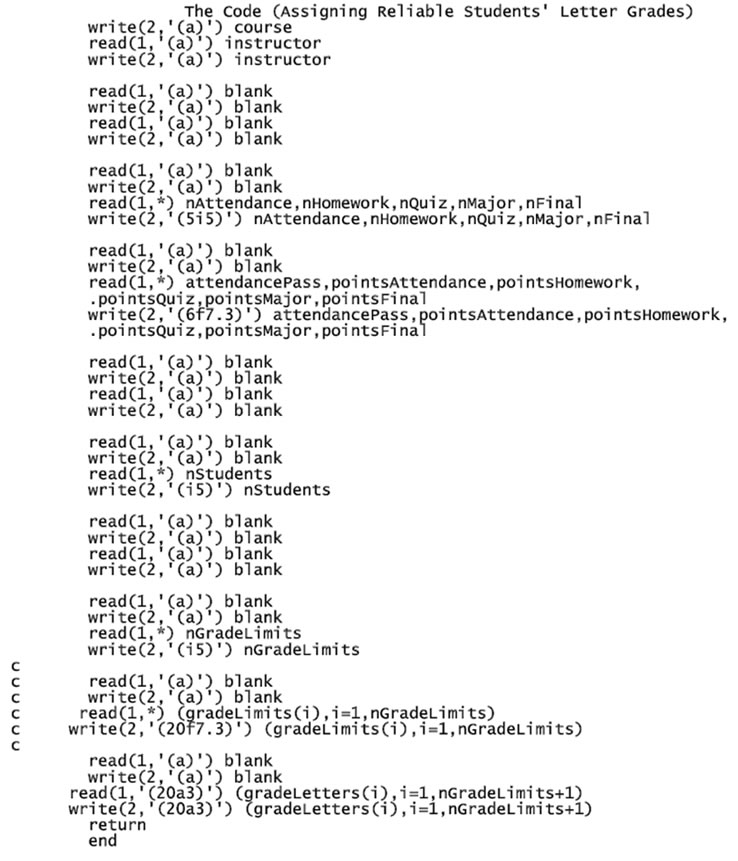

Appendix I-b: Sample input-control data and output-summary details of the code

Appendix II-a: A brief sample summary output of the code

Appendix II-b: A brief sample summary output of the code

Appendix II-c: A sample ad hoc Excel spread sheet grades' assignment