Health

Vol.4 No.11(2012), Article ID:24426,8 pages DOI:10.4236/health.2012.411154

Design and implementation of a survey of senior Canadian healthcare decision-makers: Organization-wide resource allocation processes


1Centre for Clinical Epidemiology & Evaluation, Vancouver Coastal Health Research Institute, Vancouver, Canada; *Corresponding Author: neale.smith@ubc.ca

2School of Population and Public Health, University of British Columbia, Vancouver, Canada

3Faculty of Health and Social Development, University of British Columbia Okanagan, Kelowna, Canada

4University of Toronto Joint Centre for Bioethics, Toronto, Canada

5Department of Health Policy, Management & Evaluation, University of Toronto, Toronto, Canada

6Canadian Centre for Applied Research in Cancer Control (ARCC), Vancouver, Canada

7British Columbia Cancer Agency, Vancouver, Canada

8Glasgow Caledonian University, Glasgow, UK

Received 10 August 2012; revised 13 September 2012; accepted 27 September 2012

Keywords: Health Policy; Resource Allocation; Rationing; Canada

ABSTRACT

A three-year research project based in British Columbia, Canada, is attempting to develop a framework and tools to help healthcare system decision-makers achieve “high performance” in resource allocation. In pursuit of this objective, a literature search was conducted and two phases of primary data collection are being undertaken: an on-line survey of senior healthcare decision-makers, and in-depth case studies of potential “high performing” organizations. This paper addresses the survey phase; our aim is to provide a practical example of the mechanics of survey design, of benefit to those who want to better understand our forthcoming results, but also as an aid to other researchers grappling with the hard choices and trade-offs involved in the survey development process. Survey content is described in light of the existing literature, with discussion of the choices made by the research team to decide what questions and items would be included and excluded. The target population for the survey was senior managers in Canadian regional health authorities (or the closest equivalent organizations) in each of the 10 provinces and 3 territories. The paper discusses how this sample was obtained, and describes the survey implementation process.

1. INTRODUCTION

Making choices about what to fund and not fund is an on-going obligation of healthcare decision-makers; available financial resources are never sufficient to meet all claims. However, to date we have limited knowledge about the range of organizational factors (structures, behaviours and processes) that influence resource allocation practice. Strategies succeed or fail in large part due to their fit with an organization’s context and culture. Decision-makers would greatly benefit from having tools and other resources that would enable them to assess their resource allocation procedures in context, and so pursue excellence and “high performance” [1]. A three-year research project based in British Columbia, Canada, with healthcare delivery organizations as strong partners, is underway to help address this gap. The overall objective of this project is to develop a framework that can be used to identify how healthcare organizations can be transformed to achieve excellence in priority setting and resource management. In addition to a literature review, two phases of primary data collection are being conducted to inform the development of this framework: an on-line survey of senior decision-makers, and in-depth case studies of resource allocation within several Canadian healthcare organizations. Our understanding of the concept of high performance will be emergent throughout the course of the project.

There are some priority setting or resource allocation case studies reported in the literature, though as Tsourapas & Frew [2] note, only one macro-level case (that is, an organization-wide effort to allocate between different Departments and types of services [3]) has been widely reported [Calgary, 2001-2003]. While some of the authors have been involved in other projects at this level in Canada, only one has yet been reported in the peer-reviewed literature [4,5]. There are also few examples of cross-sectional surveys that look comparatively at priority setting or resource allocation processes in multiple organizations at the same point in time [6-10].

A cross-province/territory survey of senior decision-makers will provide a snapshot of the state of the field in Canada at the present time. It can show what issues are commonplace among healthcare organizations, and so where applied research for decision support might usefully be directed. We can also identify what is working well. Comparisons down the road to monitor change will be possible. Finally, a survey data set enables us to look for patterns that possibly explain (perceptions of) effective resource allocation and priority setting.

Most published protocol studies are for clinical trials; however in terms of increasing transparency and understanding of the research process, there is similar value in the publication of protocols and designs for other methodologies—in this case, survey technique. One of the main contributions of this paper is to illuminate key decisions made through the design stage, and how and why such determinations were reached. There are many texts which give general guidance about survey development, but only real examples can illustrate how difficult choices are made. One publication which does this describes the survey development process as a means to reflect upon power relationships within community development initiatives [11]. Some recent protocols do go into details of how the content of a survey instrument was determined [12], and the rationale behind the selection of items, including the intended analysis [13]. More work and deliberate thought are required to develop effective survey instruments than many observers anticipate; this paper intends to make some of the inner workings of research design more visible. As such, our audience is both those interested in our substantive topic and those researchers “getting their legs” in survey work.

It is important to note that we do not present here any survey results—those will be reported elsewhere. In this paper, we discuss survey content development and sample selection. Sampling and content are iterative, in that certain types of research question point toward particular sets of respondents, which then further directs what would be appropriate and relevant content. We trust that other researchers and decision-makers can usefully learn from this recounting of our experience.

2. DESIGN: SURVEY CONTENT DEVELOPMENT

The goal of the on-line survey was to have senior management team members describe the organization-wide resource allocation processes in which they are most directly involved. The instrument went through multiple iterations with research team members. Possible questions were posed, modified or deleted; some deletions were re-inserted in altered form, and occasionally then deleted again. Question design was guided by two principles. One was to maximize the rigour of the instrument, for example using concepts validated in the literature where possible. We also employed techniques such as reversal of items to address response pattern bias, and including cross-check or multiple forms of some questions, as commonly advocated by survey methodology specialists [14]. The second principle was to minimize the total response burden which participants would face, for example, minimizing open-ended questions, and phrasing questions so that informed respondents should be able to answer them without having to look things up. Some loss of information is often entailed by this, such as creating categories from what otherwise would be continuous variables. Response burden is also minimized by including only questions which the researchers have a clear intent to analyze.
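The item-reversal technique mentioned above can be illustrated with a minimal sketch. It assumes a 1 to 5 rating scale for the reversed items (the paper specifies that scale only for the overall rating question); the function names and the sample answers are hypothetical and are not taken from the survey instrument.

```python
def reverse_score(value: int, scale_min: int = 1, scale_max: int = 5) -> int:
    """Return the rating mirrored around the midpoint of the scale."""
    if not scale_min <= value <= scale_max:
        raise ValueError(f"rating {value} is outside {scale_min}-{scale_max}")
    return scale_min + scale_max - value


def is_straight_lined(raw_answers: list[int]) -> bool:
    """True when every raw answer is identical, a possible response pattern."""
    return len(raw_answers) > 1 and len(set(raw_answers)) == 1


# Hypothetical respondent who ticked 5 on all four items.
# Item index 2 is assumed to be the reverse-worded item.
raw_answers = [5, 5, 5, 5]
reversed_items = {2}

# Put every item on the same direction before analysis.
aligned = [
    reverse_score(v) if i in reversed_items else v
    for i, v in enumerate(raw_answers)
]
```

Once the reversed item is re-aligned, a respondent who gave the same raw answer throughout shows an inconsistent score on that item, which is what makes a response pattern detectable.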

The penultimate version of the instrument was pilot tested with three decision-makers representative of the intended target audience, and slight revisions were made as a result. Within Canada, a truly national study needs to include Quebec and therefore requires a French language version of the instrument. A francophone colleague translated the final English instrument into a French version. This preserved consistency of questions and response options, and allowed for the final data to be pooled. However, a directly translated text can be somewhat awkward, as it is designed around concepts which might not have exact French equivalents, and may not incorporate distinctive terms and phrases which have emerged in Francophone decision maker parlance. Eglene and Dawes, for instance, have described their challenge in reconciling the different meaning of terms like “leadership” for Quebecois and English-speaking North Americans [15]. As well, there was no opportunity to pilot test the French instrument before implementation.

In the paragraphs following, we outline the content of each major section of the survey. We cover both which questions we included, and why, and those we opted not to include, and why. Note however that the instrument as a whole has not been subject to validity and reliability testing. (The French-language version is available upon request to the authors.)

2.1. Descriptive Information about the Respondents and Their Organizations

We gathered information about respondents and their organization which we thought could be used to identify possible patterns in the data (in other words, hypotheses we might test about the systematic impact of key factors on perceptions of resource allocation processes). On the individual side, factors include respondent role, educational background, years of experience and tenure. We wanted a mix of executives in finance, operations and planning roles, because we speculated on the basis of past literature they may have different perceptions of the resource allocation process [16]. Operational definitions of these roles are contained in the survey itself. For these individual factors, while we considered that each of these might be associated with decision-makers’ views, on the basis of very limited literature we had no rationale to expect a direction for the hypotheses. There was extended debate within the team about whether to include a question on gender. In light of the above principles, it was decided to leave this out. This is not to say of course that there is not an important role for studies specific to the question of gender and its impact upon healthcare management. We fully expect some readers would have made different choices than we did here.

Data was collected about such organizational factors as senior executive team turnover, budget size, and budget trend. On the basis of the literature, we would expect that lower levels of turnover describe a more stable organization, and thus one in which there is greater opportunity to establish and sustain formal processes for resource allocation, and to build strong relationships among executive members which also could facilitate effective priority setting [7,17]. Similarly we might expect organizations with growing or at least stable budgets to have had more opportunity to develop and maintain formal resource allocation strategies. On the other hand, while financial pressures—decreasing budgets—might seem an obvious spur to focus on mechanisms for allocation, a crisis atmosphere might override efforts to make well thought-out choices about disinvestment. Finally, we might speculate that larger and better resourced organizations would be more apt to have established formal and consistent resource allocation practices. For some organizational variables, we had the choice to obtain self-reports, or to collect our own more objective data from publicly available sources. While self-report is quick and easy, answers are less precise and possibly less accurate. We opted to collect objective data but also to ask in the survey about budget size and trend, in case the data we sought from annual reports and other sources were unavailable.

2.2. Overview of Resource Allocation Processes

The claim that healthcare resource allocation is predominantly driven by historical or political factors has been often repeated [3,18]; this is contrasted with the presumed superiority of more formal or rational approaches. We designed one question to test this claim: it offers a summative measure against which to check if there are perceived differences in effectiveness of resource allocation processes between contexts. A second question (“check all that apply”) used several items drawn from the literature and was meant to provide additional insight into what formal processes might look like within Canada’s healthcare organizations. To ensure some consistency in response, respondents were instructed to reply based on “what is” current practice within their organization as opposed to what they think it ought to be.

2.3. Organizational Values and Decision Making

In this section, one question gives respondents the opportunity to provide their overall judgment of the fairness of their process, which can serve as a summative measure in data analysis. The survey also contains several items related to the Accountability for Reasonableness framework [19,20]; with these we can check to see if reported fairness of decision making is related to the presence or absence of specific features which are part of a widely used conceptual model of fair resource allocation process. We asked about “moral awareness” within the organization, based upon previous work with respect to ethics of resource allocation in the US Veterans Health Administration [21]. Participation in priority setting has been a topic of considerable research interest [22,23], and we designed one question to assess the extent (though not the quality) of direct stakeholder engagement in organization-wide priority setting.

2.4. Specific Factors or Criteria Which Are Considered in Resource Allocation Decisions

It has been increasingly recognized that the results of academic research studies are not the only factor which drives healthcare decision making. Other influences may be quite legitimate. For instance, Lomas et al. discuss the roles of scientific evidence balanced against “colloquial evidence” such as political judgment, professional expertise, and interest group lobbying [24]. We asked one question to give a sense of the range of knowledge and information that was typically brought into organization-wide resource allocation. A large number of criteria can and have been used to assess the relative benefit of spending options, though there is also considerable overlap among criteria used in many organizations. We reviewed the literature and drew a list based heavily upon recent case studies which had synthesized much previous experience in the field [25].

2.5. Organizational Context and Culture

Barriers and enablers to priority setting processes have been investigated in the literature and well summarized by Mitton and Donaldson [7]. We developed a lengthy list, drawing on additional sources [18,26-27]. In the end, items were culled from the list in order to make the task of assessing the presence or absence of these barriers and enablers more manageable for respondents. We ensured that the most commonly reported features, and a broad range of factors, were represented in the response set.

At various points through survey development, we conducted data visualization exercises. The idea here is to imagine the range of possible response patterns, and what we might understand in that light; e.g., what would it mean if all respondents were to give a rating of 5 out of 5, or if they were to check off none of the items in a list. This enables us to assess if question wording might be unclear, or categories ill-defined, or if there were other problems of wording which might need to be adjusted. As an example, we originally had two separate questions on the presence of barriers and of enablers, but realized through visualizing likely responses that these were tapping into much the same concepts and the survey instrument would be simplified by combining them into a single question.

2.6. Overall Assessment of Resource Allocation Processes

To date there has been little consistent or coherent attention given to evaluation of priority setting and resource allocation processes [2,28]. Sibbald et al.’s framework is groundbreaking in this sense [29,30]. The framework was developed using a Delphi consultation with priority setting scholars, qualitative interviews with Canadian healthcare decision-makers from eight provinces, and focus groups with patients. The framework contains both process and outcome elements [30]. We used this framework as the basis for a survey question. Two other questions asked respondents to identify the main strengths and weaknesses of their organization-wide resource allocation process; these were the only open-ended questions we used. Finally, respondents were asked to give a summative judgment or overall rating of their process, on a scale of 1 to 5 (where 1 = very poor and 5 = very good).

2.7. Identification of High Performers

Respondents were asked to name up to three organizations they considered “high performers.” No definition of this concept was provided; we asked respondents to give the rationale for their nominations, which we expected would give some sense of how they understood the idea. The research team debated whether or not we wished to specify nominations “within your region”—we concluded that while this might help obtain diversity for possible case studies, it was an artificial limit and might prevent us from finding out which Canadian organizations truly were best known for effective efforts at organization-wide resource allocation.

There are relatively few venues within which healthcare decision-makers in Canada can regularly interact, and knowledge of good practice might be communicated within these. In this case we would expect active and knowledgeable informants in the healthcare decision maker community to draw upon a common stock of knowledge. Visibility within this community, resulting in a nomination, or more than one, might be seen as an indicator of a potentially high performing organization.

3. DESIGN: SAMPLE SELECTION

Our main interest is in resource allocation within Canadian organizations that have some population health focus, and which fund and deliver a range of different types of health service. In most provinces, this means Regional Health Authorities (RHAs). In Ontario, the closest equivalent organizations are the Local Health Integration Networks, or LHINs. LHINs have planning and purchasing functions, but unlike RHAs do not also have service delivery responsibility. The initial research plan was also to include a sample of provider organizations from Ontario, but following research team discussion it was concluded that in most cases the nature of the challenges faced by such organizations in resource allocation was too different in scale and/or kind to pool with the other data, and it was also considered unlikely to achieve a sufficient number of replies for a separate analysis.

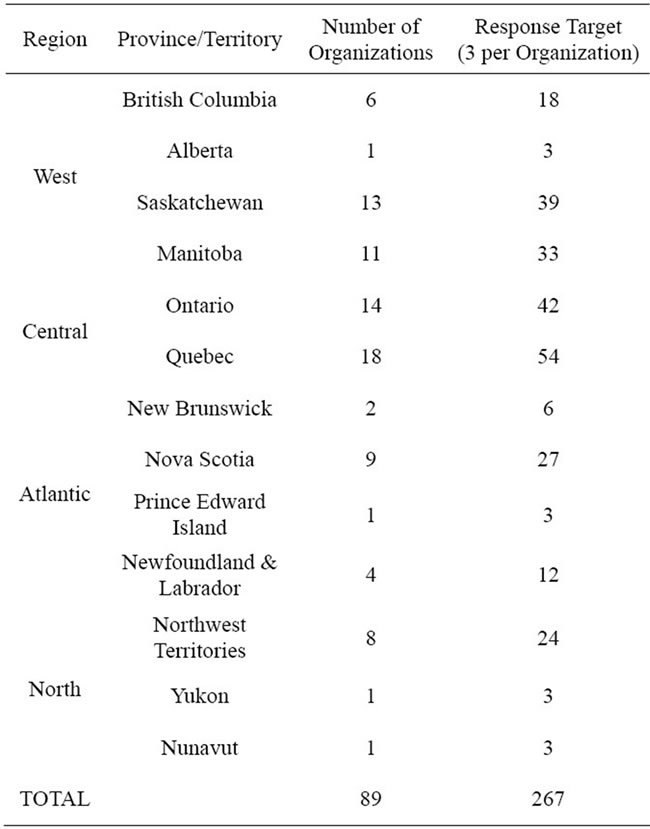

We recognize that the potential number of individual participants from some smaller jurisdictions (e.g., Saskatchewan, Nova Scotia, Northwest Territories) will exceed those from larger jurisdictions (e.g., British Columbia, Alberta). Our sampling is not meant to reflect population or total healthcare resources, but distribution across the country of health planning and service delivery organizations in which resources are allocated to different types of health care service.

Priority setting and resource allocation happens continuously within organizations, at all levels from senior executive to front line staff. We restricted the focus of our survey to senior managers, operationally defined as individuals with a direct reporting relationship to the CEO and who meet as a group to make major decisions about organizational strategy or direction. Executive Vice-President or Vice-President would be a typical job title. After considering a number of options we decided to have respondents limit their attention to their involvement in organization-wide (i.e., macro-level) allocation decisions, that is, the allocation of financial resources across departments, portfolios and/or major programs. These resources can be existing budgets or new monies. This instruction maximizes comparability among replies, while we acknowledge that it means we would not obtain information about possible effective resource allocation practices at the Departmental level.

Table 1. Desired response by province/territory.

It was decided to limit the survey response to three persons per organization, so as to maximize breadth in response (Table 1). Ideally, we sought one person each from the Finance, Operations, and Planning roles, as described above. Because their responsibilities span all of these areas, CEOs were excluded from the sample. Finance, Operations, and Planning roles are not equally distributed in organizations (based on the research team’s categorization of job titles, the distribution was 12% Finance, 41% Planning and 47% Operations), so our sample was not intended to be representative of the pool of senior managers in this sense. Should one category be exhausted without successful recruitment, then members from another would be selected instead. Where more than one person in a role was available on the senior management team, the respondents were selected randomly. This was to ensure that we did not cherry-pick respondents who had previous interaction with the research team.
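The selection rule described above (up to three people per organization, ideally one per role, chosen at random within a role, with another role filling in when one is exhausted) can be sketched as follows. This is an illustration under stated assumptions rather than the procedure the research team actually ran: the function name, the data layout and the example team are hypothetical, and the sketch collapses the recruitment follow-up into a single selection step.

```python
import random

ROLES = ("Finance", "Operations", "Planning")


def select_invitees(
    team: dict[str, list[str]], rng: random.Random, per_org: int = 3
) -> list[tuple[str, str]]:
    """Pick up to `per_org` senior managers, aiming for one per role.

    `team` maps each role to the names on the senior management team
    (CEOs are assumed to have been left out already).
    """
    # Shuffle each role's pool so the choice within a role is random.
    pools = {role: list(team.get(role, [])) for role in ROLES}
    for pool in pools.values():
        rng.shuffle(pool)

    chosen: list[tuple[str, str]] = []
    # First pass: one person from each role that has anyone available.
    for role in ROLES:
        if pools[role] and len(chosen) < per_org:
            chosen.append((role, pools[role].pop()))

    # Second pass: fill any empty slots from whichever roles have people left.
    remaining = [(role, name) for role in ROLES for name in pools[role]]
    rng.shuffle(remaining)
    while len(chosen) < per_org and remaining:
        chosen.append(remaining.pop())
    return chosen


# Hypothetical team with no Planning executive: the third slot falls back
# to a second Operations member.
example_team = {"Finance": ["F1"], "Operations": ["O1", "O2", "O3"], "Planning": []}
invitees = select_invitees(example_team, random.Random(0))
```

Passing a seeded `random.Random` keeps the draw reproducible, which fits the audit-trail approach described later in the paper.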

Having multiple respondents from each senior management team will allow us to look at internal consistency of responses within organizations, which could be an interesting finding in itself. It is known to be methodologically problematic to take the view of a single informant, however senior, as representing the whole truth of organizational practice [31]; differences of perception among senior managers in survey research are reported in public and private organizations [32]. In addition, we will be able to check accuracy of self-reported budget size and trend against actually reported figures, another point which could potentially inform design of future surveys in this area.
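One simple way to look at within-organization consistency is to group ratings by organization and inspect their spread. The sketch below is an assumption about how such a check could be set up, not the analysis planned by the authors; the organization labels, the ratings and the choice of spread (maximum minus minimum) as the measure are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def within_org_spread(responses: list[tuple[str, int]]) -> dict[str, dict[str, float]]:
    """Summarize ratings per organization: count, mean and max-min spread."""
    by_org: dict[str, list[int]] = defaultdict(list)
    for org, rating in responses:
        by_org[org].append(rating)
    return {
        org: {"n": len(r), "mean": mean(r), "spread": max(r) - min(r)}
        for org, r in by_org.items()
    }


# Hypothetical overall ratings (1 = very poor, 5 = very good) from
# respondents in two organizations.
responses = [("Org A", 4), ("Org A", 4), ("Org A", 2), ("Org B", 3), ("Org B", 3)]
summary = within_org_spread(responses)
```

A spread of zero indicates that the respondents from an organization agree; a large spread signals that a single informant's view would not have represented the team.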

4. SURVEY IMPLEMENTATION

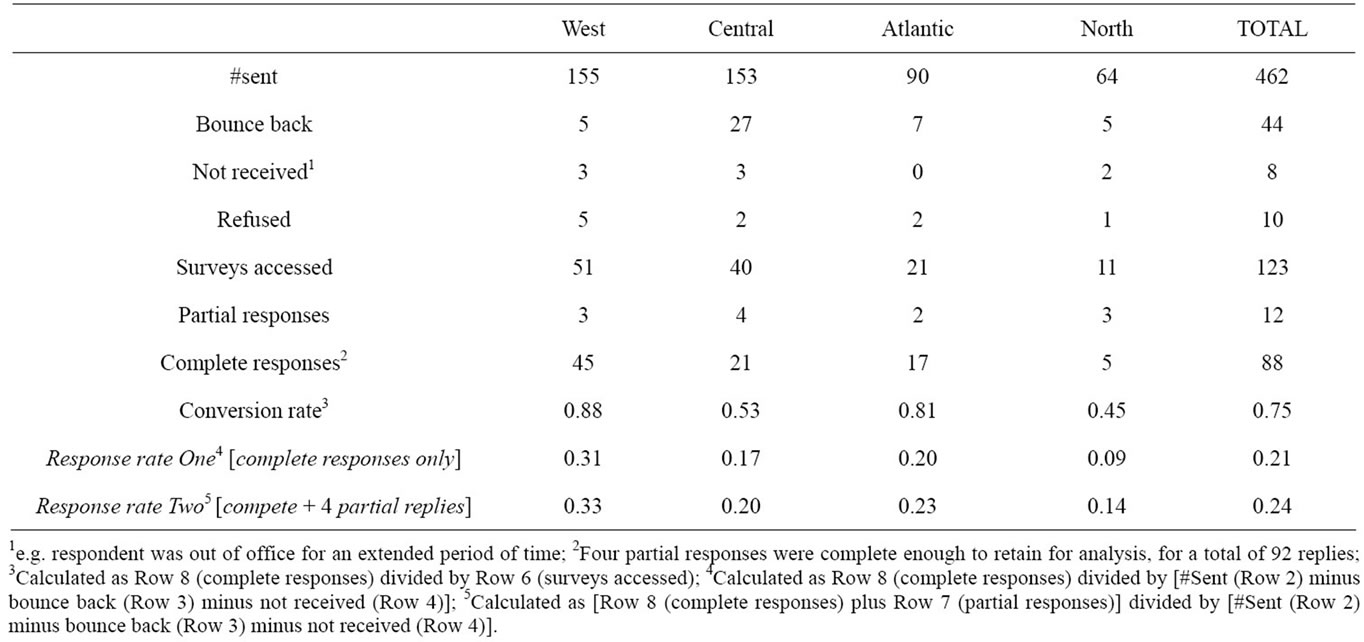

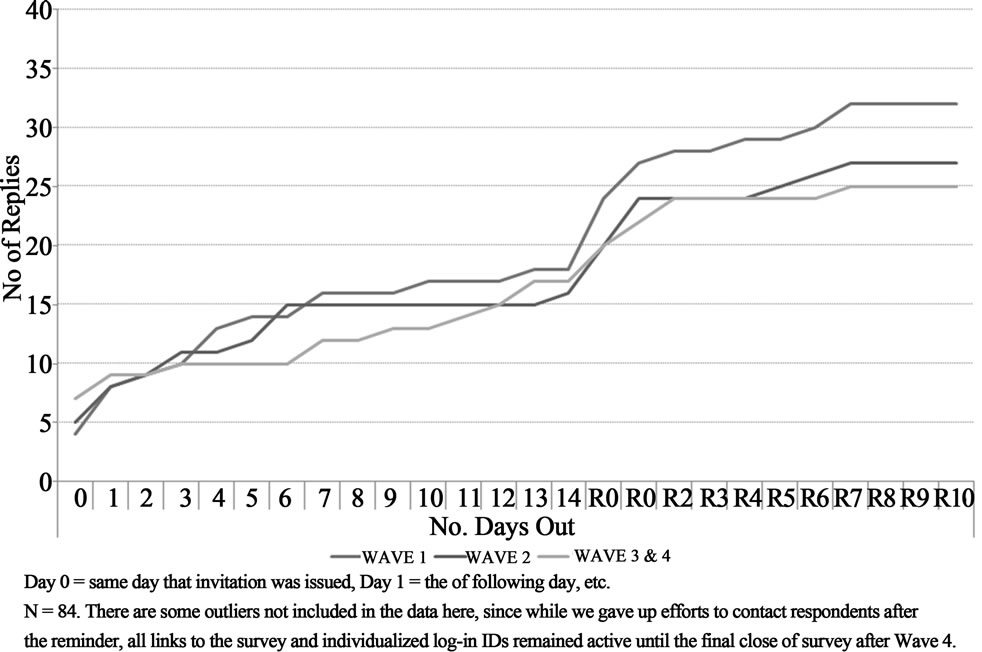

Response rate can be calculated as 24% of those contacted, and as 34% of the ideal target (i.e., out of 267). Table 2 provides response rates by region, and gives an indication of the sort of information which we monitored on an on-going basis to ensure that data collection was on-track. Alterations were made to improve response over the course of the four waves, following some precepts of the tailored design method [33]. For instance, to address slow responses we altered our email invitation for Ontario residents, to emphasize the role of a research team member based in that province, and for Quebec to encourage expression of any possibly distinctive perspective from that system which is relatively less familiar to English Canada. Reminders for Waves 3 and 4 respondents indicated, where appropriate, if their organization was yet unrepresented in the data and encouraged them to make their voice heard. Beyond this, regional variations in response are not easily explained. While each wave allowed a number of weeks for response, most replies came quickly following contact. One-third of all replies came within 48 hours of the initial email, and a further 31% came in the two days following our reminder note. See Figure 1. While people could leave the survey partially complete and return to it at a later time, only six respondents did so; the remainder completed it during a single session. Based on initial analyses, a 16% sample of all replies, it took approximately 25 minutes on average to complete the survey. Anecdotally, we have been told that the expected 30 minute survey length was a barrier to some respondents. Meta-analyses which have looked at the results from a large number of e-mail and web-based surveys have suggested that average response rates between 33% and 40% can be expected [34,35].
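The two response rates quoted above differ only in their denominator. The sketch below shows the arithmetic; the ideal target of 267 comes from the text, while the counts of completed surveys and of people contacted are illustrative placeholders chosen only to be consistent with the reported percentages, not the study's actual figures.

```python
def response_rate(completed: int, denominator: int) -> float:
    """Share of completed surveys relative to the chosen denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return completed / denominator


IDEAL_TARGET = 267   # stated in the paper
completed = 91       # hypothetical placeholder, not a reported count
contacted = 380      # hypothetical placeholder, not a reported count

rate_of_contacted = response_rate(completed, contacted)
rate_of_target = response_rate(completed, IDEAL_TARGET)

print(f"Of those contacted: {rate_of_contacted:.0%}")
print(f"Of the ideal target: {rate_of_target:.0%}")
```

Reporting both figures makes clear whether a shortfall comes from non-response among those invited or from the sampling frame being smaller than the ideal target.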

Table 2. Survey response tracking, by region.

Figure 1. Time-to-response, by survey wave.

5. CONCLUSIONS

This survey was expected to generate considerable data on current resource allocation practices in Canadian healthcare organizations, which will enable us to speak to important questions such as those mentioned at the opening of this paper: on which aspects are decision-makers performing well, what are commonplace issues and challenges, and what factors might explain or predict success in resource allocation performance? Readers will be able to see in planned subsequent publications the extent to which the data we obtained will enable us to reach conclusions on these questions.

This paper looked at the survey design process itself. Surveys always will trade off the amount of information that can be obtained against willingness to respond. Therefore there is some inevitable compromise in the final instrument between idealism and practicality. Some limitations have already been hinted at throughout this paper. These include the lack of literature on which to base some key survey items, the challenge of ensuring that participants have the same frame of reference in answering questions, and determining whether or not responses can be aggregated by organization or need to be treated at the individual level of analysis. The response rate may be seen as less than desirable, but given that the target audience is very busy decision-makers, approached without warning, we take the degree of interest and rapid response by many to be signs of positive support for this research and hope that it will provide useful and applicable findings for senior administrators.

Readers can agree or not with the choices we made, but what we wanted to illustrate here is the process by which we made such decisions. In itself that should be helpful to others who are working to design survey instruments which attempt to collect data for similar types of research objectives. A clear record of research team decisions, an audit trail, has been helpful in enabling us to construct this paper, as it laid out key decision points in our survey development process.

This initial survey work has pointed us to a number of aspects of resource allocation which can be elucidated through detailed case studies. These include whether or not organizations have distinct and different processes at Department or portfolio level, the precise nature of stakeholder engagement, and further articulation of key ideas such as formalization, fairness, and definitions of successful allocation. This work will lead up to the final phase of the research, the development of a framework or tool which will provide decision-makers with practical and evidence-informed guidance on managing structures, processes and behaviours in order to achieve high performance in resource allocation.

6. ACKNOWLEDGEMENTS

This project was funded by the Canadian Institutes of Health Research—Funding Reference #PRE-106811. Ethics approval was obtained from the University of British Columbia Behavioural Research Ethics Board. Francois Dionne and Lisa Masucci provided helpful comments at various stages in the research. Francois Dionne prepared the French language version of the survey instrument. The authors also thank the other members of the research team, our decision-maker partners—Bonnie Urquhart and Stuart MacLeod.


REFERENCES

- Baker, G.R., MacIntosh-Murray, A., Porcellato, C., Dionne, L., Stelmacovich, K. and Born, K. (2008) High performing healthcare systems: Delivering quality by design. Longwoods Publishing Corporation, Toronto.

- Tsourapas, A. and Frew, E. (2011) Evaluating “success” in programme budgeting and marginal analysis: A literature review. Journal of Health Services Research and Policy, 16, 177-183. doi:10.1258/jhsrp.2010.009053

- Mitton, C. and Donaldson, C. (2004) Priority setting toolkit: A guide to the use of economics in healthcare decision making. BMJ Books, London.

- Dionne, F., Mitton, C., Smith, N. and Donaldson, C. (2009) Evaluation of the impact of program budgeting and marginal analysis in Vancouver Island Health Authority. Journal of Health Services Research and Policy, 14, 234-242. doi:10.1258/jhsrp.2009.008182

- Dionne, F., Mitton, C., Smith, N. and Donaldson, C. (2008) Decision maker views on priority setting in the Vancouver Island Health Authority. Cost Effectiveness and Resource Allocation, 6, 13. doi:10.1186/1478-7547-6-13

- Lomas, J., Veenstra, G. and Woods, J. (1997) Devolving authority for health care in Canada’s provinces: 2. Backgrounds, resources and activities of board members. Canadian Medical Association Journal, 156, 513-520.

- Mitton, C. and Donaldson, C. (2003) Setting priorities and allocating resources in health regions: lessons from a project evaluating program budgeting and marginal analysis (PBMA). Health Policy, 64, 335-348. doi:10.1016/S0168-8510(02)00198-7

- Menon, D., Stafinski, T. and Martin, D. (2007) Priority-setting for healthcare: who, how, and is it fair? Health Policy, 84, 220-233. doi:10.1016/j.healthpol.2007.05.009

- Bate, A., Donaldson, C., Hunter, D.J., McCafferty, S., Robinson, S. and Williams, I. (2011) Implementation of the world class commissioning competencies: A survey and case-study evaluation. UK Department of Health: Policy Research Programme, London.

- Robinson, S., Dickinson, H., Williams, I., Freeman, T., Rumbold, B. and Spence, K. (2011) Setting priorities in health: Research report. Nuffield Trust, London & University of Birmingham, Health Services Management Centre, Birmingham.

- Schulz, A.J., Parker, E.A., Israel, B.A., Becker, A.B., Maciak, B.J. and Hollis, R. (1998) Conducting a participatory community-based survey for a community health intervention on Detroit’s east side. Journal of Public Health Management and Practice, 4, 10-24.

- Fasuru, B., Daley, C.M., Gajewski, B., Pacheco, C.M. and Choi, W.S. (2010) A longitudinal study of tobacco use among American Indian and Alaska Native tribal college students. BMC Public Health, 10.

- Aicken, C.R.H., Cassell, J.A., Estcourt, C.S., Keane, F., Brook, G., Rait, G., White, P.J. and Mercer, C.H. (2011) Rationale and development of a survey tool for describing and auditing the composition of, and flows between, specialist and community clinical services for sexually transmitted infections. BMC Health Services Research, 11, 30. doi:10.1186/1472-6963-11-30

- Alreck, P.L. and Settle, R.B. (1985) The survey research handbook. Irwin, Homewood.

- Eglene, O. and Dawes, S.S. (2006) Challenges and strategies for conducting international public management research. Administration and Society, 38, 596-622. doi:10.1177/0095399706291816

- Walker, R.M. and Enticott, G. (2004) Using multiple informants in public administration: Revisiting the managerial values and action debate. Journal of Public Administration Research and Theory, 14, 417-434. doi:10.1093/jopart/muh022

- Peacock, S., Mitton, C., Bate, A., McCoy, B. and Donaldson, C. (2009) Overcoming barriers to priority setting using interdisciplinary methods. Health Policy, 92, 124-132. doi:10.1016/j.healthpol.2009.02.006

- Bate, A., Donaldson, C. and Murtagh, M.J. (2007) Managing to manage healthcare resources in the English NHS? What can health economics teach? What can health economics learn? Health Policy, 84, 249-261. doi:10.1016/j.healthpol.2007.04.001

- Gibson, J.L., Martin, D.K. and Singer, P.A. (2004) Setting priorities in health care organizations: Criteria, processes, and parameters of success. BMC Health Services Research, 4, 25. doi:10.1186/1472-6963-4-25

- Martin, D.K., Giacomini, M. and Singer, P.A. (2002) Fairness, accountability for reasonableness, and the views of priority setting decision-makers. Health Policy, 61, 279-290. doi:10.1016/S0168-8510(01)00237-8

- Foglia, M.B., Pearlman, R.A., Bottrell, M.M., Altemose, J.K. and Fox, E. (2007) Priority setting and the ethics of resource allocation within VA healthcare facilities: Results of a survey. Organizational Ethics, 4, 83-96.

- Bruni, R., Laupacis, A. and Martin, D.K. (2008) Public engagement in setting priorities in health care. Canadian Medical Association Journal, 179, 15-18. doi:10.1503/cmaj.071656

- Mitton, C., Smith, N., Peacock, S., Evoy, B. and Abelson, J. (2009) Public participation in health care priority setting: A scoping review. Health Policy, 91, 219-228. doi:10.1016/j.healthpol.2009.01.005

- Lomas, J., Culyer, T., McCutcheon, C., McAuley, L. and Law, S. (2005) Conceptualizing and combining evidence for health system guidance. Canadian Health Services Research Foundation, Ottawa.

- Mitton, C., Dionne, F., Damji, R., Campbell, D. and Bryan, S. (2011) Difficult decisions in times of constraint: Criteria based resource allocation in the Vancouver Coastal Health Authority. BMC Health Services Research, 11, 169. doi:10.1186/1472-6963-11-169

- Teng, F., Mitton, C. and MacKenzie, J. (2007) Priority setting in the provincial health services authority: Survey of key decision-makers. BMC Health Services Research, 7, 84. doi:10.1186/1472-6963-7-84

- Kapiriri, L. and Martin, D.K. (2009) Successful priority setting in low and middle income countries: A framework for evaluation. Health Care Analysis, 18, 129-147. doi:10.1007/s10728-009-0115-2

- Smith, N., Mitton, C., Cornelissen, E., Gibson, J. and Peacock, S. (2012) Using evaluation theory in priority setting and resource allocation. Journal of Health Organization and Management, 26, 655-671.

- Sibbald, S.L., Gibson, J.L., Singer, P.A., Upshur, R. and Martin, D.K. (2010) Evaluating priority setting success in healthcare: A pilot study. BMC Health Services Research, 10, 131. doi:10.1186/1472-6963-10-131

- Sibbald, S.L., Singer, P.A., Upshur, R. and Martin, D.K. (2009) Priority setting: What constitutes success? A conceptual framework for successful priority setting. BMC Health Services Research, 9, 43. doi:10.1186/1472-6963-9-43

- Enticott, G., Boyne, G.A. and Walker, R.W. (2009) The use of multiple informants in public administration research: Data aggregation using organizational echelons. Journal of Public Administration Research and Theory, 19, 229-253. doi:10.1093/jopart/mun017

- Starbuck, W.H. and Mezias, J.M. (1996) Opening Pandora’s box: Studying the accuracy of managers’ perceptions. Journal of Organizational Behavior, 17, 99-117. doi:10.1002/(SICI)1099-1379(199603)17:2<99::AID-JOB743>3.0.CO;2-2

- Dillman, D. (2007) Mail and internet surveys: The tailored design method. 2nd Edition, John Wiley and Sons, Hoboken.

- Cook, C., Heath, F. and Thompson, R.L. (2000) A meta-analysis of response rates in web- or internet-based surveys. Educational and Psychological Measurement, 60, 821-836. doi:10.1177/00131640021970934

- Shih, T. and Fan, X. (2009) Comparing response rates in e-mail and paper surveys: A meta-analysis. Educational Research Review, 4, 26-40. doi:10.1016/j.edurev.2008.01.003