Advances in Linear Algebra & Matrix Theory

Vol.06 No.01(2016), Article ID:64247,10 pages

10.4236/alamt.2016.61001

Dykstra’s Algorithm for the Optimal Approximate Symmetric Positive Semidefinite Solution of a Class of Matrix Equations*

Chunmei Li#, Xuefeng Duan, Zhuling Jiang

College of Mathematics and Computational Science, Guilin University of Electronic Technology, Guilin, China

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 4 December 2015; accepted 4 March 2016; published 7 March 2016

ABSTRACT

Dykstra’s alternating projection algorithm was proposed to treat the problem of finding the projection of a given point onto the intersection of some closed convex sets. In this paper, we first apply Dykstra’s alternating projection algorithm to compute the optimal approximate symmetric positive semidefinite solution of the matrix equations AXB = E, CXD = F. If we choose the initial iterative matrix X0 = 0, the least Frobenius norm symmetric positive semidefinite solution of these matrix equations is obtained. A numerical example shows that the new algorithm is feasible and effective.

Keywords:

Matrix Equation, Dykstra’s Alternating Projection Algorithm, Optimal Approximate Solution, Least Norm Solution

1. Introduction

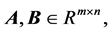

Throughout this paper, we use  and

and  to stand for the set of

to stand for the set of  real matrices and

real matrices and  symmetric positive semidefinite matrices, respectively. We denote the transpose and Moore-Penrose generalized inverse of the matrix A by

symmetric positive semidefinite matrices, respectively. We denote the transpose and Moore-Penrose generalized inverse of the matrix A by  and

and , respectively. The symbol

, respectively. The symbol  stands for

stands for  identity matrix. For

identity matrix. For

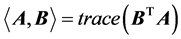

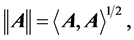

denotes the inner product of the matrix A and B. The induced norm is the so-called Frobenius norm, that is,

denotes the inner product of the matrix A and B. The induced norm is the so-called Frobenius norm, that is,  then

then  is a real Hilbert space. In order to develop this paper, we need to give the following definition.

is a real Hilbert space. In order to develop this paper, we need to give the following definition.

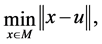

Definition 1.1. [1] Let M be a closed convex subset in a real Hilbert space H and u be a point in H, then the point in M nearest to u is called the projection of u onto M and denoted by , that is to say,

, that is to say,  is the solution of the following minimization problem

is the solution of the following minimization problem

(1.1)

(1.1)

i.e.

In this paper, we consider the matrix equations

and their matrix nearness problem.

Problem I. Given matrices

where

Obviously,

This kind of matrix nearness problem occurs frequently in experimental design, see for instance [2] [3] . Here

Dykstra’s alternating projection algorithm was proposed by Dykstra [18] to treat the problem of finding the projection of a given point onto the intersection of some closed convex sets. It is based on a clear modification of the classical alternating projection algorithm first proposed by Von Neumann [19] , and studied later by Cheney and Goldstein [20] . For an application of Dykstra’s alternating projection algorithm to compute the nearest diagonally dominant matrix see [21] . For a complete survey on Dykstra’s alternation projection algorithm and applications see Deutsch [22] .

In this paper, we propose a new algorithm to compute the optimal approximate symmetric positive semidefinite solution of Equation (1.3). We state Problem I as the minimization of a convex quadratic function over the intersection of three closed convex sets in the vector space

2. Dykstra’s Algorithm for Solving Problem I

In this section, we apply Dykstra’s alternating projection algorithm to compute the optimal approximate symmetric positive semidefinite solution of Equation (1.3). We first introduce Dykstra’s alternating projection algorithm and its convergence theorem.

In order to find the projection of a given point onto the intersection of a finite number of closed convex sets

Dykstra’s Algorithm 2.1

1) Given the initial value

2) Set

3) For

For

End

End

The utility of Dykstra’s algorithm 2.1 is based on the following theorem (see [23] - [25] and the references therein).

Lemma 2.1. ( [23] , Theorem 2) Let

Now we begin to use Dykstra’s algorithm 2.1 to solve Problem I. Firstly, we define three sets

It is easy to know that

On the other hand, it is easy to verify that

After defining the sets

By Definition 1.1 and noting that the equalities (2.2) and (1.2), it is easy to find that

Therefore, Problem I can be converted equivalently into finding the projection

We can see that the key problems to realize Dykstra’s algorithm 2.1 are how to compute the projections

Theorem 2.1. Suppose that the set

Proof. By Definition 1.1, we know that the projection

Now we begin to solve the minimization problem (2.4). We first characterize the solution set

where

and

Substituting (2.5) into the matrix equation

which implies

Let

Then the matrix equation

which implies that

By (2.8) we have

Noting that the set

where

Consequently,

By (2.13) we know that

Therefore, the solution of the minimization problem (2.4) is

Combining (2.14) and (2.5)-(2.7), we have

The theorem is proved.

Theorem 2.2. Suppose that the set

Proof. The proof is similar to that of Theorem 2.1 and is omitted here.

For any

where

Theorem 2.3. For a given

where

By Dykstra’s algorithm 2.1 and noting that the projection

Algorithm 2.2

1) Set the initial value

2) Set

3) For

For

End

End

By Lemma 2.1 and (2.1), and noting that

Theorem 2.4. If the set

Combining Theorem 2.4 and the equalities (2.3) and (2.2), we have

Theorem 2.5. If the set

3. Numerical Experiments

In this section, we give a numerical example to illustrate that the new algorithm is feasible and effective to compute the optimal approximate symmetric positive semidefinite solution of the matrix equation (1.3). All programs are written in M ATLAB 7.8. We denote

and use the practical stopping criterion

Example 3.1. Consider the matrix equation (1.3) with

Here we use

1) Let

and its residual error

By concrete computations, we know that the distance from

2) Let

and its residual error

By concrete computations, we know that the distance from

3) Let

which is also the least Frobenius norm symmetric positive semidefinite solution of the matrix equations (1.3), and its residual error

By concrete computations, we know that the distance from

Example 4.1 shows that Algorithm 2.2 is feasible and effective to compute the optimal approximate symmetric positive semidefinite solution of the matrix equations (1.3).

4. Conclusion

In this paper, we state Problem I as the minimization of a convex quadratic function over the intersection of three closed convex sets in the Hilbert space

Cite this paper

ChunmeiLi,XuefengDuan,ZhulingJiang, (2016) Dykstra’s Algorithm for the Optimal Approximate Symmetric Positive Semidefinite Solution of a Class of Matrix Equations. Advances in Linear Algebra & Matrix Theory,06,1-10. doi: 10.4236/alamt.2016.61001

References

- 1. Bauschke, H.H. and Borwein, J.M. (1994) Dykstra’s Alternating Projection Algorithm for Two Sets. Journal of Approximation Theory, 79, 418-443.

http://dx.doi.org/10.1006/jath.1994.1136 - 2. Dai, H. and Lancaster, P. (1996) Linear Matrix Equations from an Inverse Problem of Vibration Theory. Linear Algebra and Its Applications, 246, 31-47.

http://dx.doi.org/10.1016/0024-3795(94)00311-4 - 3. Meng, T. (2001) Experimental Design and Decision Support. In: Leondes, C., Ed., Expert System the Technology of Knowledge Management and Decision Making for 21st Century, Volume 1, Academic Press, San Diego.

- 4. Navarra, A., Odell, P.L. and Young, D.M. (2001) A Representation of the General Common Solution to the Matrix Equations A1XB1=C1, A2XB2=C2 with Applications. Computers & Mathematics with Applications, 41, 929-935.

http://dx.doi.org/10.1016/S0898-1221(00)00330-8> - 5. Wang, Q.W. (2004) A System of Matrix Equations over Arbitrary Regular Rings with Identity. Linear Algebra and Its Applications, 384, 44-53.

http://dx.doi.org/10.1016/j.laa.2003.12.039 - 6. Liao, A.P., Lei, Y. and Yuan, S.F. (2006) The Matrix Nearness Problem for Symmetric Matrices Associated with the Matrix Equations [ATXA,BTXB]=[C,D]. Linear Algebra and Its Applications, 418, 939-954.

http://dx.doi.org/10.1016/j.laa.2006.03.032 - 7. Liao, A.P. and Lei, Y. (2005) Least-Squares Solution with the Minimum-Norm for the Matrix Equations [AXB,GXH]=[C,D]. Computers & Mathematics with Applications, 50, 539-549.

http://dx.doi.org/10.1016/j.camwa.2005.02.011 - 8. Sheng, X.P. and Chen, G.L. (2007) A Finite Iterative Method for Solving a Pair of Linear Matrix Equation (AXB,CXD)=(C,D). Applied Mathematics and Computation, 189, 1350-1358.

http://dx.doi.org/10.1016/j.amc.2006.12.026 - 9. Ding, J., Liu, Y.J. and Ding, F. (2010) Iterative Solutions to Matrix Equation of the Form AiXBi = fi. Computers & Mathematics with Applications, 59, 3500-3507.

http://dx.doi.org/10.1016/j.camwa.2010.03.041 - 10. Peng, Y.X., Hu, X.Y. and Zhang, L. (2006) An Iterative Method for Symmetric Solutions and Optimal Approximation Solution of the System of Matrix Equations A1XB1=C1, A2XB2=C2. Applied Mathematics and Computation, 183, 1127-1137.

http://dx.doi.org/10.1016/j.amc.2006.05.124 - 11. Chen, Y.B., Peng Z.Y. and Zhou, T.J. (2010) LSQR Iterative Method Common Symmetric Solutions to Matrix Equations AXB =E and CXD = F. Applied Mathematics and Computation, 217, 230-236.

http://dx.doi.org/10.1016/j.amc.2010.05.053 - 12. Cai, J. and Chen, G.L. (2009) An Iteraive Algorithm for the Least Squares Bisymmetric Solutions of the Matrix Equations 1XB1=C1, A22=2. Mathematical and Computer Modelling, 50, 1237-1244.

http://dx.doi.org/10.1016/j.mcm.2009.07.004 - 13. Dehghan, M. and Hajarian, M. (2008) An Iteraive Algorithm for Solving a Pair of Matrix Equations AXB = E, CXD = F over Generalized Centro-Symmetric Matrices. Computers & Mathematics with Applications, 56, 3246-3260.

http://dx.doi.org/10.1016/j.camwa.2008.07.031 - 14. Li, F.L., Hu, X.Y. and Lei, Z. (2008) The Generalized Reflexive Solution for a Class of Matrix Equations (AX=B, XC=D). Acta Mathematica Scientia, 28, 185-193.

http://dx.doi.org/10.1016/S0252-9602(08)60019-3 - 15. Peng, Z.H., Hu, X.Y. and Zhang, L. (2006) An Efficient Algorithm for the Least-Squares Reflexive Solution of the Matrix Equation A1XB1=C1, A2XB2=C2. Applied Mathematics and Computation, 181, 988-999.

http://dx.doi.org/10.1016/j.amc.2006.01.071 - 16. Yuan, S.F., Wang, Q.W. and Xiong, Z.P. (2013) Linear Parameterized Inverse Eigenvalue Problem of Bisymmetric Matrices. Linear Algebra and Its Applications, 439, 1990-2007.

http://dx.doi.org/10.1016/j.laa.2013.05.026 - 17. Yuan, S.F. and Liao, A.P. (2014) Least Squares Hermitian Solution of the Complex Matrix Equation AXB+CXD=E with the Least Norm. Journal of the Franklin Institute, 351, 4978-4997.

http://dx.doi.org/10.1016/j.jfranklin.2014.08.003 - 18. Dykstra, R.L. (1983) An Algorithm for Restricted Least-Squares Regression. Journal of the American Statistical Association, 78, 837-842.

http://dx.doi.org/10.1080/01621459.1983.10477029 - 19. Von Numann, J. (1950) Functional Operators Volume II. Princeton University Press, Princeton.

- 20. Cheney, W. and Goldstein, A. (1959) Proximity Maps for Convex Sets. Proceedings of the American Mathematical Society, 10, 448-450.

http://dx.doi.org/10.1090/S0002-9939-1959-0105008-8 - 21. Mendoza, M., Raydan, M. and Tarazaga, P. (1998) Computing the Nearest Diagonally Dominant Matrix. Numerical Linear Algebra with Applications, 5, 461-474.

http://dx.doi.org/10.1002/(SICI)1099-1506(199811/12)5:6<461::AID-NLA141>3.0.CO;2-V - 22. Deutsch, F. (2001) Best Approximation in Inner Product Spaces. Springer, New York.

http://dx.doi.org/10.1007/978-1-4684-9298-9 - 23. Boyle, J.P. and Dykstra, R.L. (1986) A Method for Finding Projections onto the Intersections of Convex Sets in Hilbert Spaces. Lecture Notes in Statistics, 37, 28-47.

- 24. Escalante, R. and Raydan, M. (1996) Dykstra’s Algorithm for a Constrained Least-Squares Matrix Problem. Numerical Linear Algebra with Applications, 3, 459-471.

http://dx.doi.org/10.1002/(SICI)1099-1506(199611/12)3:6<459::AID-NLA82>3.0.CO;2-S - 25. Morillas, P.M. (2005) Dykstra’s Algorithm with Strategies for Projecting onto Certain Polyhedral Cones. Applied Mathematics and Computation, 167, 635-649.

http://dx.doi.org/10.1016/j.amc.2004.06.136 - 26. Higham, N. (1988) Computing a Nearest Symmetric Positive Semidefinite Matrix. Linear Algebra and Its Applications, 103, 103-118.

http://dx.doi.org/10.1016/0024-3795(88)90223-6

NOTES

*The work was supported by National Natural Science Foundation of China (No.11561015; 11261014; 11301107), Natural Science Foundation of Guangxi Province (No.2012GXNSFBA053006; 2013GXNSFBA019009).

#Corresponding author.