Journal of Computer and Communications

Vol.04 No.11(2016), Article ID:70458,14 pages

10.4236/jcc.2016.411001

Image-Based Mobile Robot Guidance System by Using Artificial Ceiling Landmarks

Ching-Long Shih*, Yu-Te Ku

Department of Electrical Engineering, National Taiwan University of Science and Technology, Taiwan

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: July 20, 2016; Accepted: September 4, 2016; Published: September 8, 2016

ABSTRACT

This paper presents an image-based mobile robot guidance system for an indoor space with installed artificial ceiling landmarks. The overall system, including omni-directional mobile robot motion control, landmark image processing and image recognition, is implemented on a single FPGA chip with one CMOS image sensor. The proposed feature representation of the artificial ceiling landmarks is invariant with respect to rotation and translation. One unique feature of the proposed ceiling landmark recognition system is that the feature points of landmarks are determined by topological information from both the foreground and background. To enhance recognition accuracy, landmark classification is performed after the mobile robot is moved to a position such that the ceiling landmark is located in the upright-top corner position of the robot’s camera image. The accuracy of the proposed artificial ceiling landmark recognition system using the nearest neighbor classification is 100% in our experiments.

Keywords:

Mobile Robot Guidance System, Artificial Ceiling Landmark, Image Processing, NCC (Nearest Neighbor Classification), FPGA

1. Introduction

The basic function of AGVs and mobile robots is automated navigation to recognized sites, where they stop and perform tasks. Thus, navigation and guidance control are two of the most critical parts of mobile robot systems. An essential issue in mobile robot navigation and localization is the process by which a robot identifies its position. A variety of AGV and mobile robot navigation technologies have been used in the past, including tape, electromagnetic (e.g. RFID), optical, laser, inertial, GPS, ultrasonic, vision and image recognition guidance [1]. The accuracy and limitations of these sensors differ considerably from one to another. Among these sensors, cameras, which are known for their low price, ease of use and ability to capture abundant information, have been increasingly applied to vision-based mobile robot localization. Because they are flexible and can be set up without guidance paths, vision and image based recognition systems have become the trend in mobile robot guidance technology [2] [3].

The simultaneous localization and mapping (SLAM) approach has drawn great attention among researchers in the mobile robotics field [3]. The SLAM algorithm assists autonomous robot navigation in large environments where precise maps are not available. In SLAM, a map of an unknown environment is built from a sequence of landmark measurements performed by a moving robot. SIFT/SURF algorithms have successfully extracted feature information from an unknown environment and created the environmental map based on the feature points [4] [5]. Most SLAM algorithms rely on unique environmental features or use artificial landmarks captured in camera images. The extracted features of landmarks are used to increase the accuracy of the estimated position of the robot. Robot position is calibrated through the following steps: artificial landmark recognition, current position calculation, navigation towards the landmark until directly underneath, and calibration of the robot pose using information from the landmark.

Artificial landmarks may carry additional information about the environment and may be used to assist a robot in localization and navigation. Artificial landmarks are commonly used for navigation and map building in indoor environments. Compared with natural landmarks, artificial landmarks are designed with particular colors and shapes to greatly reduce identification difficulty. This approach may increase the accuracy in localization and positioning for vision based mobile robot guidance systems. Landmark recognition is of great significance to robotic autonomous navigation or task execution. One commonly used artificial landmark is a visually printed landmark, such as a QR code. There are several ways to detect a QR code in an image. The detection quality turns out to be very sensitive to the distance and the angle between the camera and the QR code plane [6]. A robot navigation control scheme based on dead-reckoning localization and refined by artificial ceiling landmarks set up in the environment for indoor positioning and navigation has been reported in [7]. Robot localization could be refined using fixed/static artificial QR-code landmarks set up in the environment for indoor positioning and navigation [8]. Since it is difficult to position the robot precisely perpendicular to the artificial landmark using a single camera, the system has position localization error. A visual odometer technique based on artificial landmarks is described in [9]; however, it is still subject to accumulated error.

To overcome some difficulties of the previous works in [6] - [9], this paper presents an image-based mobile robot localization and guidance system in an indoor space with installed artificial ceiling landmarks. Most previous approaches to artificial landmark pattern matching focus only on foreground information. In contrast, in the proposed ceiling landmark recognition system the feature points of landmarks are determined by topological information from both foreground and background. This work focuses on an entirely FPGA-based mobile robot guidance control system with only one CMOS image sensor. To enhance recognition accuracy, landmark recognition/classification is performed after the mobile robot has moved to a position such that the ceiling landmark is located in the upright-top position of the robot’s camera image. The proposed system achieved a recognition accuracy of 100% for 36 digit and upper-case letter ceiling landmarks using nearest neighbor classification. One requirement of the system is that one of the ceiling landmarks on the (route) map is visible to the robot’s camera at power up for localization.

The contributions of this work are as follows: 1) Only one CMOS image camera is required for the proposed mobile robot guidance control system. 2) The proposed foreground/background feature representation of the artificial ceiling landmarks is invariant with respect to rotation and translation. 3) The overall system, including omni-directional mobile robot motion control and landmark image processing and recognition, is implemented on a single FPGA chip.

2. Omni-Directional Mobile Robot System

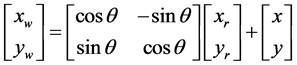

The coordinate systems of the mobile robot system, as shown in Figure 1, include the world coordinate frame, the robot coordinate frame, the camera pixel coordinate frame (c, r), and the landmark coordinate frame (u, v). The robot pose is the position and orientation of the robot frame with respect to the world coordinate frame. The camera image has a scale of 1.225 mm per pixel. The origin of the robot coordinate frame is located at the center of the LCD image screen, at pixel coordinate (400, 240). The transformation from robot coordinates to world coordinates is

(1)

and the transformation from camera coordinate to robot coordinate is

(2)
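As a minimal sketch of these two transformations, the Python below assumes a pure scale-and-offset mapping from pixels to the robot frame and a standard planar rigid-body transform from the robot frame to the world frame. The scale of 1.225 mm per pixel and the image center (400, 240) come from the text; the axis directions of the image relative to the robot frame, the pose symbols `x`, `y`, `theta`, and the function names `pixel_to_robot` and `robot_to_world` are assumptions made for illustration and may differ from the authors' equations.

```python
import math

MM_PER_PIXEL = 1.225            # image scale stated in the text
CENTER_C, CENTER_R = 400, 240   # image center = robot frame origin (from the text)


def pixel_to_robot(c, r):
    """Map a pixel (c, r) to robot-frame coordinates in millimetres.

    Assumed convention: +x along increasing column, +y along decreasing row.
    """
    x_r = (c - CENTER_C) * MM_PER_PIXEL
    y_r = (CENTER_R - r) * MM_PER_PIXEL
    return x_r, y_r


def robot_to_world(x_r, y_r, x, y, theta):
    """Planar rigid transform: rotate by theta, then translate by (x, y).

    (x, y, theta) is the assumed robot pose in the world frame.
    """
    x_w = x + x_r * math.cos(theta) - y_r * math.sin(theta)
    y_w = y + x_r * math.sin(theta) + y_r * math.cos(theta)
    return x_w, y_w
```

Under these assumptions, a landmark seen at the image center maps to the robot origin, so its world position equals the robot position.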

Figure 1. Coordinate systems of an image-based omni-directional mobile robot and ceiling land- marks.

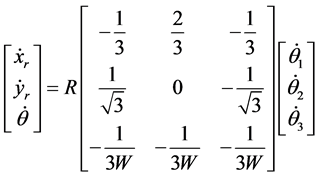

The mobile robot consists of three omni-directional driving wheels with radius R, and its body platform is circular with radius W. Given the angular speed of each driving wheel, the velocity kinematic equation of the mobile robot is

(3)

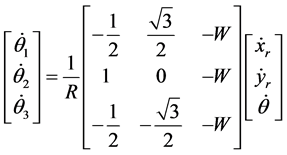

and the inverse velocity kinematic equation is

(4)

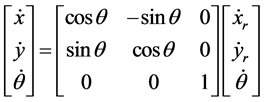

The velocity kinematics between the world frame and robot frame is

(5)
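The following Python sketch illustrates the kind of velocity kinematics described in this section for a three-wheel omni-directional platform. It uses the textbook model for three wheels spaced 120 degrees apart on the rim of the platform; the wheel placement angles, the sign conventions and the function names are assumptions and are not taken from the authors' equations. R and W use the values reported in Section 5.2.

```python
import math

R = 5.093    # wheel radius in cm (Section 5.2)
W = 22.746   # platform radius in cm (Section 5.2)
# Assumed wheel placement: three wheels 120 degrees apart on the platform rim.
WHEEL_ANGLES = [math.radians(a) for a in (0.0, 120.0, 240.0)]


def inverse_kinematics(vx, vy, wz):
    """Wheel angular speeds (rad/s) for a robot-frame velocity (cm/s, cm/s, rad/s)."""
    return [(-math.sin(a) * vx + math.cos(a) * vy + W * wz) / R
            for a in WHEEL_ANGLES]


def forward_kinematics(wheel_speeds):
    """Robot-frame velocity from wheel speeds (closed form for 120-degree spacing)."""
    rim = [R * w for w in wheel_speeds]  # rim speed of each wheel in cm/s
    vx = (2.0 / 3.0) * sum(-math.sin(a) * s for a, s in zip(WHEEL_ANGLES, rim))
    vy = (2.0 / 3.0) * sum(math.cos(a) * s for a, s in zip(WHEEL_ANGLES, rim))
    wz = sum(rim) / (3.0 * W)
    return vx, vy, wz


def robot_to_world_velocity(vx, vy, wz, theta):
    """Rotate the robot-frame translational velocity into the world frame."""
    return (vx * math.cos(theta) - vy * math.sin(theta),
            vx * math.sin(theta) + vy * math.cos(theta),
            wz)
```

With this model a pure rotation drives all three wheels at the same speed, W/R times the body rotation rate, which is a quick sanity check on any implementation.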

3. Ceiling Landmark Feature Points

A ceiling landmark is an artificial character symbol that consists of a blue foreground and a yellow background, as shown in Figure 2. Let F and B denote the centers of the foreground (blue) and background (yellow) pixels, respectively; it is assumed that F and B do not coincide. The origin of the landmark (u, v) frame is located at the midpoint of the line from F to B, the u-axis points from F to B, and the v-axis is perpendicular to the u-axis. There are 4 feature points used to encode each ceiling landmark.

Figure 2. Landmark feature points in the object frame.

These feature points are the centers of the foreground and background pixels, respectively, of the landmark in the first and fourth quadrants of the (u, v) frame.

The computation of feature points in the landmark frame is straightforward. First, the ceiling landmark foreground and background centers and its feature points are obtained from the camera image processing system. The origin and orientation of the landmark frame with respect to the robot coordinate frame then follow from the definition above: the origin is the midpoint of F and B, and the orientation is the direction of the line from F to B. The feature points are then expressed in the landmark frame.
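The construction of the landmark frame can be sketched in Python as follows. The sketch takes F, B and a feature point as (x, y) pairs in the robot frame, places the origin at the midpoint of F and B, points the u-axis from F to B as stated earlier in this section, and assumes the v-axis is the u-axis rotated counterclockwise by 90 degrees. The function names and the direction of the v-axis are assumptions for illustration.

```python
import math


def landmark_frame(F, B):
    """Origin and orientation of the (u, v) frame from centers F and B."""
    origin = ((F[0] + B[0]) / 2.0, (F[1] + B[1]) / 2.0)
    phi = math.atan2(B[1] - F[1], B[0] - F[0])  # u-axis points from F to B
    return origin, phi


def to_landmark_frame(p, origin, phi):
    """Express a robot-frame point p in the landmark (u, v) frame."""
    dx, dy = p[0] - origin[0], p[1] - origin[1]
    u = math.cos(phi) * dx + math.sin(phi) * dy
    v = -math.sin(phi) * dx + math.cos(phi) * dy
    return u, v
```

Because both the origin and the axes are derived from the landmark itself, rotating or translating the landmark in the image leaves the (u, v) coordinates of its feature points unchanged, which is the invariance property claimed for the representation.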

The procedure for building the landmark feature template table is as follows. Navigate the mobile robot underneath each ceiling landmark, such that the robot frame origin is at the midpoint of the landmark's foreground and background center points, F and B. Rotate the mobile robot in place so that the robot frame’s x-axis is aligned with the world frame's x-axis. Record landmark’s orientation angle

Figure 3 shows 36 experimental ceiling landmarks, including digits 0 - 9 and letters A~Z. Figure 4 shows the feature points of each ceiling landmark in Figure 3. Table 1 shows the nearest neighbor for each landmark and the 1-norm distance between each landmark’s feature points and those of its nearest neighbor, where the 1-norm distance is the sum of the absolute values of the differences. Note that the feature points of a landmark depend on both foreground and background shape patterns. Therefore, it is not surprising that the digit 0 and the letter O (or the digits 3 and 8) have distinct feature points.

Figure 3. Experimental ceiling landmarks, digits 0 - 9 and letters A~Z.

Figure 4. Feature points

Table 1. The nearest neighbor (NN) and distance of digits 0 - 9 and letters A~Z.

4. Image-Based Guidance Control

A robot road map is a deterministic orientation (angle) table for guiding the mobile robot from a current landmark to a target landmark. To illustrate, a simple example of a robot road map is presented in Figure 5 and Table 2. The mobile robot landmark guidance control procedure is as follows (Figure 6):

Step 1: Wait for new target landmark command.

Step 2: Move toward next landmark.

Step 3: Arrive at the next landmark.

Step 4: If current landmark is the target landmark then go to Step 1; otherwise, go to Step 2.
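Steps 2 to 4 above can be sketched as a small loop in Python. The route map is modeled as a dictionary from (current landmark, target landmark) to a heading angle, in line with the description of the road map as an orientation table. The function name `guide`, the callback `move_along` (which stands in for moving and recognizing the next landmark), the `max_hops` guard, and all angles and layout entries in the example are hypothetical and are not taken from Table 2.

```python
def guide(route_map, start, target, move_along, max_hops=100):
    """Follow the route map from start to target; return the landmarks visited.

    route_map[(current, target)] -> heading angle to leave `current` with.
    move_along(current, heading) -> landmark recognized on arrival (Steps 2-3).
    """
    current = start
    path = [current]
    for _ in range(max_hops):
        if current == target:          # Step 4: target reached, wait for a command
            return path
        heading = route_map[(current, target)]
        current = move_along(current, heading)
        path.append(current)
    raise RuntimeError("target not reached within max_hops")


# Hypothetical data: headings in degrees and a simulated floor layout.
ROUTE_MAP = {("2", "7"): 180.0, ("1", "7"): 90.0, ("6", "7"): 0.0}
LAYOUT = {("2", 180.0): "1", ("1", 90.0): "6", ("6", 0.0): "7"}

if __name__ == "__main__":
    print(guide(ROUTE_MAP, "2", "7", lambda cur, h: LAYOUT[(cur, h)]))
```

The hop limit is a design choice of this sketch: it keeps a misrecognized landmark from sending the loop around indefinitely.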

Figure 5. A simple example of a robot road map, where each arrow line shows a landmark’s orientation angle

Figure 6. Landmark-based mobile robot guidance control state diagram.

Table 2. (a) Robot route map (Figure 5); (b) Route map landmark orientation.

4.1. Move toward Next Landmark

Assume that the mobile robot is located directly underneath landmark i. Robot frame orientation to world frame (landmark map) is

where

and the angular speeds of driving wheels are planned as

The current landmark leaves the camera image when

4.2. Arrive at the Next Landmark

If new feature points show up and

The mobile robot translates a relative movement

The angular speeds of the driving wheels are planned as

After time T the next landmark is located directly above the mobile robot, and then landmark recognition is performed. The identified landmark based on nearest neighbor classification is the landmark (in Figure 3) which has the minimum value of
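A minimal Python sketch of nearest neighbor classification with the 1-norm distance (the sum of absolute differences, as defined for Table 1) is given below. Each landmark is assumed to be represented by a flat tuple of feature-point coordinates; the function names and the template values used in the usage note are hypothetical.

```python
def l1_distance(a, b):
    """1-norm distance: sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def classify(features, templates):
    """Return (label, distance) of the template nearest to `features`."""
    return min(
        ((label, l1_distance(features, t)) for label, t in templates.items()),
        key=lambda item: item[1],
    )
```

For example, with the made-up templates `{"A": (0, 0, 1, 1), "B": (5, 5, 5, 5)}`, the observation `(0, 1, 1, 1)` is assigned to "A" at distance 1.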

5. System Implementation and Experiments

The proposed image-based guidance control mobile robot system, as shown in Figure 7, is built on the Altera DE2-115 FPGA development board running at a system clock rate of 50 MHz. An LCD display with a camera module (VEEK_MT) is connected to the DE2-115 and mounted on the top of the mobile robot. All FPGA modules are implemented using the Verilog HDL and synthesized by the Altera Quartus II EDA tool.

Figure 7. Experimental finger-tip writing and recognition process.

5.1. Ceiling Landmark Recognition

The landmark detection image processing module captures real-time images with a CMOS image sensor. Figure 8 shows the functional block diagram of the image processing module. The processing pipeline includes color space transformation, histogram equalization, color detection, filtering, object tracking and recording.
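The color detection stage and the computation of the centers F and B can be illustrated with a short Python sketch. It classifies each RGB pixel as blue (foreground), yellow (background) or neither using fixed thresholds, and accumulates coordinate sums in a single pass over the rows, in the spirit of a line-by-line pipeline. The thresholds, the RGB input format and the function names are assumptions; the actual system runs in FPGA logic and includes color space transformation, histogram equalization and filtering, none of which are modeled here.

```python
def is_blue(r, g, b):
    """Hypothetical threshold test for the blue foreground."""
    return b > 128 and r < 100 and g < 100


def is_yellow(r, g, b):
    """Hypothetical threshold test for the yellow background."""
    return r > 128 and g > 128 and b < 100


def color_centers(image):
    """Return (F, B): mean (c, r) of blue and of yellow pixels, or None if absent.

    `image` is a list of rows; each pixel is an (r, g, b) tuple.
    """
    sums = {"F": [0, 0, 0], "B": [0, 0, 0]}  # [sum of c, sum of r, pixel count]
    for row_idx, row in enumerate(image):
        for col_idx, (r, g, b) in enumerate(row):
            key = "F" if is_blue(r, g, b) else ("B" if is_yellow(r, g, b) else None)
            if key is not None:
                acc = sums[key]
                acc[0] += col_idx
                acc[1] += row_idx
                acc[2] += 1

    def center(acc):
        return (acc[0] / acc[2], acc[1] / acc[2]) if acc[2] else None

    return center(sums["F"]), center(sums["B"])
```

Only running sums and counts are kept, so the centers can be produced at the end of a frame without storing the whole image.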

The proposed ceiling landmark recognition system is implemented as a dedicated logic circuit on an FPGA chip. Real-time image input is fed to the FPGA chip line by line. Up to 5 rows of an image are stored in line-buffers (FIFO) in a pipelined fashion. The image processing clock is 96 MHz. Raw image data is captured by a color camera with

Figure 8. The image processing of landmark detection.

Figure 9. Experimental ceiling landmark example and landmark detection and classification result. (a) ceiling landmark “7”; (b) result of landmark classification.

Figure 9 shows an example ceiling landmark and the image processing result for landmark detection and classification. Landmark identification accuracy was 100% in our experiments: we tested each landmark 10 times from different orientations (a total of 360 trials), and no recognition error occurred.

5.2. Mobile Robot Speed Control

The mobile robot consists of 3 omni-directional driving wheels, each actuated by a BLDC servo motor through a gear train of ratio 246:1. The radius of the robot platform is W = 22.746 cm and the radius of each wheel is R = 5.093 cm. The motor drive is under pulse-type motor position control with 100 steps per motor revolution, i.e. 100 × 246 = 24600 steps per wheel revolution, or 24600/360 = 68.33 step/deg. The maximum position pulse command rate is

A simple rectangular velocity profile PTP (point-to-point) motion control is applied to each servo motor to achieve the desired mobile robot motion trajectory. The design goal of the digital rectangular velocity profile for each servo motor is to exactly generate a train of

constant output frequency

Figure 10 shows a finite-state machine approach for the implementation of the frequency divider method.
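The arithmetic behind the pulse commands and the idea of a frequency divider can be sketched in Python as follows. The step counts come from the text (100 steps per motor revolution and a 246:1 gear ratio). The use of the 50 MHz system clock as the divider input, the function names and the counter structure are assumptions for illustration; the authors' finite-state machine in Figure 10 may be organized differently.

```python
STEPS_PER_MOTOR_REV = 100
GEAR_RATIO = 246
STEPS_PER_WHEEL_REV = STEPS_PER_MOTOR_REV * GEAR_RATIO   # 24600 steps
STEPS_PER_DEG = STEPS_PER_WHEEL_REV / 360.0              # about 68.33 step/deg
CLOCK_HZ = 50_000_000                                    # assumed divider input clock


def pulses_for_wheel_angle(deg):
    """Number of position pulses that turn a wheel by `deg` degrees."""
    return round(abs(deg) * STEPS_PER_WHEEL_REV / 360)


def divider_for(pulses, duration_s):
    """Clock ticks between pulses so that `pulses` pulses span about duration_s."""
    if pulses == 0:
        return None
    return max(1, round(CLOCK_HZ * duration_s / pulses))


def pulse_ticks(pulses, divider):
    """Simulate the divider counter; yield the clock tick of each emitted pulse."""
    count = 0
    tick = 0
    emitted = 0
    while emitted < pulses:
        tick += 1
        count += 1
        if count == divider:   # counter wraps: emit one pulse
            count = 0
            emitted += 1
            yield tick
```

As a usage example, a quarter turn of a wheel needs `pulses_for_wheel_angle(90)` = 6150 pulses, and spreading 5000 pulses over one second at 50 MHz gives a divider of 10000 clock ticks per pulse.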

5.3. User Command Interface and Experiment

The user command interface for the experimental mobile robot guidance system, as shown in Figure 11, is a vision-based fingertip-writing character recognition system, proposed by the same authors in [10]. In the beginning, the mobile robot is located directly underneath a landmark, for instance, landmark “2” of the route map in Figure 5, and waits for a command to move to a new target landmark location. The user orders the mobile robot to move to the target landmark “7” by writing the digit “7”, as shown in Figure 12. Figure 13 shows the recorded path of the mobile robot moving from Landmark “2” to Landmark “7”, following the route map in Table 2.

Figure 10. Finite-state machine implementation of the frequency divider.

Figure 11. Experimental mobile robot ceiling landmark guidance control system.

Figure 12. User input target landmark location.

Figure 13. Robot moves from Landmark “2” to Landmark “7” (route map in Table 2). (a) start location: Landmark “2”; (b) leaves “2”; (c) moves from “2” to “1”; (d) arrives at “1”; (e) leaves “1”; (f) moves from “1” to “6”; (g) arrives at “6”; (h) leaves “6”; (i) moves from “6” to “7”; (j) arrives at “7”; (k) final location: Landmark “7”.

6. Conclusion

We have presented a simple but effective vision-based artificial ceiling landmark recognition and mobile robot indoor guidance and localization system. The feature points of ceiling landmarks are characterized by topological information from both foreground and background. The landmark identification accuracy by the nearest neighbor classification is 100% in our tests. One requirement of the proposed mobile robot guidance control system is that one of the ceiling landmarks on the route map is visible to the robot’s camera in the beginning. The contributions of this work are as follows: 1) Only one CMOS image camera is required for the proposed mobile robot guidance control system. 2) The proposed foreground/background feature representation of the artificial ceiling landmarks is invariant with respect to rotation and translation. 3) The overall system, including omni-directional mobile robot motion control and landmark image processing and recognition, is implemented on a single FPGA chip. In future work, we would like to install odometer and compass sensors on the mobile robot and to build the route map automatically.

Acknowledgements

This work is supported by Taiwan Ministry of Science and Technology grants MOST104-2221-E-011-035 and MOST 105-2221-E-011-047.

Cite this paper

Shih, C.-L. and Ku, Y.-T. (2016) Image-Based Mobile Robot Guidance System by Using Artificial Ceiling Landmarks. Journal of Computer and Communications, 4, 1-14. http://dx.doi.org/10.4236/jcc.2016.411001

References

- 1. Long, J. and Zhang, C.L. (2012) The Summary of AGV Guidance Technology. Advanced Materials Research, 591-593, 1625-1628. http://dx.doi.org/10.4028/www.scientific.net/AMR.591-593.1625

- 2. DeSouza, G.N. and Kak, A.C. (2002) Vision for Mobile Robot Navigation: A Survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24, 237-267. http://dx.doi.org/10.1109/34.982903

- 3. Siegwart, R., Nourbakhsh, I.R. and Scaramuzza, D. (2011) Introduction to Autonomous Mobile Robots. MIT Press, Cambridge.

- 4. Lowe, D.G. (2004) Distinctive Image Features from Scale-Invariant Keypoints. International Journal of Computer Vision, 60, 91-110. http://dx.doi.org/10.1023/B:VISI.0000029664.99615.94

- 5. Bay, H., Ess, A., Tuytelaars, T. and Gool, L.V. (2008) SURF: Speeded up Robust Features. Computer Vision and Image Understanding, 110, 346-359. http://dx.doi.org/10.1016/j.cviu.2007.09.014

- 6. Kartashov, D., Krinkin, K. and Huletski, A. (2015) Fast Artificial Landmark Detection for Indoor Mobile Robots. Proceedings of the Federated Conference on Computer Science and Information Systems, Vol. 5, 209-214. http://dx.doi.org/10.15439/2015f232

- 7. Kim, T. and Lyou, J. (2009) Indoor Navigation of Skid Steering Mobile Robot Using Ceiling Landmarks. IEEE International Symposium on Industrial Electronics, Seoul, 5-8 July 2009, 1743-1748.

- 8. Okuyama, K., Kawasaki, T. and Kroumov, V. (2011) Localization and Position Correction for Mobile Robot Using Artificial Visual Landmarks. Proceedings of the 2011 International Conference on Advanced Mechatronic Systems, Zhengzhou, 11-13 August 2011, 414-418.

- 9. Fernandes, C. dos S., Campos, F.M. and Chaimowicz, L. (2012) A Low-Cost Localization System Based on Artificial Landmarks. 2012 Brazilian Robotics Symposium and Latin American Robotics Symposium, Brazil, 16-19 October 2012, 109-114. http://dx.doi.org/10.1109/SBR-LARS.2012.25

- 10. Shih, C.L., Lee, W.Y. and Ku, Y.T. (2016) A Vision-Based Fingertip-Writing Character Recognition System. Journal of Computer and Communications, 4, 160-168. http://dx.doi.org/10.4236/jcc.2016.44014