Optics and Photonics Journal

Vol.09 No.02(2019), Article ID:90485,6 pages

10.4236/opj.2019.92002

Information Interpretation of Heisenberg Uncertainty Relation

Oleg V. Petrov*,#

A.V. Vishnevsky Institute of Surgery, Moscow, Russia

Copyright © 2019 by author(s) and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: November 30, 2018; Accepted: February 10, 2019; Published: February 13, 2019

ABSTRACT

This paper proposes the following information interpretation of the uncertainty relation: if a measurement extracts one bit of information from a system, then the measurement itself introduces an additional uncertainty (chaos) of one bit into the system. This formulation is obtained by calculating the Shannon information entropy for the classical N-slit interference experiment. The approach offers a different view of several quantum phenomena. In particular, the information interpretation is used to explain the compression of the diffraction pattern of entangled photons.

Keywords:

Uncertainty Relation, N-Slit Interference Experiment, Information Entropy, Entangled Photons

1. Introduction

The Heisenberg uncertainty relation is one of the fundamental principles of quantum mechanics. At the same time, information-based approaches to quantum mechanics are now popular (see, for example, [1] [2] [3]). In this paper we first consider the classical N-slit interference experiment and calculate the Shannon information entropy for it. This yields a formulation of the information interpretation of the uncertainty relation, which is then used to explain the compression of the diffraction pattern of entangled photons.

2. N-Slit Interference Experiment Analysis

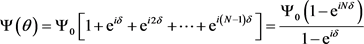

Let’s consider the N-slit interference experiment (Figure 1). Let’s assume that the source wave function is a plane wave $\psi_0 = \exp[i(kx - \omega t)]$, where ω is the wave frequency and k = 2π/λ, where λ is the wavelength. Then the wave function of N slits is

$$\psi = \psi_0 \sum_{n=0}^{N-1} \exp(i n \delta), \qquad (1)$$

where θ is the angle between the wave vector and the optical axis, δ = k·d·sinθ, and d is the spacing between slits. Multiplying (1) by its complex conjugate gives the intensity of the interference pattern on the screen M as a function of the angle θ:

$$I(\theta) = \psi\,\psi^{*} = \frac{\sin^2(N\delta/2)}{\sin^2(\delta/2)}. \qquad (2)$$
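The step from the wave function to the intensity can be checked numerically. The sketch below is only an illustration under stated assumptions: it takes the textbook form of the N-slit amplitude (a sum of N unit phasors with phase step δ) and the textbook closed-form intensity sin²(Nδ/2)/sin²(δ/2), and compares the two on a grid of angles. The function names, the grid, and the value k·d = 10 are hypothetical choices made for this example.

```python
import numpy as np


def amplitude(delta, n_slits):
    """Sum of n_slits unit phasors with phase step delta (assumed textbook form)."""
    n = np.arange(n_slits)[:, None]  # slit index as a column vector
    return np.exp(1j * n * delta[None, :]).sum(axis=0)


def intensity_closed_form(delta, n_slits):
    """sin^2(N*delta/2) / sin^2(delta/2), using the limit N^2 where the ratio is 0/0."""
    num = np.sin(n_slits * delta / 2.0) ** 2
    den = np.sin(delta / 2.0) ** 2
    out = np.full_like(delta, float(n_slits) ** 2)
    np.divide(num, den, out=out, where=den > 1e-20)
    return out


def max_mismatch(n_slits, kd=10.0, n_grid=2001):
    """Largest absolute difference between |psi|^2 and the closed form."""
    theta = np.linspace(-np.pi / 2, np.pi / 2, n_grid)
    delta = kd * np.sin(theta)  # phase step between adjacent slits
    psi = amplitude(delta, n_slits)
    direct = (psi * np.conj(psi)).real  # multiply by the complex conjugate
    return float(np.max(np.abs(direct - intensity_closed_form(delta, n_slits))))


if __name__ == "__main__":
    for n_slits in (1, 2, 5, 8):
        print(n_slits, max_mismatch(n_slits))
```

The printed mismatches should be at the level of floating-point rounding, since the closed form is the squared modulus of the geometric sum.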

This intensity can also be treated as a probability distribution. Let’s calculate the information entropy H of this distribution using Shannon’s formula [4]:

$$H = -\int I(\theta)\,\log_2 I(\theta)\,d\theta, \qquad (3)$$

where the normalization condition is $\int I(\theta)\,d\theta = 1$. The dependence of H on N, plotted on a semilogarithmic scale for kl = 10, is shown in Figure 2. The integral (3) was calculated numerically for . Correlation and regression coefficients were calculated using Statistica 6 software. The statistical analysis of these data shows the following. First, H depends linearly on log2(N), as demonstrated by the high absolute value of the correlation coefficient, which equals −0.9996.

Second, the equation of the straight line that best fits the points on the curve (Figure 2) was obtained by the least-squares method. This equation has the following form:

(4)

Figure 1. N-slit interference experiment.

Figure 2. Dependence of the information entropy H on the number of slits N.

It is called the regression equation. As can be seen from this equation, reducing the number of slits by a factor of 2 increases the entropy of the distribution by one bit. As R. Feynman demonstrated in his popular lectures [5], halving the number of slits changes the interference pattern of particle diffraction in the same way as obtaining information about which half of the slits the particle passed through when all slits were open. This connects the amount of information about the passage of particles through the slits with the amount of information about where these particles will hit the screen. That is, obtaining one bit of information by reducing the uncertainty (entropy) in the set of slits the particle may have passed through increases the uncertainty (entropy) of the interference pattern, so the information about where the particle may hit is reduced by one bit. Following Shannon’s approach [4], we state that the uncertainty reduction due to decreasing the number of slits by a factor of 2 corresponds to an entropy reduction (an increase in the amount of information) of one bit.
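The entropy calculation and the linear fit described in this section can be sketched as follows. This is not the author’s Statistica procedure, only a minimal NumPy illustration under several assumptions that the text does not fix: the intensity has the textbook form sin²(Nδ/2)/sin²(δ/2), the quoted parameter value 10 is used as k·d, the angle runs over [−π/2, π/2], and the slit counts are the powers of two from 4 to 128. All function names are hypothetical.

```python
import numpy as np


def trapezoid(y, x):
    """Trapezoidal rule, written out so that it does not depend on the NumPy version."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)


def n_slit_intensity(theta, n_slits, kd):
    """Assumed textbook N-slit intensity, with the limit N^2 at the principal maxima."""
    delta = kd * np.sin(theta)
    num = np.sin(n_slits * delta / 2.0) ** 2
    den = np.sin(delta / 2.0) ** 2
    out = np.full_like(delta, float(n_slits) ** 2)
    np.divide(num, den, out=out, where=den > 1e-20)
    return out


def entropy_bits(theta, n_slits, kd):
    """Shannon (differential) entropy, in bits, of the normalized intensity."""
    inten = n_slit_intensity(theta, n_slits, kd)
    p = inten / trapezoid(inten, theta)  # enforce the normalization condition
    plogp = np.zeros_like(p)
    mask = p > 0  # p*log2(p) -> 0 at the zeros of the pattern
    plogp[mask] = p[mask] * np.log2(p[mask])
    return -trapezoid(plogp, theta)


def fit_entropy_vs_slits(slit_counts, kd=10.0, n_grid=400001):
    """Return entropies, least-squares slope and intercept of H vs log2(N), and correlation."""
    theta = np.linspace(-np.pi / 2, np.pi / 2, n_grid)
    log_n = np.log2(np.asarray(slit_counts, dtype=float))
    h = np.array([entropy_bits(theta, n, kd) for n in slit_counts])
    slope, intercept = np.polyfit(log_n, h, 1)
    corr = np.corrcoef(log_n, h)[0, 1]
    return h, slope, intercept, corr


if __name__ == "__main__":
    h, slope, intercept, corr = fit_entropy_vs_slits([4, 8, 16, 32, 64, 128])
    print("H (bits):", h)
    print("slope:", slope, "intercept:", intercept, "correlation:", corr)
```

If the reasoning in the text holds for these assumed parameters, the fitted slope should come out close to −1 bit per doubling of N and the correlation coefficient close to −1. The intercept depends on the assumed angular range and on k·d, so it is not expected to reproduce the coefficients of the regression equation.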

3. Formulation of Information Interpretation of Uncertainty Relation

Since the obtained regularity does not depend on the specific experimental parameters, it can be generalized to any quantum system. Let’s call this the information interpretation of the uncertainty relation. The uncertainty relation itself can be written in information form. Indeed, the uncertainty relation for a harmonic oscillator is ΔX·ΔP = ħ/2 (expression 16.8 from [6]), where ΔX and ΔP are the uncertainties (standard deviations) of the coordinate and momentum, respectively, and ħ is the reduced Planck constant (h/2π). Let’s rewrite this in the form (ΔX/2)·(2ΔP) = ħ/2 (here the coordinate uncertainty is halved and, as a result, the momentum uncertainty is doubled) and then take the base-2 logarithm: log2(ΔX) − 1 bit + log2(ΔP) + 1 bit = const. Here 1 bit = log2(2), const = log2(ħ/2), and log2(ΔX) and log2(ΔP) are the amounts of information associated with determining the coordinate and the momentum, respectively (up to an additive constant, which equals log2((2πe)^0.5) for the normal distribution [7]). The last equation can be interpreted as follows: we extracted one bit of information from the system during the measurement of the coordinate X (we halved the coordinate uncertainty ΔX), but this disturbed the system and increased its entropy by one bit as well (the momentum uncertainty ΔP doubled). Let’s formulate the information interpretation of the uncertainty relation as follows: if a measurement extracts one bit of information from a system, then the measurement itself introduces an additional uncertainty (chaos) of one bit into the system.
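The bit bookkeeping in this section can be illustrated with a few lines of arithmetic. The sketch below assumes normal (Gaussian) distributions for the coordinate and the momentum, for which the differential entropy is log2(σ·(2πe)^0.5) bits, and works in units where ħ = 1. Both the units and the function names are choices made for this example only.

```python
import math

HBAR = 1.0  # assumption: natural units, so that the logarithms act on pure numbers


def gaussian_entropy_bits(sigma):
    """Differential entropy (bits) of a normal distribution with standard deviation sigma."""
    return math.log2(sigma * math.sqrt(2.0 * math.pi * math.e))


def entropy_budget(delta_x):
    """Entropies of coordinate and momentum for a minimum-uncertainty state."""
    delta_p = HBAR / (2.0 * delta_x)  # from delta_x * delta_p = hbar / 2
    return gaussian_entropy_bits(delta_x), gaussian_entropy_bits(delta_p)


if __name__ == "__main__":
    hx_before, hp_before = entropy_budget(1.0)
    hx_after, hp_after = entropy_budget(0.5)  # coordinate uncertainty halved
    print("change in coordinate entropy:", hx_after - hx_before)
    print("change in momentum entropy:  ", hp_after - hp_before)
    print("total before / after:", hx_before + hp_before, hx_after + hp_after)
```

Halving ΔX lowers the coordinate entropy by one bit and raises the momentum entropy by one bit, so the total stays equal to log2(πeħ) in these units.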

4. Application to the Explanation of Entangled Photons Diffraction Pattern Compression

As an example, let’s show how the information interpretation of the uncertainty relation can be used to explain the compression of the diffraction pattern of entangled photons. In [8], entangled photon pairs were generated by directing an argon-ion laser beam into a BaB2O4 crystal. The photons generated in the crystal have frequencies equal to half the frequency of the incident photons. Let’s describe the basic scheme of this experiment (Figure 3). The entangled photon pairs are generated in an area V. Photons belonging to the same pair are orthogonally polarized and propagate in opposite directions in the horizontal plane. Two slits are placed symmetrically on the left and right sides of the entangled photon source. A photon-counting detector is placed in the far-field zone on each side, and the coincidences between the “clicks” of the two detectors are registered. In this way the diffraction pattern of the entangled photons is obtained. The experiment showed that the width of the main diffraction maximum was half of what it would be in the classical case. The same result would be obtained with ordinary photons of twice the energy (half the wavelength).

Figure 3. Schematic of a two-photon diffraction-interference experiment (from [8]). V is the BaB2O4 crystal; D1 and D2 are detectors.

To understand this result, note that in the case of ordinary photon diffraction we can always distinguish one photon from another, because one arrives at the detector earlier than the other. However, this information is lost in the experiment described above. The entangled photons are born simultaneously and also arrive at the detectors at the same time. As a result, the system acquires an additional uncertainty corresponding to the impossibility of distinguishing the photons: the system entropy increases by one bit. However, the corresponding diffraction pattern shrinks by half, and it is easy to show that its entropy decreases by one bit. Let P(x) and G(x) be the normalized intensity distributions of the diffraction pattern before and after shrinking, respectively, so that $\int P(x)\,dx = 1$ and $\int G(x)\,dx = 1$. The entropy of the distribution P(x) is, by definition:

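$$H_P = -\int P(x)\,\log_2 P(x)\,dx. \qquad (5)$$

The entropy of the diffraction pattern distribution after shrinking is:

$$H_G = -\int G(x)\,\log_2 G(x)\,dx. \qquad (6)$$

The experimental shrinking factor is 2, so G(x) = A·P(2x), where A is a constant. From the normalization $\int G(x)\,dx = 1$ we obtain A = 2. Then:

$$H_G = -\int 2P(2x)\,\log_2[2P(2x)]\,dx = -\int P(y)\,\log_2[2P(y)]\,dy. \qquad (7)$$

Here we used the change of variable y = 2x inside the integral. Then, from $\int P(y)\,dy = 1$ and log2(1/2) = −1 bit, we obtain:

$$H_G = H_P - 1\ \text{bit}. \qquad (8)$$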

Thus the system compensates for the loss of the ability to identify the photons by reducing the diffraction spot. This preserves the balance between the system uncertainty and the amount of information extracted from the system.
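The one-bit entropy drop under a twofold shrinking can also be checked numerically. The sketch below is an illustration only: it assumes a single-slit-like sinc² profile for the pattern before shrinking, truncated to a finite window, and builds the shrunk distribution as 2·P(2x). The profile, the window half-width, and the function names are hypothetical choices, not taken from the experiment in [8].

```python
import numpy as np

HALF_WIDTH = 50.0  # assumed truncation of the pattern (first zero of the profile at x = 1)
N_GRID = 400001


def trapezoid(y, x):
    """Trapezoidal rule, written out so that it does not depend on the NumPy version."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)


def entropy_bits(p, x):
    """Differential entropy (bits) of a sampled density p(x)."""
    plogp = np.zeros_like(p)
    mask = p > 0  # p*log2(p) -> 0 where the density vanishes
    plogp[mask] = p[mask] * np.log2(p[mask])
    return -trapezoid(plogp, x)


def pattern(x):
    """Unnormalized sinc^2 profile, set to zero outside the assumed window."""
    x = np.asarray(x, dtype=float)
    return np.where(np.abs(x) <= HALF_WIDTH, np.sinc(x) ** 2, 0.0)


def entropies_before_and_after():
    """Return (H_P, H_G, integral of G) for G(x) = 2 * P(2x)."""
    x = np.linspace(-HALF_WIDTH, HALF_WIDTH, N_GRID)
    norm = trapezoid(pattern(x), x)
    p = pattern(x) / norm              # normalized distribution before shrinking
    g = 2.0 * pattern(2.0 * x) / norm  # shrunk by a factor of 2, same normalization
    return entropy_bits(p, x), entropy_bits(g, x), trapezoid(g, x)


if __name__ == "__main__":
    h_p, h_g, g_norm = entropies_before_and_after()
    print("integral of G:", g_norm)
    print("H_G - H_P (bits):", h_g - h_p)
```

Within the accuracy of the numerical integration, the difference H_G − H_P equals −1 bit regardless of the profile chosen, because only the normalization and the change of variable enter the argument.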

5. Conclusions

The information entropy for the N-slit interference experiment was calculated. A formulation of the information interpretation of the uncertainty relation was proposed. It was applied to explain the compression of the diffraction pattern of entangled photons.

Oleg Petrov died in 2009. His unpublished original work was in Russian. It was translated into English by A.M. Smolovich with the help of D.A. Oulianov. Figures were edited by A.P. Orlov and P.A. Smolovich.

Conflicts of Interest

The author declares no conflicts of interest regarding the publication of this paper.

Cite this paper

Petrov, O.V. (2019) Information Interpretation of Heisenberg Uncertainty Relation. Optics and Photonics Journal, 9, 9-14. https://doi.org/10.4236/opj.2019.92002

References

- 1. Frieden, B.R. (1992) Fisher Information and Uncertainty Complementarity. Physics Letters A, 169, 123-130. https://doi.org/10.1016/0375-9601(92)90581-6

- 2. Luo, S. (2003) Wigner-Yanase Skew Information and Uncertainty Relations. Physical Review Letters, 91, Article No. 180403. https://doi.org/10.1103/PhysRevLett.91.180403

- 3. Romera, E., Sánchez-Moreno, P. and Dehesa, J.S. (2006) Uncertainty Relation for Fisher Information of D-Dimensional Single-Particle Systems with Central Potentials. Journal of Mathematical Physics, 47, Article No. 103504. https://doi.org/10.1063/1.2357998

- 4. Shannon, C.E. (1948) A Mathematical Theory of Communication. Bell System Technical Journal, 27, 623-656. https://doi.org/10.1002/j.1538-7305.1948.tb00917.x

- 5. Feynman, R., Leighton, R. and Sands, M. (2009) The Feynman Lectures on Physics: The Definitive and Extended Edition. Addison-Wesley, San Francisco, Calif., Harlow, Vol. 3, Chap. 1.

- 6. Landau, L.D. and Lifshitz, E.M. (1977) Quantum Mechanics: Non-Relativistic Theory. 3rd Edition, Pergamon Press.

- 7. Kuzin, L.T. (1973) Osnovy kibernetiki. Energiya, Moscow, 174. (In Russian)

- 8. D’Angelo, M., Chekhova, M.V. and Shih, Y. (2001) Two-Photon Diffraction and Quantum Lithography. Physical Review Letters, 87, Article No. 013602. https://doi.org/10.1103/PhysRevLett.87.013602

NOTES

*Oleg Petrov died in 2009. If necessary, contact Anatoly Smolovich, e-mail: asmolovich@petersmol.ru.