Journal of Applied Mathematics and Physics

Vol.05 No.08(2017), Article ID:78860,18 pages

10.4236/jamp.2017.58131

Review on Mathematical Perspective for Data Assimilation Methods: Least Square Approach

Muhammed Eltahan

Aerospace Engineering, Cairo University, Cairo, Egypt

Copyright © 2017 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: July 27, 2017; Accepted: August 28, 2017; Published: August 31, 2017

ABSTRACT

Environmental systems including our atmosphere oceans, biological… etc. can be modeled by mathematical equations to estimate their states. These equations can be solved with numerical methods. Initial and boundary conditions are needed for such of these numerical methods. Predication and simulations for different case studies are major sources for the great importance of these models. Satellite data from different wide ranges of sensors provide observations that indicate system state. So both numerical models and satellite data provide estimation of system states, and between the different estimations it is required the best estimate for system state. Assimilation of observations in numerical weather models with data assimilation techniques provide an improved estimate of system states. In this work, highlights on the mathematical perspective for data assimilation methods are introduced. Least square estimation techniques are introduced because it is considered the basic mathematical building block for data assimilation methods. Stochastic version of least square is included to handle the error in both model and observation. Then the three and four dimensional variational assimilation 3dvar and 4dvar respectively will be handled. Kalman filters and its derivatives Extended, (KF, EKF, ENKF) and hybrid filters are introduced.

Keywords:

Least Square Method, Data Assimilation, Ensemble Filter, Hybrid Filters

1. Introduction

In general, models could be classified as [1] [2] :

1) Process specific models (Causality Process) are based on conservation of laws of nature e.g.: Shallow water modeling, Navier Stokes Equation.

2) Data specific models (correlation based) are based on developed experimental models e.g.: Time Series models, Machine Learning, Neural Networks. etc.

Atmospheric and oceans models are process specific models which Navier stokes equations are the core of the solver to predict, simulate and estimate system states. Process specific models have different classifications from different perspectives, time, space, and structure of the model, in addition to another classification which is deterministic and stochastic.

It is assumed that the state of dynamical system evolves according to first order nonlinear equation

(1)

where is the current state of the system; M(.) is the mapping function from the Current state X at time K to the next state X at K + 1

・ If the mapping function M(.) doesn’t depend on the time index K then it is called a time invariant or autonomous system

・ If the M(.) varies with time index k that is , then it is called time-varying system (dynamic system)

・ If for M non-singular matrix, then it is called time invariant linear system.

・ If matrix M varies with time, that is , then it is called time varying linear or non-autonomous system.

・ In the special cases when M(.) is an identity map, that is , then it is called static system

The mentioned above is the deterministic case; the Randomness in the model can enter in three ways:

(A) Random initial conditions (B) Random forcing (C) Random Coefficients

So, a random or a stochastic model is given by:

(2)

where the random sequences , denotes to the external forcing; typically captures uncertainties in the model including model error.

So, the classes of models that are used in the data assimilation could be classified as:

(1) Deterministic-Static (3) Stochastic-Static

(2) Deterministic-Dynamic (4) Stochastic-Dynamic

The paper is organized as follow: Section 2 states the mathematical foundation for data assimilations which describes linear and nonlinear least square in addition to weighted least square and Recursive Least square. Section 3 introduces the deterministic static model linear and nonlinear cases. Section 4 shows stochastic static model: Both linear and nonlinear cases. Section 5 describes deterministic dynamic linear case and the recursive case. Section 6 explains stochastic dynamic linear, nonlinear, reduced and hybrid filters.

2. Mathematical Background

Mainly all the data assimilation techniques are based on least square estimation, so classification of the estimation problems based on different criteria. The Estimation problems can be underdetermined problem when number of observations (m) are less than number of state (n) (m < n). And can be over determined when number of observations (m) is larger than number of states (n) (m > n) [1] . Also the Estimation problem can be classified according to the mapping function from the state space to observation space which can be linear or nonlinear. and nonlinearity should be handled

Other type of classification is offline or online problems. Where offline problems if the observations are known priori. In other words we have historical set of data and we treat with them. While Online/Sequential problems are computing a new estimate of the unknown state X as a function of the latest estimate and the current observation. So, online formulation is most useful in real time applications. The last type of classifications is Strong and Weak Constraints, The Strong Constraint is occurred when the estimation is performed under the perfect model assumption. While in case of allowing for errors in the model dynamics, it is considered Weak Constraint [1]

Since, Data Assimilation based on Least Square Approach [1] [2] [3] [4] . First we introduce linear version of least square then will move to nonlinear version. After that weighted version for both linear and nonlinear will be introduced.

2.1. Linear Least Square Method

Let (3)

Given the observation vector Z and the mapping function/Interpolation matrix H (Full Rank) find the unknown state vector X.

Then the error Vector which represents the difference between the Observations Z and the required estimated state X can be represented as follow

(4)

The Previous term is called also error term or Innovation term. So we need to find the best estimate that minimizes the error. So the problem is converted from Estimation Problem to Optimization Problem. One of the basic classifications for problem is over determined Problem (m > n) or under determined problem (m < n) and we consider first over determined case (m > n). To measure The vector e using The Euclidean norm and the fact of Minimization of norm is equal to minimization of square norm. The cost function will be as follow

(5)

And minimization of X is Obtained under the following condition . This lead to

(6)

And this equation was known as normal equation method. In case of over determined problem m > n. While in case under determined case (m < n). The estimation for X will be as follow.

(7)

And this undetermined problem where m number of observation is less than n, number of unknowns is known in geophysical (physical process physical properties of the earth and its surrounding space Environment) because of the cost of collection of the observation is high. And in case uniquely determined case (m = n) which mean that the error e is zero. The estimated state vector X will be

(8)

As we can see from the above three formulation that minimization for the cost function ends to Solving system of linear equation, and solving the linear system (A = Xb) of equations can be done using:

o Direct methods (Cholesky decomposition, Q-R decomposition, Singular Value decomposition, …) [5] .

o Iterative methods (Jacobi Method, Gauss-Seidel Method, Successive Over-Relaxation method, …) [5] .

2.2. Non Linear Least Square Method

The problem here will be: Given set of observation Z and knowing the function form h which is nonlinear function. Find the state vector X as shown

(9)

And the Innovation term will be

(10)

And the cost function will be

(11)

And so that, the idea here is extension for the linear case by replacing the nonlinear term h(x) with its Linear approximation of Taylor series expansion around an Operating Point let it and in this case it is called first order approximation for nonlinear least square as follow

(12)

where is the Jacobin matrix of h which is an matrix given by:

. (13)

And by substitution of first order approximation of Taylor series expansion in the cost function and giving it name and the index one refer for first order

(14)

And simplifying the notation by define

(15)

And by comparing the previous equation by the linear version.

(16)

You will find that every Z was replaced by g(x) and every H is replaced by also every X is replaced by

And if the gradient is ; we will obtain

(17)

And this is iterative approach and given an initial value for and solving the above equation using direct methods or iterative methods then iterate again until < prescribed threshold

And by similarity the second order algorithm for non Linear Least Square will be same steps except that Taylor series expansion will be full Quadratic approximation

(18)

where the is the hussian of and by substituting this second order expansion in the nonlinear cost function and giving it name since the index 2 refer to second order approximation and Putting the gradient equal zero you will get the following

(19)

And this is iterative approach and given an initial value for and solving the above equation using direct methods or iterative methods then iterate again until < prescribed threshold. All the above methods were for deterministic least square where

or (20)

2.3. The Weighted Least Square Method

If there additive random noise was V presented. Which mean the observation is noisy with as follow

or (21)

・ Mean E(V) = zero which mean that the instrument is well calibrated Unbiased and If it is ≠ zero which mean that there is bias (for example under or over reading).

・ Covariance , The covariance matrix for the instrument. which it is property for the instrumentation.

So, for the linear form of Least Square, the cost function will be for linear form will be

(22)

And the non Linear form

(23)

Also by the same methodology that used above, the best estimate form for Stochastic linear least square after equality for the Gradient to zero will be

(24)

And for the stochastic nonlinear least square first order approximation will be

(25)

And for the stochastic nonlinear least square Second order approximation will be

(26)

where for simiplyifing the notation.

2.4. The Recursive Least Square Estimation Approach (Offline/Online Approach)

All the above analysis was assumed that the number m of observations is fixed. which mean by another term it is offline version of least square. So if we don’t know number observation m and they are arrive sequentially in time. There’re two ways to add this new observations:

・ The first approach is to solve the system of linear equation repeatedly after arrival of every new observation. But this approach is very expensive from Computational point of view

・ The second approach is to formulate the following problem which is based on Knowing the Optimal estimate based on the m observations. we need to compute based on observation. In more clear words we need to calculate to reach to formulation that can compute the new estimate function of the old estimate plus sequantional term. And this approach is called situational or recursive framework

It was known as mentioned above that the optimal linear least square estimate for is . Let be the new observation then the can be expanded in the form of matrix-vector relation as:

(27)

So, the Innovation will be:

(28)

So, the Cost function will be:

(29)

(30)

So, by taking the gradient and equal it to zero . We can get the following

(31)

where

(32)

The cost of computation for the second term of this equation is less than solving the all system again.

The basic building block for understanding the Data assimilation based on Least Square approach was introduced.

3. Deterministic-Static Models

In Atmospheric science, when we want to assimilate observation data to the model at time step, Two source of information are available, one of them after mapping the observation Z to X and the other source is the Prior information.

The Linear/Non Linear Case

The formulation for the problem, we need the best estimate for X given the two source of information

・ The first source is the given the Observation Z and mapping function H, where the innovation term was

・ The Second source is given the Prior or Background information where the innovation term is

So the cost function for this case will

(33)

For linear Case

(34)

For Nonlinear case

(35)

4. Stochastic-Static Models

The formulation for the problem will be the same as in Deterministic/Static problem, we need the best estimate for X given the two source of information

・ The first source is the given the Observation Z and mapping function H, where the innovation term was and the observation has noise with its covariance R

・ The Second source is given the Prior or Background information where the innovation term is , and the Background has its Covariance B

So the cost function for this case will

(36)

For linear Case:

(37)

For Nonlinear case:

(38)

5. Deterministic-Dynamic

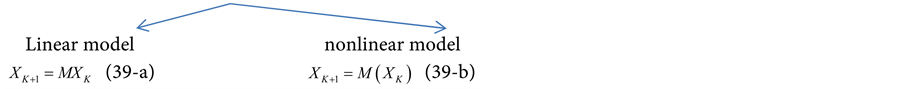

The Dynamical models can be classified as:

where is the state of the dynamical system, so if the initial condition is known, so computing the is a forward problem

It is assumed that is not known. And Estimating based on noisy indirect information is the inverse problem that it is required to solve.

The observations also can be classified as:

And assume the is White noise, has zero mean and Known covariance matrix R, which depend on the nature and the type of instruments used

So, formulation for statement of problem is: given set of noisy observations and the model equations, it is required to estimate the initial

Condition that give best fit between the background states and noisy observations

To conclude there are four different types of problems:

1) Linear Model-Linear observation

2) Linear model-non Linear observation

3) Nonlinear model-linear observation

4) Non linear model-Non linear observation

We will consider only one case only the which is the simplest formulation with both model and observations are linear and the other cases could be checked in [1] [2] .

The Definition of the cost function which is weighted sum of the squared errors is given as:

For linear case

(41)

For nonlinear case

(42)

Depend on the whether the observations are linear or not linear. And the goal is to minimize the J(X) w.r.t

In case there background will be included for linear case

(43)

And for nonlinear case

(44)

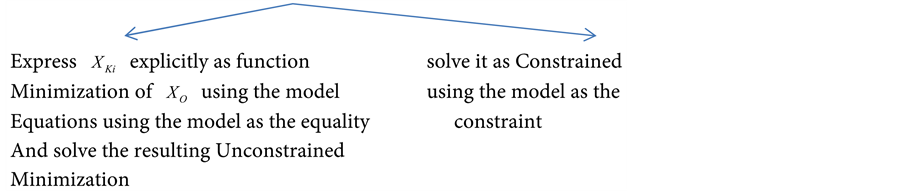

There are two approaches for minimization of this cost functions

5.1. Deterministic-Dynamic Linear Case

5.1.1. Linear Case-Method of Elimination

This method is mainly based on substitute the following equation in the cost function J(X) then, get the following equation

(45)

Then we get the gradient for the

For simplicity

and it is quadratic in (46)

(46-a)

(46-b)

(46-c)

So, the gradient is which leads to

(47)

But, this approach is not practical, since it involves matrix-matrix products in the computation of A and b, so there is need to another way

5.1.2. Linear Case-Lagrangian Multipliers Formulation

Define Lagrangian L:

(48)

And the Necessary conditions for the minimum

(49-a)

(49-b)

(49-c)

So (50-a)

(50-b)

(50-c)

Defining:

(51)

And substitute it in the last two equations

(52-a)

(52-b)

So, as shown that the formulation for calculate is backword relation. we can iterate backward starting from to Compute . And this technique is known as backword adjoint dynamics. Then after getting substitute in and get the gradient then use any minimization algorithm to get , The 4-D Var Algorithm (First order adjoint method) can be summarized as follow:

1) Start with an arbitrary , and compute the model solution using

2) Given the observation where

3) Set → and Solve to find

4) Compute the gradient

5) Use this gradient in minimization algorithm to find the optimal by repeating the steps 1 through 4 until convergence.

5.2. Recursive Least Squares Formulation of 4D Var (Online Approach)

In the previous part of 4Dvar section the solution for off-line 4Dvar problem of assimilating given set of observations in deterministic-dynamic model using classical least square method.

Now there is need to develop an online or recursive method for computing the estimate of the state as new observations arrive. which mean we need to compute the in terms of and the new observation .

Consider linear deterministic dynamical system without model noise

(53)

where the initial condition is random variable with and . and the observations for are given as

(54)

where is full rank and is the observation vector noise with the following known properties

and (55)

So, the objective function that

(56)

where

(57)

(58)

Since, our goal to find an optimal that minimize the , it is needed to express and

In tem of the corresponding N values and . So,

Since, (59)

Then (60)

Since (61)

Hence, the trajectory of the model starting from

Substitute for and into

(62)

And differentiate w.r.t twice to get the gradient and Hessian. Then setting the gradient to zero and simplifying the notation

(63)

where

(64)

(65)

(66)

(67)

By induction the minimization for of is given by

(68)

The goal of the recursive framework is to express as function of and . this calls when expressing , , , in terms of , , ,

So using equations from (61) to (64) and and the following equation

(69)

Then N + 1 formula in terms of N can be get

(70)

(71)

And since

(72)

(73)

(74)

or (75)

(76)

By combining Equations (70) and (76) into Equation (68) and defining after combination

(77)

The right hand side of this equation is sum of two terms the first one is

(78)

So adding and subtracting this term is equal to

(79)

where

is called kalman gain matrix and

combine it with Equation (77). The desired recursive expression will be gained

(80)

6. Stochastic-Dynamic Model

This type of data assimilation problems is same as deterministic/dynamic problems, except that, this type introduces an additional term in the forecast equation which is noise vector that associated with the model (i.e. model error).

For Stochastic-dynamic model we can divide the filters to Linear and Nonlinear filters Linear filter has evolution function in the model and the mapping function is linear while in Nonlinear filters those two functions are nonlinear

6.1. Linear Filters

Kalman Linear Filter

Kalman filter approach was first introduced at reference [6] [7]

Problem formulation:

This section will show how model and observation with error will be presented then will formulate the algorithm:

A1-Dynamic model: it will be assumed linear, non autonomous, dynamical system that evolves according to

(81)

where is nonsingular system matrix that varies with time K and denote to model error. It is assumed that and satisfy the following conditions (A) is random variable with known mean vector and known covariance matrix (B) The model erro is unbiased, mean for all k, and temporally uncorrelated (white noise) when if and otherwise (C) The model error and the initial state is uncorrelated for all k

B1-Observations: The observation is the observation at time k and related to via

(82)

where represent the time varying measurement system and represent the measurement noise with the following properties (A) has mean zero (B) is temporary uncorrelated: if while otherwise . (C) is uncorrelated with the initial state and the model error which mean for all and for all K and j

C1-statement of the filtering problem: Given that evolves according to equation 81 and set of observations. Our goal to find an estimate that minimize the mean square error

And the following is the summary of the Kalman filter procedure (Covariance Form)

Model

,

is random with mean and

Observation

,

Model Forecast ,

Data Assimilation

The computation of the covariance matrices and is the most time-consuming part since in many of the applications n > m. so that reduced order filters has been introduced [1] .

6.2. Non Linear Filters

6.2.1. First Order Filter/Extended Kalman Filter (EKF)

The extended kalman filter is an extension for kalman filter idea in case the evolution function in the model and the mapping function is non linear

For nonlinear model

For nonlinear observation

The main idea for First order filter/extended kalman filter is to expand the around

and around in first order taylor series expansion. when and are linear it reduces to kalman filter. The following is the summary steps for Extended Kalman filter

Model

Observation

Forecast Step

Data Assimilation Step

6.2.2. Second Order Filter

The second order filter is the same idea of the first order filter except that the expansion of around and around in Second order Taylor series expansion. And the following is summary of the second order nonlinear filter

Model

Observation

Forecast Step

Data Assimilation Step

6.3. Reduced Rank Filters

Ensemble Kalman Filter

The ensemble Kalman filter [8] originated from the merger of Kalman filter theory and Monte Carlo estimation methods. It was introduced the basic principles of linear and nonlinear filtering but these are not used in day by day operations at the national centers for weather predictions. Because of the cost of the updating the covariance matrix was very high.

So, there are mainly two ways to avoid the high cost of computing the covariance matrix

1) The first method was the Parallel computation which mainly dependent on

a) The Algorithm

b) The number of Processors

c) The topology of the interconnection of the network

d) How the tasks of the algorithm are mapped on the processor

2) The second method which became more popular which to compute low/ reduced rank approximation to the full rank covariance matrix. and most of low rank filters differs only in the way in which the approximation are derived. Excellent review on the Ensemble Kalman filter was introduced

Formulation of the problem

It is assumed that the model is nonlinear and observations are linear functions of the state

And it is assumed that

1) The initial conditions

2) The dynamic system noise wk is white Gaussian noise with

3) The observation noise is White noise with

4) , , are mutually uncorrelated

Model

Observation

Initial ensemble

・ Create the initial ensemble

Forecast step

1) Create the ensemble of forecasts at time using the following

The members of the ensemble forecast at time are generated where

2) Compute and using

Data assimilation step

1) Create the ensemble of estimates at time using

and

2) Compute and using

The sample mean of the estimate at time is then given by

where

where

where for large N

All summaries, derivation and details could be checked in reference [1] [9] . and full review on ensemble kalman filter for atmospheric data assimilation is inteoduced by P. L. Houtekamer and Fuqing Zhang, 2016 [10] .

6.4. Hybrid Filters

3DVar uses static climate Background error while 4DVar uses implicit flow dependent information but still start with static background error. And since. The B-matrix affects the performance of the assimilation heavily [11] it is important to use a B-matrix that is a realistic representation of the actual forecast error covariance [12] . So many proposed hybrid filters were introduced. they are to use flow dependent background error in vartional data assimilation system by combining the 3Dvar climate background error covariance and error of the day from ensemble.

In Equation (37) replace the B by weighted sum of 3Dvar B and the ensemble covariance as follow [13] :

where

The Ensemble covariance is included in the 3DVAR cost function through

Augmentation of control variables [14] and the following formula is mathematically equivalent to [13] .

This is well known hybrid 3DVar-EnKF method. While 4DVar-EnKF method has the same idea if we substitute by Equation (43) by the same methodology in 3DVar-EnKF part. More advanced hybrid filters are highlighted in ref [15] [16] .

7. Conclusions

This paper shows the mathematical perspective for the basic foundation of data assimilation modules starting from least square to advanced filters that used in data assimilation as journey. This work is the first of its type to summarize the mathematical perspective for data assimilation in extensive way and highlights both classical and advanced data assimilation methods. This paper could be used as reference to understand the mathematics behind data assimilation. It started by least square method and their different versions then explains on the classical method 3Dvar. 4DVar also is introduced. Advanced filters such kalman filter and its families were highlighted. The idea of hybrid filter was introduced finally.

For future work, detailed hybrid filters should be highlighted, since there are different hybrid filters structure were introduced. Generic case studies the evaluate performance of the different assimilation techniques.

Cite this paper

Eltahan, M. (2017) Review on Mathematical Perspective for Data Assimilation Methods: Least Square Approach. Journal of Applied Mathematics and Physics, 5, 1589-1606. https://doi.org/10.4236/jamp.2017.58131

References

- 1. Lewis, J.M. (2009) Dynamic Data Assimilation: A Least Square Approach. Cambridge University Press.

- 2. Dynamic Data Assimilation: An Introduction Prof S. Lakshmivarahan, School of Computer Science, University of Oklahoma. http://nptel.ac.in/courses/111106082/#

- 3. Gibbs, B.P. (2011) Advanced Kalman Filtering, Least-Squares and Modeling: A Practical Handbook. Wiley.

- 4. Bjorck, A. (1996) Numerical Methods for Least Squares Problems, Linköping University, Linköping, Sweden. https://doi.org/10.1137/1.9781611971484

- 5. Kreyszig, E. (2011) Advanced Engineering Mathematics. https://www-elec.inaoep.mx/~jmram/Kreyzig-ECS-DIF1.pdf

- 6. Zarchan, P. and Musoff, H. (2000) Fundamentals of Kalman Filtering: A Practical Approach. American Inst of Aeronautics & Astronautics, United States, 2.

- 7. Kalman, R.E. (1960) A New Approach to Linear Filtering and Prediction Problems. Journal of Basic Engineering, 82, 35-45. https://doi.org/10.1115/1.3662552

- 8. Evensen, G. (1994) Sequential Data Assimilation with a Nonlinear Quasi-Geostrophic Model Using Monte Carlo Methods to Forecast Error Statistics. Journal of Geophysical Research, 99, 10143-10162. https://doi.org/10.1029/94JC00572

- 9. Evensen, G. (2003) The Ensemble Kalman Filter: Theoretical Formulation and Practical Implementation.

- 10. Houtekamer, P.L. and Zhang, F. (2016) Review of the Ensemble Kalman Filter for Atmospheric Data Assimilation. Monthly Weather Review, 144.https://doi.org/10.1175/MWR-D-15-0440.1

- 11. Bannister, R.N. (2008) A Review of Forecast Error Covariance Statistics in Atmospheric Variational Data Assimilation. I: Characteristics and Measurements of Forecast Error Covariances. Quarterly Journal of the Royal Meteorological Society, 134, 1951-1970. https://doi.org/10.1002/qj.339

- 12. Bannister, R.N. (2008) A Review of Forecast Error Covariance Statistics in Atmospheric Variational Data Assimilation. II: Modelling the Forecast Error Covariance Statistics. Quarterly Journal of the Royal Meteorological Society, 134, 1971-1996. https://doi.org/10.1002/qj.340

- 13. Hamill, T.M. and Snyder, C. (2000) A Hybrid Ensemble Kalman Filter-3D Variational Analysis Scheme. Monthly Weather Review, 128, 2905-2919. https://doi.org/10.1175/1520-0493(2000)128<2905:AHEKFV>2.0.CO;2

- 14. Lorenc, A.C. (2003) The Potential of the Ensemble Kalman Filter for NWP: A Comparison with 4D-Var. Quarterly Journal of the Royal Meteorological Society, 129, 3183-3203. https://doi.org/10.1256/qj.02.132

- 15. Nawinda, et al. (2016) A Hybrid Ensemble Transform Particle Filter for Nonlinear and Spatially Extended Dynamical Systems.

- 16. Laura, S., et al. (2015) A Hybrid Particle-Ensemble Kalman Filter for High Dimensional Lagrangian Data Assimilation.