Open Access Library Journal

Vol.03 No.11(2016), Article ID:72431,17 pages

10.4236/oalib.1103186

On Comparative Study for Two Diversified Educational Methodologies Associated with “How to Teach Children Reading Arabic Language?” (Neural Networks’ Approach)

Hassan M. H. Mustafa, Fadhel Ben Tourkia

Computer Engineering Department, Al-Baha Private College of Sciences, Al-Baha, KSA

Copyright © 2016 by authors and Open Access Library Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: October 31, 2016; Accepted: November 27, 2016; Published: November 30, 2016

ABSTRACT

This paper considers the increasingly sophisticated role of artificial neural networks (ANNs) in the interdisciplinary field that incorporates neuroscience, education, and cognitive sciences. Recently, such applications have produced interesting findings that have been recognized and adopted by neurological, educational, and linguistic researchers. Accordingly,

Subject Areas:

Computer Engineering

Keywords:

Brain Reading Function, Computer Based Teaching Methodology, Coincidence Detection Learning, Reading Arabic Language, Artificial Neural Networks’ Modeling

1. Introduction

The overwhelming majority of recent neurobiological and neurological research has revealed findings associated with the increasingly sophisticated role of artificial neural networks (ANNs). This research adopts the conceptual approach of the ANN role, inspired by the functioning of highly specialized biological neurons in the reading brain. Interestingly, the observed reading function depends on individual differences in the brain's abilities, which originate from its organizational structures and substructures and even from particular sets of neurons. More specifically, models of the tasks performed by the human brain are commonly coupled tightly to two main brain functions. The first is learning, defined as the brain's ability to modify behavior in response to stored experience (held in the brain's synaptic interconnections). The second is memory, the capability to store that modification (information) over a period of time and to retrieve the learned patterns spontaneously, i.e., pattern recognition. Based on neural network modeling, the tight mutual relation between the learning and memory functions of the brain was presented in [1] and illustrated in two more recently published studies [2] [3]. All of these publications, and others [4] [5], were motivated by a challenging and debated educational question, "How to learn reading?", raised two decades ago by the great debate in [6] and during the last decade in [7] [8].

In educational practice, it has been observed that working memory components contribute to the development of the reading brain function, which translates a visually perceived word (orthographic word-form) into a spoken word (phonological word-form) [9] [10]. Interestingly, one finding shared by the majority of neuroscientists is that behavioral learning in humans is performed by the huge number of neurons that constitute the central nervous system, together with their synaptic interconnections [11] [12] [13] [14]. Moreover, in practice such learning is also performed by non-human creatures such as cats, dogs, ants, and rats [15]. The general role of the human brain in performing learning is based on two principles [12]. The first is that many different levels of analysis can be applied to the brain's structures and functions. The second is that a systems approach is needed to understand how the various component functions might be organized when the brain performs reading or writing tasks [9] [16].

Teaching children how to read is a critical issue. To read, children must code written words and letters into working memory; this code is called the orthographic word-form. The goal is to translate that seen orthographic word-form into a pronounced spoken word (the phonological word-form). In this context, ensembles of highly specialized neurons inside the human brain are considered as a neural network that plays a dominant, dynamic role in developing the reading function of the brain [9]. In other words, the visually recognized written pattern should be transferred into, and pronounced as, its associated auditory pattern (stored previously) [10]. Furthermore, the individual intrinsic characteristics of such highly specialized neurons (in the visual brain area) directly influence the correctness of identifying the images associated with the orthographic word-form [17] [18]. The rest of this paper is organized as follows. The next section briefly revisits the basic concepts of learning inside the human brain versus those of ANNs, and reviews the reading process that translates a visually perceived word (orthographic word-form) into a spoken word (phonological word-form). The third section reviews the basis of coincidence detection learning. The fourth section introduces practical field results. Conclusions are given in the fifth and final section. Finally, Appendix I and Appendix II are concerned, respectively, with modeling reading ability and with the results of tested students at three different academic levels.

2. Human Brain versus Artificial Neural Networks’ Concepts

How our minds emerge from our flock of neurons remains deeply mysterious. It’s the kind of question that neuroscience, for all its triumphs, has been ill equipped to answer. Some neuroscientists dedicate their careers to the workings of individual neurons. Others choose a higher scale: they might, for example, look at how the hippocampus, a cluster of millions of neurons, encodes memories. Others might look at the brain at an even higher scale, observing all the regions that become active when we perform a particular task, such as reading or feeling fear [19] . Accordingly,

Artificial neural networks are computational systems that are increasingly common and sophisticated. Computational scientists who work with such systems, however, often assume that they are simplistic versions of the neural systems within our brains. Yet artificial neural networks were originally conceived in relation to how neural systems in the brain operate, and [25] [26] have gone further in proposing that human learning takes place through the self-organization of such systems according to the stimuli they receive.

Artificial neural networks are mathematical models inspired by the organization and functioning of biological neurons. There are numerous ANN variations, related to the nature of the task assigned to the network, and numerous variations in how the neuron itself is modeled. In some cases these models correspond closely to biological neurons [27] [28]; in other cases they depart from biological functioning in significant ways. Functional and structural neuroimaging studies of adult readers have provided a deeper understanding of the neural basis of reading, yet such findings also open new questions about how developing neural systems come to support this learned ability. A developmental cognitive neuroscience approach provides insights into how skilled reading emerges in the developing brain, but it also raises new methodological challenges. The review in [29] focuses on functional changes that occur during reading acquisition in cortical regions associated with both the perception of visual words and spoken language, and examines how such functional changes differ in developmental reading disabilities. It integrates these findings within an interactive specialization framework of functional development and proposes that such a framework may provide insights into how individual differences at several levels of observation (genetics, white matter tract structure, functional organization of language, cultural organization of writing systems) affect the emergence of the neural systems involved in the ability, and disability, to learn to read [29] [30] [31].

In the context of teaching children how to read, the number of highly specialized neurons in the corresponding visual brain area differs intrinsically between individuals. These neurons contribute to the perceived (seen) signal, which is directly proportional to the correctness with which depicted or printed images are identified. These images represent the orthographic word-form, which subsequently has to be transferred into a spoken word (phonological word-form) during the reading process.

3. Coincidence Detection Learning

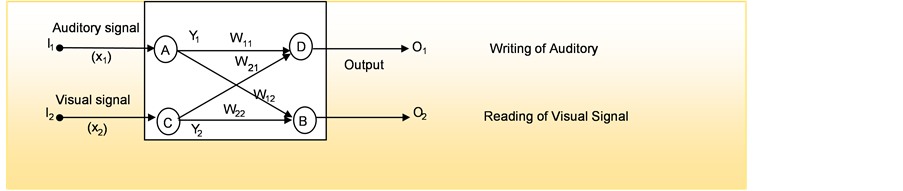

Referring to Pavlov's original psycho-experimental work [20], the coincidence detection learning process is observed to be performed once two vectors have been associated, as shown in Figure 1 (adapted from [21]). More precisely, coincidence is detected between the input signal vector (X1, X2), provided to the sensory neurons (A, C), and the dynamically adaptive weight vector (W1, W2) associated with both neurons. The threshold value is denoted by . The coincidence of the input signals (the two vector components) is detected as an output salivation signal (Z) developed by the motor neuron (B).

Figure 1. Component processes for word identification in reading. Evidence suggests that word-identification problems in reading disability reflect core deficits at the level of orthographic-to-phonological mapping (shown by the bold lines) (adapted from [31]).

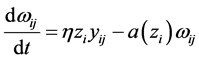

Referring to the weight dynamics described by the famous Hebbian learning law [22] , adaptation dynamical process for synaptic interconnections given after [32] , by the following equation:

(1)

(1)

where, the first right term corresponds to learning Hebbian law and h is a positive constant. The second term represents active forgetting; a (zi) is a scalar function of the output response (zi). Referring to the structure of the model given at Figure1 the adaptation Eq. of the single stage model is as follows.

(2)

(2)

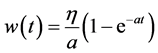

where, the values of h, zi and yij are assumed all to be non-negative quantities [32] , h is the proportionality constant less than one, a is also a less than one constant. The solution of the above Equation (2) given as follows:

(3)

(3)

The above solution considered herein, for investigation of two synaptic plasticity factors (forgetting and learning).That is following both long-term phenomena Potentiation (LTP) and depression (LTD) observed at hippocampus brain area.
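To make the learning/forgetting balance concrete, the sketch below integrates the single-stage rule numerically and compares it with the closed-form solution. It is a minimal illustration assuming Eq. (2) has the form dw/dt = η z y − a w and Eq. (3) is its solution from w(0) = 0; the constants η = 0.5 and a = 0.8 and the unit activities are hypothetical values chosen only to satisfy the stated constraints (non-negative, below one).

```python
import math

def weight_closed_form(t, eta, z, y, a):
    """Solution of dw/dt = eta*z*y - a*w with w(0) = 0: (eta*z*y/a) * (1 - exp(-a*t))."""
    return (eta * z * y / a) * (1.0 - math.exp(-a * t))

def weight_euler(t_end, eta, z, y, a, dt=1e-3):
    """Forward-Euler integration of the same equation, starting from w(0) = 0."""
    w = 0.0
    for _ in range(int(round(t_end / dt))):
        w += dt * (eta * z * y - a * w)  # Hebbian growth minus active forgetting
    return w

if __name__ == "__main__":
    eta, a = 0.5, 0.8  # hypothetical constants, both below one as the text requires
    z, y = 1.0, 1.0    # non-negative output and input activities
    for t in (0.5, 1.0, 2.0, 5.0):
        print(f"t={t}: euler={weight_euler(t, eta, z, y, a):.4f}, "
              f"closed={weight_closed_form(t, eta, z, y, a):.4f}")
    print("saturation level eta*z*y/a =", eta * z * y / a)
```

The weight rises quickly at first and then saturates at η z y / a, the level at which Hebbian growth is exactly cancelled by forgetting; a larger a lowers that ceiling but reaches it sooner.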

B. Modeling of Coincidence Learning

The model transforms the dot product of the coincidence detection vectors into a learning curve that closely resembles the well-known sigmoid transfer (output) function.

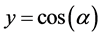

Considering normalized weight and input vectors, coincidence detection learning can be represented as:

$$y = \mathbf{W}\cdot\mathbf{X} = \lVert\mathbf{W}\rVert\,\lVert\mathbf{X}\rVert\cos\theta = \cos\theta \qquad (4)$$

where y is the coincidence learning value, given by the cosine of the angle θ between the weight and input vectors.
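A minimal sketch of the coincidence value in Eq. (4): both vectors are normalized, their dot product gives y = cos θ, and the angle θ between the weight and input vectors is recovered by inverting that relation with the inverse cosine. The helper names are hypothetical; the clamp to [−1, 1] only guards against floating-point rounding.

```python
import math

def normalize(v):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("zero vector cannot be normalized")
    return [x / norm for x in v]

def coincidence_value(weights, inputs):
    """Dot product of the normalized weight and input vectors, i.e. cos(theta)."""
    w, x = normalize(weights), normalize(inputs)
    return sum(wi * xi for wi, xi in zip(w, x))

def coincidence_angle(weights, inputs):
    """theta = arccos(y); y is clamped to [-1, 1] to absorb rounding error."""
    y = max(-1.0, min(1.0, coincidence_value(weights, inputs)))
    return math.acos(y)

if __name__ == "__main__":
    print(coincidence_value([1, 1], [2, 2]))   # aligned vectors: y close to 1
    print(coincidence_angle([1, 0], [0, 1]))   # orthogonal vectors: theta = pi/2
```

Perfect coincidence (aligned vectors) gives y = 1 and θ = 0, while unrelated (orthogonal) vectors give y = 0 and θ = π/2, which is why θ can act as a virtual learning parameter.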

Therefore, the relation is given as:

(5)

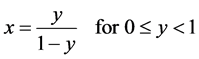

Equivalently, this equation can be expressed through the inverse cosine as:

(6)

This function can easily be approximated as:

(7)

when only two terms of the series expansion are considered.

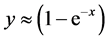

However, this exponentially saturating function behaves like the sigmoid function over the range considered in the next section. Generalizing this function, individual differences are well represented by a suitable choice of the parametric value in the following equation:

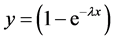

(8)

This value corresponds to the learning rate factor obtained when solving the Hebbian learning differential equation with Mathematica [32]. From the viewpoint of coincidence detection learning, the angle θ is a virtual learning parameter that controls the individual differences factor. So the parametric value is expressed as:

The special case where (

These two brain-state functions correspond well to the practical electrical stimulation signals observed in the hippocampus, that is, stimulation at frequencies either higher or lower than that of the normalized learning curve (

The results of the learning process under the Hebbian rule follow Equation (8). In other words, the value of

The exponentially increasing function (8) behaves similarly to the sigmoid function, since it saturates at unity (
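The following sketch illustrates how a single parameter can encode individual differences in an exponentially saturating learning curve. It assumes, as an illustration rather than the authors' stated formula, that Eq. (8) has the form y(t) = 1 − e^{−λt}, which is the normalized shape of the Hebbian solution w(t) ∝ 1 − e^{−at}, with λ playing the role of the learning rate factor a. The λ values are hypothetical.

```python
import math

def learning_curve(t, lam):
    """Assumed form of Eq. (8): y(t) = 1 - exp(-lam * t), which saturates at unity."""
    return 1.0 - math.exp(-lam * t)

def time_to_reach(level, lam):
    """Time at which the assumed curve reaches `level`, for 0 <= level < 1."""
    if not 0.0 <= level < 1.0:
        raise ValueError("level must lie in [0, 1)")
    return -math.log(1.0 - level) / lam

if __name__ == "__main__":
    # Hypothetical lambda values standing in for slower and faster learners.
    for lam in (0.5, 1.0, 2.0):
        print(f"lambda={lam}: y(3)={learning_curve(3.0, lam):.3f}, "
              f"t(95%)={time_to_reach(0.95, lam):.2f}")
```

Under this assumption, doubling λ halves the time needed to reach any fixed fraction of the saturation level, so learners differ only in how fast they approach the same ceiling of unity.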

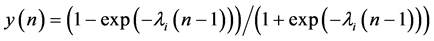

In Figure 2, three curves are shown representing different individual levels of learning approach (surface, deep, and strategic). Curve Y2 is the balanced representation of both the forgetting and learning factors [32]. However, curve Y1 shows a low learning rate (learning disability), which indicates the state of the angle (

Figure 2. The structure of the Hebbian learning rule model representing Pavlov's psycho-experimental work (adapted from [22]).

Figure 3. Three different learning performance curves Y1, Y2 and Y3, which converge at times t1, t2 and t3, considering different NMDA receptor opening times represented by different slope values corresponding to λ1, λ2 and λ3, respectively.

& t2, and t3) correspond to relative time units of 2, 2.6, and 3.5, respectively, as suggested in [1]. They correspond to the three learning performance curves Y1, Y2 and Y3, respectively. Interestingly, the obtained simulation results (graphs Y1, Y2, and Y3) correspond realistically to three students' different academic achievement levels, namely weak, average, and excellent. This is illustrated by the three examples given in Appendix II.

Figure 4 illustrates graphically the suggested mathematical formulation for different values of a specific ANN design parameter, namely the gain factor (λ). Additionally, the effect of this design parameter, observed either implicitly or explicitly, on the dynamics of the synaptic plasticity weights is illustrated by the dynamic equations given in [33]. Furthermore, the normalized behavior model considers changes of communication levels (indicated by the λ parameter). Therefore, gain factor values are analogous to the different speeds of reaching optimum solutions of the Travelling Salesman Problem (TSP) using the Ant Colony System (ACS) [34] [35]. The following equation presents a set of curves that change in accordance with different gain factor values (λ).

where λi is one of the gain factors (slopes) of the sigmoid function and n is the number of training cycles. These curves represent a set of sigmoid functions that reach maximum achievement over time. The set of curves is illustrated graphically in Figure 4; the examples given consider normalized output response values.
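The family of curves described above can be sketched as follows. Because the equation itself is not reproduced here, this assumes one common normalized sigmoid, y(n) = (1 − e^{−λn}) / (1 + e^{−λn}), which equals tanh(λn/2), starts at 0 for n = 0, and saturates at 1; the helper names and λ values are hypothetical.

```python
import math

def normalized_sigmoid(n, lam):
    """Assumed normalized sigmoid: (1 - exp(-lam*n)) / (1 + exp(-lam*n)) = tanh(lam*n/2).
    It is 0 at n = 0 and saturates at 1 as the number of training cycles n grows."""
    e = math.exp(-lam * n)
    return (1.0 - e) / (1.0 + e)

def cycles_to_reach(level, lam, max_cycles=10_000):
    """Smallest whole number of training cycles at which the response reaches `level`,
    or None if it is not reached within `max_cycles`."""
    for n in range(max_cycles + 1):
        if normalized_sigmoid(n, lam) >= level:
            return n
    return None

if __name__ == "__main__":
    # Hypothetical gain factors: a larger lambda gives a steeper curve.
    for lam in (0.1, 0.5, 1.0):
        print(f"lambda={lam}: cycles to 90% = {cycles_to_reach(0.9, lam)}")
```

With these values, the number of cycles needed to reach 90% of the normalized response falls from 30 (λ = 0.1) to 6 (λ = 0.5) and 3 (λ = 1.0), mirroring the faster convergence that the text attributes to larger gain factors.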

Figure 4. Graphical representation of learning performance of model with different gain factor values (λ) adapted from [34] .

4. Educational Field Practical Results

The results obtained are introduced in this section, considering that children preferentially take in and process information presented simultaneously through two different kinds of teaching material, and in different ways: by seeing and hearing, reflecting and acting, reasoning logically and intuitively, analyzing and visualizing, steadily and in fits and starts. The results for the control group, taught with the conventional methodology, are given in Table 1. Table 2 gives the results for the experimental group, taught with the computer multimedia program methodology.

The data of Table 1 and Table 2 are presented in Figure 5 as two colored curves (red and blue).

5. Conclusions and Discussions

This research arrives at the following five conclusions:

§ ANN modeling is a realistic and relevant tool for obtaining interesting results on students' learning performance.

§ Here, academic achievement is evaluated via a statistical analysis approach that gives educationalists an unbiased, fair tool for judging the quality of any instructional methodology. The comparative evaluation of the statistical results shows a clear improvement in the mean values M [%] of academic achievement.

§ This paper adopted two educational methodology modules (applied to teaching a mathematical topic and Arabic language reading). One is the classical (conventional) instructional methodology; the other uses simultaneous visual and audible learning materials. The comparison revealed that the effectiveness of learning/teaching depends on children's sensory cognitive systems, as shown in Table 1 and Table 2.

Table 1. The resulting relation between recognition (time response) and the mapped mark values.

Table 2. The resulting relation between recognition (time response) and the mapped mark values.

Figure 5. The obtained recognition achievement results for the two applied methodologies: (a) control group (conventional methodology); (b) experimental group (computer-based methodology).

§ Interestingly, the above results agree well with Lindstrom's findings that participants could remember only 20% of the total learning material when it was presented visually only, 40% when it was presented as both visual and auditory material, and about 75% when the visual and auditory material were presented simultaneously [36].

§ Finally, as a future extension of this work, it is highly recommended to carry out more elaborate investigation and evaluation of other behavioral learning phenomena observed in the educational field (such as learning creativity, improvement of learning performance, and learning styles) using ANN modeling. Consequently, realistic implementations of ANN models are worth recommending for solving educational issues related to the cognitive styles observed in educational phenomena and activities.

Cite this paper

Mustafa, H.M.H. and Ben Tourkia, F. (2016) On Comparative Study for Two Diversified Educational Methodologies Associated with “How to Teach Children Reading Arabic Language?” (Neural Networks’ Approach). Open Access Library Journal, 3: e3186. http://dx.doi.org/10.4236/oalib.1103186

References

- 1. Hassan, H.M. (2005) On Mathematical Analysis, and Evaluation of Phonics Method for Teaching of Reading Using Artificial Neural Network Models. SIMMOD, 17-19 January 2005, 254-262.

- 2. Mustafa, H.M. (2012) On Performance Evaluation of Brain Based Learning Processes Using Neural Networks. The Seventeenth IEEE Symposium on Computers and Communication (ISCC’12), Cappadocia, 1-4 July 2012. http://www.computer.org/csdl/proceedings/iscc/2012/2712/00/IS264-abs.html

- 3. Mustafa, H.M., et al. (2015) On Enhancement of Reading Brain Performance Using Artificial Neural Networks’ Modeling. Proceedings of the 2015 International Workshop on Pattern Recognition (ICOPR 2015), Dubai, 4-5 May 2015.

- 4. Mustafa, H.M. (2013) Quantified Analysis and Evaluation of Highly Specialized Neurons' Role in Developing Reading Brain (Neural Networks Approach). The 5th Annual International Conference on Education and New Learning Technologies, Barcelona, 1-3 July 2013.

- 5. Hassan, H.M. (2008) On Artificial Neural Network Application for Modeling of Teaching Reading Using Phonics Methodology (Mathematical Approach). The 6th International Conference on Electrical Engineering, ICEENG 2008, Cairo.

- 6. Chall, J.S. (1996) Learning to Read, the Great Debate. Harcourt Brace.

- 7. Rayner, K., et al. (2001) How Psychological Science Informs the Teaching of Reading. Psychological Science in the Public Interest, 2, 31-74. https://doi.org/10.1111/1529-1006.00004

- 8. Rayner, K., Foorman, B.R., Perfetti, C.A., Pesetsky, D. and Seidenberg, M.S. (2003) How Should Reading Be Taught? Majallat Aloloom, 19, 4-11.

- 9. The Developing of Reading Brain. http://www.education.com/reference/article/brain-and-learning/#B

- 10. Beale, R. and Jackson, T. (1990) Neural Computing, an Introduction. Adam Hilger. https://doi.org/10.1887/0852742622

- 11. (2014) On Quantified Analysis and Evaluation for Development Reading Brain Performance Using Neural Networks’ Modeling. The Conference ICT for Language Learning 2014, Florence, 13-15 November 2014.

- 12. Hardman, M. (2010) Complex Neural Networks—A Useful Model of Human Learning. The British Educational Research Association (BERA) Conference, September 2010. http://www.academia.edu/336751/Complex_Neural_NetworksA_Useful_Model_of_Human_Learning

- 13. Hassan, H.M. (2008) On Analysis of Quantifying Learning Creativity Phenomenon Considering Brain Synaptic Plasticity. WSSEC08 Conference, Derry, 18-22 August 2008.

- 14. Mustafa, H.M, et al. (2011) On Quantifying of Learning Creativity through Simulation and Modeling of Swarm Intelligence and Neural Networks. IEEE EDUCON on Education Engineering-Learning Environments and Ecosystems in Engineering Education, Amman, 4-6 April 2011.

- 15. Hassan, H.M. (2008) A Comparative Analogy of Quantified Learning Creativity in Humans versus Behavioral Learning Performance in Animals: Cats, Dogs, Ants, and Rats. (A Conceptual Overview). WSSEC08 Conference, Derry, 18-22 August 2008.

- 16. Borzenko, A. (2009) Neuron Mechanism of Human Languages. IJCNN’09 Proceedings of the 2009 International Joint Conference on Neural Networks, Atlanta, 14-19 June 2009, 375-382.

- 17. Lwin, K.T., Myint, Y.M., Si, H. and Naing, Z.M. (2008) Evaluation of Image Quality Using Neural Networks. Journal of World Academy of Science, Engineering and Technology, 24.

- 18. Cook, A.R. and Cant, R.J. (1996) Human Perception versus Artificial Intelligence in the Evaluation of Training Simulator Image Quality. Proceedings of the 1996 European Simulation Symposium, Genoa, 24-26 October 1996, 19-23.

- 19. Zimmer, C. (2011) 100 Trillion Connections: New Efforts Probe and Map the Brain’s Detailed Architecture. Scientific American Mind & Brain.

- 20. Pavlov, I.P. (1927) Conditioned Reflexes: An Investigation of the Physiological Activity of the Cerebral Cortex. Oxford University Press, New York.

- 21. Hassan, H. and Watany, M. (2000) On Mathematical Analysis of Pavlovian Conditioning Learning Process Using Artificial Neural Network Model. 10th Mediterranean Electro-technical Conference, Cyprus, 29-31 May 2000, 578-581. https://doi.org/10.1109/melcon.2000.879999

- 22. Hebb, D.O. (1949) The Organization of Behavior. Wiley, New York.

- 23. Tsien, J.Z. (2001) Building a Brainier Mouse. Scientific American, Majallat Aloloom, 17, 28-35.

- 24. Tsien, J.Z. (2000) Enhancing the Link between Hebb’s Coincidence Detection and Memory Formation. Current Opinion in Neurobiology, 10.

- 25. Cilliers, P. (1998) Complexity and Postmodernism: Understanding Complex Systems, Routledge, London.

- 26. Hardman, M. (2010) Complex Neural Networks—A Useful Model of Human Learning. Canterbury Christ Church University. Presented at the British Educational Research Association Annual Conference, University of Warwick, 2 September 2010. http://www.academia.edu/336751/Complex_Neural_Networks_-A_Useful_Model_of_Human_Learning

- 27. Gluck, M.A. and Bower, G.H. (1988) Evaluating an Adaptive Network Model of Human Learning. Journal of Memory and Language, 27, 166-195. https://doi.org/10.1016/0749-596X(88)90072-1

- 28. Granger, R., Ambros-Ingerson, J., Staubli, U. and Lynch, G. (1989) Memorial Operation of Multiple, Interacting Simulated Brain Structures. In: Gluck, M. and Rumelhart, D., Eds., Neuroscience and Connectionist Models, Erlbaum Associates, Hillsdale.

- 29. Schlaggar, B.L. and McCandliss, B.D. (2007) Development of Neural Systems for Reading. Annual Review of Neuroscience, 30, 475-503. https://doi.org/10.1146/annurev.neuro.28.061604.135645

- 30. Ghonaimy, M.A., Al-Bassiouni, A.M. and Hassan, H.M. (1994) Learning Ability in Neural Network Model. 2nd International Conference on Artificial Intelligence Applications, Cairo, 22-24 January 1994, 400-413.

- 31. Pugh, K.R., Mencl, W.E., Jenner, A.R., Katz, L., Frost, S.J., Lee, J.R., Shaywitz, S.E. and Shaywitz, B.A. (2000) Functional Neuroimaging Studies of Reading and Reading Disability (Developmental Dyslexia). Mental Retardation and Developmental Disabilities Research Reviews, 6, 207-213.

- 32. Freeman, J.A. (1994) Simulating Neural Networks with Mathematica. Addison-Wesley Publishing Company, Boston.

- 33. Hassan, H.M. (2005) On Principles of Biological Information Processing Concerned with Learning Convergence Mechanism in Neural and Non-Neural Bio-Systems. 2005 International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce, Vienna, 28-30 November 2005, 647-653.

- 34. Hassan, H.M. (2008) A Comparative Analogy between Swarm Smarts Intelligence and Neural Network Systems. WSSEC’08 Conference, Derry, Northern Ireland, 18-22 August 2008.

- 35. Dorigo, M., et al. (1997) Ant Colonies for the Traveling Salesman Problem. www.iridia.ulb.ac.be/dorigo/aco.html

- 36. Lindstrom, R.L. (1994) The Business Week Guide to Multimedia Presentations: Create Dynamic Presentations that Inspire. McGraw-Hill, New York.

Appendix I

Reading Ability Model System

Referring to the two figures (Figure 1 and Figure 2) shown below, the suggested models follow the concept that the two inputs I1 and I2 represent, respectively, the sound (heard) stimulus, which simulates the phonological word-form, and the visual (sight) stimulus, which simulates the orthographic word-form. The outputs O1 and O2 represent the pronouncing and image recognition processes, respectively. To justify the superiority and optimality of the phonics approach over other methods of teaching reading, an elaborate mathematical representation is introduced for two different neurobiologically based models. Each model needs to learn how to behave (to perform reading tasks). Supervised learning would require someone to teach the model, which is not our case; rather, our learning process is carried out on the basis of former knowledge of the environment (learning without a teacher). The model obeys the original Hebbian learning rule. The reading process is simulated in this model in a manner analogous to the previous simulation of Pavlovian conditioning learning. The input stimuli to the model are considered either conditioned or unconditioned stimuli. Visual and audible signals are used interchangeably for training the model to obtain the desired responses at its output. Moreover, the model follows a more elaborate mathematical analysis of the Pavlovian learning process [21]. The model is also modified following the generalized Hebbian algorithm (GHA) and correlation matrix memory [29] [A1] [A2]. The adopted model is designed basically after simulating the previously measured performance of classical conditioning experiments. The model design concept is presented after the mathematical transformation of some biological hypotheses. In fact, these hypotheses are derived from the cognitive/behavioral tasks observed during the experimental learning process.
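The correlation matrix memory mentioned above can be sketched as a Hebbian outer-product store that associates orthographic keys with phonological responses. This is a minimal illustration, not the authors' model: the syllable labels and their vector encodings are hypothetical, and the keys are chosen orthonormal so that recall is exact.

```python
def outer(y, x):
    """Outer product y x^T, returned as a list of rows."""
    return [[yi * xj for xj in x] for yi in y]

def mat_add(a, b):
    """Element-wise sum of two equally sized matrices."""
    return [[p + q for p, q in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

def mat_vec(m, x):
    """Matrix-vector product m x."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]

def build_memory(pairs):
    """Correlation matrix memory: M = sum over k of y_k x_k^T (Hebbian outer-product storage)."""
    key_len, resp_len = len(pairs[0][0]), len(pairs[0][1])
    memory = [[0.0] * key_len for _ in range(resp_len)]
    for key, response in pairs:
        memory = mat_add(memory, outer(response, key))
    return memory

# Hypothetical encodings: orthonormal "orthographic" keys and "phonological" responses.
ORTHOGRAPHIC = {"ba": [1, 0, 0], "ta": [0, 1, 0], "sa": [0, 0, 1]}
PHONOLOGICAL = {"ba": [1, 0, 1, 0], "ta": [0, 1, 1, 0], "sa": [0, 0, 0, 1]}

memory = build_memory([(ORTHOGRAPHIC[s], PHONOLOGICAL[s]) for s in ORTHOGRAPHIC])

if __name__ == "__main__":
    for syllable, key in ORTHOGRAPHIC.items():
        print(syllable, mat_vec(memory, key))  # recalled phonological pattern
```

Presenting a stored orthographic key retrieves its phonological pattern exactly here; with correlated (non-orthogonal) keys the recalled patterns would contain crosstalk from other stored pairs.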

The structure of the model following the original Hebbian learning rule in its simplified form (single neuronal output) is given in Figure A1, where A and C represent two sensory neurons (receptors)/areas and B is the nervous subsystem developing the output response. The simple structure in Figure A2 drives an output response reading function (pronouncing), represented as O1. The other output response, represented as O2, is obtained when the input sound is considered the conditioned stimulus; visual recognition of the heard letter/word is then obtained as the conditioned response at output O2. In accordance with biology, the strength of the response signal depends on the transfer properties of the output motor neuron stimulating the salivation gland. Figure A1 represents the classical conditioning learning process, where each of the lettered circles A, B, and C represents a neuron cell body. The lines connecting the cell bodies are axons that terminate at synaptic junctions. The signals released from the sound and sight sensory neurons A and C are represented by y1 and y2, respectively.
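A minimal sketch of the conditioning scheme described above, where the seen word (sight, y2) comes to elicit pronunciation after being paired with the heard word (sound, y1). Assumptions, not taken from the paper: neuron B fires when its weighted input reaches a threshold of 0.5, the sound synapse is fixed at 1.0, and only the sight synapse is plastic, following a discrete form of the single-stage Hebbian rule with forgetting, Δw = η z y − a w, with hypothetical η = 0.2 and a = 0.05.

```python
def response(w_sound, w_sight, sound, sight, threshold=0.5):
    """Binary output of neuron B: fires (1.0) when the weighted input sum reaches the threshold."""
    return 1.0 if w_sound * sound + w_sight * sight >= threshold else 0.0

def condition(trials, eta=0.2, a=0.05, w_sound=1.0, w_sight=0.0):
    """Present sound (unconditioned, y1) and sight (conditioned, y2) together for `trials`
    steps. Only the sight synapse is plastic: delta_w = eta * z * y2 - a * w."""
    for _ in range(trials):
        z = response(w_sound, w_sight, sound=1.0, sight=1.0)
        w_sight += eta * z * 1.0 - a * w_sight
    return w_sight

if __name__ == "__main__":
    for n in (0, 1, 2, 3, 10):
        w = condition(n)
        # Probe with the seen word alone (no sound input).
        print(n, round(w, 4), response(1.0, w, sound=0.0, sight=1.0))
```

Before pairing, sight alone produces no output; after three paired trials the sight weight (about 0.57) exceeds the threshold, so the seen word alone drives the pronouncing response. With continued pairing the weight settles at η/a = 4, where Hebbian growth and forgetting balance.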

Reading ability has served as a model system within cognitive science for linking cognitive operations associated with lower-level perceptual processes and higher-level language function to specific brain systems [A3] [A4]. More recently, developmental cognitive neuroscience investigations have begun to examine the transformations in functional brain organization that support the emergence of reading skill. This work is beginning to address how this evolutionarily recent human ability emerges from changes within and between brain systems associated with visual perception and language abilities, how learning experiences and maturation affect these neural changes, and how individual differences at the genetic and neural systems levels affect the emergence of this skill [A5] [A6]. Developmental reading studies have used ERP recordings to examine more directly the experience-dependent nature of the N170 response to visual word forms and the relationship between these signals and the rise of fast perceptual specializations for reading [A4], difficulties in reading generally (dyslexia) [A8], and, specifically, neural tuning for print, which peaks when children learn to read [A7].

Figure A1. Generalized reading model, presented as the pronouncing of some word(s), considering input stimuli and output responses.

Figure A2. The structure of the first model, where the reading process is expressed by a conditioned response to a seen letter/word (adapted from [A3]).

References

- [A1] Haykin, S. (1999) Neural Networks. Prentice Hall, Englewood Cliffs, 50-60.

- [A2] Eichenbaum, H. (2013) Hippocampus: Remembering the Choices. Neuron, 77, 999-1001. https://doi.org/10.1016/j.neuron.2013.02.034

- [A3] Mustafa, H.M., Al-Hamadi, A. and Al-Saleem, S. (2007) Towards Evaluation of Phonics Method for Teaching of Reading Using Artificial Neural Networks (A Cognitive Modeling Approach). IEEE International Symposium on Signal Processing and Information Technology, Giza, 15-18 December 2007, 855-862.

- [A4] Posner, M.I., Petersen, S.E., Fox, P.T. and Raichle, M.E. (1988) Localization of Cognitive Operations in the Human Brain. Science, 240, 1627-1631. https://doi.org/10.1126/science.3289116

- [A5] Vliet, E., Miozzo, M. and Stern, Y. (2004) Phonological Dyslexia without Phonological Impairment? Cognitive Neuropsychology, 21, 820-839. https://doi.org/10.1080/02643290342000465

- [A6] Maurer, U., Brem, S., Bucher, K. and Brandeis, D. (2005) Emerging Neurophysiological Specialization for Letter Strings. Journal of Cognitive Neuroscience, 17, 1532-1552. https://doi.org/10.1162/089892905774597218

- [A7] Dyslexia, Klein, R. and McMullen, P. MIT Press, Cambridge, 305-338

- [A8] Maurer, U., Brem, S., Kranz, F., Bucher, K., Benz, R., et al. (2006) Coarse Neural Tuning for Print Peaks When Children Learn to Read. NeuroImage, 33, 749-758. https://doi.org/10.1016/j.neuroimage.2006.06.025

Appendix II

Four screenshots of the output of the suggested computer-based module are shown. The first presents the initial starting screen. The other three present observed samples of tested students at three different academic levels, illustrated in Figure A, Figure B, and Figure C and detailed as follows.

- In A, a student with an excellent academic level spends 242 seconds to reach the desired outcome.

- In B, a student with an average academic level spends 342 seconds to reach the desired outcome.

- In C, a student with a weak academic level spends 538 seconds to reach the desired outcome.

Initial Starting Screen

(A) A student with an excellent academic level (242 seconds)

(B) A student with an average academic level (342 seconds)

(C) A student with a weak academic level (538 seconds)