Open Journal of Statistics Vol.05 No.01(2015), Article ID:54078, 8 pages

10.4236/ojs.2015.51007

Combining Likelihood Information from Independent Investigations

L. Jiang, A. Wong

Department of Mathematics and Statistics, York University, Toronto, Canada

Email: august@mathstat.yorku.ca, august@yorku.ca

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 23 January 2015; accepted 11 February 2015; published 15 February 2015

ABSTRACT

Fisher [1] proposed a simple method to combine p-values from independent investigations without using detailed information about the original data. In recent years, likelihood-based asymptotic methods have been developed to produce highly accurate p-values. These likelihood-based methods generally require the likelihood function and the standardized maximum likelihood estimate departure calculated in the canonical parameter scale. In this paper, a method is proposed to obtain a p-value by combining the likelihood functions and the standardized maximum likelihood estimate departures of independent investigations for testing a scalar parameter of interest. Examples are presented to illustrate the application of the proposed method, and simulation studies are performed to compare its accuracy with that of Fisher's method.

Keywords:

Canonical Parameter, Fisher’s Expected Information, Modified Signed Log-Likelihood Ratio Statistic, Standardized Maximum Likelihood Estimate Departure

1. Introduction

Suppose that $k$ independent investigations are conducted to test the same null hypothesis and the p-values are $p_1, \ldots, p_k$, respectively. Fisher [1] proposed a simple method to combine these p-values to obtain a single p-value without using detailed information concerning the original data or knowing how these p-values were obtained. His methodology is based on the following two results from distribution theory:

1) If $U$ is distributed as Uniform(0, 1), then $-2\log U$ is distributed as Chi-square with 2 degrees of freedom.

2) If $X_1, \ldots, X_k$ are independently distributed as $\chi^2_{\nu_1}, \ldots, \chi^2_{\nu_k}$, then $\sum_{i=1}^{k} X_i$ is distributed as $\chi^2_{\nu_1 + \cdots + \nu_k}$.

Since

are independently distributed as Uniform(0, 1), then the combined p-value

are independently distributed as Uniform(0, 1), then the combined p-value

is

is

(1)

(1)

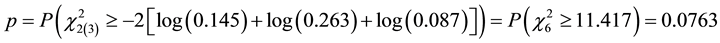

For illustration, Fisher [1] reported the p-values of three independent investigations: 0.145, 0.263 and 0.087. Thus the combined p-value is

$$p = P\left(\chi^2_{6} > -2(\log 0.145 + \log 0.263 + \log 0.087)\right) = P(\chi^2_{6} > 11.42) \approx 0.076,$$

which gives moderate evidence against the null hypothesis. Fisher [1] described the procedure as a "simple test of the significance of the aggregate".
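Equation (1) needs no statistical tables, because a chi-square variable with $2k$ degrees of freedom has the closed-form survival function $P(\chi^2_{2k} > x) = e^{-x/2}\sum_{j=0}^{k-1}(x/2)^j/j!$. The Python sketch below uses that identity to reproduce the combined p-value for Fisher's three investigations; the function name `fisher_combined_p` is our own, not from the paper.

```python
import math

def fisher_combined_p(pvalues):
    """Fisher's combined p-value, P(chi^2_{2k} > -2 * sum(log p_i))."""
    k = len(pvalues)
    # half_stat = x / 2, where x = -2 * sum(log p_i) is Fisher's statistic
    half_stat = -sum(math.log(p) for p in pvalues)
    # Survival function of chi-square with 2k df:
    # exp(-x/2) * sum_{j=0}^{k-1} (x/2)^j / j!
    term, total = 1.0, 1.0
    for j in range(1, k):
        term *= half_stat / j
        total += term
    return math.exp(-half_stat) * total

p = fisher_combined_p([0.145, 0.263, 0.087])
print(f"combined p-value = {p:.4f}")  # combined p-value = 0.0763
```

With a single p-value ($k = 1$) the function returns that p-value unchanged, which is a quick sanity check on the closed form.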

Another illustrative example is the study of the rate of arrival. It is common to use a Poisson model for the number of arrivals over a specific time interval. Let $x_1, \ldots, x_n$ be the numbers of arrivals in $n$ consecutive unit time intervals and denote by $s = \sum_{i=1}^{n} x_i$ the total number of arrivals over the $n$ consecutive unit time intervals. Moreover, let

An alternative way of investigating the rate of arrival over a period of time is to model the time to first arrival, $T$, with the exponential model with rate

By Fisher’s way of combining the p-values, we have

which gives strong evidence that

In recent years, many likelihood-based asymptotic methods have been developed to produce highly accurate p-values. In particular, both Lugannani and Rice's [2] method and Barndorff-Nielsen's [3] [4] method produce p-values that have third-order accuracy, i.e. the rate of convergence is $O(n^{-3/2})$.

In Section 2, a brief review of the third-order likelihood-based method for a scalar parameter of interest is presented. In Section 3, the relationship between the score variable and the locally defined canonical parameter is determined. Using the results in Section 3, a new way of combining likelihood information is proposed in Section 4. Examples and simulation results are presented in Section 5 and some concluding remarks are recorded in Section 6.

2. Third-Order Likelihood-Based Method for a Scalar Parameter of Interest

Fraser [6] showed that for a sample

where

and

or the Barndorff-Nielsen [3] [4] formula

where

is the observed information evaluated at

Fraser and Reid [5] [9] generalized the methodology to any model with log-likelihood function

where

is the rate of change of

with

and the standardized maximum likelihood departure

Since

where

Applications of the general method discussed above can be found in Reid and Fraser [10] and Davison et al. [11].
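For concreteness, here is a Python sketch of the two tail-probability approximations in their standard forms, $p \approx \Phi(r) + \phi(r)(1/r - 1/q)$ (Lugannani and Rice) and $p \approx \Phi(r^*)$ with $r^* = r + r^{-1}\log(q/r)$ (Barndorff-Nielsen). As an assumed test case, not one of the paper's examples, it uses an exponential sample of size $n$ with total $t$, whose log-likelihood $n\log\lambda - \lambda t$ has canonical parameter $\varphi = -\lambda$, so that $q = (\hat\varphi - \varphi)\, j_{\varphi\varphi}^{1/2}(\hat\varphi)$ with $j_{\varphi\varphi}(\hat\varphi) = n/\hat\lambda^2$. In this model the exact value $P(T \le t; \lambda)$ is a gamma probability, which makes the accuracy easy to check. All function names are hypothetical.

```python
import math

def norm_cdf(x):
    # standard normal distribution function Phi(x)
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def norm_pdf(x):
    # standard normal density phi(x)
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def third_order_pvalues(r, q):
    # Lugannani-Rice and Barndorff-Nielsen approximations; undefined at r = 0
    p_lr = norm_cdf(r) + norm_pdf(r) * (1.0 / r - 1.0 / q)
    r_star = r + math.log(q / r) / r
    return p_lr, norm_cdf(r_star)

def exponential_r_q(n, t, lam):
    # r and q at lam for an exponential sample (size n, total t); canonical phi = -lam
    lam_hat = n / t
    def loglik(l):
        return n * math.log(l) - l * t
    phi_hat, phi = -lam_hat, -lam
    drop = max(loglik(lam_hat) - loglik(lam), 0.0)
    r = math.copysign(math.sqrt(2.0 * drop), phi_hat - phi)
    j_phiphi = n / lam_hat ** 2  # observed information in the phi scale at the MLE
    q = (phi_hat - phi) * math.sqrt(j_phiphi)
    return r, q

def gamma_cdf_integer_shape(n, lam, t):
    # exact P(T <= t) for T ~ Gamma(shape n, rate lam), n a positive integer
    x = lam * t
    return 1.0 - math.exp(-x) * sum(x ** j / math.factorial(j) for j in range(n))

r, q = exponential_r_q(n=5, t=5.0, lam=2.0)
p_lr, p_bn = third_order_pvalues(r, q)
exact = gamma_cdf_integer_shape(5, 2.0, 5.0)
print(f"LR {p_lr:.4f}  BN {p_bn:.4f}  exact {exact:.4f}")
```

The values $n = 5$, $t = 5$, $\lambda = 2$ are arbitrary; here both approximations agree with the exact gamma probability to about three decimal places.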

Note that

3. Relationship between the Score Variable and the Locally Defined Canonical Parameter

In Bayesian analysis, Jeffreys [13] proposed using a prior density proportional to the square root of Fisher's expected information. This prior is invariant under reparameterization. In other words, the scalar parameter

yields an information function

Hence,

Fraser et al. [12] showed that

is a pivotal quantity to the second order. A change of variable from the maximum likelihood estimate of the locally defined canonical parameter

which relates the score variable to the locally defined canonical parameter. Taking the total derivative of (12) and evaluating it at the observed data point, we have

Moreover, at

Therefore, the rate of change of the score variable with respect to the change of the locally defined canonical parameter at the observed data point is

This describes how the locally defined canonical parameter
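The reparameterization argument at the start of this section can be written out as a short derivation. Assuming the standard definition $\beta(\theta) = \int^{\theta} i^{1/2}(u)\,du$, where $i(\theta)$ denotes Fisher's expected information (our notation), the chain rule gives a constant information function. The Poisson case, with $i(\lambda) = n/\lambda$ for $n$ unit time intervals, is added only as an illustration.

```latex
\frac{d\beta}{d\theta} = i^{1/2}(\theta)
\quad\Longrightarrow\quad
i_{\beta\beta}(\beta) = i(\theta)\left(\frac{d\theta}{d\beta}\right)^{2}
  = \frac{i(\theta)}{i(\theta)} = 1 .

% Poisson illustration: i(\lambda) = n/\lambda
\beta(\lambda) = \int^{\lambda} \sqrt{n/u}\,du = 2\sqrt{n\lambda},
\qquad
i_{\beta\beta} = \frac{n}{\lambda}\left(\frac{d\lambda}{d\beta}\right)^{2}
  = \frac{n}{\lambda}\cdot\frac{\lambda}{n} = 1 .
```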

4. Combining Likelihood Information

Assume we have

and hence the maximum likelihood estimate of

From (13), the rate of change of the score variable from the

where

Hence, the combined canonical parameter is

The standardized maximum likelihood departure based on the combined canonical parameter can be calculated from (5). Thus, a new p-value can be obtained from the combined log-likelihood function and the combined canonical parameter using the Lugannani and Rice formula or the Barndorff-Nielsen formula.
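The first ingredient of the proposal, the combined log-likelihood of independent investigations, is simply the sum of the individual log-likelihoods. The Python sketch below illustrates this for the rate of arrival problem of Section 1. It assumes the standard forms $s\log\lambda - n\lambda$ (Poisson counts over $n$ unit intervals with total $s$) and $\log\lambda - \lambda t$ (one exponential waiting time $t$), with constants dropped. It computes the combined maximum likelihood estimate $\hat\lambda = (s+1)/(n+t)$ and the signed log-likelihood ratio $r$, with the sign taken in the $\lambda$ scale (an assumption). The combined canonical parameter needed for $q$ depends on the rate-of-change results of Sections 3 and 4 and is not reproduced here, so only the first-order value $\Phi(r)$ is shown. The data values and function names are hypothetical.

```python
import math

def poisson_loglik(lam, s, n):
    # Poisson counts in n unit intervals with total s (additive constant dropped)
    return s * math.log(lam) - n * lam

def exponential_loglik(lam, t):
    # one exponential time to first arrival t with rate lam
    return math.log(lam) - lam * t

def combined_loglik(lam, s, n, t):
    # independent investigations: log-likelihoods add
    return poisson_loglik(lam, s, n) + exponential_loglik(lam, t)

def combined_mle(s, n, t):
    # root of d/dlam [(s + 1) log(lam) - (n + t) lam] = 0
    return (s + 1) / (n + t)

def signed_lr(lam0, s, n, t):
    # r = sgn(lam_hat - lam0) * sqrt(2 [l(lam_hat) - l(lam0)])
    lam_hat = combined_mle(s, n, t)
    drop = combined_loglik(lam_hat, s, n, t) - combined_loglik(lam0, s, n, t)
    return math.copysign(math.sqrt(max(2.0 * drop, 0.0)), lam_hat - lam0)

# hypothetical data: s = 12 arrivals in n = 10 unit intervals, first arrival at t = 0.5
s, n, t = 12, 10, 0.5
lam_hat = combined_mle(s, n, t)
r = signed_lr(1.0, s, n, t)
p_first_order = 0.5 * (1.0 + math.erf(r / math.sqrt(2.0)))  # Phi(r)
print(lam_hat, r, p_first_order)
```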

5. Examples

In this section, we first revisit the rate of arrival problem discussed in Section 1 and show that the proposed method gives results that are quite different from those obtained by Fisher's way of combining p-values. Then simulation studies are performed to compare the accuracy of the proposed method with that of Fisher's method for the rate of arrival problem. Moreover, two well-known models, the scalar canonical exponential family model and the normal mean model, are examined. It is shown that, theoretically, the proposed method gives the same results as the third-order methods discussed in Fraser and Reid [5] and DiCiccio et al. [14], respectively.

5.1. Revisit the Rate of Arrival Problem

From the first investigation discussed in Section 1, the log-likelihood function for the Poisson model is

where

Moreover, from the second investigation discussed in Section 1, the log-likelihood function for the exponential model is

where

The combined log-likelihood function is

and we have

Therefore,

and from (17) we have

Hence,

Figure 1 plot

Figure 1. p-value function.

5.2. Simulation Study

Simulation studies are performed to compare the three methods discussed in this paper. We examine the rate of arrival problem that was discussed in Section 1. For each combination of

1) generate

2) calculate the p-values obtained by the three methods discussed in this paper;

3) record whether the p-value is less than a preset value

4) repeat this process

Finally, report the proportion of p-values that are less than

Table 1 records the simulated Type I errors obtained by Fisher's method (Fisher), the Lugannani and Rice method (LR) and the Barndorff-Nielsen method (BN). The results in Table 1 illustrate that the proposed methods are extremely accurate, as they are all within 3 simulated standard errors, whereas the results from Fisher's method are not satisfactory, as they are far larger than the prescribed
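Steps 1) to 4) above are a standard Monte Carlo estimate of a Type I error. The Python sketch below implements the loop for the Fisher column only, since the LR and BN p-values require the combined canonical parameter of Section 4. Several choices are assumptions rather than details taken from the paper: the one-sided alternative $\lambda > \lambda_0$, the p-values $P(S \ge s; n\lambda_0)$ for the Poisson count and $P(T \le t; \lambda_0) = 1 - e^{-\lambda_0 t}$ for the waiting time, and the parameter values. It also reports the simulated standard error $\sqrt{\alpha(1-\alpha)/N}$ behind the "within 3 simulated standard errors" criterion. All function names are hypothetical.

```python
import math
import random

def fisher_combined_p(pvalues):
    # P(chi^2_{2k} > -2 sum log p_i) via the closed-form chi-square survival function
    half_stat = -sum(math.log(p) for p in pvalues)
    term, total = 1.0, 1.0
    for j in range(1, len(pvalues)):
        term *= half_stat / j
        total += term
    return math.exp(-half_stat) * total

def poisson_upper_p(s, mean):
    # P(S >= s) for S ~ Poisson(mean), summing the upper tail directly
    if s == 0:
        return 1.0
    term = math.exp(-mean + s * math.log(mean) - math.lgamma(s + 1))
    total, k = 0.0, s
    while True:
        total += term
        k += 1
        term *= mean / k
        if k > mean and term <= 1e-16 * total:
            break
    return min(max(total, 1e-300), 1.0)  # floor avoids log(0) in Fisher's statistic

def poisson_count(rate, length, rng):
    # number of events of a rate-`rate` Poisson process in (0, length]
    count, clock = 0, rng.expovariate(rate)
    while clock <= length:
        count += 1
        clock += rng.expovariate(rate)
    return count

def fisher_type_one_error(lam0, n, alpha=0.05, n_sim=10_000, seed=2015):
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sim):
        s = poisson_count(lam0, n, rng)              # step 1: Poisson data under H0
        t = rng.expovariate(lam0)                    # step 1: waiting time under H0
        p_exp = max(-math.expm1(-lam0 * t), 1e-300)  # P(T <= t) under H0
        p = fisher_combined_p([poisson_upper_p(s, n * lam0), p_exp])  # step 2
        rejections += p < alpha                      # step 3
    rate = rejections / n_sim                        # proportion of rejections
    se = math.sqrt(alpha * (1.0 - alpha) / n_sim)    # simulated standard error
    return rate, se

rate, se = fisher_type_one_error(lam0=1.0, n=10)
print(rate, se, abs(rate - 0.05) <= 3 * se)
```

The same harness would accept any other p-value function in step 2; only the line computing `p` changes.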

5.3. Scalar Canonical Exponential Family Model

Consider

where

From the above model, we have

where

Table 1. Simulated Type I errors (based on 10,000 simulated samples).

and the log-likelihood ratio statistic for the combined log-likelihood function can be obtained from (12). Moreover, from (17), we have

and hence the combined canonical parameter is

The maximum likelihood departure in the combined canonical parameter space is

with the observed information evaluated at

and thus,

which is the same as directly applying the third-order method to the canonical exponential family model with
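The direct third-order quantities for the combined canonical model follow from a short derivation. Assume, in our own notation, that investigation $i$ has log-likelihood $\ell_i(\theta) = \theta t_i - c_i(\theta)$ with a common canonical parameter $\theta$. The combined log-likelihood then keeps the canonical form, so its canonical parameter is $\theta$ itself and the standardized departure uses the summed information:

```latex
\ell(\theta) = \sum_{i=1}^{k} \ell_i(\theta)
  = \theta \sum_{i=1}^{k} t_i - \sum_{i=1}^{k} c_i(\theta),
\qquad
j_{\theta\theta}(\hat\theta) = \sum_{i=1}^{k} c_i''(\hat\theta),

r = \operatorname{sgn}(\hat\theta - \theta)\left[2\{\ell(\hat\theta) - \ell(\theta)\}\right]^{1/2},
\qquad
q = (\hat\theta - \theta)\left\{\sum_{i=1}^{k} c_i''(\hat\theta)\right\}^{1/2}.
```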

5.4. Normal Mean Model

Consider

where

with

with

and

and the standardized maximum likelihood departure calculated in the locally defined canonical parameter scale can be obtained from Equation (8) and is

These are exactly the same as those obtained in DiCiccio et al. [14] .

6. Conclusion

In this paper, a method is proposed to obtain a p-value by combining the likelihood functions and the standardized maximum likelihood estimate departures, calculated in the canonical parameter space, of independent investigations for testing a scalar parameter of interest. It is shown that for the canonical exponential family model and the normal mean model, the proposed method gives exactly the same results as using the joint likelihood function. Moreover, for the rate of arrival problem, the proposed method gives very different results from those obtained by Fisher's way of combining p-values, and simulation studies illustrate that the proposed method is extremely accurate.

Acknowledgements

This research was supported in part by the Natural Sciences and Engineering Research Council of Canada.

References

- Fisher, R.A. (1925) Statistical Methods for Research Workers. Oliver and Boyd, Edinburgh.

- Lugannani, R. and Rice, S. (1980) Saddlepoint Approximation for the Distribution of the Sum of Independent Random Variables. Advances in Applied Probability, 12, 475-490. http://dx.doi.org/10.2307/1426607

- Barndorff-Nielsen, O.E. (1986) Inference on Full or Partial Parameters Based on the Standardized Log Likelihood Ratio. Biometrika, 73, 307-322.

- Barndorff-Nielsen, O.E. (1991) Modified Signed Log-Likelihood Ratio. Biometrika, 78, 557-563. http://dx.doi.org/10.1093/biomet/78.3.557

- Fraser, D.A.S. and Reid, N. (1995) Ancillaries and Third Order Significance. Utilitas Mathematica, 47, 33-53.

- Fraser, D.A.S. (1990) Tail Probabilities from Observed Likelihoods. Biometrika, 77, 65-76. http://dx.doi.org/10.1093/biomet/77.1.65

- Jensen, J.L. (1992) The Modified Signed Log Likelihood Statistic and Saddlepoint Approximations. Biometrika, 79, 693-704. http://dx.doi.org/10.1093/biomet/79.4.693

- Brazzale, A.R., Davison, A.C. and Reid, N. (2007) Applied Asymptotics: Case Studies in Small-Sample Statistics. Cambridge University Press, New York. http://dx.doi.org/10.1017/CBO9780511611131

- Fraser, D.A.S. and Reid, N. (2001) Ancillary Information for Statistical Inference. In: Empirical Bayes and Likelihood Inference, Springer-Verlag, New York, 185-209. http://dx.doi.org/10.1007/978-1-4613-0141-7_12

- Reid, N. and Fraser, D.A.S. (2010) Mean Likelihood and Higher Order Inference. Biometrika, 97, 159-170. http://dx.doi.org/10.1093/biomet/asq001

- Davison, A.C., Fraser, D.A.S. and Reid, N. (2006) Improved Likelihood Inference for Discrete Data. Journal of the Royal Statistical Society Series B, 68, 495-508. http://dx.doi.org/10.1111/j.1467-9868.2006.00548.x

- Fraser, A.M., Fraser, D.A.S. and Fraser, M.J. (2010) Parameter Curvature Revisited and the Bayesian Frequentist Divergence. Journal of Statistical Research, 44, 335-346.

- Jeffreys, H. (1946) An Invariant Form for the Prior Probability in Estimation Problems. Proceedings of the Royal Society of London Series A: Mathematical and Physical Sciences, 186, 453-461. http://dx.doi.org/10.1098/rspa.1946.0056

- DiCiccio, T., Field, C. and Fraser, D.A.S. (1990) Approximations of Marginal Tail Probabilities and Inference for Scalar Parameters. Biometrika, 77, 77-95. http://dx.doi.org/10.1093/biomet/77.1.77