Paper Menu >>

Journal Menu >>

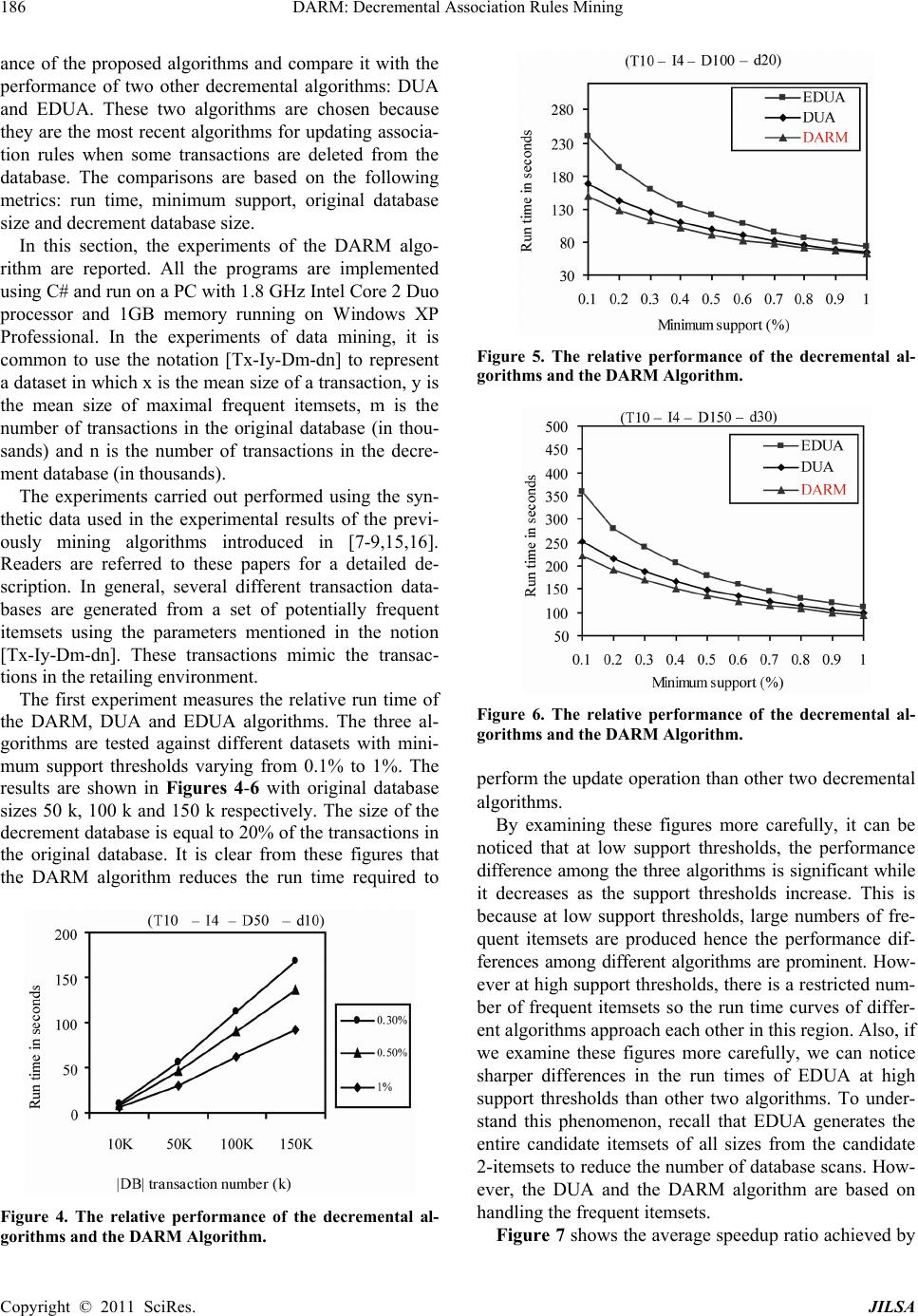

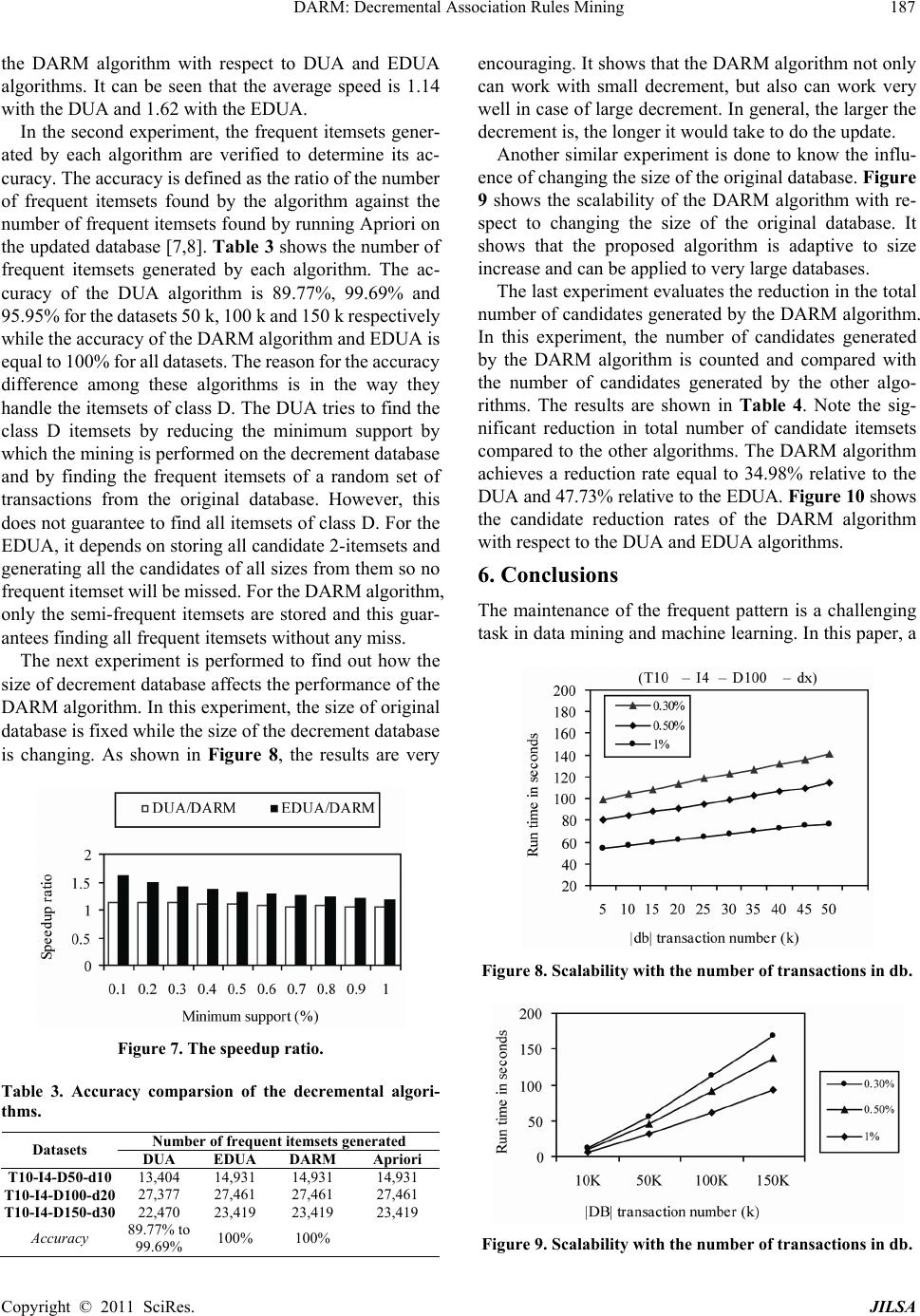

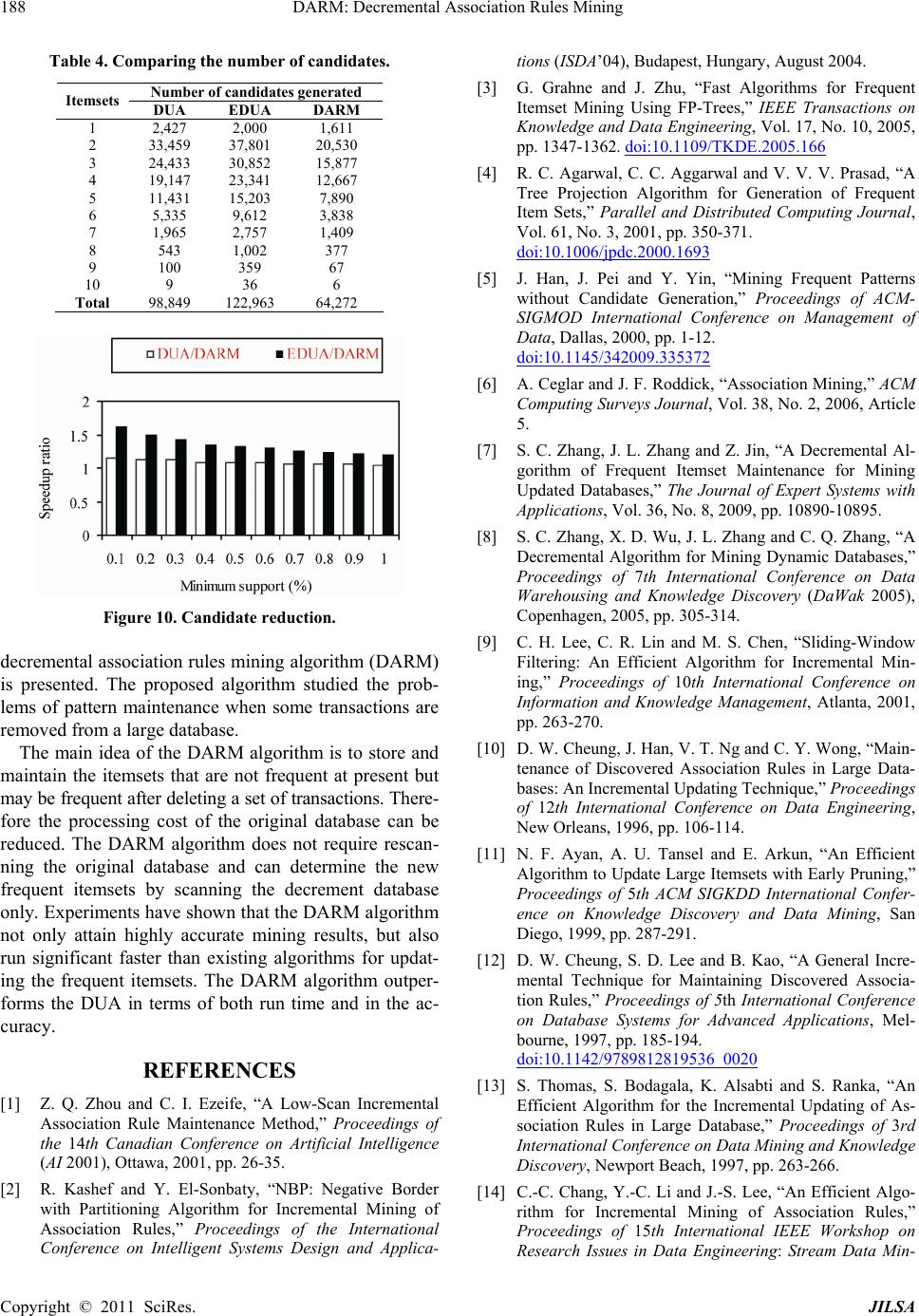

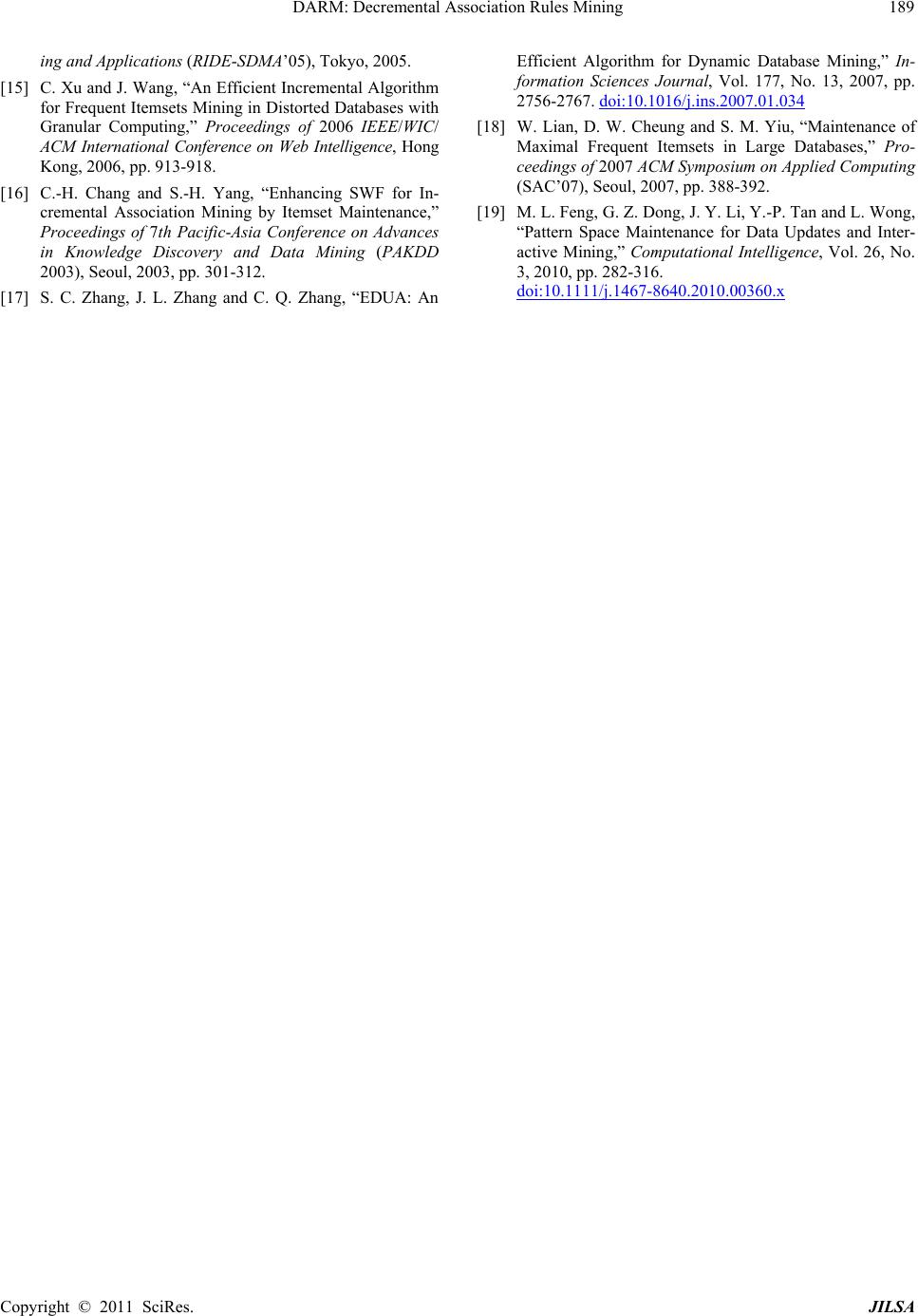

Journal of Intelligent Learning Systems and Applications, 2011, 3, 181-189 doi:10.4236/jilsa.2011.33019 Published Online August 2011 (http://www.SciRP.org/journal/jilsa) Copyright © 2011 SciRes. JILSA 181 DARM: Decremental Association Rules Mining Mohamed Taha1, Tarek F. Gharib2,3, Hamed Nassar4 1Faculty of Computers & Informatics, Benha University, Benha, Egypt; 2Faculty of Computer & Information Sciences, Ain Shams University, Cairo, Egypt; 3Faculty of Computing and Information Technology, King Abdulaziz University, Jeddah, Saudi Arabia; 4Faculty of Computers & Informatics, Suez Canal University, Ismailia, Egypt. Email: mohamed.taha@fci.bu.edu.eg, tfgharib@cis.asu.edu.eg, nassar@ci.suez.edu.eg Received December 29th, 2010; revised April 1st, 2011; accepted April 8th, 2011. ABSTRACT Frequent item sets mining plays an important role in association rules mining. A variety of algorithms for finding fre- quent item sets in very large transaction databases have been developed. Although many techniques were proposed for maintenance of the discovered rules when new transactions are added, little work is done for maintaining the discov- ered rules when some transactions are deleted from the database. Updates are fundamental aspect of data management. In this paper, a decremental association rules mining algorithm is present for updating the discovered association rules when some transactions are removed from the original data set. Extensive experiments were conducted to evaluate the performance of th e p ropo sed algo rithm. The resu lts sh o w tha t the proposed algo rithm is efficien t a nd ou tp erforms other well-know n al g ori t h ms. Keywords: Decremental Mining, Association Rules Maintenance, Upda ting Association Rules 1. Introduction Association rules mining is a data mining technique which discovers strong associations or correlation rela- tionships among data [1]. Recently, it has attracted much attention in data mining research because of its wide ap- plicability in many areas, including decision support, market strategy and financial forecast. An association rule is a relation between items in a set of transactions. This rule must have two measures to express its statisti- cal significance: the support and the confidence [2]. There are many mining algorithms to find out associa- tion rules in large databases: the Apriori and its varia- tions, Tree-Projection algorithm, FP-growth and others [3-5]. The Apriori algorithm is the first successful algo- rithm for mining association rules. The main idea of Ap- riori-based algorithms is to run number of iterations. In each iteration, a set of candidate itemsets is generated then the database is scanned to count the number of transactions that contain each candidate set. The candi- dates with support counts greater than or equal to the mi- nimum support count are the frequent itemsets. These mining algorithms differ in the techniques used to create the candidate sets. The smaller the number of candidate sets is, the faster the algorithm would be. The Apriori-based algorithms belong to a generate- and-test paradigm. They compute frequent itemsets by generating candidates and checking their frequencies a- gainst the transaction database. Another paradigm is based on a test-only approach. It does not generate can- didates and only tests for frequency. The FP-growth al- gorithm belongs to this paradigm. It uses a tree structure to represent all the transactions of the database. The fre- quent itemsets are generated with only two passes over the database and without any candidate generation proc- ess [6]. Due to advances in information technology, a large amount of data could be collected easily. Databases may be frequently or occasionally updated. Such updates may change the characteristic of the database and hence in- validate the existing discovered rules (some of the rules may still be valid and new rules may appear). A brute force approach to solve the update problem is to re- mine the entire updated database to find the new association rules. However, this approach is not efficient because it ignores the previous computation that has been per- formed. The present approach to the update problem is to use incremental and decremental mining algorithms. In- cremental mining refers to mining the database when new transactions are added to it while decremental min- ing refers to mining the database when some obsolete transactions are deleted from it [7,8]. The main purpose of these mining algorithms is to update the previously discovered rules by benefiting from the previously dis- covered information without repeating all the work done  DARM: Decremental Association Rules Mining Copyright © 2011 SciRes. JILSA 182 previously. Much effort has been devoted to the problem of incremental mining and several algorithms have been already proposed to update the association rules after adding new transactions but decremental mining is not as fortunate. In this paper, a decremental updating algorithm called Decremental Association Rules Mining algorithm (DARM) has been introduced which aims to store and maintain the itemsets that are not frequent at present but may be fre- quent after updating the database. Therefore the process- ing cost of the updated database can be reduced. The rest of the paper is organized as follows. In the next section, some preliminaries in association rules mining are illustrated. Section 3 surveys the related work. In Section 4, the DARM algorithm is presented. Section 5 shows the experimental results. Finally, conclusions are presented in Section 6. 2. Preliminaries Let I = {i1, i 2,, im} be a set of items and DB be a transaction database, where each transaction T is a set of items such that T I. For an itemset A I, a transaction T contains A if and only if A T. An association rule is defined as an implication of the form A B, where A I, B I and A B = . The association rule A B has support s in DB if s is the percentage of transactions in DB contains A B. The association rule A B holds in DB with confidence c if c is the percentage of transactions in DB that contain A also contain B. The problem of mining association rules is to extract all the association rules whose support and confidence are not less than given threshold values called min_sup and min_conf. The asso- ciation rules that satisfy both the minimum support thre- shold and minimum confidence threshold are called strong rules to distinguish them from the weak ones. The support of an itemset A is the percentage of transactions in DB that contain A. An itemset A is frequent if its support is not less than a minimum support threshold min_sup [9- 12]. Let L be the set of frequent itemsets in the database DB, D be the number of transactions in DB, s be the minimum support and X.support be the support count for an itemset X which is the number of transactions in DB containing X. Assume that for each X L, X.support is available. After some updates, a set of old transactions db (decrement database) is deleted from DB, and d is the number of transactions in db. Using the same minimum support s, an itemset X is frequent in the updated database (DB – db) if the support of X in (DB – db) is not less than or equal to s, i.e., X.support s (D – d) [9,11]. Therefore, the problem of updating association rules can be defined as finding the set L` of frequent itemsets in DB – db. 3. Related Work In the literature, the majority of the research in updating the discovered association rules is focused on the incre- mental mining. This is due to updating databases by adding new transactions has many applications such as web log records, stock market data, grocery sales data, transactions in electronic commerce, and daily weather/ traffic records, to name a few [9]. Several incremental mining algorithms are proposed such as Fast UPdate al- gorithm (FUP) [10], The Update Large Itemsets algo- rithm (ULI) [13], Negative Border with Partitioning (NBP) [2], Update with Early Pruning algorithm (UWEP) [11], New Fast UPdate algorithm (NFUP) [14] and Granular Computing Based Incremental Frequent Itemset Mining in distorted databases (GrC-IFIM) [15]. Unfor- tunately, little work has addressed the decremental min- ing although deletion is one of the most frequently op- erations in many database systems. In many applications, the user may want to remove the out-of-date data from the database. Cheung et al. proposed the first decremental mining algorithm called FUP2 [12]. In fact, it is an extension of the incremental algorithm FUP to update the discovered association rules when transactions are deleted from the database. Mainly, the framework of FUP2 is similar to that of Apriori. It contains number of iterations. At each iteration, the frequent itemsets of the same size are found. However, FUP2 has two main problems; it generates large number of candidate itemsets and it requires multi- ple scans of the database. Lee et al. proposed a Sliding-Window Filtering tech- nique (SWF) for updating the discovered association rules [9]. The SWF technique partitions the transaction database into a set of partitions and employs the mini- mum support threshold in each partition to filter unnec- essary candidate itemsets. The algorithm uses a scan re- duction technique in which it generates all the candidate itemsets of all sizes from the candidate 2-itemsets to re- duce the number of database scans. However, this leads to generating larger number of candidates. In fact, SWF does not utilize previously discovered frequent itemsets which are used in the other algorithms to improve incre- mental or decremental mining. Since the SWF is based on partitioning the database, it requires the data to be uniformly distributed to work well. Chang et al. extended the SWF algorithm by incorporating previously discov- ered information and proposed two algorithms to en- hance the performance for incremental mining [16]. How- ever, they are only suitable for cases in which a whole partition of data is deleted from a database at a time. In practice, situations requiring the deletion of continuous blocks of data from databases are not very common [17].  DARM: Decremental Association Rules Mining Copyright © 2011 SciRes. JILSA 183 Lian et al., [18] addressed the relationships between old and new maximal frequent itemsets and proposed an algorithm called IMFI, which is based on these relation- ships to reuse previously discovered knowledge. The al- gorithm follows a top-down mechanism rather than tradi- tional bottom-up methods to produce fewer candidates. Moreover, they integrated SG-tree into IMFI to improve the counting efficiency. Zhang et al. proposed the Decrement Updating Algo- rithm (DUA) [7,8]. The algorithm tries to find those itemsets that are most likely to change their frequentness in the remaining database by: 1) finding the frequent itemsets of the decrement database using a threshold value less than the minimum support. 2) finding the fre- quent itemsets of a random set of transactions from the original database. One important drawback of this algo- rithm is that its accuracy drops when a lower minimum support threshold is used because it can not find all the frequent itemsets in the updated database. Another draw- back is that the DUA algorithm is efficient as long as the size of the decrement database is less than 30% of the total data in the original database [7]. Also, Zhang et al. proposed another algorithm called Efficient Dynamic Database Updating Algorithm (EDUA) [17]. It is similar to the SWF in adopting the scan reduc- tion technique. The EDUA consists of two procedures: the pre-mining procedure which uses the scan reduction technique to calculate all the frequent itemsets of the original database and store all the candidate 2-itemsets for the following procedure; the dynamic maintaining procedure in which all the candidate 2-itemsets in the deleted database are calculated with their support counts and subtracted from their corresponding 2-itemsets in the original database then the scan reduction technique is used again to generate all the candidates of all sizes. Fi- nally, the new candidate itemsets are checked against the updated database in order to discover the new frequent itemsets. The main advantage of the EDUA over the SWF is that it can deal with the case in which transac- tions are deleted from any part of the database, while the SWF can only function when the whole first partition is deleted from the database. Like SWF, EDUA does not benefit from the previously discovered frequent itemsets. A pruning technique was also proposed in [17] to en- hance the performance of the EDUA that tries to use heuristic knowledge of the prior mining results. Mengling Feng et al., [19] addressed the maintenance of the frequent patterns space of both incremental and decremental updates. The author’s conducted an in-depth investigation on how the frequent pattern space evolves under both incremental and decremental updates. Based on the evolution analysis, a new data structure, Genera- tor-Enumeration Tree (GE-tree), is developed to facilitate the maintenance of the frequent pattern space. With the concept of GE-tree, Mengling Feng et al., proposed two novel algorithms, Pattern Space Maintainer+ (PSM+) and Pattern Space Maintainer− (PSM−), for the incre- mental and decremental maintenance of frequent pat- terns. 4. The DARM Algorithm The main idea of the proposed algorithm (DARM) is to store and maintain the itemsets that are not frequent at present but may be frequent after deleting a set of trans- actions. We will call these itemsets the semi-frequent itemsets and denote them by SF. In practice, the decre- mental algorithm is not invoked every time a transaction is deleted from the database. However, it is invoked after a non-trivial number of transactions are deleted. Assume that the algorithm will be invoked every time d transac- tions are deleted from the database, so an itemset X is semi-frequent if it satisfies the following condition: s D > X.support s (D – d) This means that the semi-frequent itemsets are those itemsets that may be frequent after d transactions are deleted from the database where their support counts are less than the minimum support count and greater than or equal to s (D – d). When deleting a set of transactions from a database, all the itemsets in the updated database can be categorized into four classes as illustrated in Ta ble 1. The itemsets in classes A and D need some processing because they may be frequent or infrequent in the updated database. How- ever, the itemsets in classes B and C are straight forward. If an itemset is frequent in the original database and in- frequent in the decrement database, it will be frequent in the updated database. Also, if an itemset is infrequent in the original database and frequent in the decrement data- base, it will be infrequent in the updated database. In order to find the new frequent itemsets of the up- dated database, the DARM algorithm must handle all the itemsets in the four classes. The itemsets of class A, B and C can be obtained easily by using the frequent item- sets of the original and the decrement databases. How- Table 1. The four classe s for an item set in the updated data- base. Class Original Database (DB) Decrement Database (db) Updated Database (DB – db) AFrequent Frequent Frequent or Infrequent BFrequent Infrequent Frequent CInfrequent Frequent Infrequent DInfrequent Infrequent Frequent or Infrequent  DARM: Decremental Association Rules Mining Copyright © 2011 SciRes. JILSA 184 ever, the itemsets of class D need further consideration that is why the semi-frequent itemsets are stored. Table 2 shows the definitions of symbols used in the pseudo-code of the DARM algorithm. The actual steps of the DARM algorithm are shown in Figure 1. The first step of the algorithm is to find the frequent itemsets of the decre- ment database with their support counts. Then, it sub- tracts the support counts of the frequent itemsets of the decrement database from their corresponding frequent itemsets of the original database. The new support counts of these itemsets are then compared to the minimum support to determine which itemsets will be still frequent and which will not. By this way, the algorithm handles those itemsets falling in class A. To determine the itemsets of class D, the algorithm eliminates each frequent itemset in the decrement data- base from SF. After this elimination, the remaining item- sets in SF are the infrequent itemsets in both the original and the decrement database (class D). These itemsets require a scan of the decrement database to update their support counts then the algorithm verifies them against the minimum support. The rest of the itemsets that are frequent in the original database and they do not occur in the frequent itemsets of the decrement database will be frequent in the updated database and do not need to be verified (class B). However, the DARM algorithm needs a scan to the decrement database for these itemsets to update their support counts for further runs. Hence, the algorithm scans the decrement database only once to handle all the itemsets in class B and class D. Also, the rest of frequent itemsets of decrement database that do not have a corre- sponding frequent itemsets in the original database will be infrequent so that they do not need any processing at all (class C). For the subsequent runs of the algorithm, the semi- Table 2. The list of symbols used in the DARM Algorithm. Symbol Meaning DB The original database D The number of transactions in DB db The decrement database d The number of transactions in db DB – db The updated database D The number of transactions in the updated database S The minimum support SF The set of semi-frequent itemsets L The set of frequent itemsets in DB Ldec The set of frequent itemsets in db L The set of frequent itemsets in DB – db X An itemset X.support The number of transactions containing X in the data- base X.supportDB The number of transactions containing X in DB X.supportdb The number of transactions containing X in db X.supportDB–db The number of transactions containing X in DB – db Figure 1. The DARM algorithm. 123 Figure 2. The update procedure of SF. frequent itemsets need to be updated. Figure 2 shows the Update_SF procedure that maintains the itemsets in SF. First, the procedure computes the negative border of new frequent itemsets of the updated database. Then, it com- putes the support count of each itemset in the negative border in the updated database and checks if the itemset satisfies the condition of semi-frequent itemsets. Example 4.1 Consider the database DB presented in Figure 3(a) with ten transactions indexed from T1 to T10. All the frequent and semi-frequent itemsets of DB are known in advance and shown in Figure 3(b) with a Inputs: DB, db, L, SF and s Output: L (L is initially set to ) and SF Algorithm: 1- Find the set Ldec of frequent itemsets in the decrement data- base db. 2- For all X L If X Ldec then X.supportDB-db = X.supportDB – X.supportdb If X.supportDB-db s (D – d) then L = L {X} Remove X from L and Ldec 3- For all X SF If X Ldec then Remove X from SF 4- Scan db and compute X.supportdb for all X L SF 5- For all X L SF X.supportDB–db = X.supportDB – X.supportdb If X L then L = L {X} Else If X.supportDB–db s (D – d) then L = L {X} 6- Update_SF (L) //a procedure to maintain the itemsets in SF 7- Return L Function Update_SF (L ) 1- Compute Negative border of L NBd (L) 2- Scan DB – db and compute X.supportDB-db for all X NBd (L) 3- For X NBd (L) If X.supportDB-db <s D && X.supportDB-db s D SF = SF {X} End Function  DARM: Decremental Association Rules Mining Copyright © 2011 SciRes. JILSA 185 minimum support 30%. After a period of time, four transactions are deleted from DB: T1, T7, T9 and T10. To find the new frequent itemsets of the updated data- base, the algorithm computes the frequent itemsets of the decrement database and the result is shown in Ldec in Figure 3(b). After that, it begins to handle the itemsets of class A Original database (DB) Decrement database (db) TID Transaction TID Transaction T1 A B E F T1 A B E F T2 B C F T7 C E F T3 A C E F T9 B C E F T4 B D E T10 B F T5 A B C E T6 A B D E T7 C E F T8 A B F T9 B C E F T10 B F (a) L Ldec SF Itemset Count Itemset Count Itemset Count A 5 B 3 D 2 B 8 C 2 AC 2 C 5 E 3 ABF 2 E 7 F 4 AEF 2 F 7 BE 2 BCE 2 AB 4 BF 3 BCF 2 AE 4 CE 2 BEF 2 AF 3 CF 2 BC 3 EF 3 BE 5 BEF 2 BF 5 CEF 2 CE 4 CF 4 EF 4 ABE 3 CEF 3 (b) Class A L Itemset Count Itemset Count B 5 B 5 C 3 C 3 E 4 E 4 F 3 F 3 BE 3 BE 3 BF 2 BF 2 CE 2 CE 2 CF 2 CF 2 EF 1 CEF 1 (c) Class B Class C Class D Itemset Count Itemset Count Itemset Count A 5 BEF 2 D 2 AB 4 AC 2 AE 4 ABF 2 AF 3 AEF 2 BC 3 BCE 2 ABE 3 BCF 2 (d) Class B Class D L Itemset Count ItemsetCount Itemset Count A 4 D 2 A 4 AB 3 AC 2 B 5 AE 3 ABF 1 C 3 AF 2 AEF 1 D 2 BC 2 BCE 1 E 4 ABE 2 BCF 1 F 3 AB 3 AC 2 AE 3 AF 2 BC 2 BE 3 BF 2 CE 2 CF 2 ABE 2 (e) Figure 3. An illustrative example. which are the common itemsets in L and Ldec. All the support counts of the frequent itemsets in Ldec are sub- tracted from their corresponding frequent itemsets in L (the highlighted itemsets in Figure 3(b)) and these item- sets are removed from the two lists. The results of the subtraction are shown in class A in Figure 3(c). The itemsets in class A for which the support count is not less than (6 × 30% = 1.8 ≈ 2) are moved to L' as shown in Figure 3(c). Note that the itemsets EF and CEF have not enough support count so they are not moved to L'. The remaining itemsets in L and Ldec are shown in class B and class C in Figure 3(d) respectively. By default, all the itemsets in class B will be frequent in the updated data- base and there is no need to be verified but their support counts need to be updated. Only their support counts in DB are known so they need a db scan to determine their support counts. The DARM algorithm postpones this scan until it determines the itemsets of class D to handle both of them in one scan. Using the itemsets in SF and in Ldec, the algorithm can determine the itemsets of class D by subtracting the itemsets of Ldec from SF. Here, the itemset BEF marked by an oval in Figure 3(b) is elimi- nated from SF because it appears in Ldec and all the re- maining itemsets in SF will belong to class D as shown in Figure 3(d). Then, the algorithm scans db to update the support counts of the itemsets of class B and D. Fig- ure 3(e) shows the itemsets of class B and D with their new support counts in the updated database. All the itemsets of class B are then moved to L' without any verification while the itemsets of class D are verified against the minimum support count of the updated data- base. The itemsets D and AC are the only itemsets from class D that are moved to L'. 5. Experimental Results Several experiments are designed to assess the perform-  DARM: Decremental Association Rules Mining Copyright © 2011 SciRes. JILSA 186 ance of the proposed algorithms and compare it with the performance of two other decremental algorithms: DUA and EDUA. These two algorithms are chosen because they are the most recent algorithms for updating associa- tion rules when some transactions are deleted from the database. The comparisons are based on the following metrics: run time, minimum support, original database size and decrement database size. In this section, the experiments of the DARM algo- rithm are reported. All the programs are implemented using C# and run on a PC with 1.8 GHz Intel Core 2 Duo processor and 1GB memory running on Windows XP Professional. In the experiments of data mining, it is common to use the notation [Tx-Iy-Dm-dn] to represent a dataset in which x is the mean size of a transaction, y is the mean size of maximal frequent itemsets, m is the number of transactions in the original database (in thou- sands) and n is the number of transactions in the decre- ment database (in thousands). The experiments carried out performed using the syn- thetic data used in the experimental results of the previ- ously mining algorithms introduced in [7-9,15,16]. Readers are referred to these papers for a detailed de- scription. In general, several different transaction data- bases are generated from a set of potentially frequent itemsets using the parameters mentioned in the notion [Tx-Iy-Dm-dn]. These transactions mimic the transac- tions in the retailing environment. The first experiment measures the relative run time of the DARM, DUA and EDUA algorithms. The three al- gorithms are tested against different datasets with mini- mum support thresholds varying from 0.1% to 1%. The results are shown in Figures 4-6 with original database sizes 50 k, 100 k and 150 k respectively. The size of the decrement database is equal to 20% of the transactions in the original database. It is clear from these figures that the DARM algorithm reduces the run time required to Figure 4. The relative performance of the decremental al- gorithms and the DARM Algorithm. Figure 5. The relative performance of the decremental al- gorithms and the DARM Algorithm. Figure 6. The relative performance of the decremental al- gorithms and the DARM Algorithm. perform the update operation than other two decremental algorithms. By examining these figures more carefully, it can be noticed that at low support thresholds, the performance difference among the three algorithms is significant while it decreases as the support thresholds increase. This is because at low support thresholds, large numbers of fre- quent itemsets are produced hence the performance dif- ferences among different algorithms are prominent. How- ever at high support thresholds, there is a restricted num- ber of frequent itemsets so the run time curves of differ- ent algorithms approach each other in this region. Also, if we examine these figures more carefully, we can notice sharper differences in the run times of EDUA at high support thresholds than other two algorithms. To under- stand this phenomenon, recall that EDUA generates the entire candidate itemsets of all sizes from the candidate 2-itemsets to reduce the number of database scans. How- ever, the DUA and the DARM algorithm are based on handling the frequent itemsets. Figure 7 shows the average speedup ratio achieved by  DARM: Decremental Association Rules Mining Copyright © 2011 SciRes. JILSA 187 the DARM algorithm with respect to DUA and EDUA algorithms. It can be seen that the average speed is 1.14 with the DUA and 1.62 with the EDUA. In the second experiment, the frequent itemsets gener- ated by each algorithm are verified to determine its ac- curacy. The accuracy is defined as the ratio of the number of frequent itemsets found by the algorithm against the number of frequent itemsets found by running Apriori on the updated database [7,8]. Table 3 shows the number of frequent itemsets generated by each algorithm. The ac- curacy of the DUA algorithm is 89.77%, 99.69% and 95.95% for the datasets 50 k, 100 k and 150 k respectively while the accuracy of the DARM algorithm and EDUA is equal to 100% for all datasets. The reason for the accuracy difference among these algorithms is in the way they handle the itemsets of class D. The DUA tries to find the class D itemsets by reducing the minimum support by which the mining is performed on the decrement database and by finding the frequent itemsets of a random set of transactions from the original database. However, this does not guarantee to find all itemsets of class D. For the EDUA, it depends on storing all candidate 2-itemsets and generating all the candidates of all sizes from them so no frequent itemset will be missed. For the DARM algorithm, only the semi-frequent itemsets are stored and this guar- antees finding all frequent itemsets without any miss. The next experiment is performed to find out how the size of decrement database affects the performance of the DARM algorithm. In this experiment, the size of original database is fixed while the size of the decrement database is changing. As shown in Figure 8, the results are very Figure 7. The speedup ratio. Table 3. Accuracy comparsion of the decremental algori- thms. Number of frequent itemsets generated Datasets DUA EDUA DARM Apriori T10-I4-D50-d10 13,404 14,931 14,931 14,931 T10-I4-D100-d20 27,377 27,461 27,461 27,461 T10-I4-D150-d30 22,470 23,419 23,419 23,419 Accuracy 89.77% to 99.69% 100% 100% encouraging. It shows that the DARM algorithm not only can work with small decrement, but also can work very well in case of large decrement. In general, the larger the decrement is, the longer it would take to do the update. Another similar experiment is done to know the influ- ence of changing the size of the original database. Figure 9 shows the scalability of the DARM algorithm with re- spect to changing the size of the original database. It shows that the proposed algorithm is adaptive to size increase and can be applied to very large databases. The last experiment evaluates the reduction in the total number of candidates generated by the DARM algorithm. In this experiment, the number of candidates generated by the DARM algorithm is counted and compared with the number of candidates generated by the other algo- rithms. The results are shown in Table 4. Note the sig- nificant reduction in total number of candidate itemsets compared to the other algorithms. The DARM algorithm achieves a reduction rate equal to 34.98% relative to the DUA and 47.73% relative to the EDUA. Figure 10 shows the candidate reduction rates of the DARM algorithm with respect to the DUA and EDUA algorithms. 6. Conclusions The maintenance of the frequent pattern is a challenging task in data mining and machine learning. In this paper, a Figure 8. Scalability with the number of transactions in db. Figure 9. Scalability with the number of transactions in db.  DARM: Decremental Association Rules Mining Copyright © 2011 SciRes. JILSA 188 Table 4. Comparing the number of candidates. Number of candidates generated Itemsets DUA EDUA DARM 1 2,427 2,000 1,611 2 33,459 37,801 20,530 3 24,433 30,852 15,877 4 19,147 23,341 12,667 5 11,431 15,203 7,890 6 5,335 9,612 3,838 7 1,965 2,757 1,409 8 543 1,002 377 9 100 359 67 10 9 36 6 Total 98,849 122,963 64,272 Figure 10. Candidate reduction. decremental association rules mining algorithm (DARM) is presented. The proposed algorithm studied the prob- lems of pattern maintenance when some transactions are removed from a large database. The main idea of the DARM algorithm is to store and maintain the itemsets that are not frequent at present but may be frequent after deleting a set of transactions. There- fore the processing cost of the original database can be reduced. The DARM algorithm does not require rescan- ning the original database and can determine the new frequent itemsets by scanning the decrement database only. Experiments have shown that the DARM algorithm not only attain highly accurate mining results, but also run significant faster than existing algorithms for updat- ing the frequent itemsets. The DARM algorithm outper- forms the DUA in terms of both run time and in the ac- curacy. REFERENCES [1] Z. Q. Zhou and C. I. Ezeife, “A Low-Scan Incremental Association Rule Maintenance Method,” Proceedings of the 14th Canadian Conference on Artificial Intelligence (AI 2001), Ottawa, 2001, pp. 26-35. [2] R. Kashef and Y. El-Sonbaty, “NBP: Negative Border with Partitioning Algorithm for Incremental Mining of Association Rules,” Proceedings of the International Conference on Intelligent Systems Design and Applica- tions (ISDA’04), Budapest, Hungary, August 2004. [3] G. Grahne and J. Zhu, “Fast Algorithms for Frequent Itemset Mining Using FP-Trees,” IEEE Transactions on Knowledge and Data Engineering, Vol. 17, No. 10, 2005, pp. 1347-1362. doi:10.1109/TKDE.2005.166 [4] R. C. Agarwal, C. C. Aggarwal and V. V. V. Prasad, “A Tree Projection Algorithm for Generation of Frequent Item Sets,” Parallel and Distributed Computing Journal, Vol. 61, No. 3, 2001, pp. 350-371. doi:10.1006/jpdc.2000.1693 [5] J. Han, J. Pei and Y. Yin, “Mining Frequent Patterns without Candidate Generation,” Proceedings of ACM- SIGMOD International Conference on Management of Data, Dallas, 2000, pp. 1-12. doi:10.1145/342009.335372 [6] A. Ceglar and J. F. Roddick, “Association Mining,” ACM Computing Surveys Journal, Vol. 38, No. 2, 2006, Article 5. [7] S. C. Zhang, J. L. Zhang and Z. Jin, “A Decremental Al- gorithm of Frequent Itemset Maintenance for Mining Updated Databases,” The Journal of Expert Systems with Applications, Vol. 36, No. 8, 2009, pp. 10890-10895. [8] S. C. Zhang, X. D. Wu, J. L. Zhang and C. Q. Zhang, “A Decremental Algorithm for Mining Dynamic Databases,” Proceedings of 7th International Conference on Data Warehousing and Knowledge Discovery (DaWak 2005), Copenhagen, 2005, pp. 305-314. [9] C. H. Lee, C. R. Lin and M. S. Chen, “Sliding-Window Filtering: An Efficient Algorithm for Incremental Min- ing,” Proceedings of 10th International Conference on Information and Knowledge Management, Atlanta, 2001, pp. 263-270. [10] D. W. Cheung, J. Han, V. T. Ng and C. Y. Wong, “Main- tenance of Discovered Association Rules in Large Data- bases: An Incremental Updating Technique,” Proceedings of 12th International Conference on Data Engineering, New Orleans, 1996, pp. 106-114. [11] N. F. Ayan, A. U. Tansel and E. Arkun, “An Efficient Algorithm to Update Large Itemsets with Early Pruning,” Proceedings of 5th ACM SIGKDD International Confer- ence on Knowledge Discovery and Data Mining, San Diego, 1999, pp. 287-291. [12] D. W. Cheung, S. D. Lee and B. Kao, “A General Incre- mental Technique for Maintaining Discovered Associa- tion Rules,” Proceedings of 5th International Conference on Database Systems for Advanced Applications, Mel- bourne, 1997, pp. 185-194. doi:10.1142/9789812819536_0020 [13] S. Thomas, S. Bodagala, K. Alsabti and S. Ranka, “An Efficient Algorithm for the Incremental Updating of As- sociation Rules in Large Database,” Proceedings of 3rd International Conference on Data Mining and Knowledge Discovery, Newport Beach, 1997, pp. 263-266. [14] C.-C. Chang, Y.-C. Li and J.-S. Lee, “An Efficient Algo- rithm for Incremental Mining of Association Rules,” Proceedings of 15th International IEEE Workshop on Research Issues in Data Engineering: Stream Data Min-  DARM: Decremental Association Rules Mining Copyright © 2011 SciRes. JILSA 189 ing and Applications (RIDE-SDMA’05), Tokyo, 2005. [15] C. Xu and J. Wang, “An Efficient Incremental Algorithm for Frequent Itemsets Mining in Distorted Databases with Granular Computing,” Proceedings of 2006 IEEE/WIC/ ACM International Conference on Web Intelligence, Hong Kong, 2006, pp. 913-918. [16] C.-H. Chang and S.-H. Yang, “Enhancing SWF for In- cremental Association Mining by Itemset Maintenance,” Proceedings of 7th Pacific-Asia Conference on Advances in Knowledge Discovery and Data Mining (PAKDD 2003), Seoul, 2003, pp. 301-312. [17] S. C. Zhang, J. L. Zhang and C. Q. Zhang, “EDUA: An Efficient Algorithm for Dynamic Database Mining,” In- formation Sciences Journal, Vol. 177, No. 13, 2007, pp. 2756-2767. doi:10.1016/j.ins.2007.01.034 [18] W. Lian, D. W. Cheung and S. M. Yiu, “Maintenance of Maximal Frequent Itemsets in Large Databases,” Pro- ceedings of 2007 ACM Symposium on Applied Computing (SAC’07), Seoul, 2007, pp. 388-392. [19] M. L. Feng, G. Z. Dong, J. Y. Li, Y.-P. Tan and L. Wong, “Pattern Space Maintenance for Data Updates and Inter- active Mining,” Computational Intelligence, Vol. 26, No. 3, 2010, pp. 282-316. doi:10.1111/j.1467-8640.2010.00360.x |