Journal of Applied Mathematics and Physics

Vol.04 No.04(2016), Article ID:65442,6 pages

10.4236/jamp.2016.44067

A Note on Parameterized Preconditioned Method for Singular Saddle Point Problems

Yueyan Lv, Naimin Zhang*

School of Mathematics and Information Science, Wenzhou University, Wenzhou, China

Received 3 December 2015; accepted 6 April 2016; published 13 April 2016

ABSTRACT

Recently, some authors (Li, Yang and Wu, 2014) studied the parameterized preconditioned HSS (PPHSS) method for solving saddle point problems. In this short note, we further discuss the PPHSS method for solving singular saddle point problems. We prove the semi-convergence of the PPHSS method under some conditions. Numerical experiments are given to illustrate the efficiency of the method with appropriate parameters.

Keywords:

Singular Saddle Point Problems, Hermitian and Skew-Hermitian Splitting, Preconditioning, Iteration Methods, Semi-Convergence

1. Introduction

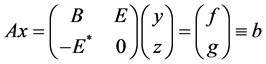

We consider the iterative solution of the following linear system:

(1)

(1)

where  is Hermitian positive definite,

is Hermitian positive definite,  is rank-deficient, i.e.,

is rank-deficient, i.e.,  ,

,  denotes the conjugate transpose of E,

denotes the conjugate transpose of E,  and

and . Linear systems of the form (1) are called saddle point problems. They arise in many application areas, including computational fluid dynamics, constrained optimization and weighted least-squares problem, see, e.g., [1] [2].

. Linear systems of the form (1) are called saddle point problems. They arise in many application areas, including computational fluid dynamics, constrained optimization and weighted least-squares problem, see, e.g., [1] [2].

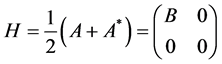

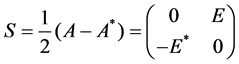

We review the Hermitian and skew-Hermitian splitting (HSS) [3] of coefficient matrix A:

where

,

, .

.

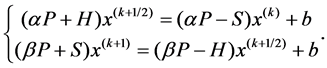

The PPHSS Iteration Method ([4]): Denote . Let

. Let  be an arbitrary initial guess, compute

be an arbitrary initial guess, compute  for

for  by the following iteration scheme until

by the following iteration scheme until  converges,

converges,

(2)

(2)

where ,

,

matrix C is Hermitian positive definite.

Evidently, the iteration scheme (2) of PPHSS method can be rewritten as

here,

with

Evidently, the matrix

where

Owing to the similarity of the matrices

2. The Semi-Convergence of the PPHSS Method

As the coefficient matrix A is singular, then the iteration matrix T has eigenvalue 1, and the spectral radius of matrix T cannot be small than 1. For the iteration matrix T of the singular linear systems, we introduce its pseudo-spectral radius

where

For a matrix

Lemma 2.1 ([6]). The iterative method (4) is semi-convergent, if and only if,

Lemma 2.2 ([7]).

Theorem 2.3. Assume that B and C be Hermitian positive definite, E be of rank-deficient. Then

Proof. The proof is similar to the proof of Lemma 2.8 in [8], here is omitted.

Lemma 2.4 ([4]). Let B and C be Hermitian positive definite, E be of rank-deficient. Assume that

Let

Lemma 2.5. The eigenvalues of the iteration matrix

Proof. Notice the similarity of matrices

Lemma 2.6. If

Proof. If

here,

If

Lemma 2.7 ([10]). Both roots of the real quadratic equation are less than one in modulus if and only if

Theorem 2.8. If the iteration parameters

then, the pseudo-spectral radius of the PPHSS method satisfies

Proof. Using condition (9), it follows that

and

By Lemma 2.7, for the eigenvalues

If

Theorem 2.9. Let

and correspondingly,

Proof. According to Lemma 2.5 and Lemma 2.6, we know that the eigenvalues of the iteration matrix

If

3. Numerical Results

In this section, we use an example to demonstrate the numerical results of the PPHSS method as a solver by comparing its iteration steps (IT), elapsed CPU time in seconds (CPU) and relative residual error (RES) with other methods. The iteration is terminated once the current iterate satisfies

Example 3.1 ([11]). Consider the saddle point problem (1), with the following block form of coefficient matrix:

where symbol

the right-hand side vector b is chosen by

For the Example 3.1, we choose

clear to see that the pseudo-spectral radius of the PPHSS and the SPPHSS methods are much smaller than of the PHSS method when the optimal parameters are employed. In Table 2, we list numerical results with respect to

Table 1. The optimal iteration parameters and pseudo-spectral radius.

Table 2. IT, CPU and RES for

IT, CPU and RES of the texting methods with different problem sizes l. We see that the PPHSS and SPPHSS methods with appropriate parameters always outperforms the PHSS method both as a solver and as a preconditioner for GMRES in iteration steps and CPU times. Notice

where

Cite this paper

Yueyan Lv,Naimin Zhang, (2016) A Note on Parameterized Preconditioned Method for Singular Saddle Point Problems. Journal of Applied Mathematics and Physics,04,608-613. doi: 10.4236/jamp.2016.44067

References

- 1. Elman, H.C., Ramage, A. and Silvester, D.J. (2007) Algorithm 866, IFISS, a MatLab Toolbox for Modelling Imcompressible Flow. ACM Trans. Math. Softw., 33, 1-18.

- 2. Bjorck, A. (1996) Numerical Methods for Least Squares Problems. SIAM, Philadelphia. http://dx.doi.org/10.1137/1.9781611971484

- 3. Bai, Z.Z., Golub, G.H. and Ng, M.K. (2003) Hermitian and Skew-Hermitian Splitting Methods for Non-Hermitian Positive Definite Linear Systems. SIAM J. Matrix Anal. Appl., 24, 603-626. http://dx.doi.org/10.1137/S0895479801395458

- 4. Li, X., Yang, A.L. and Wu, Y.J. (2014) Parameterized Preconditioned Hermitian and Skew-Hermitian Splitting Iteration Method for Saddle-Point Problems. Int. J. Comput. Math., 91, 1224-1238. http://dx.doi.org/10.1080/00207160.2013.829216

- 5. Chao, Z. and Zhang, N.M. (2014) A Generalized Preconditioned HSS Method for Singular Saddle Point Problems. Numer. Algorithms, 66, 203-221. http://dx.doi.org/10.1007/s11075-013-9730-y

- 6. Berman, A. and Plemmons, R. (1979) Nonnegative Matrices in Mathematical Science. Academic Press, New York.

- 7. Zhang, N.M. and Wei, Y.M. (2010) On the Convergence of General Stationary Iterative Methods for Range-Hermitian Singular Linear Systems. Numer. Linear Algebra Appl., 17, 139-154. http://dx.doi.org/10.1002/nla.663

- 8. Chen, Y. and Zhang, N.M. (2014) A Note on the Generalization of Parameterized Inexact Uzawa Method for Singular Saddle Point Problems. Appl. Math. Comput., 325, 318-322. http://dx.doi.org/10.1016/j.amc.2014.02.089

- 9. Golub, G.H. and Van Loan, C.F. (1996) Matrix Computions. 3rd Edition, The Johns Hopkins University Press, Baltimore.

- 10. Young, D.M. (1971) Iterative Solution of Large Linear Systems. Academic Press, New York.

- 11. Zheng, B., Bai, Z.Z. and Yang, X. (2009) On Semi-Convergence of Parameterized Uzawa Methods for Singular Saddle Point Problems. Linear Algebra Appl., 431, 808-817. http://dx.doi.org/10.1016/j.laa.2009.03.033

NOTES

*Corresponding author. This author is supported by National Natural Science Foundation of China under grant No. 61572018 and Zhejiang Provincial Natural Science Foundation of China under Grant No. LY15A010016.