Journal of Software Engineering and Applications

Vol. 6 No. 5 (2013) , Article ID: 31613 , 10 pages DOI:10.4236/jsea.2013.65033

Source-to-Source Refactoring and Elimination of Global Variables in C Programs

![]()

Electrical Engineering and Computer Science, University of Kansas, Lawrence, USA.

Email: prasadk@ku.edu

Copyright © 2013 Hemaiyer Sankaranarayanan, Prasad A. Kulkarni. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Received February 15th, 2013; revised March 17th, 2013; accepted March 25th, 2013

Keywords: Global Variable; Program Refactoring; Compiler Transformations

ABSTRACT

A global variable in C/C++ is one that is declared outside a function, and whose scope extends the lifetime of the entire program. Global variables cause problems for program dependability, maintainability, extensibility, verification, and thread-safety. However, global variables can also make coding more convenient and improve program performance. We have found the use of global variables to remain unabated and extensive in real-world software. In this paper we present a source-to-source refactoring tool to automatically detect and localize global variables in a program. We implement a compiler based transformation to find the best location to redefine each global variable as a local. For each global, our algorithm initializes the corresponding new local variable, passes it as an argument to necessary functions, and updates the source lines that used the global to now instead use the corresponding local or argument. We also characterize the use of global variables in standard benchmark programs. We study the effect of our transformation on static program properties, such as change in the number of function arguments and program state visibility. Additionally, we quantify dynamic program features, including memory and runtime performance, before and after our localizing transformation.

1. Introduction

A Global variable is an external variable in C and C++ that is declared outside a function, and is in-scope and visible throughout the program. Thus, global variables are accessible and can be set and used in any program function [1]. The use of global variables has been observed to cause several problems. First, researchers have argued that global (and other non-local) variables increase the mental effort necessary to form an abstraction from the specific actions of a program to the effects of those actions, making it more difficult to comprehend a program that uses global variables [2]. In other words, source code is the easiest way to understand when we limit the scope of variables. Second, developers have found it more difficult to test and verify software that employs global variables. Use of globals makes it difficult (for humans and automatic tools) to determine the state being used and modified by a function, since globals do not need to be explicitly passed and returned from the function. Similarly, formally verifying code that uses global variables typically requires stating and proving invariant properties, which make the verification task more arduous [3]. For such reasons, formally-defined SPARK programming language requires the programmer to annotate all uses of global variables [4]. Third, global variables have also been implicated in increasing program dependence, which measures the influence of one program component on another [5]. Additionally, global variables have been observed to produce dependence clusters, where a set of program statements are all dependent on one another. Low program dependence and a lack of dependence clusters is found to benefit program comprehension [6,7] as well as program maintenance and reengineering [8,9]. Fourth, the use of global variables causes the program to be non-thread-safe [10,11]. This is because global variables are allocated in the data region of the process address space, providing only one copy of these variables for all program threads. Fifth, global variables typically violate the principle of least privileges. This philosophy says that if the accessibility of a program resource, such as a function or variable, is restricted to just those portions of the program where such accessibility is absolutely required, then the programmer is less likely to introduce errors into the code. Sixth, global variables can create mutual dependencies and untracked interactions between different program components causing an irregularity, called action at a distance in software engineering. This issue arises when operations in one part of the program affect behavior in some other program parts. On account of these limitations, the use of global variables in generally discouraged in programming. Regardless of the problems caused by global variables, they are still extensively used in current real-world software systems. Their use can be attributed to two (real or perceived) benefits of using global variables: 1) Efficiency—Researchers have shown that employing global variables can boost program efficiency and lower (stack) space usage by reducing or eliminating the overhead of argument passing and returning values during function calls/returns [12]. However, the globalization transformations to achieve this effect can generally be performed automatically by the compiler during the source-to-binary generation without affecting the high level source code. 2) Convenience—It may also be more convenient for developers to hold program state that is manipulated and consumed in multiple dislocated programs regions in global variables. In such cases of dislocated use of program variables, it may be difficult for the programmer to determine the best place for declaring the local variable and find the best path to make it available to all functions setting or using it. Such use of globals is especially attractive for developers updating unfamiliar code regions in large programs. However, given the harmful effects of global variables, it will be more desirable if we could provide developers the convenience of using global variables, but automatically localize them to preserve the dependability, understandability, verifiability, and maintainability of the source code program.

In this work, we develop and implement a compilerbased algorithm to automatically find and eliminate global variables in a program by transforming them into local variables. Our algorithm automatically finds the closest dominator function to localize each global, and then passes the corresponding local variable as a parameter to every function using the original global. Function prototypes are appropriately modified to reflect the new parameters for each function. At the same time, each access of the global variable is updated to instead modify or use the corresponding local variable or function argument. In this paper, we also design several experiments to measure the effect of this transformation on the space and time requirements of the modified programs. Thus, we make the following contributions in this work:

1) To our knowledge, we construct the first source-tosource transformation tool to localize global variables in C programs.

2) We present detailed statistics and observations on the use of global variables in existing benchmarks.

3) We measure and quantify the effect of this transformation on the number of function arguments passed, along with its space and performance (time) overheads.

2. Related Works

In this section we describe previous research efforts to localize and manage global variables. Many popular programming language textbooks [13] and individual programming practitioners [14] have derided and discouraged the use of global variables. At the same time, acknowledging the necessity and/or convenience of employing global variables/state, language designers have developed alternative programming constructs to provide some of the benefits while controlling many limitations of global variables. Arguably, one of the most wellknown alternatives to some uses of global variables is the static specifier in C/C++ that limits the scope of global variables to individual functions or files [13]. Another construct that programmers often use in place of global variables is the singleton design pattern that can encapsulate global state by restricting the instantiation of a class to a single object [15]. However, the use of the singleton pattern can result in many of the same problems with testing and code maintenance that are generally associated with global variables [16].

To our knowledge, there exist only a few attempts to automatically detect and eliminate global variables in high level programs. Sward and Chamillard developed a tool to identify global variables and add them as locals to the parameter list of functions in Ada programs [17]. However, apart from operating only on Ada programs, this work does not describe their implementation and does not provide any static or runtime results. Yang et al. proposed and implemented a “lifting” transformation to move global variables into main’s local scope [18]. However, lifting was designed to only work with their other “flattening” transformation that absorbs a function into its caller without making a new copy of the function for each call-site. This earlier research aimed to place the stack allocated variables in static memory to minimize RAM usage for embedded systems applications, and did not have to deal with most of the issues encountered in a more general technique to eliminate global variables.

More related to our current research are works that attempt to automatically eliminating global variables to generate thread-safe programs. Zheng et al. outlined a compiler-based approach to eliminate global variables from multi-threaded Fortran MPI (Message-Passing Interface) programs [11]. Their transformation moves all globals into a single structure, which is then passed as an argument to all functions. Thus, unlike our implementation, their transformation does not target or affect code maintainability. Additionally, this previous work also did not collect statistics on the use of global variables and the effect of the transformation on code maintainability and performance metrics. Smith and Kulkarni implemented a similar algorithm to transform global variables into locals to make “C” programs thread-safe [10]. However, this work was not targeted at code dependability and did not implement a source to source transformation. The ultimate goal of our research is to develop a new code refactoring tool that can flexibly reassign storage between local and global variables. Existing code refactoring tools are typically only used to enhance non-functional aspects of the source code, including program maintainability [19] and extensibility [20]. Examples of important code refactorings for C program maintainability include renaming variables and functions, dividing code blocks into smaller chunks, and adding comments to the source codes [21]. None of the existing refactoring tools provide an ability as yet to transform global/local variables, as we perform in this work.

3. Localizing Global Variables

Even moderate-sized programs in C/C++ often contain many global variables. Additionally, these global variables may be scattered throughout the code, which make it highly tedious and error-prone to manually detect and refactor the code to remove these variables. Therefore, our approach employs an automatic compiler-driven algorithm to find and eliminate global variables. Our algorithm works by converting the global variables to locals, and then passing them as arguments to all the functions where they are needed. This tool provides command-line options that enable the user to selectively eliminate all or some particular global variables and instantly see the changes made to the source code files. In this section we provide more details on our compiler-based framework and transformation algorithm.

Transformation Algorithm for Localizing Global Variables

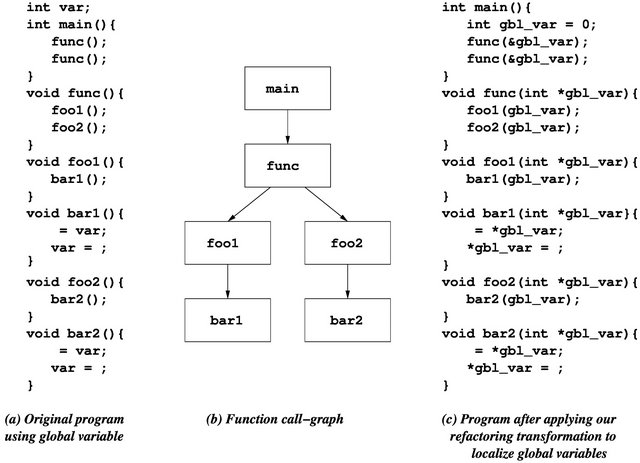

Our compiler based transformation performs two passes to localize global variables. In the first pass, we generate the call-graph, detect global variables, and collect other information regarding the use of global variables in the program. The second pass uses this information to move global variables into the local scope of the appropriate function, pass these new local variables to other functions that use the original globals, and update function headers and the variable names in the source statements accessing each global variable to instead use the new local/argument. We use the small example program in Figure 1(a) to explain our transformation algorithm in more detail. The syntax “=var” in Figure 1(a) indicates

Figure 1. Example to illustrate the program transformation to localize global variables.

a use of the variable var, while “var =” is a set of the variable var. The algorithm proceeds as follows.

• In the first step we invoke the compiler to compute the static call-graph of the program. Figure 1(b) shows the call-graph that will be generated for the example program in Figure 1(a).

• The compiler then detects all global variables in the program, as well as the functions that set and/or use each global variable. We also record the data type and initialization value of each global variable.

• Next, we automatically determine the best function to localize each global variable. While the root program function, main(), can act as the default localizing function for all global variables declared in application programs, we attempt to place each global as close as possible to the set of functions that access that variable in order to minimize the argument passing overheads. We employ our implementation of the Lengauer-Tarjan algorithm [22] to find the immediate dominator of each node (function) in the call-graph. A dominator for a control flow graph node n is defined as a node d such that every path from the entry node to n must go through d [23]. Since one global variable can be used in many functions, we further extend the Lengauer-Tarjan algorithm to find the closest dominator function for the set of functions that use a particular global variable. This closest dominator is determined by locating the first common dominator of all the functions that use that global. Thus, as an example, the global variable var that is used in two functions, bar1() and bar2(), in Figure 1(a) has the function func() as its common dominator.

• Simply localizing each global variable in its closest common dominator function may compromise the original program semantics, if this dominator function is called multiple times. In such cases, the corresponding localized variable will be re-declared and re-initialized on each invocation of the dominator function, which differs from the single initialization semantics of the original global. For instance, in the example program in Figure 1(a) the closest dominator function func() is called multiple times from main(), and therefore may not be a semantically legal choice to locate the global variable var. Consequently, for each global variable, we traverse the dominator tree upwards starting from its closest common dominator to main() to find the first legal dominator that is only invoked once by the program.

• Next, our transformation moves each global variable as a local variable to its closest legal dominator function. The transformation also adds new instructions to this function to correctly initialize the new local variable. In Figure 1(a), the global variable var is moved to the function main() and initialized as the new local variable gbl_var.

• The next step involves finding all the functions in the call-graph between the legal dominator and the functions where the global is used. We call this set of functions as the global variable’s frontier. The local copy for each global variable needs to be passed by reference to each of its frontier functions to reach their appropriate end locations where they are used. This requires modifying the calling interface of each frontier function. Thus, in program 1(a), the local variable gbl_var is passed by reference to all its frontier functions, namely func(), foo1() and foo2().

• Our transformation then modifies the calling interface of the end functions for each global variable to get the additional arguments corresponding to the local variants of global variables. Thus, the calling interfaces of functions bar1() and bar2() are updated to accept the address of the local variable gbl_var as an argument.

• Finally, our tool automatically updates every use of each global variable in the program statements to instead use its corresponding local variants. Thus, we can see all sets/uses of the global variable var replaced by the function argument gbl_var in the function bodies of bar1() and bar2().

Thus, our algorithm to eliminate global variables automatically transforms the program in Figure 1(a) to the program in Figure 1(c). Our tool has the ability to either transform all possible global variables in the program, or to selectively apply the transformation to individual globals that are specified by the user.

4. Compiler and Benchmark Framework

We have implemented our algorithm to localize global variables as a source-to-source transformation using the Clang compiler framework. In this section we describe our compiler framework, existing framework limitations, and present the set of benchmark programs.

4.1. Compiler Framework and Limitations

We use the modern and popular Clang/LLVM [24,25] compiler for this work. Clang is a modern C/C++/Objective-C frontend for LLVM that provides fast code transformation and useful error detection and handling ability. Clang also exposes an extensive library of functions that can be used to build tools to parse and transform source code.

Clang/LLVM is a highly popular and heavily adopted compiler framework. However, the Clang frontend is still maturing and some less common compiler features/algorithms are not yet implemented in this framework. Such deficiencies impose a few restrictions on our current implementation.

The first limitation is on account of Clang’s failure to generate precise call-graphs in the presence of function pointers. We circumvent this problem by supplementing the compiler-generated static call graph with runtime profiling-based information to map indirect function call-sites with their targets for each benchmark-input pair. Our framework for call-graph generation is illustrated in Figure 2. We modified GCC (version 4.5.2) to instrument each source file with additional instructions that output the (caller à callee) function relationships at every indirect call on program execution. This supplemental indirect function call information is used to complete the static call-graph, when necessary. We note that the use of function pointers is not a limitation of our general technique, since precise function pointer analysis and call-graph construction has been shown to be feasible for most programs in earlier studies [26].

Another related limitation is Clang’s failure to correctly deal with variable aliasing in all cases. Aliasing occurs when a data location in memory can be accessed through different symbolic names. Our transformation tool is able to detect simple aliasing cases when a global is passed as a parameter to another function. However, we do not yet handle more complex aliasing cases in the source codes.

Figure 2. Framework to obtain precise call-graph information for our analysis experiments.

We emphasize that none of these shortcomings are fundamental restrictions on the algorithm, and will be resolved by providing better compiler support. Extending Clang to provide such support is part of our future work.

4.2. Benchmark Suite

We have collected a rich and extensive set of benchmark programs to analyze the use of global variables in existing programs and validate the behavior of our transformation tool to eliminate global variables. Our benchmark set includes 14 benchmarks from the MiBench suite [27] and five benchmarks from SPEC CPU CINT2006 benchmark suite [28]. The MiBench benchmarks include popular C applications targeting specific areas of the embedded market. The standard SPEC suite allows us to experiment with larger and more complex general-purpose applications. The following MiBench benchmarks were analyzed but not included in our experimental set since they do not contain any read/write global variables: basicmath, crc32, fft, patricia, qsort, rijndael, sha, and susan. Additionally, rsynth from MiBench was not included as it produces no traceable output to verify the correctness of our transformation.

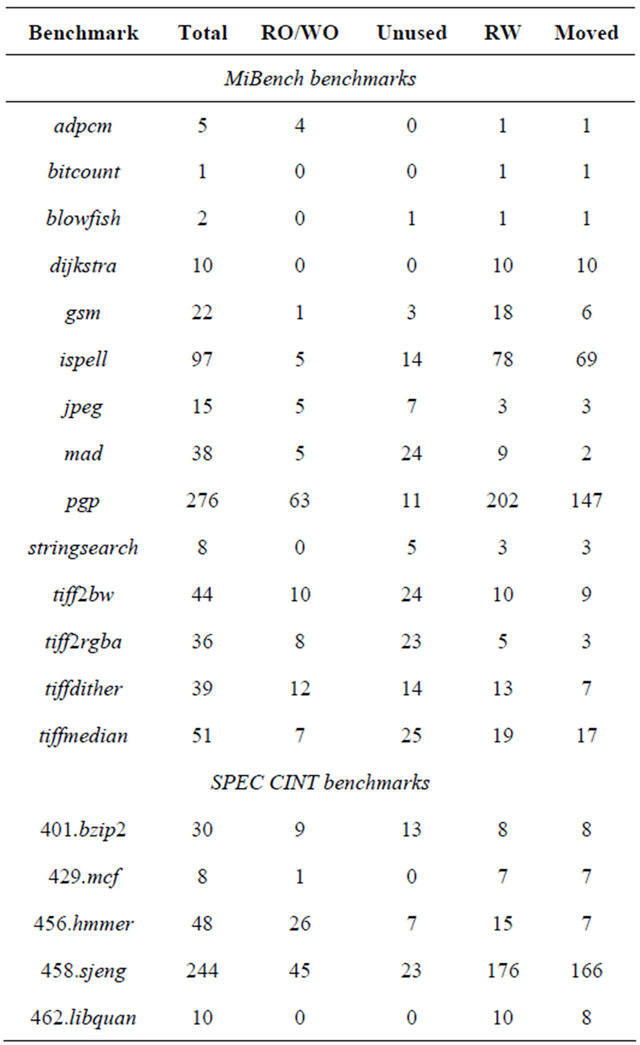

Table 1 shows the static characteristics of global variables in our selected benchmark programs. For each benchmark listed in the first column, the remaining columns successively show the total number of global variables declared in the program (total), the number of read-only or write-only global variables (RO/WO), the number of unused global variables (Unused), and the number of globals that are both read as well as written by the program (RW). Our transformation algorithm only considers the variables in the RW category as potential candidates from moving as local variables.

The final column in Table 1 shows the number of RW global variables that were successfully localized by our transformation algorithm. While our tool is able to localize most global variables, it fails in a small number of cases. We have categorized these failed cases into three primary sets: 1) Global variables used in calls to the sizeof function: After our transformation these calls fail to provide the correct size when using the corresponding function parameter pointers that are passed via reference. 2) Global variables used in functions called indirectly: We do not yet update function pointer declarations. 3) Miscellaneous: Global variables that cause the compiler to generate incorrect code, if transformed. We found that most of the failed cases in the “miscellaneous” category occur due to the imprecise alias analysis performed by the Clang compiler. Please note that global variables belonging to the first two sets are automatically detected and bypassed by our tool, with a message sent to the user. We expect that the future implementation of a more precise alias analysis algorithm in the underlying compiler

Table 1. Static number and type of global variables.

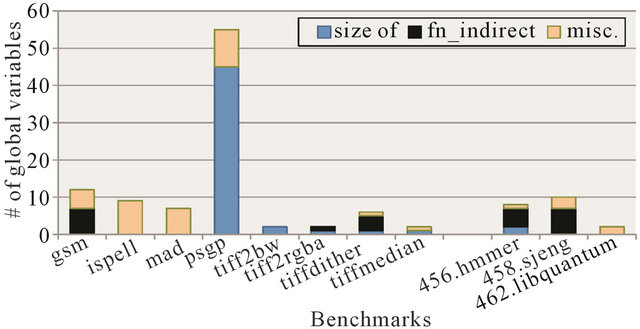

will enable our tool to automatically detect and correctly handle global variables in the third category. Figure 3 plots the number of failed RW global variables in each of these three categories for benchmarks that contain at least one failed RW global variable.

4.3. Properties of Global Variables

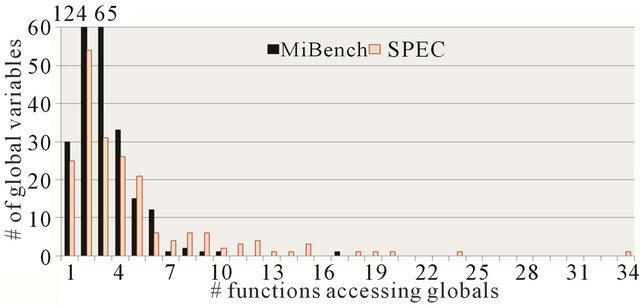

One typical use of global variables is as a convenience feature when particular program state is set or accessed in multiple program locations, and it is difficult to determine the best place to declare the variable and pass it as an argument to all program regions that need it. Global variables are also sometimes used to improve program efficiency by reducing the overhead of argument passing. Figure 4 plots the number of functions that use/set each global variable. For example, the first set of bars in Figure 4 shows that 30 global variables in the MiBench benchmarks and 25 global variables in our set of SPEC benchmarks are only accessed by one function in the program. We uniformly accumulate all global variables

Figure 3. Categories and number of global variables that our tool fails to localize for our benchmarks.

Figure 4. Number of functions accessing globals.

from each of our benchmark suites for this plot. Thus, most global variables are only used in a small number of functions. While this usage pattern is counter-intuitive, we reason that such usage trends indicate either poor programming practices or scenarios where the developer may not be comfortable with a large program code base. We believe that our automatic source-to-source transformation tool to localize globals will be very useful to resolve such improper uses of global variables.

5. Experimental Results

In this section we quantify the static and dynamic properties of our transformation to eliminate global variables. Our experiments employ the set of standard benchmark programs described in Section 4 to determine properties regarding the use of global variables in typical C programs, and static (source code visible) and dynamic (performance) effects of our localizing transformation.

5.1. Static Characteristics of Our Transformation Algorithm

Our transformation to eliminate global variables can affect many static aspects of the high-level program. In this section we analyze some effects of our transformation on static program properties. Our experiments in this section use the algorithm described in Section 3 to localize all the global variables in the Moved column of Table 1.

5.1.1. Effect on Number of Function Arguments

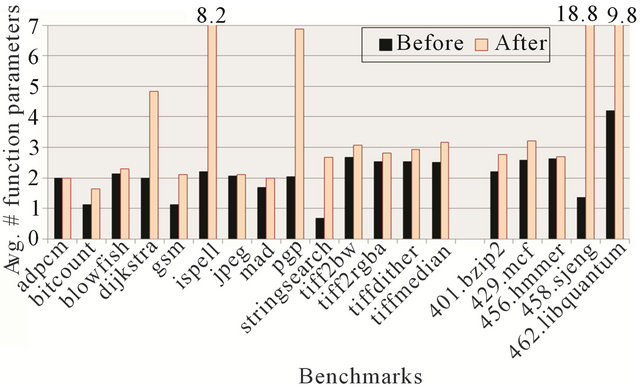

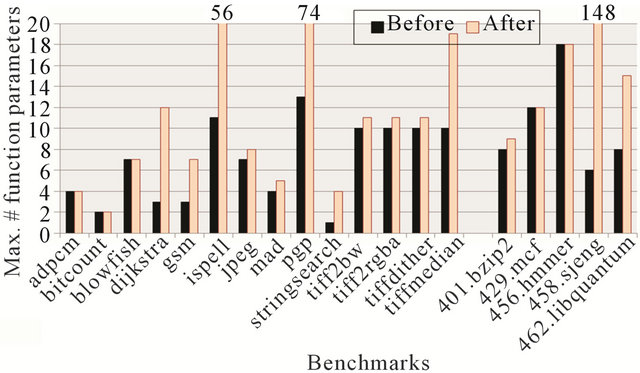

After localizing the global variables, our algorithm makes their state available to all functions that set/use the original global variable. We make the new local variable accessible by passing it as an argument to all functions that need it. This scheme adds additional parameters to several function declarations in the transformed program. Figures 5 and 6 respectively plot the average and maximum number of function parameters over all the functions in each of our benchmark programs.

Thus, we can see that the average and maximum number of function arguments is not significantly affected for most of the benchmark programs, although this number increases substantially for a few programs. On average, we find that the average number of function arguments increases from 1.95 to 3.33 for MiBench benchmarks and from 2.59 to 7.45 for SPEC programs. Similarly, the maximum number of function arguments increase, on average, from 6.78 to 16.50 for MiBench programs and from 10.4 to 40.4 for SPEC benchmarks. An important and desirable side-effect of our transformation is that it makes the declarations of all variables used/set in any function explicit in each function header. This property is particularly important both from the aspects of program maintainability and verifiability. Unfortunately, passing additional function arguments can

Figure 5. Average number of function parameters before and after applying our localizing transformation to eliminate global variables.

Figure 6. Maximum number of function parameters before and after applying our localizing transformation to eliminate global variables.

have an adverse effect on program efficiency. We explore the dynamic performance properties of our transformations in Section 5.2.

5.1.2. Number of Frontier Functions

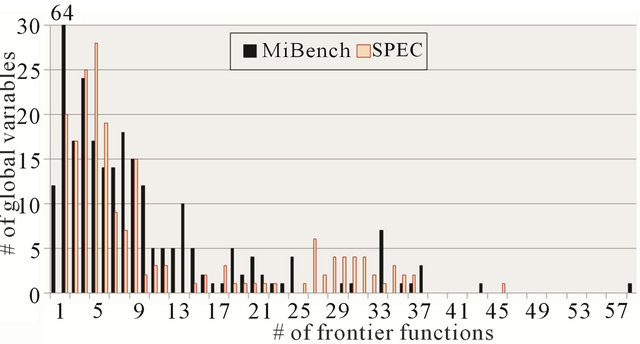

In order to make each new local variable available in all the functions that used/set the corresponding global variable in the original program, we may need to pass the local as an argument to intermediate (or frontier) functions that do not themselves use the local variable apart from sending them to other functions (functions foo1() and foo2() in Figure 1). Figure 7 presents the number of frontier functions for every transformed variable. The first set of bars in Figure 7 reveal that 12 of the new local variables in the MiBench benchmarks and no new local variable in the SPEC benchmarks have zero frontier functions. Thus, we can see that most local variables have only a small number of frontier functions. This observation shows that many global variables are used in functions that are located close to each other in the static program call-graph. However, some globals are used in functions that are considerably dislocated in the program call-graph. Thus, at the other extreme, we find that there is one function in the MiBench benchmarks that has 58 frontier functions and another in SPEC with 45 frontier functions respectively. Global variables employed in such dislocated call-graph functions will likely require more user effort to manually eliminate, and also seem to be more sensible scenarios for the developer to use global variables. By automatically handling such scenarios, our tool allows the programmer the convenience of using global variables in difficult situations, but eliminates them later to satisfy software engineering goals.

5.1.3. Effect on Program State Visibility

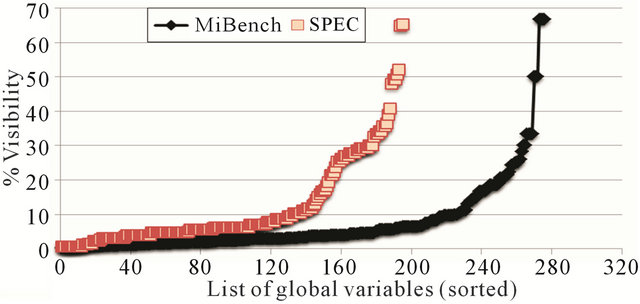

Global variables are visible and accessible to all functions in the program. It is often argued that such global visibility makes it more difficult for automatic program verification and maintainability. One goal of our localizing transformation is to reduce the visibility of all variables to only the program regions where they are needed

Figure 7. Number of frontier functions needed for the transformed local variables.

to assist verification and maintainability tasks. Figure 8 plots the percentage visibility of each transformed local variable as a ratio of the number of functions where the corresponding program state is visible to the total number of functions in the program. There is a point-plot for each transformed variable in Figure 8, sorted by its percentage visibility over all MiBench and SPEC benchmarks. Note that global variables are visible throughout (100%) the program. Thus, this figure shows that, after transformation, visibility is drastically reduced for most program state that was originally held in global variables. For example over 81% of the (original) global variables in MiBench programs and 68% of variables in SPEC benchmarks are visible in less than 10% of their respective program after the transformation.

5.2. Dynamic Characteristics of Our Transformation Algorithm

The transformation algorithm to eliminate global variables can have the following effects on memory consumption and program performance.

• Localizing global variables will move them out of the data region to the respective function activation records (or the stack region) of the process address space. This movement may reduce the size of the data region, but will supplement this reduction with a corresponding increase in the size of the stack.

• Each localized variable may need to be passed to functions that access it. This operation may increase the function call overhead, as well as increase the size of the function activation records (stack).

• Global variables are initialized statically or implicitly by the operating system. After localization, the corresponding locals will need to be explicitly initialized in the program. This initialization may be a source of additional overhead at runtime.

In this section we present results that quantify the memory space and runtime performance of the program before and after our transformation. For these experiments all our benchmark programs were compiled with GCC (version 4.5.2) using the “-O2” optimization flag

Figure 8. Percentage reduction in visibility of transformed variables compared to globals visible throughout the program.

for the x86 32-bit platform running the Linux operating system. We also built a simple GCC-based instrumentation framework to measure the maximum stack space requirement and dynamic instruction counts for each benchmark. This framework is described in the next section. The MiBench and SPEC benchmarks were run with their small and test inputs respectively. The program outputs with and without our transformations were compared to validate the correctness of our tool.

5.2.1. GCC-Based Instrumentation Framework

We updated the GCC compiler to instrument the program during code generation. Our instrumentations can generate two types of profiles at program runtime. 1) To output the stack pointer register on every function entry, after it sets up its activation record. The difference between the minimum and maximum stack pointer values gives us the maximum extent of the stack for that particular program run. 2) Our other set of instrumentations is added to the start of every basic block to produce a linear trace of the blocks reached during execution. We also modified GCC to generate a file during compilation that contains a list of all program basic blocks along with their set of instructions. The knowledge of the blocks that are reached at runtime and the number of instructions in each block allow us to compute the dynamic instruction counts for a particular program run. Since our instrumentations only modify the compiled code, we can only count the dynamic instructions executed in the application program and not in the library functions. Dynamic instruction counts are a good supplement to program run-times since they are deterministic and not affected by any hardware and operating system effects.

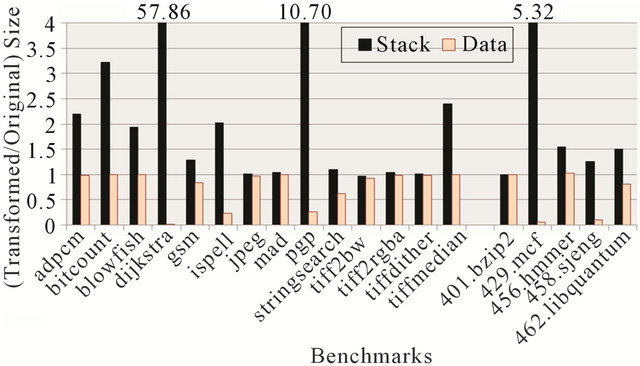

5.2.2. Effect on Maximum Stack and Data Size

For most existing systems, global variables reside in the data region of the process address space, while local variables and function arguments reside in the function activation record on the process stack. Therefore, our transformations to convert global variables into locals (that are passed around as additional function arguments) have the potential to reduce the data space and expanding the process stack. We use our GCC based stackpointer instrumentation to gather the maximum required stack space (in bytes) for each benchmark run with its standard input. We also employ the Linux size tool to determine the space occupied by the data region of each program. Figure 9 plots the ratio of the total data and maximum stack requirement for each of our benchmark programs before and after the transformation to eliminate global variables.

Thus, we can see that our transformation increases the stack requirement while reducing the data space size for most programs. While some benchmarks, including dijkstra,

Figure 9. Ratio of the total data and maximum stack area consumed by each process at runtime before and after our localizing transformation.

pgp and 429.mcf, may experience a large increase in maximum stack usage, many of these also notice a correlating reduction in the data region size. At the same time, note that while a program only maintains one copy of any global variable, multiple copies of the corresponding local variable/argument may reside simultaneously on the stack for the transformed program. Therefore, there also exist programs, such as adpcm, bitcount, and blowfish, that show no discernible reduction in data size, but still encounter significant increases in maximum stack space use.

5.2.3. Effect on Dynamic Performance

In this section we present experimental results that quantify the effect of eliminating global variables on program performance. We employ two metrics for performance estimation. First, we use the GCC instrumentation framework to measure each program’s dynamic instruction count before and after applying our transformation. Dynamic instruction counts can provide a good and deterministic estimation of actual program performance, but cannot account for differing instruction latencies, and variations due to memory, cache, and other micro-architectural effects. Second, we execute each benchmark natively on a x86-Linux machine to gather actual program run-time. Each benchmark is run in isolation to prevent interference from other user programs. To account for inherent timing variations during the benchmark runs, all the performance results in this paper report the average over 15 runs for each benchmark.

Figure 10 shows the results of these performance experiments. For the actual program run-times, we employ a statistically rigorous evaluation methodology, and only present results that show a statistically significant performance difference (with a 95% confidence interval) with and without our transformation. Thus, we can see that the localizing transformation does not produce a large performance overhead for most benchmarks. The dynamic instruction counts for most benchmarks with a

Figure 10. Ratio of dynamic instruction counts and program run-time before and after our localizing transformation.

small number of Moved global variables typically do not undergo a substantial change. However, the dynamic instruction counts do show large degradations in cases where the transformation localizes a large number of global variables and/or significantly increases the number of function arguments (as seen in Figure 5). Several benchmark programs including dijkstra, ispell, stringsearch, and 458.sjeng fall into this category. Interestingly, we observe that, in most cases, the increases in dynamic instruction count do not produce a corresponding increase in the actual benchmark runtime. The most notable exception to this observation is tiff2rgba that degrades substantially over the original program run-time after our localizing transformation. Remember that tiff2rgba is the only program not compiled with GCC’s -O2 optimizations. Thus, it seems that optimizations performed by GCC and the x86 micro-architecture do a good job of reducing the overhead caused by our transformation to localize and eliminate global variables.

6. Future Work

There are a number of possible improvements. First, we plan to improve pointer analysis and alias analysis in the Clang compiler, to appropriately resolve indirect function calls and build precise call-graphs. Second, we are developing an Eclipse-based interactive framework to enable the user to selectively localize only the important global variables. We also plan to develop machine-learning algorithms to automatically find the most promising globals to localize. Third, we will further investigate the causes of performance overhead and develop optimizations to reduce the overhead of the localizing transformation. Finally, we require good metrics to evaluate the benefit of our tool for program development, maintenance, verification, and thread-safety. We plan to develop such metrics in the future.

7. Conclusion

In this paper we present our compiler-based source-tosource transformation and refactoring tool to automatically convert global variables into locals. Our transformation algorithm automatically detects each global variable, finds the best place to redefine each as a local, appropriately initialize it, pass it as an argument to all the functions that set/use it, and then modify all program statements that used the original global variable to now instead use the corresponding local or argument. We also analyze the static and runtime effects of our localizing transformation. We found that many benchmarks make generous use of global variables. However, most globals are only used in a very small number of program functions that are located close to each other in the function call-graph. Localizing such global variables greatly reduces the percentage visibility of global program state, which can assist code verification efforts. While the localizing transformation can affect the amount and distribution of memory space consumed by the data and stack regions of the process address space, localizing most global variables only has a minor degrading effect on runtime performance.

REFERENCES

- B. W. Kernighan and D. M. Ritchie, “The C Programming Language,” Prentice-Hall, Inc., Upper Saddle River, 1978.

- W. Wulf and M. Shaw, “Global Variable Considered Harmful,” ACM SIGPLAN Notices, Vol. 8, No. 2, 1973, pp. 28-34.

- G. Klein, K. Elphinstone, G. Heiser, J. Andronick, D. Cock, P. Derrin, D. Elkaduwe, K. Engelhardt, R. Kolanski, M. Norrish, T. Sewell, H. Tuch and S. Winwood, “SEL4: Formal Verification of an OS Kernel,” Proceedings of the ACM SIGOPS 22nd Symposium on Operating Systems Principles, SOSP’09, New York, 11-14 October 2009, pp. 207-220,

- J, Barnes, “High Integrity Software: The SPARK Approach to Safety and Security,” Addison-Wesley Longman Publishing Co., Inc., Boston, 2003.

- D. Binkley, M. Harman, Y. Hassoun, S. Islam and Z. Li, “Assessing the Impact of Global Variables on Program Dependence and Dependence Clusters,” Journal of Systems and Software, Vol. 83, No. 1, 2010, pp. 96-107. doi:10.1016/j.jss.2009.03.038

- F. Balmas, “Using Dependence Graphs as a Support to Document Programs,” Proceedings of the Second IEEE International Workshop on Source Code Analysis and Manipulation, SCAM’02, Montreal, 1 October 2002, pp. 45-154

- Y. Deng, S. Kothari and Y. Namara, “Program Slice Browser,” Proceedings of the 9th International Workshop on Program Comprehension, Toronto, 12-13 May 2001, pp. 50-59.

- S. Black, “Computing Ripple Effect for Software Maintenance,” Journal of Software Maintenance, Vol. 13, No. 4, 2001, pp. 263-279. doi:10.1002/smr.233

- P. Tonella, “Using a Concept Lattice of Decomposition Slices for Program Understanding and Impact Analysis,” IEEE Transactions on Software Engineering, Vol. 29, No. 6, 2003, pp. 495-509. doi:10.1109/TSE.2003.1205178

- A. R. Smith and P. A. Kulkarni, “Localizing Globals and Statics to Make C Programs Thread-Safe,” Proceedings of the 14th International Conference on Compilers, Architectures and Synthesis for Embedded System, New York, 2011, pp. 205-214.

- G. Zheng, S. Negara, C. L. Mendes, L. V. Kale and E. R. Rodrigues, “Automatic Handling of Global Variables for Multi-Threaded mpi Programs,” IEEE 17th International Conference on Parallel and Distributed Systems (ICPADS), Tainan, 7-9 December 2011, pp. 220-227.

- P. Sestoft, “Replacing Function Parameters by Global Variables,” Proceedings of the Fourth International Conference on Functional Programming Languages and Computer Architecture, FPCA’89, London, 11-13 September 1989, pp. 39-53.

- B. W. Kernighan, “The C Programming Language,” 2nd Edition, Prentice Hall Professional Technical Reference, 1988.

- c2.com Wiki Contributors, “Global Variables Are Bad,” 2011. http://c2.com/cgi/wiki?GlobalVariablesAreBad

- E. Gamma, R. Helm, R. Johnson and J. Vlissides, “Design Patterns: Elements of Reusable Object-Oriented Software,” Addison-Wesley Longman Publishing Co., Inc., Boston, 1995.

- M. Hevery, “Clean Code Talks—Global State and Singletons,” Google Tech Talks, 2008.

- R. E. Sward and A. T. Chamillard, “Re-Engineering Global Variables in Ada,” Proceedings of the 2004 Annual ACM SIGAda International Conference on Ada: The Engineering of Correct and Reliable Software for Real-Time & Distributed Systems Using Ada and Related Technologies, SIGAda’04, Atlanta, 14 November 2004, pp. 29-34.

- X. J. Yang, N. Cooprider and J. Regehr, “Eliminating the Call Stack to Save Ram,” Proceedings of the 2009 ACM SIGPLAN/SIGBED Conference on Languages, Compilers, and Tools for Embedded Systems, LCTES’09, Dublin, 19-20 June 2009, pp. 60-69.

- R. C. Martin, “Clean Code: A Handbook of Agile Software Craftsmanship,” 1st Edition, Prentice Hall PTR, Upper Saddle River, 2008.

- J. Kerievsky, “Refactoring to Patterns,” Pearson Higher Education, 2004.

- D. Wilking, U. F. Khan and S. Kowalewski, “An Empirical Evaluation of Refactoring,” e-Informatica Software Engineering Journal, Vol. 1, No. 1, 2007, pp. 27-42.

- T. Lengauer and R. E. Tarjan, “A Fast Algorithm for Finding Dominators in a Flowgraph,” ACM Transactions on Programming Language Systems, Vol. 1, No. 1, 1979, pp. 121-141. doi:10.1145/357062.357071

- A. V. Aho, M. S. Lam, R. Sethi and J. D. Ullman, “Compilers: Principles, Techniques, and Tools,” Addison-Wesley Longman Publishing, Boston, 2006.

- C. Lattner and V. Adve, “Llvm: A Compilation Framework for Lifelong Program Analysis & Transformation,” Proceedings of the International Symposium on Code Generation and Optimization: Feedback-Directed and Runtime Optimization, CGO’04, IEEE Computer Society, Washington DC, 2004, p. 75

- Clang Team, “Clang: A C Language Family Frontend for Llvm,” 2012. http://clang.llvm.org/

- A. Milanova, A. Rountev and B. G. Ryder, “Precise Call Graphs for C Programs with Function Pointers,” Automated Software Engineering, Vol. 11, No. 1, 2004, pp. 7-26. doi:10.1023/B:AUSE.0000008666.56394.a1

- M. R. Guthaus, J. S. Ringenberg, D. Ernst, T. M. Austin, T. Mudge and R. B. Brown, “MiBench: A Free, Commercially Representative Embedded Benchmark Suite,” IEEE 4th Annual Workshop on Workload Characterization, Austin, December 2001.

- “Standard Performance Evaluation Corporation (SPEC),” 2006. http://www.spec.org/benchmarks.html