Psychology

Vol.07 No.09(2016), Article ID:69879,6 pages

10.4236/psych.2016.79124

Elderly People Benefit More from Positive Feedback Based on Their Reactions in the Form of Facial Expressions during Human-Computer Interaction

Stefanie Rukavina*, Sascha Gruss, Holger Hoffmann, Harald C. Traue

Department of Psychosomatic Medicine and Psychotherapy, Medical Psychology, Ulm University, Ulm, Germany

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 7 June 2016; accepted 16 August 2016; published 19 August 2016

ABSTRACT

In this brief report, we present the results of our investigation into the impact of age on reactions in the form of facial expressions to positive and negative feedback during human-computer interaction. In total, 30 subjects were analyzed after a video-recorded mental task in the style of a Wizard of Oz scenario. All subjects and their facial reactions were coded using the Facial Expression Coding System (FACES). To summarize briefly, we can conclude from our facial expression analysis that compared with their younger counterparts, elderly people show significantly lower levels of negative expression in response to positive feedback from the technical system (“Your performance is improving!”). This result indicates that elderly people seem to benefit more from praise during interaction than younger people, which is significant for the design of future companion technologies.

Keywords:

Age Difference, FACES, Affective Computing, Human-Computer Interaction, System Feedback

1. Introduction

Since the publication of Rosalind Picard’s theory on affective computing (Picard, 1995), many research labs have been trying to build computer programs or digital devices to improve human-computer interaction (HCI), not only through enhanced usability of the technical device itself but also through increased social sensitivity and supportiveness with regard to the needs of the user. To be an empathetic and adaptive interactive partner, it is essential for the technical system to be able to recognize and respond to the emotional and motivational states of its user (Calvo et al., 2010), which in turn means that it can be defined as a companion technology (Traue et al., 2013; Wendemuth et al., 2012). Such companion technologies would be very useful and supportive for future HCI, especially for elderly people or people with limited cognitive abilities (Walter et al., 2013). While considerable effort has gone into automated emotion recognition using machine learning algorithms, one additional important factor seems to have been neglected or even ignored: effective feedback provided by the technical system. This concerns, for example, the kinds of strategies the technical device can use to alter the user’s emotions if something goes awry during the interaction. In this regard, an important question to address might be: Is it accurate to assume that positive feedback will automatically elicit a positive response in the user? Questions like this were already raised in a recent study about feedback provided during an HCI (Rukavina et al., 2016a), in which a paradoxical result was reported, namely that positive feedback elicits significantly more negative than positive emotional expressions, especially in women. In this brief report, we analyze the influence of age as an additional independent variable on the effectiveness of feedback during an HCI, measured using the FACES facial expression coding system of Kring et al. (2007; see 2.1).

Facial expressions are of particular importance for the analysis of HCI, since they convey information about the emotion and motivation of the human conversational partner, who is either speaking or listening. This information can be used for the purposes of automated emotion recognition from the video signal. As per the concept of embodied communication, every listener is also a sender and vice versa (Storch et al., 2014). Facial expressions, described as “honest signals” by Pentland, can also be used to measure the quality of an interaction between humans (Pentland, 2010). According to most emotion experts, an emotion leads to a facial expression, subject to individual differences such as inhibition of facial expressiveness (Traue et al., 2016) or display rules (Ekman et al., 2003). However, not every facial expression is necessarily based on a context-specific emotional experience, and the source prompting the expression may thus remain unknown, e.g., “Othello’s error” (Kreisler, 2004), although this aspect will not be discussed in detail in the present paper.

One way of influencing an ongoing HCI could involve providing specific positive feedback concerning the performance or motivation of the user. Given the gender differences reported by Rukavina et al. (2016a), it is, however, also important to look for further influential variables such as age.

Age and Emotion

Due to demographic changes in our society, age plays an important role as a subject-specific variable, particularly for future companion technologies involving HCI. To the best of our knowledge, however, age has rarely been considered in past affective computing studies, although there are some exceptions, e.g., Tan et al. (2016). This seems to be at odds with the fact that several articles do report age differences during emotion induction, recorded, e.g., by means of physiological measurements such as skin conductance or facial electromyography (Burriss et al., 2007). Furthermore, age has also been reported to influence automatic emotion classification accuracy, which is an integral element of affective computing (Rukavina et al., 2016b; Tan et al., 2016).

Most emotion and age studies not involving HCI do find a bias for seniors. This takes the form of a “positivity effect” in elderly people, which indicates that with age, the attention of a person shifts more to positive stimuli or, as Reed and colleagues conclude, “the phenomenon appears to reflect a default cognitive processing approach in later life that favors information relevant to emotion-regulatory goals” (Reed et al., 2012). This effect has been found in studies focusing on several emotion-related topics, e.g., cognition, attention and decoding of emotion (Isaacowitz et al., 2011). The question of whether a similar positivity effect can be measured in behavioral reactions to affective feedback in an ongoing human-computer interaction remains unanswered and should be studied in more detail.

2. Methods

To assess the impact of age on facial expression reactions in response to feedback, we analyzed data from the OPEN_EmoRec_II corpus (Rukavina et al., 2015), which comprises n = 30 subjects recorded during a naturalistic experiment that used a Wizard of Oz design to simulate true natural language understanding (Bernsen et al., 1994; Kelley, 1984). In this experiment, the participants believed they were communicating and interacting with an autonomous technical system, but in reality, the experimenter, or “wizard,” was manipulating the interaction and triggering different kinds of feedback (see below).

The experimental task followed the rules of the popular game “Concentration” and consisted of six experimental sequences (ES) aimed at inducing various emotional states in users in accordance with the dimensional emotion model with the dimensions valence, arousal and dominance (Mehrabian et al., 1974; Russell et al., 1977). Various strategies were used to elicit the different emotional states: manipulation of the difficulty of the game (size of deck of cards, motifs), manipulation of the interaction itself (delayed system response, incorrect reactions) and manipulation by means of the feedback provided (differently valenced feedback provided by the technical system). For more information, the reader is referred to Rukavina et al. (2016a). At the end of the experiment, every subject rated each ES on the basis of valence, arousal and dominance using the SAM (Self-Assessment Manikin) rating scale developed by Bradley et al. (1994) as a manipulation check.

The subjects’ overt behaviors were recorded using both video and audio recording throughout the whole experiment, which facilitated the subsequent coding and analysis of facial responses to the feedback provided by the system. All final facial expression annotations, together with other recorded data (e.g., physiology), were compiled to form an open data corpus known as OPEN_EmoRec_II (Rukavina et al., 2015).

2.1. Facial Expression Coding System

All facial expressions in the data corpus were coded using the Facial Expression Coding System (FACES) (Kring et al., 2007). This method is based on the more popular Facial Action Coding System (FACS) method (Ekman et al., 1978; Ekman et al., 2002), but it is less time consuming and does not require certified FACS coders. In the FACES approach, whole expressions are coded with the dimensions of valence and intensity (Likert scale 1 - 4), instead of single Action Units as in the FACS method. In addition, every rater included information on the subject’s overall expressiveness (Likert scale 1 - 5) and noted the start time, the apex and the end time of the emotional expression. The intraclass correlation coefficient (Hallgren, 2012) was calculated to check the agreement between different raters, resulting in an ICC = 0.74. This value is the mean of the ICC for valence = 0.75, ICC for intensity = 0.65, ICC for duration = 0.70 and ICC for expressiveness = 0.84. The four raters thus showed relatively high agreement in their ratings.

The coded video sequences were 6 seconds long and were presented without the audio information to four coders. For detailed information on the procedure, please see Rukavina et al. (2016a).
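The arithmetic behind the overall ICC can be checked with a minimal sketch. It uses only the four component values reported above; the variable names are illustrative, and the half-up rounding to two decimals is an assumption about how the reported value was obtained.

```python
from decimal import Decimal, ROUND_HALF_UP

# Component ICC values as reported in the text (one per coded dimension).
icc_by_dimension = {
    "valence": Decimal("0.75"),
    "intensity": Decimal("0.65"),
    "duration": Decimal("0.70"),
    "expressiveness": Decimal("0.84"),
}

# Unweighted mean of the four components.
overall_icc = sum(icc_by_dimension.values()) / len(icc_by_dimension)

# Assumption: the reported value was rounded half-up to two decimals.
reported_icc = overall_icc.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

print(overall_icc, reported_icc)  # prints: 0.735 0.74
```

Decimal arithmetic is used here because the exact mean, 0.735, sits on a rounding boundary that binary floating point would not represent exactly.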

2.2. Subjects

The study sample consisted of 30 right-handed subjects (n = 23 women) split into two age groups: a younger group aged 20 - 40 years (n = 18; mean age = 24.00 years; SD = 2.47) and an elderly group aged above 51 years (n = 12; mean age = 66.08 years; SD = 8.54). Every subject signed the informed consent form and was paid €5 for participating. The study was conducted in accordance with the ethical guidelines set forth in the Declaration of Helsinki (certified by the Ulm University ethics committee: C4 245/08-UBB/se).

3. Results

FACES

Only those facial expressions detected by at least two of the four raters were used for the analysis. As a measure of agreement between the four raters, an ICC (3,4) model (intraclass correlation coefficient, two-way randomized) was computed as per Hallgren (2012), resulting in an ICC = 0.74.
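The inclusion rule (an expression counts only if at least two of the four raters detected it) can be sketched as a simple filter. The data layout, clip identifiers and function name below are hypothetical and are not taken from the OPEN_EmoRec_II corpus.

```python
MIN_RATERS = 2  # threshold stated in the text: at least two of four raters


def keep_agreed_expressions(detections):
    """Return the clip ids whose expression was detected by enough raters.

    `detections` maps a clip id to four booleans, one per rater
    (hypothetical layout chosen for illustration).
    """
    return [
        clip_id
        for clip_id, flags in detections.items()
        if sum(flags) >= MIN_RATERS
    ]


# Made-up example: three clips, four raters each.
example = {
    "clip_01": [True, True, False, False],   # 2 raters -> kept
    "clip_02": [True, False, False, False],  # 1 rater  -> dropped
    "clip_03": [True, True, True, True],     # 4 raters -> kept
}
print(keep_agreed_expressions(example))  # ['clip_01', 'clip_03']
```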

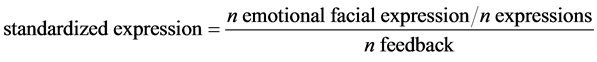

The facial expressions detected were categorized into the following expression classes: positive, negative, ambivalent and no expression. Afterwards, the expressions were standardized on an individual basis as follows (see Table 1):

with n “emotional facial expression” = sum “VN/VP/VA/no expression” and “n expression” = sum of (VN + VP + VA) for specific feedback.

We conducted the non-parametric Wilcoxon test to look for differences between elderly and younger subjects in our study. Overall, this revealed significantly more negative expressions for younger subjects following positive feedback (U = −1.95; p = 0.05; 0.039 < 0.060; see Figure 1). The effect size according to Hedges (chosen on account of the different sample sizes) is g = 0.85, which can be considered a strong effect (Cohen, 2013).
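A group comparison of this kind can be illustrated with a minimal sketch. It assumes the rank-sum (Mann-Whitney) variant of the Wilcoxon test for two independent groups, which the text does not state explicitly, and uses the normal approximation without a tie correction in the variance. Hedges' g is computed as Cohen's d with the usual small-sample correction factor. The function names and the data are hypothetical and are not values from the study.

```python
from math import sqrt
from statistics import mean, variance


def hedges_g(group_a, group_b):
    """Hedges' g: standardized mean difference with small-sample correction."""
    n1, n2 = len(group_a), len(group_b)
    pooled_sd = sqrt(
        ((n1 - 1) * variance(group_a) + (n2 - 1) * variance(group_b))
        / (n1 + n2 - 2)
    )
    cohens_d = (mean(group_a) - mean(group_b)) / pooled_sd
    correction = 1 - 3 / (4 * (n1 + n2) - 9)
    return cohens_d * correction


def rank_sum_z(group_a, group_b):
    """z statistic of the Wilcoxon rank-sum test (normal approximation)."""
    combined = sorted([(v, 0) for v in group_a] + [(v, 1) for v in group_b])
    ranks = [0.0] * len(combined)
    i = 0
    while i < len(combined):
        # Find the run of tied values and give each the average rank.
        j = i
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    rank_sum_a = sum(r for r, (_, g) in zip(ranks, combined) if g == 0)
    n1, n2 = len(group_a), len(group_b)
    expected = n1 * (n1 + n2 + 1) / 2
    std_dev = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (rank_sum_a - expected) / std_dev


# Hypothetical standardized negative-expression rates, NOT data from the study.
younger = [0.10, 0.08, 0.05, 0.07, 0.06]
elderly = [0.02, 0.04, 0.03, 0.05]
print(f"g = {hedges_g(younger, elderly):.2f}, z = {rank_sum_z(younger, elderly):.2f}")
```

The correction factor matters most for small, unequal groups such as the 18 and 12 subjects here, where the uncorrected d slightly overestimates the effect.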

This age difference results from the higher rate of negative expression following the pos1 feedback “Your performance is improving!” (U = −2.09; p = 0.04; 0.114 > 0.059).

No significant difference in general expressivity was found between the two age groups (p = 0.11), although the comparison of the means shows slightly higher expressivity values for the younger subgroup (2.50 > 1.94).

Table 1. Standardized facial expression after feedback from the technical system.

Note: *only these are included in the analysis. neg6: “Your performance is declining”, neg7: “Would you like to terminate the task?”, neg8: “Delay”, neg9: “Wrong Card”, pos1: “Your performance is improving!”, pos2: “Keep it up!”, pos3: “You are doing great!” [All feedback was originally presented in German].

Figure 1. Standardized facial expressions (negative, positive, ambivalent and no expressions) in response to differently valenced feedback (positive, negative). On the right: Rated general expressivity.

4. Discussion

For an assistive application to be considered a companion technology, it not only needs to recognize the user’s mental states as accurately as possible but must also respond to them effectively. Over the past decade of affective computing, research has focused mainly on automatic emotion recognition with machine learning techniques based on different modalities, e.g., audio, video or physiological features (Kächele et al., 2015; Rukavina et al., 2016b; Walter et al., 2011), which can be understood as the first important step. We believe, however, that it is now important to understand what form effective feedback could take in order to build closed-loop algorithms for adaptive communicative systems.

Therefore, we analyzed the effect of differently valenced feedback in an HCI based on reactions in the form of facial expressions extracted from the accessible corpus OPEN_EmoRec_II (Rukavina et al., 2015). The facial expressions can be understood as emotional reactions to system behaviors indicating the change of mental state in users. Since gender was reported to influence these facial expression reactions in a recently published study (Rukavina et al., 2016a), this second analysis was conducted to look for age differences as well. It revealed significantly fewer negative facial expressions after positive feedback for seniors, especially after the feedback utterance delivered in a supportive tone of voice: “Your performance is improving!” Because we found no significant difference between the two age groups in terms of rated expressivity, we assume this difference is related to the often-reported positivity effect (Reed et al., 2012). The shift in attention towards positive stimuli has also been reported in studies focusing on the association between gaze and mood, in which seniors were found to use their gaze to regulate their mood (Isaacowitz et al., 2008). Although we know that the seniors in our study could not physically change their gaze to regulate their moods, it is conceivable that they changed their cognition with respect to the feedback, resulting in a “shifted” behaviorally expressed positivity effect. In addition, the reason we found no age differences in the positive expression class, e.g., that elderly people react more frequently with positive expressions, remains unclear. One could argue, however, that the decrease in negativity is also a form of positivity, especially if a trend is visible in the general decrease in facial expressiveness. In conclusion, we encourage future studies in this area, and especially studies about affective computing and companion technologies, to concentrate more on checking for age differences. This is particularly important because these differences have also been reported in connection with several emotion-related topics, e.g., cognition and attention (Isaacowitz et al., 2011), memory (Mather et al., 2005) and decoding of facially expressed emotions (Fölster et al., 2014). However, it remains to be seen how this positivity effect could influence future and more complex HCI designed especially with seniors in mind.

A second suggestion concerns the feedback types themselves. Future studies should investigate the feedback provided to users in more detail, with a wider variety of feedback types and with more specific differentiation among these types. Additionally, we would like to encourage future studies to concentrate more on the reactions to different types of feedback in HCI and on the influence of age in larger samples.

Acknowledgements

This research was supported in part by grants from the Transregional Collaborative Research Centre SFB/TRR 62 “Companion-Technology for Cognitive Technical Systems” funded by the German Research Foundation (DFG).

Cite this paper

Stefanie Rukavina, Sascha Gruss, Holger Hoffmann, Harald C. Traue (2016) Elderly People Benefit More from Positive Feedback Based on Their Reactions in the Form of Facial Expressions during Human-Computer Interaction. Psychology, 07, 1225-1230. doi: 10.4236/psych.2016.79124

References

- 1. Bradley, M. M., & Lang, P. J. (1994). Measuring Emotion: The Self-Assessment Manikin and the Semantic Differential. Journal of Behavior Therapy and Experimental Psychiatry, 25, 49-59. http://dx.doi.org/10.1016/0005-7916(94)90063-9

- 2. Burriss, L., Powell, D. A., & White, J. (2007). Psychophysiological and Subjective Indices of Emotion as a Function of Age and Gender. Cognition & Emotion, 21, 182-210. http://dx.doi.org/10.1080/02699930600562235

- 3. Calvo, R., & D’Mello, S. (2010). Affect Detection: An Interdisciplinary Review of Models, Methods, and Their Applications. IEEE Transactions on Affective Computing, 1, 18-37. http://dx.doi.org/10.1109/T-AFFC.2010.1

- 4. Cohen, J. (2013). Statistical Power Analysis for the Behavioral Sciences. Hillsdale, NJ: Lawrence Erlbaum Associates.

- 5. Ekman, P., & Friesen, W. V. (1978). Manual for the Facial Action Code. Palo Alto, CA: Consulting Psychologist Press.

- 6. Ekman, P., & Friesen, W. V. (2003). Unmasking the Face. A Guide to Recognizing Emotions from Facial Clues. Los Altos, CA: Malor Books.

- 7. Ekman, P., Friesen, W. V., & Hager, J. C. (2002). Facial Action Coding System. Manual and Investigator’s Guide. Salt Lake City, UT: Research Nexus.

- 8. Fölster, M., Hess, U., & Werheid, K. (2014). Facial Age Affects Emotional Expression Decoding. Frontiers in Psychology, 5, 1-13. http://dx.doi.org/10.3389/fpsyg.2014.00030

- 9. Hallgren, K. A. (2012). Computing Inter-Rater Reliability for Observational Data: An Overview and Tutorial. Tutorials in Quantitative Methods for Psychology, 8, 23-34.

- 10. Isaacowitz, D. M., & Riediger, M. (2011). When Age Matters: Developmental Perspectives on “Cognition and Emotion”. Cognition and Emotion, 25, 957-967. http://dx.doi.org/10.1080/02699931.2011.561575

- 11. Isaacowitz, D. M., Toner, K., Goren, D., & Wilson, H. R. (2008). Looking While Unhappy: Mood-Congruent Gaze in Young Adults, Positive Gaze in Older Adults. Psychological Science, 19, 848-853. http://dx.doi.org/10.1111/j.1467-9280.2008.02167.x

- 12. Kächele, M., Rukavina, S., Palm, G., Schwenker, F., & Schels, M. (2015). Paradigms for the Construction and Annotation of Emotional Corpora for Real-World Human-Computer-Interaction. In Proc. of the Int. Conf. on Pattern Recognition Applications and Methods (ICPRAM) (pp. 367-373). SciTePress. http://dx.doi.org/10.5220/0005282703670373

- 13. Kreisler, H. (2004). Paul Ekman Interview: Conversations with History. Institute of International Studies, UC Berkeley. http://globetrotter.berkeley.edu/people4/Ekman/ekman-con3.html

- 14. Kring, A., & Sloan, D. (2007). The Facial Expression Coding System (FACES): Development, Validation, and Utility. Psychological Assessment, 19, 210-224. http://dx.doi.org/10.1037/1040-3590.19.2.210

- 15. Mather, M., & Carstensen, L. L. (2005). Aging and Motivated Cognition: The Positivity Effect in Attention and Memory. Trends in Cognitive Sciences, 9, 496-502. http://dx.doi.org/10.1016/j.tics.2005.08.005

- 16. Mehrabian, A., & Russell, J. A. (1974). An Approach to Environmental Psychology. Cambridge, MA: The MIT Press.

- 17. Pentland, A. S. (2010). To Signal Is Human. American Scientist, 98, 204-219.

- 18. Picard, R. W. (1995). Affective Computing. M.I.T Media Laboratory Perceptual Computing Section Technical Report No. 321.

- 19. Reed, A. E., & Carstensen, L. L. (2012). The Theory behind the Age-Related Positivity Effect. Frontiers in Psychology, 3, 339. http://dx.doi.org/10.3389/fpsyg.2012.00339

- 20. Rukavina, S., Gruss, S., Hoffmann, H., & Traue, H. C. (2016a). Facial Expression Reactions to Feedback in a Human-Computer Interaction—Does Gender Matter? Psychology, 7, 356-367. http://dx.doi.org/10.4236/psych.2016.73038

- 21. Rukavina, S., Gruss, S., Hoffmann, H., Tan, J.-W., Walter, S., & Traue, H. C. (2016b). Affective Computing and the Impact of Gender and Age. PLoS ONE, 11, Article ID: e0150584. http://dx.doi.org/10.1371/journal.pone.0150584

- 22. Rukavina, S., Gruss, S., Walter, S., Hoffmann, H., & Traue, H. C. (2015). OPEN_EmoRec_II—A Multimodal Corpus of Human-Computer Interaction. International Journal of Computer, Electrical, Automation, Control and Information Engineering, 9, 977-983.

- 23. Russell, J. A., & Mehrabian, A. (1977). Evidence for a Three-Factor Theory of Emotions. Journal of Research in Personality, 11, 273-294. http://dx.doi.org/10.1016/0092-6566(77)90037-X

- 24. Storch, M., & Tschacher, W. (2014). Embodied Communication—Kommunikation beginnt im Körper, nicht im Kopf. Bern: Verlag Hans Huber.

- 25. Tan, J.-W., Andrade, A. O., Li, H., Walter, S., Hrabal, D., Rukavina, S. et al. (2016). Recognition of Intensive Valence and Arousal Affective States via Facial Electromyographic Activity in Young and Senior Adults. PLoS ONE, 11, Article ID: e0146691. http://dx.doi.org/10.1371/journal.pone.0146691

- 26. Traue, H. C., Kessler, H., & Deighton, R. W. (2016). Emotional Inhibition. In G. Fink (Ed.), Stress: Concepts, Cognition, Emotion, and Behavior (pp. 233-240). San Diego, CA: Academic Press. http://dx.doi.org/10.1016/b978-0-12-800951-2.00028-5

- 27. Traue, H. C., Ohl, F., Brechman, A., Schwenker, F., Kessler, H., Limbrecht, K. et al. (2013). A Framework for Emotions and Dispositions in Man-Companion Interaction. In M. Rojc, & N. Campbell (Eds.), Converbal Synchrony in Human-Machine Interaction (pp. 99-140). Boca Raton, FL: CRC Press. http://dx.doi.org/10.1201/b15477-6

- 28. Walter, S., Scherer, S., Schels, M., Glodek, M., Hrabal, D., Schmidt, M. et al. (2011). Multimodal Emotion Classification in Naturalistic User Behavior. In J. A. Jacko (Ed.), Human-Computer Interaction: Towards Mobile and Intelligent Interaction Environments (Volume Part 3, pp. 603-611). Berlin: Springer. http://dx.doi.org/10.1007/978-3-642-21616-9_68

- 29. Wendemuth, A., & Biundo, S. (2012). A Companion Technology for Cognitive Technical Systems. In A. Esposito, A. M. Esposito, A. Vinciarelli, R. Hoffmann, & V. C. Müller (Eds.), Cognitive Behavioural Systems (pp. 89-103). Berlin: Springer. http://dx.doi.org/10.1007/978-3-642-34584-5_7

NOTES

*Corresponding author.