Paper Menu >>

Journal Menu >>

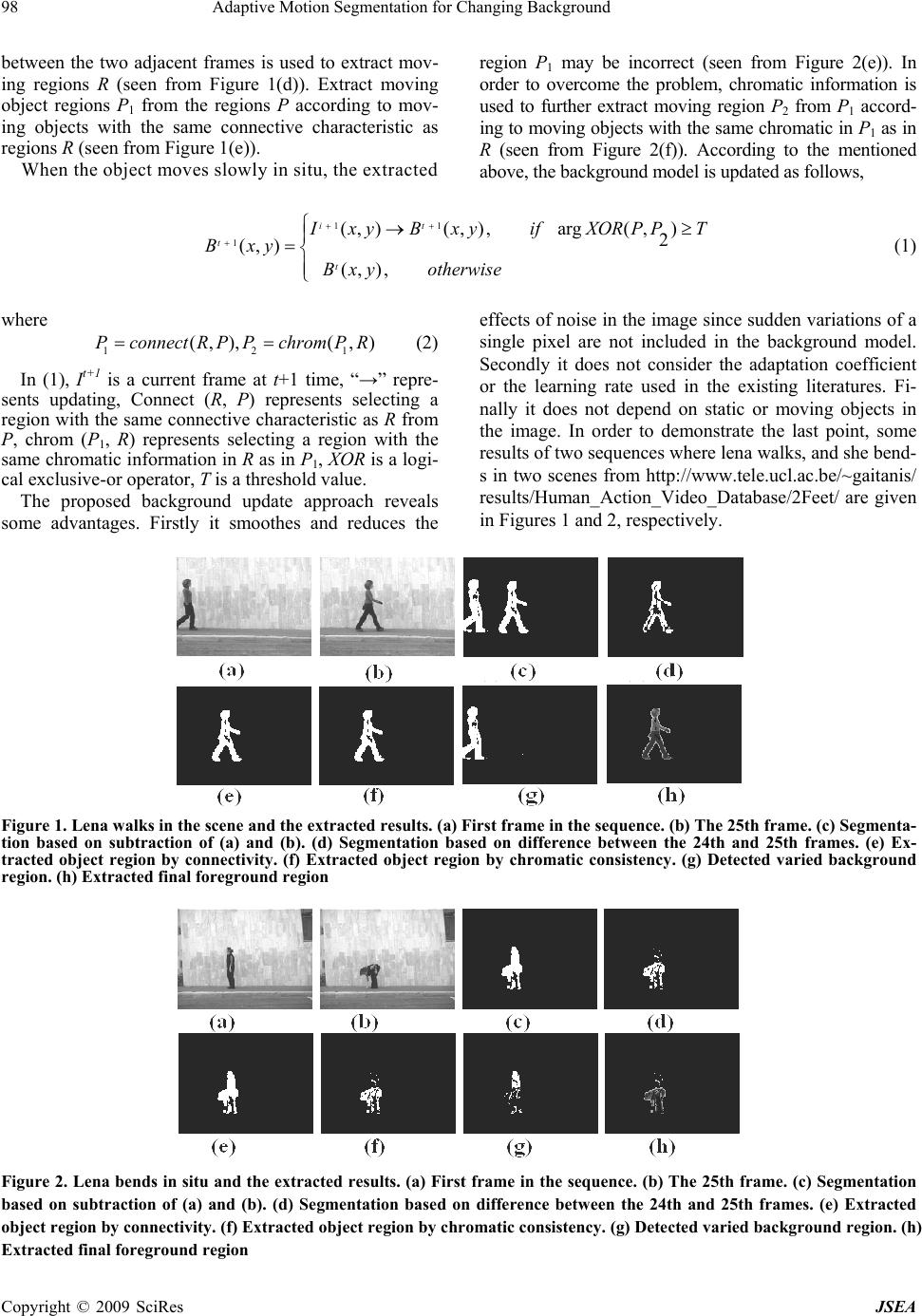

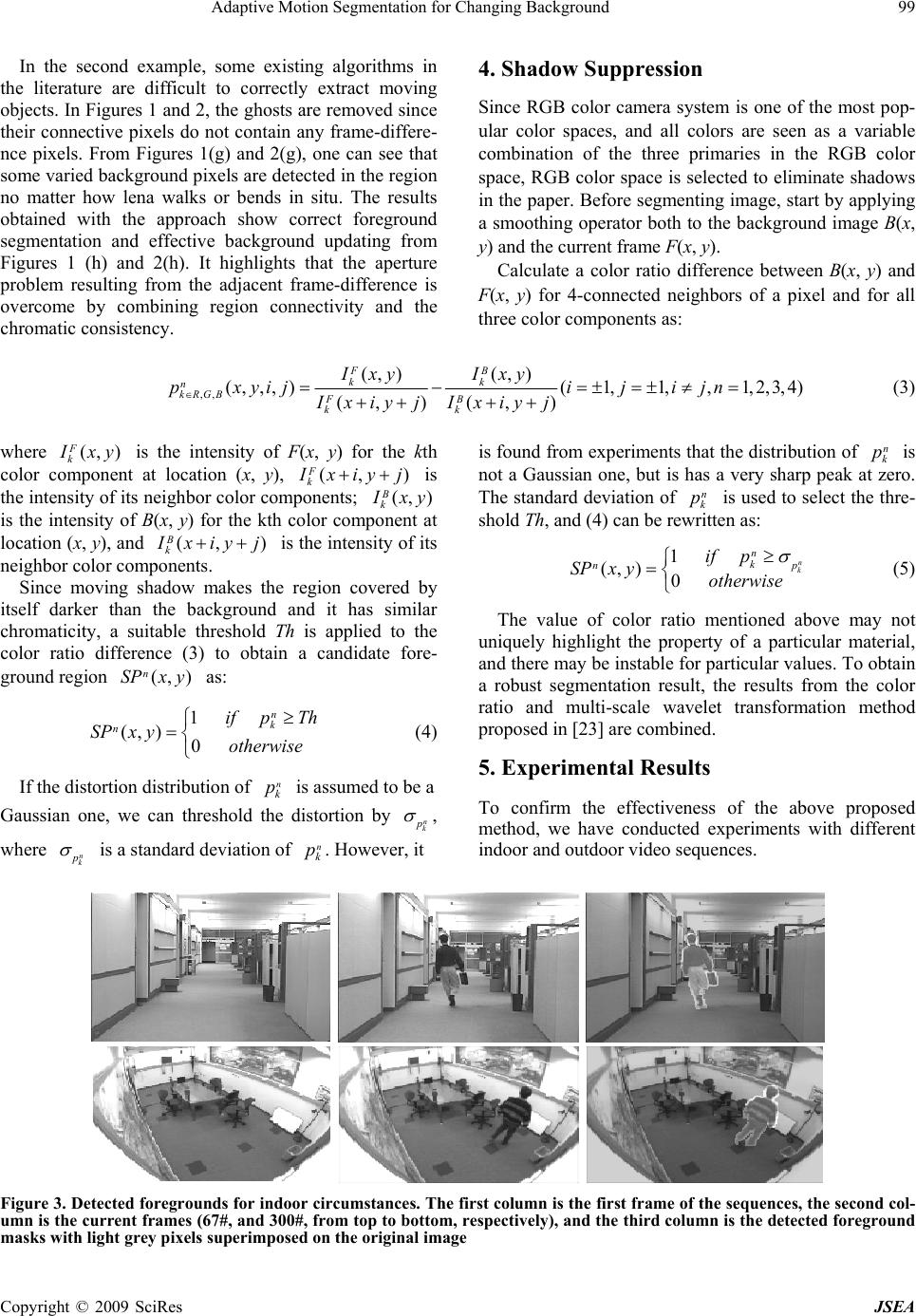

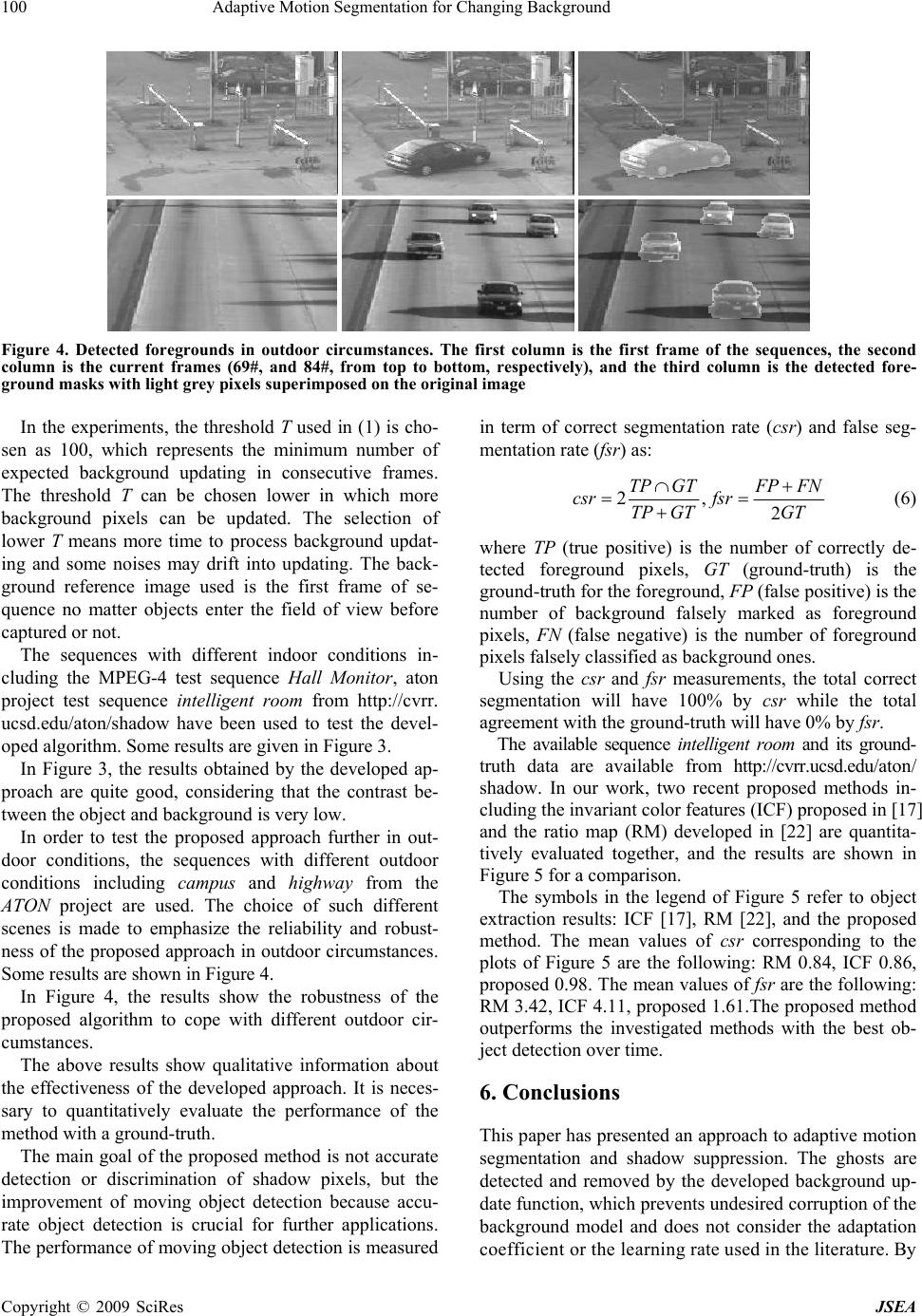

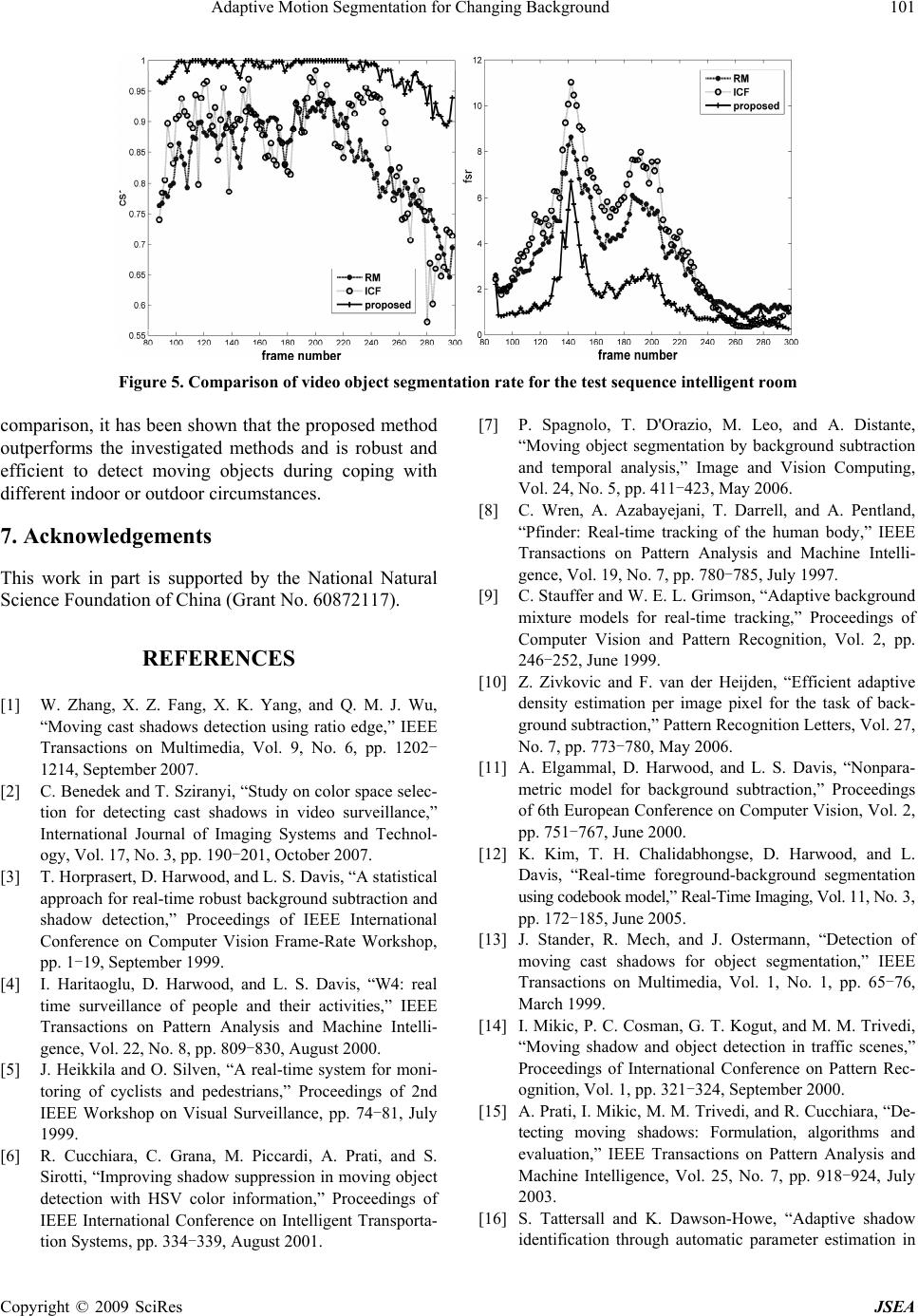

J. Software Engineering & Applications, 2009, 2: 96-102
doi:10.4236/jsea.2009.22014 Published Online July 2009 (www.SciRP.org/journal/jsea)
Copyright © 2009 SciRes JSEA

Adaptive Motion Segmentation for Changing Background

Yepeng Guan1,2

1School of Communication and Information Engineering, Shanghai University, Shanghai, China; 2Key Laboratory of Advanced Displays and System Application, Ministry of Education, 149 Yanchang Rd., Shanghai, China.
Email: ypguan@shu.edu.cn

Received December 25th, 2008; revised April 12th, 2009; accepted May 4th, 2009.

ABSTRACT

Efficient segmentation of moving objects from video sequences is very important for many applications. Background subtraction is a common method for segmenting moving objects in image sequences taken from a static camera. Some existing algorithms cannot adapt to changing circumstances and require manual calibration, in terms of parameter specification or hypotheses about the changing background. An adaptive motion segmentation method is developed according to motion variation and chromatic characteristics; it prevents undesired corruption of the background model and does not need an adaptation coefficient. The RGB color space is selected, instead of introducing complex color models, to segment moving objects and suppress shadows. A color ratio for the 4-connected neighbors of a pixel and a multi-scale wavelet transformation are combined to suppress shadows. The approach is scene-independent and achieves a high rate of correct segmentation. Experiments show that the approach is robust and efficient in detecting moving objects.

Keywords: Motion Segmentation, Background Update, Background Subtraction, Motion Variation, Shadow Suppression

1. Introduction

Moving object segmentation is an important topic in computer vision applications, including video conferencing, vehicle tracking, and three-dimensional object identification, and has been actively investigated in recent years [1].
The most widely adopted approach for moving object segmentation with a fixed camera is based on background subtraction. A background (also called the background model) is computed and evolved frame by frame. A reliable background model has to account for the background at each time instant. Mistakes in labeling foreground and background points can cause a wrong update of the background model. A particularly critical situation occurs whenever moving objects stop for a long time and become part of the background. When these objects start moving again, a ghost is detected in the area where they stopped. This ghost persists in all following frames and prevents the area from ever being updated in the background.

In addition, moving object segmentation is easily affected by shadows. Researchers have tried to select an optimal color space for shadow elimination among a set of color spaces, such as HSV, YCrCb, XYZ, L*a*b*, L*u*v*, C1C2C3, l1l2l3, normalized rgb and so on; however, it remains an open question how important the choice of color space is, and which color space is the most effective [2].

Many approaches have been developed in the literature so far. Some existing methods require manual calibration, in terms of the specification of parameters related to the environment and the lighting conditions, or make some hypotheses.

In this paper, an approach to adaptive background updating and shadow suppression is developed. The RGB color space is selected instead of introducing complex color models to segment moving objects. Motion evaluation is introduced to prevent erroneous segmentation in regions that correspond to an un-updated background model. A color ratio and a multi-scale wavelet transformation are combined to suppress shadows. The main contribution is that the developed approach is scene-independent and updates the background automatically according to motion variations caused by moving objects.
The second contribution is that segmenting moving objects does not require any complex supervised training, manual calibration in terms of parameter specification, or additional hypotheses. Experimental results from indoor and outdoor environments show that the developed approach is efficient and flexible in segmenting moving objects and suppressing shadows.

The remainder of the paper is organized as follows. Section 2 briefly reviews related previous work. Section 3 discusses the background model update. Shadow suppression is described in Section 4. Experimental results from indoor and outdoor environments are given in Section 5, followed by conclusions in Section 6.

*Tel: +86 21 56331967; Fax: +86 21 56336908.

2. Related Works

Many methods for moving object detection have been put forward in the literature. In background subtraction, moving objects in the scene are detected from the difference between the current frame and the background model. When the deviation is greater than some critical value, the pixel is considered foreground (moving object) [3]. The simplest background model is the previous frame. The difference between the observed frame and the previous frame is thresholded to determine which pixels are background and which are foreground. Another way to model the background is to take the mean, median, or minimum and maximum values of the previous N pixel values [4]. This requires keeping track of the pixel value history. To avoid this problem, a first-order recursive filter is used to update the background model [5]. It easily causes 'tailing' or 'ghosting'. A particularly critical situation occurs whenever a moving object stops for a long time and becomes part of the background. When such an object starts moving again, a ghost is detected in the area where it stopped [6].
The ghost persists in all following frames and prevents the area from ever being updated in the background image [7]. One method models each pixel as a unimodal Gaussian distribution [8]. It fails to model background pixels that are subject to repetitive motion and therefore have multiple background colors. To overcome these difficulties, parametric background modeling represents each background pixel value as a mixture of Gaussian distributions [9,10]. Because the parametric background model still lacks flexibility when dealing with non-static backgrounds, a highly flexible non-parametric technique has been proposed for estimating background probabilities from recent samples over time using kernel density estimation [11]. False detections due to fluctuating backgrounds are still not handled by that algorithm. The codebook technique is a different approach to background subtraction proposed in [12]. One of its drawbacks is that the algorithm cannot adapt to changing circumstances that were not present in the training phase. Moving objects that stop and should be adopted into the background also cause difficulties for the algorithm.

Shadows cause serious problems when segmenting moving objects because shadow points are misclassified as foreground. Many methods have been developed to suppress shadows [1,2,6,13,14,15,16,17,19,21,22]. In shadow suppression, the major problem is how to distinguish moving cast shadows from moving object points [15]. Cucchiara et al. [6] defined a shadow mask for each point resulting from motion segmentation. However, the method makes additional assumptions, such as small changes in hue and saturation, and needs a priori knowledge of the range of changes in the value channel. Moreover, the choice of some parameters is not straightforward and is currently made empirically. Tattersall et al. [16] proposed adaptive shadow identification through automatic parameter estimation based on the above method.
Only a single variable parameter is used. However, many additional assumptions must also be made in [16]. Salvador et al. [17] proposed invariant color features to detect cast shadows using chrominance color components. Several assumptions are needed regarding the reflecting surfaces and the lighting. In outdoor scenes, shadows have a blue color cast due to the sky, while lit regions have a yellow cast (sunlight); hence the chrominance values corresponding to the same surface point may differ significantly between shadowed and sunlit regions [18]. Cavallaro et al. [19] proposed the normalized rgb space to detect shadows. In practice, normalized rgb suffers from noise at low intensities, which results in unstable chromatic components [20]. Texture analysis can potentially be effective in solving the problem. Heikkila et al. [21] proposed a texture-based method for modeling the background and detecting moving objects from a video sequence. Each pixel is modeled as a group of adaptive local binary pattern histograms that are calculated over a circular region around the pixel. Because of the huge number of possible parameter combinations, a good set of parameter values must be found more or less empirically. Spagnolo et al. [22] proposed a ratio-based algorithm to detect shadows with an empirically assigned ratio threshold. Because it considers the ratio between only two adjacent pixels, this algorithm considerably shortens the computation time, but it easily misclassifies shadows as objects, because the ratios of shadows may have a similar magnitude.

3. Background Model Update

The main idea of the proposed approach is to update pixels according to motion variations caused by moving objects, which has been proven to be more reliable and less sensitive to noise.
Assuming the background model $B_{t+1}(x, y)$ at time $t+1$, possible moving regions $P$ are extracted by background subtraction (see Figure 1(c)). Since the variation of a perceptible moving target in the scene can be found from the sequence, the difference between two adjacent frames is used to extract moving regions $R$ (see Figure 1(d)). Moving object regions $P_1$ are extracted from the regions $P$ by selecting those with the same connective characteristic as the regions $R$ (see Figure 1(e)). When the object moves slowly in situ, the extracted region $P_1$ may be incorrect (see Figure 2(e)). To overcome this problem, chromatic information is used to further extract the moving region $P_2$ from $P_1$ by selecting the parts of $P_1$ with the same chromatic information as in $R$ (see Figure 2(f)). Accordingly, the background model is updated as follows:

$$B_{t+1}(x, y) = \begin{cases} I_{t+1}(x, y) \to B_{t+1}(x, y), & \text{if } \arg(\mathrm{XOR}(P, P_2)) > T \\ B_t(x, y), & \text{otherwise} \end{cases} \tag{1}$$

where

$$P_1 = \mathrm{connect}(R, P), \qquad P_2 = \mathrm{chrom}(P_1, R) \tag{2}$$

In (1), $I_{t+1}$ is the current frame at time $t+1$, "→" represents updating, $\mathrm{connect}(R, P)$ selects the regions of $P$ with the same connective characteristic as $R$, $\mathrm{chrom}(P_1, R)$ selects the regions of $P_1$ with the same chromatic information as in $R$, XOR is the logical exclusive-or operator, and $T$ is a threshold value.

The proposed background update approach has several advantages. First, it smooths and reduces the effects of noise in the image, since sudden variations of a single pixel are not included in the background model. Second, it does not need the adaptation coefficient or learning rate used in the existing literature. Finally, it does not depend on whether objects in the image are static or moving.
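The update rule in (1) and (2) can be sketched as follows. This is a minimal illustration under stated assumptions, not the author's implementation: frames are grayscale images stored as lists of lists, `diff_thresh` is a hypothetical fixed threshold for both difference masks, the chromatic test `chrom` is replaced by a simple comparison of quantized intensities, and only the pixels in XOR(P, P2) are copied from the current frame when their count exceeds T.

```python
from collections import deque


def diff_mask(a, b, thresh):
    """Binary mask of pixels where two grayscale images differ by more than thresh."""
    return [[abs(pa - pb) > thresh for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def connect(r, p):
    """P1: keep the 4-connected components of P that contain at least one pixel of R."""
    h, w = len(p), len(p[0])
    seen = [[False] * w for _ in range(h)]
    out = [[False] * w for _ in range(h)]
    for y0 in range(h):
        for x0 in range(w):
            if not p[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill of one component of P.
            comp, queue = [], deque([(y0, x0)])
            seen[y0][x0] = True
            while queue:
                y, x = queue.popleft()
                comp.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and p[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if any(r[y][x] for y, x in comp):
                for y, x in comp:
                    out[y][x] = True
    return out


def chrom(p1, r, frame, step=32):
    """P2: keep P1 pixels whose quantized value also occurs inside R.

    Assumption: quantized intensity stands in for the paper's chromatic test.
    """
    h, w = len(frame), len(frame[0])
    seen_bins = {frame[y][x] // step for y in range(h) for x in range(w) if r[y][x]}
    return [[p1[y][x] and frame[y][x] // step in seen_bins for x in range(w)]
            for y in range(h)]


def update_background(bg, prev, cur, diff_thresh=20, T=100):
    """Return (new background, foreground mask P2) following the shape of (1) and (2)."""
    p = diff_mask(cur, bg, diff_thresh)    # P: background subtraction
    r = diff_mask(cur, prev, diff_thresh)  # R: adjacent-frame difference
    p2 = chrom(connect(r, p), r, cur)      # P1 = connect(R, P), P2 = chrom(P1, R)
    changed = [[a != b for a, b in zip(rp, rp2)] for rp, rp2 in zip(p, p2)]  # XOR(P, P2)
    if sum(map(sum, changed)) > T:
        # Assumption: only the changed-background pixels are refreshed.
        bg = [[c if ch else b for b, c, ch in zip(rb, rc, rch)]
              for rb, rc, rch in zip(bg, cur, changed)]
    return bg, p2
```

In this sketch a region that differs from the background but contains no frame-difference pixels (a ghost) never enters P2, so it ends up in XOR(P, P2) and is absorbed into the background once enough such pixels accumulate.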
To demonstrate that the update does not depend on whether objects are static or moving, results for two sequences from http://www.tele.ucl.ac.be/~gaitanis/results/Human_Action_Video_Database/2Feet/, in which Lena walks and bends, are given in Figures 1 and 2, respectively.

Figure 1. Lena walks in the scene and the extracted results. (a) First frame in the sequence. (b) The 25th frame. (c) Segmentation based on subtraction of (a) and (b). (d) Segmentation based on difference between the 24th and 25th frames. (e) Extracted object region by connectivity. (f) Extracted object region by chromatic consistency. (g) Detected varied background region. (h) Extracted final foreground region

Figure 2. Lena bends in situ and the extracted results. (a) First frame in the sequence. (b) The 25th frame. (c) Segmentation based on subtraction of (a) and (b). (d) Segmentation based on difference between the 24th and 25th frames. (e) Extracted object region by connectivity. (f) Extracted object region by chromatic consistency. (g) Detected varied background region. (h) Extracted final foreground region

In the second example, some existing algorithms in the literature have difficulty extracting moving objects correctly. In Figures 1 and 2, the ghosts are removed since their connected pixels do not contain any frame-difference pixels. Figures 1(g) and 2(g) show that some varied background pixels are detected in the region regardless of whether Lena walks or bends in situ. Figures 1(h) and 2(h) show that the approach achieves correct foreground segmentation and effective background updating. This highlights that the aperture problem resulting from adjacent-frame differencing is overcome by combining region connectivity and chromatic consistency.

4. Shadow Suppression

Since RGB is one of the most popular color spaces for color camera systems, and all colors are seen as a variable combination of the three primaries in the RGB color space, the RGB color space is selected to eliminate shadows in this paper. Before segmenting the image, a smoothing operator is applied both to the background image B(x, y) and to the current frame F(x, y). A color ratio difference between B(x, y) and F(x, y) is calculated for the 4-connected neighbors of a pixel and for all three color components as:

$$p_k^n(x, y, i, j) = \frac{I_k^F(x, y)}{I_k^F(x+i, y+j)} - \frac{I_k^B(x, y)}{I_k^B(x+i, y+j)}, \quad k = R, G, B \quad (|i| \le 1,\ |j| \le 1,\ |i| \ne |j|,\ n = 1, 2, 3, 4) \tag{3}$$

where $I_k^F(x, y)$ is the intensity of F(x, y) for the kth color component at location (x, y), $I_k^F(x+i, y+j)$ is the intensity of its neighbor color components; $I_k^B(x, y)$ is the intensity of B(x, y) for the kth color component at location (x, y), and $I_k^B(x+i, y+j)$ is the intensity of its neighbor color components.

Since a moving shadow makes the region it covers darker than the background while keeping a similar chromaticity, a suitable threshold Th is applied to the color ratio difference (3) to obtain a candidate foreground region $SP_n(x, y)$ as:

$$SP_n(x, y) = \begin{cases} 1, & \text{if } p_k^n > Th \\ 0, & \text{otherwise} \end{cases} \tag{4}$$

If the distribution of $p_k^n$ is assumed to be Gaussian, the distortion can be thresholded by $\sigma_{p_k^n}$, where $\sigma_{p_k^n}$ is the standard deviation of $p_k^n$. However, experiments show that the distribution of $p_k^n$ is not Gaussian but has a very sharp peak at zero. The standard deviation of $p_k^n$ is used to select the threshold Th, and (4) can be rewritten as:

$$SP_n(x, y) = \begin{cases} 1, & \text{if } p_k^n > \sigma_{p_k^n} \\ 0, & \text{otherwise} \end{cases} \tag{5}$$

The color ratio value mentioned above may not uniquely characterize a particular material, and it may be unstable for particular values. To obtain a robust segmentation result, the results from the color ratio and from the multi-scale wavelet transformation method proposed in [23] are combined.

5. Experimental Results

To confirm the effectiveness of the proposed method, we have conducted experiments with different indoor and outdoor video sequences.

Figure 3. Detected foregrounds for indoor circumstances. The first column is the first frame of the sequences, the second column is the current frames (67# and 300#, from top to bottom, respectively), and the third column is the detected foreground masks with light grey pixels superimposed on the original image

Figure 4. Detected foregrounds in outdoor circumstances. The first column is the first frame of the sequences, the second column is the current frames (69# and 84#, from top to bottom, respectively), and the third column is the detected foreground masks with light grey pixels superimposed on the original image

In the experiments, the threshold T used in (1) is chosen as 100, which represents the minimum number of expected background updates in consecutive frames. T can be chosen lower, in which case more background pixels can be updated. However, a lower T means more time spent on background updating, and some noise may drift into the update. The background reference image is the first frame of the sequence, regardless of whether objects entered the field of view before capture.

Sequences with different indoor conditions, including the MPEG-4 test sequence Hall Monitor and the ATON project test sequence intelligent room from http://cvrr.ucsd.edu/aton/shadow, have been used to test the developed algorithm. Some results are given in Figure 3. The results obtained by the developed approach in Figure 3 are quite good, considering that the contrast between the object and the background is very low.

To test the proposed approach further in outdoor conditions, sequences with different outdoor conditions, including campus and highway from the ATON project, are used.
The choice of such different scenes is made to emphasize the reliability and robustness of the proposed approach in outdoor circumstances. Some results are shown in Figure 4. The results in Figure 4 show the robustness of the proposed algorithm in coping with different outdoor circumstances.

The above results give qualitative information about the effectiveness of the developed approach. It is also necessary to evaluate the performance of the method quantitatively against a ground truth.

The main goal of the proposed method is not the accurate detection or discrimination of shadow pixels, but the improvement of moving object detection, because accurate object detection is crucial for further applications. The performance of moving object detection is measured in terms of the correct segmentation rate (csr) and the false segmentation rate (fsr) as:

2, 2 TPGTFP FN csr fsr TP GTGT (6)

where TP (true positive) is the number of correctly detected foreground pixels, GT (ground-truth) is the ground truth for the foreground, FP (false positive) is the number of background pixels falsely marked as foreground, and FN (false negative) is the number of foreground pixels falsely classified as background. With these measures, a fully correct segmentation gives a csr of 100%, and total agreement with the ground truth gives an fsr of 0%.

The sequence intelligent room and its ground-truth data are available from http://cvrr.ucsd.edu/aton/shadow. In our work, two recently proposed methods, the invariant color features (ICF) proposed in [17] and the ratio map (RM) developed in [22], are quantitatively evaluated together with the proposed method, and the results are shown in Figure 5 for comparison. The symbols in the legend of Figure 5 refer to the object extraction results of ICF [17], RM [22], and the proposed method. The mean values of csr corresponding to the plots of Figure 5 are: RM 0.84, ICF 0.86, proposed 0.98.
The mean values of fsr are: RM 3.42, ICF 4.11, proposed 1.61. The proposed method outperforms the investigated methods, with the best object detection over time.

Figure 5. Comparison of video object segmentation rate for the test sequence intelligent room

6. Conclusions

This paper has presented an approach to adaptive motion segmentation and shadow suppression. Ghosts are detected and removed by the developed background update function, which prevents undesired corruption of the background model and does not need the adaptation coefficient or learning rate used in the literature. By comparison, it has been shown that the proposed method outperforms the investigated methods and is robust and efficient in detecting moving objects under different indoor and outdoor circumstances.

7. Acknowledgements

This work is supported in part by the National Natural Science Foundation of China (Grant No. 60872117).

REFERENCES

[1] W. Zhang, X. Z. Fang, X. K. Yang, and Q. M. J. Wu, “Moving cast shadows detection using ratio edge,” IEEE Transactions on Multimedia, Vol. 9, No. 6, pp. 1202-1214, September 2007.
[2] C. Benedek and T. Sziranyi, “Study on color space selection for detecting cast shadows in video surveillance,” International Journal of Imaging Systems and Technology, Vol. 17, No. 3, pp. 190-201, October 2007.
[3] T. Horprasert, D. Harwood, and L. S. Davis, “A statistical approach for real-time robust background subtraction and shadow detection,” Proceedings of IEEE International Conference on Computer Vision Frame-Rate Workshop, pp. 1-19, September 1999.
[4] I. Haritaoglu, D. Harwood, and L. S. Davis, “W4: real time surveillance of people and their activities,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 22, No. 8, pp. 809-830, August 2000.
[5] J. Heikkila and O. Silven, “A real-time system for monitoring of cyclists and pedestrians,” Proceedings of 2nd IEEE Workshop on Visual Surveillance, pp. 74-81, July 1999.
[6] R. Cucchiara, C. Grana, M. Piccardi, A. Prati, and S. Sirotti, “Improving shadow suppression in moving object detection with HSV color information,” Proceedings of IEEE International Conference on Intelligent Transportation Systems, pp. 334-339, August 2001.
[7] P. Spagnolo, T. D'Orazio, M. Leo, and A. Distante, “Moving object segmentation by background subtraction and temporal analysis,” Image and Vision Computing, Vol. 24, No. 5, pp. 411-423, May 2006.
[8] C. Wren, A. Azarbayejani, T. Darrell, and A. Pentland, “Pfinder: Real-time tracking of the human body,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 19, No. 7, pp. 780-785, July 1997.
[9] C. Stauffer and W. E. L. Grimson, “Adaptive background mixture models for real-time tracking,” Proceedings of Computer Vision and Pattern Recognition, Vol. 2, pp. 246-252, June 1999.
[10] Z. Zivkovic and F. van der Heijden, “Efficient adaptive density estimation per image pixel for the task of background subtraction,” Pattern Recognition Letters, Vol. 27, No. 7, pp. 773-780, May 2006.
[11] A. Elgammal, D. Harwood, and L. S. Davis, “Nonparametric model for background subtraction,” Proceedings of 6th European Conference on Computer Vision, Vol. 2, pp. 751-767, June 2000.
[12] K. Kim, T. H. Chalidabhongse, D. Harwood, and L. Davis, “Real-time foreground-background segmentation using codebook model,” Real-Time Imaging, Vol. 11, No. 3, pp. 172-185, June 2005.
[13] J. Stander, R. Mech, and J. Ostermann, “Detection of moving cast shadows for object segmentation,” IEEE Transactions on Multimedia, Vol. 1, No. 1, pp. 65-76, March 1999.
[14] I. Mikic, P. C. Cosman, G. T. Kogut, and M. M. Trivedi, “Moving shadow and object detection in traffic scenes,” Proceedings of International Conference on Pattern Recognition, Vol. 1, pp. 321-324, September 2000.
[15] A. Prati, I. Mikic, M. M. Trivedi, and R. Cucchiara, “Detecting moving shadows: Formulation, algorithms and evaluation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 25, No. 7, pp. 918-924, July 2003.
[16] S. Tattersall and K. Dawson-Howe, “Adaptive shadow identification through automatic parameter estimation in video sequences,” Proceedings of Irish Machine Vision and Image Processing, pp. 57-64, September 2003.
[17] E. Salvador, A. Cavallaro, and T. Ebrahimi, “Cast shadow segmentation using invariant color features,” Computer Vision and Image Understanding, Vol. 95, No. 2, pp. 238-259, August 2004.
[18] E. A. Khan and E. Reinhard, “Evaluation of color spaces for edge classification in outdoor scenes,” Proceedings of International Conference on Image Processing, Vol. 3, pp. 952-955, September 2005.
[19] A. Cavallaro, E. Salvador, and T. Ebrahimi, “Detecting shadows in image sequences,” Proceedings of IEEE Conference on Visual Media Production, pp. 165-174, March 2004.
[20] M. Kampel, H. Wildenauer, P. Blauensteiner, and A. Hanbury, “Improved motion segmentation based on shadow detection,” Electronic Letters on Computer Vision and Image Analysis, Vol. 6, No. 3, pp. 1-12, December 2007.
[21] M. Heikkila and M. Pietikainen, “A texture-based method for modeling the background and detecting moving objects,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 28, No. 4, pp. 657-662, April 2006.
[22] P. Spagnolo, T. D'Orazio, M. Leo, and A. Distante, “Advances in shadow removing for motion detection algorithms,” Proceedings of 2nd International Conference on Vision Video Graphics, pp. 69-75, July 2005.
[23] Y. P. Guan, “Wavelet multi-scale transform based foreground segmentation and shadow elimination,” The Open Signal Processing Journal, Vol. 1, No. 6, pp. 1-6, November 2008.