Advances in Internet of Things

Vol. 3 No. 2A (2013) , Article ID: 33321 , 11 pages DOI:10.4236/ait.2013.32A005

Learning Based Falling Detection Using Multiple Doppler Sensors

Graduate School of Science and Technology, Keio University, Yokohama, Japan

Email: tomii@ohtsuki.ics.keio.ac.jp, ohtsuki@ics.keio.ac.jp

Copyright © 2013 Shoichiro Tomii, Tomoaki Ohtsuki. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Received March 9, 2013; revised April 10, 2013; accepted April 17, 2013

Keywords: Falling Detection; Doppler Sensor; Cepstrum Analysis; SVM; k-NN

ABSTRACT

Automated falling detection is an important task in an ageing society. Such systems should interfere as little as possible with daily life. Doppler sensors have come to the fore as useful devices for detecting human activity without wearable sensors. The conventional Doppler-sensor-based falling detection mechanism uses the features of only one sensor. This paper presents falling detection using multiple Doppler sensors. The data from the sensors are combined or selected to detect the falling event. The combination method, using three sensors, achieves 95.5% accuracy of falling detection. Moreover, this method compensates for the drawback of a single Doppler sensor, which has difficulty detecting movement orthogonal to its irradiation direction.

1. Introduction

These days, the elderly population has been growing thanks to advances in the medical field. A healthy, safe, and secure life is particularly important for the elderly. However, we face the problem of an increasing old-age dependency ratio. The old-age dependency ratio is the ratio of the population aged 65 years or over to the population aged 20 - 64. It is presented as the number of dependents per 100 persons of working age (20 - 64). According to estimates of the United Nations, this ratio is projected to reach 30% in 2020 for about 30 countries [1]. In particular, it is expected to reach 52% in Japan. There is an urgent need to develop automated health care systems that detect accidents involving the elderly.

Falling detection is one of the most important tasks for protecting the elderly from serious accidents. Yu [2] and Hijaz et al. [3] classified falling detection systems into three groups: the wearable device approach, the ambient sensor approach, and the camera approach. Wearable devices are easy to set up and operate. They can be attached to the chest, waist, armpit, and back [4]. The shortcomings of these devices are that they are easily broken and that they are intrusive. Furthermore, people become more forgetful as they age. Therefore, no matter how sophisticated the algorithm implemented on a wearable device is, it is useless if the user forgets to wear the device. On the other hand, ambient sensors such as pressure and acoustic sensors can also be used. These sensors are cheap and non-intrusive. Moreover, they are not prone to privacy issues. However, pressure sensors cannot discern whether the pressure comes from the user's weight, while acoustic sensors show a high false alarm rate in loud environments [5]. Cameras enable remote visual verification, and multiple persons can be monitored using a single setup. However, cameras are prohibited in private spaces such as the bath and restroom. Even in the living room, many people do not want to be monitored by cameras.

A Doppler sensor is an inexpensive, palm-sized device. It is capable of detecting moving targets such as humans. Using this sensor, we can construct passive, non-intrusive, and noise tolerant systems. Activity recognition using Doppler sensors has been actively studied recently. Kim et al. proposed classification of seven different activities based on the micro-Doppler signature characterized by periodic and active motion of the arms and legs [7]. Subjects perform the activities toward the sensor. An accuracy above 90% is achieved by using a support vector machine (SVM). Tivive et al. [8] classified three types of motion: free arm-motion, partial arm-motion, and no arm-motion. Based on arm-motion, they describe the human activity status. Liu et al. [9] show automatic falling detection. They use two sensors, which are positioned 1.8 m and 3.7 m away from the point of falling. The data of each sensor are processed independently. Subjects perform forward, backward, left-side, and right-side falls. The fall directions include between the two sensors, toward a sensor, and away from a sensor.

A Doppler sensor is sensitive to objects moving along its irradiation direction, but less sensitive to movements orthogonal to that direction. For the practical use of Doppler sensors, we propose falling detection using multiple Doppler sensors to alleviate this dependency on the moving direction. By using sensors that have different irradiation directions, each sensor complements the less sensitive directions of the other sensors. Sensor data are processed by a feature combination method or a feature selection method. In the combination method, the features of multiple sensors are simply combined. In the selection method, the sensor is selected based on the power spectral density of a particular bandwidth, which characterizes the falling activity. After either method, the features are classified by using SVM or k-nearest neighbors (k-NN). We evaluate both methods in terms of the number of features, the number of sensors, and the type of classifier. We also discuss the accuracy for each activity direction and the viability of these methods for practical use.

The remainder of this paper is organized as follows. In Section 2, we introduce the basic Doppler sensor system and how the target velocity is determined from the Doppler shift. In Section 3, we explain the flow of the proposed falling detection algorithm using multiple Doppler sensors. In Section 4, we explain the sensor setup of the proposed method and the types of tested activities. Our methods are evaluated by comparing them to the one sensor method. We discuss the accuracy of falling detection for each activity direction, and the viability of the proposed feature combination and selection methods for practical use. In Section 5, we draw conclusions.

2. Doppler Sensor

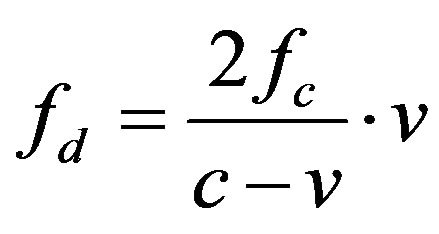

In this section, we give the basic information about the Doppler sensor. A Doppler sensor transmits a continuous wave and receives the reflected wave, whose frequency is shifted by the moving object. The Doppler shift is defined as

$$f_d = \frac{2 v f_c}{c - v} \tag{1}$$

where $v$ is the target velocity, $c$ is the light velocity, and $f_c$ is the carrier frequency. In Equation (1), since $c \gg v$, the target velocity is represented as

$$v = \frac{c\, f_d}{2 f_c} \tag{2}$$

fc and c are the given values. Only by observing the Doppler shift fd, we can determine the target velocity v.
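As a quick numerical illustration, the following minimal sketch converts between Doppler shift and target velocity, assuming the usual approximation for c much larger than v, that is v = c·fd / (2·fc). The function names and the constant `C_LIGHT` are hypothetical and not part of the original work; the 24 GHz carrier and the 120 Hz and 240 Hz shifts are the values used later in the paper.

```python
C_LIGHT = 299_792_458.0  # speed of light in m/s


def target_velocity(fd, fc, c=C_LIGHT):
    """Velocity (m/s) for a Doppler shift fd (Hz), assuming c >> v."""
    return c * fd / (2.0 * fc)


def doppler_shift(v, fc, c=C_LIGHT):
    """Inverse of target_velocity under the same c >> v approximation."""
    return 2.0 * v * fc / c


fc = 24e9  # 24 GHz carrier frequency
for fd in (120.0, 240.0):
    print(f"fd = {fd:5.1f} Hz -> v = {target_velocity(fd, fc):.3f} m/s")
```

Under these assumptions, the 120 - 240 Hz band corresponds to radial velocities of roughly 0.75 to 1.5 m/s.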

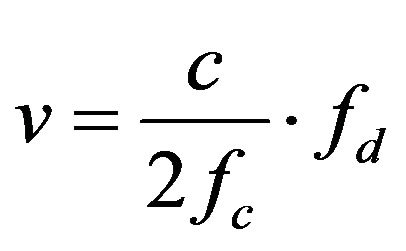

3. Falling Detection Algorithm Using Multiple Doppler Sensors

In this section, we describe the proposed falling detection algorithm using multiple Doppler sensors. Figure 1 depicts the algorithm. Our approach involves four phases: 1) Decision of extraction time range, 2) Feature extraction, 3) Feature combination/selection, 4) Training and classification.

3.1. Decision of Extraction Time Range

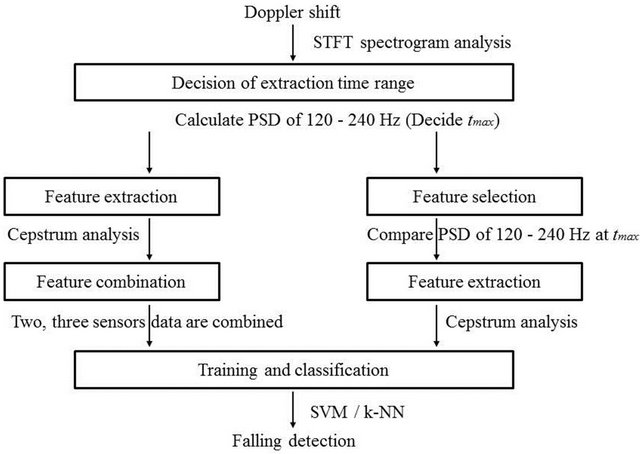

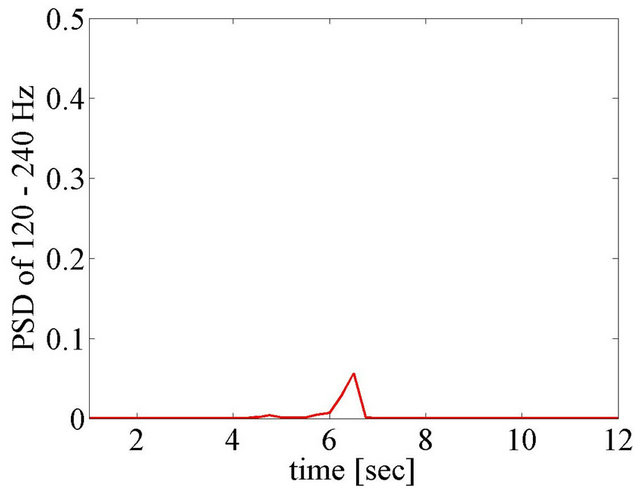

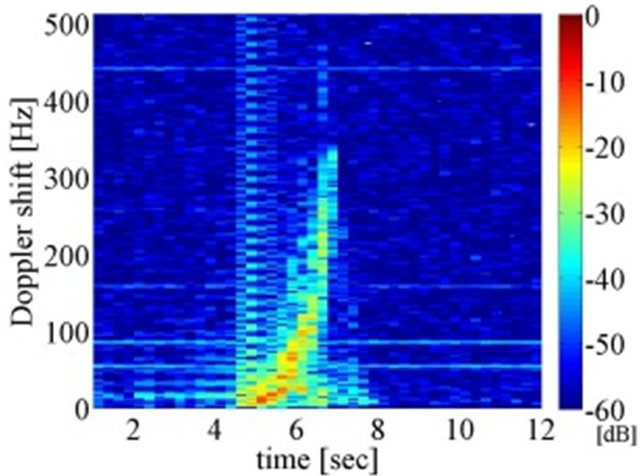

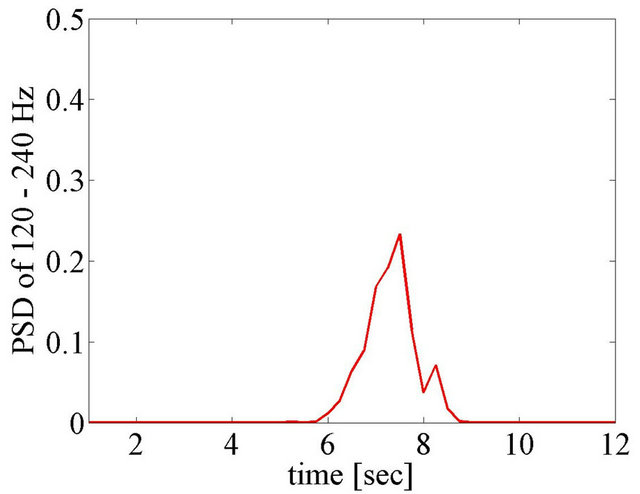

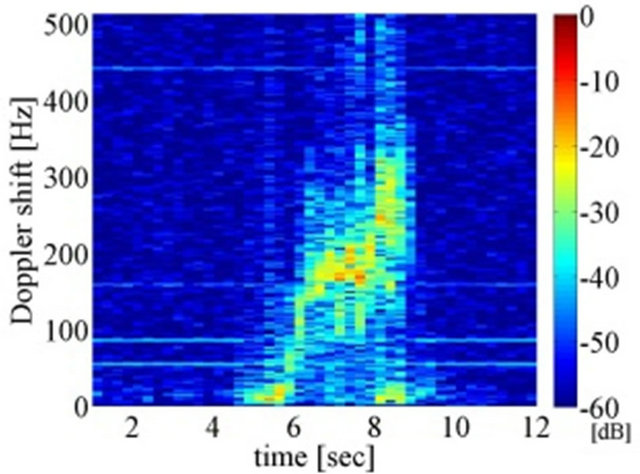

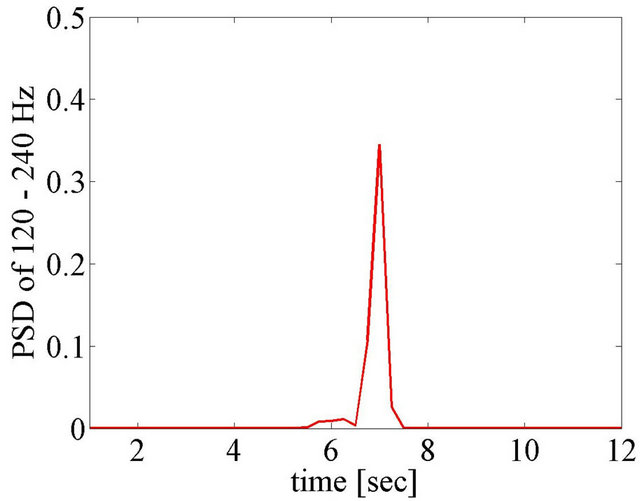

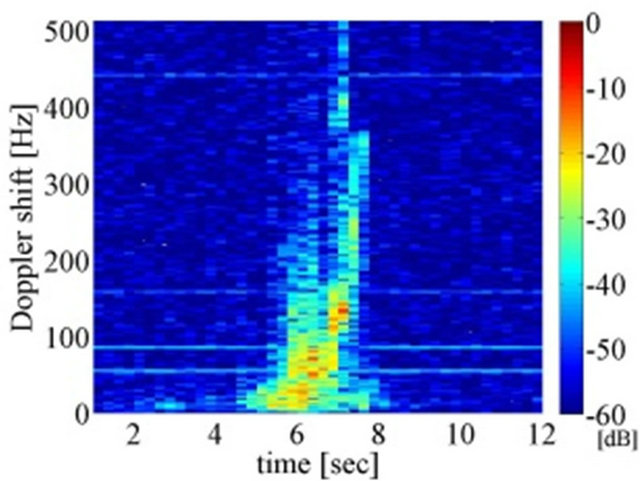

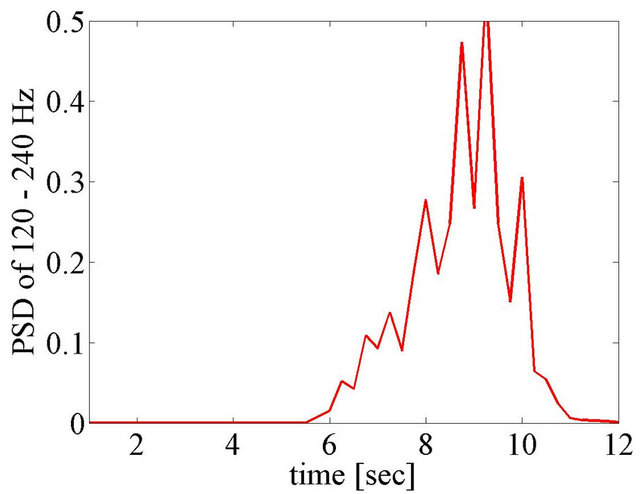

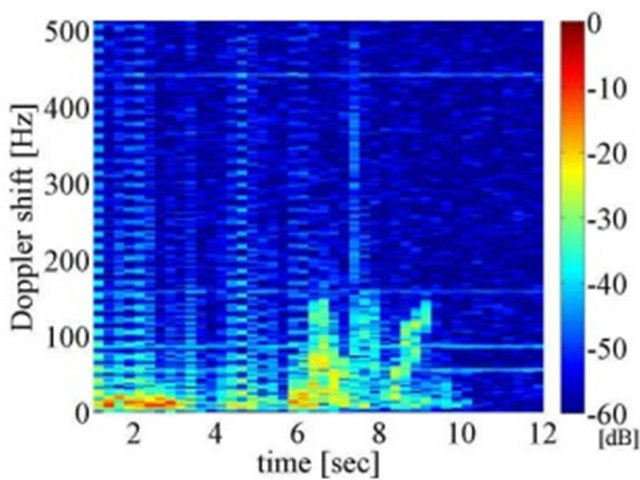

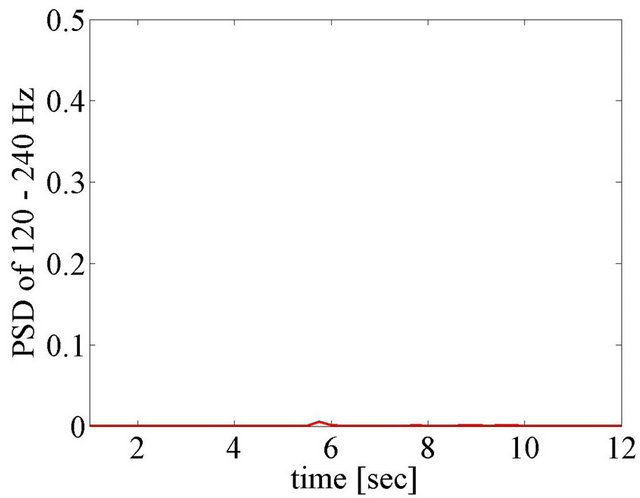

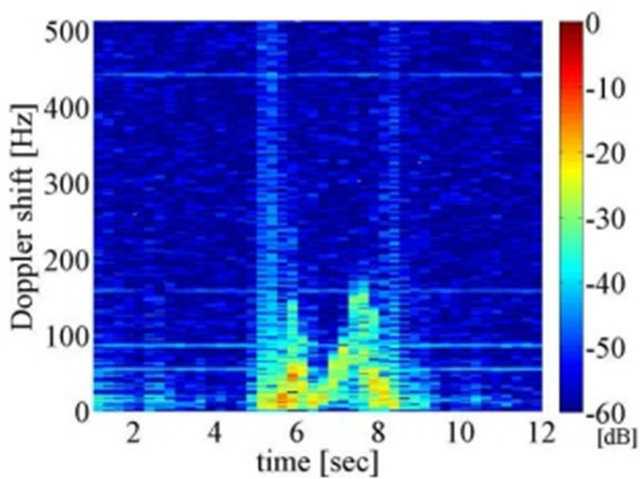

This process decides the timing for extracting 4 second features from the voltage data of the sensors. First, we compute the spectrogram by using the short time Fourier transform (STFT). It is reported that 25 - 50 Hz bandwidth features are suitable to distinguish falling from non-falling when the carrier frequency is 5 GHz [9]. As shown in Equation (2), for the same target velocity the Doppler shift is proportional to the carrier frequency. Our experiment uses a 24 GHz carrier frequency, so the bandwidth should be scaled by a factor of 4.8, i.e. to 120 - 240 Hz. On each time bin, which is determined by the number of discrete Fourier transform (DFT) points and the window overlap, we calculate the power spectral density (PSD) of 120 - 240 Hz. tmax, the time at which the PSD of 120 - 240 Hz becomes maximum within the 12 second experiment duration, indicates the time at which a remarkable event happens. A remarkable event is an activity involving a sudden quick movement of the whole body. We take the 4 second voltage data centered at tmax, and then extract features. Figures 2 and 3 show the STFT spectrogram and the PSD of 120 - 240 Hz for the falling and non-falling activities, respectively. Subjects perform each activity at about the 7 second mark.
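The search for tmax can be sketched as follows. This is a minimal illustration rather than the authors' MATLAB implementation: it uses only the Python standard library, sums the squared DFT magnitudes in the 120 - 240 Hz bins as a stand-in for the PSD, and assumes a Hann window with 50% overlap (`hop=128`), none of which are specified in the paper. The function names and the synthetic test signal are hypothetical.

```python
import cmath
import math

FS = 1024          # sampling frequency in Hz (from the paper)
N_DFT = 256        # DFT points per window (from the paper)
BAND = (120, 240)  # Hz, band used to locate the remarkable event


def band_power(frame, fs=FS, band=BAND):
    """Sum of |X[k]|^2 over the DFT bins inside `band` (proportional to PSD)."""
    n = len(frame)
    # Hann window (an assumption; the paper does not name the window type).
    w = [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]
    k_lo = math.ceil(band[0] * n / fs)
    k_hi = math.floor(band[1] * n / fs)
    total = 0.0
    for k in range(k_lo, k_hi + 1):
        x_k = sum(w[i] * frame[i] * cmath.exp(-2j * math.pi * k * i / n)
                  for i in range(n))
        total += abs(x_k) ** 2
    return total


def find_tmax(signal, fs=FS, n_dft=N_DFT, hop=128):
    """Center time (s) of the window with the largest in-band power."""
    best_t, best_p = 0.0, -1.0
    for start in range(0, len(signal) - n_dft + 1, hop):
        p = band_power(signal[start:start + n_dft], fs)
        if p > best_p:
            best_t, best_p = (start + n_dft / 2) / fs, p
    return best_t


def extract_window(signal, t_max, fs=FS, duration=4.0):
    """Samples of the `duration`-second segment centered at t_max (clamped)."""
    n = int(duration * fs)
    start = int(round(t_max * fs)) - n // 2
    start = max(0, min(start, len(signal) - n))
    return signal[start:start + n]


# Synthetic 12 s recording: weak 10 Hz background plus a 180 Hz burst near 7 s.
signal = [
    0.1 * math.sin(2 * math.pi * 10 * i / FS)
    + (math.sin(2 * math.pi * 180 * i / FS) if 6.5 <= i / FS < 7.5 else 0.0)
    for i in range(12 * FS)
]
t_max = find_tmax(signal)
segment = extract_window(signal, t_max)
print(t_max, len(segment))
```

With real sensor data, `signal` would be the voltage samples of one sensor, and `segment` would be passed on to feature extraction.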

Figure 1. The proposed falling detection.

(a) Standing - Falling

(b) Walking - Falling

(c) Standing up - Falling

Figure 2. Spectrogram (left) and PSD of 120 Hz - 240 Hz (right) of Falling.

3.2. Feature Extraction

Using the 4 second voltage data centered at tmax, we compute cepstral coefficients. Mel-frequency cepstral coefficients (MFCC) are applied in [9]. Mel-frequency is the scale definition that emphasizes lower frequency 0 - 1000 Hz and compresses higher frequency. MFCC is basically applied to the analysis of voice up to about 16 kHz. On sensing falling motion, we found empirically that up to 500 Hz is enough to observe human activities on condition of 24 GHz carrier frequency. To compute MFCC, 0 - 1000 Hz frequency band is divided into linearly spaced blocks, which are called filter banks. Sampling frequency is 1024 Hz so that there is almost no process to compress higher frequency. Strictly speaking, instead of MFCC, cepstral coefficients analysis is applied. To calculate cepstral coefficients, we use the Auditory Toolbox [10]. The method is as follows.

1) Divide amplitude spectrogram into 13 linearly spaced filter banks.

2) Compute fast Fourier transform (FFT) of amplitude spectrum of each filter bank.

3) Compute discrete cosine transform (DCT) of the obtained data above. The result is called cepstrum.

4) Use the C1-C6 coefficients. C0 is the direct-current component, and C7-C12 come from the latter half of 0 - 1024 Hz, which is not of interest for observing human activity.

Cepstral coefficient features are computed for each set of 256 DFT points which is called window. The window update frequency is defined as frame rate. As the frame rate becomes higher, the number of features increases.
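The per-window feature computation can be sketched as below. This is a simplified stand-in for the Auditory Toolbox routine, not a port of it: it assumes rectangular (not triangular) filter banks spread evenly over the available spectrum, a base-10 logarithm of each bank's summed magnitude, and a plain DCT-II. The function names, the hop computation `fs // frame_rate`, and the random test segment are all hypothetical.

```python
import cmath
import math
import random

FS = 1024    # sampling frequency in Hz (from the paper)
N_DFT = 256  # samples per window (from the paper)


def magnitude_spectrum(frame):
    """Magnitude of the DFT for the non-negative frequency bins 0 .. n/2 - 1."""
    n = len(frame)
    return [
        abs(sum(frame[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n)))
        for k in range(n // 2)
    ]


def cepstral_coefficients(frame, n_banks=13, first=1, last=6):
    """C_first .. C_last of one window, using linearly spaced filter banks."""
    spec = magnitude_spectrum(frame)
    # Linearly spaced rectangular banks (simplifying assumption).
    edges = [round(b * len(spec) / n_banks) for b in range(n_banks + 1)]
    log_energy = [math.log10(sum(spec[lo:hi]) + 1e-12)
                  for lo, hi in zip(edges, edges[1:])]
    # DCT-II of the log filter-bank outputs gives the cepstrum.
    ceps = [
        sum(e * math.cos(math.pi * q * (m + 0.5) / n_banks)
            for m, e in enumerate(log_energy))
        for q in range(n_banks)
    ]
    return ceps[first:last + 1]


def extract_features(segment, frame_rate, fs=FS, n_dft=N_DFT):
    """Concatenate C1-C6 of every window; the hop is fs / frame_rate samples."""
    hop = fs // frame_rate
    features = []
    for start in range(0, len(segment) - n_dft + 1, hop):
        features.extend(cepstral_coefficients(segment[start:start + n_dft]))
    return features


rng = random.Random(0)
segment = [rng.uniform(-1.0, 1.0) for _ in range(4 * FS)]  # stand-in for 4 s of data
features = extract_features(segment, frame_rate=4)
print(len(features))  # 16 windows x 6 coefficients
```

Because C0 is dropped, the remaining coefficients in this sketch do not change when the whole window is scaled by a constant, which is one reason to exclude the direct-current term. Doubling `frame_rate` roughly doubles the length of the feature vector.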

3.3. Feature Combination/Selection

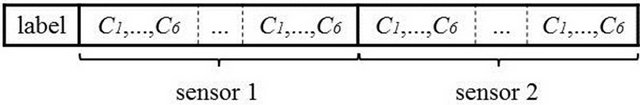

In our proposal, at most three sensors are used. We employ two methods to construct features from multiple Doppler sensors: a combination method and a selection method. In the combination method, the cepstral coefficients of the sensors are simply concatenated. Figure 4(a) shows an example of the feature structure using two sensors, where “label” represents the type of activity. In the selection method, the PSD of 120 - 240 Hz at tmax is compared among the sensors before computing cepstral coefficients.

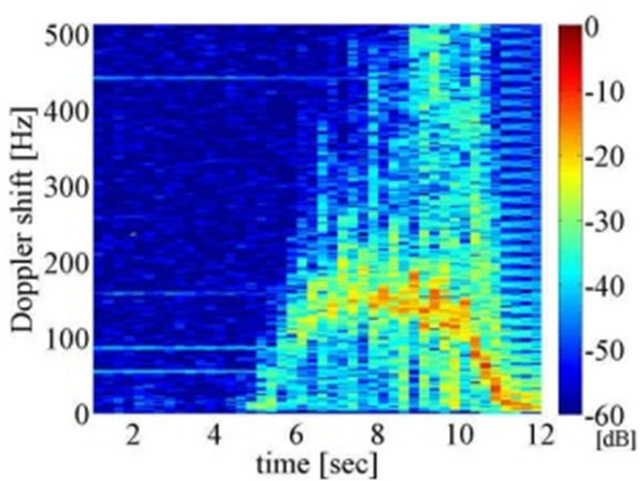

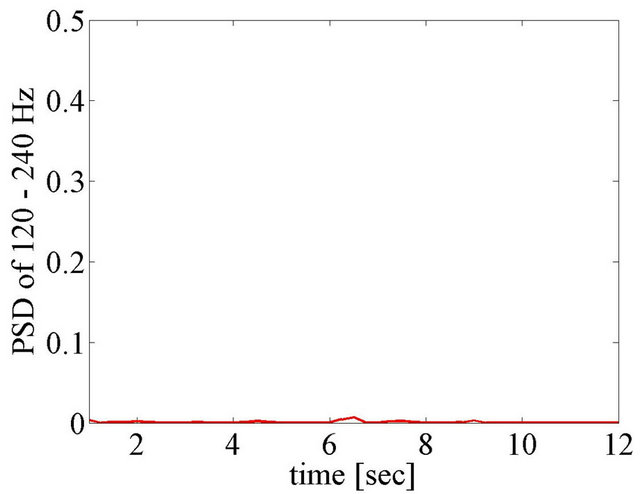

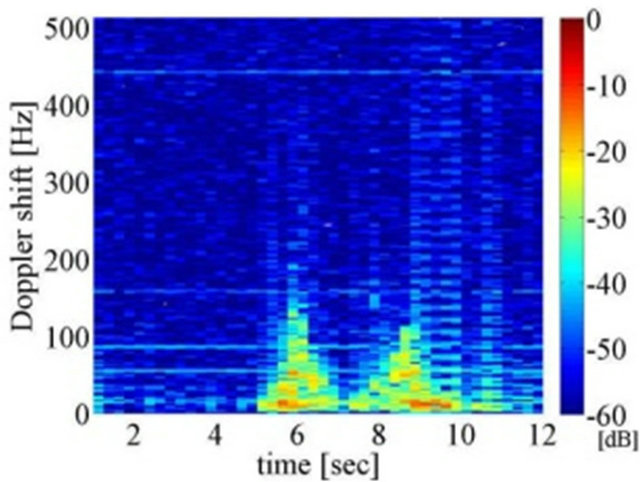

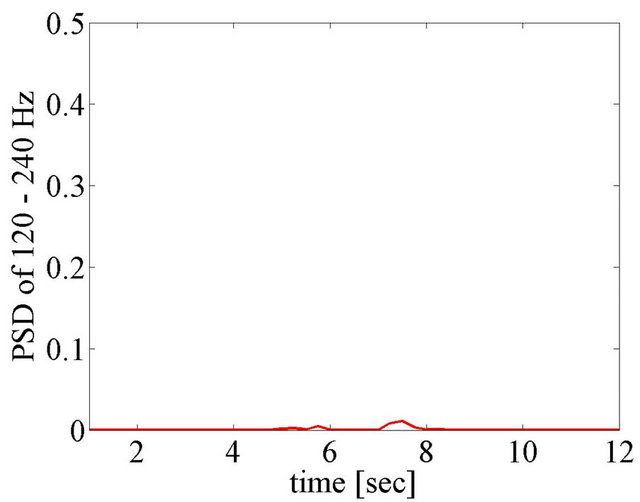

(a) Walking

(b) Standing – Lying down

(c) Picking up

(d) Sitting on a chair

Figure 3. Spectrogram (left) and PSD of 120 Hz - 240 Hz (right) of Non-Falling.

The sensor that has the largest PSD of 120 - 240 Hz at tmax is selected for feature extraction. The selected sensor is assumed to catch human motion better than the other sensors.
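The difference between the two methods can be illustrated with a minimal sketch. The function names and the numeric values are hypothetical; in practice each feature list would hold the cepstral coefficients of one sensor, and each PSD value would be that sensor's 120 - 240 Hz PSD at tmax.

```python
def combine_features(per_sensor_features):
    """Combination method: concatenate the feature vectors of all sensors."""
    combined = []
    for feats in per_sensor_features:
        combined.extend(feats)
    return combined


def select_sensor(band_psd_at_tmax):
    """Selection method: index of the sensor with the largest in-band PSD."""
    return max(range(len(band_psd_at_tmax)), key=lambda i: band_psd_at_tmax[i])


# Hypothetical values for three sensors.
features = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
psd = [0.2, 3.1, 0.9]

print(combine_features(features))     # one long vector built from all sensors
print(features[select_sensor(psd)])   # features of the single selected sensor
```

The combined vector grows with the number of sensors, whereas the selected vector keeps the size of a single sensor's features.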

3.4. Training and Classification

To train and classify the features, we use SVM and k-NN. For SVM classification in MATLAB, LIBSVM [11] is available. An SVM uses a kernel function that determines the boundaries between groups. LIBSVM supports linear, polynomial, radial basis function (RBF), and sigmoid kernels. We use the RBF kernel: a linear kernel is a special case of RBF [12], and the sigmoid kernel behaves like RBF for some parameters [13]. The polynomial kernel has numerical difficulties [14], so RBF is the most suitable kernel in general. The kernel has several parameters, which should be tuned by varying each of them. When we classify by using k-NN, the Euclidean distance between the features is used.
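For the k-NN side, a minimal sketch of majority voting with Euclidean distance is shown below. It is not the authors' implementation: the function name, the toy two-dimensional data, and the tie-breaking rule (ties go to the label whose nearest neighbor appears first) are assumptions.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=4):
    """Label chosen by majority vote among the k nearest training vectors."""
    # Sort training samples by Euclidean distance to the query.
    neighbors = sorted(
        (math.dist(x, query), y) for x, y in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in neighbors[:k])
    # most_common keeps first-seen order for equal counts, so ties favor
    # the label of the nearer neighbor (an assumption of this sketch).
    return votes.most_common(1)[0][0]


# Toy feature vectors (hypothetical, two features per sample).
train_x = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
train_y = ["non-falling"] * 3 + ["falling"] * 3

print(knn_predict(train_x, train_y, [0.2, 0.1], k=4))
print(knn_predict(train_x, train_y, [5.4, 5.2], k=4))
```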

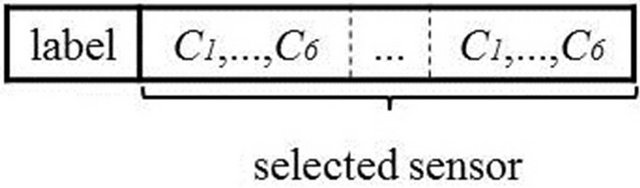

We use four persons (A, B, C, D), who are men in their 20s and 30s, as training and test subjects as shown in Table 1, and apply cross validation. This process generalizes the results of SVM and k-NN. In addition, the features are normalized to prevent features with larger values from having a stronger effect on the results than the others.
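One common way to realize these two steps is min-max scaling fitted on the training data, combined with subject-wise splits. The sketch below assumes both; the paper does not state the normalization formula, and the actual training and testing patterns are those of Table 1, which may differ from the leave-one-subject-out splits generated here. All function names are hypothetical.

```python
def fit_min_max(rows):
    """Per-feature minimum and maximum over the training rows."""
    cols = list(zip(*rows))
    return [min(c) for c in cols], [max(c) for c in cols]


def apply_min_max(rows, lo, hi):
    """Scale each feature to [0, 1] using training statistics."""
    return [
        [(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]
        for row in rows
    ]


def leave_one_subject_out(subjects):
    """Yield (training subjects, test subjects) pairs, one per held-out subject."""
    for held_out in subjects:
        yield [s for s in subjects if s != held_out], [held_out]


train = [[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]]
lo, hi = fit_min_max(train)
print(apply_min_max(train, lo, hi))

for train_subjects, test_subjects in leave_one_subject_out(["A", "B", "C", "D"]):
    print(train_subjects, test_subjects)
```

Fitting the scaling on the training subjects only keeps information about the test subject out of the normalization.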

4. Performance Evaluation

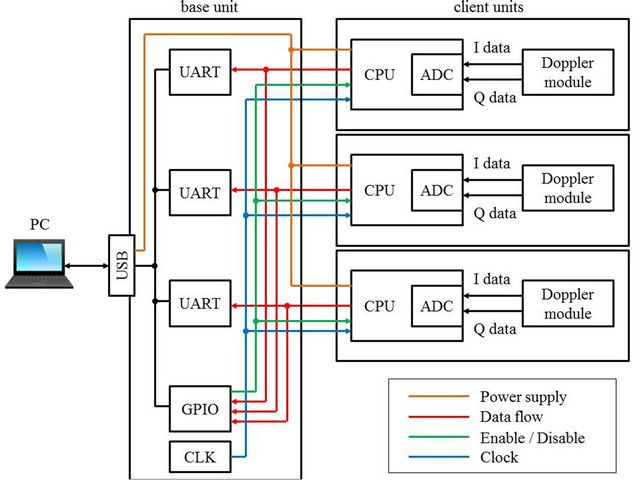

Figure 5 shows the components of the multiple Doppler sensor system: client units, a base unit, and a PC. In each client unit, the Doppler module receives the reflected microwave and the CPU (MSP430F2618, Texas Instruments) outputs the data to the base unit. The base unit is connected to each client unit by a LAN cable. The collected data of each Doppler sensor are sent to the PC through a USB port and processed in MATLAB.

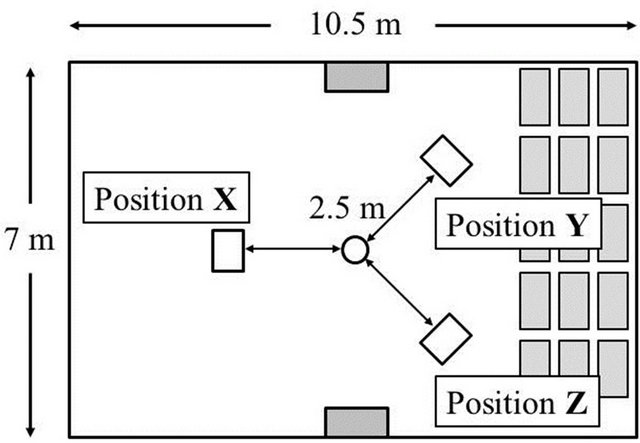

Figure 6 shows how the sensors are deployed in the proposed methods. The room is rectangular, with a longer side of 10.5 m and a shorter side of 7 m. In the middle of each longer side, there is a pillar. A dozen desks are placed in the rear. The angle between positions X and Y is 135˚, and that between positions Y and Z is 90˚. We use three sensors that transmit a continuous wave in the 24 GHz frequency band. Each sensor uses a slightly different transmit frequency to prevent interference among the sensors. The sampling frequency is 1024 Hz. The sensors are placed 1 m above the floor as shown in Figure 7, because the signal reflected from the torso is stronger than that from any other part of the human body, and because reflection from the floor is not negligible if the sensors are deployed too close to the floor.

(a) Feature combination method

(b) Feature selection method

Figure 4. Feature structure. Ci is the ith cepstral coefficient.

Table 1. Training and testing subject patterns.

Figure 5. The diagram of sensing system of multiple Doppler sensors.

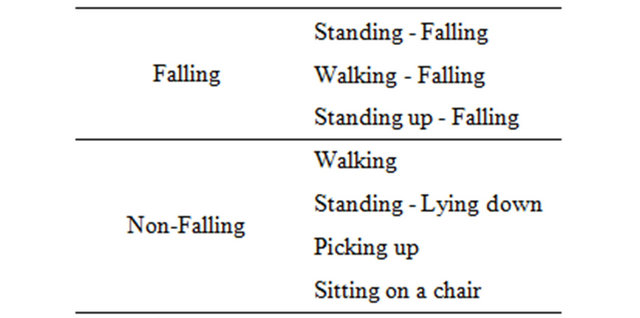

For the evaluation of falling detection, subjects performed the seven activities listed in Table 2. The activities are roughly divided into two categories, “Falling” and “Non-Falling.” Falling includes the following three activities.

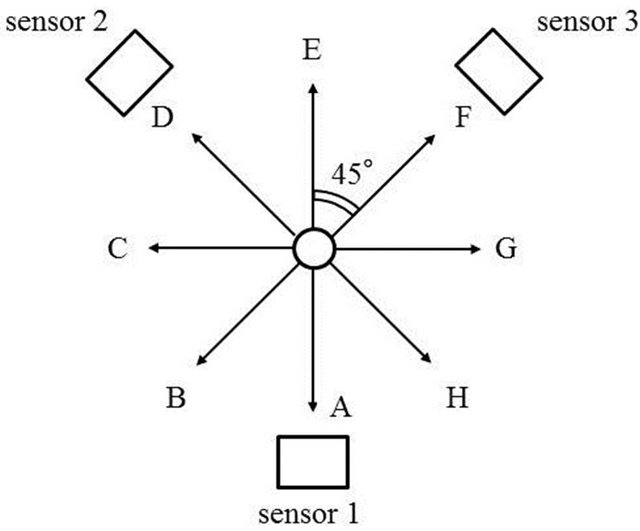

● Standing-Falling: Keep standing for a few seconds, then fall down toward each direction at the center, shown as a circle in Figure 8.

● Walking-Falling: Walk from a distance of 2.5 m from the center, then fall down at the center.

● Standing up-Falling: Stand up, then fall down toward each direction at the center. This simulates lightheadedness.

Non-Falling includes the following four activities.

● Walking: Walk from a distance of 2.5 m from the center, across the center, toward each activity direction, for a total of 5 m.

Figure 6. The deployment of multiple Doppler sensors.

Figure 7. The image of a Doppler sensor.

Table 2. Falling and Non-Falling activity.

Figure 8. Deployment of multiple sensors.

● Standing-Lying down: Keep standing for a few seconds, then lie down on the floor toward each direction.

● Picking up: Pick up a pen from the floor. The pen is placed about 30 cm from the center toward the activity direction.

● Sitting on a chair: The back of the chair faces the activity direction.

These seven activities are tested in eight directions (A-H) as shown in Figure 8.

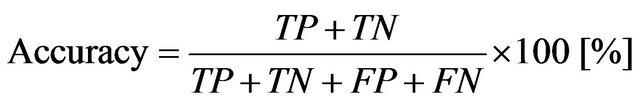

The accuracy of falling detection is defined as

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{3}$$

Each variable has the following meaning.

● TP (True Positive): Subject acts falling, and classified as falling.

● TN (True Negative): Subject acts non-falling, and classified as non-falling.

● FP (False Positive): Subject acts non-falling, and classified as falling.

● FN (False Negative): Subject acts falling, and classified as non-falling.
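As an illustration of these four counts, the sketch below tallies them from lists of actual and predicted labels and computes the accuracy, assuming the usual definition (TP + TN) / (TP + TN + FP + FN). The function names, label strings, and example labels are hypothetical.

```python
def confusion_counts(actual, predicted, positive="falling"):
    """Return (TP, TN, FP, FN), treating `positive` as the falling class."""
    tp = tn = fp = fn = 0
    for a, p in zip(actual, predicted):
        if a == positive and p == positive:
            tp += 1  # acted falling, classified as falling
        elif a != positive and p != positive:
            tn += 1  # acted non-falling, classified as non-falling
        elif a != positive and p == positive:
            fp += 1  # acted non-falling, classified as falling
        else:
            fn += 1  # acted falling, classified as non-falling
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """Fraction of trials that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


actual = ["falling", "falling", "non-falling", "non-falling", "falling"]
predicted = ["falling", "non-falling", "non-falling", "falling", "falling"]
counts = confusion_counts(actual, predicted)
print(counts, accuracy(*counts))
```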

4.1. Frame Rate

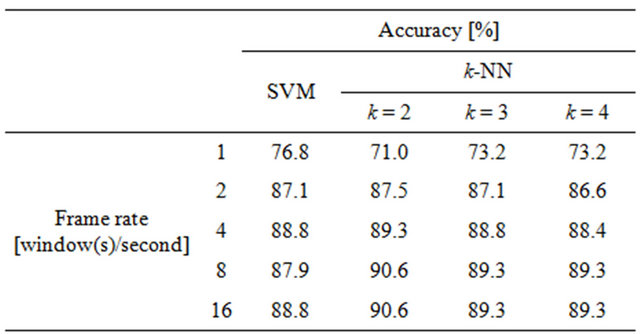

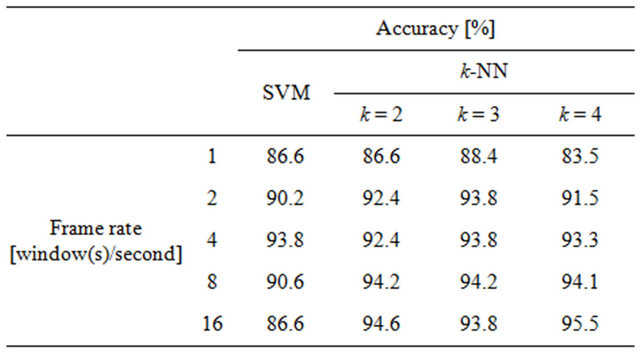

Frame rate is the number of window updates per second. The higher the frame rate becomes, the larger the number of features becomes. Tables 3-5 show the relation between frame rate and accuracy of falling detection. The results of one sensor method and the combination and selection methods using three sensors are shown for comparison.

When we choose k-NN as a classifier, the accuracy increases until frame rate reaches 8 windows/second. When frame rate is higher than 16 windows/second, the degree of increase in accuracy becomes moderate or stable for all methods.

Referring to the results using k-NN, we set the frame rate to 16 windows/second. We note that the frame rate should not be too high because a higher frame rate increases the computation load. On the other hand, a low frame rate, which means a lack of features, causes low accuracy because the k-NN classifier generally suffers from high variance in the case of limited sampling [15].

When SVM is chosen as the classifier, the best accuracy of falling detection occurs when the frame rate is 4 windows/second in all the methods. Unlike the case of k-NN, the accuracy does not increase monotonically as the frame rate increases. SVM can classify features that are not linearly separable [13], so it is generally thought to be able to separate complicated features. This result indicates that the optimum number of features should be found when SVM is applied. Based on these results, we use the optimum frame rate of 4 windows/second for SVM.

Table 3. Relation between frame rate and accuracy of falling detection (one sensor method).

Table 4. Relation between frame rate and accuracy of falling detection (combination method, three sensors).

Table 5. Relation between frame rate and accuracy of falling detection (selection method, three sensors).

4.2. One Sensor Method

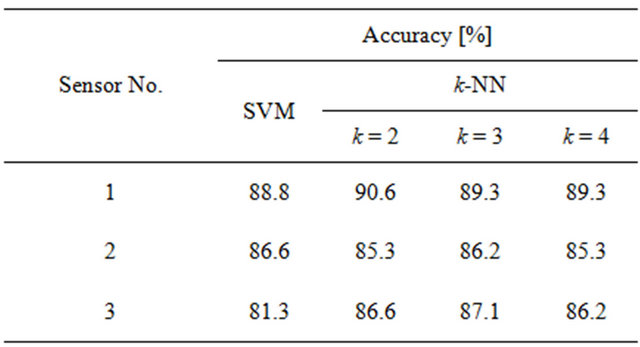

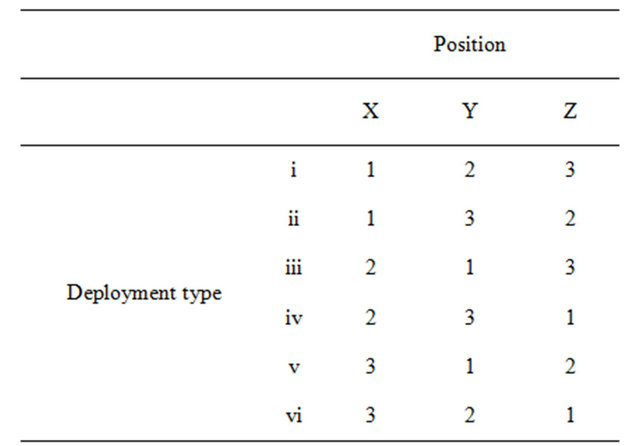

Table 6 shows accuracy of falling detection using one sensor. The result of each sensor is classified by SVM and k-NN. The best accuracy of 90.6 % is achieved on sensor 1 using k-NN (k = 2). As shown in Figure 6, there are three positions, X, Y, and Z. We use three sensors, No. 1, 2, and 3. Table 7 shows the position of each sensor in six deployments. The result shown in Table 6 is for deployment type i. There are differences in accuracy based on sensor No.

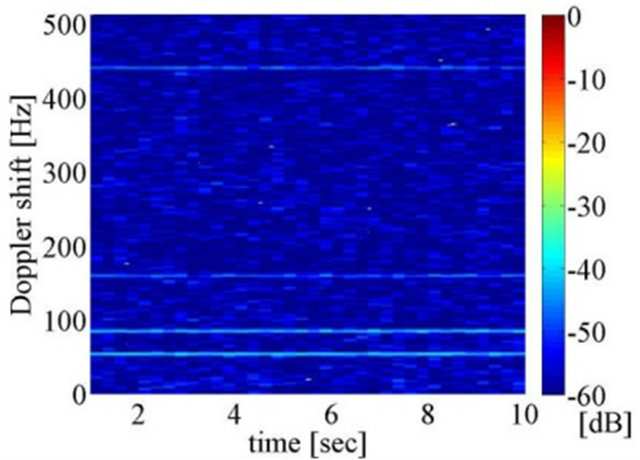

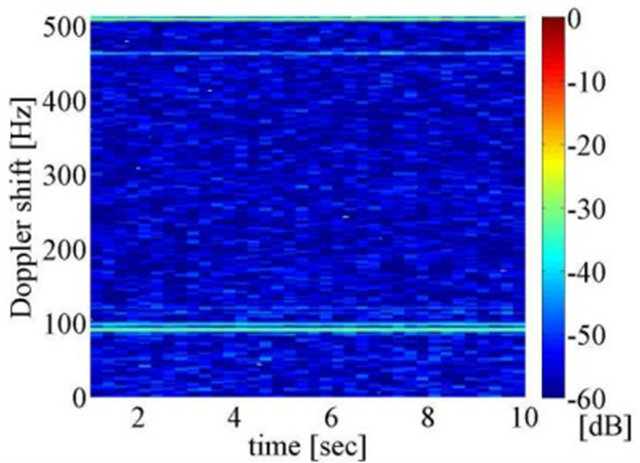

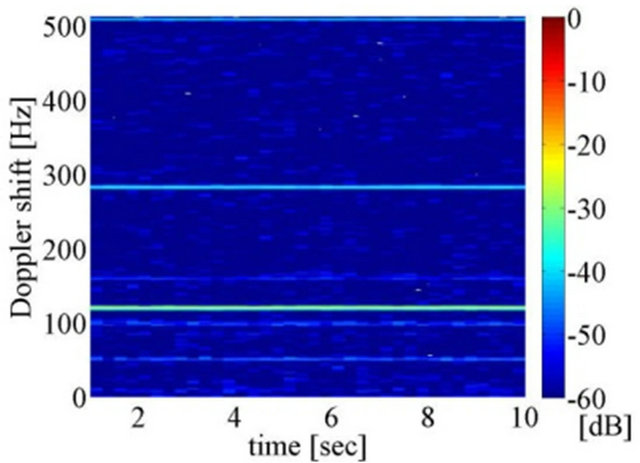

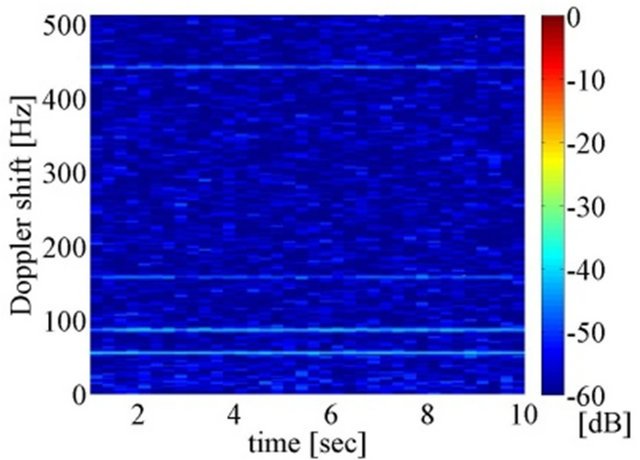

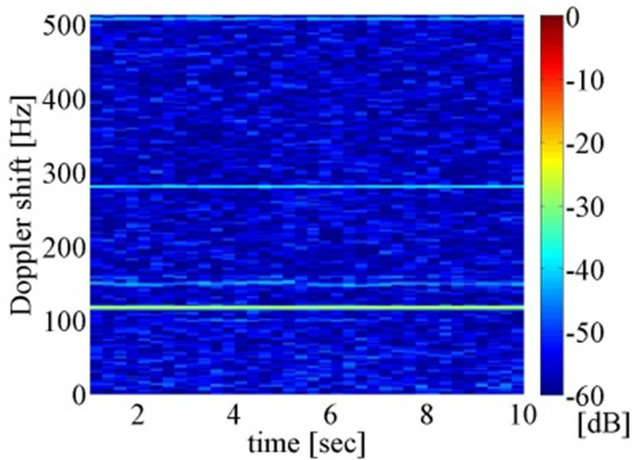

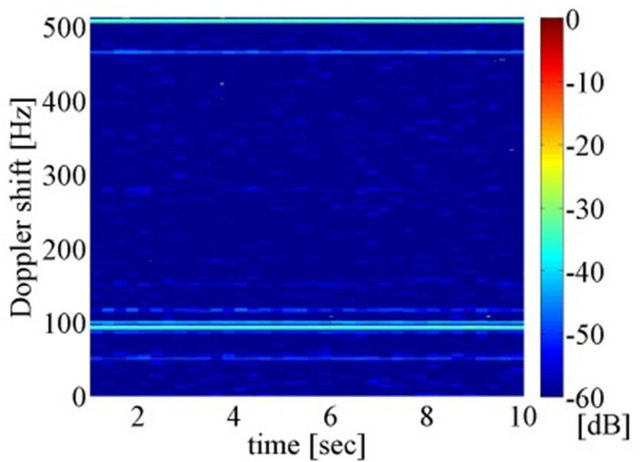

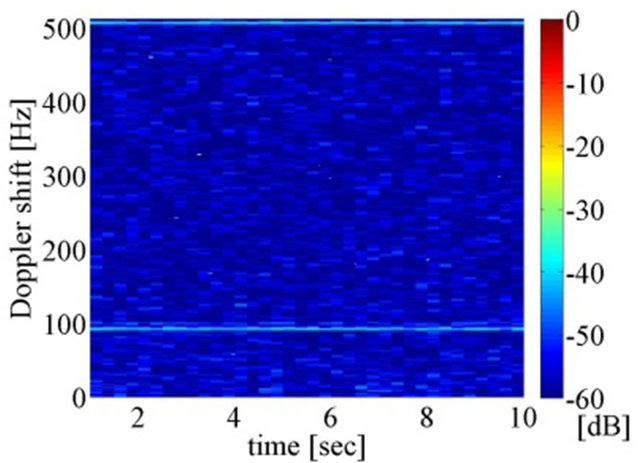

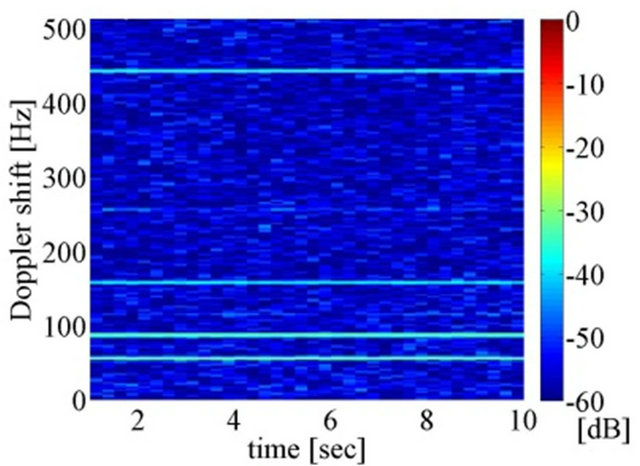

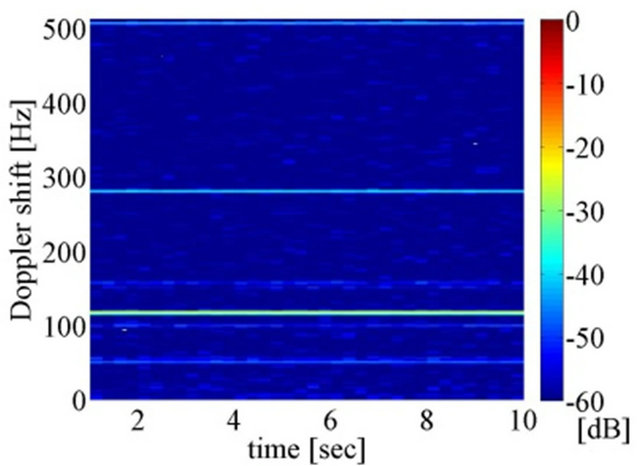

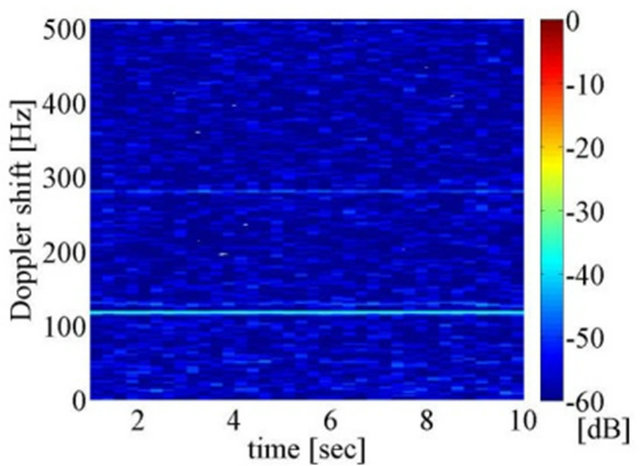

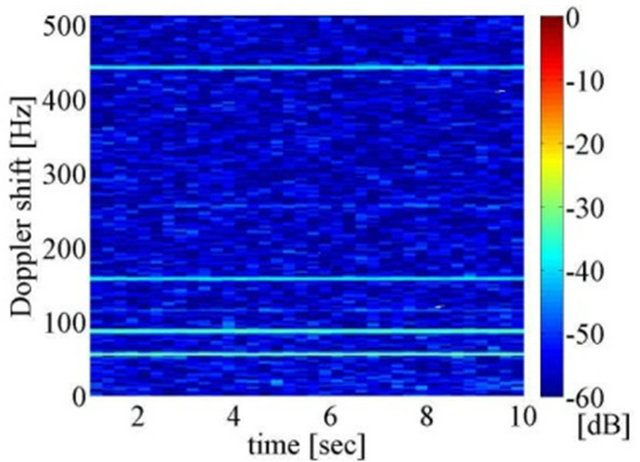

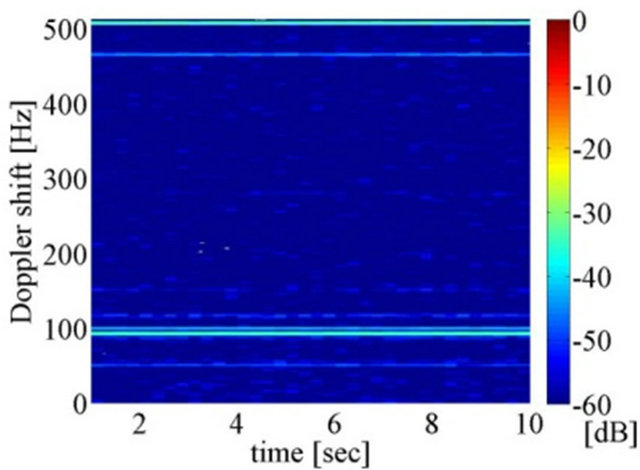

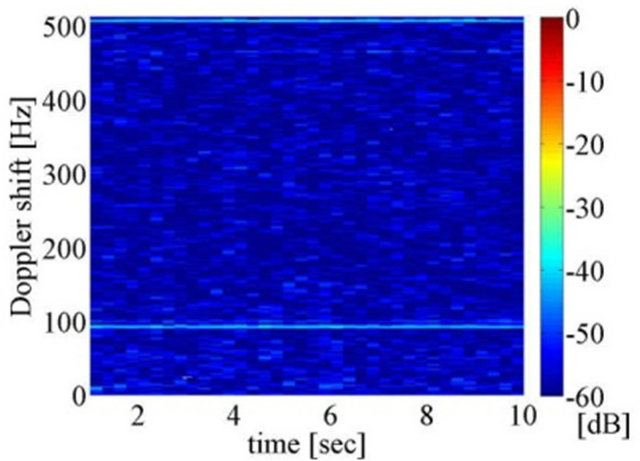

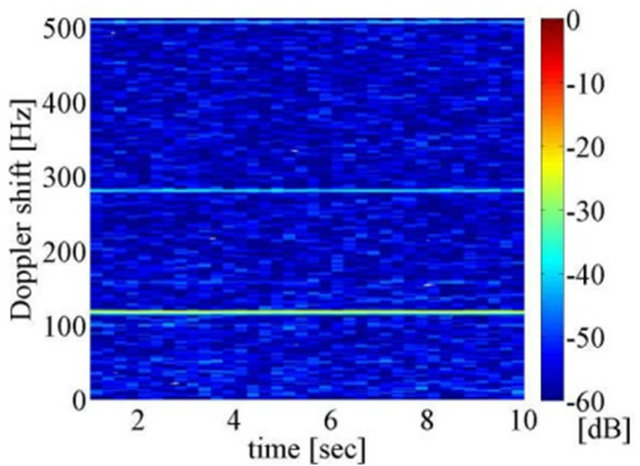

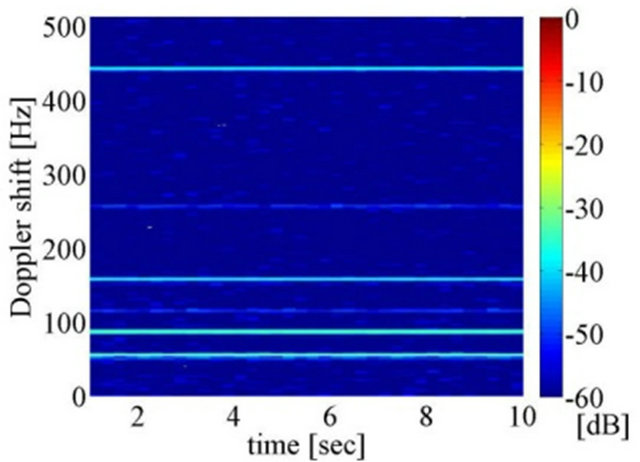

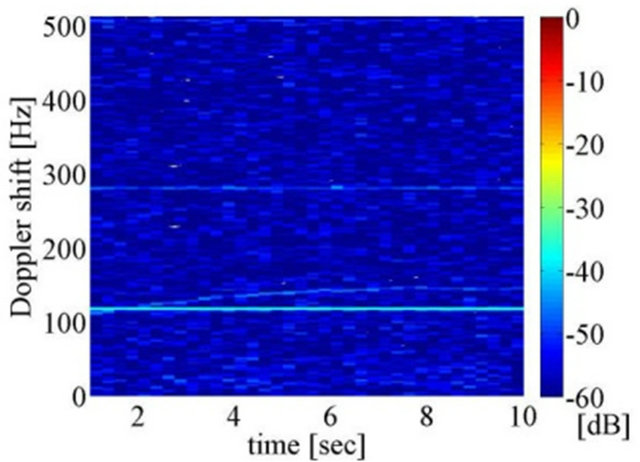

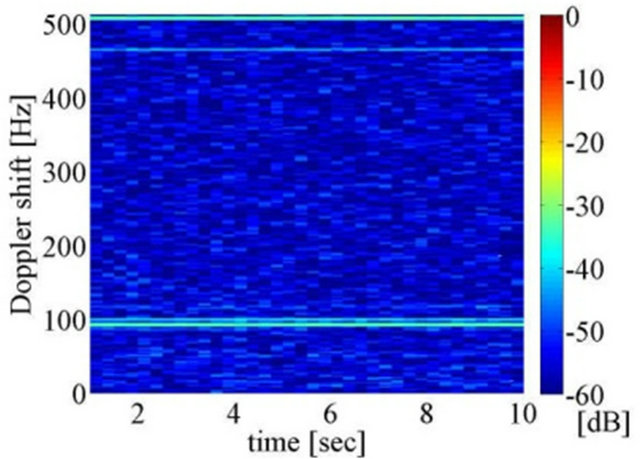

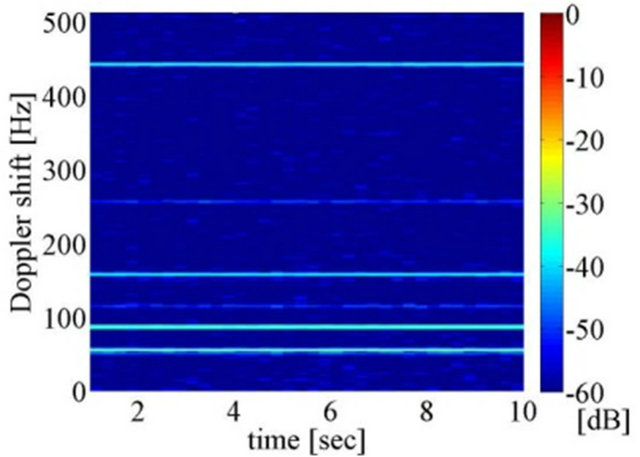

Figures 9-14 show the spectrograms when no subject is moving. The color shows the strength of the PSD in dB. In principle, the PSD in these spectrograms should not be concentrated at any particular Doppler shift. However, several spectrograms show a strong PSD at specific Doppler shifts, such as for deployment type ii, position Y (the middle of Figure 10), at 100 Hz, or deployment type iv, position X (the left of Figure 12), at 60, 90, 160, and 440 Hz. To find the cause of this strong PSD, six different types of deployment are tested. When comparing the six types of deployment, the strong PSD occurs at similar Doppler shifts for each position. For instance, in position Y, a strong PSD appears at a 100 Hz Doppler shift regardless of the deployment type. Accordingly, the strong PSD is caused not by device impairments but by the environment.

Table 6. Accuracy of falling detection using one sensor.

Table 7. Relation between position and sensor No.

Figure 9. Spectrogram when no subject is moving in deployment type i. (left: Pos. X, middle: Pos. Y, right: Pos. Z).

Figure 10. Spectrogram when no subject is moving in deployment type ii. (left: Pos. X, middle: Pos. Y, right: Pos. Z).

Figure 11. Spectrogram when no subject is moving in deployment type iii. (left: Pos. X, middle: Pos. Y, right: Pos. Z).

Figure 12. Spectrogram when no subject is moving in deployment type iv. (left: Pos. X, middle: Pos. Y, right: Pos. Z).

Figure 13. Spectrogram when no subject is moving in deployment type v. (left: Pos. X, middle: Pos. Y, right: Pos. Z).

In [6,7], a similar constant strong PSD at a particular Doppler shift is reported when the Doppler sensor is used through a wall. It appears only at a 60 Hz Doppler shift, namely the strong PSD is caused by the alternating current (AC). The transmitted microwave is attenuated through the wall, and the strong PSD becomes non-negligible. On the other hand, the strong PSD in our experiment appears at several Doppler shifts. This means that it is not caused by the AC component. We consider that the strong PSD comes from reflection on the wall.

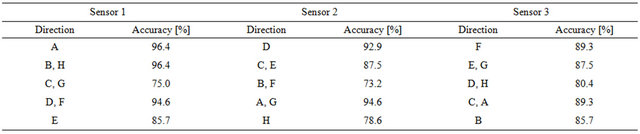

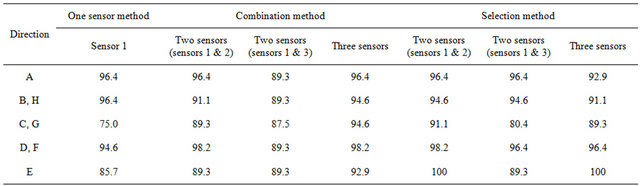

Table 8 shows the accuracy of falling detection for each activity direction. Directions A-H correspond to the eight directions in Figure 8. Within the same row of Table 8, the relative position as seen from each sensor is the same. For instance, direction A relative to sensor 1 is the same as direction D relative to sensor 2. Regardless of the sensor No., the accuracy decreases in the directions orthogonal to the irradiation direction, that is, directions C and G for sensor 1, directions B and F for sensor 2, and directions D and H for sensor 3. This comes from the characteristic that a Doppler sensor captures activity along its irradiation direction. The direction away from the sensor also shows low accuracy. We consider that the environmental noise, which comes from reflection on the wall, is not negligible in this case: when the subject moves away from the sensor, the strength of the microwave reflected from the body decreases.

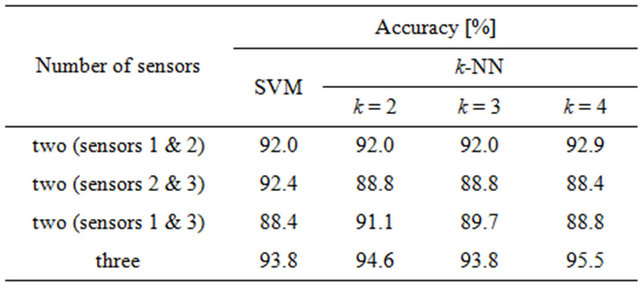

4.3. Feature Combination Method

Table 9 shows the accuracy of falling detection using the combination method. We test with two or three sensors. With two sensors, three sensor combinations are tested. As with the one sensor method in Table 6, the accuracy depends on the positions in which the sensors are set. For instance, when sensors 2 and 3, or 1 and 3, are used, the accuracy of falling detection is about 88%. On the other hand, when sensors 1 and 2 are used, an accuracy of 92.9% is achieved using k-NN (k = 4).

By using three sensors, 95.5% accuracy is achieved, which is 4.9 percentage points higher than the best accuracy of the one sensor method. In the combination method, three sensors are appropriate for stable accuracy of falling detection.

Table 11 shows the relation between activity direction and accuracy of falling detection. We use k-NN (k = 4) as a classifier and deployment type is i in Table 7. As seen from sensor 1, B and H, C and G, D and F, are the same directions relative to the sensor.

Figure 14. Spectrogram when no subject is moving in deployment type vi. (left: Pos. X, middle: Pos. Y, right: Pos. Z).

Table 8. Relation between activity directions and accuracy of falling detection (one sensor method).

Table 9. Accuracy of falling detection using the combination method.

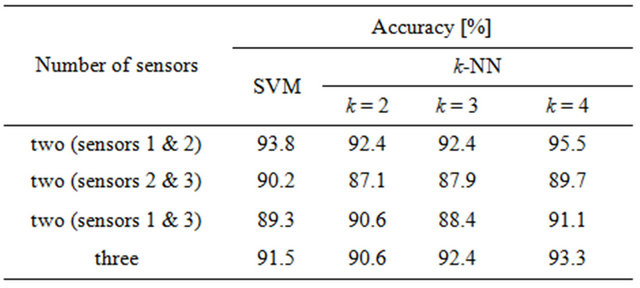

Table 10. Accuracy of falling detection using the selection method.

The accuracy of directions C and G in the one sensor method is 75.0%. This is 21.4 percentage points lower than that of direction A, in which the subject acts toward the sensor. In the combination method using three sensors, the accuracy of directions C and G is 94.6%. This result indicates that the combination method compensates for the drawback of the Doppler sensor. When using two sensors, the accuracy of directions C and G is improved compared to that of the one sensor method. However, the accuracy depends on the deployment. Thus, in our experiment, three sensors are needed for high accuracy of falling detection and less dependency on deployment.

4.4. Feature Selection Method

Table 10 shows the accuracy of falling detection using the selection method. The highest accuracy of falling detection is 95.5%, which occurs when two sensors (1 & 2) are used and the features are classified by k-NN. However, as in the one sensor method and the combination method, the accuracy of the selection method with two sensors varies with the sensors used. To alleviate this sensor dependency, we choose the three sensor method. Accordingly, the best accuracy of the selection method is 93.3%, which is obtained with three sensors and k-NN (k = 4).

The relation between activity direction and accuracy is shown in Table 11. Compared to the one sensor method, the accuracy in the direction orthogonal to the irradiation direction is improved. However, it is still relatively low in comparison with the other directions. This is because the algorithm selects features from only one sensor.

In the view of the robustness in the direction orthogonal to irradiation direction, the combination method outperforms the selection method. However, that may not be always the case in practical situations. Consider, for example, the case that we are using multiple sensors, and one of them is obstructed by fallen objects such as furniture or plants. Without line of sight between the target and the sensor, the obstructed sensor cannot receive the Doppler information related to the target motion. The features of the combination method are constructed using features obtained from all the sensors. Thus, the obstructed sensor produces features that are different from the training data. This means that the system that simply combines the features is not tolerant to a situation that the sensors are obstructed by some objects.

Alternatively, the selection method has an advantage in the situation that a part of the sensors is obstructed. Even if one of the multiple sensors is obstructed by some objects, the selection method excludes the data of the obstructed sensor. This is because the decision of which sensor to choose is based on the selection of the largest PSD of 120 - 240 Hz at tmax. The data of the obstructed sensor is like the data in the environment that there are no targets moving around. Thus, the PSD of 120 - 240 Hz becomes smaller than that of the sensor that is not obstructed. Therefore, the selection method is more suitable for practical use.

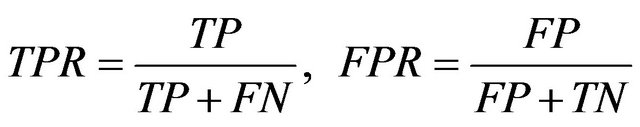

4.5. True Positive Rate and False Positive Rate

When analyzing systems of falling detection, true positive rate (TPR) and false positive rate (FPR) are often used. TPR and FPR are calculated as follows.

$$\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN} \tag{4}$$

When FN becomes 0, TPR is equal to 1. Considering that an FN is critical in a falling detection system, TPR should be near 1. On the other hand, FPR should be near 0 because an FP leads to unnecessary care. However, there is sometimes a trade-off between TPR and FPR. For practical systems, it is ideal that TPR reaches 1 and FPR reaches 0.
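These two rates, together with the miss rate 1 - TPR, can be computed as in the minimal sketch below, which assumes the standard definitions TPR = TP / (TP + FN) and FPR = FP / (FP + TN). The function names and the counts are hypothetical and are not results from the experiment.

```python
def true_positive_rate(tp, fn):
    """Fraction of actual falls that are detected."""
    return tp / (tp + fn)


def false_positive_rate(fp, tn):
    """Fraction of non-falling activities that raise a false alarm."""
    return fp / (fp + tn)


# Hypothetical confusion counts, for illustration only.
tp, fn, fp, tn = 90, 6, 4, 124

tpr = true_positive_rate(tp, fn)
fpr = false_positive_rate(fp, tn)
print(f"TPR = {tpr:.4f}, 1 - TPR = {1 - tpr:.4f}, FPR = {fpr:.4f}")
```

Both 1 - TPR and FPR are zero for an ideal detector, which makes them convenient to compare side by side.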

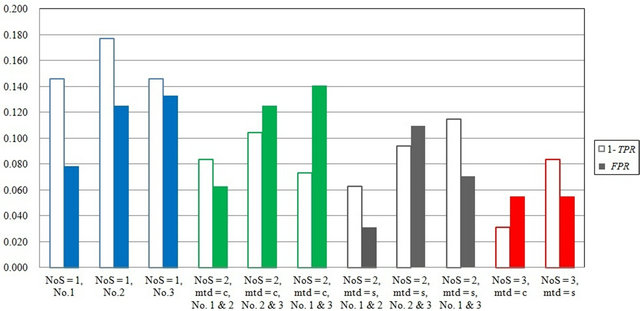

Figure 15 shows FPR and 1-TPR for each method. Both values should be near 0. The abbreviations “NoS,” “mtd = s,” and “mtd = c” in this figure mean “Number of sensors,” “method = selection,” and “method = combination,” respectively. Compared to the conventional method using individual Doppler sensor data, FPR and 1-TPR decrease in the proposed methods using multiple Doppler sensor data.

Table 11. Relation between activity directions and accuracy of falling detection.

Figure 15. Results of False positive rate and True positive rate on each method.

5. Conclusion

This paper proposes falling detection using multiple Doppler sensors. We propose the combination and selection methods to extract features. The combination method achieves 95.5% accuracy of falling detection using three sensors and k-NN (k = 4). Doppler sensors are less sensitive to movement orthogonal to the irradiation direction than to movement in the other directions. However, the combination method compensates for this drawback and shows high accuracy in each direction. The selection method achieves 93.3% accuracy using three sensors and k-NN (k = 4). This method also improves the accuracy in the direction orthogonal to the irradiation direction. However, the accuracy in that direction is still relatively low compared to the other directions. Although the selection method does not outperform the combination method in terms of robustness to the activity direction, we consider the idea of the selection method to be useful in practice: it excludes the data of a sensor that receives no echo, for example because it is accidentally obstructed by furniture or plants. Our future work is to construct a hybrid of the combination and selection methods.

REFERENCES

[1] Department of Economic and Social Affairs, “Population Division: World Population Prospects: The 2010 Revision,” United Nations, Department of Economic and Social Affairs, 2011.

[2] X. Yu, “Approaches and Principles of Fall Detection for Elderly and Patient,” Proceedings of the 10th International Conference of the IEEE HealthCom, Singapore, 7-9 July 2008, pp. 42-47.

[3] F. Hijaz, N. Afzal, T. Ahmad and O. Hasan, “Survey of Fall Detection and Daily Activity Monitoring Techniques,” Proceedings of International Conference on Information and Emerging Technologies, ICIET, Pakistan, 14-16 June 2010, pp. 1-6.

[4] N. Noury, A. Fleury, P. Rumeau, A. Bourke, G. Laighin, V. Rialle and J. Lundy, “Fall Detection-Principles and Methods,” Proceedings of the 29th Annual International Conference of the IEEE EMBS, Paris, 22-26 August 2007, pp. 1663-1666.

[5] J. Perry, S. Kellog, S. Vaidya, J. H. Youn, H. Ali and H. Sharif, “Survey and Evaluation of Real-Time Fall Detection Approaches,” Proceedings of the 6th International Symposium of HONET, Egypt, 28-30 December 2009, pp. 158-164.

[6] S. Ram, C. Christianson, Y. Kim and H. Ling, “Simulation and Analysis of Human Micro-Dopplers in Through-Wall Environments,” IEEE Transactions on Geoscience and Remote Sensing, Vol. 48, No. 4, 2010, pp. 2015-2023. doi:10.1109/TGRS.2009.2037219

[7] Y. Kim and H. Ling, “Human Activity Classification Based on Micro-Doppler Signatures Using a Support Vector Machine,” IEEE Transactions on Geoscience and Remote Sensing, Vol. 47, No. 5, 2009, pp. 1328-1337. doi:10.1109/TGRS.2009.2012849

[8] F. Tivive, A. Bouzerdoum and M. Amin, “Automatic Human Motion Classification from Doppler Spectrograms,” Proceedings of the 2nd International Workshop of CIP, Elba Island, 14-16 June 2010, pp. 237-242.

[9] L. Liu, M. Popescu, M. Skubic, M. Rantz, T. Yardibi and P. Cuddihy, “Automatic Fall Detection Based on Doppler Radar Motion Signature,” Proceedings of the 5th International Conference of Pervasive Health, Dublin, 23-26 May 2011, pp. 222-225.

[10] M. Slaney, “Auditory Toolbox Version 2.” https://engineering.purdue.edu/~malcolm/interval/1998-010/

[11] C. Chang and C. Lin, “LIBSVM: A Library for Support Vector Machines.” http://www.csie.ntu.edu.tw/~cjlin/libsvm/

[12] S. S. Keerthi and C.-J. Lin, “Asymptotic Behaviors of Support Vector Machines with Gaussian Kernel,” Neural Computation, Vol. 15, No. 7, 2003, pp. 1667-1689.

[13] C. J. Burges, “A Tutorial on Support Vector Machines for Pattern Recognition,” Data Mining and Knowledge Discovery, Vol. 2, No. 2, 1998, pp. 121-167. doi:10.1023/A:1009715923555

[14] V. N. Vapnik, “The Nature of Statistical Learning Theory,” 2nd Edition, Springer, New York, 1999.

[15] H. Zhang, A. Berg, M. Maire and J. Malik, “SVM-KNN: Discriminative Nearest Neighbor Classification for Visual Category Recognition,” Proceedings of the IEEE Conference of CVPR, New York, 17-22 June 2006, pp. 2126-2136.