Journal of Signal and Information Processing

Vol. 3, No. 1 (2012), Article ID: 17634, 4 pages. DOI: 10.4236/jsip.2012.31005

Retinal Identification System Based on the Combination of Fourier and Wavelet Transform*


Nuclear Science and Technology Research Institute, Tehran, Iran.

Email: {msabaghi, rhadian}@aeoi.org.ir, fattahi.mh2000@gmail.com, kouchaki@alum.sharif.edu, aali_zahedi@yahoo.com

Received September 30th, 2011; revised October 28th, 2011; accepted November 15th, 2011

Keywords: Component; Formatting; Style; Styling; Insert

ABSTRACT

The retinal image is a robust and accurate biometric. This article presents a new biometric identification system based on a combination of the Fourier transform with a special partitioning and the wavelet transform. In this method, the optic disc is first localized using a template matching technique, and its position is used to rotate the retinal image to a reference position. Features are defined by angular partitioning, with a special structure, of the magnitude spectrum of the retinal image, together with the wavelet transform. Finally, the Euclidean distance is used for feature matching. The proposed method was applied to a database of 400 retinal images from 40 persons, including noisy and rotated retinal images in the identification process. The proposed method achieves an identification rate of 99.1%.

1. Introduction

Biometrics is the use of distinctive biological or behavioral characteristics to identify people. Biometric systems are now used in large national and corporate security projects, and their effectiveness rests on an understanding of biometric systems and data analysis [1]. Common identification methods include voice, fingerprint, face, hand geometry, facial thermogram, iris and retina [2]. Little change in the vessel pattern during life, high security, reliability and stability are important properties of the retinal image [2,3]. These traits make the retina a robust basis for person identification. Different algorithms have been used for human identification. Shahnazi et al. [4] extracted the blood vessel pattern, then used a 2-level Daubechies wavelet decomposition and extracted the wavelet energy as a feature.

Tabatabaee et al. [5] presented an approach that localizes the optic disc using the Haar wavelet and an active contour model and uses it for rotation compensation; Fourier-Mellin transform coefficients and complex moment magnitudes of the rotated retinal image were then used for feature definition.

Farzin et al. [3] extracted the blood vessel pattern and then used vessel information around the optic disc for recognition. Ortega et al. [6] used a fuzzy circular Hough transform to localize the optic disc in the retinal image. They then defined feature vectors based on the ridge endings and bifurcations of vessels obtained from a crease model of the retinal vessels inside the optic disc. For matching, they adopted an approach similar to that of [7] to compute the parameters of the rigid transformation between feature vectors that gives the highest matching score. This algorithm is more computationally efficient than the algorithm presented in [7]. However, its performance was evaluated on a very small database of only 14 subjects.

Preprocessing based on blood vessel extraction, as used in [3] and [4], increases the computational cost of the algorithm. In this paper, a new robust feature extraction method without any preprocessing phase is proposed to reduce computational time and complexity. The method uses angular partitioning, with a new special structure, of the magnitude spectrum of the retinal image, together with the wavelet transform, for feature extraction.

This article is organized in six sections. Section 2 describes the anatomy of the retina. Section 3 describes localization of the optic disc and alignment of the retinal image to a reference position. Section 4 presents the Fourier transform coefficients and the wavelet transform used to obtain the feature vector. Section 5 presents the experimental results of the proposed method, and Section 6 gives the conclusion.

2. Retinal Anatomy

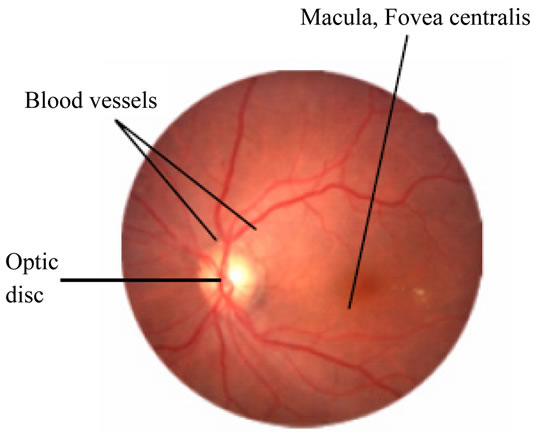

The retina is a multi-layered sensory tissue that lines the back of the eye. It contains millions of photoreceptors that capture light rays and convert them into electrical impulses. These impulses travel along the optic nerve to the brain, where they are turned into images. The optic disc is brighter than other parts of the retina, is normally circular in shape and has a diameter of almost 3 mm. It is also the entry and exit point for nerves entering and leaving the retina to and from the brain. The fovea, or "yellow spot", is a very small area at the center of the retina that is most sensitive to light and is responsible for sharp central vision [5] (Figure 1).

3. Compensation of Undesired Rotation

Because of anatomical movement during the imaging process, retinal images may be rotated. These rotations cause problems in the feature extraction and matching phases of retinal image recognition, so rotation compensation is needed to achieve a robust method. To determine the rotation angle of the retinal image, the optic disc is first localized by a template matching technique [3]. For this purpose, the green plane of the retinal image is used together with a template image, constructed by selecting a rectangular region around the optic disc. The retinal image is correlated with the template image to find the brightest region of the retina, as shown in Figure 2. The position of the correlation peak approximates the center of the optic disc.
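The correlation step can be sketched in plain Python as follows. This is a minimal illustration, not the authors' MATLAB code: it assumes the green plane and the template are given as 2D lists of intensities and uses plain (un-normalized) cross-correlation over fully overlapping positions, which naturally favors bright regions. The function names `correlate_template` and `locate_optic_disc` are hypothetical.

```python
def correlate_template(image, template):
    """Slide `template` over `image` (2D lists, e.g. the green plane) and
    return the raw cross-correlation score at every fully overlapping
    position (a "valid" correlation with no padding)."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    scores = []
    for r in range(ih - th + 1):
        row = []
        for c in range(iw - tw + 1):
            s = 0.0
            for i in range(th):
                for j in range(tw):
                    s += image[r + i][c + j] * template[i][j]
            row.append(s)
        scores.append(row)
    return scores


def locate_optic_disc(green, template):
    """Return (row, col) of the template center at the correlation peak,
    taken as the approximate optic-disc center."""
    scores = correlate_template(green, template)
    best_r, best_c = max(
        ((r, c) for r in range(len(scores)) for c in range(len(scores[0]))),
        key=lambda rc: scores[rc[0]][rc[1]],
    )
    # Convert the top-left corner of the best window to the window center.
    return best_r + len(template) // 2, best_c + len(template[0]) // 2
```

Normalized cross-correlation would be a natural alternative when illumination varies strongly between images; the text does not state which variant was used.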

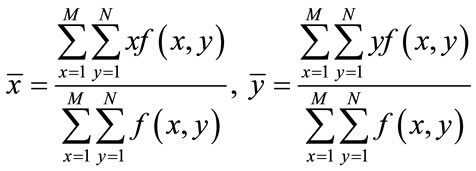

In the second step, the center of the optic disc and the image center of mass are used to determine the required rotation angle, and the undesired rotation of the scanned retinal image is then compensated by applying the opposite rotation. The center of mass of an M × N image is located using the following equations:

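$$\bar{x}=\frac{\sum_{x=0}^{M-1}\sum_{y=0}^{N-1} x\,f(x,y)}{\sum_{x=0}^{M-1}\sum_{y=0}^{N-1} f(x,y)},\qquad \bar{y}=\frac{\sum_{x=0}^{M-1}\sum_{y=0}^{N-1} y\,f(x,y)}{\sum_{x=0}^{M-1}\sum_{y=0}^{N-1} f(x,y)} \tag{1}$$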
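Equation (1) translates directly into code. The sketch below assumes the intensity-weighted form shown above, with `f[x][y]` indexed by row x and column y; `center_of_mass` is a hypothetical helper name.

```python
def center_of_mass(f):
    """Intensity-weighted centroid (x_bar, y_bar) of an M x N image f[x][y],
    following Eq. (1): x indexes rows (0..M-1), y indexes columns (0..N-1)."""
    total = sum(sum(row) for row in f)
    if total == 0:
        raise ValueError("image has zero total intensity")
    x_bar = sum(x * v for x, row in enumerate(f) for v in row) / total
    y_bar = sum(y * v for row in f for y, v in enumerate(row)) / total
    return x_bar, y_bar
```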

After localizing the optic disc and the center of mass, we calculate the angle between the baseline and the line passing through these two points, as shown in Figure 3.

We then compensate for the rotation by applying opposite rotation to input image.
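The angle measurement and the compensating rotation can be sketched as below. Several details are assumptions not stated in the text: the baseline is taken to be horizontal, the rotation is performed about the center of mass, and nearest-neighbour sampling with zero fill is used. The helper names and the `reference_angle` parameter are hypothetical.

```python
import math


def rotation_angle(disc, com):
    """Angle (radians) between a horizontal baseline and the line from the
    center of mass `com` to the optic-disc center `disc`, both (row, col).
    Rows grow downwards, so the row difference is negated to obtain a
    conventional counter-clockwise angle."""
    d_row = disc[0] - com[0]
    d_col = disc[1] - com[1]
    return math.atan2(-d_row, d_col)


def rotate_image(img, angle, center):
    """Rotate `img` (2D list) by `angle` radians counter-clockwise about
    `center` (row, col) using inverse mapping and nearest-neighbour
    sampling; output pixels whose source lies outside the image become 0."""
    h, w = len(img), len(img[0])
    cr, cc = center
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            # Output pixel in Cartesian coordinates (y up) relative to center.
            x, y = c - cc, cr - r
            # Inverse rotation gives the source pixel that lands here.
            xs = cos_a * x + sin_a * y
            ys = -sin_a * x + cos_a * y
            sr, sc = round(cr - ys), round(cc + xs)
            if 0 <= sr < h and 0 <= sc < w:
                out[r][c] = img[sr][sc]
    return out


def compensate_rotation(img, disc, com, reference_angle=0.0):
    """Apply the opposite rotation so that the disc/center-of-mass line
    ends up at `reference_angle` (0 means horizontal)."""
    angle = rotation_angle(disc, com)
    return rotate_image(img, reference_angle - angle, com)
```

For example, a disc found directly above the center of mass gives an angle of pi/2, and the compensation rotates the image by -pi/2 so that the disc moves onto the horizontal baseline to the right of the center.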

4. Feature Extraction

Our proposed feature extraction method is based on the Fourier transform of retinal images and the two-dimensional wavelet transform.

Figure 1. Retinal anatomy [5].


Figure 2. Template matching technique for optic disc localization: (a) Original image; (b) Template; (c) Correlated image.


Figure 3. Alignment of the retinal image to the reference position: (a) Retinal image with the localized center of mass and optic disc; (b) Obtained rotation angle; (c) Image after rotation compensation.

4.1. Fourier Transform

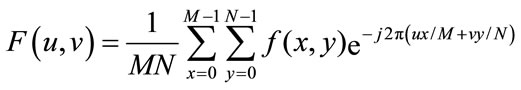

First, without any preprocessing, the Fourier transform is applied to the raw retinal images. The two-dimensional discrete Fourier transform of the input image is calculated using the following equation:

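$$F(u,v)=\frac{1}{MN}\sum_{x=0}^{M-1}\sum_{y=0}^{N-1} f(x,y)\,e^{-j2\pi\left(\frac{ux}{M}+\frac{vy}{N}\right)},\qquad u=0,\dots,M-1,\; v=0,\dots,N-1 \tag{2}$$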

where f(x,y) is the intensity of an image of size M × N, and u and v are the frequency variables [8]. The Fourier spectrum and phase angle are defined as follows:

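$$|F(u,v)|=\left[R^2(u,v)+I^2(u,v)\right]^{1/2} \tag{3}$$

$$\phi(u,v)=\tan^{-1}\left[\frac{I(u,v)}{R(u,v)}\right] \tag{4}$$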

where R(u,v) and I(u,v) are the real and imaginary parts of F(u,v), respectively. Only the amplitude information is used, so the Fourier magnitude spectrum of the retinal image is generated [alizahedi].
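For illustration, Equations (2) to (4) can be evaluated with a naive double sum. This sketch assumes the zero frequency should sit at the center of the spectrum, as the partitioning below requires, and achieves that with the standard (-1)^(x+y) pre-multiplication. The helper names are hypothetical, and a practical implementation would use an FFT routine instead of this O((MN)^2) loop.

```python
import cmath
import math


def dft2(f):
    """Naive 2D DFT following Eq. (2), including the 1/(MN) factor.
    O((MN)^2): only suitable for tiny illustrative images."""
    M, N = len(f), len(f[0])
    F = [[0j] * N for _ in range(M)]
    for u in range(M):
        for v in range(N):
            s = 0j
            for x in range(M):
                for y in range(N):
                    s += f[x][y] * cmath.exp(-2j * math.pi * (u * x / M + v * y / N))
            F[u][v] = s / (M * N)
    return F


def centered_magnitude(f):
    """Magnitude spectrum |F(u,v)| (Eq. 3) with zero frequency moved to the
    center (M/2, N/2) by multiplying f(x,y) by (-1)^(x+y) before the
    transform (exact when M and N are even)."""
    shifted = [[v * (-1) ** (x + y) for y, v in enumerate(row)] for x, row in enumerate(f)]
    return [[abs(z) for z in row] for row in dft2(shifted)]


def phase(F):
    """Phase angle phi(u,v) = atan2(I, R) (Eq. 4); not used for the features."""
    return [[math.atan2(z.imag, z.real) for z in row] for row in F]
```

Because the input image is real, the centered magnitude spectrum is point-symmetric about its center, which is what allows the lower half to be discarded in the next step.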

In the second step of feature extraction, a new partitioning is introduced: the Fourier spectrum is divided by several concentric half circles centered at the center of the spectrum into segments of equal area and equal arc angle. Pixels near the center of the spectrum are discarded, because they contain only low-frequency information, which depends on the average gray level of the image. Pixels farther than 105 pixels from the center of the spectrum likewise carry no useful information. Because the magnitude spectrum of a real image is symmetric about its center (|F(u,v)| = |F(-u,-v)|), only the upper half circle is partitioned for feature extraction, and the lower half is neglected to reduce the dimension of the feature vector. The magnitude spectrum is thus divided into N parts of equal area, called partitions, within half circles whose radii range from 5 to 105 pixels. The number of partitions N can be varied; we selected N = 24. After partitioning the spectrum of the retinal image, the energy of each partition is used to construct the feature vector [alizahedi]. The energy of each partition is defined in Equation (5):

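$$E_i=\sum_{(u,v)\in P_i}|F(u,v)|^2,\qquad i=1,\dots,N \tag{5}$$

where $P_i$ is the set of spectrum pixels in the ith partition.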

Finally, the vector is scaled to a smaller range so that retinal images can be compared on the same scale. This normalized vector is called the Fourier Energy Feature (FEF).
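The partitioning and the energy of Equation (5) can be sketched as follows. The paper does not give the exact layout of its 24 equal-area segments, so this sketch assumes a hypothetical one: 4 concentric annuli between radii 5 and 105 whose boundaries make every annulus equal in area (the squared radius grows linearly), each split into 6 equal angular sectors of the upper half plane. Scaling the vector by its sum is also an assumption standing in for the unspecified normalization; the function names are hypothetical.

```python
import bisect
import math


def partition_energies(mag, r_min=5.0, r_max=105.0, rings=4, sectors=6):
    """Energy (sum of |F|^2, Eq. 5) of each cell of a half-disc partition of
    a centered magnitude spectrum `mag` (2D list). Hypothetical layout:
    `rings` equal-area annuli, each split into `sectors` equal angular
    sectors over [0, pi), so all rings * sectors cells have equal area."""
    h, w = len(mag), len(mag[0])
    cr, cc = h // 2, w // 2
    # Equal-area ring boundaries: r_k^2 increases linearly with k.
    bounds = [math.sqrt(r_min**2 + k * (r_max**2 - r_min**2) / rings) for k in range(rings + 1)]
    energy = [0.0] * (rings * sectors)
    for r in range(h):
        for c in range(w):
            dy, dx = cr - r, c - cc  # dy > 0 means above the center
            rho = math.hypot(dx, dy)
            if not (r_min <= rho < r_max):
                continue  # drop the DC neighbourhood and far-away pixels
            theta = math.atan2(dy, dx)
            if theta < 0 or theta >= math.pi:
                continue  # lower half is redundant by symmetry
            ring = min(bisect.bisect_right(bounds, rho) - 1, rings - 1)
            sector = min(int(theta / (math.pi / sectors)), sectors - 1)
            energy[ring * sectors + sector] += mag[r][c] ** 2
    return energy


def fourier_energy_feature(mag, **kw):
    """FEF: partition energies divided by their sum (assumed normalization)."""
    e = partition_energies(mag, **kw)
    total = sum(e)
    return [v / total for v in e] if total else e
```

With the default arguments the feature has 4 × 6 = 24 entries, matching N = 24; other ring and sector counts with the same product would be equally consistent with the text.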

4.2. Wavelet Transform

In this section, we use the approach described in [4].

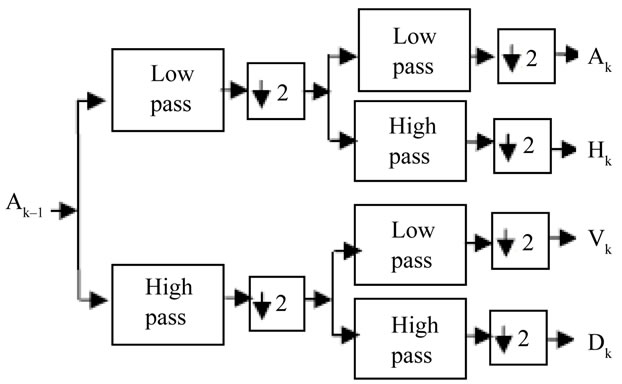

The Kth-level wavelet decomposition is shown in Figure 4, where $A_{K-1}$ denotes the approximation coefficients of the (K - 1)th-level decomposition, and $A_K$, $H_K$, $V_K$ and $D_K$ are the approximation, horizontal, vertical and diagonal detail coefficients of the Kth-level decomposition, respectively. $A_0$ is the original image I. After a J-level decomposition, the original image I is therefore represented by 3J + 1 subimages, $A_J$ and $\{H_j, V_j, D_j\}_{j=1,\dots,J}$. The wavelet energies in the horizontal, vertical and diagonal directions at the ith level can be defined, respectively, as:

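$$E_i^h=\sum_{x}\sum_{y}\left(H_i(x,y)\right)^2,\qquad E_i^v=\sum_{x}\sum_{y}\left(V_i(x,y)\right)^2,\qquad E_i^d=\sum_{x}\sum_{y}\left(D_i(x,y)\right)^2 \tag{6}$$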

Figure 4. Kth-level wavelet decomposition.

These energies reflect the strength of the image details in different directions at the ith wavelet decomposition level [4]. The feature vector is therefore defined as:

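$$\mathbf{E}=\left(E_1^h, E_1^v, E_1^d,\; E_2^h, E_2^v, E_2^d,\; \dots,\; E_M^h, E_M^v, E_M^d\right) \tag{7}$$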

where M is the total number of wavelet decomposition levels. This vector effectively describes the global detail features of the blood vessels. However, because the features computed from Equations (6) and (7) are extracted from the whole image, they do not preserve information about the spatial location of the different details, so their ability to describe a retina is limited. To deal with this problem, the detail images can be divided equally into non-overlapping blocks and the energy of each block computed [4]. The energies of all blocks are then used to construct a vector, and finally the vector is normalized by the total energy. This normalized vector is called the wavelet energy feature (WEF) [4]. If an image is decomposed to J levels and each detail image is divided into S × S blocks, the length of its WEF is 3·S·S·J. Each retinal image is decomposed to M = 2 levels with the Haar wavelet, and each wavelet detail image is divided into 2 × 2 blocks to construct the WEF, so the length of the WEF is 3·2·2·2 = 24.
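The WEF construction can be sketched with a hand-written orthonormal Haar transform. Assumptions not fixed by the text: the image sides are divisible by 2^M·S (8 for M = 2 and S = 2), energy is the sum of squared coefficients as in Equation (6), the "total energy" used for normalization is the sum of all block energies, and the H/V naming follows one common convention. The function names are hypothetical.

```python
def haar_level(img):
    """One level of the orthonormal 2D Haar transform of a 2D list with even
    sides. Returns (A, H, V, D); H responds to row-to-row changes and V to
    column-to-column changes (an assumed naming convention)."""
    h, w = len(img) // 2, len(img[0]) // 2
    A = [[0.0] * w for _ in range(h)]
    H = [[0.0] * w for _ in range(h)]
    V = [[0.0] * w for _ in range(h)]
    D = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            a, b = img[2 * i][2 * j], img[2 * i][2 * j + 1]
            c, d = img[2 * i + 1][2 * j], img[2 * i + 1][2 * j + 1]
            A[i][j] = (a + b + c + d) / 2
            H[i][j] = (a + b - c - d) / 2
            V[i][j] = (a - b + c - d) / 2
            D[i][j] = (a - b - c + d) / 2
    return A, H, V, D


def block_energies(sub, s):
    """Split `sub` into s x s equal non-overlapping blocks and return the
    energy (sum of squares) of each block in row-major order."""
    bh, bw = len(sub) // s, len(sub[0]) // s
    return [
        sum(sub[r][c] ** 2
            for r in range(bi * bh, (bi + 1) * bh)
            for c in range(bj * bw, (bj + 1) * bw))
        for bi in range(s)
        for bj in range(s)
    ]


def wavelet_energy_feature(img, levels=2, s=2):
    """WEF: block energies of every detail image over `levels` Haar levels,
    divided by their sum. Length is 3 * s * s * levels (24 by default)."""
    feats = []
    approx = img
    for _ in range(levels):
        approx, H, V, D = haar_level(approx)
        for detail in (H, V, D):
            feats.extend(block_energies(detail, s))
    total = sum(feats)
    return [e / total for e in feats] if total else feats
```

Because the Haar transform here is orthonormal, each level preserves the total energy of its input, so the block energies are directly comparable across levels.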

Our proposed identification system consists of the following phases. In the registration phase, several images are scanned from each person; after rotation compensation of each captured retinal image, the FEF and WEF of all images are extracted and registered in a database.

In the test phase, the FEF and WEF of the test retinal image are computed and compared with the feature vectors of all retinal images in the database; the database image with the minimum Euclidean distance is found, and its owner is selected as the identified person.
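The matching step can be sketched as a nearest-neighbour search. How the FEF and WEF are combined is not specified in the text; this sketch assumes simple concatenation into one vector, and the database layout (a list of `(person_id, vector)` pairs) and function names are hypothetical.

```python
import math


def combined_vector(fef, wef):
    """Concatenate FEF and WEF into one feature vector (assumed rule)."""
    return list(fef) + list(wef)


def identify(test_vec, database):
    """Return the person ID of the registered vector nearest to `test_vec`
    in Euclidean distance; `database` is a list of (person_id, vector)."""
    best_id, _ = min(database, key=lambda entry: math.dist(test_vec, entry[1]))
    return best_id
```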

5. Experimental Results

The proposed system was implemented entirely in software and tested on a database built from 40 retinal images of the DRIVE database [3]. We extended this set to 400 retinal images from 40 subjects, using 10 images per subject.

First image is original one and 5 next images were ro-

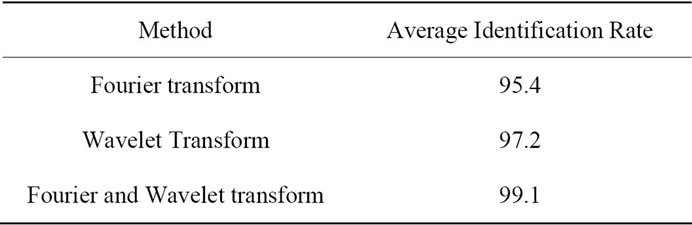

Table 1. Comparison between results of different partition.

tated images by a random angle. White Gaussian noise is added to the original images to generate 3 noisy image and the 10th image is a noisy and rotated one. all the experiment are conducted with Matlab 7 on PIV 1 G, 512 M RAM pc.
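The construction of the ten images per subject can be sketched as below. The noise level `sigma`, the angle range `max_angle` and the `seed` are hypothetical values, since the paper does not report them, and the rotation routine is passed in as a parameter so that any image rotation function can be used.

```python
import random


def add_white_gaussian_noise(img, sigma, rng):
    """Return a copy of `img` with i.i.d. zero-mean Gaussian noise of
    standard deviation `sigma` added to every pixel."""
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in img]


def make_variants(img, rotate, sigma=0.05, max_angle=30.0, seed=0):
    """Build 10 images per subject: the original, 5 randomly rotated copies,
    3 noisy copies and 1 noisy rotated copy. `rotate(img, degrees)` is
    supplied by the caller; sigma, max_angle and seed are hypothetical."""
    rng = random.Random(seed)
    variants = [img]
    variants += [rotate(img, rng.uniform(-max_angle, max_angle)) for _ in range(5)]
    variants += [add_white_gaussian_noise(img, sigma, rng) for _ in range(3)]
    rotated = rotate(img, rng.uniform(-max_angle, max_angle))
    variants.append(add_white_gaussian_noise(rotated, sigma, rng))
    return variants
```

Using a seeded `random.Random` instance keeps the generated database reproducible between runs.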

The proposed method was evaluated with the following test routine: the Euclidean distance between each retinal feature vector and all other vectors in the feature database was calculated, and the identified person was taken to be the one with the minimum distance. The identification accuracy is presented in Table 1.
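The evaluation routine described above amounts to a leave-one-out nearest-neighbour test. A minimal sketch, assuming the feature vectors are already computed and stored as `(person_id, vector)` pairs; the function name is hypothetical.

```python
import math


def leave_one_out_accuracy(samples):
    """`samples` is a list of (person_id, feature_vector). Each vector is
    matched against all the others; a hit is counted when the nearest one
    belongs to the same person. Returns the fraction of hits."""
    hits = 0
    for i, (pid, vec) in enumerate(samples):
        others = (s for j, s in enumerate(samples) if j != i)
        nearest_id, _ = min(others, key=lambda s: math.dist(vec, s[1]))
        hits += nearest_id == pid
    return hits / len(samples)
```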

6. Conclusion

In this article, a human identification method based on retinal image processing using the Fourier and wavelet transforms and a new special partitioning was proposed. The approach is robust to rotation and noise. In addition, because the feature vector is small and no vessel extraction step is needed, the proposed method is simple and has low computational complexity. The feature vectors generated by this approach carry useful information about vessel density and vessel direction in the image.

REFERENCES

[1] T. Dunstone and N. Yager, "Biometric System and Data Analysis," Springer, New York, 2008, pp. 529-548.

[2] S. Nanavati, M. Thieme and R. Nanavati, "Biometrics: Identity Verification in a Networked World," John Wiley & Sons, Inc., New York, 2002.

[3] H. Farzin, H. A. Moghaddam and M. S. Moin, "A Novel Retinal Identification System," EURASIP Journal on Advances in Signal Processing, Vol. 2008, 2008, Article ID: 280635.

[4] M. Shahnazi, M. Pahlevanzadeh and M. Vafadoost, "Wavelet Based Retinal Recognition," 9th International Symposium on Signal Processing and Its Applications (ISSPA), Sharjah, February 2007, pp. 1-4.

[5] H. Tabatabaee, A. Milani-Fard and H. Jafariani, "A Novel Human Identifier System Using Retina Image and Fuzzy Clustering Approach," Proceedings of the 2nd IEEE International Conference on Information and Communication Technologies (ICTTA06), Damascus, April 2006, pp. 1031-1036.

[6] M. Ortega, C. Marino, M. G. Penedo, M. Blanco and F. Gonzalez, "Biometric Authentication Using Digital Retinal Images," Proceedings of the 5th WSEAS International Conference on Applied Computer Science (ACOS06), Hangzhou, April 2006, pp. 422-427.

[7] Z. W. Xu, X. X. Guo, X. Y. Hu and X. Cheng, "The Blood Vessel Recognition of Ocular Fundus," Proceedings of the 4th International Conference on Machine Learning and Cybernetics (ICMLC05), Guangzhou, August 2005, pp. 4493-4498.

[8] R. C. Gonzalez and R. E. Woods, "Digital Image Processing," Pearson Education Inc., New Delhi, 2003, pp. 548-560.

NOTES

*This work was supported in part by N.S.T.R.I Tehran, Iran.