Open Journal of Optimization

Vol.2 No.1(2013), Article ID:29488,5 pages DOI:10.4236/ojop.2013.21005

A Variable Metric Algorithm with Broyden Rank One Modifications for Nonlinear Equality Constraints Optimization

1School of Electronic Engineering and Automation, Guilin University of Electronic Technology, Guilin, China

2School of Mathematics and Computing Science, Guilin University of Electronic Technology, Guilin, China

Email: huchyel@hotmail.com

Received January 17, 2013; revised February 18, 2013; accepted March 8, 2013

Keywords: Equality Constrained Optimization; Variable Metric Algorithm; Broyden Rank One Modification; Superlinear Convergence

ABSTRACT

In this paper, a variable metric algorithm is proposed with Broyden rank one modifications for the equality constrained optimization. This method is viewed expansion in constrained optimization as the quasi-Newton method to unconstrained optimization. The theoretical analysis shows that local convergence can be induced under some suitable conditions. In the end, it is established an equivalent condition of superlinear convergence.

1. Introduction & Algorithm

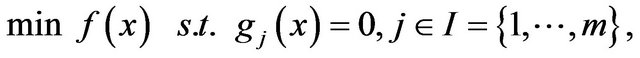

This In this paper, it is proposed to consider the following nonlinear mathematical programming problem:

(1)

(1)

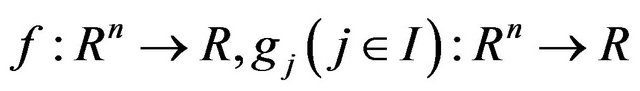

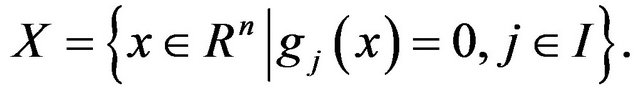

where  are continuously differentiable functions. Denote the feasible set as follows:

are continuously differentiable functions. Denote the feasible set as follows:

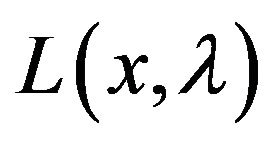

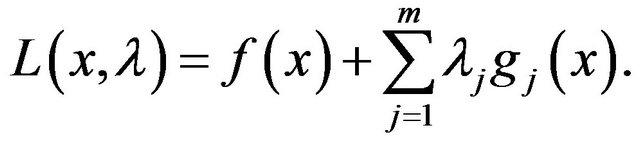

Let  be Lagrangian function of (1), and

be Lagrangian function of (1), and

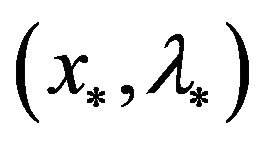

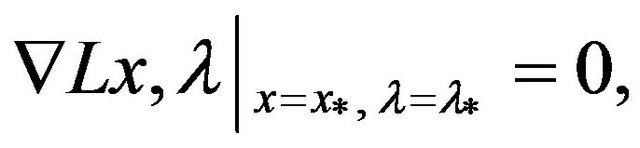

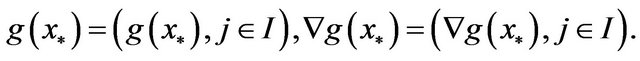

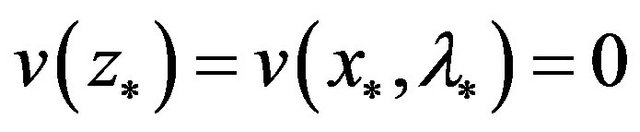

If  is a KKT point pair of Equation (1), then

is a KKT point pair of Equation (1), then

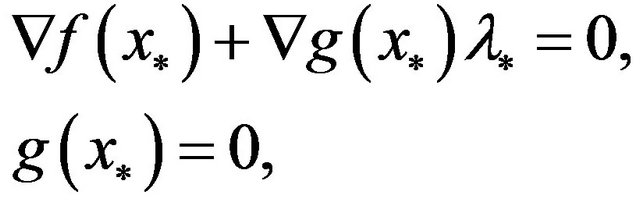

i.e.,

i.e.,

(2)

(2)

where

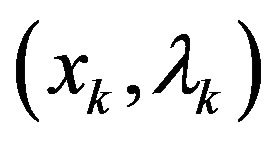

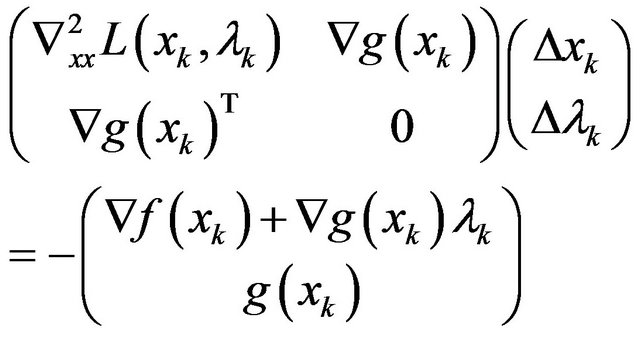

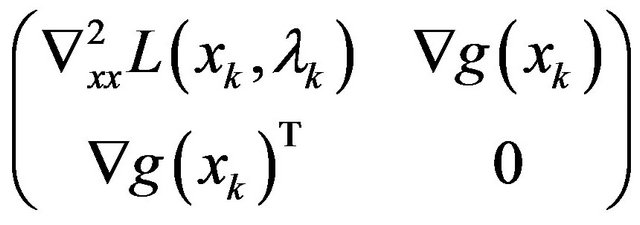

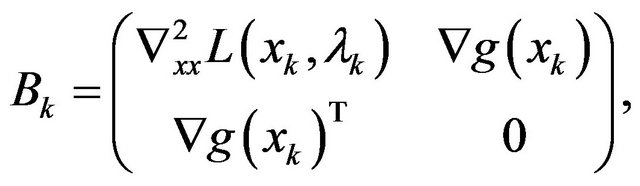

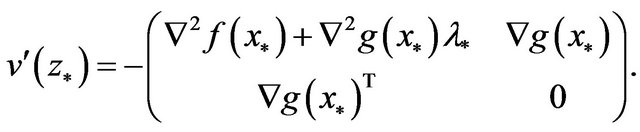

At the point pair , the Newton’s iteration of (2) is defined as follows:

, the Newton’s iteration of (2) is defined as follows:

(3)

(3)

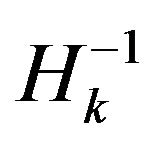

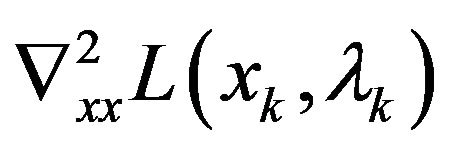

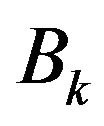

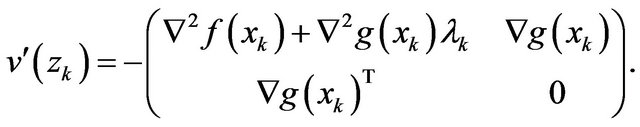

Later, a positive definite matrix  is replaced for

is replaced for

by a lot of authors to develop some kinds of variable metric methods, such as sequential quadratic programming (SQP) methods [1-5], sequential systems of linear equations (SSLE) algorithms [6-8]. In general, the computational cost of those methods is large.

by a lot of authors to develop some kinds of variable metric methods, such as sequential quadratic programming (SQP) methods [1-5], sequential systems of linear equations (SSLE) algorithms [6-8]. In general, the computational cost of those methods is large.

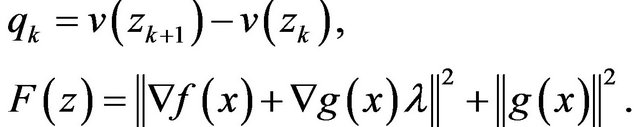

In this paper, a new variable metric method is presented, in which the following fact is based on: a positive definite matrix is replaced for the matrix

is replaced for the matrix

.

.

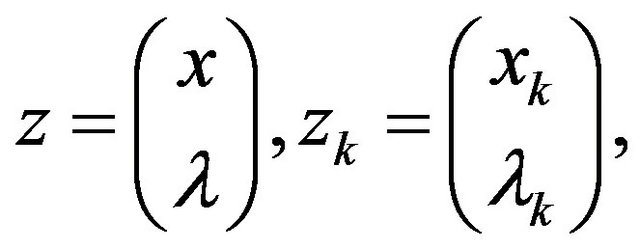

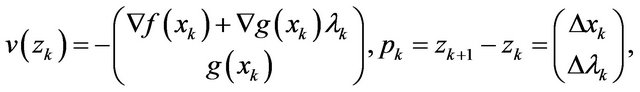

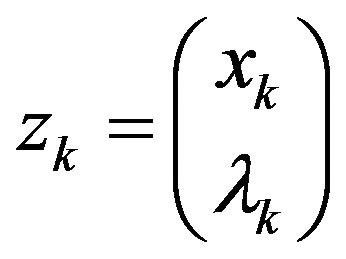

In the sequel, we describe the algorithm for the solution of (1). Denote

It is obvious that  and from Equation (3), we have

and from Equation (3), we have

(4)

(4)

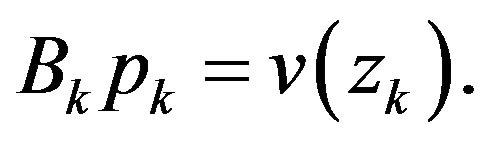

To the system of linear Equations (4), like unconstrained optimization,  is dealt with using Broyden rank one modifications as follows:

is dealt with using Broyden rank one modifications as follows:

(5)

(5)

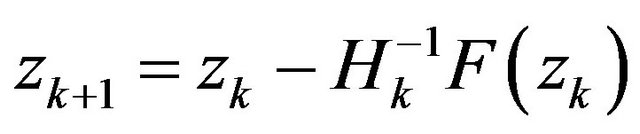

Now, the algorithm for the solution of Equation (1) can be stated as follows:

Algorithm A:

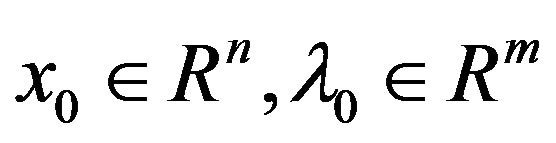

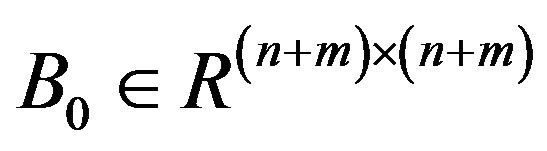

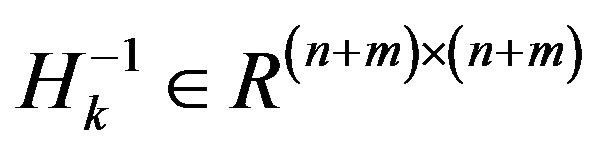

Step 1: Initialization: Given a starting point  (i.e.,

(i.e., ), and a initial positive definite matrix

), and a initial positive definite matrix .

.

Step 2: Compute . If

. If , stop;

, stop;

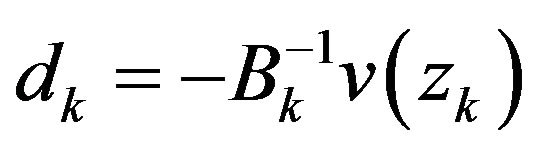

Step 3: Compute ;

;

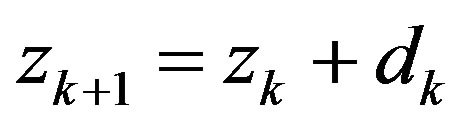

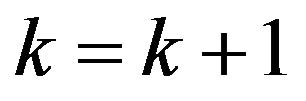

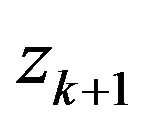

Step 4: Let , and obtain

, and obtain  according to (5). Set

according to (5). Set . Go back to step 2.

. Go back to step 2.

2. Convergence of Algorithm

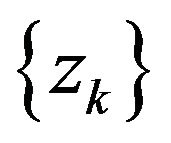

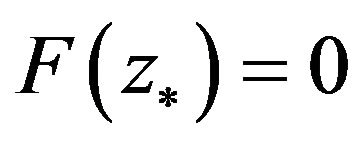

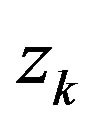

If the algorithm stops at , then

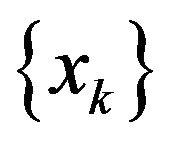

, then  is a KKT point of (1). In the sequel, we suppose that algorithm generates an infinite sequence

is a KKT point of (1). In the sequel, we suppose that algorithm generates an infinite sequence .

.

Four basic assumptions are given as follows:

A1 The feasible set  is nonempty; The functions

is nonempty; The functions

are two-times continuously differentiable;

are two-times continuously differentiable;

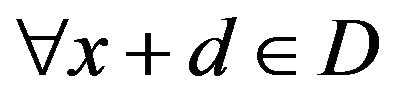

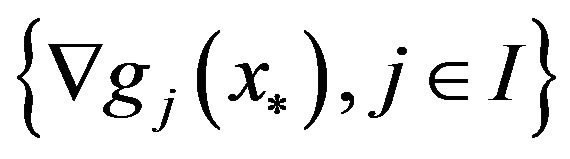

A2 For all , the vectors

, the vectors  are linearly independent;

are linearly independent;

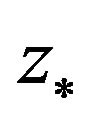

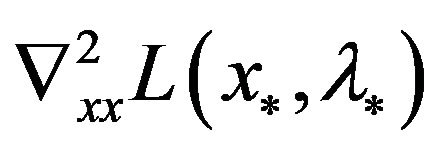

A3  and

and  are bounded. There exists a KKT point pair

are bounded. There exists a KKT point pair , such that

, such that  is positive definite;

is positive definite;

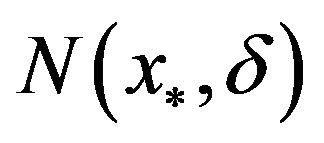

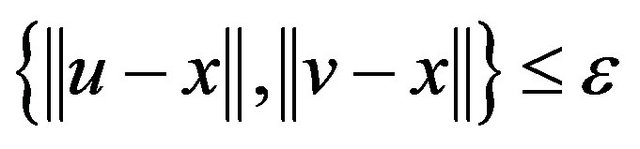

A4 There exists a ball  of radius

of radius  about

about , where

, where  satisfy the Lipschitz condition on

satisfy the Lipschitz condition on .

.

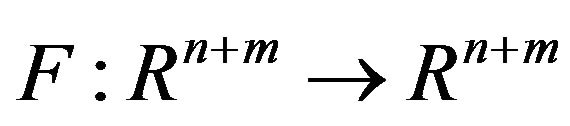

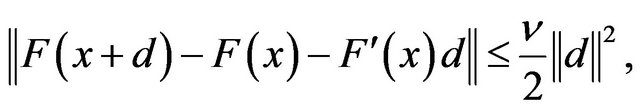

Lemma 1 [9] Let  be continuously differentiable in some open and convex set

be continuously differentiable in some open and convex set , and

, and  is Lipschitz continuous in

is Lipschitz continuous in , then

, then , it holds that

, it holds that

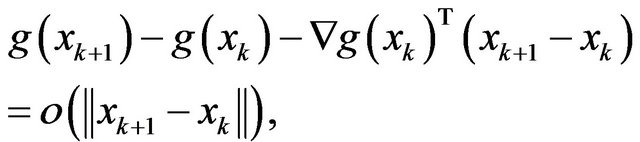

where  is the Lipschitz constant. Moreover,

is the Lipschitz constant. Moreover,

, it follows that

, it follows that

Lemma 2 [9] Let  be continuously differentiable in some open and convex set

be continuously differentiable in some open and convex set , and

, and  is Lipschitz continuous in

is Lipschitz continuous in . Moreover, assume

. Moreover, assume is invertible for some

is invertible for some , then there exist some

, then there exist some , such that for all

, such that for all , the fact

, the fact

implies that

implies that

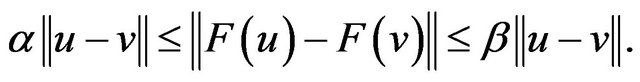

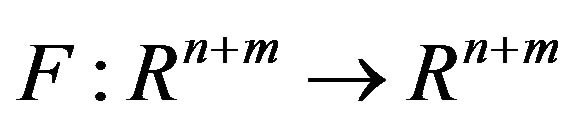

Lemma 3 [9] For operator , which satisfies:

, which satisfies:

R1  is continuously differentiable on

is continuously differentiable on ;

;

R2 There exists a point , such that

, such that , and

, and  is reversible;

is reversible;

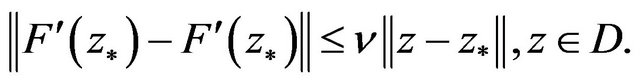

R3  is Lipschitz continuous at

is Lipschitz continuous at , i.e., there exists a constant

, i.e., there exists a constant , such that

, such that

Let , where

, where , and it holds that

, and it holds that

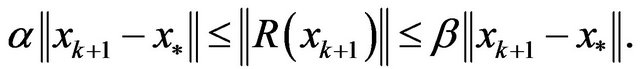

(6)

(6)

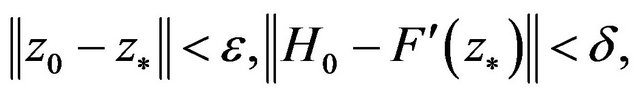

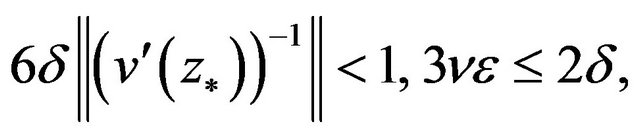

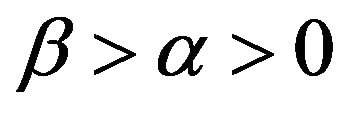

then there exist  and

and , when

, when

it is true that  is meaning, and

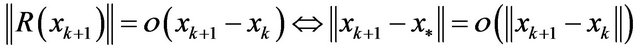

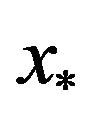

is meaning, and  is linearly convergent to

is linearly convergent to . Thereby, we can conclude that

. Thereby, we can conclude that  is superlinearly convergent to

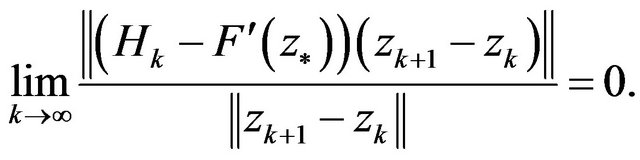

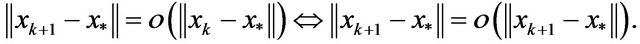

is superlinearly convergent to , if and only if

, if and only if

(7)

(7)

In the sequel, we prove the convergence Theorem as follows:

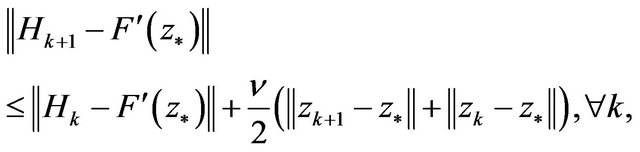

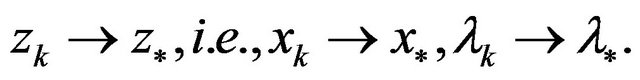

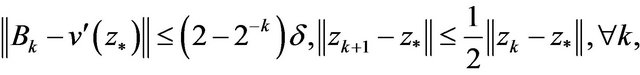

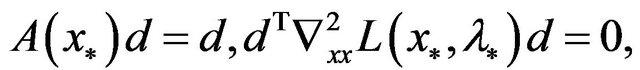

Theorem 1 If there exist constants  and

and  such that

such that

then  is meaning, and

is meaning, and  is linearly convergent to

is linearly convergent to

, thereby

, thereby  and

and  are linearly convergent to

are linearly convergent to  and

and  respectively.

respectively.

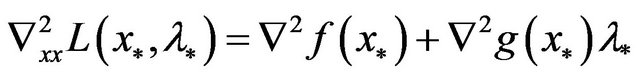

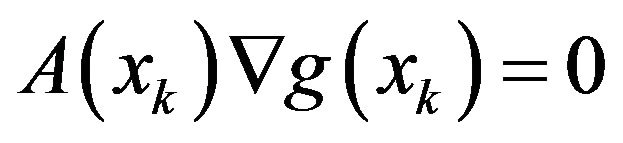

Proof: From Lemma 3, we only prove that  and

and  satisfy conditions R1, R2, R3 and the inequality (6). From assumption A1, it is obvious that

satisfy conditions R1, R2, R3 and the inequality (6). From assumption A1, it is obvious that  is continuously differentiable. From A3, it holds that

is continuously differentiable. From A3, it holds that , and

, and

While  is positive definite, and

is positive definite, and  are linearly independent. So, it is easy to see that

are linearly independent. So, it is easy to see that  is reversible. In addition,

is reversible. In addition,

From assumption A4, it holds that  is Lipschitz continuous at

is Lipschitz continuous at . In a word,

. In a word,  satisfies the conditions R1, R2, R3.

satisfies the conditions R1, R2, R3.

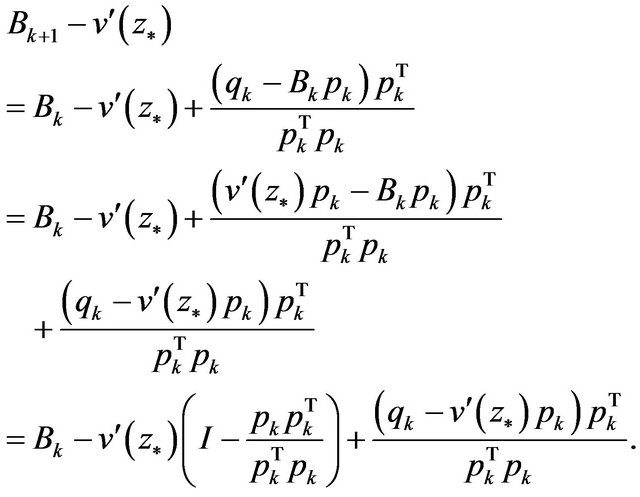

Now, we prove that, for  and

and  generated according to (5), (6) is satisfied.

generated according to (5), (6) is satisfied.

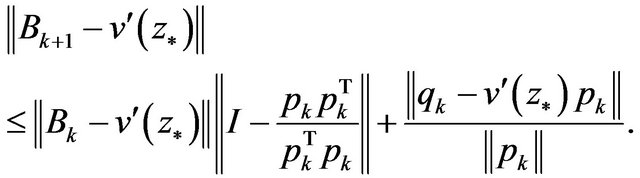

In fact, from (5), we have

(8)

(8)

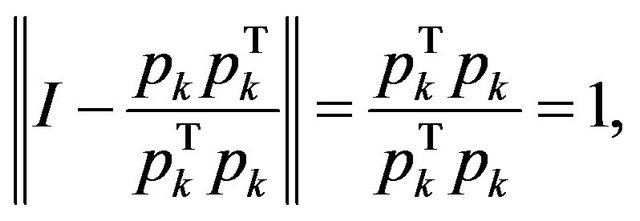

So,

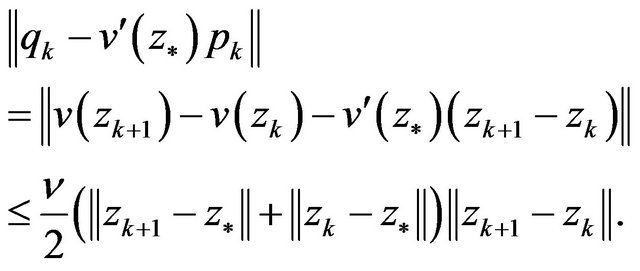

While

and from Lemma 1, we have

(9)

(9)

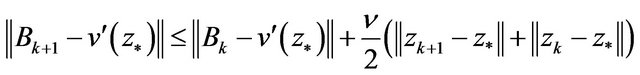

So,

i.e., (6) is true, thereby, from Lemma 3, we get

i.e., (6) is true, thereby, from Lemma 3, we get

The claim holds.

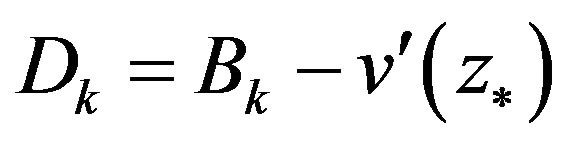

Theorem 2 Under above-mentioned assumptions, if ,

,  in Theorem 1 satisfy that

in Theorem 1 satisfy that

(10)

(10)

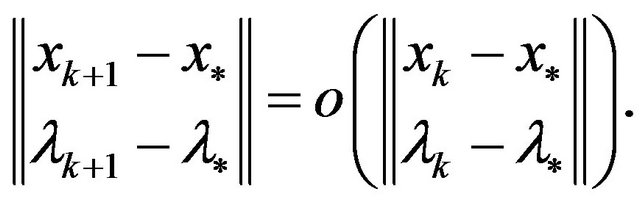

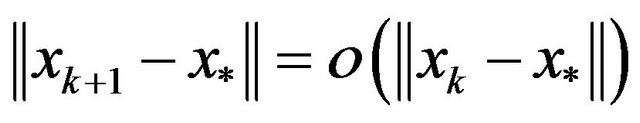

then  is superlinearly convergent to

is superlinearly convergent to , i.e.,

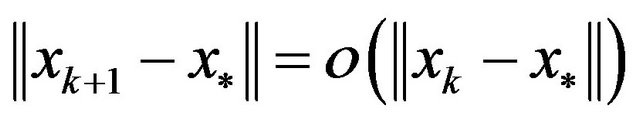

, i.e.,

Proof. From Lemma 3, we only prove that  and

and

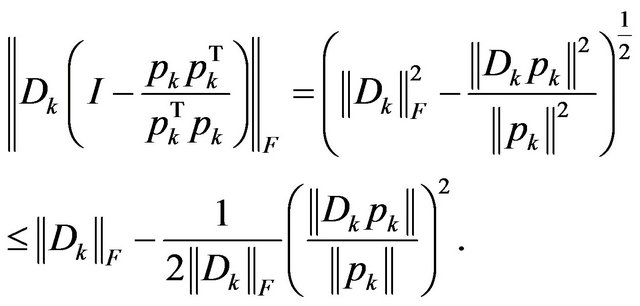

satisfy (7). Denote ,

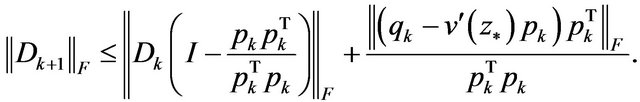

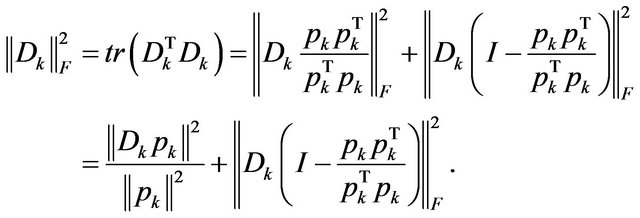

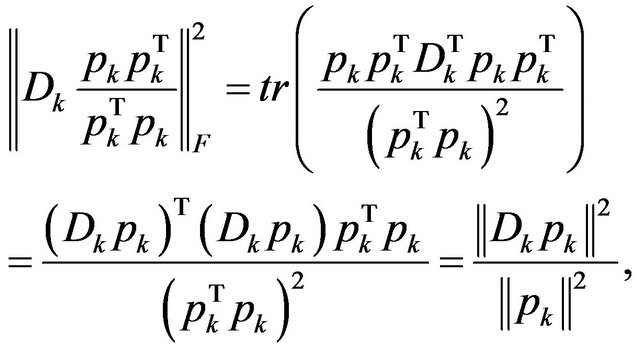

,  norm,

norm,  Frobenius norm. From (8),

Frobenius norm. From (8),

(11)

(11)

Since

So,

(12)

(12)

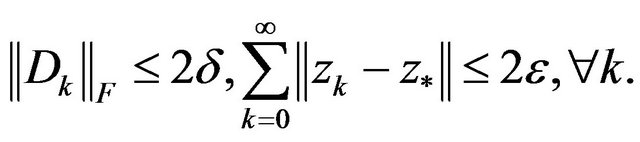

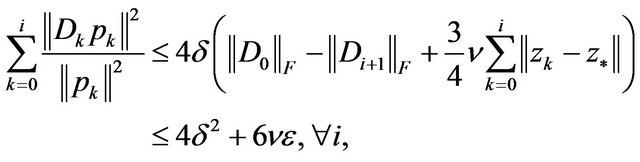

In addition, from (6), (10), using the method of mathematical induction, it is not difficult to prove that

(13)

(13)

thereby,

(14)

(14)

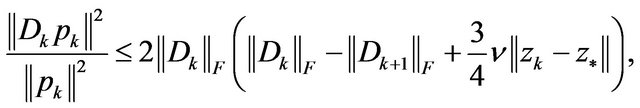

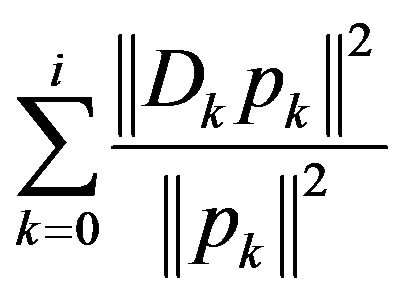

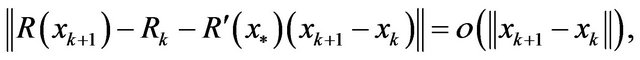

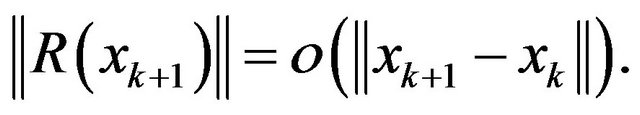

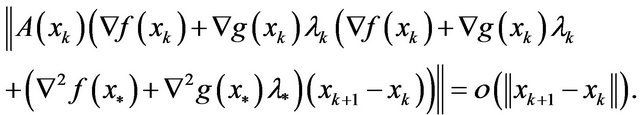

Thus, from (9), (12), (13), we have

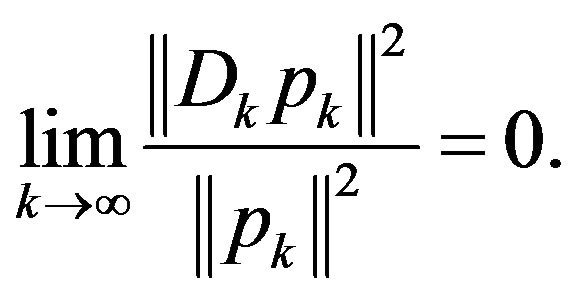

i.e.  is convergent, thereby,

is convergent, thereby,

From Theorem 2, we don’t conclude that  is superlinearly convergent to

is superlinearly convergent to , i.e.,

, i.e.,

In the sequel, we discuss one condition which assures that

In the sequel, we discuss one condition which assures that  is superlinearly convergent to

is superlinearly convergent to .

.

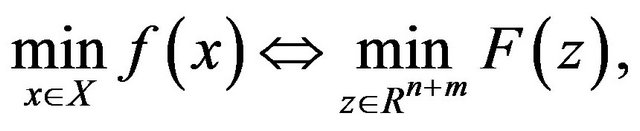

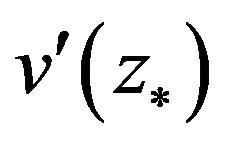

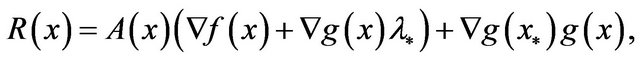

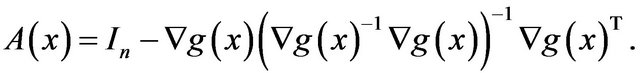

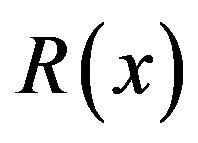

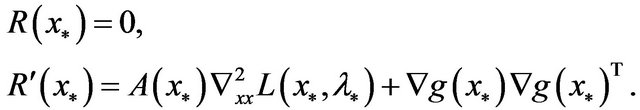

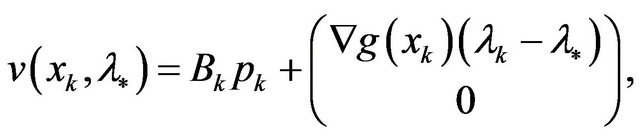

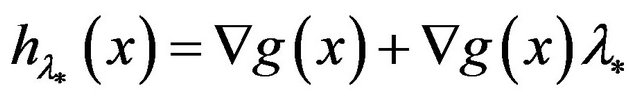

Lemma 4 Let  be a function defined by

be a function defined by

where

Then,  , if and only if

, if and only if

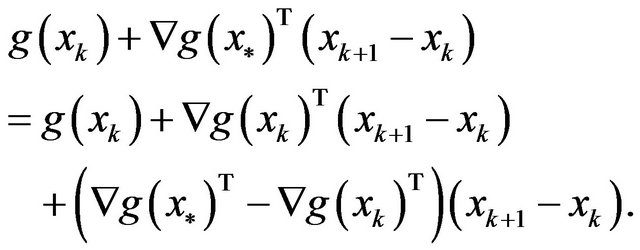

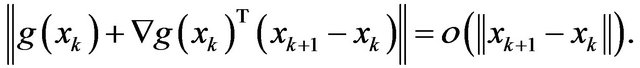

Proof. Obviously, using the triangle inequality, it holds that

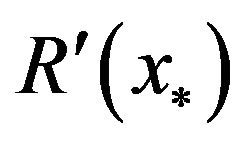

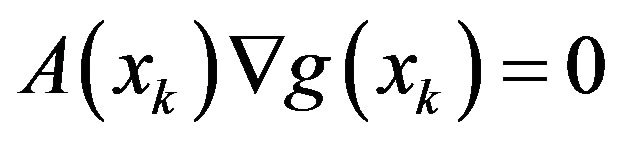

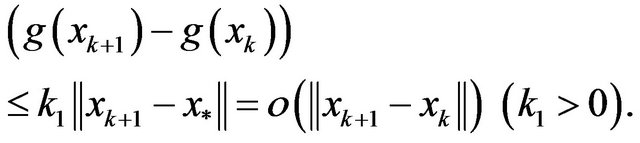

In addition, from A1, we know that  is continuously differentiable, and

is continuously differentiable, and

(15)

(15)

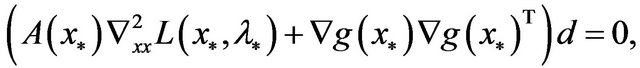

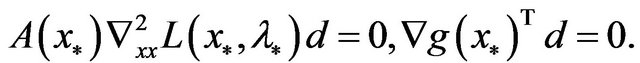

It holds that  is nonsingular. In fact, let

is nonsingular. In fact, let , and

, and

the facts that  has full rank,

has full rank,  is semi-positive definite and

is semi-positive definite and  is positive definite imply that

is positive definite imply that

So,

which is a contradiction.

Thereby, from A4 and Lemma 2, there exist  , such that

, such that

So,

.

.

The claim holds.

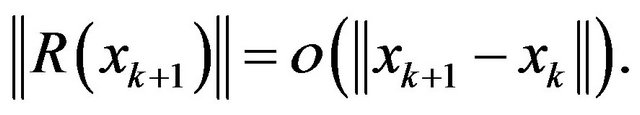

Theorem 3 Under above-mentioned conditions,

is superlinearly convergent to if and only if

if and only if

and  i.e.,

i.e.,

(16)

(16)

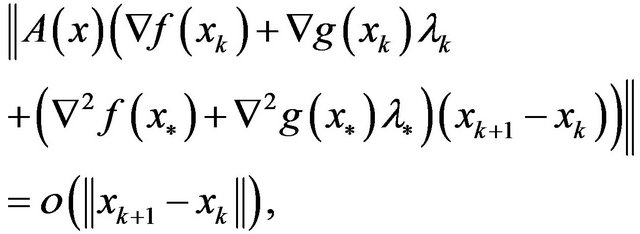

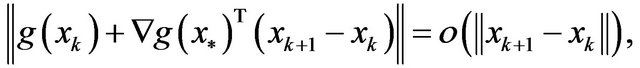

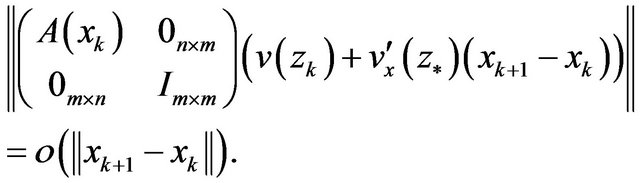

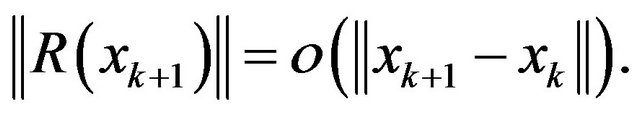

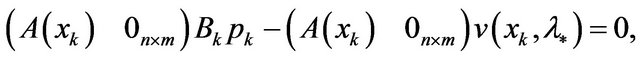

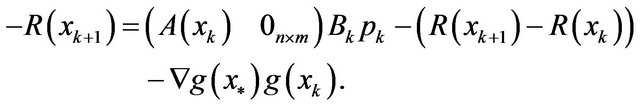

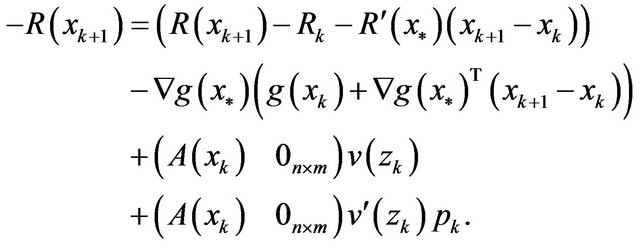

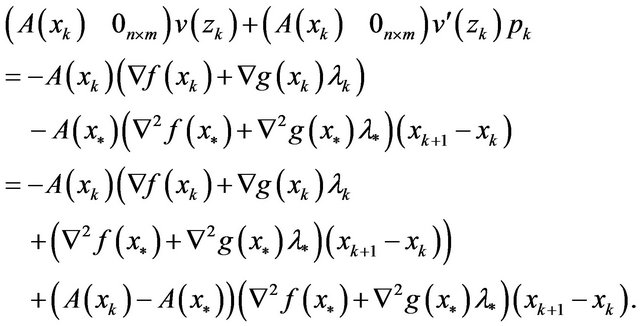

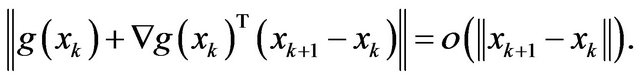

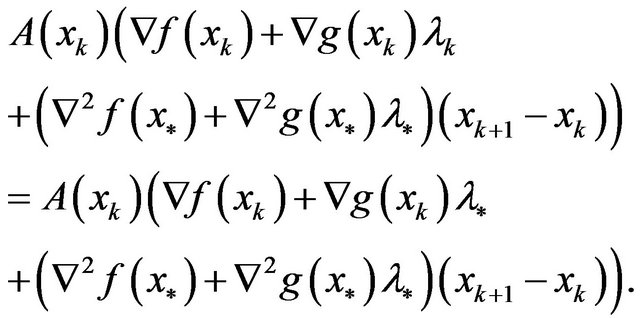

Proof. The sufficiency: Suppose that (16) holds. We only prove that

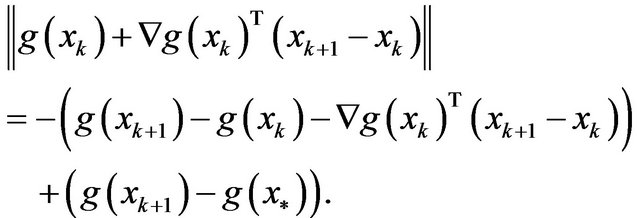

From (4), we have

So, the fact  implies that

implies that

thereby,

From (15), we have

So,

So,

While

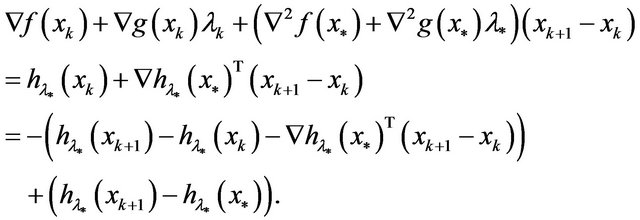

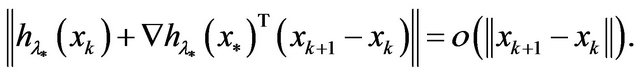

In addition, according to Lemma 1, we have

so, from (16), it holds that

The necessity: Suppose that

i.e.,

On one hand,

So, in order to prove that

While

According to Lemma 1, we have

and

So,

On the other hand, the fact  implies that

implies that

Let , then

, then

It is easy to see that

It is easy to see that

Thereby,

The claim holds.

3. Acknowledgements

This work was supported in part by NNSF (No. 11061 011) of China and Guangxi Fund for Distinguished Young Scholars (2012GXSFFA060003).

REFERENCES

- M. J. D. Powell, “A Fast Algorithm for Nonlinearly Constrained Optimization Calculations,” In: G. A. Watson, Ed., Numerical Analysis, Springer-Verlag, Berlin, 1978, pp. 144-157. doi:10.1007/BFb0067703

- S.-P. Han, “Superlinearly Convergent Variable Metric Algorithm for General Nolinear Programming Problem,” Mathematical Programming, Vol. 11, No. 1, 1976, pp. 263-282. doi:10.1007/BF01580395

- D. Q. Mayne and E. Polak, “A Superlinearly Convergent Algorithm for Constrained Optimization Problems,” Mathematical Programming Studies, Vol. 16, 1982, pp. 45- 61. doi:10.1007/BFb0120947

- J.-B. Jian, C.-M. Tang, Q.-J. Hu and H.-Y. Zheng, “A Feasible Descent SQP Algorithm for General Constrained Optimization without Strict Complementarity,” Journal of Computational and Applied Mathematics, Vol. 180, No. 2, 2005, pp. 391-412. doi:10.1016/j.cam.2004.11.008

- Z. Jin and Y. Q. Wang, “A Simple Feasible SQP Method for Inequality Constrained Optimization with Global and Superlinear Convergence,” Journal of Computational and Applied Mathematics, Vol. 233, No. 1, 2010, pp. 3060- 3073. doi:10.1016/j.cam.2009.11.061

- Y.-F. Yang, D.-H. Li and L. Q. Qi, “A Feasible Sequential Linear Equation Method for Inequality Constrained Optimization,” SIAM Journal on Optimization, Vol. 13, No. 4, 2003, pp. 1222-1244. doi:10.1137/S1052623401383881

- Z. B. Zhu, “An Interior Point Type QP-Free Algorithm with Superlinear Convergence for Inequality Constrained Optimization,” Applied Mathematical Modelling, Vol. 31, No. 6, 2007, pp. 1201-1212. doi:10.1016/j.apm.2006.04.019

- J. B. Jian, D. L. Han and Q. J. Xu, “A New Sequential Systems of Linear Equations Algorithm of Feasible Descent for Inequality Constrained Optimization,” Acta Mathematica Sinica, English Series, Vol. 26, No. 12, 2010, pp. 2399-2420. doi:10.1007/s10114-010-7432-0

- Y. Yuan and W. Sun, “Theory and Methods of Optimization,” Science Press, Beijing, 1997.