Journal of Computer and Communications, 2014, 2, 53-60

Published Online September 2014 in SciRes. http://www.scirp.org/journal/jcc

http://dx.doi.org/10.4236/jcc.2014.211007

How to cite this paper: Rusydi, M.I., Sasaki, M. and Ito, S. (2014) Calculate Target Position of Object in 3-Dimensional Area Based on the Perceived Locations Using EOG Signals. Journal of Computer and Communications, 2, 53-60. http://dx.doi.org/10.4236/jcc.2014.211007

Calculate Target Position of Object in 3-Dimensional Area Based on the Perceived Locations Using EOG Signals

Muhammad Ilhamdi Rusydi1,2, Minoru Sasaki1, Satoshi Ito1

1Department of Mechanical Engineering, Gifu University, 1-1 Yanagido, Gifu City, 501-1193, Japan

2Department of Electrical Engineering, Andalas University, Limau Manis, Padang City, 25163, Indonesia

Email: q3812204@edu.gifu-u.ac.jp, sasaki@gifu-u.ac.jp, satoshi@edu.gifu-u.ac.jp

Received July 2014

Abstract

EOG is a biosignal generated by eye activities such as eye movements and blinks, and it has a linear relationship with gaze distance. This research proposes detecting object positions in a 3-dimensional area using gaze motion. To calculate the gaze motion distance in pixels, an affine transform method was developed, and its homogeneous matrix was determined from five geometric processes: translation-1, rotation, translation-2, shear, and dilatation. To track in a 3-dimensional area, two cameras were attached, one in front of the object and one above it. The cameras were selected by voluntary blinks. A blink was identified from its EOG characteristic: the absolute ratio between the positive peak and the negative peak is greater than 1. Every blink toggled the active camera. The position of the object was obtained from the perceived locations in the two cameras: every movement in pixel coordinates was converted into centimeters, and the perceived locations were then used to calculate the position in the base coordinate frame.
The results show that the blink method successfully switched between the cameras. Both cameras could show the location of the object from their own side. Calculating the gaze distance with the affine transform also gave satisfactory results. With this method, EOG-based control of a machine in a 3-dimensional area could be developed.

Keywords

Gaze Motion, Blink, Affine Transform, Perceived Location, Camera

1. Introduction

Electrooculography (EOG) is a biosignal generated by eye activities, which include eye movements and blinks [1]. EOG signals arise from the difference in polarity between the cornea and the retina. Vertebrates have positive polarity in the cornea and negative polarity in the retina, whereas invertebrates have negative polarity in the cornea and positive polarity in the retina [2]. EOG has a linear relationship with eye movement: the linear range is about ±45° horizontally and slightly narrower vertically [3].

The relationship between eye movements and EOG was studied in [4] using a neural network approach, in which EOG was mapped to two-dimensional (2D) eye movement by multi-layer perceptrons with an accuracy of approximately 1.09°. In [5], a neural network classified EOG signals into seven commands, including straight, right, left, up, down, and blink, using wavelet coefficients, auto-regression coefficients, and power spectral density (PSD) as features. EOG was also processed by a neural network to detect a subnormal eye (macular degeneration); this system was made to help physicians diagnose patients, and its feature was the Arden ratio, the ratio between EOG amplitudes in light and dark conditions. EOG signals were modeled with a neural network to build a prosthetic eye [6]; this model could detect eye blink signals rather than remove them with a median filter. EOG has also been classified with a fuzzy algorithm [7]; in that work, EOG was identified by direction.
There were four directions: right, left, up, and down. There were two inputs, horizontal and vertical, and each was grouped into high, mid, and low signals. That system used a user interface on a monitor to activate functions such as a game, a key test, a TV controller, and an adjustment system. The research in [8] helped disabled people access internet applications using EOG signals; the system was designed to let users write, search, and move a cursor with EOG.

EOG signals have been applied in various areas. In [9], EOG was used to control mouse functions. Gadget control by classifying EOG with Deterministic Finite Automata was introduced in [10]. EOG signals were also used to control a wheelchair for disabled people [11], with an accuracy of approximately ±2°. In [12], an improved automatic wheelchair system driven by EOG signals was developed with a PIC microcontroller.

In [13], EOG was combined with a two-degree-of-freedom robot manipulator. EOG signals were grouped by four positive thresholds and four negative thresholds for both vertical and horizontal gaze motions; the threshold values vary among persons. These thresholds were used to control the velocity of the robot, and a blink was used when the robot reached a target. In [14], a 2-DOF planar robot was controlled by gaze motions through vector equations combining the horizontal and vertical EOG signals, and blinks were also used to operate the system.

In this study, EOG signals were used to detect object positions in a 3-dimensional area. One camera was placed in front of the object, and another camera was hung above it to give a top view. The affine transform method was used to generate the relationship between gaze motion and the EOG signal.

2. EOG

This experiment used an NF Instrument sensor, which consists of a Head Box, a Processor Box, and 4 electrodes, as shown in Figure 1.
The electrodes sent the data to the 5202-EEG Head Box, which amplified the signals before sending them to the 5201-Processor Box. The processor box filters the signals automatically with its embedded filters: a 60 Hz low-pass filter (LPF), a 1.6 Hz high-pass filter (HPF), and a 60 Hz hum filter. Another low-pass filter with a 60 Hz cutoff frequency was applied [15] because of the standard noise from the electrical source in this experiment. The sampling frequency was set to 100 Hz. The four electrodes are channel 1 (Ch1), channel 2 (Ch2), minus, and ground, and their positions were determined from the previous research in [16]. Elefix paste (series Z-401 CE, produced by Nihon Kohden) was put between the skin and the electrodes to attach them; it is a low-impedance, high-conductivity gel.

Four EOG features were used in this research: threshold, slope, peak, and the integral of the absolute EOG signal, as shown in Figure 2. There were a positive threshold (Th+) and a negative threshold (Th−), whose values varied among persons; they were used to detect the start and the end of a gaze signal. The slope was determined from the first threshold crossed by the signal. Since there were two thresholds, there were also two slopes: a positive slope (+), or positive EOG, and a negative slope (−), or negative EOG. Table 1 shows the four combinations of slopes from Ch1 and Ch2. Area 1 has a negative signal on both Ch1 and Ch2. Area 2 has a positive signal on Ch1 and a negative signal on Ch2. Area 3 is the opposite of Area 1, and Area 4 is the negation of Area 2. The integral of the absolute EOG signal for each channel ($\mathrm{int\_EOG}_{Ch_i}$) was determined by summing the absolute value of the EOG signal, as shown in Equation (1) [16].

Figure 1. NF instrument.

Figure 2. EOG signal and the features.

Table 1.
Combination of four slopes of EOG.

|     | Area 1 | Area 2 | Area 3 | Area 4 |
|-----|--------|--------|--------|--------|
| Ch1 | Negative (−) | Positive (+) | Positive (+) | Negative (−) |
| Ch2 | Negative (−) | Negative (−) | Positive (+) | Positive (+) |

$$\mathrm{int\_EOG}_{Ch_i} = \int_{\Omega^{+}} \left|\mathrm{EOG}_{Ch_i}(t)\right| dt + \int_{\Omega^{-}} \left|\mathrm{EOG}_{Ch_i}(t)\right| dt \tag{1}$$

where $i = 1, 2$, and $\Omega^{+}$ and $\Omega^{-}$ denote the positive and negative portions of the gaze signal.

The relationship between the gaze motion distance and the EOG signal is given by Equation (2). The integral of the absolute EOG is normalized ($\mathrm{norm\_int\_EOG}_{Ch_i}$) to the range 0 to 1, and the gaze motion distance is linear in this normalized value. The distance can be horizontal (right and left) or vertical (up and down). The constants A and B, determined in [Kenya conference], depend on the direction (up, down, left, and right).

$$\mathrm{Distance} = A \cdot \mathrm{norm\_int\_EOG}_{Ch_i} + B \tag{2}$$

where $i = 1$ for up or down gaze motion ($v$) and $i = 2$ for right or left gaze motion ($u$), $\mathrm{norm\_int\_EOG}_{Ch_i}$ is the normalized integral of EOG, and Distance is $v$ pixels (up, down) or $u$ pixels (right, left).

An affine transform was used to improve the accuracy of location detection. Five geometric processes were combined sequentially: translation-1, rotation, translation-2, shear, and dilatation. These steps result in the homogeneous matrix of Equation (3) [17].

$$[\text{Homogeneous Matrix}] = [\text{Dilatation}]\,[\text{Shear}]\,[\text{Translation-2}]\,[\text{Rotation}]\,[\text{Translation-1}] \tag{3}$$

with

$$\text{Homogeneous Matrix} = \begin{bmatrix} s_1(\cos\theta + m_1\sin\theta) & s_1(m_1\cos\theta - \sin\theta) & a_1 \\ s_2(\sin\theta + m_2\cos\theta) & s_2(\cos\theta - m_2\sin\theta) & a_2 \\ 0 & 0 & 1 \end{bmatrix}$$

$$a_1 = s_1\left[(T_x\cos\theta - T_y\sin\theta - T_x) + m_1(T_x\sin\theta + T_y\cos\theta - T_y)\right]$$

$$a_2 = s_2\left[(T_x\sin\theta + T_y\cos\theta - T_y) + m_2(T_x\cos\theta - T_y\sin\theta - T_x)\right]$$

where $\theta$ is the rotation angle, $T_x$ and $T_y$ are the horizontal and vertical translation factors, $m_1$ and $m_2$ are the vertical and horizontal shear factors, and $s_1$ and $s_2$ are the horizontal and vertical scale factors.

The relationship between the pixel location produced by the EOG signal and the pixel location transformed by the affine matrix is given by Equation (4):

$$\begin{bmatrix} u' \\ v' \\ 1 \end{bmatrix} = [\text{Homogeneous Matrix}] \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} \tag{4}$$

where $(u, v)$ is the pixel location before transformation and $(u', v')$ is the pixel location after transformation.

3. Object Location in 3-D Area

To calculate the object position in the 3-D area, two cameras were set up as a front camera and a top camera, as shown in Figure 3. Both cameras were produced by Digital Cowboy: the DC-NCR20U was used for the front view and the DC-NCR13U for the top view. A red target was hung inside the working area of both cameras. The operator looked at the target on a monitor measuring 34 cm (horizontal) × 27 cm (vertical), or 1020 pixels (horizontal) × 720 pixels (vertical). The distance between the operator and the monitor was fixed at about 40 cm. The initial gaze point was the center of the monitor, marked by a green circle as shown in Figure 4(a) and Figure 4(b). The front view had the wall as its background, and the top view had the floor as its background.

As shown in Figure 5(a), when the operator looked at the red target there was a perceived location on the z-axis, called zp; the perceived location on the y-axis was called yp. The front camera is C1 and the top camera is C2. In the yz-plane, the front camera is located at (d1y, d1z) and the top camera at (d2y, d2z). The center line of the front camera (C1c) coincides with its height above the floor (d1z), whereas the center line of the top camera is C2c. In the yx-plane shown in Figure 5(b), the front camera is located at (d1y, d1x), with d1x equal to zero. The perceived location on the x-axis is xp. Every pixel movement on the monitor is converted to centimeters by Equations (5), (6), and (7).

$$\mathrm{mov\_x}\ (\mathrm{cm}) = 0.19\ \mathrm{cm/pixel} \times \mathrm{horizontal\_pixel} \tag{5}$$

$$\mathrm{mov\_y}\ (\mathrm{cm}) = 0.21\ \mathrm{cm/pixel} \times \mathrm{vertical\_pixel} \tag{6}$$

$$\mathrm{mov\_z}\ (\mathrm{cm}) = 0.18\ \mathrm{cm/pixel} \times \mathrm{vertical\_pixel} \tag{7}$$

The location of objects in the 3-D area was determined from the relationship among the linear equations of line 1 and line 2 in Figure 5(a) and line 3 in Figure 5(b). In the yz-plane, the basic linear equation is represented by Equation (8).
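Returning to the processing chain of Section 2, the per-gaze steps (Equations (1) to (4)) and the pixel-to-centimeter conversion of Equations (5) to (7) can be summarized in a minimal Python sketch. It is an illustration under stated assumptions, not the authors' implementation. The input is assumed to be one gaze segment per channel, sampled at 100 Hz, and Equation (1) is approximated by the rectangle rule. The normalization in Equation (2) is assumed to be division by a calibration maximum (`max_int_eog`), because the text does not specify the method. Translation-1 is assumed to shift by (Tx, Ty) and Translation-2 to shift back by (−Tx, −Ty). The front camera's vertical pixels are assumed to map to z and the top camera's vertical pixels to y. All function names are hypothetical, as are the values of A, B and the affine parameters.

```python
import numpy as np

FS_HZ = 100.0  # sampling frequency stated in Section 2

# Table 1: (first slope on Ch1, first slope on Ch2) -> area number
AREA_BY_SLOPES = {(-1, -1): 1, (1, -1): 2, (1, 1): 3, (-1, 1): 4}


def first_slope(signal, th_pos, th_neg):
    """Return +1 if the signal crosses Th+ first, -1 if it crosses Th- first, else 0."""
    for sample in signal:
        if sample >= th_pos:
            return 1
        if sample <= th_neg:
            return -1
    return 0


def integral_abs_eog(signal, fs=FS_HZ):
    """Rectangle-rule version of Equation (1): sum of |EOG| times the sample period."""
    return float(np.sum(np.abs(np.asarray(signal, dtype=float))) / fs)


def gaze_distance_px(int_eog, max_int_eog, a, b):
    """Equation (2). max_int_eog, a and b are hypothetical calibration values."""
    norm = min(int_eog / max_int_eog, 1.0)  # assumed normalization into [0, 1]
    return a * norm + b


def homogeneous_matrix(theta, tx, ty, m1, m2, s1, s2):
    """Equation (3): Dilatation @ Shear @ Translation-2 @ Rotation @ Translation-1."""
    c, s = np.cos(theta), np.sin(theta)
    # Assumption: Translation-1 shifts by (+tx, +ty), Translation-2 by (-tx, -ty).
    translation1 = np.array([[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]])
    rotation = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    translation2 = np.array([[1.0, 0.0, -tx], [0.0, 1.0, -ty], [0.0, 0.0, 1.0]])
    shear = np.array([[1.0, m1, 0.0], [m2, 1.0, 0.0], [0.0, 0.0, 1.0]])
    dilatation = np.diag([s1, s2, 1.0])
    return dilatation @ shear @ translation2 @ rotation @ translation1


def apply_affine(h, u, v):
    """Equation (4): map the EOG pixel location (u, v) to (u', v')."""
    u_new, v_new, _ = h @ np.array([u, v, 1.0])
    return float(u_new), float(v_new)


def front_camera_cm(horizontal_px, vertical_px):
    """Equations (5) and (7); assumes front-camera vertical pixels map to z."""
    return 0.19 * horizontal_px, 0.18 * vertical_px


def top_camera_cm(vertical_px):
    """Equation (6); assumes top-camera vertical pixels map to y."""
    return 0.21 * vertical_px
```

For example, zero rotation and shear with unit scale factors make Translation-2 exactly undo Translation-1, so `apply_affine(homogeneous_matrix(0.0, 3.0, -2.0, 0.0, 0.0, 1.0, 1.0), 120.0, -40.0)` returns the input location unchanged. Building the matrix as a product of the five elementary matrices, rather than typing the closed form, makes it easy to check each entry of Equation (3) numerically.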
Line 1 in the yz-plane is given by Equation (9).

Figure 3. The position of the two cameras and the target.

Figure 4. Area of view from (a) the front camera and (b) the top camera.

Figure 5. Position of the target in (a) the yz-plane and (b) the yx-plane.

C1, located at (d1y, d1z), lies on line 1. Substituting this point into Equation (9) gives the intersection of line 1 with the z-axis, Equation (10). The gradient of line 1 is given by Equation (11).

Line 2 is described by Equation (12). Since line 2 passes through the location of C2, (d2y, d2z), its intersection with the z-axis is given by Equation (13), and its gradient by Equation (14).

Because the target lies at the intersection of line 1 and line 2, Equation (9) equals Equation (12), and the target position on the y-axis is calculated by Equation (15). Substituting this value into Equation (9) or (12) then gives the target position on the z-axis.

The target position on the x-axis is calculated from line 3, given by Equation (16). The slope of this line is given by Equation (17). The intersection of line 3 with the x-axis, Equation (18), is determined from the location of C1 in the xy-plane, through which line 3 passes.

4. Result and Discussion

The locations of two targets were calculated from EOG signals, as shown in Figure 6(a) and Figure 6(b), and compared with their real positions. Table 2 gives the results in pixels and Table 3 gives the locations in centimeters. Two parts are central to this research: first, the affine transform that processes the EOG pixel coordinates; second, the eye blink used to switch the active camera.
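The line-intersection steps of Equations (8) to (18), together with the blink-based camera switching, can be sketched in Python as below. This is a hedged reconstruction from the prose, not the authors' code, and it rests on assumed geometry: line 1 joins C1 = (d1y, d1z) to the perceived point (0, zp) on the z-axis (the wall), line 2 joins C2 = (d2y, d2z) to (yp, 0) on the y-axis (the floor), and line 3 joins C1 = (d1y, d1x = 0) to (0, xp) in the yx-plane. The perceived locations xp, yp and zp are assumed to be already expressed in centimeters in the base frame (for example, the mov values of Equations (5) to (7) offset by the camera center lines). The blink test applies the peak-ratio criterion from the Abstract to one channel segment. The names `line_through`, `locate_target`, `is_blink` and `next_camera` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Line:
    """Slope-intercept line: value = gradient * y + intercept."""
    gradient: float
    intercept: float


def line_through(p, q):
    """Line through two points (y, value); the intercept comes from substituting p."""
    (y1, w1), (y2, w2) = p, q
    if y1 == y2:
        raise ValueError("vertical line: slope-intercept form is undefined")
    gradient = (w2 - w1) / (y2 - y1)
    return Line(gradient, w1 - gradient * y1)


def locate_target(d1y, d1z, d2y, d2z, xp, yp, zp, d1x=0.0):
    """Hypothetical reconstruction of Equations (8)-(18); returns (x, y, z)."""
    # Assumed geometry: each line joins a camera to its perceived point on an axis.
    line1 = line_through((d1y, d1z), (0.0, zp))  # front camera C1 -> zp on the z-axis
    line3 = line_through((d1y, d1x), (0.0, xp))  # C1 -> xp in the yx-plane
    if d2y == yp:
        y_t = yp  # top camera directly above the perceived point: line 2 is vertical
    else:
        line2 = line_through((d2y, d2z), (yp, 0.0))  # top camera C2 -> yp on the y-axis
        if line1.gradient == line2.gradient:
            raise ValueError("lines 1 and 2 are parallel")
        # Setting line 1 equal to line 2 gives y = (c2 - c1) / (m1 - m2)
        y_t = (line2.intercept - line1.intercept) / (line1.gradient - line2.gradient)
    z_t = line1.gradient * y_t + line1.intercept
    x_t = line3.gradient * y_t + line3.intercept
    return x_t, y_t, z_t


def is_blink(segment):
    """Blink if |positive peak / negative peak| > 1 (criterion from the Abstract)."""
    pos_peak, neg_peak = max(segment), min(segment)
    if neg_peak >= 0:
        return pos_peak > 0  # no negative lobe: treat the ratio as unbounded
    return abs(pos_peak / neg_peak) > 1.0


def next_camera(active, segment):
    """Each detected blink toggles the active camera between 'front' and 'top'."""
    if is_blink(segment):
        return "top" if active == "front" else "front"
    return active
```

Keeping the slope-intercept form mirrors the steps in the text; the case where the top camera sits directly above the perceived point is handled separately because line 2 is then vertical and has no finite gradient. For example, with C1 = (200, 100), C2 = (40, 240), xp = 60, yp = 120 and zp = 20, `locate_target` returns approximately (30, 100, 60).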
The affine transform performed well in tracking the object. The error was approximately 0.86° ± 0.67° horizontally and 0.54° ± 0.34° vertically. The key is that the affine transform rotates the pixel coordinates based on a reference line for each area [16]. Switching the camera with blink EOG was also successful in this research. The blink ratio was determined as in [18] and varied among operators.

5. Conclusions

This research used two cameras that were switched by eye blinks. The operator gazes at the target twice: first in the front view and then in the top view. Between the two gaze motions, a blink toggles the active camera.

Figure 6. Target positions on the monitor from (a) the front camera and (b) the top camera.

Table 2. Perceived location of target (x, y, z).

| Pixel | Target 1 | Target 2 |
|-------|----------|----------|
| Real | (315, 219, 78) | (−295, 110, −235) |
| EOG | (298, 190, 70) | (−275, 95, −240) |

Table 3. Real location of target (x, y, z).

| cm | Target 1 | Target 2 |
|----|----------|----------|
| Real | (50, −75, 136) | (−45, −50, 91) |
| EOG | (48, −73, 137) | (−44, −48, 93) |

Based on these results, calculating object positions in a 3-D area from EOG signals can be developed as an alternative method for tracking objects. This research used only voluntary blinks to switch the camera. Some failures in switching occurred when the operator felt tired and blinked involuntarily.

References

[1] Cristian-Cezar, P., Florin, G. and Doru, T. (2012) EOG-Based Visual Navigation Interface Development. Expert Systems with Applications, 9, 10857-10866.

[2] Malmivuo, J. and Plonsey, R. (1995) Bioelectromagnetism. Oxford University Press, New York.

[3] Dhine, K. and Eric, P. (2002) Classification of EOG for Human Computer Interface. 2nd Joint EMBS/BMES Conference, Houston, TX, USA, 23-26 October 2002.

[4] Coughlin, M.J., Cutmore, T.R.H. and Hine, T.J. (2004) Automated Eye Tracking System Calibration Using Artificial Neural Networks. Computer Methods and Programs in Biomedicine, 76, 207-220. http://dx.doi.org/10.1016/j.cmpb.2004.06.001

[5] Banerjee, A., Datta, S., Pal, M., Konar, A., Tibarelawa, D.N. and Janarthanan, R. (2013) Classifying Electrooculogram to Detect Directional Eye Movements. Procedia Technology, 10, 67-75.

[6] Gu, J.J., Meng, M., Cook, A. and Liu, X.P. (2006) Design, Sensing and Control of a Robotic Prosthetic Eye for Natural Eye Movement. Applied Bionics and Biomechanics, 1, 29-41. http://dx.doi.org/10.1533/abbi.2005.0024

[7] Deng, Y.L., Hsu, C.L., Lin, T.C., Tuan, J.S. and Chang, S.H. (2010) EOG-Based Human-Computer Interface System Development. Expert Systems with Applications, 37, 3337-3343. http://dx.doi.org/10.1016/j.eswa.2009.10.017

[8] Lledo, L.D., Ubeda, A., Ianez, E. and Azorin, J.M. (2013) Internet Browsing Application Based on Electrooculography for Disabled People. Expert Systems with Applications, 40, 2640-2648. http://dx.doi.org/10.1016/j.eswa.2012.11.012

[9] Harun, H. and Mansor, W. (2009) EOG Signal Detection for Home Appliances Activation. In: Proceedings of 5th International Colloquium on Signal Processing and Its Applications (CSPA), Kuala Lumpur, Malaysia, 6-8 March 2009.

[10] Ghandi, T., Trikha, M., Santosh, J. and Anand, S. (2010) Development of an Expert Multitask Gadget Controlled by Voluntary Eye Movements. Expert Systems with Applications, 37, 4204-4211. http://dx.doi.org/10.1016/j.eswa.2009.11.082

[11] Bera, R., Boquete, L., Mazo, M. and Lopez, E. (2002) Wheelchair Guidance Strategies Using EOG. Journal of Intelligent and Robotic Systems, 34, 279-299. http://dx.doi.org/10.1023/A:1016359503796

[12] Dev, A., Chacko, H.C. and Varghese, R. (2012) Eye Controlled Wheel Chair Using EOG. In: Proceedings of International Conference on Computing and Control Engineering, Chennai, India, April 2012.

[13] Eduardo, I., Jose, M.A., Eduardo, F. and Andre, U. (2010) Interface Based on Electrooculography for Velocity Control of a Robot Arm. Applied Bionics and Biomechanics, 7, 199-207. http://dx.doi.org/10.1080/11762322.2010.503107

[14] Sasaki, M., Ito, S., Takeda, K., Okamoto, T. and Rusydi, M.I. (2013) Developing a Two-Link Robot Arm Controller Using Voluntary Blink. In: Proceedings of the 22nd MAGDA Conference, Miyazaki, Japan, December 2013.

[15] Rusydi, M.I., Okamoto, T., Ito, S. and Sasaki, M. (2014) Rotation Matrix to Operate a Robot Manipulator for 2D Analog Tracking Object Using Electrooculography. Robotics, 3, 289-309. http://dx.doi.org/10.3390/robotics3030289

[16] Rusydi, M.I., Mori, Y., Okamoto, T., Sasaki, M. and Ito, S. (2012) Using EOG Signal to Control Manipulator. In: Proceedings of the 7th Asia Pacific Symposium on Applied Electromagnetics and Mechanics, Ho Chi Minh City, Vietnam, July 2012.

[17] Rusydi, M.I., Sasaki, M. and Ito, S. (2014) Affine Transform to Reform Pixel Coordinate of EOG Signals for Controlling Robot Manipulators Using Gaze Motions. Sensors, 14, 10107-10123. http://dx.doi.org/10.3390/s140610107

[18] Okamoto, T., Sasaki, M., Ito, S., Takeda, K. and Rusydi, M.I. (2013) Using Gaze Point and Blink Detection to Control Robot Arm by Use of the EOG Signal. In: Proceedings of the 22nd MAGDA Conference, Miyazaki, Japan, December 2013.

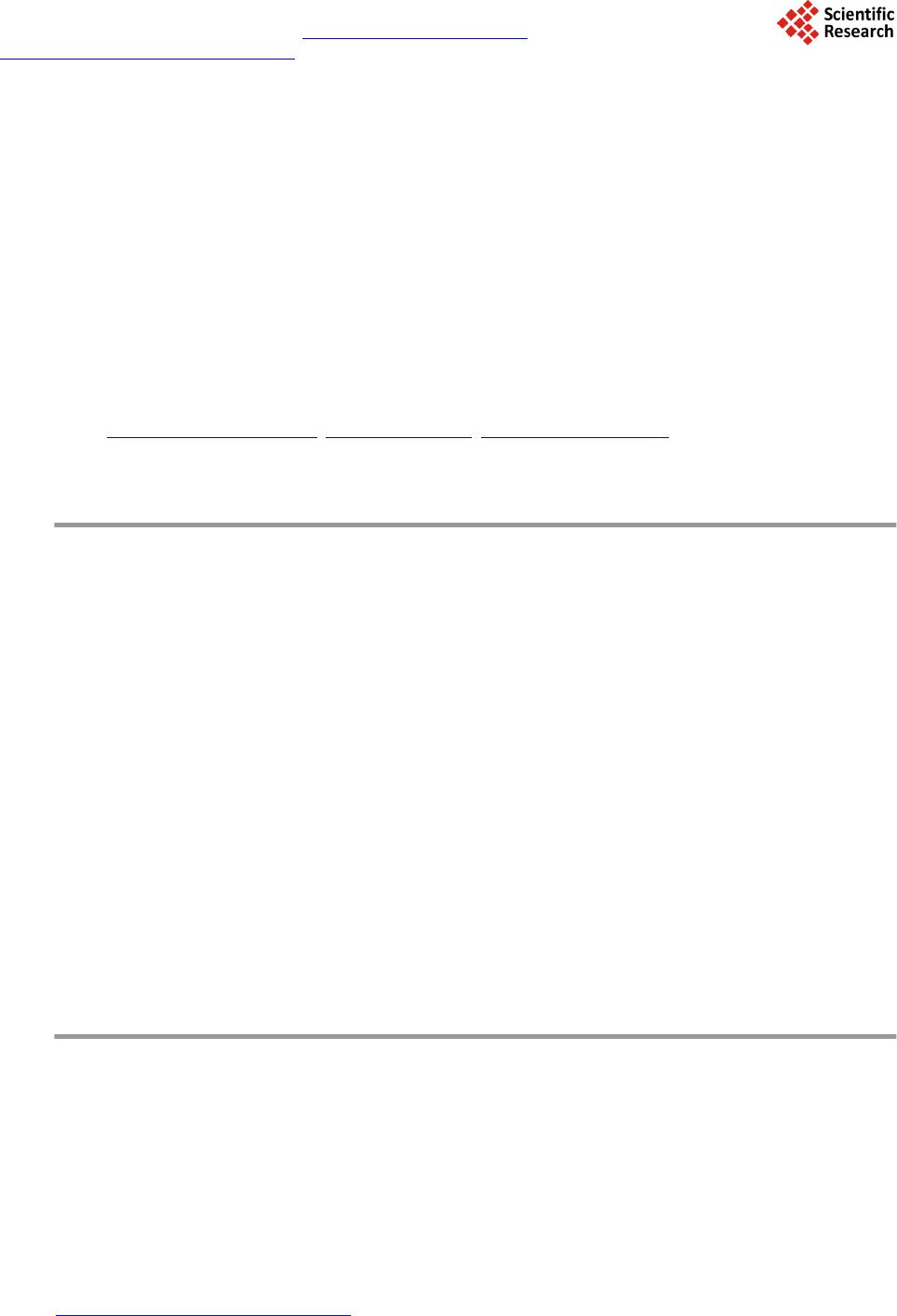

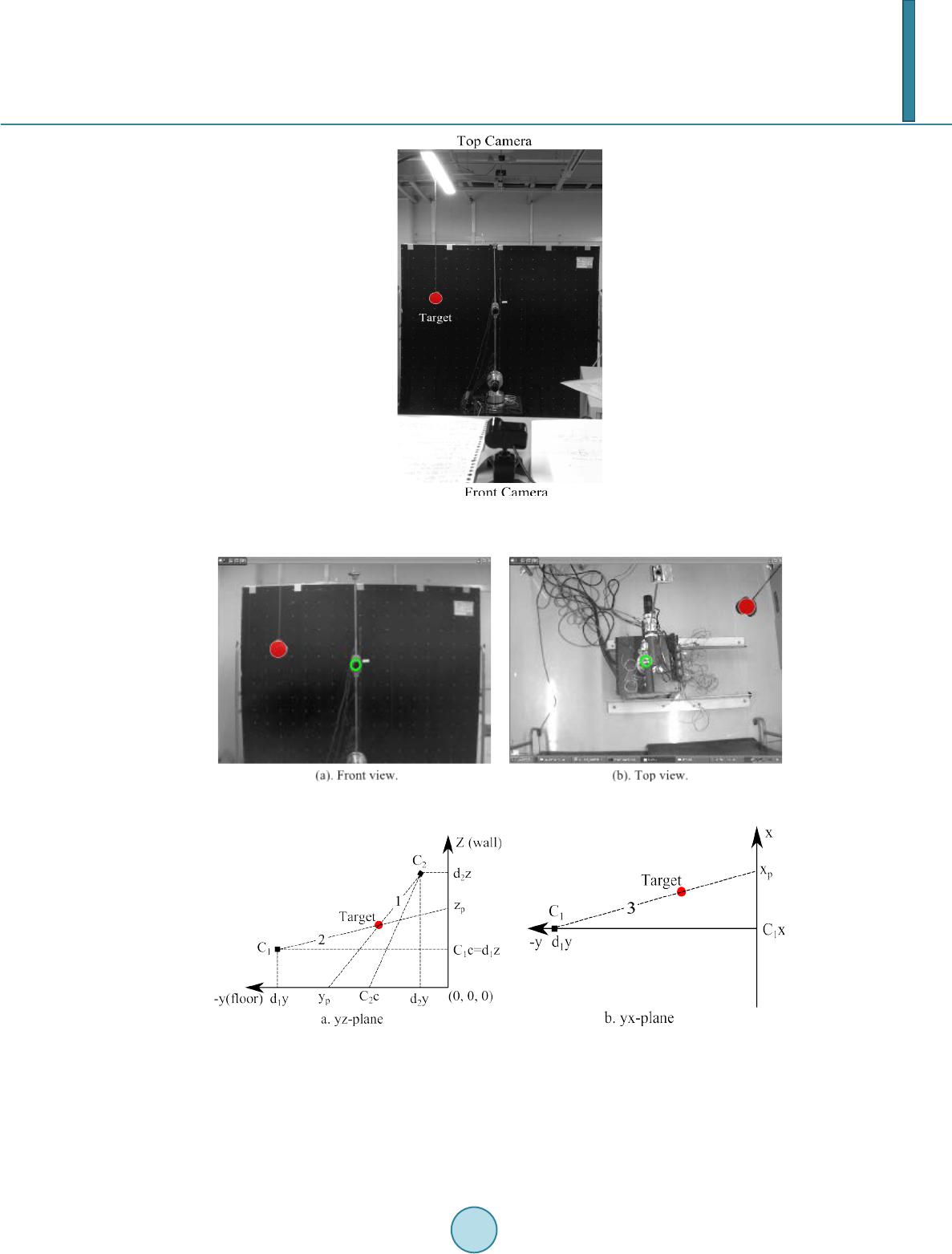

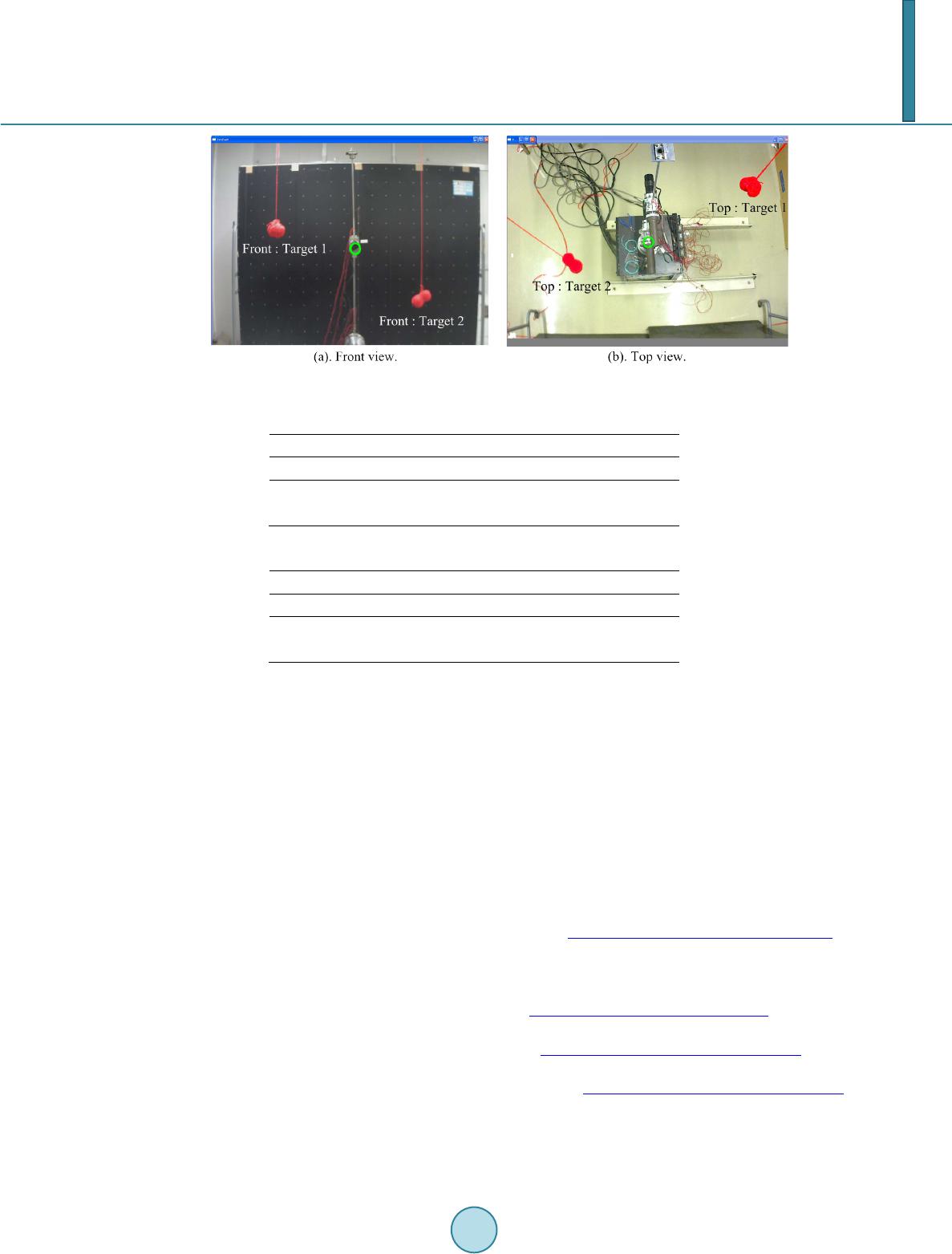
