F. K. FARAMARZI ET AL.

Copyright © 2013 SciRes. ENG

ACC TPTN/ TPTNFPFN=++ ++ (5)

In our classification task, the minor ity class is labeled

as positive, and the majority class is labeled as negative.

TP, TN, FP and FN are the numbers of true positive, true

negative, false positive and false negative, respectively.

If we use imbalanced dataset, even when the classifier

classifies all the minority instances incorrectly and all the

majority instances correctly, the accuracy is high because

the majority instances are more than minority ones. For

this reason, we also use sensitivity (SEN), specificity

(SPEC) and Area Under Curve (AUC) to evaluate the

performance of the predictor. AUC is a measure that de-

termines the quality of the prediction by calculating the

area under Receiver Operating Characteristic (ROC)

curve. ROC curve is a graphical plot of the true-positive

rate vs. false-positive rate. For the perfect predictor the

AUC is equal 1. Sensitivity and specificity are also given

by following Equations (6) and (7):

(6 )

(7)

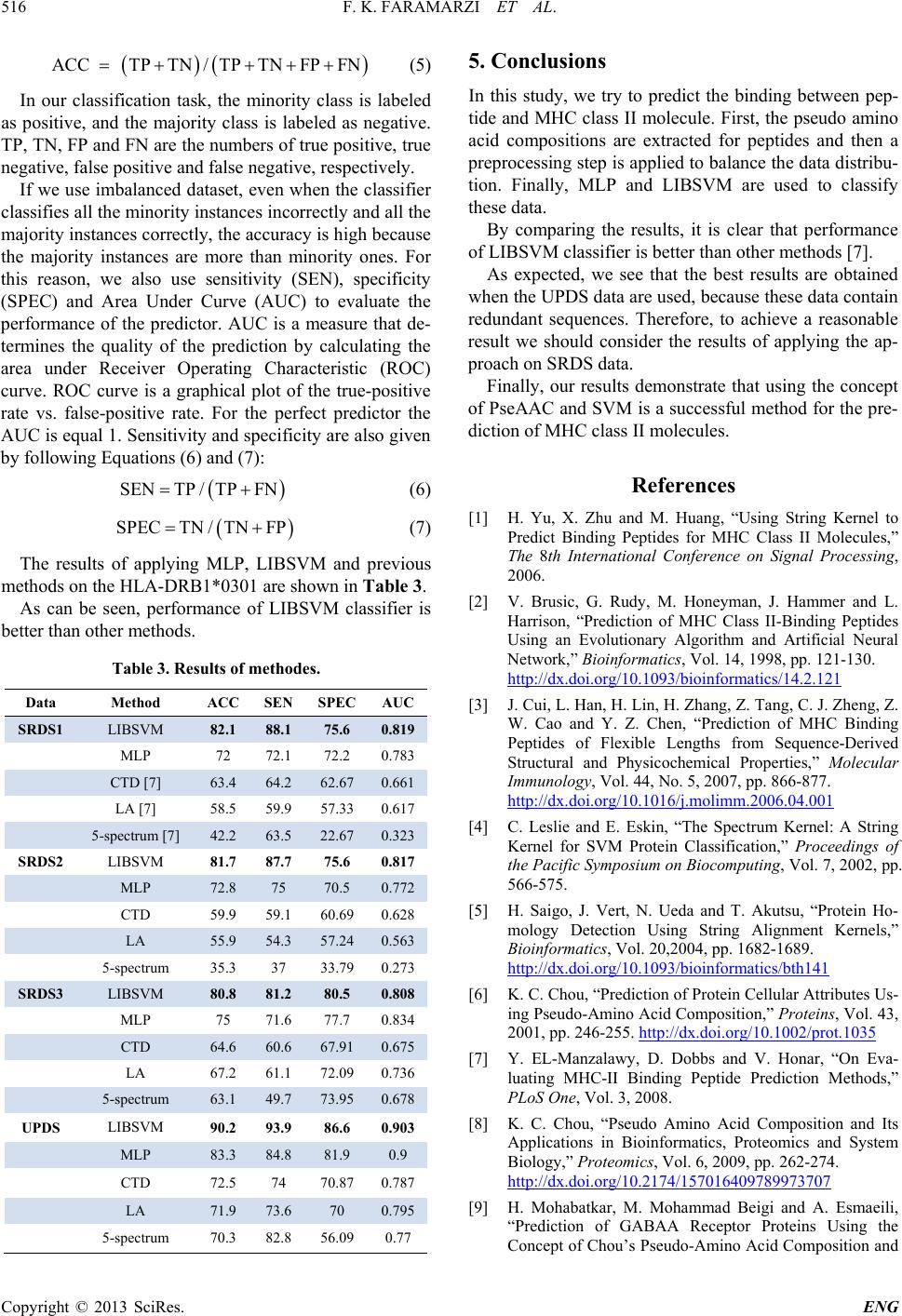

The results of applying MLP, LIBSVM and previous

methods on the HLA-DRB1*0301 are shown in Table 3.

As can be seen, performance of LIBSVM classifier is

better than other methods.

Table 3. Results of methodes.

Data Method ACC SEN SPEC AUC

SRDS1 LIBSVM 82.1 88.1 75.6 0.819

MLP 72 72.1 72.2 0.783

CTD [7] 63.4 64.2 62.67 0.661

LA [7] 58.5 59.9 57.33 0.617

5-spectrum [7] 42.2 63.5 22.67 0.323

SRDS2 LIBSVM 81.7 87.7 75.6 0.817

MLP 72.8 75 70.5 0.772

CTD 59.9 59.1 60.69 0.628

LA 55.9 54.3 57.24 0.563

5-spectrum 35.3 37 33.79 0.273

SRDS3 LIBSVM 80.8 81.2 80.5 0.808

MLP 75 71.6 77.7 0.834

CTD 64.6 60.6 67.91 0.675

LA 67.2 61.1 72.09 0.736

5-spectrum 63.1 49.7 73.95 0.678

UPDS LIBSVM 90.2 93.9 86.6 0.903

MLP 83.3 84.8 81.9 0.9

CTD 72.5 74 70.87 0.787

LA 71.9 73.6 70 0.795

5-spectrum 70.3 82.8 56.09 0.77

5. Conclusions

In this study, we try to predict the binding between pep-

tide and MHC class II molecule. First, the pseudo amino

acid compositions are extracted for peptides and then a

preprocessing step is applied to balance the data distribu-

tion. Finally, MLP and LIBSVM are used to classify

these data.

By comparing the results, it is clear that performance

of LIBSVM classifier is better than other methods [7].

As expected, we see that the best results are obtained

when the U PD S data are used, because these data contain

redundant sequences. Therefore, to achieve a reasonable

result we should consider the results of applying the ap-

proach on SRDS data.

Finally, our r esults demonstrate that using the concept

of PseAAC and SVM is a successful method for the pre-

diction of MHC class II molecules.

References

[1] H. Yu, X. Zhu and M. Huang, “Using String Kernel to

Predict Binding Peptides for MH C Class II Molecules,”

The 8th International Conference on Signal Processing,

2006.

[2] V. Brusic, G. Rudy, M. Honeyman, J. Hammer and L.

Harrison, “Prediction of MHC Class II-Binding Peptides

Using an Evolutionary Algorithm and Artificial Neural

Network,” Bioinformatics, Vol. 14, 1998, pp. 121-130.

http://dx.doi.org/10.1093/bioinformatics/14.2.121

[3] J. Cui, L. Han, H. Lin, H. Zhang, Z. Tang, C. J. Zheng, Z.

W. Cao and Y. Z. Chen, “Prediction of MHC Binding

Peptides of Flexible Lengths from Sequence-Derived

Structural and Physicochemical Properties,” Molecular

Immunology, Vol. 44, No. 5, 2007, pp. 866-877.

http://dx.doi.org/10.1016/j.molimm.2006.04.001

[4] C. Leslie and E. Eskin, “The Spectrum Kernel: A String

Kernel for SVM Protein Classification,” Proceedings of

the Pacific Symposium on Biocomputing, Vol. 7, 2002, pp.

566-575.

[5] H. Saigo, J. Vert, N. Ueda and T. Akutsu, “Protein Ho-

mology Detection Using String Alignment Kernels,”

Bioinformatics, Vol. 20,2004, pp. 1682-1689.

http://dx.doi.org/10.1093/bioinformatics/bth141

[6] K. C. Chou, “Prediction of Protein Cellular Attributes Us-

ing Pseudo-Amino Acid Composition,” Proteins, Vol. 43,

2001, pp. 246-255. http://dx.doi.org/10.1002/prot.1035

[7] Y. EL-Manzalawy, D. Dobbs and V. Honar, “On Eva-

luating MHC-II Binding Peptide Prediction Methods,”

PLoS One, Vol. 3, 2008.

[8] K. C. Chou, “Pseudo Amino Acid Composition and Its

Applications in Bioinformatics, Proteomics and System

Biology,” Proteomics, Vol. 6, 2009, pp. 262-274.

http://dx.doi.org/10.2174/157016409789973707

[9] H. Mohabatkar, M. Mohammad Beigi and A. Esmaeili,

“Prediction of GABAA Receptor Proteins Using the

Concept of Chou’s Pseudo-Amino Acid Composition and