Advances in Remote Sensing

Vol.05 No.03(2016), Article ID:70845,11 pages

10.4236/ars.2016.53016

Segment-Based Terrain Filtering Technique for Elevation-Based Building Detection in VHR Remote Sensing Images

Alaeldin Suliman*, Yun Zhang

Department of Geodesy and Geomatics Engineering, University of New Brunswick, Fredericton, New Brunswick, Canada

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 30 July 2016; accepted 23 September 2016; published 26 September 2016

ABSTRACT

Building detection in very high resolution (VHR) remote sensing images is crucial for many urban planning and management applications. Since buildings are elevated objects, the incorporation of elevation data provides a means of reliable detection. However, almost all existing methods of elevation-based building detection must first generate a normalized Digital Surface Model (nDSM). This model is generated by extracting terrain elevations and subtracting them from the DSM data. Generating an accurate nDSM is still, to some extent, a challenging task. This paper introduces a segment-based terrain filtering (SegTF) technique to filter out the terrain elevations directly using DSM elevations. This technique has four steps: elevation co-registration, image segmentation, slope calculation, and building detection. These steps were applied to a dataset that consisted of a VHR image and a corresponding DSM for detecting buildings. The result of the building detection was evaluated and found to be 100% correct with an overall detection quality of 93%. These values indicate a highly reliable and promising technique for mapping buildings in VHR images.

Keywords:

Building Detection, Terrain Filtering, Elevation Normalization, VHR Imagery

1. Introduction

Building information plays an important role in geo-information systems and in urban planning and management applications. The most cost-effective and widely available geo-data for mapping buildings are remotely sensed very high resolution (VHR) images. These images contain an extraordinary level of detail that allows accurate and detailed mapping of man-made urban objects, including buildings. However, the high level of ground detail brings many challenges for pixel-based image classification and mapping techniques. These challenges are caused by the spectral intra-diversity and inter-similarity of urban objects [1]. Consequently, building detection in VHR images has been an active area of research in the past few years [2] [3].

Since buildings are elevated objects, the key feature for delineating these objects is the aboveground elevation information. Therefore, integrating the two dimensional VHR images with the corresponding height information should provide a more complete surface representation that allows reliable elevation-based building detection. To be ideal for detecting and mapping buildings, this representation requires accurate co-registration of the optical and elevation data as well as precise normalization of the elevation data [4] [5] . Normalizing the elevation data is a critical step for all elevation-based building detection procedures, and a challenging one by itself. This study, thus, is interested in developing a methodology that would avoid the normalization step (filtering bare-ground effects) that is typically required for the process of detecting buildings based on elevation data.

Elevation data can be generated by different remote sensing techniques based on photogrammetric and LiDAR (light-detection-and-ranging) technologies. However, these techniques provide height information only for the top of surfaces such as buildings and trees; in other words, digital surface models (DSMs). Digital terrain models (DTMs), on the other hand, are a representation of the bare-ground (terrain) elevations. They are produced after applying classification or filtering on the DSM to identify terrain features from off-terrain objects. When DTMs are subtracted from their corresponding DSMs, normalized digital surface models (nDSMs) are created. They describe only above-terrain elevations [6] [7] .

Various filtering techniques have been developed to filter (classify) terrain from off-terrain objects in DSMs. Based on the data source, these techniques can be categorized as either techniques for LiDAR-based DSMs or techniques for photogrammetric-based DSMs [8] . On the one hand, LiDAR-based DSMs are generated from point-cloud data type, and generally characterized by accurate elevations, sharp edge preservation, and very low existence of noise or outliers. Additionally, due to the availability of the first and last return pulses, the ground and non-ground elevations are recorded for the same location. On the other hand, photogrammetric-based DSMs are derived from stereo matching of overlapping images. Hence, the resulting DSMs usually suffer from relatively unreliable elevations, occlusion gaps, unclear building edges, and outliers due to mismatches in the low textured areas [8] .

Sithole and Vosselman [9] reviewed eight algorithms for DTM extraction from LiDAR point-cloud data. They concluded that all reviewed algorithms follow one of four approaches for defining ground points: slope-based, block-minimum, surface-based, and clustering/segmentation. In slope-based algorithms, the slope between two neighboring points is measured and compared against a threshold. The block-minimum techniques select a horizontal plane with a buffer zone to work as a discrimination function. Surface-based techniques use a parametric surface with a corresponding buffer zone that defines a region in 3D space where ground points are expected to reside. Finally, clustering/segmentation algorithms aggregate a set of points based on their elevation data and assign these points to an off-terrain object if their cluster is above its neighbors.

Photogrammetric- or stereo-based DSMs can be derived from either airborne or space-borne stereo images. Directly applying LiDAR-based DSM filtering algorithms to stereo-based DSM data may fail to filter off-terrain objects, especially at building edges, due to the smooth intensity transition from the ground to the building roof. The process is further complicated by the lack of the multiple returns that are exploited by LiDAR-based DSM filtering algorithms. However, the existence of image data is advantageous, as color and spectral information can be utilized for filtering the ground. An example of such an approach is presented in [10], where a dense DSM is generated from multiple airborne images and supplemented with the results of a pixel-based classification. However, pixel-based classification usually produces inaccurate (noisy) thematic classes. Additionally, urban objects such as building roofs and parking lots have high spectral and spatial similarities that make spectral-based classification techniques fail to distinguish one class from the other.

For the case of DSMs generated from space-borne stereo images, Krauß and Reinartz [11] presented an appealing integration of classification information and a steep-edge detection algorithm for DTM extraction and DSM enhancement. However, the steep-edge detection algorithm assumes that the lower elevation at any steep edge represents the bare-earth. Clearly, this assumption may not be valid in many dense urban areas, for example in the case of adjacent building roofs of different heights as well as when there is no road to separate between the buildings. Their approach was also challenged in the case of using classification information from the single-band panchromatic image of Cartosat-1 that provided a very limited discrimination power [12] .

The review of DTM extraction algorithms shows that most of them do not consider the image data that correspond to the elevation models. Even when image data are incorporated, building roofs and parking lots are usually excluded due to their confusing spectral similarity. Moreover, most DTM filtering algorithms are designed to process elevation data in either pixel or point-cloud format and hence suffer from the large size of the data. In contrast to all the reviewed techniques, if the processing unit is converted from individual pixels into image segments (i.e., groups of pixels), the generated segments would be less sensitive to the spectral and elevation variances within the objects. Additionally, these segments would provide more sophisticated types of morphological and contextual information that can help in detecting and filtering terrain-level objects [13] [14].

Consequently, this study argues that clustering DSM elevations based on meaningful image segments would achieve more reliable building detection. As such, this study takes advantage of the availability of a VHR image and its relevant DSM dataset to segment the elevations. It also suggests that classifying the segmented objects should be based on the slopes calculated between each segment and the surrounding ones.

In the present paper, therefore, a segment-based terrain filtering (SegTF) technique is proposed to identify off-terrain segments using un-normalized DSM elevations. The technique utilizes parameter-optimized image segments generated from the image spectral information to guarantee the best possible boundary representation for image objects. The identification of the off-terrain segments is based on slope information among the optimized image segments. Because this is a very localized and relative operation among neighboring segments, there is no need to normalize the DSM, which constitutes the novelty of this work. An early and concise version of this work was presented in [15].

The rest of the paper is outlined as follows: the proposed SegTF technique is detailed in Section 2. The elevation-based building detection based on the developed SegTF is described in Section 3. The test data, results, and discussions are provided in Section 4. Finally, the drawn conclusions are given in Section 5.

2. Segment-Based Terrain Filtering (SegTF) Technique

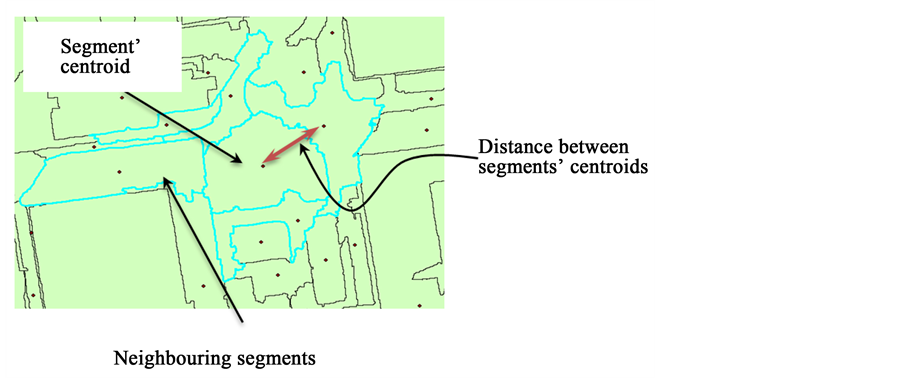

Before describing the concept of the SegTF technique, it is assumed that 1) the DSM elevation data are accurately co-registered to the relevant image, 2) the relevant image is optimally segmented, 3) each image segment is abstracted by a representative point (RP), which is the segment's inner-centroid point, and 4) the average elevation of each segment is assigned to its RP. Figure 1 illustrates an example of a segment surrounded by its neighbors. The dots in this figure indicate the location of each segment's RP.

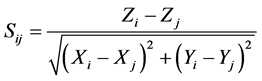

The concept of the SegTF technique is straightforward. It calculates the slope value for each image segment with respect to all of its neighboring segments. For a segment to be considered a neighbor of another, the two must share a boundary. The slope values are computed as described in Equation (1). In this equation, the numerator represents the elevation difference between the segment under consideration and its neighbor, while the denominator represents the planimetric distance between the two segments' RPs, as indicated in Figure 1.

Figure 1. A segment, its representative point, and its neighboring segments.

$$S_{ij} = \frac{\bar{Z}_i - \bar{Z}_j}{\sqrt{(X_i - X_j)^2 + (Y_i - Y_j)^2}} \qquad (1)$$

where $S_{ij}$ is the slope from the segment under consideration (i) to the neighboring segment (j); $\bar{Z}_i$ and $\bar{Z}_j$ are the generalized elevations for segments i and j, respectively; $(X_i, Y_i)$ represent the RP planimetric coordinates of segment (i); and $(X_j, Y_j)$ represent the RP planimetric coordinates of segment (j).
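Since the slopes are evaluated only between segments that share a boundary, the neighbor relation has to be derived before any slope is computed. The following Python sketch illustrates one possible way to do so. It assumes that the segmentation is available as a label raster (rows of integer segment IDs) and that a shared boundary corresponds to 4-connectivity between pixels; the function name `build_adjacency` and the toy raster are hypothetical and are not taken from the tool developed in this study.

```python
def build_adjacency(label_grid):
    """Map each segment ID to the set of segment IDs it shares an edge with.

    Assumption: label_grid is a rectangular list of rows of integer segment
    IDs, and two segments are neighbors when any of their pixels touch along
    a pixel edge (4-connectivity).
    """
    neighbors = {}
    rows, cols = len(label_grid), len(label_grid[0])
    for r in range(rows):
        for c in range(cols):
            a = label_grid[r][c]
            neighbors.setdefault(a, set())  # keep isolated segments too
            # Only look right and down; each pixel pair is visited once.
            for dr, dc in ((0, 1), (1, 0)):
                rr, cc = r + dr, c + dc
                if rr < rows and cc < cols:
                    b = label_grid[rr][cc]
                    if a != b:
                        neighbors[a].add(b)
                        neighbors.setdefault(b, set()).add(a)
    return neighbors


# Hypothetical 3 x 4 label raster with four segments.
toy_labels = [
    [1, 1, 2, 2],
    [1, 1, 3, 3],
    [4, 4, 4, 4],
]
adjacency = build_adjacency(toy_labels)
```

In this toy raster, segments 2 and 4 never touch, so neither appears in the other's neighbor set and no slope would be computed between them.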

The flowchart for the process of the SegTF technique is provided in Figure 2. As shown, the algorithm loops through the generated segments to calculate the slopes for each segment with respect to all of its neighbors. Then, it compares the maximum slope value against an empirically defined threshold value (T). If the maximum slope value is larger than the selected threshold, the segment is considered an off-terrain object. Otherwise, it is classified as a terrain object.

Figure 2. Flowchart for the proposed segment-based terrain filtering (SegTF) algorithm.
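The decision rule of the SegTF loop can be illustrated with a minimal Python sketch. It assumes that each segment is stored as a dictionary holding its RP coordinates and generalized elevation, that the slope is taken as the elevation of the segment under consideration minus that of its neighbor, divided by the planimetric distance between the RPs, and that horizontal and vertical units are consistent. The names `slope` and `segtf_classify`, the threshold of 0.3, and all toy values are illustrative assumptions rather than those used in this study.

```python
import math


def slope(seg_i, seg_j):
    """Slope from segment i to neighbor j (positive when i is higher)."""
    dz = seg_i["z"] - seg_j["z"]
    dist = math.hypot(seg_i["x"] - seg_j["x"], seg_i["y"] - seg_j["y"])
    return dz / dist


def segtf_classify(segments, neighbors, threshold):
    """Label a segment 'off-terrain' if its maximum slope exceeds threshold."""
    labels = {}
    for sid, seg in segments.items():
        slopes = [slope(seg, segments[n]) for n in neighbors.get(sid, [])]
        # A segment without neighbors cannot be compared; treat it as terrain.
        max_slope = max(slopes) if slopes else 0.0
        labels[sid] = "off-terrain" if max_slope > threshold else "terrain"
    return labels


# Hypothetical scene: a roof (1) bordered by a road (2) and a lawn (3).
segments = {
    1: {"x": 10.0, "y": 10.0, "z": 108.0},
    2: {"x": 20.0, "y": 10.0, "z": 100.0},
    3: {"x": 10.0, "y": 22.0, "z": 100.5},
}
neighbors = {1: [2, 3], 2: [1, 3], 3: [1, 2]}
labels = segtf_classify(segments, neighbors, threshold=0.3)
```

In this toy scene only segment 1 is flagged, because its slopes toward both neighbors are large and positive, whereas the road and the lawn have only negative or near-zero slopes. No terrain model is subtracted at any point, which reflects the localized and relative nature of the operation.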

The terrain-level class includes objects like traffic areas, grass lands, water bodies, and shadows. On the other hand, off-terrain objects include only buildings and trees. The SegTF technique does not cater for the case of green roofs (vegetation lying on top of a building roof) in the image since this case rarely appears in urban areas.

3. Elevation-Based Building Detection Methodology

Based on the arguments in Section 1, the elevation-based building detection methodology is intended to 1) cluster the DSM elevations based on image segmentation, and 2) filter out the terrain-level objects based on the measured slope information. Figure 3 shows a flowchart of the five specific steps required to achieve building detection once the developed SegTF technique is incorporated. The method starts by co-registering the optical and elevation datasets, followed by an optimized segmentation of the image. The average height for each segment is then calculated in the third step. The last two steps are for filtering out the terrain-level objects, using the SegTF technique, and detecting buildings. Further descriptions of these steps are provided in the following paragraphs.

Optical-elevation data co-registration―This step is required to integrate the elevation data in a DSM with the optical data of the corresponding VHR imagery. Optical-elevation data co-registration can be implemented in a straightforward fashion using image-to-image registration techniques that use 2D mapping functions and a set of matching points. However, this type of co-registration will suffer from severe misregistration when the VHR image is acquired off-nadir [4] . In this case, the solution of line-of-sight DSMs is recommended for achieving co-registration with sub-pixel accuracy. This solution is based on incorporating sensor model information for accurate co-registration as introduced by Suliman and Zhang [4] .

Image optimized segmentation―After achieving a successful co-registration, the VHR image needs to be segmented to reduce its complexity. The image segmentation technique to be used must divide the image into objects based on a measure of color or spectral homogeneity. Baatz and Schäpe [16] developed a multiresolution segmentation technique that was later found to be one of the most appropriate techniques for segmenting VHR images in urban areas [17]. The results of implementing this technique depend heavily on the selected values for the segmentation parameters (scale, compactness, and smoothness). A trial-and-error approach is usually followed until an acceptable result is achieved. To remedy this situation, Tong, Maxwell, Zhang and Dey [18] developed a Fuzzy-based Segmentation Parameter (FbSP) optimizer. It is a supervised segmentation tool for automated determination of the optimal parameters for the multiresolution segmentation. Hence, this tool is recommended for the proposed building detection methodology.

Figure 3. Flowchart for the elevation-based building detection methodology.

Height generalization―This step calculates and assigns elevation values to the RPs of the segments generated by the previous step. The boundary of each image segment is first converted into a polygon in a vector format. Each polygon typically contains several elevation values enclosed within its boundary. Each polygon should be assigned only one elevation value that is simply the average of these elevation values. This generalization by averaging mitigates any error caused by misregistration between the two datasets as well as removes the effects of elevation outliers resulting from stereo-based approaches.
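The averaging step can be sketched in Python as follows. The sketch assumes that the DSM has already been co-registered and resampled to the image grid, so that the segment label raster and the DSM raster have identical dimensions, and that missing elevations are flagged with a no-data value. The function name `generalize_heights` and the toy rasters are hypothetical.

```python
from collections import defaultdict


def generalize_heights(label_grid, dsm_grid, nodata=None):
    """Return {segment_id: mean elevation} from two aligned rasters.

    Assumption: label_grid and dsm_grid are lists of rows with identical
    shape; cells equal to `nodata` (or None) are skipped.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for label_row, dsm_row in zip(label_grid, dsm_grid):
        for seg_id, z in zip(label_row, dsm_row):
            if z is None or z == nodata:
                continue  # ignore gaps in the elevation data
            sums[seg_id] += z
            counts[seg_id] += 1
    return {seg_id: sums[seg_id] / counts[seg_id] for seg_id in sums}


# Hypothetical 2 x 3 rasters: segment 1 is a roof, segment 2 is ground.
toy_labels = [[1, 1, 2],
              [1, 2, 2]]
toy_dsm = [[105.0, 107.0, 100.0],
           [106.0, 100.4, 99.6]]
mean_z = generalize_heights(toy_labels, toy_dsm)
```

Here the roof segment receives a generalized elevation of 106.0 and the ground segment approximately 100.0, so a single outlying cell has only a limited effect on the value assigned to the RP.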

The RPs are used to abstract the image segments in point features. Hence, these RPs must be guaranteed to be within their corresponding segments. For the convex polygon shapes, this representative point is the centroid that lies inside the polygon boundary. However, if the centroid point lies outside the boundary, then the representative point shall be the center of the largest circle that fits inside the polygon as introduced by Garcia-Castellanos and Lombardo [19] . Examples of such inner-centroids contained within their segments are shown earlier in Figure 1.
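A simplified Python sketch of this selection rule is given below. It uses the area-weighted centroid when that point falls inside the polygon and otherwise searches a regular grid for the interior point that is farthest from the boundary. This coarse grid search is only an assumed stand-in for the iterative algorithm of [19], and the function names, the grid density, and the test polygons are hypothetical.

```python
import math


def polygon_centroid(poly):
    """Area-weighted centroid of a simple polygon [(x, y), ...]."""
    a = cx = cy = 0.0
    n = len(poly)
    for k in range(n):
        x0, y0 = poly[k]
        x1, y1 = poly[(k + 1) % n]
        cross = x0 * y1 - x1 * y0
        a += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    a *= 0.5
    return cx / (6.0 * a), cy / (6.0 * a)


def point_in_polygon(pt, poly):
    """Even-odd ray-casting test."""
    x, y = pt
    inside = False
    n = len(poly)
    for k in range(n):
        x0, y0 = poly[k]
        x1, y1 = poly[(k + 1) % n]
        if (y0 > y) != (y1 > y):
            x_cross = x0 + (y - y0) * (x1 - x0) / (y1 - y0)
            if x < x_cross:
                inside = not inside
    return inside


def dist_to_boundary(pt, poly):
    """Shortest distance from pt to any polygon edge."""
    px, py = pt
    best = float("inf")
    n = len(poly)
    for k in range(n):
        x0, y0 = poly[k]
        x1, y1 = poly[(k + 1) % n]
        dx, dy = x1 - x0, y1 - y0
        len2 = dx * dx + dy * dy
        t = 0.0 if len2 == 0 else ((px - x0) * dx + (py - y0) * dy) / len2
        t = max(0.0, min(1.0, t))  # clamp the projection onto the edge
        best = min(best, math.hypot(px - (x0 + t * dx), py - (y0 + t * dy)))
    return best


def representative_point(poly, grid=50):
    """Centroid if it lies inside; otherwise an approximate inner point."""
    c = polygon_centroid(poly)
    if point_in_polygon(c, poly):
        return c
    xs = [p[0] for p in poly]
    ys = [p[1] for p in poly]
    best_pt, best_d = None, -1.0
    for i in range(grid + 1):
        for j in range(grid + 1):
            pt = (min(xs) + (max(xs) - min(xs)) * i / grid,
                  min(ys) + (max(ys) - min(ys)) * j / grid)
            if point_in_polygon(pt, poly):
                d = dist_to_boundary(pt, poly)
                if d > best_d:
                    best_pt, best_d = pt, d
    return best_pt


square = [(0, 0), (4, 0), (4, 4), (0, 4)]
u_shape = [(0, 0), (6, 0), (6, 6), (4, 6), (4, 2), (2, 2), (2, 6), (0, 6)]
rp_square = representative_point(square)
rp_u = representative_point(u_shape)
```

For the square, the centroid itself is returned. For the U-shaped polygon, the centroid falls in the notch outside the polygon, so the fallback search returns a point inside the polygon instead.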

Segment-based terrain filtering (SegTF) technique―By now, each segment has one elevation value assigned to its RP and indicating the height of that segment above the vertical/elevation datum. The vertical dimension of the RPs is the object-space elevation, while the horizontal dimensions are the image-space coordinates. The slopes between each segment and all of its neighbors can now be calculated. The distances between the RPs and the generalized elevations are used to detect the off-terrain objects as described in the SegTF technique (Section 2).

Building detection and post-processing―Once off-terrain objects are detected by executing the SegTF technique, the results usually need to be post-processed for enhancement. Some off-terrain objects may have been over-segmented into smaller objects. In this case, the finishing step should include merging the neighboring off-terrain segments. Furthermore, there may be some small objects (with a noise-like appearance) that were misdetected because of their elevations. Such objects can be removed by area-based thresholding. However, prior to the merging of segments, the off-terrain objects detected in the previous step include both building roofs and trees. Thus, building segments need to be further discerned from non-building objects. Additionally, specific scenarios were noticed in early experiments where the SegTF technique would fail to identify a building. Most of these incidents can be resolved by applying rule-based filtering or morphological functions.

One such case is caused by the existence of large trees in the scene. Fortunately, vegetation objects can be identified easily using vegetation indices based on the spectral information of the VHR images. However, this does not work in the case of trees without leaves. In this case, the standard deviation of the elevations within each segment can be used as a discrimination feature. Building roofs have a smoother texture than trees do and, hence, have relatively lower values of standard deviations.

Another case may arise when some objects related to shadow areas are detected as elevated objects. This situation is caused when a shadow segment covers a part of the building and, thus, incorporates its elevations in its generalized elevation. This situation may also be caused by a slight misregistration between the image and elevation data at the edges of buildings. In this scenario, the average of brightness values in the relevant segment shall provide a simple delineation component to exclude shadows from the detected off-terrain objects.

A third case noticed in the early experiments is related to the small and slightly elevated objects on top of building roofs. SegTF technique cannot detect such objects surrounded by other roof segments since they have slope values lower than the selected threshold. To resolve this, a rule can be applied to assign the class of building rooftop to any segment surrounded completely by building segments.
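The enclosure rule can be sketched in Python as follows, assuming that the class labels and the neighbor relation are available as dictionaries keyed by segment ID. The function name `fill_enclosed_roofs` and the toy labels are hypothetical, and the sketch applies the rule in a single pass based on the labels before relabeling.

```python
def fill_enclosed_roofs(labels, neighbors):
    """Relabel as 'building' any segment whose neighbors are all buildings."""
    updated = dict(labels)
    for sid, label in labels.items():
        nbrs = neighbors.get(sid, [])
        # Segments without neighbors are left unchanged.
        if label != "building" and nbrs and all(
            labels[n] == "building" for n in nbrs
        ):
            updated[sid] = "building"
    return updated


# Hypothetical scene: segment 3 is a small rooftop object enclosed by roof
# segments 1 and 2; segments 4 (road) and 5 (lawn) are at terrain level.
labels = {1: "building", 2: "building", 3: "terrain",
          4: "terrain", 5: "terrain"}
neighbors = {1: [2, 3, 4], 2: [1, 3, 4], 3: [1, 2], 4: [1, 2, 5], 5: [4]}
refined = fill_enclosed_roofs(labels, neighbors)
```

Segment 3 is reassigned to the building class because both of its neighbors are buildings, whereas the road segment keeps its terrain label since it also borders the lawn.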

4. Datasets and Experiments

4.1. Test Data

The datasets used in this study were a VHR airborne image acquired by an ADS40 linear array sensor and the corresponding LiDAR-based DSM for the same area. The datasets cover an area in Woolpert, Colorado, US, as shown in Figure 4. The ground resolution of the VHR imagery is 0.5 m, while the LiDAR DSM has a resolution of 2.5 m.

4.2. Experimental Results and Discussion

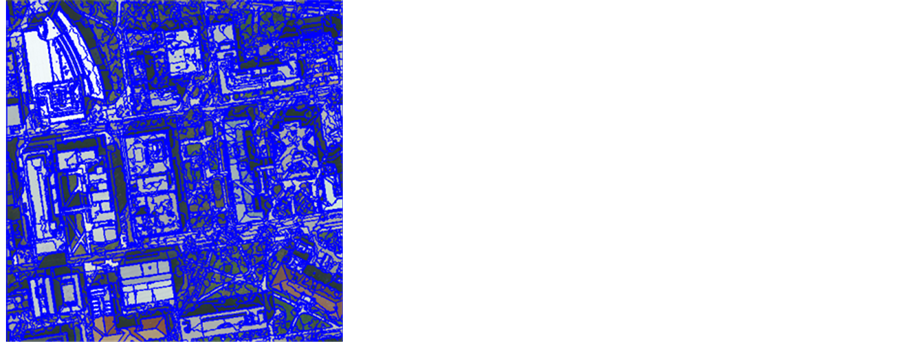

Since the image is of a nadir view, a direct image-to-image co-registration was implemented, as it was deemed sufficient to yield successful co-registration between the image and the DSM. The image was then segmented using the multiresolution segmentation algorithm. Because the achieved segmentation result was not acceptable even after a few trial-and-error attempts, the segmentation parameters were optimized by executing the FbSP tool. Figure 5 illustrates the initial image segmentation and the optimized result after executing the FbSP tool.

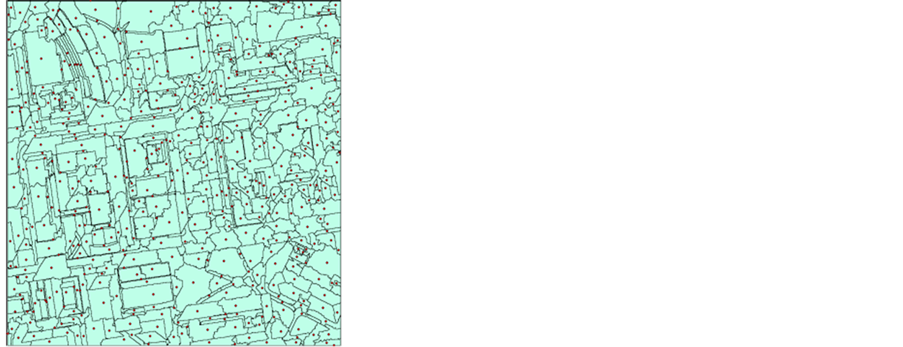

The boundary of each image segment was then converted into a polygon and representative points (RP) were generated. Finally, the DSM elevations were generalized by assigning each RP the mean value of all elevation values within the corresponding segment. Figure 6 shows the calculated RPs for all of the generated polygons along with the generalization of the co-registered DSM.

Thereafter, the SegTF technique was implemented as a Python tool developed to conduct this task automatically. At each RP, slopes to the neighboring segments were calculated, and the maximum value of these slopes was compared against a threshold value. This threshold was determined empirically to reflect the expected gradient of urban areas.

Figure 4. Test data used in the study: (a) VHR imagery; (b) LiDAR-based DSM.

Figure 5. Results of image segmentation: (a) Initial result; (b) Optimized segmentation using FbSP tool.

Figure 6. Generalization of elevations: (a) RPs and polygons of image segments; (b) Generalized DSM elevation.

The detection result was post-processed after applying the thresholding criterion to roughly map the building roofs. Figure 7(a) shows the initial detection result. Trees and shadow segments were detected as off-terrain objects, while some roof objects were missed. The standard deviation of the elevations was calculated for all detected off-terrain polygons. Segments with values higher than the 30th percentile of the standard deviation range were deemed to represent tree branches and, hence, were eliminated from the building class. Likewise, the brightness over all VHR bands was calculated, and segments with values lower than the 20th percentile of the brightness range were eliminated from the building class, since they were considered to represent shadows that are attached to building edges and include some high elevation values.

While Figure 7(b) shows the result after detecting trees and shadow segments, Figure 7(c) shows the result after excluding these segments. After that, the remaining segments were merged, and the segments surrounded by building segments were assigned to the building class. The final building detection result is shown in Figure 7(d).
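The two exclusion rules can be illustrated with the Python sketch below. It rests on one possible reading of the thresholds, namely that each cut-off lies at the stated fraction of the span between the minimum and maximum observed values (30% for the elevation standard deviation and 20% for brightness); this interpretation, the function names, and all toy values are assumptions made for illustration only.

```python
import statistics


def range_cutoff(values, fraction):
    """Value located at `fraction` of the span between min and max."""
    lo, hi = min(values), max(values)
    return lo + fraction * (hi - lo)


def filter_buildings(candidates, std_fraction=0.30, brightness_fraction=0.20):
    """Split off-terrain candidates into building, tree, and shadow classes."""
    stds = {sid: statistics.pstdev(c["elevations"])
            for sid, c in candidates.items()}
    std_cut = range_cutoff(list(stds.values()), std_fraction)
    bright_cut = range_cutoff(
        [c["brightness"] for c in candidates.values()], brightness_fraction
    )
    result = {}
    for sid, c in candidates.items():
        if stds[sid] > std_cut:
            result[sid] = "tree"      # rough elevation texture
        elif c["brightness"] < bright_cut:
            result[sid] = "shadow"    # dark segment attached to a building
        else:
            result[sid] = "building"
    return result


# Hypothetical off-terrain candidates produced by the slope thresholding.
candidates = {
    "roof": {"elevations": [108.0, 108.2, 107.9, 108.1], "brightness": 150},
    "tree": {"elevations": [103.0, 109.0, 105.0, 111.0], "brightness": 90},
    "shadow": {"elevations": [104.0, 104.3, 103.8, 104.1], "brightness": 20},
}
classes = filter_buildings(candidates)
```

With these toy values, the segment with widely scattered elevations is rejected as a tree, the darkest segment is rejected as a shadow, and only the smooth, bright segment is retained as a building.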

4.3. Accuracy Assessment

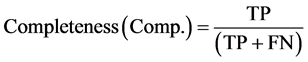

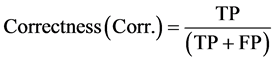

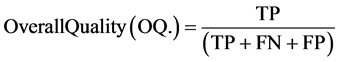

To assess the quality of the detection, this study adopts the commonly used performance measures of completeness, correctness, and overall quality. While completeness is the percentage of entities in the reference data that are successfully detected, correctness indicates how well the detected entities match the reference data. Finally, the overall quality of the results provides a compound performance metric that balances completeness and correctness. The formulas for these three measures are expressed as follows:

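$$\text{Completeness} = \frac{TP}{TP + FN}, \qquad \text{Correctness} = \frac{TP}{TP + FP}, \qquad \text{Overall Quality} = \frac{TP}{TP + FP + FN} \qquad (2)$$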

where the true positive (TP) is the number of building objects that appear in both the detection result and the reference data. The false negative (FN) is the number of building objects in the reference dataset that are not detected. The false positive (FP) represents the number of building objects that are detected but do not correspond to the reference dataset [20].
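As a worked illustration, the three measures can be computed with the short Python function below, assuming the commonly used definitions: completeness = TP / (TP + FN), correctness = TP / (TP + FP), and overall quality = TP / (TP + FP + FN). The pixel counts in the example are hypothetical values chosen only to show the arithmetic; they are not the counts obtained in this study.

```python
def detection_quality(tp, fn, fp):
    """Return (completeness, correctness, overall quality) as fractions."""
    completeness = tp / (tp + fn)
    correctness = tp / (tp + fp)
    quality = tp / (tp + fp + fn)
    return completeness, correctness, quality


# Hypothetical pixel counts: 9300 detected building pixels, 700 missed,
# and no falsely detected pixels.
completeness, correctness, quality = detection_quality(tp=9300, fn=700, fp=0)
```

When there are no false positives, the overall quality reduces to the completeness, which is why both measures take the same value in such a case.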

For the purpose of accuracy assessment, a reference dataset was generated manually by visually identifying and digitizing all buildings in the test image. The detection performance measures were then calculated and are listed in Table 1. The total areas in pixels of true positive (TP), false negative (FN), and false positive (FP) were used as the input entities in the equations for the performance measures.

Figure 7. Intermediate results in building detection: (a) Initial SegTF filtering result; (b) Detected tree and shadow segments; (c) After removing tree and shadow segments; (d) The final building detection result.

Table 1. Building detection accuracy measures.

Based on Table 1, the detection result was 100% correct. This means that all detected buildings are also in the reference dataset, without any false positives. Thus, this value indicates a high level of reliability for the results of the developed technique. On the other hand, the completeness measure indicates that 7% of the building pixels were not detected. The main reason is that roof segments surrounded by other roof objects of the same or slightly different elevations have very small slope values. Therefore, they do not pass through the filter and are not considered building objects. However, the post-processing and finishing procedures are capable of improving the detected building objects.

Finally, the overall quality performance value of 93% shown in this table signifies the capability of the developed algorithm and its usefulness in detecting buildings. This quality is also depicted visually in the finished detection results in Figure 7(d).

The high success rate for detecting buildings based on the SegTF technique is attributed to three reasons that, at the same time, validate the premise of this research: 1) the incorporation of the elevation information with VHR imagery, 2) achieving successful and accurate image-elevation data co-registration, and 3) reducing the sensitivity to misregistration by segmenting the surface and averaging the elevation within the boundaries of each segment.

5. Conclusions

This paper introduced the Segment-based Terrain Filtering (SegTF) technique that aims to filter out terrain features from a DSM. Based on the un-normalized DSM elevations, this technique calculates slope information among image segments to distinguish the off-terrain from the terrain ones. Considering the technique is founded on a very localized and relative operation, its novelty lies in detecting off-terrain segments without the need for extracting the DTM elevations.

The validity of the SegTF technique is demonstrated through elevation-based building detection in VHR remote sensing imagery. Using radiometric-based image segments and co-registered DSM elevations, the technique was able to identify building roof objects without generating nDSM elevations, as is typically required in most elevation-based building detection methods. The result of building detection was evaluated and found to be 100% correct with an overall detection quality of 93%. These values indicate a highly reliable and promising technique for detecting buildings in remotely sensed VHR images.

There were a few incidents in which the SegTF algorithm was challenged. Two of these incidents are associated with segments corresponding to trees (without leaves) and building shadows. In both cases, the generalized elevation for such a segment is an aggregate of elevations for different objects within the segment. Another case arises when the thresholding operation misses building roof segments that are completely surrounded by other roof segments. Most of these limitations can easily be corrected in the finishing and post-processing step. Nevertheless, a more robust treatment of these cases should be considered. Hence, future research will address these cases in addition to other challenges associated with more complex and more extensive urban environments.

Acknowledgements

The authors would like to acknowledge Trimble Navigation for providing the tutorial test data used in this research. The authors also appreciate the comments of the anonymous reviewers, which helped to improve this paper.

Funding

This study was funded in part by the Libyan Ministry of Higher Education and Research (LMHEAR) and in part by the Canada Research Chairs (CRC) program.

Cite this paper

Alaeldin Suliman,Yun Zhang, (2016) Segment-Based Terrain Filtering Technique for Elevation-Based Building Detection in VHR Remote Sensing Images. Advances in Remote Sensing,05,192-202. doi: 10.4236/ars.2016.53016

References

- 1. Salehi, B., Zhang, Y., Zhong, M. and Dey, V. (2012) A Review of the Effectiveness of Spatial Information Used in Urban Land Cover Classification of VHR Imagery. International Journal of Geoinformatics, 8, 35-51.

- 2. Doxani, G., Karantzalos, K. and Tsakiri-Strati, M. (2015) Object-Based Building Change Detection from a Single Multispectral Image and Pre-Existing Geospatial Information. Photogrammetric Engineering and Remote Sensing, 81, 481-489. http://dx.doi.org/10.14358/PERS.81.6.481

- 3. Khosravi, I., Momeni, M. and Rahnemoonfar, M. (2014) Performance Evaluation of Object-Based and Pixel-Based Building Detection Algorithms from Very High Spatial Resolution Imagery. Photogrammetric Engineering and Remote Sensing, 80, 519-528. http://dx.doi.org/10.14358/PERS.80.6.519-528

- 4. Suliman, A. and Zhang, Y. (2015) Development of Line-of-Sight Digital Surface Model for Co-Registering Off-Nadir VHR Satellite Imagery with Elevation Data. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 8, 1913-1923. http://dx.doi.org/10.1109/JSTARS.2015.2407365

- 5. Mishra, R. and Zhang, Y. (2012) A Review of Optical Imagery and Airborne LiDAR Data Registration Methods. The Open Remote Sensing Journal, 5, 54-63. http://dx.doi.org/10.2174/1875413901205010054

- 6. Weidner, U. and Förstner, W. (1995) Towards Automatic Building Extraction from High-Resolution Digital Elevation Models. ISPRS Journal of Photogrammetry and Remote Sensing, 50, 38-49. http://dx.doi.org/10.1016/0924-2716(95)98236-S

- 7. Sefercik, U., Karakis, S., Bayik, C., Alkan, M. and Yastikli, N. (2014) Contribution of Normalized DSM to Automatic Building Extraction from HR Mono Optical Satellite Imagery. European Journal of Remote Sensing, 47, 575-591. http://dx.doi.org/10.5721/EuJRS20144732

- 8. Baltsavias, E.P. (1999) A Comparison between Photogrammetry and Laser Scanning. ISPRS Journal of Photogrammetry and Remote Sensing, 54, 83-94. http://dx.doi.org/10.1016/S0924-2716(99)00014-3

- 9. Sithole, G. and Vosselman, G. (2004) Experimental Comparison of Filter Algorithms for Bare-Earth Extraction from Airborne Laser Scanning Point Clouds. ISPRS Journal of Photogrammetry and Remote Sensing, 59, 85-101. http://dx.doi.org/10.1016/j.isprsjprs.2004.05.004

- 10. Wiechert, A. and Gruber, M. (2010) DSM and True Ortho Generation with the UltraCam-L: A Case Study. Proceedings of the American Society for Photogrammetry and Remote Sensing (ASPRS) Annual Conference, San Diego, CA.

- 11. Krauß, T. and Reinartz, P. (2010) Urban Object Detection Using a Fusion Approach of Dense Urban Digital Surface Models and VHR Optical Satellite Stereo Data. The International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, 38, 1-6. http://www.isprs.org/proceedings/XXXVIII/1-W17/

- 12. Tian, J., Krauss, T. and Reinartz, P. (2014) DTM Generation in Forest Regions from Satellite Stereo Imagery. The International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, 40, 401-405. http://dx.doi.org/10.5194/isprsarchives-XL-1-401-2014

- 13. Zhang, X., Feng, X. and Xiao, P. (2015) Multi-Scale Segmentation of High-Spatial Resolution Remote Sensing Images Using Adaptively Increased Scale Parameter. Photogrammetric Engineering and Remote Sensing, 81, 461-470. http://dx.doi.org/10.14358/PERS.81.6.461

- 14. Argyridis, A. and Argialas, D.P. (2015) A Fuzzy Spatial Reasoner for Multi-Scale Geobia Ontologies. Photogrammetric Engineering and Remote Sensing, 81, 491-498. http://dx.doi.org/10.14358/PERS.81.6.491

- 15. Suliman, A., Zhang, Y. and Al-Tahir, R. (2016) Slope-Based Terrain Filtering for Building Detection in Remotely Sensed VHR Images. Proceedings of the Imaging & Geospatial Technology Forum (IGTF), Fort Worth, Texas, 11-15 April 2016, Unpublished. http://conferences.asprs.org/Fort-Worth-2016/Conference/Proceedings

- 16. Baatz, M. and Schäpe, A. (2000) Multiresolution Segmentation: An Optimization Approach for High Quality Multi-Scale Image Segmentation. In: Strobl, J., Blaschke, T. and Griesebner, G., Eds., Angewandte Geographische Informations-Verarbeitung, XII, Wichmann Verlag, Karlsruhe, Germany, 12-23.

- 17. Dey, V. (2013) Image Segmentation Techniques for Urban Land Cover Segmentation of VHR Imagery: Recent Developments and Future Prospects. International Journal of Geoinformatics, 9, 15-35.

- 18. Tong, H., Maxwell, T., Zhang, Y. and Dey, V. (2012) A Supervised and Fuzzy-Based Approach to Determine Optimal Multi-Resolution Image Segmentation Parameters. Photogrammetric Engineering and Remote Sensing, 78, 1029-1044. http://dx.doi.org/10.14358/PERS.78.10.1029

- 19. Garcia-Castellanos, D. and Lombardo, U. (2007) Poles of Inaccessibility: A Calculation Algorithm for the Remotest Places on Earth. Scottish Geographical Journal, 123, 227-233. http://dx.doi.org/10.1080/14702540801897809

- 20. Rutzinger, M., Rottensteiner, F. and Pfeifer, N. (2009) A Comparison of Evaluation Techniques for Building Extraction from Airborne Laser Scanning. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2, 11-20. http://dx.doi.org/10.1109/JSTARS.2009.2012488

NOTES

*Corresponding author.