Circuits and Systems

Vol.07 No.08(2016), Article ID:67376,13 pages

10.4236/cs.2016.78139

Multimodal Medical Image Fusion in Non-Subsampled Contourlet Transform Domain

Periyavattam Shanmugam Gomathi¹, Bhuvanesh Kalaavathi²

¹Department of Electronics and Communication Engineering, V.S.B. Engineering College, Affiliated to Anna University, Karur, India

²Department of Computer Science and Engineering, K.S.R. Institute for Engineering and Technology, Affiliated to Anna University, Tiruchengode, India

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 24 March 2016; accepted 20 April 2016; published 15 June 2016

ABSTRACT

Multimodal medical image fusion is a powerful tool for diagnosing diseases in the medical field. The main objective is to capture the relevant information from the input images in a single output image, which plays an important role in clinical applications. In this paper, a technique for the fusion of multimodal medical images is proposed based on the Non-Subsampled Contourlet Transform (NSCT). The proposed technique uses the NSCT to decompose the images into lowpass and highpass subbands. The lowpass and highpass subbands are fused by using mean based and variance based fusion rules, respectively. The fused image is reconstructed by applying the Inverse Non-Subsampled Contourlet Transform (INSCT) to the fused subbands. The experimental results on six pairs of medical images are compared in terms of entropy, mean, standard deviation and Q^{AB/F} as performance parameters. The results reveal that the proposed image fusion technique outperforms the existing image fusion techniques in terms of both quantitative and qualitative outcomes. Compared with conventional methods, the proposed method improves entropy by 0% - 40%, mean by 3% - 42%, standard deviation by 1% - 42% and Q^{AB/F} by 0.4% - 48% for the six pairs of medical images.

Keywords:

Image Fusion, Non-Subsampled Contourlet Transform (NSCT), Medical Imaging, Fusion Rules

1. Introduction

Image fusion is the process of combining two or more images into a single fused image that provides more reliable and accurate information. It is useful for human visual perception, machine perception, and further analysis and image processing tasks [1] [2]. Image fusion plays an important role in medical imaging, machine vision, remote sensing, microscopic imaging and military applications. Over the last few decades, medical imaging has played an important role in a large number of healthcare applications, including diagnosis and treatment [3] [4]. The main objective of multimodal medical image fusion is to capture the most relevant information from the input images in a single output image that is useful in clinical applications. The different modalities of medical images contain complementary information about human organs and tissues, which helps physicians to diagnose diseases. Individual modalities such as Computed Tomography (CT), Magnetic Resonance Angiography (MRA), Magnetic Resonance Imaging (MRI), Positron Emission Tomography (PET), Ultrasonography (USG), Single-Photon Emission Computed Tomography (SPECT) and X-ray each provide only limited information; no single modality provides comprehensive and accurate information on its own. For example, MRI, CT, USG and MRA images are structural medical images, which provide high-resolution images with anatomical information, whereas PET, SPECT and functional MRI (fMRI) images are functional medical images, which provide low-spatial-resolution images with functional information. Hence, anatomical and functional medical images can be integrated to obtain more useful information about the same object. This helps in diagnosing diseases accurately and reduces storage cost, because a single fused image is stored instead of multiple input images.

So far, many image fusion techniques have been suggested in the literature. Some of these techniques are related to multimodality medical image fusion [1] - [10]. Image fusion techniques fall into three categories: pixel-level, feature-level and decision-level fusion [11]. Pixel-level image fusion is usually employed for medical image fusion because of its easy implementation and computational efficiency [12]. Hence, the proposed work focuses on pixel-level fusion.

The simplest image fusion technique creates the fused image by taking the average of the source images, pixel by pixel. However, this method reduces the contrast of the fused image [13]. The techniques based on Principal Component Analysis (PCA), Intensity-Hue-Saturation (IHS), Independent Component Analysis (ICA) and the Brovey transform (BT) produce spectral degradation [14]. The pyramidal methods such as the Gradient pyramid (GP) [15], Laplacian pyramid (LP) [16], Ratio-of-low-pass pyramid (ROLP) [17], Contrast pyramid (CP) [18] and Morphological pyramid [19] suffer from blocking effects. Therefore, these methods are not highly appropriate for medical image fusion [20]. The commonly used Discrete Wavelet Transform (DWT) based techniques are good at representing isolated discontinuities, but they cannot preserve the salient features of the source images efficiently, and they introduce artifacts and inconsistencies in the fused images [23]. Several Multiscale Geometric Analysis (MGA) tools, such as the Curvelet, Contourlet and NSCT, have been developed which do not suffer from the problems of the wavelet. Many image fusion techniques based on these MGA tools have been suggested by researchers [21] - [29].

In medical image fusion, preservation of edge information is needed, but DWT based fusion may produce peculiarities along the edges, and it captures only limited directional information along the vertical, horizontal and diagonal directions [30]. To overcome this problem of the discrete wavelet transform, the Contourlet transform was proposed, which gives an asymptotically optimal representation of contours and has been used for image fusion [31]. However, the upsampling and downsampling operations of the Contourlet transform make it shift-variant and introduce pseudo-Gibbs phenomena in the fused image [32]. Later, the Non-subsampled Contourlet transform (NSCT) was proposed by Cunha et al. [33], which inherits the advantages of the Contourlet transform while effectively suppressing pseudo-Gibbs phenomena. The unique structure of NSCT, namely its two shift-invariant components, the Non-Subsampled Pyramid (NSP) and the Non-Subsampled Directional Filter Bank (NSDFB), imparts shift invariance and redundancy to NSCT, thereby making it an attractive multiscale transform for image fusion.

In this paper, a multimodal medical image fusion technique is proposed based on NSCT. The NSCT is applied to the source images to decompose them into low and high frequency components. The low frequency components are fused by the maximum local mean scheme and the high frequency components are fused by the maximum local variance scheme. The use of variance enhances the fusion scheme by preserving the edges in the images. This combination preserves more details of the source images and improves the quality of the fused images. The fused image is obtained by applying the Inverse Non-Subsampled Contourlet Transform (INSCT) to the coefficients obtained from all the frequency bands. The efficiency of the suggested technique is assessed by fusion experiments on different multimodality medical image pairs. Both qualitative and quantitative image analyses reveal that the proposed framework provides better fusion results than the conventional image fusion techniques.

The rest of the paper is organized as follows. The NSCT is described in Section 2, followed by the proposed medical image fusion method in Section 3. Results and discussion are given in Section 4 and the conclusion is given in Section 5.

2. Non-Subsampled Contourlet Transform (NSCT)

The NSCT was introduced by Cunha et al. [33]. It is based on the theory of the Contourlet Transform (CT), which achieves better results in the geometric representation of images. The CT is shift-variant because it contains both downsamplers and upsamplers in the Laplacian Pyramid (LP) and Directional Filter Bank (DFB) stages. NSCT is a shift-invariant, multi-scale and multi-directional transform which has an efficient implementation. It is obtained by using the Non-subsampled Pyramid Filter Bank (NSP or NSPFB) and the Non-subsampled Directional Filter Bank (NSDFB). Figure 1 shows the decomposition framework of the NSCT.

2.1. Non-Subsampled Pyramid Filter Bank

The multiscale property is ensured by the non-subsampled pyramid filter bank, which uses a two-channel non-subsampled filter bank. At each decomposition level, one low-frequency image and one high-frequency image are produced. At each subsequent NSP decomposition level, the low-frequency component is decomposed again to capture the singularities in the image. As a result, an L-level NSP yields L + 1 sub-images, consisting of one low-frequency image and L high-frequency images, where L denotes the number of decomposition levels. The sub-images have the same size as the source image.
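The L + 1 same-size sub-images can be illustrated with a minimal sketch. It is not the filter bank used in the paper: it assumes a B3-spline "à trous" lowpass kernel and periodic borders as stand-ins, and the function names are hypothetical.

```python
import numpy as np


def atrous_smooth(image, level):
    """Separable lowpass filter whose taps are spread 2**level pixels apart.

    The B3-spline kernel and the periodic borders (np.roll) are
    illustrative assumptions, not the paper's maximally flat filters.
    """
    kernel = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0
    step = 2 ** level
    out = np.asarray(image, dtype=float)
    for axis in (0, 1):
        out = sum(w * np.roll(out, (k - 2) * step, axis=axis)
                  for k, w in enumerate(kernel))
    return out


def nonsubsampled_pyramid(image, levels):
    """Return [low, high_1, ..., high_L]; every band keeps the input size."""
    approx = np.asarray(image, dtype=float)
    highs = []
    for level in range(levels):
        smooth = atrous_smooth(approx, level)
        highs.append(approx - smooth)   # detail lost by this smoothing step
        approx = smooth                 # decomposed again at the next level
    return [approx] + highs
```

Because no band is downsampled, summing all L + 1 bands of this sketch returns the input image, which is the redundancy that the non-subsampled design trades for shift invariance.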

2.2. Non-Subsampled Directional Filter Bank

The Non-subsampled Directional Filter Bank (NSDFB) is a two-channel non-subsampled filter bank constructed by combining directional fan filter banks. The NSDFB gives the NSCT its multi-direction property and provides more directional detail information. It is obtained by eliminating the downsamplers and upsamplers in each two-channel filter bank of the DFB tree structure and upsampling the filters accordingly [33] [34]. The NSDFB performs a k-level directional decomposition on each high-frequency subband from the NSPFB and produces $2^k$ directional subbands with the same size as the source images. Thus, the NSDFB provides the NSCT with multidirectional performance and gives more precise directional detail information to get more accurate results [29]. Therefore, NSCT provides better frequency selectivity and the important property of shift invariance on account of its non-subsampled operation. The sub-images decomposed by NSCT all have the same size, and image fusion based on NSCT can mitigate the effects of misregistration in the fused images [25]. Therefore, NSCT is more suitable for medical image fusion.

Figure 1. Decomposition of NSCT framework.

Figure 2. General procedure for NSCT based image fusion.

The general procedure for NSCT based image fusion is shown in Figure 2. The image fusion steps based on NSCT are summarized below.

Step 1: NSCT is applied on source images to obtain lowpass subband coefficients and highpass directional subband coefficients at each scale and each direction. NSPFB and NSDFB are used to complete multiscale decomposition and multi-direction decomposition.

Step 2: Fusion rules are applied to the transformed coefficients to select the NSCT coefficients of the fused image.

Step 3: The fused image is constructed by performing an inverse NSCT on the selected coefficients obtained from Step 2.

3. Proposed Medical Image Fusion Method

The architecture of the proposed image fusion method is shown in Figure 3. The multimodal medical images are preprocessed before they are used for fusion. The preprocessing includes image registration and image resizing.

The images obtained by different modalities might have different orientations. Hence, they need to be registered before they are fused. Image registration is a fundamental task in image processing. It is the process of spatially aligning two or more images of a scene. Given a point in one image, registration determines the position of the same point in another image. The source images are assumed to be spatially registered, which is a common assumption in image fusion [35]. Various techniques [36] can be applied to medical image registration. A thorough survey of image registration techniques can be found in [37].

The images to be fused may be of different sizes. For image fusion, the images must be of the same size. If the images vary in size, image scaling is required. Image scaling is the process of resizing an image. Here, it is done by interpolating the smaller image through duplication of rows and columns.
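Resizing by row and column duplication amounts to nearest-neighbour index mapping. The following sketch shows one way to do it; the function name and the integer index mapping are assumptions for illustration, not the authors' MATLAB code.

```python
import numpy as np


def resize_by_duplication(image, target_shape):
    """Enlarge an image by repeating rows and columns (nearest neighbour)."""
    src_rows, src_cols = image.shape
    dst_rows, dst_cols = target_shape
    # Map every target row/column back to the source row/column it copies.
    rows = (np.arange(dst_rows) * src_rows) // dst_rows
    cols = (np.arange(dst_cols) * src_cols) // dst_cols
    return image[np.ix_(rows, cols)]
```

For example, resizing a 2-by-2 image to 4-by-4 repeats every row and every column twice.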

Filtering is required to remove noise if noise is present in the input images. Median filtering is one kind of smoothing technique. All smoothing techniques are effective at removing noise, but they adversely affect edges. When reducing the noise in an image, it is important to preserve the edges, which are important to the visual appearance of images and are needed for medical image fusion. For small to moderate levels of noise, the median filter is demonstrably better than Gaussian blur at removing noise whilst preserving edges for a given, fixed window size [38]. For speckle noise and additive noise, median filtering is particularly effective [39]. Like lowpass filtering, median filtering smooths the image and is thus useful in reducing noise. Unlike lowpass filtering, median filtering can preserve discontinuities and can smooth a few pixels whose values differ significantly from their surroundings without affecting the other pixels. The median filter is a sliding-window spatial filter that replaces the center value in the window with the median of all the pixel values in the window. It is a nonlinear process that is useful in reducing impulsive or salt-and-pepper noise while preserving the edges in an image.
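The sliding-window median described above can be sketched as follows. The window size of 3 and the edge-replicating border are assumptions made for the example; the paper does not state which settings were used.

```python
import numpy as np


def median_filter(image, size=3):
    """Replace each pixel by the median of its size-by-size neighbourhood."""
    pad = size // 2
    padded = np.pad(image, pad, mode="edge")  # border handling is an assumption
    rows, cols = image.shape
    # One shifted copy of the image per window position, stacked on axis 0.
    shifted = [padded[i:i + rows, j:j + cols]
               for i in range(size) for j in range(size)]
    return np.median(np.stack(shifted), axis=0)
```

On a flat region containing a single impulse the filter removes the impulse, while an ideal vertical step edge passes through unchanged, which illustrates the edge-preserving behaviour discussed in the text.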

Figure 3. Proposed image fusion method.

In the proposed architecture shown in Figure 3, A, B and F represent the two source images and the resultant fused image, respectively. The low-frequency subbands (LFS) of the images A and B are taken at the scale K, and the high-frequency subbands (HFS) of the images A and B are indexed by scale k and direction h.

3.1. Fusion of Low Frequency Subbands

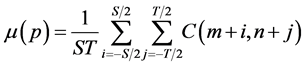

The coefficients in the low-frequency subbands represent the approximation component of the input images. Most of the information of the input images is available in the low-frequency band. Hence, for fusing the low frequency coefficients, we propose a scheme that computes the mean (μ) in a neighborhood to select the low frequency coefficients. The mean (μ) gives a measure of the average gray level in the region over which it is computed. It is calculated on the low frequency components of the input images within a 3-by-3 window, and the coefficients with the higher local mean are selected as the fused low frequency coefficients. The formula for the mean (μ) is given in Equation (1).

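$$\mu(p) = \frac{1}{S \times T} \sum_{s=-(S-1)/2}^{(S-1)/2} \; \sum_{t=-(T-1)/2}^{(T-1)/2} C(m+s,\, n+t) \tag{1}$$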

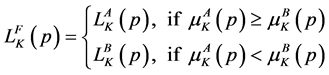

where S × T is the window size, μ(p) denotes the mean value of the coefficients in the S × T window centered at (m, n), and C represents the multi-scale decomposition coefficient in the low frequency subband. For the sake of notational simplicity, the location (m, n) of each coefficient is denoted by p. After calculating the local mean for all coefficients in the low-frequency band, the coefficients with the higher mean are chosen for the fused image. The fusion scheme used for the low-frequency bands is as follows:

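$$C_F(p) = \begin{cases} C_A(p), & \mu_A(p) \ge \mu_B(p) \\ C_B(p), & \text{otherwise} \end{cases} \tag{2}$$

Here the subscripts A, B and F denote the two source images and the fused image, respectively.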
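The maximum-local-mean selection for the low-frequency subbands can be sketched as below. The symmetric border padding, the tie-break in favour of image A and the function names are assumptions for the example.

```python
import numpy as np


def local_mean(coeffs, size=3):
    """Mean of the coefficients in a size-by-size window around each location."""
    pad = size // 2
    padded = np.pad(np.asarray(coeffs, dtype=float), pad, mode="symmetric")
    rows, cols = coeffs.shape
    total = np.zeros((rows, cols))
    for i in range(size):
        for j in range(size):
            total += padded[i:i + rows, j:j + cols]
    return total / (size * size)


def fuse_lowpass(low_a, low_b, size=3):
    """Keep, at each location, the coefficient whose local mean is larger."""
    mean_a = local_mean(low_a, size)
    mean_b = local_mean(low_b, size)
    return np.where(mean_a >= mean_b, low_a, low_b)  # ties go to A (assumption)
```

The selection is made per coefficient, so the fused subband can mix regions taken from A with regions taken from B.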

3.2. Fusion of High Frequency Subbands

The objective of image fusion is that the fused image should not discard any useful information present in the input images and should preserve detailed information such as the edges, lines and boundaries of the image. These details are usually present in the high frequency subbands. Hence, it is important to find an appropriate method to fuse the detail components of the source images. The conventional methods do not consider the neighbouring coefficients. However, a pixel in an image is related to its neighbouring pixels, which implies that a coefficient in the high frequency subbands is also related to its neighbouring coefficients.

Variance is used to characterize the details of the local region (3 × 3) in the high-frequency sub-images. The processing of high-frequency coefficients has a direct effect on the clarity and distortion of the image, because these coefficients are important for the preservation of edges and details. The variance of a sub-image neighborhood characterizes the degree of change within that neighborhood. In addition, if there is strong correlation among the pixels in a local area, then the characteristic information embodied in any pixel is shared by the surrounding pixels. The fusion rule of selecting the larger variance is efficient when the variances corresponding to the image pixels vary greatly in local areas [40] [41].

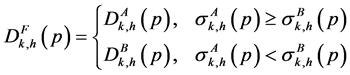

Hence, for fusing the high frequency coefficients, a variance based fusion rule is used, which computes the variance in a neighbourhood to select the high frequency coefficients. This method also produces large coefficients on the edges. The formula for the variance is given below.

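$$\sigma(p) = \frac{1}{S \times T} \sum_{s=-(S-1)/2}^{(S-1)/2} \; \sum_{t=-(T-1)/2}^{(T-1)/2} \bigl( C(m+s,\, n+t) - \mu(p) \bigr)^{2} \tag{3}$$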

where S × T is the window size, μ(p) and σ(p) denote the mean and variance of the coefficients in the S × T window centered at (m, n), and C represents the multi-scale decomposition coefficient. Then, for selecting the high frequency coefficients, the following fusion rule is applied.

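$$C_F(p) = \begin{cases} C_A(p), & \sigma_A(p) \ge \sigma_B(p) \\ C_B(p), & \text{otherwise} \end{cases} \tag{4}$$

Here the subscripts A, B and F denote the two source images and the fused image, and the rule is applied separately to each high-frequency subband at scale k and direction h.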
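The maximum-local-variance selection for one high-frequency subband can be sketched as below. The variance is computed as the windowed mean of squares minus the squared windowed mean, which is algebraically the same as averaging squared deviations from the local mean. The border padding, the tie-break and the function names are assumptions.

```python
import numpy as np


def window_mean(x, size=3):
    """Mean over a size-by-size window with symmetric border padding."""
    pad = size // 2
    padded = np.pad(np.asarray(x, dtype=float), pad, mode="symmetric")
    rows, cols = x.shape
    total = np.zeros((rows, cols))
    for i in range(size):
        for j in range(size):
            total += padded[i:i + rows, j:j + cols]
    return total / (size * size)


def local_variance(coeffs, size=3):
    """Variance of the coefficients in the window around each location."""
    coeffs = np.asarray(coeffs, dtype=float)
    mean = window_mean(coeffs, size)
    mean_of_squares = window_mean(coeffs * coeffs, size)
    # Clip tiny negative values caused by floating-point rounding.
    return np.maximum(mean_of_squares - mean * mean, 0.0)


def fuse_highpass(high_a, high_b, size=3):
    """Keep, at each location, the coefficient with the larger local variance."""
    var_a = local_variance(high_a, size)
    var_b = local_variance(high_b, size)
    return np.where(var_a >= var_b, high_a, high_b)  # ties go to A (assumption)
```

A flat neighbourhood has zero variance, so wherever one subband contains an edge and the other is locally flat, the edge coefficients are the ones that survive.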

The procedures of our method can be summarized as follows.

1) The input images to be fused must be registered to assure that the corresponding pixels are aligned.

2) Decompose the images using NSCT to get low and high frequency subbands.

3) The coefficients of low frequency subband of NSCT are selected by Equation (1) and Equation (2).

4) The coefficients of high frequency subbands of NSCT are selected by Equation (3) and Equation (4).

5) Perform the Inverse NSCT (INSCT) with the combined coefficients obtained from steps 3 and 4.
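Steps 2 to 5 can be put together in a short end-to-end sketch. It deliberately swaps the NSCT for a simpler shift-invariant stand-in (an undecimated "à trous" pyramid with a B3-spline kernel and no directional stage), because the aim is only to show how the two fusion rules and the inverse transform fit together. All names, the stand-in transform, the border handling and the tie-breaks are assumptions, and the inputs are assumed to be registered and equally sized (step 1).

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0  # stand-in lowpass filter


def window_mean(x, size=3):
    """Mean over a size-by-size window with symmetric border padding."""
    pad = size // 2
    padded = np.pad(x, pad, mode="symmetric")
    rows, cols = x.shape
    total = np.zeros((rows, cols))
    for i in range(size):
        for j in range(size):
            total += padded[i:i + rows, j:j + cols]
    return total / (size * size)


def window_variance(x, size=3):
    """Local variance as mean of squares minus squared mean."""
    mean = window_mean(x, size)
    return np.maximum(window_mean(x * x, size) - mean * mean, 0.0)


def decompose(image, levels=3):
    """Stand-in for the NSCT: one lowpass band plus `levels` detail bands."""
    approx = np.asarray(image, dtype=float)
    highs = []
    for level in range(levels):
        smooth = approx
        for axis in (0, 1):
            smooth = sum(w * np.roll(smooth, (k - 2) * 2 ** level, axis=axis)
                         for k, w in enumerate(KERNEL))
        highs.append(approx - smooth)
        approx = smooth
    return approx, highs


def fuse(image_a, image_b, levels=3, size=3):
    """Decompose both images, fuse the subbands, and invert the transform."""
    low_a, highs_a = decompose(image_a, levels)
    low_b, highs_b = decompose(image_b, levels)
    # Low-frequency rule: larger local mean wins.
    pick_a = window_mean(low_a, size) >= window_mean(low_b, size)
    fused = np.where(pick_a, low_a, low_b)
    # High-frequency rule: larger local variance wins, band by band.
    for high_a, high_b in zip(highs_a, highs_b):
        pick_a = window_variance(high_a, size) >= window_variance(high_b, size)
        fused = fused + np.where(pick_a, high_a, high_b)  # inverse = sum of bands
    return fused
```

With this stand-in the inverse transform is a plain sum of the fused bands; an actual NSCT would add a directional filter bank stage to each detail band and use its own synthesis filters for reconstruction.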

4. Results and Discussion

To evaluate the fused images produced by our multimodal medical image fusion technique, a set of evaluation indices is established. The indices used in our framework measure the amount of information present in the fused image, the contrast of the fused image, the average intensity of the fused image and the edge information present in the fused image. The indices are Entropy [2], Mean, Standard deviation [2] and the edge based similarity measure (Q^{AB/F}) [21].
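Three of the four indices can be computed directly from the fused image. The sketch below assumes an 8-bit grayscale image and the usual definitions (Shannon entropy of the gray-level histogram in bits, arithmetic mean, population standard deviation); the paper refers to [2] for its exact definitions. Q^{AB/F} also needs the gradient fields of both source images and is left out of this sketch.

```python
import numpy as np


def fusion_metrics(fused, gray_levels=256):
    """Entropy (bits), mean and standard deviation of an 8-bit image."""
    pixels = np.asarray(fused, dtype=np.uint8).ravel()
    histogram = np.bincount(pixels, minlength=gray_levels)
    probabilities = histogram / histogram.sum()
    probabilities = probabilities[probabilities > 0]  # skip empty bins
    entropy = float(-np.sum(probabilities * np.log2(probabilities)))
    return {
        "entropy": entropy,           # information content
        "mean": float(pixels.mean()),  # average intensity
        "std": float(pixels.std()),    # contrast
    }
```

As a sanity check, an image that is half black and half white has an entropy of exactly 1 bit, since only two equally likely gray levels occur.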

The proposed multimodal medical image fusion method has been implemented in MATLAB R2010a and tested on different multimodality medical images. We have taken CT, MRI and PET images as experimental images. The performance of our method is compared with the fusion results obtained from the pixel averaging method [42], the conventional DWT method with the maximum selection rule [43], and the NSCT based methods of [29] and [21]. As in most of the literature, we assume the input images to be in perfect registration. In the proposed method, for implementing NSCT, maximally flat filters and diamond maxflat filters are used as the pyramidal and directional filter banks, respectively. The decomposition level of NSCT is [1 2 3 4], which by the $2^k$ rule of Section 2.2 corresponds to 2, 4, 8 and 16 directional subbands at the four successive scales. In the proposed framework, a window size of 3 × 3 is used, which has been reported to be more effective for calculating the mean and variance [44] [45].
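The number of subbands implied by the decomposition level [1 2 3 4] follows from the 2^k rule of Section 2.2 and can be worked out with a few lines. The helper name is hypothetical.

```python
def nsct_subband_counts(direction_levels):
    """Directional subbands per scale, and the total including the lowpass band."""
    directional = [2 ** k for k in direction_levels]
    total = 1 + sum(directional)  # one lowpass subband plus all directional ones
    return directional, total


counts, total = nsct_subband_counts([1, 2, 3, 4])
print(counts, total)  # [2, 4, 8, 16] 31
```

Since every subband has the same size as the source image, this setting stores 31 coefficient arrays per input image, which is the redundancy cost of the shift-invariant design.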

In order to evaluate the performance of the proposed image fusion method, six pairs of medical source images are considered, as shown in Figure 4. The image pairs in Figure 4 (a1-a2, b1-b2, c1-c2, d1-d2, e1-e2 and f1-f2) consist of CT, MRI, MRA, T1-weighted MR (MR-T1), MR-GAD (gadolinium-enhanced MR) and PET images. The corresponding pixels of the two input images in each pair have been co-aligned perfectly. The proposed medical fusion method is applied to these image sets. For comparison, the same experimental images are used for all the existing methods.

The experimental results of the five different fusion methods are shown in Figure 5. Compared with the original source images, the fused images obtained from all five methods contain information about both bones and tissues, which cannot be seen together in the separate CT, MRI or PET images. The images in Figure 5(a7-f7) show that the result of the proposed method is better than those of the other methods. The pixel averaging method gives the lowest values for all the indices because the information about bones and tissues is blurred. The results of the NSCT based methods are good compared with those of the pixel averaging and DWT based methods.

The fused images obtained by the proposed method are more informative and have higher contrast than the input medical images, which is helpful in visualization and interpretation. For example, the fused image of the pair (d1, d2) contains both the soft tissue information from Figure 4(d1) and the bone information from Figure 4(d2). Similarly, the other fused images contain information from both of the corresponding input images. The fused images obtained by the NSCT based methods are visually similar to the fused images obtained by the proposed method. However, in the quantitative analysis, we found that the fused images obtained by the proposed method have higher scores than those of the NSCT based methods. The pixel averaging and DWT methods suffer from the problem of contrast reduction. It is clear from the images of Figure 5(a3-f3, a4-f4) that the pixel averaging and DWT methods have lost a large amount of image detail. The fused images in Figure 5(a7-f7) show that the proposed method results in high clarity, high information content and low contrast reduction. Hence, it is clear from the subjective analysis that the proposed method is more effective in fusing multimodality medical images than, and superior to, the other state-of-the-art medical image fusion techniques. An expert radiologist (Dr. P. Parimala, Ananya Scans, Karur-1, Tamil Nadu, India) was asked to evaluate subjectively the fused images obtained by the proposed method and the compared methods. According to the clinician's opinion, the fused results of the compared methods suffer more from contrast reduction than those of the proposed method (Figure 5(a7-f7)), as can be seen in Figure 5. The expert also found that the fused images obtained by the proposed method are clearer, higher in contrast and more informative than the source medical images.

Table 1 and Figure 6 show the Entropy, Mean, Standard deviation and Q^{AB/F} values of the fused images obtained by both the conventional and the proposed image fusion techniques. The highest value of each quantitative measure is shown in bold in Table 1. The higher values of Q^{AB/F} indicate that the fused images obtained by the proposed method have more edge strength than those of the other methods.

Figure 4. Source images: (a1) = T1-weighted MR, (a2) = MRA, (b1) = MR-GAD (gadolinium-enhanced MR), (b2) = CT, (c1) = MRI, (c2) = PET, (d1) = MRI, (d2) = CT, (e1) = CT, (e2) = PET, (f1) = CT, (f2) = MRI.

Figure 5. Fused Images: (a3), (b3), (c3), (d3), (e3), (f3)―Pixel Averaging; (a4), (b4), (c4), (d4), (e4), (f4)―DWT; (a5), (b5), (c5), (d5), (e5), (f5)―NSCT [29] ; (a6), (b6), (c6), (d6), (e6), (f6)―NSCT [21] ; (a7), (b7), (c7), (d7), (e7), (f7)―Proposed method.

Table 1. Evaluation indices for fused medical images.

Figure 6. Objective performance (entropy, mean, Q^{AB/F}, standard deviation) comparisons.

Similarly, the higher values of Entropy for the fused images show that the fused images obtained by the proposed method have more information content than the other fused images.

From Table 1, the standard deviation values of the fused images obtained by the proposed method are higher, which indicates that they have higher contrast than the fused images obtained by the other image fusion techniques. Hence, it is clear from Table 1 that the fused images obtained by the proposed method are more informative and have higher contrast, which is helpful in visualization and interpretation. Most of the existing state-of-the-art image fusion techniques suffer from contrast reduction, blocking effects, loss of image details, etc.

In our proposed method, the multi-scale, multi-directional and shift invariance properties of NSCT are combined with different fusion rules in such a way that the fine details present in the input medical images are captured in the fused image without reducing the contrast of the image. Hence, the results and comparisons show that the fused images obtained by the proposed method are more informative and have higher contrast, which is helpful for physicians in their diagnosis and treatment.

5. Conclusion

In this paper, a multimodal medical image fusion method is proposed based on the Non-Subsampled Contourlet Transform (NSCT), which consists of three steps. In the first step, the medical images to be fused are decomposed into low and high frequency components by the NSCT. In the second step, two different fusion rules are used for fusing the low frequency and high frequency bands, which preserve more information in the fused image along with improved quality. The low frequency bands are fused by using the local mean fusion rule, whereas the high frequency bands are fused by using the local variance fusion rule. In the last step, the fused image is reconstructed by the Inverse Non-Subsampled Contourlet Transform (INSCT) with the composite coefficients. Compared with conventional methods, the proposed method improves entropy by 0% - 40%, mean by 3% - 42%, standard deviation by 1% - 42% and Q^{AB/F} by 0.4% - 48% for six pairs of medical images. The visual comparisons and the evaluation indices reveal that the proposed method preserves the details of the fused images better than the conventional fusion methods.

Acknowledgements

The authors thank Dr. P. Parimala M.B.B.S, DMRD, (Ananya Scans, Karur-1, Tamil Nadu, India) for the subjective evaluation of the fused images.

Cite this paper

Periyavattam Shanmugam Gomathi, Bhuvanesh Kalaavathi (2016) Multimodal Medical Image Fusion in Non-Subsampled Contourlet Transform Domain. Circuits and Systems, 07, 1598-1610. doi: 10.4236/cs.2016.78139

References

1. Wang, A., Sun, H. and Guan, Y. (2006) The Application of Wavelet Transform to Multi-Modality Medical Image Fusion. IEEE International Conference on Networking, Sensing and Control, Ft. Lauderdale, 270-274. http://dx.doi.org/10.1109/icnsc.2006.1673156

2. Yang, Y., Park, D.S., Huang, S. and Rao, N. (2010) Medical Image Fusion via an Effective Wavelet-Based Approach. EURASIP Journal on Advances in Signal Processing, 2010, Article ID: 579341.

3. Wang, A., Qi, C., Dong, J., Meng, S. and Li, D. (2013) Multimodal Medical Image Fusion in Non-Subsampled Contourlet Transform Domain. IEEE International Conference on ICMIC, Harbin, 16-18 August 2013, 169-172.

4. Al-Azzawi, N. and Abdullah, W.A.K.W. (2011) Medical Image Fusion Schemes Using Contourlet Transform and PCA Based. In: Zheng, Y., Ed., Image Fusion and Its Applications, InTech, 93-110.

5. James, A.P. and Dasarathy, B.V. (2014) Medical Image Fusion: A Survey of the State of the Art. Information Fusion, 19, 4-19. http://dx.doi.org/10.1016/j.inffus.2013.12.002

6. Wang, L., Li, B. and Tian, L. (2014) Multi-Modal Medical Image Fusion Using the Inter-Scale and Intra-Scale Dependencies between Image Shift-Invariant Shearlet Coefficients. Information Fusion, 19, 20-28. http://dx.doi.org/10.1016/j.inffus.2012.03.002

7. Liu, Z., Yin, H., Chai, Y. and Yang, S.X. (2014) A Novel Approach for Multimodal Medical Image Fusion. Expert Systems with Applications, 41, 7425-7435. http://dx.doi.org/10.1016/j.eswa.2014.05.043

8. Xu, Z. (2014) Medical Image Fusion Using Multi-Level Local Extrema. Information Fusion, 19, 38-48. http://dx.doi.org/10.1016/j.inffus.2013.01.001

9. Daneshvar, S. and Ghassemian, H. (2010) MRI and PET Image Fusion by Combining IHS and Retina-Inspired Models. Information Fusion, 11, 114-123. http://dx.doi.org/10.1016/j.inffus.2009.05.003

10. Singh, R., Vatsa, M. and Noore, A. (2009) Multimodal Medical Image Fusion Using Redundant Discrete Wavelet Transform. Seventh International Conference on Advances in Pattern Recognition, Kolkata, 4-6 February 2009, 232-235. http://dx.doi.org/10.1109/icapr.2009.97

11. Shivappa, S.T., Rao, B.D. and Trivedi, M.M. (2008) An Iterative Decoding Algorithm for Fusion of Multimodal Information. EURASIP Journal on Advances in Signal Processing, 2008, Article ID: 478396.

12. Redondo, R., Sroubek, F., Fischer, S. and Cristobal, G. (2009) Multifocus Image Fusion Using the Log-Gabor Transform and a Multisize Windows Technique. Information Fusion, 10, 163-171. http://dx.doi.org/10.1016/j.inffus.2008.08.006

13. Li, S. and Yang, B. (2008) Multifocus Image Fusion Using Region Segmentation and Spatial Frequency. Image and Vision Computing, 26, 971-979. http://dx.doi.org/10.1016/j.imavis.2007.10.012

14. Yonghong, J. (1998) Fusion of Landsat TM and SAR Images Based on Principal Component Analysis. Remote Sensing Technology and Application, 13, 46-49.

15. Petrovic, V.S. and Xydeas, C.S. (2004) Gradient-Based Multiresolution Image Fusion. IEEE Transactions on Image Processing, 13, 228-237. http://dx.doi.org/10.1109/TIP.2004.823821

16. Burt, P.J. and Adelson, E.H. (1983) The Laplacian Pyramid as a Compact Image Code. IEEE Transactions on Communications, 31, 532-540. http://dx.doi.org/10.1109/TCOM.1983.1095851

17. Toet, A. (1989) Image Fusion by a Ratio of Low-Pass Pyramid. Pattern Recognition Letters, 9, 245-253. http://dx.doi.org/10.1016/0167-8655(89)90003-2

18. Pu, T. and Ni, G. (2000) Contrast-Based Image Fusion Using the Discrete Wavelet Transform. Optical Engineering, 39, 2075-2082. http://dx.doi.org/10.1117/1.1303728

19. Toet, A. (1989) A Morphological Pyramidal Image Decomposition. Pattern Recognition Letters, 9, 255-261. http://dx.doi.org/10.1016/0167-8655(89)90004-4

20. Ehlers, M. (1991) Multisensor Image Fusion Techniques in Remote Sensing. ISPRS Journal of Photogrammetry & Remote Sensing, 51, 311-316. http://dx.doi.org/10.1016/0924-2716(91)90003-e

21. Bhatnagar, G., Wu, Q.M.J. and Liu, Z. (2013) Directive Contrast Based Multimodal Medical Image Fusion in NSCT Domain. IEEE Transactions on Multimedia, 15, 1014-1024. http://dx.doi.org/10.1109/TMM.2013.2244870

22. Bhatnagar, G., Wu, Q.M.J. and Liu, Z. (2015) A New Contrast Based Multimodal Medical Image Fusion Framework. Neurocomputing, 157, 143-152. http://dx.doi.org/10.1016/j.neucom.2015.01.025

23. Yang, L., Guo, B.L. and Ni, W. (2008) Multimodality Medical Image Fusion Based on Multiscale Geometric Analysis of Contourlet Transform. Neurocomputing, 72, 203-211. http://dx.doi.org/10.1016/j.neucom.2008.02.025

24. Li, S., Yang, B. and Hu, J. (2011) Performance Comparison of Different Multi-Resolution Transforms for Image Fusion. Information Fusion, 12, 74-84. http://dx.doi.org/10.1016/j.inffus.2010.03.002

25. Das, S. and Kundu, M.K. (2012) NSCT-Based Multimodal Medical Image Fusion Using Pulse-Coupled Neural Network and Modified Spatial Frequency. Medical & Biological Engineering & Computing, 50, 1105-1114. http://dx.doi.org/10.1007/s11517-012-0943-3

26. Li, T. and Wang, Y. (2011) Biological Image Fusion Using a NSCT Based Variable-Weight Method. Information Fusion, 12, 85-92. http://dx.doi.org/10.1016/j.inffus.2010.03.007

27. Zhang, Q. and Guo, B.L. (2009) Multi-Focus Image Fusion Using the Nonsubsampled Contourlet Transform. Signal Processing, 89, 1334-1346. http://dx.doi.org/10.1016/j.sigpro.2009.01.012

28. Yang, Y., Tong, S., Huang, S. and Lin, P. (2014) Log-Gabor Energy Based Multimodal Medical Image Fusion in NSCT Domain. Computational and Mathematical Methods in Medicine, 2014, Article ID: 835481. http://dx.doi.org/10.1155/2014/835481

29. Qu, X.B., Yan, J.W., Xiao, H.Z. and Zhu, Z.Q. (2008) Image Fusion Algorithm Based on Spatial Frequency-Motivated Pulse Coupled Neural Networks in Nonsubsampled Contourlet Transform Domain. Acta Automatica Sinica, 34, 1508-1514. http://dx.doi.org/10.1016/S1874-1029(08)60174-3

30. Ali, F.E., El-Dokany, I.M., Saad, A.A. and Abd El-Samie, F.E. (2008) Curvelet Fusion of MR and CT Images. Progress in Electromagnetics Research C, 3, 215-224. http://dx.doi.org/10.2528/PIERC08041305

31. Do, M.N. and Vetterli, M. (2005) The Contourlet Transform: An Efficient Directional Multiresolution Image Representation. IEEE Transactions on Image Processing, 14, 2091-2106. http://dx.doi.org/10.1109/TIP.2005.859376

32. Qu, X.B., Yan, J.W. and Yang, G.D. (2009) Multifocus Image Fusion Method of Sharp Frequency Localized Contourlet Transform Domain Based on Sum-Modified-Laplacian. Optics and Precision Engineering, 17, 1203-1212.

33. Cunha, A.L., Zhou, J. and Do, M.N. (2006) The Nonsubsampled Contourlet Transform: Theory, Design, and Applications. IEEE Transactions on Image Processing, 15, 3089-3101. http://dx.doi.org/10.1109/TIP.2006.877507

34. Do, M.N. and Vetterli, M. (2005) The Contourlet Transform: An Efficient Directional Multiresolution Image Representation. IEEE Transactions on Image Processing, 14, 2091-2106. http://dx.doi.org/10.1109/TIP.2005.859376

35. Blum, R.S., Xue, Z. and Zhang, Z. (2005) Overview of Image Fusion. In: Blum, R.S. and Liu, Z., Eds., Multi-Sensor Image Fusion and Its Applications, CRC Press, Boca Raton, FL, 1-35.

36. Maintz, J.B.A. and Viergever, M.A. (1998) A Survey of Medical Image Registration. Medical Image Analysis, 2, 1-36. http://dx.doi.org/10.1016/S1361-8415(01)80026-8

37. Zitova, B. and Flusser, J. (2003) Image Registration Methods: A Survey. Image and Vision Computing, 21, 977-1000. http://dx.doi.org/10.1016/S0262-8856(03)00137-9

38. Arce, G.R. (2005) Nonlinear Signal Processing: A Statistical Approach. John Wiley & Sons, New Jersey.

39. Arias-Castro, E. and Donoho, D.L. (2009) Does Median Filtering Truly Preserve Edges Better than Linear Filtering? Annals of Statistics, 37, 1172-1206. http://dx.doi.org/10.1214/08-AOS604

40. Guixi, L., Chunhu, L. and Wenjie, L. (2004) A New Fusion Arithmetic Based on Small Wavelet Multi-Resolution Decomposition. Journal of Optoelectronics-Laser, 18, 344-347.

41. Zanxia, Q., Jiaxiong, P. and Hongqun, W. (2003) Remote Sensing Image Fusion Based on Small Wavelet Transform's Local Variance. Journal of Huazhong University of Science and Technology: Natural Science, 6, 89-91.

42. Mitianoudis, N. and Stathaki, T. (2007) Pixel-Based and Region-Based Image Fusion Schemes Using ICA Bases. Information Fusion, 8, 131-142. http://dx.doi.org/10.1016/j.inffus.2005.09.001

43. Chipman, L.J., Orr, T.M. and Graham, L.N. (1995) Wavelets and Image Fusion. Proceedings of the IEEE International Conference on Image Processing, Vol. 3, Washington DC, 23-26 October 1995, 248-251. http://dx.doi.org/10.1109/icip.1995.537627

44. Liu, G.X. and Yang, W.H. (2002) A Wavelet-Decomposition-Based Image Fusion Scheme and Its Performance Evaluation. Acta Automatica Sinica, 28, 927-934.

45. Li, M., Zhang, X.Y. and Mao, J. (2008) Neighboring Region Variance Weighted Mean Image Fusion Based on Wavelet Transform. Foreign Electronic Measurement Technology, 27, 5-6.