A. AKBAŞ ET AL.

Copyright © 2013 SciRes. ENG

Table 2. Ensemble classification results.

Ada BoostM1 +

Classif ie r

Performance Values

Acc Kappa Mcc ROC

BayesNet

0.987 0.910 0.910 0.995

NaiveBayes

0.949 0.566 0.594 0.865

SMO

0.942 0.430 0.479 0.880

Ibk

0.912 0.284 0.315 0.642

RandomForest

0.991 0.939 0.940 0.998

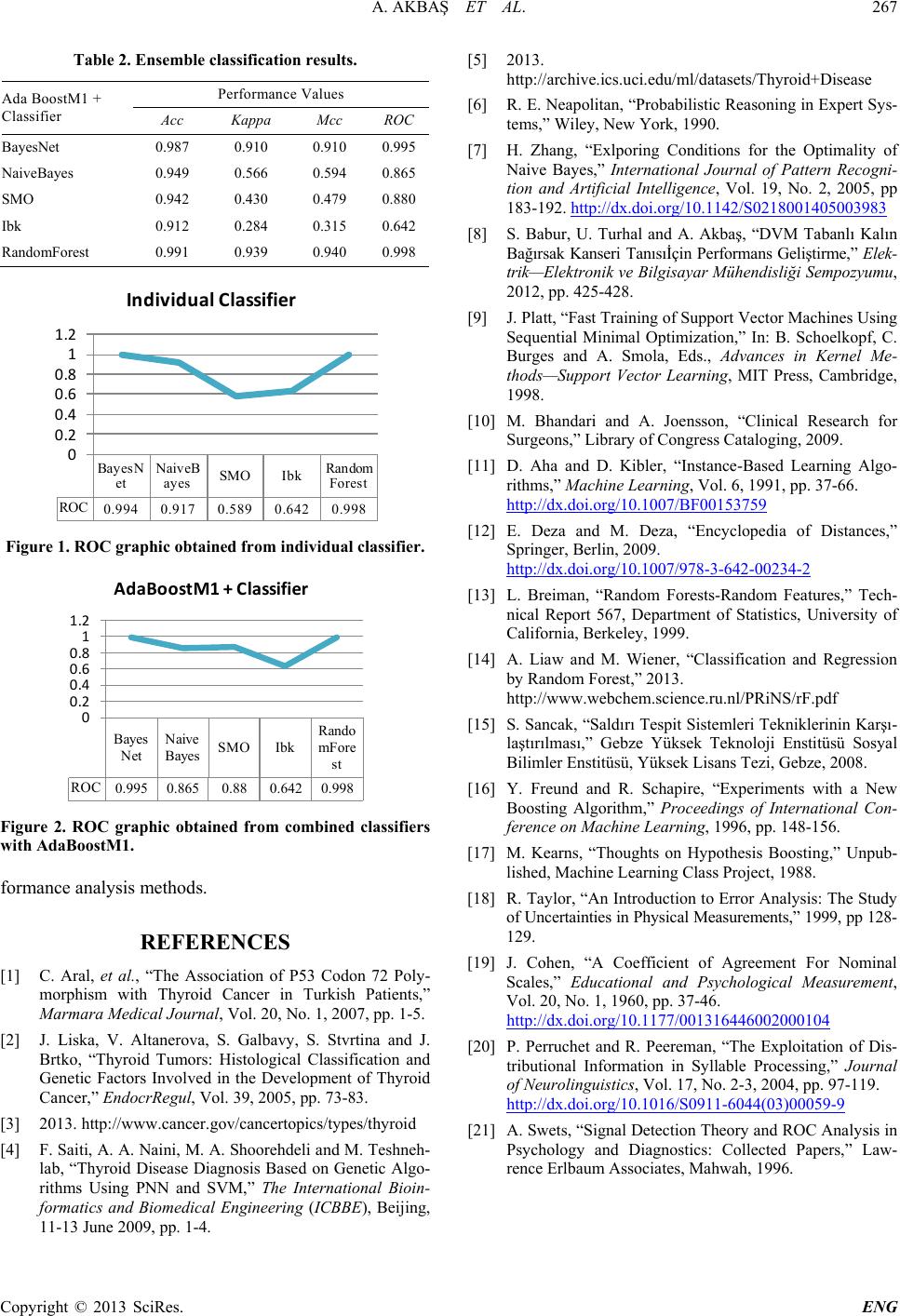

Figure 1. ROC graphic obtained from individual classifier.

Figure 2. ROC graphic obtained from combined classifiers

with AdaBoostM1.

formance analysis methods.

REFERENCES

[1] C. Aral, et al., “The Association of P53 Codon 72 Poly-

morphism with Thyroid Cancer in Turkish Patients,”

Marmara Medical Journal, Vol. 20, No. 1, 2007, pp. 1-5.

[2] J. Liska, V. Altanerova, S. Galbavy, S. Stvrtina and J.

Brtko, “Thyroid Tumors: Histological Classification and

Genetic Factors Involved in the Development of Thyroid

Cancer,” EndocrRegul, Vol. 39, 2005, pp. 73-83.

[3] 2013. http://www.cancer.gov/cancertopics/types/thyroid

[4] F. Saiti, A. A. Naini , M. A. Shoorehdeli and M. Teshneh-

lab, “Thyroid Disease Diagnosis Based on Genetic Algo-

rithms Using PNN and SVM,” The International Bioin-

formatics and Biomedical Engineering (ICBBE), Beijing,

11-13 June 2009, pp. 1-4.

[5] 2013.

http://archive.ics.uci.edu/ml/datasets/Thyroid+Disease

[6] R. E. Neapolitan, “Probabilistic Reasoning in Expert Sys-

tems,” Wiley, New York, 1990.

[7] H. Zhang, “Exlporing Conditions for the Optimality of

Naive Bayes,” International Journal of Pattern Recogni-

tion and Artificial Intelligence, Vol. 19, No. 2, 2005, pp

183-192. http://dx.doi.org/10.1142/S0218001405003983

[8] S. Babur, U. Turhal and A. Akbaş, “DVM Tabanlı Kalın

Bağırsak Kanseri Tanısıİçin Performans Geliştirme,” Elek-

trik—Elektronik ve Bilgisayar Mühendisliği Sempozyumu,

2012, pp. 425-428.

[9] J. Platt, “Fast Training of Support Vector Machines Using

Sequential Minimal Optimization,” In: B. Schoelkopf, C.

Burges and A. Smola, Eds., Advances in Kernel Me-

thods—Support Vector Learning, MIT Press, Cambridge,

1998.

[10] M. Bhandari and A. Joensson, “Clinical Research for

Surgeons,” Library of Congress Cataloging, 2009.

[11] D. Aha and D. Kibler, “Instance-Based Learning Algo-

rithms,” Machine Learning, Vol. 6, 1991, pp. 37-66.

http://dx.doi.org/10.1007/BF00153759

[12] E. Deza and M. Deza, “Encyclopedia of Distances,”

Springer, Berlin, 2009.

http://dx.doi.org/10.1007/978-3-642-00234-2

[13] L. Breiman, “Random Forests-Random Features,” Tech-

nical Report 567, Department of Statistics, University of

California, Berkeley, 1999.

[14] A. Liaw and M. Wiener, “Classification and Regression

by Random Forest,” 2013.

http://www.webchem.science.ru.nl/PRiNS/rF.pdf

[15] S. Sancak, “Saldırı Tespit Sistemleri Tekniklerinin Karşı-

laştırılması,” Gebze Yüksek Teknoloji Enstitüsü S osy a l

Bilimler Enstitüsü, Yüksek Lisans Tezi, Gebze, 2008.

[16] Y. Freund and R. Schapire, “Experiments with a New

Boosting Algorithm,” Proceedings of International Con-

ference on Machine Learning, 1996, pp. 148-156.

[17] M. Kearns, “Thoughts on Hypothesis Boosting,” Unpub-

lished, Machine Learning Class Project, 1988.

[18] R. Taylor, “An Introduction to Error Analysis: The Study

of Uncertainties in Physical Measurements,” 1999, pp 128-

129.

[19] J. Cohen, “A Coefficient of Agreement For Nominal

Scales,” Educational and Psychological Measurement,

Vol. 20, No. 1, 1960, pp. 37-46.

http://dx.doi.org/10.1177/001316446002000104

[20] P. Perruchet and R. Peereman, “The Exploitation of Dis-

tributional Information in Syllable Processing,” Journal

of Neurolinguistics, Vol. 17, No. 2-3, 2004, pp. 97-119.

http://dx.doi.org/10.1016/S0911-6044(03)00059-9

[21] A. Swets, “Signal Detect ion Theory and ROC Analysis in

Psychology and Diagnostics: Collected Papers,” Law-

rence Erlbaum Associates, Mahwah, 1996.