Journal of Software Engineering and Applications, 2013, 6, 47-52
http://dx.doi.org/10.4236/jsea.2013.610A006
Published Online October 2013 (http://www.scirp.org/journal/jsea)

A Survey of Software Test Estimation Techniques

Kamala Ramasubramani Jayakumar1, Alain Abran2

1Amitysoft Technologies, Chennai, India; 2École de Technologie Supérieure, University of Quebec, Montreal, Canada.
Email: jayakumar@amitysoft.com

Received August 30th, 2013; revised September 27th, 2013; accepted October 5th, 2013

Copyright © 2013 Kamala Ramasubramani Jayakumar, Alain Abran. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

ABSTRACT

Software testing has become a primary business for a number of IT services companies, and estimation, which remains a challenge in software development, is even more challenging in software testing. This paper presents an overview of the software test estimation techniques surveyed, as well as some of the challenges that need to be overcome if the foundations of these software testing estimation techniques are to be improved.

Keywords: Software Testing; Testing Estimation; Software Estimation; Outsourcing

1. Introduction

Software testing has become a complex endeavor, owing to the multiple levels of testing that are required, such as component testing, integration testing, and system testing, as well as the types of testing that need to be carried out, such as functional testing, performance testing, and security testing [1]. It is critical that relevant estimation techniques be used in software testing, depending on the scope of the testing called for.

Furthermore, software testing has become an industry of its own over the years, with the emergence of independent testing services firms and IT services companies establishing testing services as a business unit.
Test estimation consists of the estimation of effort and cost for a particular level of testing, using various methods, tools, and techniques. The incorrect estimation of testing effort often leads to an inadequate amount of testing, which, in turn, can lead to failures of software systems once they are deployed in organizations. Estimation is the most critical activity in software testing, and an unavoidable one, but it is often performed in haste, with those responsible for it merely hoping for the best.

Test estimation techniques have often been derived from generic software development estimation techniques, in which testing figures as one of the phases of the software development life cycle, as in the COCOMO 81 and COCOMO II models [2].

Test estimation in an outsourcing context differs significantly from test estimation embedded in software development, owing to several process factors related to the development organization and the testing organization, in addition to factors related to the product to be tested. Distinct pricing approaches, such as time and material, fixed-bid, output-based, and outcome-based pricing, are followed by the industry based on customer needs. An adequate estimation technique is essential for all pricing models except for time and material pricing.

The focus of the survey reported here is the estimation of effort for testing, and not the estimation of cost or schedule.

Copyright © 2013 SciRes. JSEA

2. Evaluation Criteria and Groups of Estimation Techniques

For this study, the following criteria have been selected to analyze test estimation techniques:

1. Customer view of requirements: This criterion makes it possible to determine whether the estimation technique looks at the software requirements from a customer viewpoint or from the technical/implementation viewpoint. Estimation based on the customer viewpoint assures the customer of a fair price (i.e., estimate) in a competitive market for what is being asked for in terms of quantity and quality, and that an increase or decrease in price (i.e., the estimated effort) is directly related to increases or decreases in the number of functions and/or levels of quality expected, and not to how efficient, or otherwise, a supplier is at delivering software using different sets of tools and with different groups of people.

2. Functional size as a prerequisite to estimation: Most estimation methods use some form of size, which is either implicit or explicit in effort estimation. When size is not explicit, benchmarking and performance studies across projects and organizations are not possible. Functional size can be measured using either international standards or locally defined sizing methods.

3. Mathematical validity: Surprisingly, quite a few estimation techniques have evolved over the years based mostly on a “feel good” factor, ignoring the validity of their mathematical foundations. This criterion looks at the metrological foundation of the proposed estimation techniques. A valid mathematical foundation provides a sound basis for further improvements.

4. Verifiability: The estimate produced must be verifiable, in order to inspire confidence. Verifiability makes the estimate more dependable.

5. Benchmarking: It is essential in an outsourcing context that estimates be comparable across organizations, as this can help in benchmarking and performance improvement. The genesis of the estimation techniques is looked at to determine whether or not benchmarking is feasible.

Estimation techniques are based on a number of different philosophies.
For the purposes of this survey, these techniques have been classified into the following groups: 1) techniques based on judgment and rules of thumb, 2) techniques based on analogy and work breakdown, 3) techniques based on factors and weights, 4) techniques based on size, 5) fuzzy and other models.

There are also, of course, several variations of techniques in each group. In this paper, we present a few representative techniques from each group to illustrate their basis for estimation, as well as their strengths and weaknesses.

3. Survey Findings

Using the five criteria described in the previous section, Table 1 presents a high-level analysis of each of the above groups of techniques for the estimation of software testing. Comments specific to each group of testing techniques are presented subsequently.

3.1. Judgment and Rule of Thumb Techniques

Delphi [3]: a classic estimation technique in which experts are involved in determining individual estimates for a particular set of requirements based on their own earlier experience. Multiple iterations take place during which the experts learn the reasoning of the other experts, and rework their estimates in subsequent iterations. The final estimate is picked from the narrowed range of values estimated by the experts in the last iteration.

Wide-band Delphi [4]: a technique enabling interaction between experts to arrive at a decision point. A quick estimate is provided by experts knowledgeable in the domain and in testing, but the resulting estimate will be very approximate, and should be applied with caution. Estimates are not verifiable in this case, and benchmarking is not possible. These techniques mostly take the implementation view of requirements, and functional size is often ignored.

Rule of Thumb: estimates which are based on ratios and rules pre-established by individuals or by experienced estimators, but without a well documented and independently verifiable basis.
Typically, functional size is not considered in this group of testing estimation techniques. They are not based on the analysis of well documented historical data, and benchmarking is not feasible.

3.2. Analogy and Work Breakdown Techniques

Analogy-based [3]: techniques involving comparison of the components of the software under test with standard components, for which test effort is known based on historical data. The total estimate for all the components of the software to be tested is further adjusted based on project-specific factors and the management effort required, such as planning and review.

Table 1. Summary analysis of strengths and weaknesses of estimation techniques for testing.

| Estimation techniques | Customer view of requirements | Functional size as a prerequisite | Mathematical validity | Verifiable | Benchmarking |
|---|---|---|---|---|---|
| 1) Judgment & rule of thumb | NO | NO | Not applicable | NO | NO |
| 2) Analogy & work breakdown | NO | NO | YES | YES | Partially, and only when standards are used |
| 3) Factor & weight | NO | NO | NO (units are most often ignored) | YES | NO |
| 4) Size | YES | YES | Varies with sizing technique selected | YES | YES |
| 5) Fuzzy & other models | Partially | Most often, NO | YES, in general, but at times units are ignored | Partially | Partially, and only when standards are used |

Proxy-based (PROBE) [5]: a technique for estimating development effort, which can be adapted for estimating testing effort for smaller projects. When the components of the product to be tested are significantly different from the standard components, a new baseline has to be established. Validation based on historical data is weak and fuzzy in this case. Functional size is not considered in such techniques, and benchmarking is not feasible.
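The analogy-based calculation described in this section can be illustrated with a minimal Python sketch: the known test effort of the standard component matched to each component under test is summed, and the total is then adjusted for project-specific factors and management effort. The names `analogy_estimate`, `baseline_hours`, `project_factor`, and `management_fraction`, and all numeric values, are assumptions introduced here for illustration only; they are not taken from [3].

```python
def analogy_estimate(components, baseline_hours,
                     project_factor=1.0, management_fraction=0.0):
    """Sketch of an analogy-based test effort estimate, in hours.

    components          -- list of (standard_type, count) pairs describing
                           the software under test (hypothetical structure)
    baseline_hours      -- historical test effort per standard component
    project_factor      -- assumed multiplier for project-specific factors
    management_fraction -- assumed share added for planning and review
    """
    base = 0.0
    for standard_type, count in components:
        if standard_type not in baseline_hours:
            # No comparable standard component: a new baseline is needed
            # before this component can be estimated by analogy.
            raise KeyError(f"no baseline for component type {standard_type!r}")
        base += baseline_hours[standard_type] * count
    adjusted = base * project_factor
    return adjusted * (1.0 + management_fraction)


# Made-up historical baselines and a made-up product description.
baseline_hours = {"simple_screen": 4.0, "report": 10.0}
components = [("simple_screen", 2), ("report", 1)]

estimate = analogy_estimate(components, baseline_hours,
                            project_factor=1.1, management_fraction=0.10)
print(f"Estimated test effort: {estimate:.2f} hours")
```

The sketch makes explicit that the estimate depends entirely on a locally maintained baseline table, which is why such estimates are hard to compare across organizations.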
Task-based [3]: a typical work breakdown-based estimation method where all testing tasks are listed and three-point estimates for each task are calculated with a combination of the Delphi Oracle and Three Point techniques [6]. One of the options offered by this method for arriving at an expected estimate for each task is a Beta distribution formula. The individual estimates are then cumulated to compute the total effort for all the tasks. Variations of these techniques, such as Bottom-Up and Top-Down, are based on how the tasks are identified. These techniques can work in a local context within an organization, where similar types of projects are executed. Benchmarking is not possible, since there is no agreed definition of what constitutes a task or work breakdown.

Test Case Enumeration-based [3]: an estimation method which starts with the identification of all the test cases to be executed. An estimate of the expected effort for testing each test case is calculated, using a Beta distribution formula, for instance. A major drawback of this technique is that significant effort has to be expended to prepare test cases before estimating for testing. This technique can work in a context where there is a clear understanding of what constitutes a test case. Productivity measurements of testing activities and benchmarking are not possible.

3.3. Factor and Weight-Based Estimation

Test Point Analysis [7]: a technique in which dynamic and static test points are calculated to arrive at a test point total. Dynamic test points are calculated based on function points, functionality-dependent factors, and quality characteristics. Function-dependent factors, such as user importance, usage intensity, interfacing requirements, complexity, and uniformity are given a rating based on predefined ranges of values.
Dynamic quality characteristics, such as suitability, security, usability, and efficiency, are rated between 0 and 6 to calculate dynamic test points. Static points are assigned based on the applicability of each quality characteristic as per ISO 9126. Each applicable quality characteristic is assigned a value of 16, and these values are summed to obtain the total number of static test points. The test point total is converted to effort based on ratings for a set of productivity and environmental factors.

While this technique appears to take into account several relevant factors, these factors do not have the same measurement units, which makes it difficult to use them in mathematical operations. Functionality- and quality-dependent factors are added together to obtain Dynamic Test Points, and subsequently to arrive at a Test Point size, which again is the sum of three different quantities with potentially different units of measurement. The basic mathematical principles of addition and multiplication are forgotten in the Test Point Analysis method. As such, it can be referred to as a complex “feel good” method.

Use Case Test Points: a technique suggested in [7] as an alternative to Test Points and derived from Use Case-based estimation for software development. Unadjusted Use Case Test Points are calculated as the sum of the actors multiplied by each actor’s weight from an actors’ weight table and the total number of use cases multiplied by a weight factor, which depends on the number of transactions or scenarios for each use case. Weights assigned to each of the technical and environmental factors are used to convert unadjusted use case points to adjusted use case points. A conversion factor accounting for technology/process language is used to convert adjusted use case points into test effort.
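The Use Case Test Points calculation just described can be sketched in Python as follows. This is only an illustrative sketch: the weight values in `ACTOR_WEIGHTS` and `use_case_weight` are placeholders patterned on commonly published Use Case Points tables, the technical and environmental factors are collapsed into a single assumed `adjustment_factor`, and `hours_per_point` stands in for the conversion factor. None of these names or values is taken from [7].

```python
# Placeholder actor weight table (assumed values, for illustration only).
ACTOR_WEIGHTS = {"simple": 1, "average": 2, "complex": 3}


def use_case_weight(transactions):
    """Assumed weight of a use case, based on its number of transactions."""
    if transactions <= 3:
        return 5
    if transactions <= 7:
        return 10
    return 15


def unadjusted_uctp(actors, use_case_transactions):
    """Sum of weighted actors plus sum of weighted use cases."""
    actor_points = sum(ACTOR_WEIGHTS[kind] for kind in actors)
    use_case_points = sum(use_case_weight(t) for t in use_case_transactions)
    return actor_points + use_case_points


def estimate_test_effort(actors, use_case_transactions,
                         adjustment_factor, hours_per_point):
    """Adjust the unadjusted points, then convert them to effort in hours."""
    adjusted = unadjusted_uctp(actors, use_case_transactions) * adjustment_factor
    return adjusted * hours_per_point


# Made-up project: two actors and three use cases with 2, 5, and 9 transactions.
actors = ["simple", "complex"]
transactions = [2, 5, 9]
effort = estimate_test_effort(actors, transactions,
                              adjustment_factor=1.5, hours_per_point=2.0)
print(f"Unadjusted points: {unadjusted_uctp(actors, transactions)}")
print(f"Estimated test effort: {effort:.1f} hours")
```

Written out this way, the calculation shows where category labels are turned into numbers that are then added and multiplied.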
This is another example of a “feel good” estimation method: ordinal scale values are inappropriately transformed into interval scale values, which are then multiplied with the intention of arriving at a ratio scale value, exposing the weak mathematical foundation of the method. Although this technique takes a user’s viewpoint of requirements, it is not amenable to benchmarking.

Test Execution Points [8]: a technique which estimates test execution effort based on system test size. Each step of the test specifications is analyzed based on characteristics exercised by the test step, such as screen navigation, file manipulation, and network usage. Each characteristic that impacts test size and test execution is rated on an ordinal scale (low, average, and high), and execution points are assigned. The authors stopped short of suggesting approaches that can be adopted for converting test size to execution effort. The task of developing test specifications prior to estimation requires a great deal of effort in itself. Since test effort has to be estimated long before the product is built, it would not be possible to use this technique early in the life cycle.

Test team efficiency is factored into another variation of the estimation model for test execution effort. The Cognitive Information Complexity Measurement Model [9] uses the count of operators and identifiers in the source code coupled with McCabe’s Cyclomatic Complexity measure. The measures used in this model lack the basic metrological foundations for quantifying complexity [10], and the validity of such measurements for estimating test execution effort has not been demonstrated.

3.4. Software Size-Based Estimation

Size-based: an estimation approach in which size is used and a regression model is built based on historical data collected adopting standard definitions.
Here, size is one of the key input parameters in estimation. Some techniques use conversion factors to convert size into effort. Regression models built using size directly enable the estimation of effort based on historical data.

Test Size-based [3]: an estimation technique proposed for projects involving independent testing. The size of the functional requirements in Function Points, measured using IFPUG’s Function Point Analysis (FPA) [11] (ISO 20926), is converted to unadjusted test points through a conversion factor. Based on an assessment of the application, the programming language, and the scope of the testing, weights from a weight table are assigned to test points. Unadjusted test points are modified using a composite weighting factor to arrive at a test point size. Next, test effort in person hours is computed by multiplying Test Point Size by a productivity factor. FPA, the first generation of functional size measurement methods, suffers from severe mathematical flaws with respect to its treatment of Base Functional Components, their weights in relation to a complexity assessment, and the Value Adjustment Factor used to convert Unadjusted Function Points to Adjusted Function Points. The mathematical limitations of FPA have been discussed in [10]. In spite of these drawbacks, this technique has been used by the industry and adopted for estimating test effort. Performance benchmarking across organizations is possible with this technique for business application projects.

Other estimation models, such as COCOMO and SLIM, and their implementation in the form of estimation tools, along with other tools such as SEER and Knowledge Plan [12], employ size-based estimation of software development effort from which testing effort is derived, often in proportion to the total estimated effort. Usually, the size of software, measured in SLOC, is an input parameter for these models.
There are provisions to backfire function point size and convert it to SLOC to use as input, although this conversion actually increases uncertainty in the estimate. These models use a set of project data and several predetermined factors and weights to make up an estimation model. The intention behind using these models and tools is interesting, in that they capture a host of parameters that are expected to influence the estimate. Abran [10] has observed that many parameters of COCOMO models are described by linguistic values, and their influence is determined by expert opinion rather than on the basis of information from descriptive engineering repositories. It should be noted that some of these models are built based on a limited set of project data and others with a large dataset, but they primarily use a black box approach. Some of the tools use predetermined equations, rather than the data directly. These models and automated tools can provide a false sense of security when the raw data behind them cannot be accessed for independent validation and to gain additional insights.

ISBSG equations [13]: techniques derived from estimation models based on a repository of large project datasets. These equations are based on a few significant parameters that influence effort estimates, as analyzed through data from hundreds of projects in the open ISBSG database. Such estimation equations are built using a white box approach, with the ability to understand the underlying data and learn from them. Using a similar approach, practical estimation models using functional size can be developed by the organizations themselves. Another study of projects in the ISBSG database has come up with interesting estimation models for software testing using functional size [14].
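To illustrate how an organization might build such a white box model from its own historical data, the following Python sketch fits a regression of test effort on functional size. The power-law form effort = a × size^b, fitted by ordinary least squares on log-transformed values, is an assumption made here for illustration; the function names `fit_power_model` and `predict_effort` and the sample data are hypothetical and do not come from the ISBSG repository or from [13,14].

```python
import math


def fit_power_model(sizes, efforts):
    """Fit effort = a * size**b by least squares on log-transformed data.

    sizes   -- functional sizes of completed projects (positive numbers)
    efforts -- corresponding test efforts in hours (positive numbers)
    Returns the pair (a, b).
    """
    xs = [math.log(s) for s in sizes]
    ys = [math.log(e) for e in efforts]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("at least two distinct sizes are required")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                        # slope in log-log space
    a = math.exp(mean_y - b * mean_x)    # intercept, back-transformed
    return a, b


def predict_effort(a, b, size):
    """Estimated test effort for a new project of the given size."""
    return a * size ** b


# Made-up historical projects: (functional size, test effort in hours).
history = [(120, 310), (250, 700), (400, 1150), (610, 1900)]
a, b = fit_power_model([s for s, _ in history], [e for _, e in history])
print(f"effort = {a:.2f} * size^{b:.2f}")
print(f"Estimate for size 300: {predict_effort(a, b, 300):.0f} hours")
```

Because both the data and the fitted coefficients remain visible, an estimate produced this way can be traced back to the projects behind it and checked independently.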
Capers Jones [15] mentions that FPA and COSMIC Function Points [16] provide interesting insights into the quantum of test cases (also referred to as “test volume”), and proposes various rules of thumb based on function points in order to calculate the number of test cases required and to estimate the potential number of defects. Estimates of test cases and defects lead to estimates of effort for overall testing. However, the author cautions against using rule of thumb methods, indicating that they are not accurate and should not be used for serious business purposes.

Non-functional requirements, like functional requirements, are quite critical in software testing. An approach for estimating the test volume and effort is proposed in [17], where the initial estimate based on functional requirements is adjusted subsequently by taking into consideration non-functional requirements. This model uses COSMIC Function Points, the first of the 2nd generation functional size measurement methods adopted by the ISO [18], as it overcomes the limitations of the 1st generation of Function Point sizing methods. Estimates for non-functional testing are arrived at based on graphical assessment of the non-functional requirements of project data. Estimation models developed by performing regression analysis between COSMIC Function Points and development effort have been successfully used in the industry [19]. Estimation models built using historical project data with a COSMIC Function Point size have a strong mathematical foundation, are verifiable, and take into account the customer view of requirements. In addition, they are amenable to benchmarking and performance studies.

3.5. Fuzzy Inference & Other Models

Fuzzy models have been proposed to account for incomplete and/or uncertain input information available for estimation.
One of the fuzzy logic approaches [20] uses COCOMO [2] as a foundation for fuzzy inference using mode and size as inputs. Even though this approach is proposed for estimating software development effort, it could be adopted for estimating testing effort as well. Another approach has been proposed by Ranjan et al. [21]. This technique again uses COCOMO as the basis on which KLOC is used as an input, and development effort is calculated using effort adjustment factors based on cost drivers.

Francisco Valdès [22] designed a fuzzy logic estimation process in his Ph.D. thesis. His approach is the purest form of the application of fuzzy logic to the software estimation context, since it does not use any of the other estimation techniques. The model allows experts to decide on the most significant input variables for the kinds of projects in which the model will be applied. The membership function is defined for the input variables, and the values are assigned based on expert opinion. This creates fuzzy values that are used in inference rule execution. Unlike other expert judgement-based methods, where the knowledge resides with experts, here the knowledge is captured in the form of inference rules and stays within the organization. The estimates produced by these models can also be verified. However, setting up a fuzzy rule base requires time and the availability of experts in the domain/technology for which estimation is being performed.

Software development estimation techniques using Artificial Neural Networks (ANN) [23,24] and Case-Based Reasoning (CBR) [25] are reported by researchers. There is an implementation of ANN for software testing [26], which inherits the drawbacks of Use Case-based estimation. ANN and CBR have to be further investigated for arriving at practical and mathematically correct estimation of software testing.

4. Summary and Key Research Needs

Judgment & Rule of Thumb-based techniques are quick to produce very approximate estimates, but the estimates are not verifiable and have unknown ranges of uncertainty.

Analogy & Work Breakdown techniques may be effective when they are fine-tuned for the technologies and processes adopted for testing. They take an implementation view of requirements, and cannot be used for benchmarking purposes.

Factor & Weight-based techniques perform several illegal mathematical operations, and lose scientific credibility in the process. They can serve as “feel good” techniques.

Functional Size-based techniques are more amenable to performance studies and benchmarking. They tend to produce more accurate estimates [13]. COSMIC Functional Size-based estimation overcomes the limitations of the first generation of the Function Point method.

Estimation Tools based on black-box datasets have to be used very judiciously, with an understanding of where the models come from and the kind of data used to build them.

Innovative approaches, such as Fuzzy Inference, Artificial Neural Networks, and Case-Based Reasoning, are yet to be adopted in the industry for estimating testing effort.

The estimation techniques surveyed here are currently limited in their scope for application in outsourced software testing projects, and do not attempt to tackle estimates for all types of testing. The classification of test effort estimation techniques presented here is also applicable to software development effort estimation techniques, with the same strengths and weaknesses.
Prior to pursuing further research to improve any one specific estimation technique, it would be of interest to develop a more refined view of the evaluation criteria identified in this paper, and to explore, in either software engineering or other disciplines, what candidate solutions or approaches could bring about the most benefit in terms of correcting weaknesses that have been identified or adding new strengths.

REFERENCES

[1] ISO, “ISO/IEC DIS 29119-1, 2, 3, & 4: Software and Systems Engineering - Software Testing - Part 1, 2, 3, & 4,” International Organization for Standardization (ISO), Geneva, 2012.
[2] B. W. Boehm, “Software Cost Estimation with COCOMO II,” Prentice Hall, Upper Saddle River, 2000.
[3] M. Chemuturi, “Test Effort Estimation,” 2012. http://chemuturi.com/Test%20Effort%20Estimation.pdf
[4] M. Nasir, “A Survey of Software Estimation Techniques and Project Planning Practices,” 7th ACIS International Conference on Software Engineering (SNPD’06), Las Vegas, 19-20 June 2006, pp. 305-310.
[5] W. Humphrey, “A Discipline for Software Engineering,” Addison Wesley, Boston, 1995.
[6] R. Black, “Test Estimation - Tools & Techniques for Realistic Predictions of Your Test Effort,” 2012. http://www.rbcs-us.com/software-testing-resources/library/basic-library
[7] M. Kerstner, “Software Test Effort Estimation Methods,” Graz University of Technology, Graz, 2012. www.kerstner.at/en/2011/02/software-test-effort-estimation-methods
[8] E. Aranha and P. Borba, “Sizing System Tests for Estimating Test Execution Effort,” Federal University of Pernambuco, Brazil & Motorola Industrial Ltd, COCOMO Forum 2007, Brazil, 2012.
[9] D. G. E. Silva, B. T. De Abreu and M. Jino, “A Simple Approach for Estimation of Execution Effort of Functional Test Cases,” International Conference on Software Testing Verification and Validation, Denver, April 2009, pp. 289-298.
[10] A. Abran, “Software Metrics and Software Metrology,” Wiley & IEEE Computer Society Press, Hoboken, 2010. http://dx.doi.org/10.1002/9780470606834
[11] IFPUG, “Function Point Counting Practices Manual, Version 4.2.1,” International Function Points Users Group, New Jersey, 2005.
[12] S. Basha and P. Dhavachelvan, “Analysis of Empirical Software Effort Estimation Models,” International Journal of Computer Science and Information Security, Vol. 7, No. 2, 2010, pp. 68-77.
[13] P. R. Hill, “Practical Project Estimation,” 2nd Edition, International Software Benchmarking Standards Group (ISBSG), Victoria, 2005.
[14] K. R. Jayakumar and A. Abran, “Analysis of ISBSG Data for Understanding Software Testing Efforts,” IT Confidence Conference, Rio, 3 October 2013.
[15] C. Jones, “Estimating Software Costs,” 2nd Edition, Tata McGraw-Hill, New York, 2007.
[16] A. Abran, J.-M. Desharnais, S. Oligny, D. St-Pierre and C. Symons, “The COSMIC Functional Size Measurement Method Version 3.0.1, Measurement Manual, The COSMIC Implementation Guide for ISO/IEC 19761:2003,” Common Software Measurements International Consortium (COSMIC), Montreal, 2009.
[17] A. Abran, J. Garbajosa and L. Cheikhi, “Estimating the Test Volume and Effort for Testing and Verification & Validation,” IWSM-Mensura Conference, 5-9 November 2007, UIB-Universitat de les Illes Baleares, Spain, 2007, pp. 216-234. http://www.researchgate.net/publication/228354130_Estimating_the_Test_Volume_and_Effort_for_Testing_and_Verification__Validation
[18] ISO, “ISO/IEC 19761: Software Engineering - COSMIC - A Functional Size Measurement Method,” International Organization for Standardization (ISO), Geneva, 2011.
[19] R. Dumke and A. Abran, “Case Studies of COSMIC Usage and Benefits in Industry, COSMIC Function Points - Theory and Advanced Practices,” CRC Press, Boca Raton, 2011.
[20] P. V. G. D. Prasad Reddy, K. R. Sudha and P. Rama Sree, “Application of the Fuzzy Logic Approach to Software Estimation,” International Journal of Advanced Computer Science and Applications, Vol. 2, No. 5, 2011, pp. 87-92.
[21] P. R. Srivastava, “Estimation of Software Testing Effort: An Intelligent Approach,” 20th International Symposium on Software Reliability Engineering (ISSRE), Mysore, 2009. http://www.researchgate.net/publication/235799428_Estimation_of_Software_Testing_Effort_An_intelligent_Approach
[22] F. Valdès, “Design of the Fuzzy Logic Estimation Process,” Ph.D. Thesis, University of Quebec, Quebec, 2011.
[23] A. S. Grewal, V. Gupta and R. Kumar, “Comparative Analysis of Neural Network Techniques for Estimation,” International Journal of Computer Applications, Vol. 67, No. 11, 2013, pp. 31-34.
[24] J. Kaur, S. Sing, K. S. Kahlon and P. Bassi, “Neural Network - A Novel Technique for Software Effort Estimation,” International Journal of Computer Theory and Engineering, Vol. 2, No. 1, 2010, pp. 17-19.
[25] Y. A. Hassan and T. Abu, “Predicting the Cost Estimation of Software Projects Using Case-Based Reasoning,” The 6th International Conference on Information Technology (ICIT 2013), Cape Town, 8-10 May 2013.
[26] C. Abhishek, V. P. Kumar, H. Vitta and P. R. Srivastava, “Test Effort Estimation Using Neural Network,” Journal of Software Engineering & Applications, Vol. 3, No. 4, 2010, pp. 331-340. http://dx.doi.org/10.4236/jsea.2010.34038

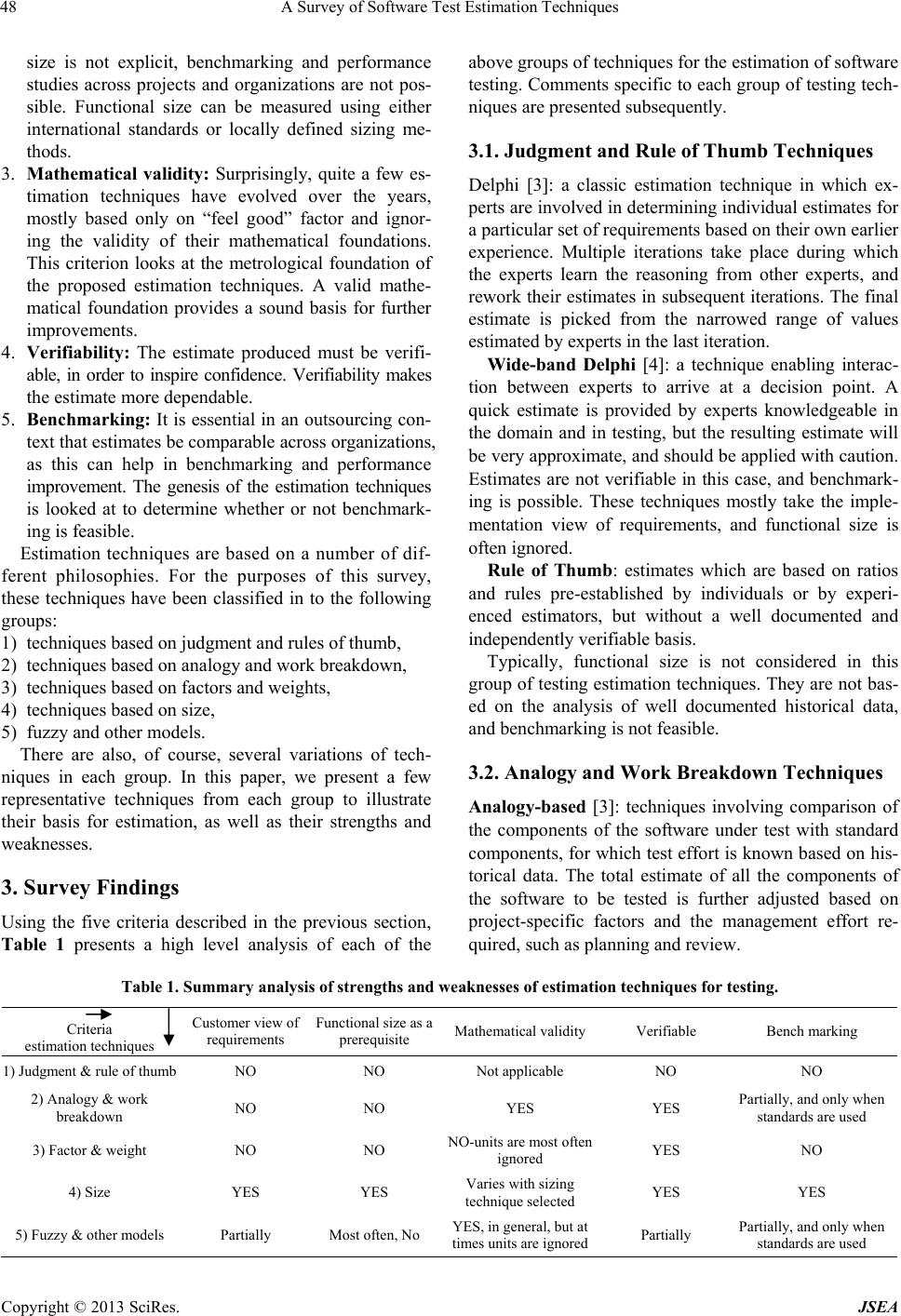

|